azure-search-vector-samples

A repository of code samples for Vector search capabilities in Azure AI Search.

Stars: 740

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

README:

This repository provides Python, C#, REST, and JavaScript code samples for vector support in Azure AI Search.

There are breaking changes from REST API version 2023-07-01-Preview to newer API versions. These breaking changes also apply to the Azure SDK beta packages targeting that REST API version. See Upgrade REST APIs for migration guidance.

Vector support consists of generally available features and preview features.

| Feature | Status |

|---|---|

| vector indexing | generally available (2023-11-01 and stable SDK packages) |

| vector queries | generally available (2023-11-01 and stable SDK packages) |

| integrated data chunking | public preview (2023-10-01-preview and later, plus beta SDK packages) |

| integrated embedding | public preview (2023-10-01-preview and later, plus beta SDK packages) |

| index projections | public preview (2023-10-01-preview and later, plus beta SDK packages) |

| vectorizers | public preview (2023-10-01-preview and later, plus beta SDK packages) |

| scalar quantization | public preview (2024-03-01-preview and later, plus beta SDK packages) |

| OneLake indexer | public preview (2024-05-01-preview, plus beta SDK packages) |

| Binary vectors support | public preview (2024-05-01-preview, plus beta SDK packages) |

Preview features are available under Supplemental Terms of Use.

| Sample | Description | Status |

|---|---|---|

| demo-python readme | A growing collection of notebooks that demonstrate aspects of vector search support, including data chunking and embedding of both text and image content and queries, using a variety of frameworks and techniques. | GA and preview |

| Sample | Description | Status |

|---|---|---|

| DotNetVectorDemo | A .NET console app that calls Azure OpenAI to vectorize data. It then calls Azure AI Search to create, load, and query vector data. | Generally available (GA) |

| DotNetIntegratedVectorizationDemo | A .NET console app that calls Azure AI Search to create an index, indexer, data source, and skillset. An Azure Storage account provides the data. Azure OpenAI is called by the skillset during indexing, and again during query execution to vectorize text queries. | Public preview |

| QuantizationAndStorageOptions | A .NET console app that demonstrates narrow data types and built-in scalar quantization, reducing vector index size in memory and on disk. It also disables storage of vectors returned in query response, which you don't need if you're not returning vectors in a query. | Public preview |

| Sample | Description | Status |

|---|---|---|

| demo-vectors | A Java console app that calls Azure OpenAI to vectorize data. It then calls Azure AI Search to create, load, and query vector data. | GA |

| demo-integrated-vectorization | A Java console app that calls Azure AI Search to create an index, indexer, data source, and skillset. An Azure Storage account provides the data. Azure OpenAI is called by the skillset during indexing, and again during query execution to vectorize text queries. | GA and preview |

| Sample | Description | Status |

|---|---|---|

| JavaScriptVectorDemo | A single folder contains three code samples. The azure-search-vector-sample.js script calls just Azure OpenAI and is used to generate embeddings for fields in an index. The docs-text-openai-embeddings.js program is an end-to-end code sample that calls Azure OpenAI for embeddings and Azure AI Seach to create, load, and query an index that contains vectors. The query-text-openai-embeddings.js script generates an embedding for a vector query. |

GA and preview |

- azure-ai-search-lab A learning and experimentation lab for trying out various AI-enabled search scenarios in Azure. It includes web application front-end which uses Azure AI Search and Azure OpenAI to execute searches with a variety of options - ranging from simple keyword search, to semantic ranking, vector and hybrid search, and using generative AI to answer search queries in various ways. This allows you to quickly understand what each option does, how it affects the search results, and how various approaches compare against each other.

- chat-with-your-data-solution-accelerator A template that deploys multiple Azure resources for a custom chat-with-your-data solution. Use this accelerator to create a production-ready solution that implements coding best practices.

- Azure Search OpenAI Demo A sample app for the Retrieval-Augmented Generation pattern running in Azure, using Azure AI Search for retrieval and Azure OpenAI large language models to power ChatGPT-style and Q&A experiences. Use the "vectors" branch to leverage Vector retrieval.

- Azure Search OpenAI Demo - C# A sample app for the Retrieval-Augmented Generation pattern running in Azure, using Azure AI Search for retrieval and Azure OpenAI large language models to power ChatGPT-style and Q&A experiences using C#.

- Azure OpenAI Embeddings QnA with Azure Search as a Vector Store (github.com) A simple web application for a OpenAI-enabled document search. This repo uses Azure OpenAI Service for creating embeddings vectors from documents. For answering the question of a user, using Azure AI Search for retrieval and Azure OpenAI large language models to power ChatGPT-style and Q&A experiences.

- ChatGPT Retreival Plugin Azure Search Vector Database The ChatGPT Retrieval Plugin lets you easily find personal or work documents by asking questions in natural language. Azure AI Search now supported as an official vector database.

- Azure Search Vector Search Demo Web App Template A Vector Search Demo React Web App Template using Azure OpenAI for Text Search and Cognitive Services Florence Vision API for Image Search.

- Azure Cognitive Search Comparison Tool

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for azure-search-vector-samples

Similar Open Source Tools

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

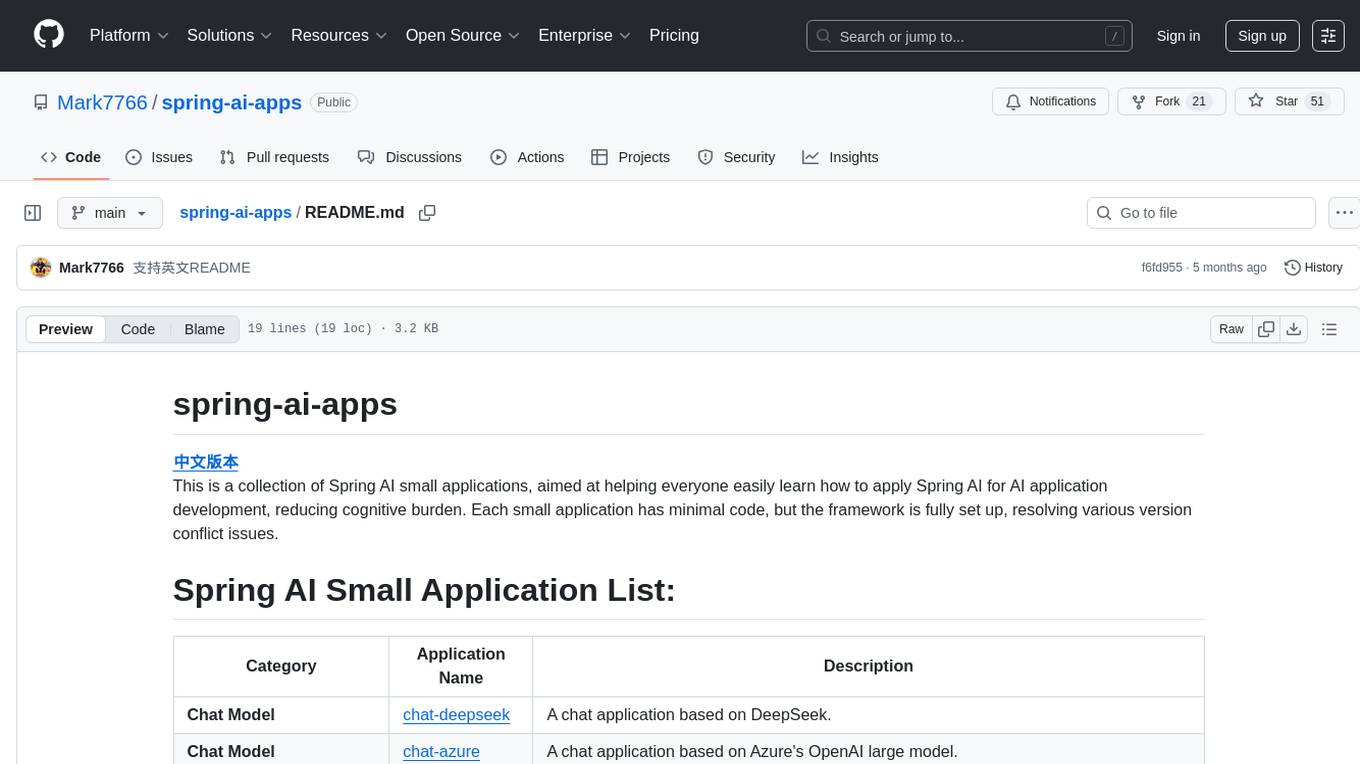

spring-ai-apps

spring-ai-apps is a collection of Spring AI small applications designed to help users easily apply Spring AI for AI application development. Each small application comes with minimal code and a fully set up framework to resolve version conflict issues.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

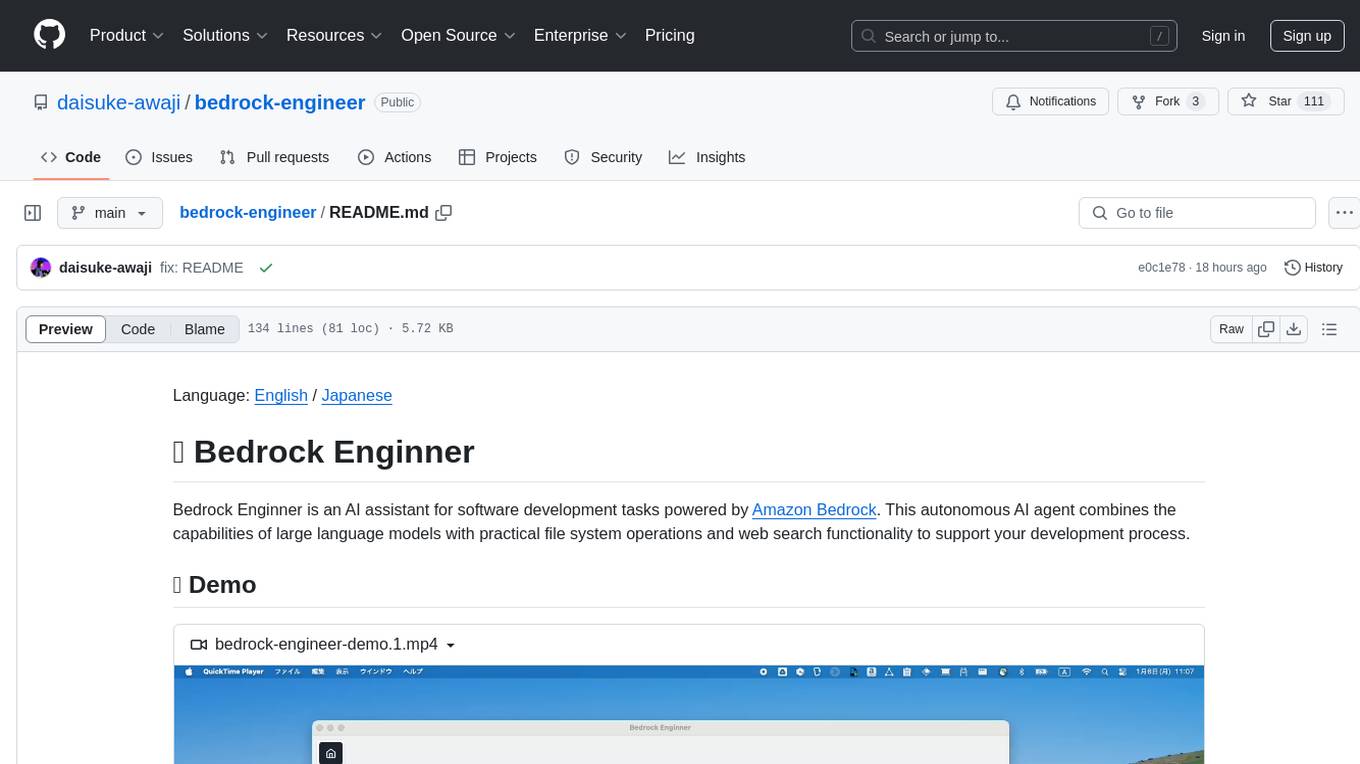

bedrock-engineer

Bedrock Engineer is an AI assistant for software development tasks powered by Amazon Bedrock. It combines large language models with file system operations and web search functionality to support development processes. The autonomous AI agent provides interactive chat, file system operations, web search, project structure management, code analysis, code generation, data analysis, agent and tool customization, chat history management, and multi-language support. Users can select agents, customize them, select tools, and customize tools. The tool also includes a website generator for React.js, Vue.js, Svelte.js, and Vanilla.js, with support for inline styling, Tailwind.css, and Material UI. Users can connect to design system data sources and generate AWS Step Functions ASL definitions.

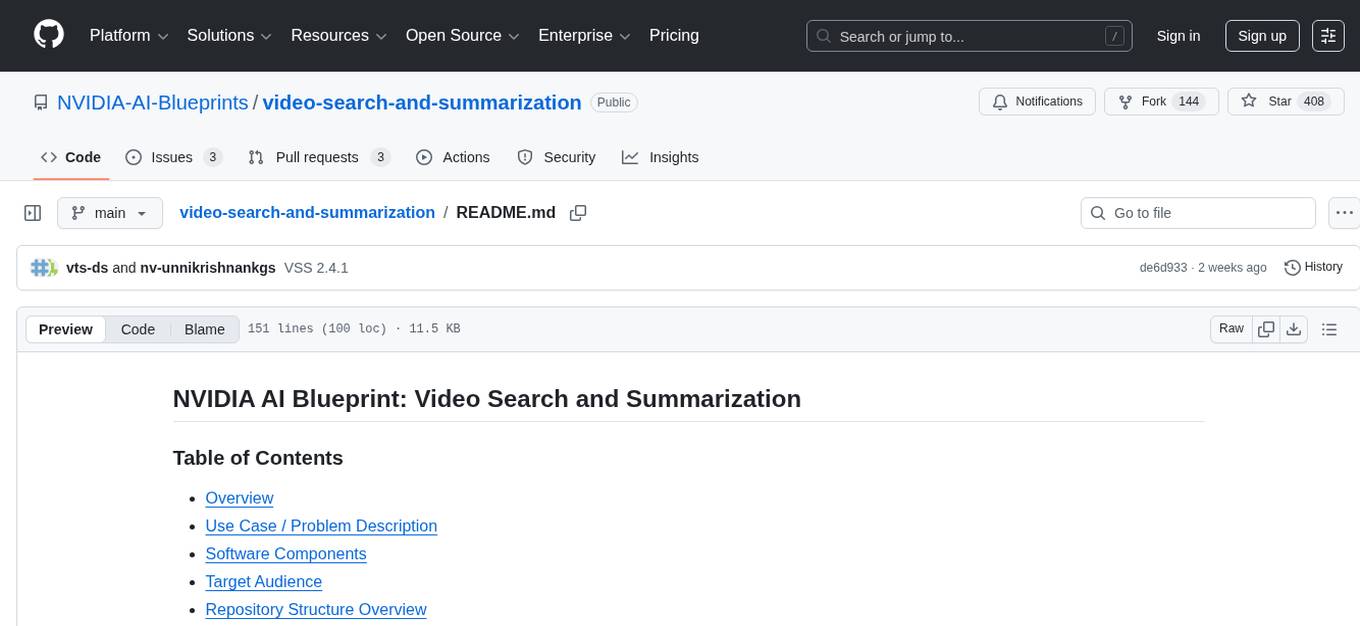

video-search-and-summarization

The NVIDIA AI Blueprint for Video Search and Summarization is a repository showcasing video search and summarization agent with NVIDIA NIM microservices. It enables industries to make better decisions faster by providing insightful, accurate, and interactive video analytics AI agents. These agents can perform tasks like video summarization and visual question-answering, unlocking new application possibilities. The repository includes software components like NIM microservices, ingestion pipeline, and CA-RAG module, offering a comprehensive solution for analyzing and summarizing large volumes of video data. The target audience includes video analysts, IT engineers, and GenAI developers who can benefit from the blueprint's 1-click deployment steps, easy-to-manage configurations, and customization options. The repository structure overview includes directories for deployment, source code, and training notebooks, along with documentation for detailed instructions. Hardware requirements vary based on deployment topology and dependencies like VLM and LLM, with different deployment methods such as Launchable Deployment, Docker Compose Deployment, and Helm Chart Deployment provided for various use cases.

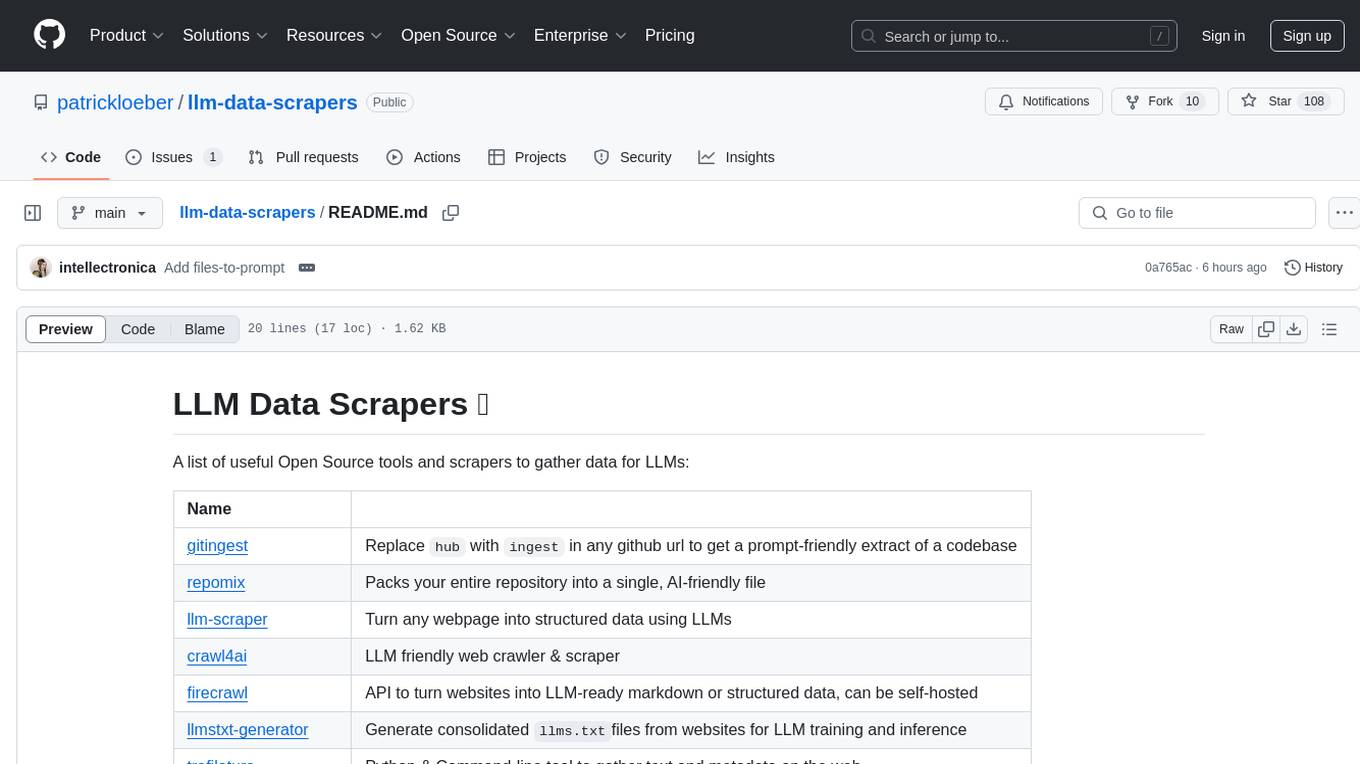

llm-data-scrapers

LLM Data Scrapers is a collection of open source tools and scrapers designed to gather data for Large Language Models (LLMs). The repository includes various tools such as gitingest for extracting codebases, repomix for packing repositories into AI-friendly files, llm-scraper for converting webpages into structured data, crawl4ai for web crawling, and firecrawl for turning websites into LLM-ready markdown or structured data. Additionally, the repository offers tools like llmstxt-generator for generating training data, trafilatura for gathering web text and metadata, RepoToTextForLLMs for fetching repo content, marker for converting PDFs, reader for converting URLs to LLM-friendly inputs, and files-to-prompt for concatenating files into prompts for LLMs.

dotnet-ai-workshop

The .NET AI Workshop is a comprehensive guide designed to help developers add AI features to .NET applications. It covers various AI-based features such as classification, summarization, data extraction/cleaning, anomaly detection, translation, sentiment detection, semantic search, Q&A chatbots, and voice assistants. The workshop is tailored for developers new to AI in .NET applications, focusing on the usage of AI services without the need for prior AI technology knowledge. It provides examples using .NET and C# with a focus on AI topics, aiming to enhance productivity and automation in applications.

free-for-life

A massive list including a huge amount of products and services that are completely free! ⭐ Star on GitHub • 🤝 Contribute # Table of Contents * APIs, Data & ML * Artificial Intelligence * BaaS * Code Editors * Code Generation * DNS * Databases * Design & UI * Domains * Email * Font * For Students * Forms * Linux Distributions * Messaging & Streaming * PaaS * Payments & Billing * SSL

arthur-engine

The Arthur Engine is a comprehensive tool for monitoring and governing AI/ML workloads. It provides evaluation and benchmarking of machine learning models, guardrails enforcement, and extensibility for fitting into various application architectures. With support for a wide range of evaluation metrics and customizable features, the tool aims to improve model understanding, optimize generative AI outputs, and prevent data-security and compliance risks. Key features include real-time guardrails, model performance monitoring, feature importance visualization, error breakdowns, and support for custom metrics and models integration.

aiops-modules

AIOps Modules is a collection of reusable Infrastructure as Code (IAC) modules that work with SeedFarmer CLI. The modules are decoupled and can be aggregated using GitOps principles to achieve desired use cases, removing heavy lifting for end users. They must be generic for reuse in Machine Learning and Foundation Model Operations domain, adhering to SeedFarmer Guide structure. The repository includes deployment steps, project manifests, and various modules for SageMaker, Mlflow, FMOps/LLMOps, MWAA, Step Functions, EKS, and example use cases. It also supports Industry Data Framework (IDF) and Autonomous Driving Data Framework (ADDF) Modules.

generative-ai-cdk-constructs

The AWS Generative AI Constructs Library is an open-source extension of the AWS Cloud Development Kit (AWS CDK) that provides multi-service, well-architected patterns for quickly defining solutions in code to create predictable and repeatable infrastructure, called constructs. The goal of AWS Generative AI CDK Constructs is to help developers build generative AI solutions using pattern-based definitions for their architecture. The patterns defined in AWS Generative AI CDK Constructs are high level, multi-service abstractions of AWS CDK constructs that have default configurations based on well-architected best practices. The library is organized into logical modules using object-oriented techniques to create each architectural pattern model.

aip-community-registry

AIP Community Registry is a collection of community-built applications and projects leveraging Palantir's AIP Platform. It showcases real-world implementations from developers using AIP in production. The registry features various solutions demonstrating practical implementations and integration patterns across different use cases.

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

crewAI-quickstart

CrewAI quickstart is a small project providing starter templates for an easy start with CrewAI. It includes notebooks, Python scripts, GUI with Streamlit, and Local LLMs for various tasks like web search, CSV lookup, web scraping, PDF search, and more. Contributions are welcome to enhance the project.

GenAIExamples

This project provides a collective list of Generative AI (GenAI) and Retrieval-Augmented Generation (RAG) examples such as chatbot with question and answering (ChatQnA), code generation (CodeGen), document summary (DocSum), etc.

applied-ai-engineering-samples

The Google Cloud Applied AI Engineering repository provides reference guides, blueprints, code samples, and hands-on labs developed by the Google Cloud Applied AI Engineering team. It contains resources for Generative AI on Vertex AI, including code samples and hands-on labs demonstrating the use of Generative AI models and tools in Vertex AI. Additionally, it offers reference guides and blueprints that compile best practices and prescriptive guidance for running large-scale AI/ML workloads on Google Cloud AI/ML infrastructure.

For similar tasks

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

blockoli

Blockoli is a high-performance tool for code indexing, embedding generation, and semantic search tool for use with LLMs. It is built in Rust and uses the ASTerisk crate for semantic code parsing. Blockoli allows you to efficiently index, store, and search code blocks and their embeddings using vector similarity. Key features include indexing code blocks from a codebase, generating vector embeddings for code blocks using a pre-trained model, storing code blocks and their embeddings in a SQLite database, performing efficient similarity search on code blocks using vector embeddings, providing a REST API for easy integration with other tools and platforms, and being fast and memory-efficient due to its implementation in Rust.

client-js

The Mistral JavaScript client is a library that allows you to interact with the Mistral AI API. With this client, you can perform various tasks such as listing models, chatting with streaming, chatting without streaming, and generating embeddings. To use the client, you can install it in your project using npm and then set up the client with your API key. Once the client is set up, you can use it to perform the desired tasks. For example, you can use the client to chat with a model by providing a list of messages. The client will then return the response from the model. You can also use the client to generate embeddings for a given input. The embeddings can then be used for various downstream tasks such as clustering or classification.

fastllm

A collection of LLM services you can self host via docker or modal labs to support your applications development. The goal is to provide docker containers or modal labs deployments of common patterns when using LLMs and endpoints to integrate easily with existing codebases using the openai api. It supports GPT4all's embedding api, JSONFormer api for chat completion, Cross Encoders based on sentence transformers, and provides documentation using MkDocs.

openai-kotlin

OpenAI Kotlin API client is a Kotlin client for OpenAI's API with multiplatform and coroutines capabilities. It allows users to interact with OpenAI's API using Kotlin programming language. The client supports various features such as models, chat, images, embeddings, files, fine-tuning, moderations, audio, assistants, threads, messages, and runs. It also provides guides on getting started, chat & function call, file source guide, and assistants. Sample apps are available for reference, and troubleshooting guides are provided for common issues. The project is open-source and licensed under the MIT license, allowing contributions from the community.

llm

LLM is a CLI utility and Python library for interacting with Large Language Models, both via remote APIs and models that can be installed and run on your own machine. It allows users to run prompts from the command-line, store results in SQLite, generate embeddings, and more. The tool supports self-hosted language models via plugins and provides access to remote and local models. Users can install plugins to access models by different providers, including models that can be installed and run on their own device. LLM offers various options for running Mistral models in the terminal and enables users to start chat sessions with models. Additionally, users can use a system prompt to provide instructions for processing input to the tool.

GenAI-Showcase

The Generative AI Use Cases Repository showcases a wide range of applications in generative AI, including Retrieval-Augmented Generation (RAG), AI Agents, and industry-specific use cases. It provides practical notebooks and guidance on utilizing frameworks such as LlamaIndex and LangChain, and demonstrates how to integrate models from leading AI research companies like Anthropic and OpenAI.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.