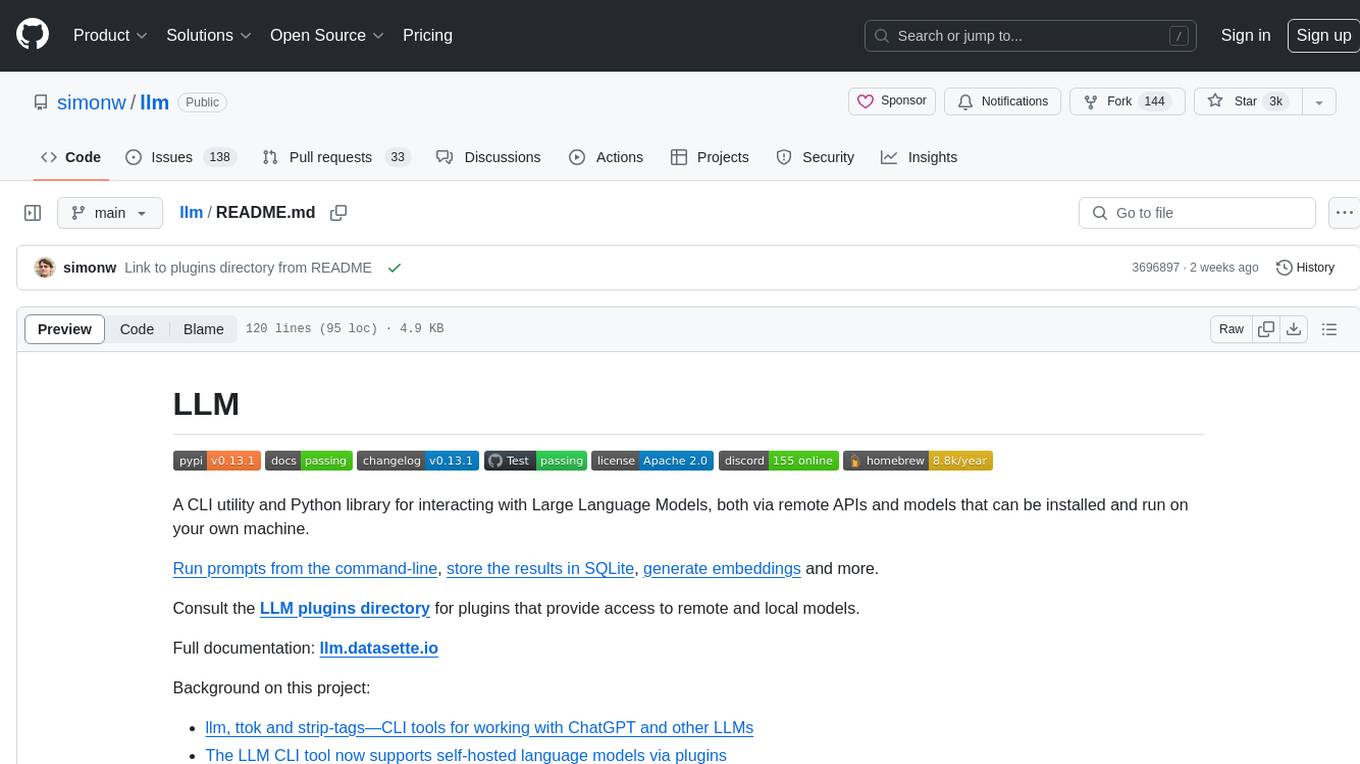

llm

Access large language models from the command-line

Stars: 6907

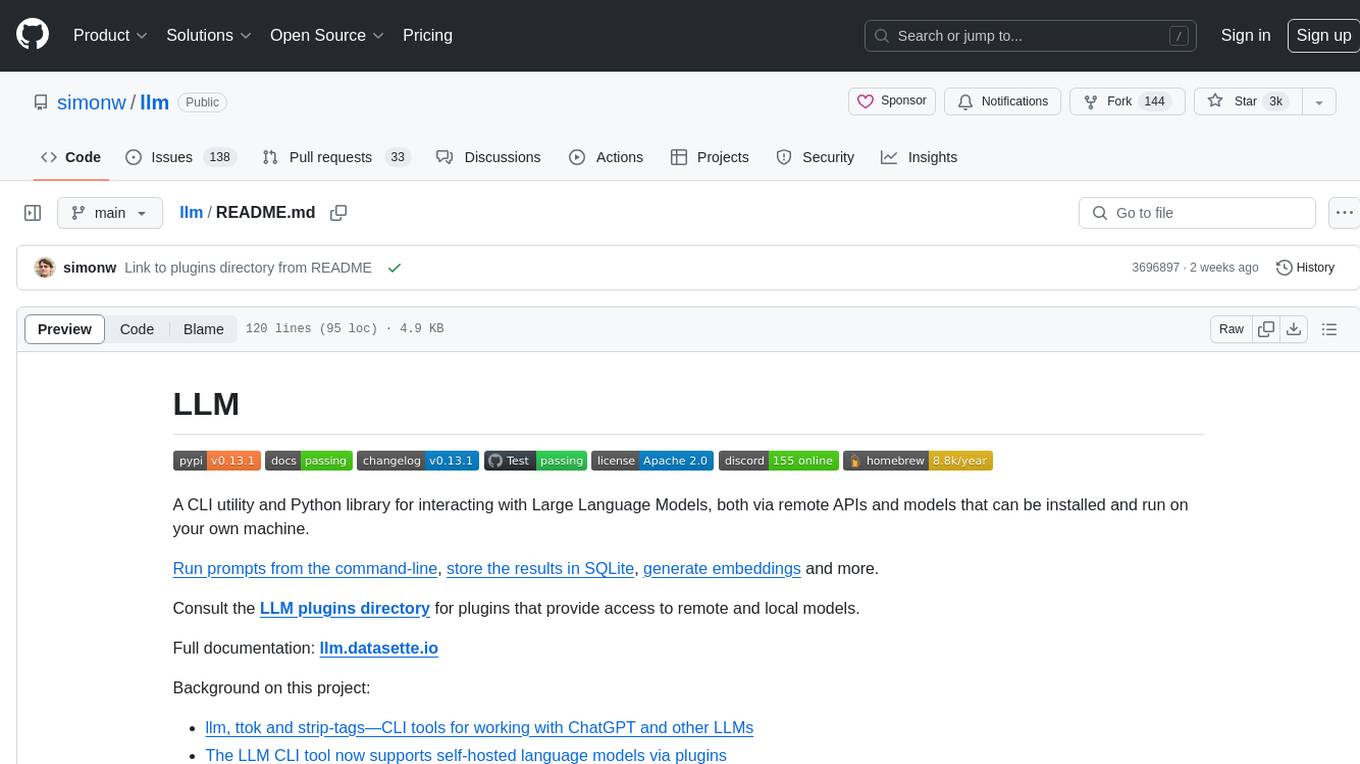

LLM is a CLI utility and Python library for interacting with Large Language Models, both via remote APIs and models that can be installed and run on your own machine. It allows users to run prompts from the command-line, store results in SQLite, generate embeddings, and more. The tool supports self-hosted language models via plugins and provides access to remote and local models. Users can install plugins to access models by different providers, including models that can be installed and run on their own device. LLM offers various options for running Mistral models in the terminal and enables users to start chat sessions with models. Additionally, users can use a system prompt to provide instructions for processing input to the tool.

README:

A CLI utility and Python library for interacting with Large Language Models, both via remote APIs and models that can be installed and run on your own machine.

Run prompts from the command-line, store the results in SQLite, generate embeddings and more.

Consult the LLM plugins directory for plugins that provide access to remote and local models.

Full documentation: llm.datasette.io

Background on this project:

- llm, ttok and strip-tags—CLI tools for working with ChatGPT and other LLMs

- The LLM CLI tool now supports self-hosted language models via plugins

- Accessing Llama 2 from the command-line with the llm-replicate plugin

- Run Llama 2 on your own Mac using LLM and Homebrew

- Catching up on the weird world of LLMs

- LLM now provides tools for working with embeddings

- Build an image search engine with llm-clip, chat with models with llm chat

- Many options for running Mistral models in your terminal using LLM

Install this tool using pip:

pip install llmOr using Homebrew:

brew install llmDetailed installation instructions.

If you have an OpenAI API key you can get started using the OpenAI models right away.

As an alternative to OpenAI, you can install plugins to access models by other providers, including models that can be installed and run on your own device.

Save your OpenAI API key like this:

llm keys set openaiThis will prompt you for your key like so:

Enter key: <paste here>

Now that you've saved a key you can run a prompt like this:

llm "Five cute names for a pet penguin"1. Waddles

2. Pebbles

3. Bubbles

4. Flappy

5. Chilly

Read the usage instructions for more.

LLM plugins can add support for alternative models, including models that run on your own machine.

To download and run Mistral 7B Instruct locally, you can install the llm-gpt4all plugin:

llm install llm-gpt4allThen run this command to see which models it makes available:

llm modelsgpt4all: all-MiniLM-L6-v2-f16 - SBert, 43.76MB download, needs 1GB RAM

gpt4all: orca-mini-3b-gguf2-q4_0 - Mini Orca (Small), 1.84GB download, needs 4GB RAM

gpt4all: mistral-7b-instruct-v0 - Mistral Instruct, 3.83GB download, needs 8GB RAM

...

Each model file will be downloaded once the first time you use it. Try Mistral out like this:

llm -m mistral-7b-instruct-v0 'difference between a pelican and a walrus'You can also start a chat session with the model using the llm chat command:

llm chat -m mistral-7b-instruct-v0Chatting with mistral-7b-instruct-v0

Type 'exit' or 'quit' to exit

Type '!multi' to enter multiple lines, then '!end' to finish

>

You can use the -s/--system option to set a system prompt, providing instructions for processing other input to the tool.

To describe how the code in a file works, try this:

cat mycode.py | llm -s "Explain this code"For help, run:

llm --help

You can also use:

python -m llm --help

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llm

Similar Open Source Tools

llm

LLM is a CLI utility and Python library for interacting with Large Language Models, both via remote APIs and models that can be installed and run on your own machine. It allows users to run prompts from the command-line, store results in SQLite, generate embeddings, and more. The tool supports self-hosted language models via plugins and provides access to remote and local models. Users can install plugins to access models by different providers, including models that can be installed and run on their own device. LLM offers various options for running Mistral models in the terminal and enables users to start chat sessions with models. Additionally, users can use a system prompt to provide instructions for processing input to the tool.

h2o-llmstudio

H2O LLM Studio is a framework and no-code GUI designed for fine-tuning state-of-the-art large language models (LLMs). With H2O LLM Studio, you can easily and effectively fine-tune LLMs without the need for any coding experience. The GUI is specially designed for large language models, and you can finetune any LLM using a large variety of hyperparameters. You can also use recent finetuning techniques such as Low-Rank Adaptation (LoRA) and 8-bit model training with a low memory footprint. Additionally, you can use Reinforcement Learning (RL) to finetune your model (experimental), use advanced evaluation metrics to judge generated answers by the model, track and compare your model performance visually, and easily export your model to the Hugging Face Hub and share it with the community.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

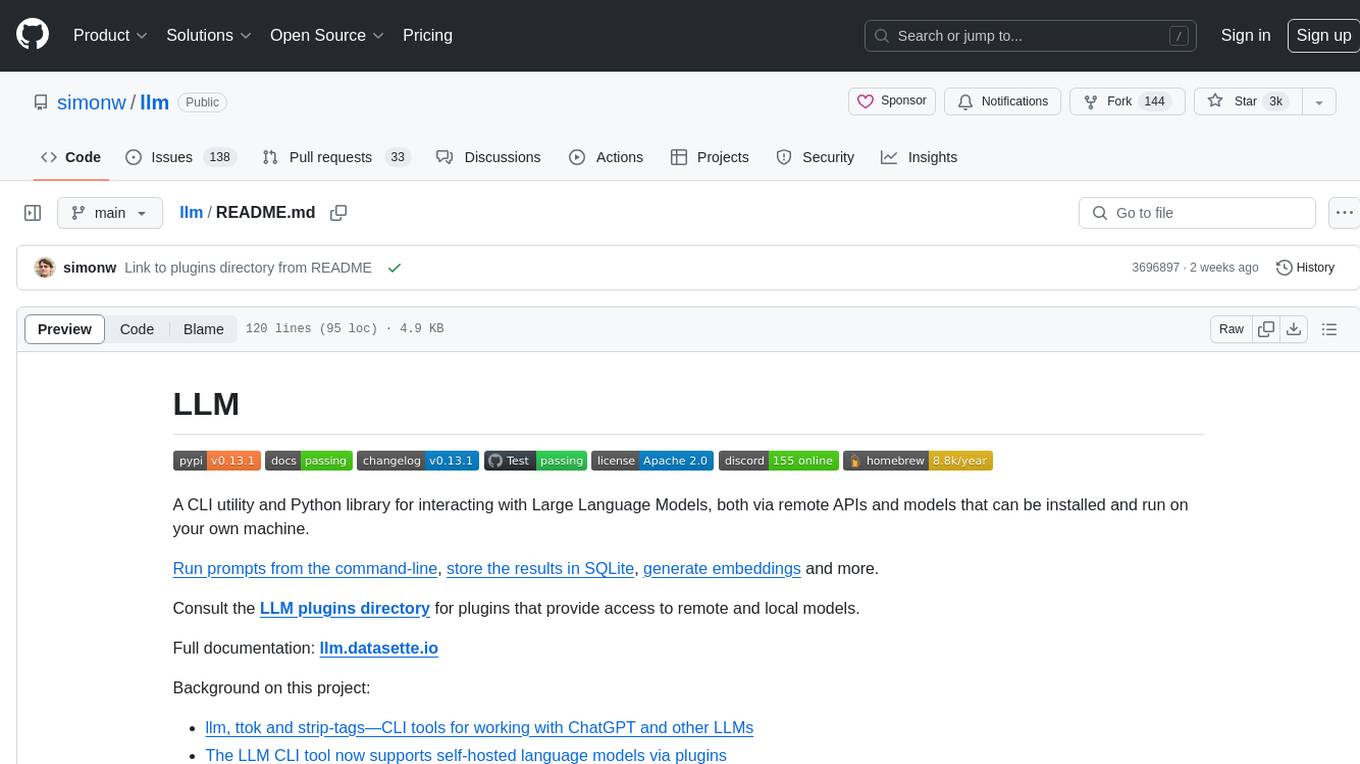

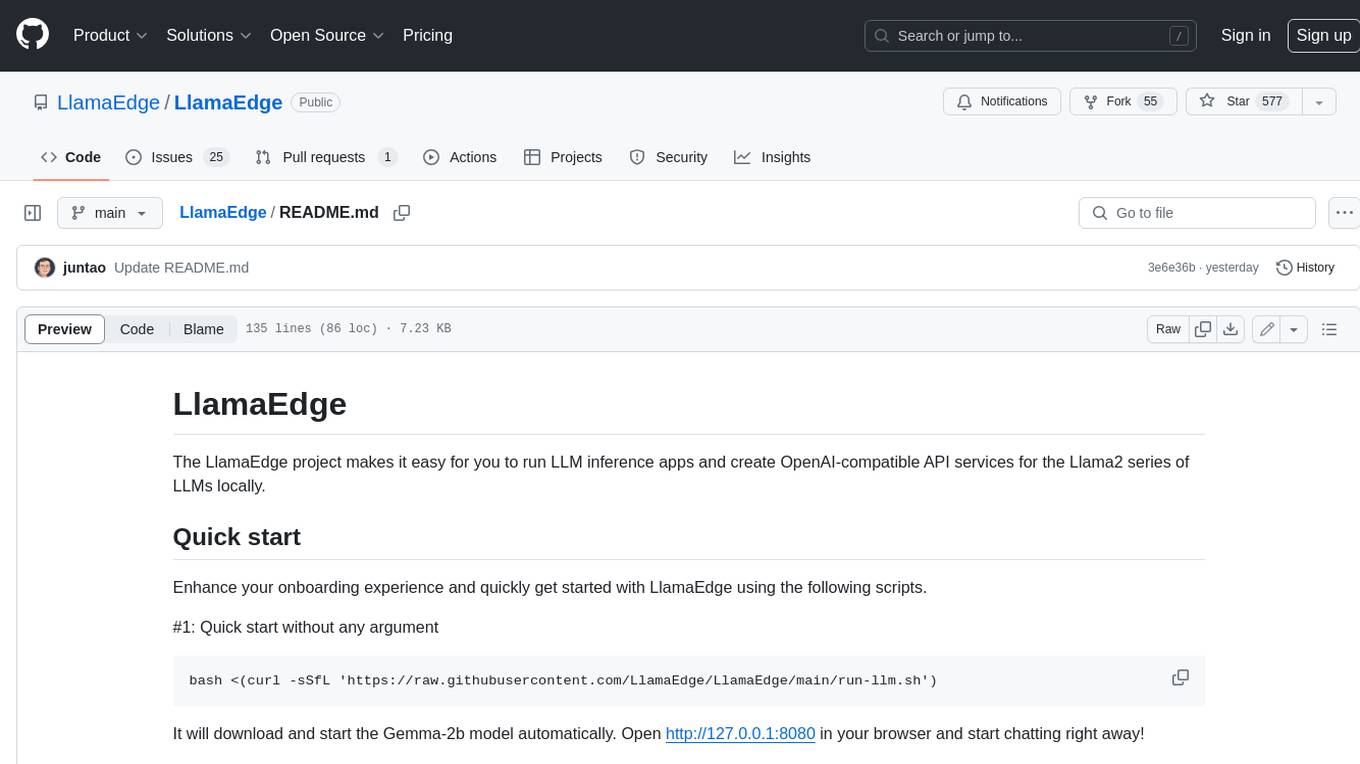

LlamaEdge

The LlamaEdge project makes it easy to run LLM inference apps and create OpenAI-compatible API services for the Llama2 series of LLMs locally. It provides a Rust+Wasm stack for fast, portable, and secure LLM inference on heterogeneous edge devices. The project includes source code for text generation, chatbot, and API server applications, supporting all LLMs based on the llama2 framework in the GGUF format. LlamaEdge is committed to continuously testing and validating new open-source models and offers a list of supported models with download links and startup commands. It is cross-platform, supporting various OSes, CPUs, and GPUs, and provides troubleshooting tips for common errors.

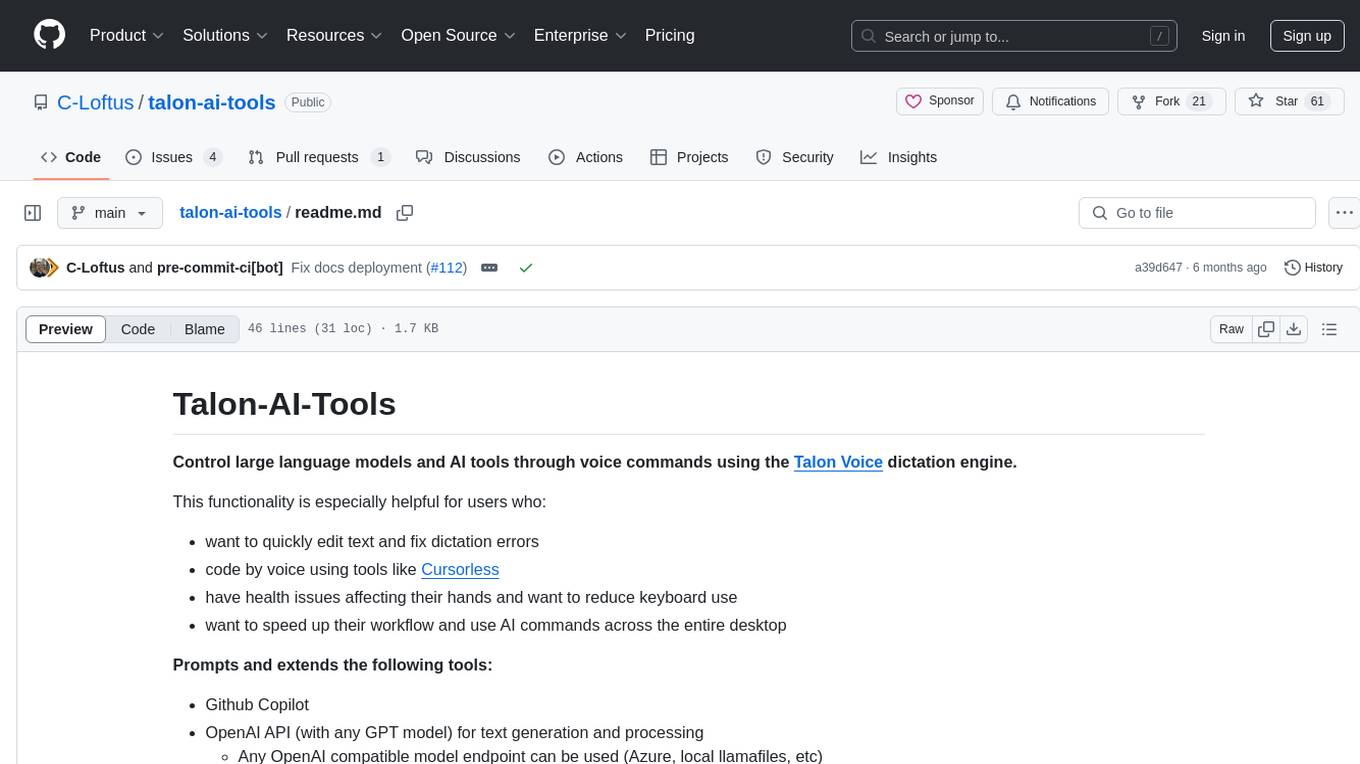

talon-ai-tools

Control large language models and AI tools through voice commands using the Talon Voice dictation engine. This tool is designed to help users quickly edit text, code by voice, reduce keyboard use for those with health issues, and speed up workflow by using AI commands across the desktop. It prompts and extends tools like Github Copilot and OpenAI API for text and image generation. Users can set up the tool by downloading the repo, obtaining an OpenAI API key, and customizing the endpoint URL for preferred models. The tool can be used without an OpenAI key and can be exclusively used with Copilot for those not needing LLM integration.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

LLM_AppDev-HandsOn

This repository showcases how to build a simple LLM-based chatbot for answering questions based on documents using retrieval augmented generation (RAG) technique. It also provides guidance on deploying the chatbot using Podman or on the OpenShift Container Platform. The workshop associated with this repository introduces participants to LLMs & RAG concepts and demonstrates how to customize the chatbot for specific purposes. The software stack relies on open-source tools like streamlit, LlamaIndex, and local open LLMs via Ollama, making it accessible for GPU-constrained environments.

IOPaint

IOPaint is a free and open-source inpainting & outpainting tool powered by SOTA AI model. It supports various AI models to perform erase, inpainting, or outpainting tasks. Users can remove unwanted objects, defects, watermarks, or people from images using erase models. Additionally, diffusion models can replace objects or perform outpainting. The tool also offers plugins for interactive object segmentation, background removal, anime segmentation, super resolution, face restoration, and file management. IOPaint provides a web UI for easy access to the latest AI models and supports batch processing of images through the command line. Developers can contribute to the project by installing front-end dependencies, setting up the backend, and starting the development environment for both front-end and back-end components.

LLMFlex

LLMFlex is a python package designed for developing AI applications with local Large Language Models (LLMs). It provides classes to load LLM models, embedding models, and vector databases to create AI-powered solutions with prompt engineering and RAG techniques. The package supports multiple LLMs with different generation configurations, embedding toolkits, vector databases, chat memories, prompt templates, custom tools, and a chatbot frontend interface. Users can easily create LLMs, load embeddings toolkit, use tools, chat with models in a Streamlit web app, and serve an OpenAI API with a GGUF model. LLMFlex aims to offer a simple interface for developers to work with LLMs and build private AI solutions using local resources.

minimal-llm-ui

This minimalistic UI serves as a simple interface for Ollama models, enabling real-time interaction with Local Language Models (LLMs). Users can chat with models, switch between different LLMs, save conversations, and create parameter-driven prompt templates. The tool is built using React, Next.js, and Tailwind CSS, with seamless integration with LangchainJs and Ollama for efficient model switching and context storage.

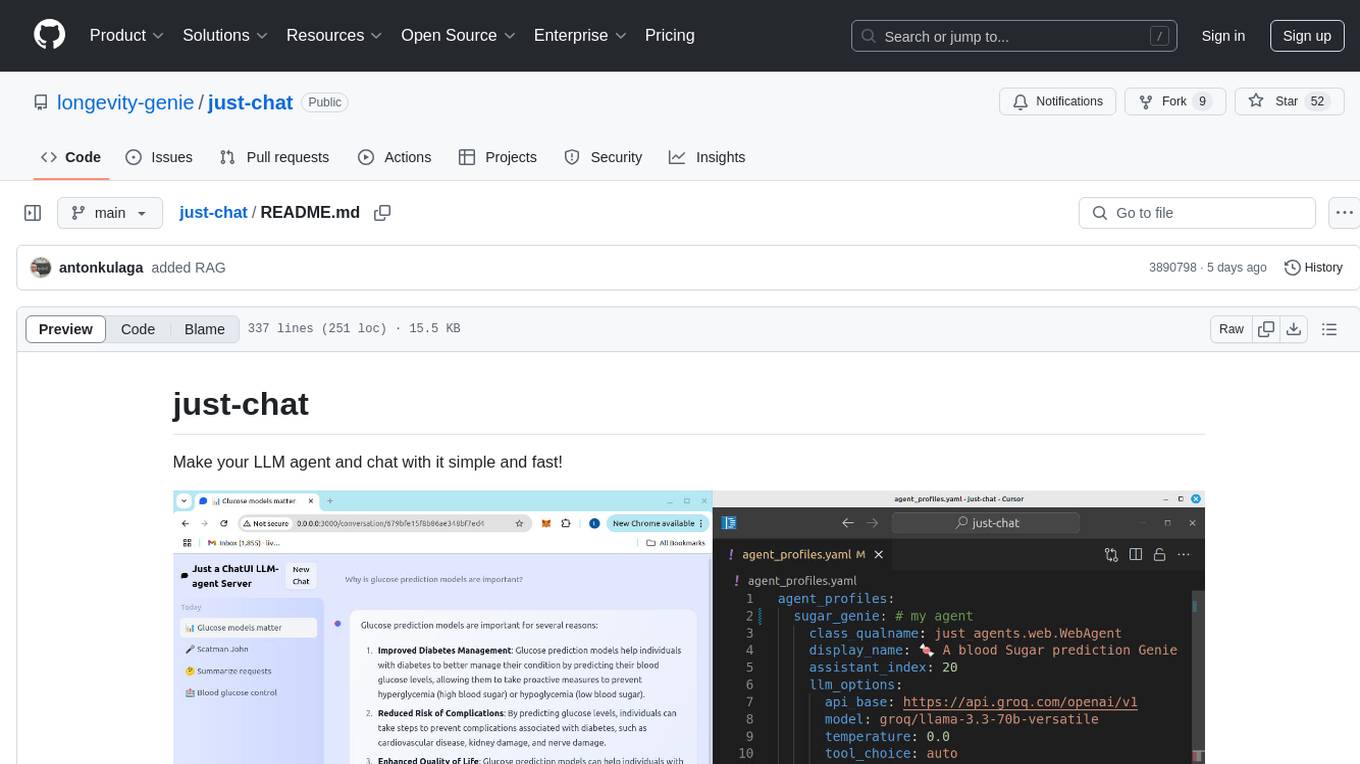

just-chat

Just-Chat is a containerized application that allows users to easily set up and chat with their AI agent. Users can customize their AI assistant using a YAML file, add new capabilities with Python tools, and interact with the agent through a chat web interface. The tool supports various modern models like DeepSeek Reasoner, ChatGPT, LLAMA3.3, etc. Users can also use semantic search capabilities with MeiliSearch to find and reference relevant information based on meaning. Just-Chat requires Docker or Podman for operation and provides detailed installation instructions for both Linux and Windows users.

whisplay-ai-chatbot

Whisplay-AI-Chatbot is a pocket-sized AI chatbot device built using a Raspberry Pi Zero 2w. It features a PiSugar Whisplay HAT with an LCD screen, on-board speaker, and microphone. Users can interact with the chatbot by pressing a button, speaking, and receiving responses, similar to a futuristic walkie-talkie. The tool supports various functionalities such as adjusting volume autonomously, resetting conversation history, local ASR and TTS capabilities, image generation, and integration with APIs like Google Gemini and Grok. It also offers support for LLM8850 AI Accelerator for offline capabilities like ASR, TTS, and LLM API. The chatbot saves conversation history and generated images in a data folder, and users can customize the tool with different enclosure cases available for Pi02 and Pi5 models.

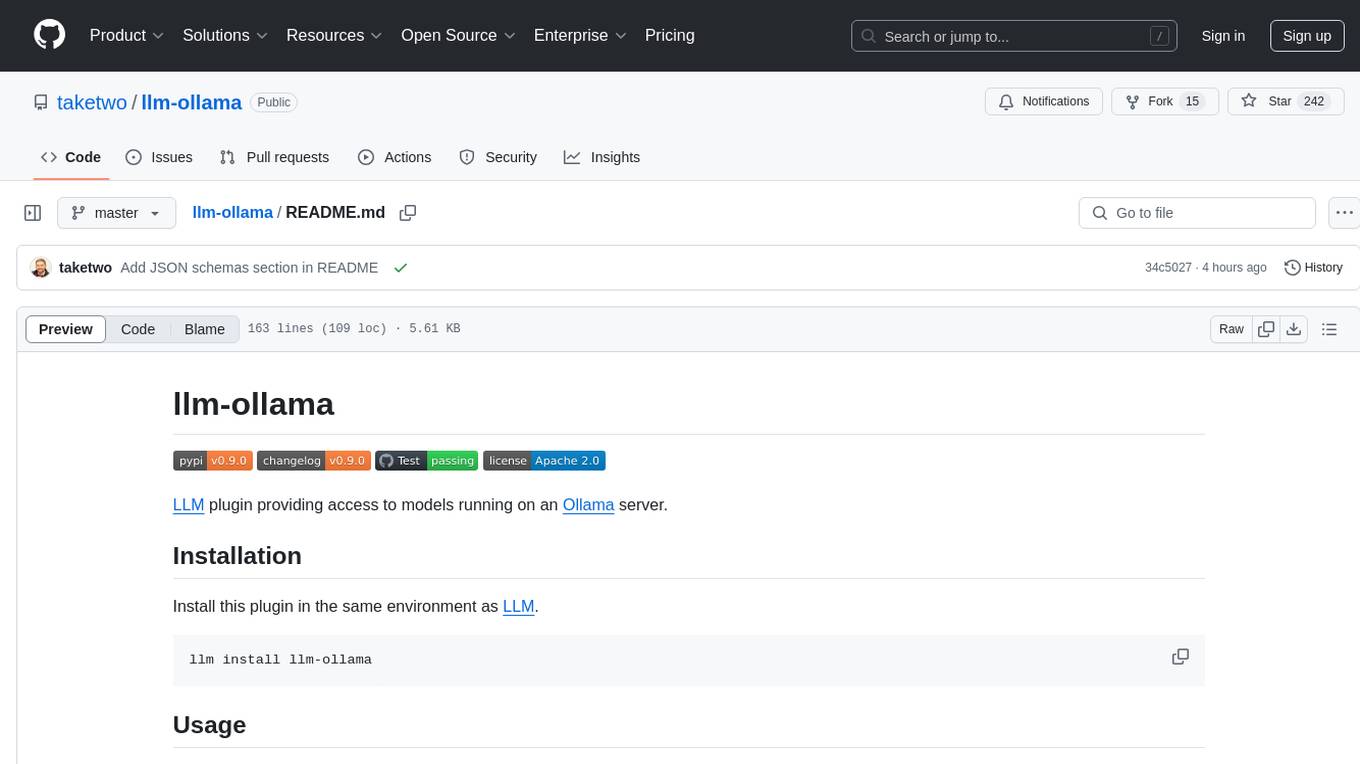

llm-ollama

LLM-ollama is a plugin that provides access to models running on an Ollama server. It allows users to query the Ollama server for a list of models, register them with LLM, and use them for prompting, chatting, and embedding. The plugin supports image attachments, embeddings, JSON schemas, async models, model aliases, and model options. Users can interact with Ollama models through the plugin in a seamless and efficient manner.

TypeGPT

TypeGPT is a Python application that enables users to interact with ChatGPT or Google Gemini from any text field in their operating system using keyboard shortcuts. It provides global accessibility, keyboard shortcuts for communication, and clipboard integration for larger text inputs. Users need to have Python 3.x installed along with specific packages and API keys from OpenAI for ChatGPT access. The tool allows users to run the program normally or in the background, manage processes, and stop the program. Users can use keyboard shortcuts like `/ask`, `/see`, `/stop`, `/chatgpt`, `/gemini`, `/check`, and `Shift + Cmd + Enter` to interact with the application in any text field. Customization options are available by modifying files like `keys.txt` and `system_prompt.txt`. Contributions are welcome, and future plans include adding support for other APIs and a user-friendly GUI.

nobodywho

NobodyWho is a plugin for the Godot game engine that enables interaction with local LLMs for interactive storytelling. Users can install it from Godot editor or GitHub releases page, providing their own LLM in GGUF format. The plugin consists of `NobodyWhoModel` node for model file, `NobodyWhoChat` node for chat interaction, and `NobodyWhoEmbedding` node for generating embeddings. It offers a programming interface for sending text to LLM, receiving responses, and starting the LLM worker.

LARS

LARS is an application that enables users to run Large Language Models (LLMs) locally on their devices, upload their own documents, and engage in conversations where the LLM grounds its responses with the uploaded content. The application focuses on Retrieval Augmented Generation (RAG) to increase accuracy and reduce AI-generated inaccuracies. LARS provides advanced citations, supports various file formats, allows follow-up questions, provides full chat history, and offers customization options for LLM settings. Users can force enable or disable RAG, change system prompts, and tweak advanced LLM settings. The application also supports GPU-accelerated inferencing, multiple embedding models, and text extraction methods. LARS is open-source and aims to be the ultimate RAG-centric LLM application.

For similar tasks

llm

LLM is a CLI utility and Python library for interacting with Large Language Models, both via remote APIs and models that can be installed and run on your own machine. It allows users to run prompts from the command-line, store results in SQLite, generate embeddings, and more. The tool supports self-hosted language models via plugins and provides access to remote and local models. Users can install plugins to access models by different providers, including models that can be installed and run on their own device. LLM offers various options for running Mistral models in the terminal and enables users to start chat sessions with models. Additionally, users can use a system prompt to provide instructions for processing input to the tool.

blockoli

Blockoli is a high-performance tool for code indexing, embedding generation, and semantic search tool for use with LLMs. It is built in Rust and uses the ASTerisk crate for semantic code parsing. Blockoli allows you to efficiently index, store, and search code blocks and their embeddings using vector similarity. Key features include indexing code blocks from a codebase, generating vector embeddings for code blocks using a pre-trained model, storing code blocks and their embeddings in a SQLite database, performing efficient similarity search on code blocks using vector embeddings, providing a REST API for easy integration with other tools and platforms, and being fast and memory-efficient due to its implementation in Rust.

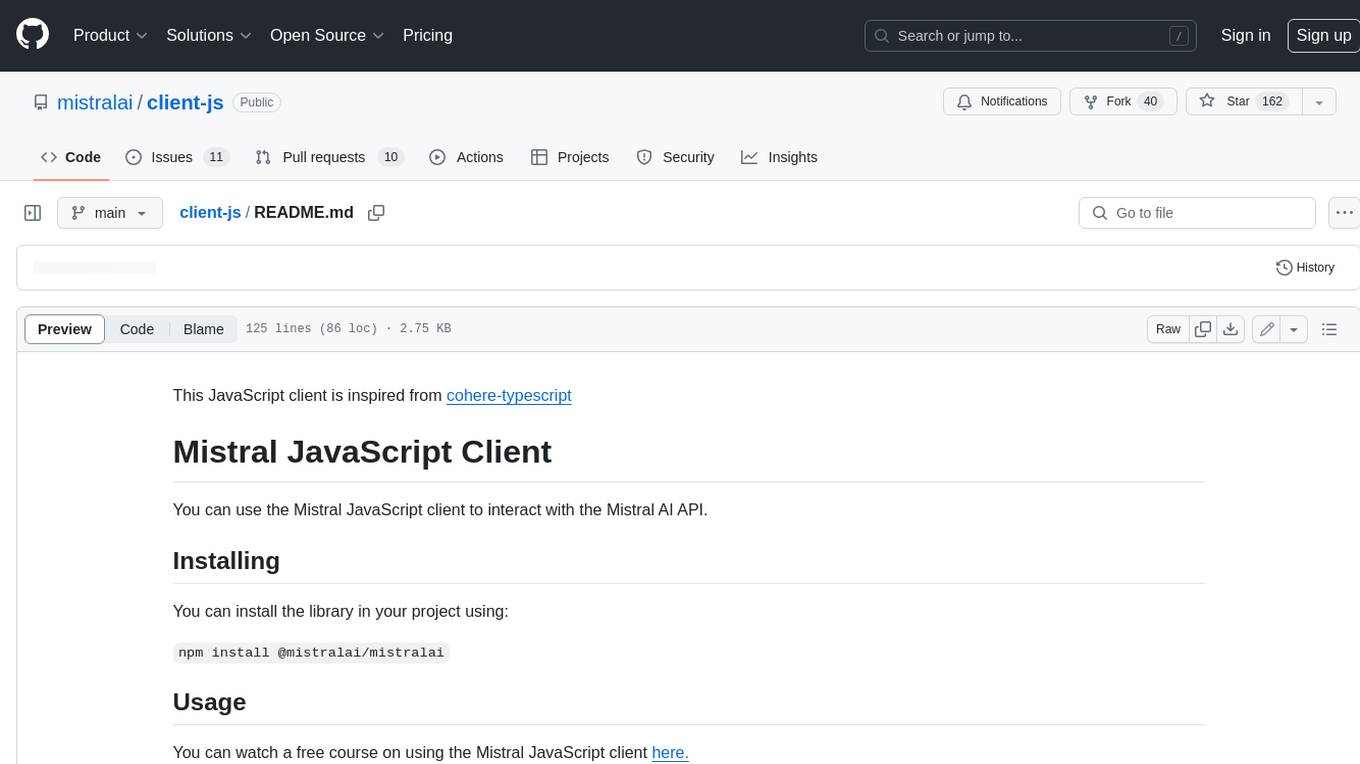

client-js

The Mistral JavaScript client is a library that allows you to interact with the Mistral AI API. With this client, you can perform various tasks such as listing models, chatting with streaming, chatting without streaming, and generating embeddings. To use the client, you can install it in your project using npm and then set up the client with your API key. Once the client is set up, you can use it to perform the desired tasks. For example, you can use the client to chat with a model by providing a list of messages. The client will then return the response from the model. You can also use the client to generate embeddings for a given input. The embeddings can then be used for various downstream tasks such as clustering or classification.

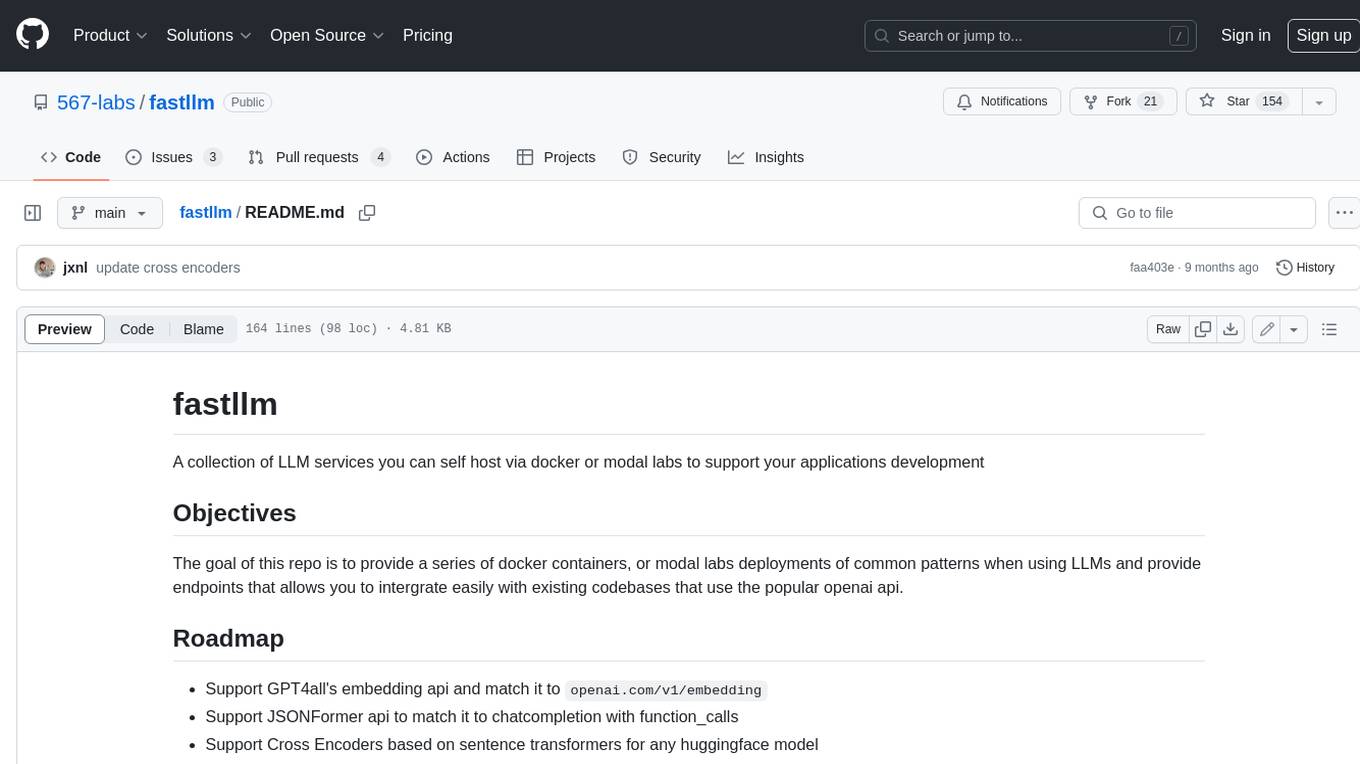

fastllm

A collection of LLM services you can self host via docker or modal labs to support your applications development. The goal is to provide docker containers or modal labs deployments of common patterns when using LLMs and endpoints to integrate easily with existing codebases using the openai api. It supports GPT4all's embedding api, JSONFormer api for chat completion, Cross Encoders based on sentence transformers, and provides documentation using MkDocs.

openai-kotlin

OpenAI Kotlin API client is a Kotlin client for OpenAI's API with multiplatform and coroutines capabilities. It allows users to interact with OpenAI's API using Kotlin programming language. The client supports various features such as models, chat, images, embeddings, files, fine-tuning, moderations, audio, assistants, threads, messages, and runs. It also provides guides on getting started, chat & function call, file source guide, and assistants. Sample apps are available for reference, and troubleshooting guides are provided for common issues. The project is open-source and licensed under the MIT license, allowing contributions from the community.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

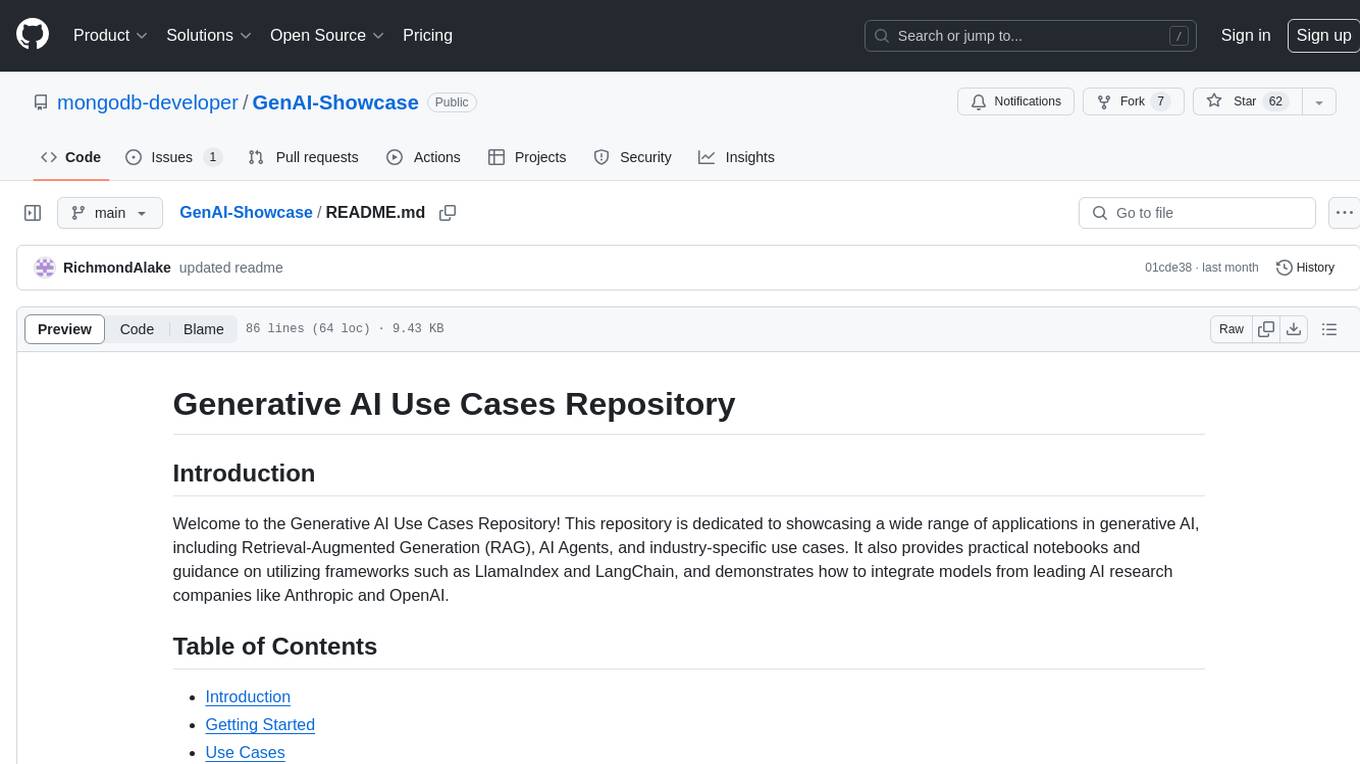

GenAI-Showcase

The Generative AI Use Cases Repository showcases a wide range of applications in generative AI, including Retrieval-Augmented Generation (RAG), AI Agents, and industry-specific use cases. It provides practical notebooks and guidance on utilizing frameworks such as LlamaIndex and LangChain, and demonstrates how to integrate models from leading AI research companies like Anthropic and OpenAI.

warc-gpt

WARC-GPT is an experimental retrieval augmented generation pipeline for web archive collections. It allows users to interact with WARC files, extract text, generate text embeddings, visualize embeddings, and interact with a web UI and API. The tool is highly customizable, supporting various LLMs, providers, and embedding models. Users can configure the application using environment variables, ingest WARC files, start the server, and interact with the web UI and API to search for content and generate text completions. WARC-GPT is designed for exploration and experimentation in exploring web archives using AI.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.