aiops-modules

AIOps modules is a collection of reusable Infrastructure as Code (IaC) modules for Machine Learning (ML), Foundation Models (FM), Large Language Models (LLM), and GenAI development and operations on AWS.

Stars: 101

AIOps Modules is a collection of reusable Infrastructure as Code (IaC) modules that work with the SeedFarmer CLI. The modules are decoupled and can be aggregated using GitOps principles to achieve desired use cases, removing heavy lifting for end users. They must be generic for reuse in the Machine Learning and Foundation Model Operations domain and adhere to the SeedFarmer Guide structure. The repository includes deployment steps, project manifests, and various modules for SageMaker, Mlflow, FMOps/LLMOps, MWAA, Step Functions, EKS, and example use cases. It also supports Industry Data Framework (IDF) and Autonomous Driving Data Framework (ADDF) Modules.

README:

AIOps modules is a collection of reusable Infrastructure as Code (IaC) modules that work with the SeedFarmer CLI. Please see the DOCS for all things seed-farmer.

The modules in this repository are decoupled from each other and can be aggregated using the GitOps (manifest file) principles provided by seed-farmer to achieve the desired use cases. By providing hardened modules, the repository removes the undifferentiated heavy lifting for end users and lets them focus on building business value on top of those modules.
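As a minimal sketch of what "decoupled but aggregated through a manifest" can mean in practice, the Python below models a deployment as ordered groups of modules, where one module reads a value exported by a module in an earlier group. It is not the seed-farmer API or its manifest schema: the class names, the `MetadataRef` shape, the module paths, and the `VpcId` key are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple, Union


@dataclass(frozen=True)
class MetadataRef:
    """Pointer to a value exported by another module (hypothetical shape)."""
    group: str
    module: str
    key: str


@dataclass
class Module:
    name: str
    path: str  # hypothetical location of the module code
    parameters: Dict[str, Union[str, MetadataRef]] = field(default_factory=dict)


@dataclass
class Group:
    name: str
    modules: List[Module]


def resolve_parameters(
    groups: List[Group],
    exported: Dict[Tuple[str, str], Dict[str, str]],
) -> Dict[str, Dict[str, str]]:
    """Walk groups in order and replace each MetadataRef with the exported value.

    A reference is only valid if it points at a group listed earlier,
    which mirrors the idea that groups are deployed one after another.
    """
    resolved: Dict[str, Dict[str, str]] = {}
    deployed_groups = set()
    for group in groups:
        for module in group.modules:
            params: Dict[str, str] = {}
            for key, value in module.parameters.items():
                if isinstance(value, MetadataRef):
                    if value.group not in deployed_groups:
                        raise ValueError(
                            f"{group.name}/{module.name} references group "
                            f"'{value.group}', which is not deployed earlier"
                        )
                    value = exported[(value.group, value.module)][value.key]
                params[key] = value
            resolved[f"{group.name}/{module.name}"] = params
        deployed_groups.add(group.name)
    return resolved


# Illustrative deployment: a networking group followed by a SageMaker group.
GROUPS = [
    Group("networking", [Module("vpc", "modules/network/vpc")]),
    Group(
        "sagemaker",
        [
            Module(
                "studio",
                "modules/sagemaker/studio",
                {
                    "vpc_id": MetadataRef("networking", "vpc", "VpcId"),
                    "domain_name": "demo",
                },
            )
        ],
    ),
]

# Values the networking module is assumed to have exported after deployment.
EXPORTED = {("networking", "vpc"): {"VpcId": "vpc-0123456789abcdef0"}}

RESOLVED = resolve_parameters(GROUPS, EXPORTED)
print(RESOLVED["sagemaker/studio"])
```

The point of the sketch is that the Studio module never imports the VPC module; it only consumes a named output, so either side can be swapped without touching the other.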

The modules in this repository must be generic, so that they can be reused in the Machine Learning and Foundation Model Operations domain without affiliation to any one particular project.

All modules in this repository adhere to the module structure defined in the SeedFarmer Guide.

See deployment steps in the Deployment Guide.

End-to-end example use cases built using modules in this repository:

| Type | Description |
|---|---|
| MLOps with Amazon SageMaker | Sets up an environment for MLOps with Amazon SageMaker. Deploys a secure Amazon SageMaker Studio Domain and provisions SageMaker Project Templates using Service Catalog, including model training and deployment. |
| Amazon SageMaker HyperPod on Amazon EKS | Deploys an Amazon SageMaker HyperPod cluster orchestrated by Amazon EKS, with FSx for Lustre for high-performance distributed training workloads. |
| Ray on Amazon Elastic Kubernetes Service (EKS) | Runs Ray on Amazon EKS. Deploys an Amazon EKS cluster, the KubeRay Ray Operator, and a Ray Cluster with autoscaling enabled. |
| Fine-tune 6B LLM (GPT-J) using Ray on Amazon EKS | Runs fine-tuning of the 6B GPT-J LLM. Deploys an Amazon EKS cluster, the KubeRay Ray Operator, and a Ray Cluster with autoscaling enabled, and runs a fine-tuning job. Related reading: "How to fine tune a 6B LLM simply and cost-effective using Ray on Amazon EKS?" |
| DeepSeek R1 on Amazon SageMaker | An example using DeepSeek R1 Distill Llama 8B on Amazon SageMaker. Deploys a VPC, an Amazon SageMaker endpoint, and an Amazon SageMaker Studio IDE. |
| Deploy a Weather Agent with Strands and Amazon EKS Auto Mode | An example using a Strands agent on Amazon EKS Auto Mode. |
| Mlflow tracking server and model registry with Amazon SageMaker | An example using Mlflow experiment tracking, model registry, and LLM tracing with Amazon SageMaker. Deploys a self-hosted Mlflow tracking server and model registry on AWS Fargate, and an Amazon SageMaker Studio Domain environment. |
| Managed Workflows with Apache Airflow (MWAA) for Machine Learning Training | An example orchestrating ML training jobs with Managed Workflows for Apache Airflow (MWAA). Deploys MWAA and an example ML training DAG. |
| MLOps with Step Functions | Automates the machine learning lifecycle using Amazon SageMaker and AWS Step Functions. |
| Bedrock Fine-Tuning with Step Functions | Continuously fine-tunes a foundation model with Amazon Bedrock fine-tuning jobs and AWS Step Functions. |
| AppSync Knowledge Base Ingestion and Question and Answering RAG | Creates a GraphQL endpoint for data ingestion and uses the ingested data as a knowledge base for a question-answering model using RAG. |

| Type | Description |
|---|---|
| SageMaker Studio Module | Provisions a secure SageMaker Studio Domain environment, creates example User Profiles for Data Scientist and Lead Data Scientist linked to IAM Roles, and adds a lifecycle config. |
| SageMaker HyperPod EKS Module | Creates a complete Amazon SageMaker HyperPod cluster infrastructure orchestrated by Amazon EKS. Deploys networking components, an EKS cluster, a HyperPod cluster, and supporting resources including FSx for Lustre for high-performance storage. |
| SageMaker Project Templates Factory Module | Provisions SageMaker Project Templates for an organization based on the specified template type. Available templates: train a model on the Abalone dataset using XGBoost; perform batch inference; multi-account model deployment; HuggingFace model import; LLM fine-tuning and evaluation. |
| SageMaker Notebook Instance Module | Creates a secure SageMaker Notebook Instance for the Data Scientist and clones the source code to the workspace. |
| SageMaker Ground Truth Labeling Module | Creates a state machine that allows labeling of image and text files uploaded to the upload bucket, using various built-in task types in SageMaker Ground Truth. |
| SageMaker Model Monitoring Module | Deploys data quality, model quality, model bias, and model explainability monitoring jobs that run against a SageMaker Endpoint. |
| SageMaker Endpoint Module | Creates a SageMaker real-time inference endpoint for the specified model package or for the latest approved model from the model package group. |
| SageMaker Custom Kernel Module | Builds a custom kernel for SageMaker Studio from a Dockerfile. |
| SageMaker Model Package Group Module | Creates a SageMaker Model Package Group to register and version SageMaker Machine Learning (ML) models, and sets up an Amazon EventBridge Rule to send model package group state change events to an Amazon EventBridge Bus. |
| SageMaker Model Package Promote Pipeline Module | Deploys a pipeline to promote SageMaker Model Packages in a multi-account setup. The pipeline can be triggered by an EventBridge rule in reaction to a SageMaker Model Package Group state change event (Approved/Rejected). Once triggered, the pipeline promotes the latest approved model package, if one is found. |
| SageMaker Model CICD Module | Creates a comprehensive CI/CD pipeline using AWS CodePipeline to build and deploy an ML model on SageMaker. |
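The promote-pipeline and endpoint rows above rely on two ideas: reacting to a model package state change event, and picking the latest approved package in a group. The sketch below illustrates both in plain Python. It assumes the event fields `source`, `detail-type`, `ModelPackageGroupName`, and `ModelApprovalStatus` as they appear in SageMaker model package state change events, uses a deliberately simplified matcher (exact values only, not the full EventBridge pattern language), and uses a hypothetical group name `my-model-group`. It is not the code used by the modules.

```python
from typing import Any, Dict, List, Optional

# Event pattern a rule could use to react to approval status changes.
# "my-model-group" is a hypothetical group name.
APPROVAL_EVENT_PATTERN: Dict[str, Any] = {
    "source": ["aws.sagemaker"],
    "detail-type": ["SageMaker Model Package State Change"],
    "detail": {
        "ModelPackageGroupName": ["my-model-group"],
        "ModelApprovalStatus": ["Approved", "Rejected"],
    },
}


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Simplified matcher: nested dicts plus lists of allowed exact values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


def latest_approved(packages: List[Dict[str, Any]]) -> Optional[Dict[str, Any]]:
    """Return the approved package with the highest version, or None."""
    approved = [p for p in packages if p["ModelApprovalStatus"] == "Approved"]
    return max(approved, key=lambda p: p["ModelPackageVersion"], default=None)


# Illustrative event and package summaries (values are made up).
SAMPLE_EVENT = {
    "source": "aws.sagemaker",
    "detail-type": "SageMaker Model Package State Change",
    "detail": {
        "ModelPackageGroupName": "my-model-group",
        "ModelPackageVersion": 3,
        "ModelApprovalStatus": "Approved",
    },
}

PACKAGES = [
    {"ModelPackageVersion": 1, "ModelApprovalStatus": "Approved"},
    {"ModelPackageVersion": 2, "ModelApprovalStatus": "Rejected"},
    {"ModelPackageVersion": 3, "ModelApprovalStatus": "Approved"},
    {"ModelPackageVersion": 4, "ModelApprovalStatus": "PendingManualApproval"},
]

if matches(APPROVAL_EVENT_PATTERN, SAMPLE_EVENT):
    candidate = latest_approved(PACKAGES)
    print("promote version:", candidate["ModelPackageVersion"] if candidate else None)
```

Selecting by approval status first and version second means a newer package that is still pending approval never displaces the last approved one.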

| Type | Description |
|---|---|
| Mlflow Image Module | Creates an Mlflow Tracking Server Docker image and pushes the image to Elastic Container Registry. |
| Mlflow on AWS Fargate Module | Runs an Mlflow container on AWS Fargate in a load-balanced Elastic Container Service. Supports Elastic File System and Relational Database Service for metadata persistence, and S3 for the artifact store. |
| Mlflow AI Gateway Image Module | Creates an Mlflow AI Gateway Docker image and pushes the image to Elastic Container Registry. |

| Type | Description |
|---|---|
| SageMaker JumpStart Foundation Model Endpoint Module | Creates an endpoint for a SageMaker JumpStart Foundation Model. |
| SageMaker Hugging Face Foundation Model Endpoint Module | Creates an endpoint for a SageMaker Hugging Face Foundation Model. |
| Amazon Bedrock Finetuning Module | Creates a pipeline that automatically triggers Amazon Bedrock fine-tuning. |
| AppSync Knowledge Base Ingestion and Question and Answering RAG Module | Creates a GraphQL endpoint for data ingestion and uses the ingested data as a knowledge base for a question-answering model using RAG. |

| Type | Description |
|---|---|
| Strands Agent on Amazon EKS Auto Mode | Creates an Amazon EKS Auto Mode cluster and deploys an example Strands Weather Agent. |

| Type | Description |
|---|---|
| Example DAG for MLOps Module | Deploys a sample DAG in MWAA that demonstrates MLOps, using the MWAA module from IDF. |

| Type | Description |
|---|---|
| Example for MLOps using Step Functions | Deploys a state machine in AWS Step Functions that demonstrates how to implement MLOps. |

| Type | Description |
|---|---|
| Ray Operator Module | Provisions a Ray Operator on EKS. |
| Ray Cluster Module | Provisions a Ray Cluster on EKS. Requires a Ray Operator. |
| Ray Orchestrator Module | Creates a Step Function to orchestrate submission of a sample Ray job that fine-tunes the 6B-parameter GPT-J Large Language Model on the Tiny Shakespeare dataset and performs inference. |
| Ray Image Module | An example that builds a custom Ray image and pushes it to ECR. |
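Because the Ray Cluster Module requires a Ray Operator, the order in which modules are deployed matters. The sketch below shows one way to check a selection of modules for missing prerequisites and derive a valid order with Python's standard `graphlib` (Python 3.9+). The module identifiers are hypothetical, and only the cluster-to-operator dependency comes from the table above; the orchestrator-to-cluster dependency is an assumption made for illustration.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Maps each module to the modules it needs to exist first.
DEPENDS_ON: Dict[str, Set[str]] = {
    "ray-operator": set(),
    "ray-cluster": {"ray-operator"},      # stated in the table above
    "ray-orchestrator": {"ray-cluster"},  # assumption, for illustration only
}


def missing_dependencies(
    selected: Set[str], depends_on: Dict[str, Set[str]]
) -> Dict[str, Set[str]]:
    """Return, per selected module, the prerequisites that were not selected."""
    missing = {}
    for module in sorted(selected):
        gap = depends_on[module] - selected
        if gap:
            missing[module] = gap
    return missing


def deployment_order(
    selected: Set[str], depends_on: Dict[str, Set[str]]
) -> List[str]:
    """Order the selected modules so that prerequisites come first."""
    graph = {module: depends_on[module] & selected for module in selected}
    return list(TopologicalSorter(graph).static_order())


ALL_MODULES = set(DEPENDS_ON)
print(deployment_order(ALL_MODULES, DEPENDS_ON))
print(missing_dependencies({"ray-cluster"}, DEPENDS_ON))
```

Running the check before deployment surfaces a missing operator as a configuration error rather than as a failed cluster rollout.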

| Type | Description |
|---|---|
| Event Bus Module | Creates an Amazon EventBridge Bus for cross-account events. |
| Personas Module | This module is an example that creates various roles required for an AI/ML project. |

The modules in this repository are compatible with Industry Data Framework (IDF) Modules and can be used together within the same deployment. Refer to examples/manifests for examples.

The modules in this repository are compatible with Autonomous Driving Data Framework (ADDF) Modules and can be used together within the same deployment.

Alternative AI tools for aiops-modules

Similar Open Source Tools

fish-identification

Fishial.ai is a project focused on training and validating scripts for fish segmentation and classification models. It includes various scripts for automatic training with different loss functions, dataset manipulation, and model setup using Detectron2 API. The project also provides tools for converting classification models to TorchScript format and creating training datasets. The models available include MaskRCNN for fish segmentation and various versions of ResNet18 for fish classification with different class counts and features. The project aims to facilitate fish identification and analysis through machine learning techniques.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

aip-community-registry

AIP Community Registry is a collection of community-built applications and projects leveraging Palantir's AIP Platform. It showcases real-world implementations from developers using AIP in production. The registry features various solutions demonstrating practical implementations and integration patterns across different use cases.

generative-ai-cdk-constructs

The AWS Generative AI Constructs Library is an open-source extension of the AWS Cloud Development Kit (AWS CDK) that provides multi-service, well-architected patterns for quickly defining solutions in code to create predictable and repeatable infrastructure, called constructs. The goal of AWS Generative AI CDK Constructs is to help developers build generative AI solutions using pattern-based definitions for their architecture. The patterns defined in AWS Generative AI CDK Constructs are high level, multi-service abstractions of AWS CDK constructs that have default configurations based on well-architected best practices. The library is organized into logical modules using object-oriented techniques to create each architectural pattern model.

arthur-engine

The Arthur Engine is a comprehensive tool for monitoring and governing AI/ML workloads. It provides evaluation and benchmarking of machine learning models, guardrails enforcement, and extensibility for fitting into various application architectures. With support for a wide range of evaluation metrics and customizable features, the tool aims to improve model understanding, optimize generative AI outputs, and prevent data-security and compliance risks. Key features include real-time guardrails, model performance monitoring, feature importance visualization, error breakdowns, and support for custom metrics and models integration.

GenAIExamples

This project provides a collective list of Generative AI (GenAI) and Retrieval-Augmented Generation (RAG) examples such as chatbot with question and answering (ChatQnA), code generation (CodeGen), document summary (DocSum), etc.

fenic

fenic is an opinionated DataFrame framework from typedef.ai for building AI and agentic applications. It transforms unstructured and structured data into insights using familiar DataFrame operations enhanced with semantic intelligence. It supports markdown, transcripts, and semantic operators, plus efficient batch inference across various model providers. fenic is purpose-built for LLM inference, providing a query engine designed for AI workloads, semantic operators as first-class citizens, native unstructured data support, production-ready infrastructure, and a familiar DataFrame API.

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

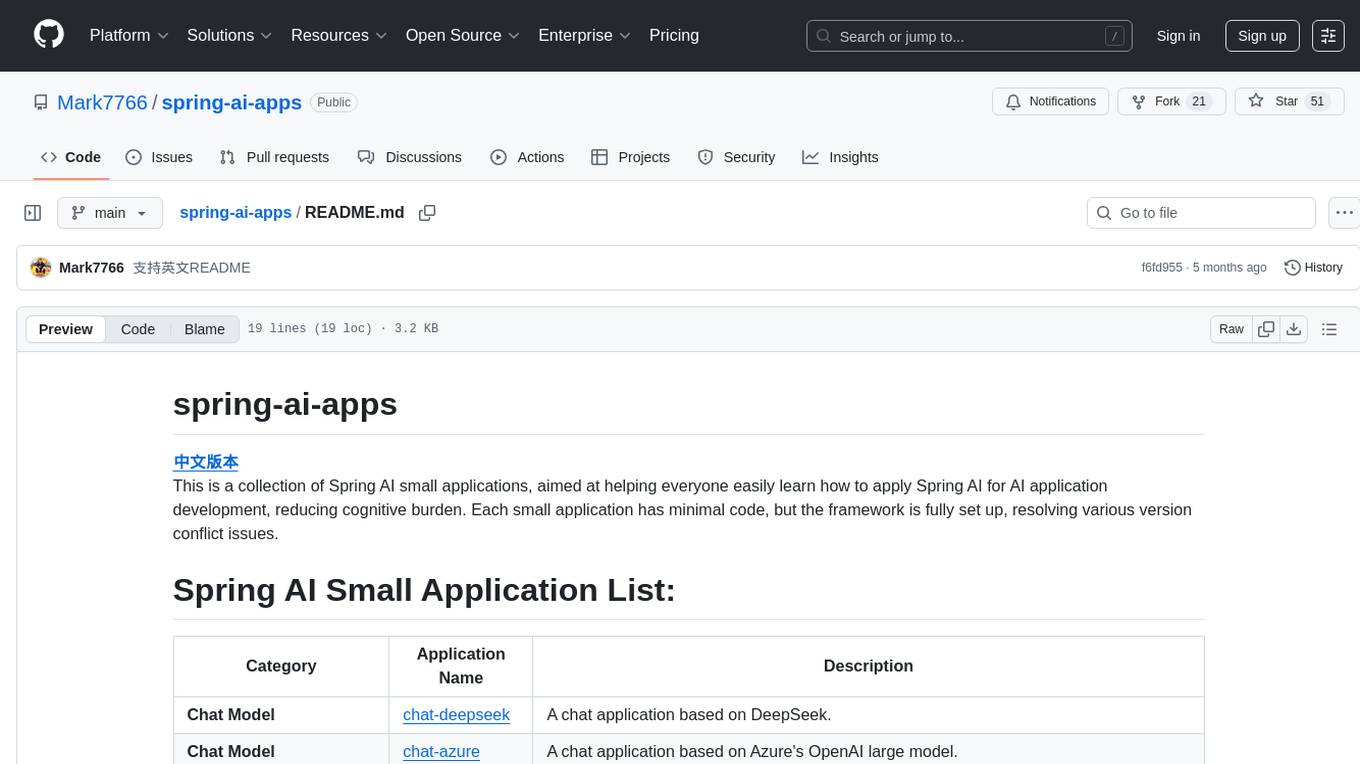

spring-ai-apps

spring-ai-apps is a collection of Spring AI small applications designed to help users easily apply Spring AI for AI application development. Each small application comes with minimal code and a fully set up framework to resolve version conflict issues.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

ByteMLPerf

ByteMLPerf is an AI Accelerator Benchmark that focuses on evaluating AI Accelerators from a practical production perspective, including the ease of use and versatility of software and hardware. ByteMLPerf has the following characteristics:

- Models and runtime environments are more closely aligned with practical business use cases.
- For ASIC hardware evaluation, besides evaluating performance and accuracy, it also measures metrics like compiler usability and coverage.
- Performance and accuracy results obtained from testing on the open Model Zoo serve as reference metrics for evaluating ASIC hardware integration.

evalkit

EvalKit is an open-source TypeScript library for evaluating and improving the performance of large language models (LLMs). It helps developers ensure the reliability, accuracy, and trustworthiness of their AI models. The library provides various metrics such as Bias Detection, Coherence, Faithfulness, Hallucination, Intent Detection, and Semantic Similarity. EvalKit is designed to be user-friendly with detailed documentation, tutorials, and recipes for different use cases and LLM providers. It requires Node.js 18+ and an OpenAI API Key for installation and usage. Contributions from the community are welcome under the Apache 2.0 License.

craftgen

Craftgen.ai is an AI platform designed for both technical and non-technical users. It is built on a graph architecture for scalability and the Actor Model for efficient concurrent operations. A key aspect of Craftgen.ai is its modular AI approach, which lets users assemble and customize AI components like building blocks to fit their specific needs. The platform's event-driven architecture supports reliable data processing, and it uses browser web technologies for universal access. Craftgen.ai supports dynamic tool and workflow generation, with strong offline capabilities for secure environments and plans for desktop application integration. It also offers a marketplace where users can access a variety of pre-built AI solutions. The marketplace not only accelerates the deployment of AI tools but also fosters a community of sharing and innovation, since users can both contribute to and draw on this repository of solutions. Craftgen.ai uses JSON schema for industry-standard alignment, enabling integration with any API that follows the OpenAPI spec. This allows for a broad range of applications, from automating data analysis to streamlining content management. The platform aims to bridge the gap between advanced AI technology and practical usability, serving users from developers seeking to create custom AI solutions to businesses looking to automate routine tasks.

mcp-for-beginners

The Model Context Protocol (MCP) Curriculum for Beginners is an open-source framework designed to standardize interactions between AI models and client applications. It offers a structured learning path with practical coding examples and real-world use cases in popular programming languages like C#, Java, JavaScript, Rust, Python, and TypeScript. Whether you're an AI developer, system architect, or software engineer, this guide provides comprehensive resources for mastering MCP fundamentals and implementation strategies.

For similar tasks

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

* **Highly performant**: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O
* **Ease of use**: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing
* **Dynamic batching**: aggregate requests from different users for batched inference and distribute results back
* **Pipelined stages**: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads
* **Cloud friendly**: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems
* **Do one thing well**: focus on the online serving part, users can pay attention to the model optimization and business logic

seismometer

Seismometer is a suite of tools designed to evaluate AI model performance in healthcare settings. It helps healthcare organizations assess the accuracy of AI models and ensure equitable care for diverse patient populations. The tool allows users to validate model performance using standardized evaluation criteria based on local data and workflows. It includes templates for analyzing statistical performance, fairness across different cohorts, and the impact of interventions on outcomes. Seismometer is continuously evolving to incorporate new validation and analysis techniques.

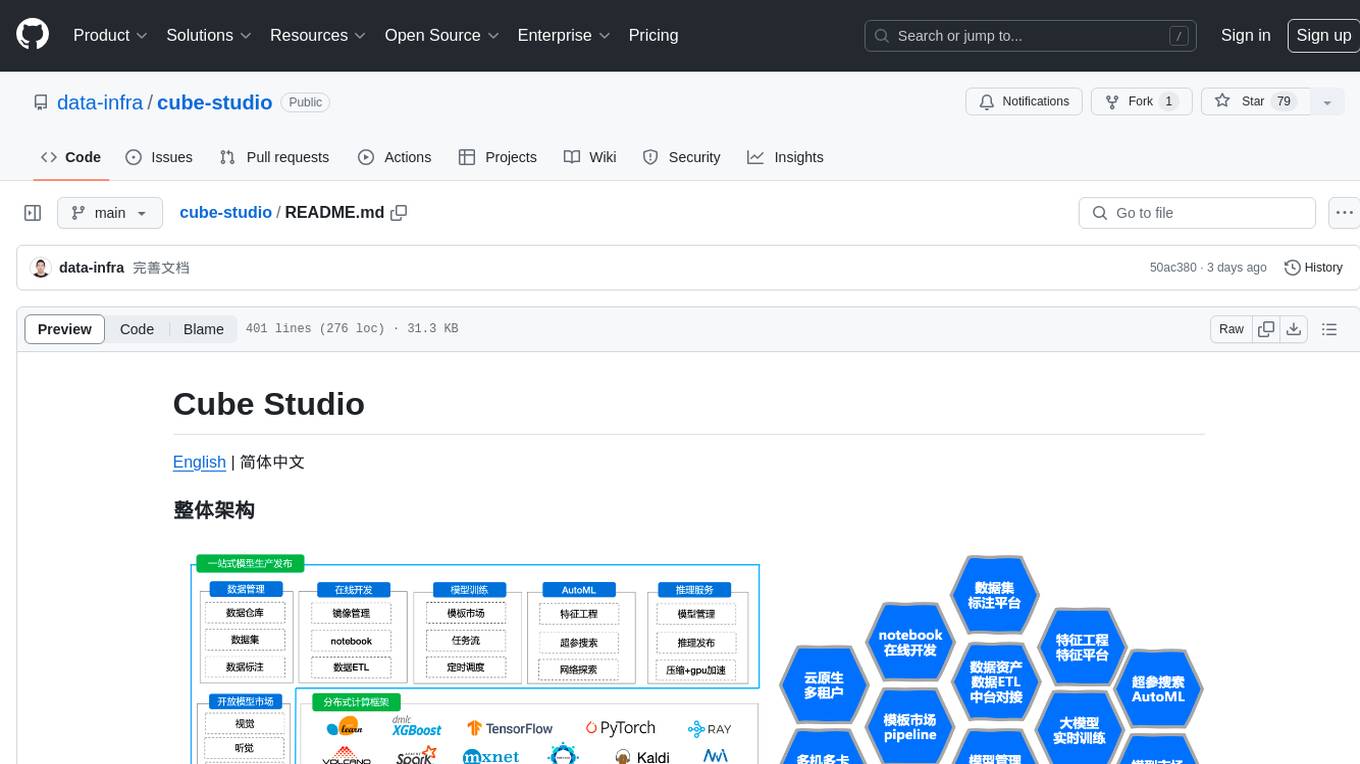

cube-studio

Cube Studio is an open-source all-in-one cloud-native machine learning platform that provides various functionalities such as project group management, network configuration, user management, role management, billing functions, SSO single sign-on, support for multiple computing power types, support for multiple resource groups and clusters, edge cluster support, serverless cluster mode support, database storage support, machine resource management, storage disk management, internationalization capabilities, data map management, data calculation, ETL orchestration, data set management, data annotation, image/audio/text dataset support, feature processing, traditional machine learning algorithms, distributed deep learning frameworks, distributed acceleration frameworks, model evaluation, model format conversion, model registration, model deployment, distributed media processing, custom operators, automatic learning, custom training images, automatic parameter tuning, TensorBoard jobs, internal services, model management, inference services, monitoring, model application management, model marketplace, model development, model fine-tuning, web model deployment, automated annotation, dataset SDK, notebook SDK, pipeline training SDK, inference service SDK, large model distributed training, large model inference, large model fine-tuning, intelligent conversation, private knowledge base, model deployment for WeChat public accounts, enterprise WeChat group chatbot integration, DingTalk group chatbot integration, and more. Cube Studio offers template-based functionality for data import/export, data processing, feature processing, machine learning frameworks, machine learning algorithms, deep learning frameworks, model processing, model serving, monitoring, and more.

clearml-serving

ClearML Serving is a command line utility for model deployment and orchestration, enabling model deployment including serving and preprocessing code to a Kubernetes cluster or custom container based solution. It supports machine learning models like Scikit Learn, XGBoost, LightGBM, and deep learning models like TensorFlow, PyTorch, ONNX. It provides a customizable RestAPI for serving, online model deployment, scalable solutions, multi-model per container, automatic deployment, canary A/B deployment, model monitoring, usage metric reporting, metric dashboard, and model performance metrics. ClearML Serving is modular, scalable, flexible, customizable, and open source.

trainer

Kubeflow Trainer is a Kubernetes-native project for fine-tuning large language models (LLMs) and enabling scalable, distributed training of machine learning (ML) models across various frameworks. It allows integration with ML libraries like HuggingFace, DeepSpeed, or Megatron-LM to orchestrate ML training on Kubernetes. Develop LLMs effortlessly with the Kubeflow Python SDK and build Kubernetes-native Training Runtimes with Kubernetes Custom Resources APIs.

For similar jobs

runbooks

Runbooks is a repository that is no longer active. The project has been deprecated in favor of KubeAI, a platform designed to simplify the operationalization of AI on Kubernetes. For more information, please refer to the new repository at https://github.com/substratusai/kubeai.

Awesome-LLMOps

Awesome-LLMOps is a curated list of the best LLMOps tools, providing a comprehensive collection of frameworks and tools for building, deploying, and managing large language models (LLMs) and AI agents. The repository includes a wide range of tools for tasks such as building multimodal AI agents, fine-tuning models, orchestrating applications, evaluating models, and serving models for inference. It covers various aspects of the machine learning operations (MLOps) lifecycle, from training to deployment and observability. The tools listed in this repository cater to the needs of developers, data scientists, and machine learning engineers working with large language models and AI applications.

skyflo

Skyflo.ai is an AI agent designed for Cloud Native operations, providing seamless infrastructure management through natural language interactions. It serves as a safety-first co-pilot with a human-in-the-loop design. The tool offers flexible deployment options for both production and local Kubernetes environments, supporting various LLM providers and self-hosted models. Users can explore the architecture of Skyflo.ai and contribute to its development following the provided guidelines and Code of Conduct. The community engagement includes Discord, Twitter, YouTube, and GitHub Discussions.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and level of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, visual CMDB for resource management, efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

kubectl-mcp-server

Control your entire Kubernetes infrastructure through natural language conversations with AI. Talk to your clusters like you talk to a DevOps expert. Debug crashed pods, optimize costs, deploy applications, audit security, manage Helm charts, and visualize dashboards—all through natural language. The tool provides 253 powerful tools, 8 workflow prompts, 8 data resources, and works with all major AI assistants. It offers AI-powered diagnostics, built-in cost optimization, enterprise-ready features, zero learning curve, universal compatibility, visual insights, and production-grade deployment options. From debugging crashed pods to optimizing cluster costs, kubectl-mcp-server is your AI-powered DevOps companion.

flux-aio

Flux All-In-One is a lightweight distribution optimized for running the GitOps Toolkit controllers as a single deployable unit on Kubernetes clusters. It is designed for bare clusters, edge clusters, clusters with restricted communication, clusters with egress via proxies, and serverless clusters. The distribution follows semver versioning and provides documentation for specifications, installation, upgrade, OCI sync configuration, Git sync configuration, and multi-tenancy configuration. Users can deploy Flux using Timoni CLI and a Timoni Bundle file, fine-tune installation options, sync from public Git repositories, bootstrap repositories, and uninstall Flux without affecting reconciled workloads.

paddler

Paddler is an open-source load balancer and reverse proxy designed specifically for optimizing servers running llama.cpp. It overcomes typical load balancing challenges by maintaining a stateful load balancer that is aware of each server's available slots, ensuring efficient request distribution. Paddler also supports dynamic addition or removal of servers, enabling integration with autoscaling tools.