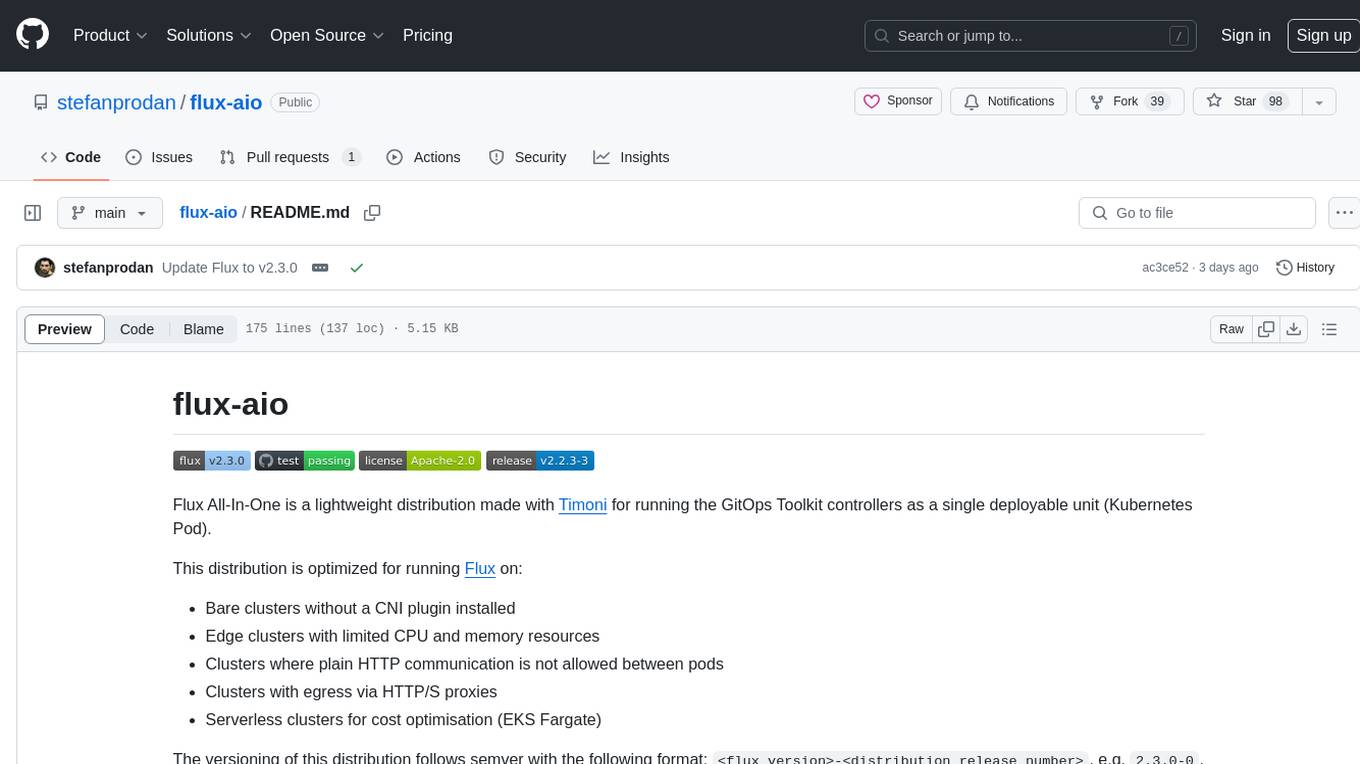

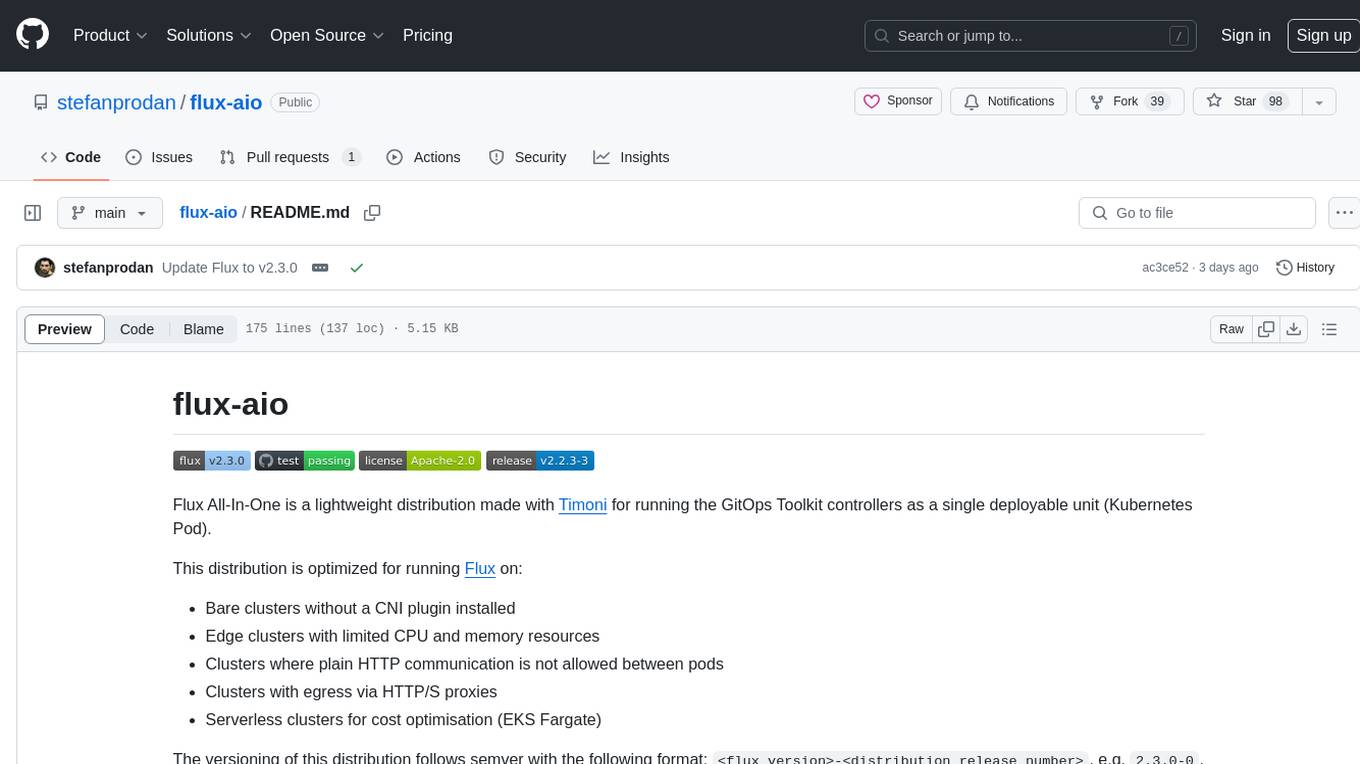

flux-aio

Flux All-In-One distribution made with Timoni

Stars: 111

Flux All-In-One is a lightweight distribution optimized for running the GitOps Toolkit controllers as a single deployable unit on Kubernetes clusters. It is designed for bare clusters, edge clusters, clusters with restricted communication, clusters with egress via proxies, and serverless clusters. The distribution follows semver versioning and provides documentation for specifications, installation, upgrade, OCI sync configuration, Git sync configuration, and multi-tenancy configuration. Users can deploy Flux using Timoni CLI and a Timoni Bundle file, fine-tune installation options, sync from public Git repositories, bootstrap repositories, and uninstall Flux without affecting reconciled workloads.

README:

Flux All-In-One is a lightweight distribution made with Timoni for running the GitOps Toolkit controllers as a single deployable unit (Kubernetes Pod).

This distribution is optimized for running Flux on:

- Bare clusters without a CNI plugin installed

- Edge clusters with limited CPU and memory resources

- Clusters where plain HTTP communication is not allowed between pods

- Clusters with egress via HTTP/S proxies

- Serverless clusters for cost optimisation (EKS Fargate)

The versioning of this distribution follows semver with the following format:

<flux version>-<distribution release number>, e.g. 2.5.0-0.

- Distribution specifications

- Flux installation and upgrade

- Flux OCI sync configuration

- Flux Git sync configuration

- Flux multi-tenancy configuration

To deploy Flux on Kubernetes clusters, you'll be using the Timoni CLI and a Timoni Bundle file where you'll define the configuration of the Flux controllers and their settings.

Install the Timoni CLI with:

brew install stefanprodan/tap/timoniFor other installation methods, see timoni.sh.

To deploy Flux AIO on a cluster without a CNI, create a Timoni Bundle file

named flux-aio.cue with the following content:

bundle: {

apiVersion: "v1alpha1"

name: "flux-aio"

instances: {

"flux": {

module: {

url: "oci://ghcr.io/stefanprodan/modules/flux-aio"

version: "latest"

}

namespace: "flux-system"

values: {

hostNetwork: true

securityProfile: "privileged"

controllers: notification: enabled: false

}

}

}

}

Apply the bundle with:

timoni bundle apply -f flux-aio.cueNote that on clusters without kube-proxy, you'll have to add the following env vars to values:

values: env: {

"KUBERNETES_SERVICE_HOST": "<host>"

"KUBERNETES_SERVICE_PORT": "<port>"

}Note that on Talos clusters, you'll have to set the pod security profile to

privileged:

values: {

hostNetwork: true

podSecurityProfile: "privileged"

}You can fine tune the Flux installation using various options, for more information see the installation guide.

Changes to the flux-aio.cue bundle, can be applied in dry-run mode

to see how Timoni will reconfigure Flux on the cluster:

timoni bundle apply -f flux-aio.cue --dry-run --diffTo deploy the latest version of Cilium CNI and the metrics-server cluster addon,

add the cluster-addons instance to the flux-aio.cue bundle:

bundle: {

apiVersion: "v1alpha1"

name: "flux-aio"

instances: {

// flux instance omitted for brevity

"cluster-addons": {

module: url: "oci://ghcr.io/stefanprodan/modules/flux-git-sync"

namespace: "flux-system"

values: git: {

url: "https://github.com/stefanprodan/flux-aio"

ref: "refs/heads/main"

path: "./test/cluster-addons"

}

}

}

}The above configuration, will instruct Flux to reconcile the HelmRelease manifests

from the test/cluster-addons directory.

Apply the bundle with:

timoni bundle apply -f flux-aio.cueTimoni will configure the Flux Git sync and will wait for Flux to pull the repo and deploy the cluster addons.

For more details on how to sync from private Git repositories and self-hosted Git servers, see the Git sync documentation.

If you want to use Flux AIO with a bootstrap repository layout, you'll have to add an ignore

rule for the flux-system directory and name the sync instance flux-system:

bundle: {

apiVersion: "v1alpha1"

name: "flux-aio"

instances: {

// flux instance omitted for brevity

"flux-system": {

module: url: "oci://ghcr.io/stefanprodan/modules/flux-git-sync"

namespace: "flux-system"

values: {

git: {

token: string @timoni(runtime:string:GITHUB_TOKEN)

url: "https://github.com/fluxcd/flux2-kustomize-helm-example.git"

ref: "refs/heads/main"

path: "clusters/production"

ignore: "clusters/**/flux-system/"

}

sync: wait: false

}

}

}

}The above configuration, generates the same flux-system objects (GitRepository, Secret, Kustomization)

as the flux bootstrap command.

To remove Flux from your cluster, without affecting any reconciled workloads:

flux -n flux-system uninstallFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for flux-aio

Similar Open Source Tools

flux-aio

Flux All-In-One is a lightweight distribution optimized for running the GitOps Toolkit controllers as a single deployable unit on Kubernetes clusters. It is designed for bare clusters, edge clusters, clusters with restricted communication, clusters with egress via proxies, and serverless clusters. The distribution follows semver versioning and provides documentation for specifications, installation, upgrade, OCI sync configuration, Git sync configuration, and multi-tenancy configuration. Users can deploy Flux using Timoni CLI and a Timoni Bundle file, fine-tune installation options, sync from public Git repositories, bootstrap repositories, and uninstall Flux without affecting reconciled workloads.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

aimeos-symfony

Aimeos Symfony bundle is a professional, full-featured, and ultra-fast e-commerce package for Symfony. It can be easily installed and customized within an existing Symfony application. The bundle provides comprehensive features for setting up an e-commerce platform, including authentication, routing configuration, database setup, and administration interface setup. It offers flexibility for adapting, extending, overwriting, and customizing various aspects to meet specific business needs. The bundle is designed to streamline the development process and provide a robust foundation for building e-commerce applications with Symfony.

supabase-mcp

Supabase MCP Server standardizes how Large Language Models (LLMs) interact with Supabase, enabling AI assistants to manage tables, fetch config, and query data. It provides tools for project management, database operations, project configuration, branching (experimental), and development tools. The server is pre-1.0, so expect some breaking changes between versions.

notebook-intelligence

Notebook Intelligence (NBI) is an AI coding assistant and extensible AI framework for JupyterLab. It greatly boosts the productivity of JupyterLab users with AI assistance by providing features such as code generation with inline chat, auto-complete, and chat interface. NBI supports various LLM Providers and AI Models, including local models from Ollama. Users can configure model provider and model options, remember GitHub Copilot login, and save configuration files. NBI seamlessly integrates with Model Context Protocol (MCP) servers, supporting both Standard Input/Output (stdio) and Server-Sent Events (SSE) transports. Users can easily add MCP servers to NBI, auto-approve tools, set environment variables, and group servers based on functionality. Additionally, NBI allows access to built-in tools from an MCP participant, enhancing the user experience and productivity.

raglite

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite. It offers configurable options for choosing LLM providers, database types, and rerankers. The toolkit is fast and permissive, utilizing lightweight dependencies and hardware acceleration. RAGLite provides features like PDF to Markdown conversion, multi-vector chunk embedding, optimal semantic chunking, hybrid search capabilities, adaptive retrieval, and improved output quality. It is extensible with a built-in Model Context Protocol server, customizable ChatGPT-like frontend, document conversion to Markdown, and evaluation tools. Users can configure RAGLite for various tasks like configuring, inserting documents, running RAG pipelines, computing query adapters, evaluating performance, running MCP servers, and serving frontends.

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

langchain-extract

LangChain Extract is a simple web server that allows you to extract information from text and files using LLMs. It is built using FastAPI, LangChain, and Postgresql. The backend closely follows the extraction use-case documentation and provides a reference implementation of an app that helps to do extraction over data using LLMs. This repository is meant to be a starting point for building your own extraction application which may have slightly different requirements or use cases.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

mcp-redis

The Redis MCP Server is a natural language interface designed for agentic applications to efficiently manage and search data in Redis. It integrates seamlessly with MCP (Model Content Protocol) clients, enabling AI-driven workflows to interact with structured and unstructured data in Redis. The server supports natural language queries, seamless MCP integration, full Redis support for various data types, search and filtering capabilities, scalability, and lightweight design. It provides tools for managing data stored in Redis, such as string, hash, list, set, sorted set, pub/sub, streams, JSON, query engine, and server management. Installation can be done from PyPI or GitHub, with options for testing, development, and Docker deployment. Configuration can be via command line arguments or environment variables. Integrations include OpenAI Agents SDK, Augment, Claude Desktop, and VS Code with GitHub Copilot. Use cases include AI assistants, chatbots, data search & analytics, and event processing. Contributions are welcome under the MIT License.

bot-on-anything

The 'bot-on-anything' repository allows developers to integrate various AI models into messaging applications, enabling the creation of intelligent chatbots. By configuring the connections between models and applications, developers can easily switch between multiple channels within a project. The architecture is highly scalable, allowing the reuse of algorithmic capabilities for each new application and model integration. Supported models include ChatGPT, GPT-3.0, New Bing, and Google Bard, while supported applications range from terminals and web platforms to messaging apps like WeChat, Telegram, QQ, and more. The repository provides detailed instructions for setting up the environment, configuring the models and channels, and running the chatbot for various tasks across different messaging platforms.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

exo

Run your own AI cluster at home with everyday devices. Exo is experimental software that unifies existing devices into a powerful GPU, supporting wide model compatibility, dynamic model partitioning, automatic device discovery, ChatGPT-compatible API, and device equality. It does not use a master-worker architecture, allowing devices to connect peer-to-peer. Exo supports different partitioning strategies like ring memory weighted partitioning. Installation is recommended from source. Documentation includes example usage on multiple MacOS devices and information on inference engines and networking modules. Known issues include the iOS implementation lagging behind Python.

well-architected-iac-analyzer

Well-Architected Infrastructure as Code (IaC) Analyzer is a project demonstrating how generative AI can evaluate infrastructure code for alignment with best practices. It features a modern web application allowing users to upload IaC documents, complete IaC projects, or architecture diagrams for assessment. The tool provides insights into infrastructure code alignment with AWS best practices, offers suggestions for improving cloud architecture designs, and can generate IaC templates from architecture diagrams. Users can analyze CloudFormation, Terraform, or AWS CDK templates, architecture diagrams in PNG or JPEG format, and complete IaC projects with supporting documents. Real-time analysis against Well-Architected best practices, integration with AWS Well-Architected Tool, and export of analysis results and recommendations are included.

agent-mimir

Agent Mimir is a command line and Discord chat client 'agent' manager for LLM's like Chat-GPT that provides the models with access to tooling and a framework with which accomplish multi-step tasks. It is easy to configure your own agent with a custom personality or profession as well as enabling access to all tools that are compatible with LangchainJS. Agent Mimir is based on LangchainJS, every tool or LLM that works on Langchain should also work with Mimir. The tasking system is based on Auto-GPT and BabyAGI where the agent needs to come up with a plan, iterate over its steps and review as it completes the task.

For similar tasks

flux-aio

Flux All-In-One is a lightweight distribution optimized for running the GitOps Toolkit controllers as a single deployable unit on Kubernetes clusters. It is designed for bare clusters, edge clusters, clusters with restricted communication, clusters with egress via proxies, and serverless clusters. The distribution follows semver versioning and provides documentation for specifications, installation, upgrade, OCI sync configuration, Git sync configuration, and multi-tenancy configuration. Users can deploy Flux using Timoni CLI and a Timoni Bundle file, fine-tune installation options, sync from public Git repositories, bootstrap repositories, and uninstall Flux without affecting reconciled workloads.

For similar jobs

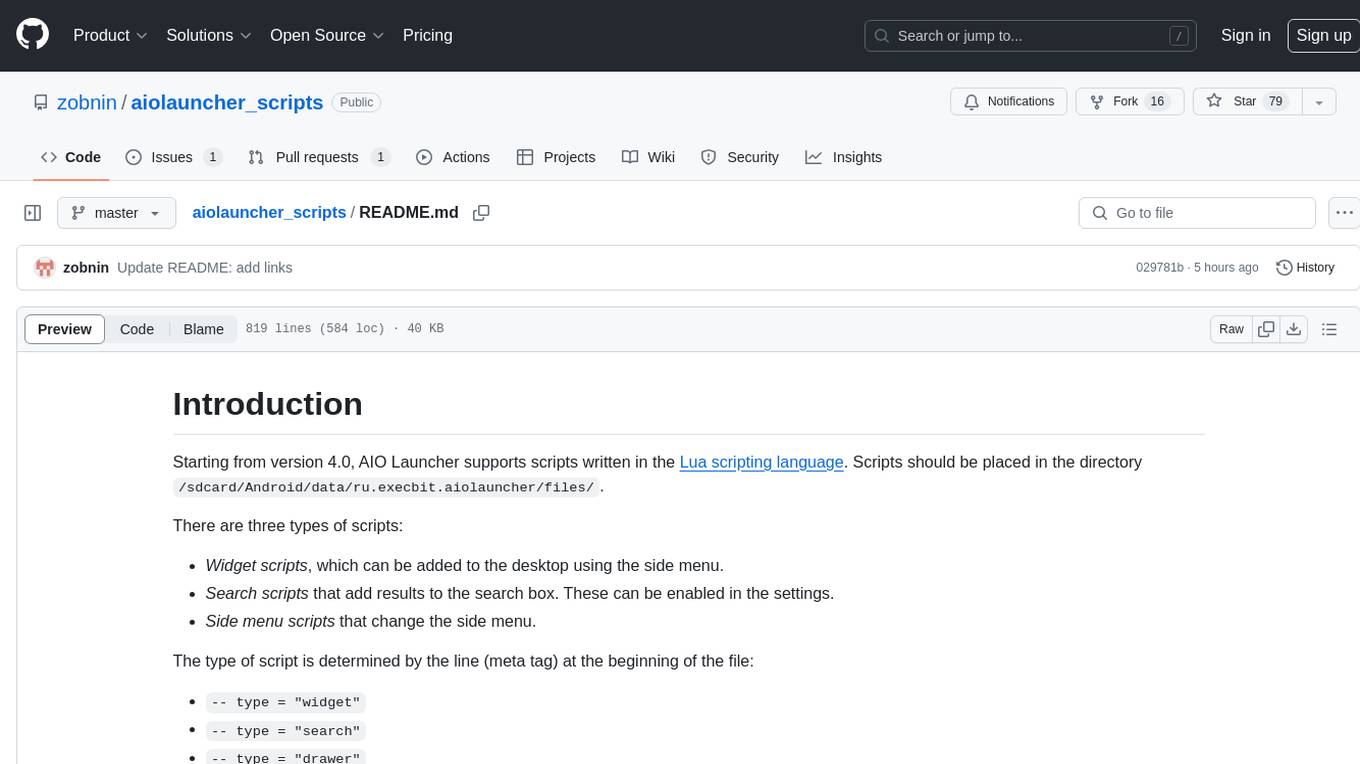

aiolauncher_scripts

AIO Launcher Scripts is a collection of Lua scripts that can be used with AIO Launcher to enhance its functionality. These scripts can be used to create widget scripts, search scripts, and side menu scripts. They provide various functions such as displaying text, buttons, progress bars, charts, and interacting with app widgets. The scripts can be used to customize the appearance and behavior of the launcher, add new features, and interact with external services.

flux-aio

Flux All-In-One is a lightweight distribution optimized for running the GitOps Toolkit controllers as a single deployable unit on Kubernetes clusters. It is designed for bare clusters, edge clusters, clusters with restricted communication, clusters with egress via proxies, and serverless clusters. The distribution follows semver versioning and provides documentation for specifications, installation, upgrade, OCI sync configuration, Git sync configuration, and multi-tenancy configuration. Users can deploy Flux using Timoni CLI and a Timoni Bundle file, fine-tune installation options, sync from public Git repositories, bootstrap repositories, and uninstall Flux without affecting reconciled workloads.

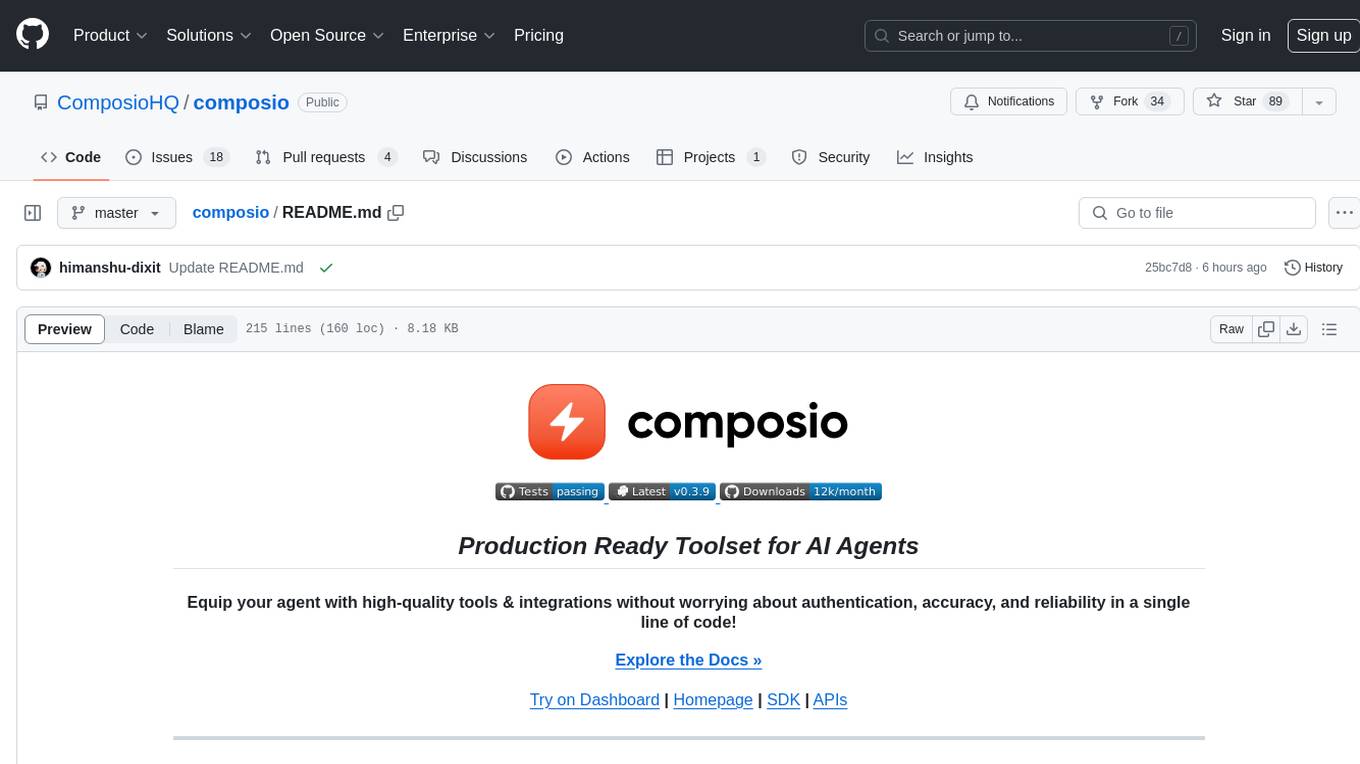

composio

Composio is a production-ready toolset for AI agents that enables users to integrate AI agents with various agentic tools effortlessly. It provides support for over 100 tools across different categories, including popular softwares like GitHub, Notion, Linear, Gmail, Slack, and more. Composio ensures managed authorization with support for six different authentication protocols, offering better agentic accuracy and ease of use. Users can easily extend Composio with additional tools, frameworks, and authorization protocols. The toolset is designed to be embeddable and pluggable, allowing for seamless integration and consistent user experience.

IKBT

IKBT is a Python-based system for generating closed-form solutions to the manipulator inverse kinematics problem using behavior trees for action selection. Solutions are fully symbolic and are output as LaTex, Python, and C++. The tool automates closed-form kinematics solving by organizing solution algorithms in a behavior tree, incorporating frequently used knowledge, generating a dependency graph of joint variables, and providing features for automatic documentation and code generation. It is implemented in Python with minimal dependencies outside of the standard Python distribution.

ai2apps

AI2Apps is a visual IDE for building LLM-based AI agent applications, enabling developers to efficiently create AI agents through drag-and-drop, with features like design-to-development for rapid prototyping, direct packaging of agents into apps, powerful debugging capabilities, enhanced user interaction, efficient team collaboration, flexible deployment, multilingual support, simplified product maintenance, and extensibility through plugins.

flowgen

FlowGen is a tool built for AutoGen, a great agent framework from Microsoft and a lot of contributors. It provides intuitive visual tools that streamline the construction and oversight of complex agent-based workflows, simplifying the process for creators and developers. Users can create Autoflows, chat with agents, and share flow templates. The tool is fully dockerized and supports deployment on Railway.app. Contributions to the project are welcome, and the platform uses semantic-release for versioning and releases.

aiohue

Aiohue is an asynchronous library designed to control Philips Hue lights. It requires Python 3.10+ and utilizes asyncio and aiohttp. The library supports both V1 and V2 APIs of the Hue Bridge, with V2 API offering event-based updates to eliminate the need for polling. The contribution guidelines emphasize matching object hierarchy and property/method names with the Philips Hue API.

rosa

ROSA is an AI Agent designed to interact with ROS-based robotics systems using natural language queries. It can generate system reports, read and parse ROS log files, adapt to new robots, and run various ROS commands using natural language. The tool is versatile for robotics research and development, providing an easy way to interact with robots and the ROS environment.