gemini-srt-translator

Python tool for translating subtitles using Google Gemini AI

Stars: 67

Gemini SRT Translator is a tool that utilizes Google Generative AI to provide accurate and efficient translations for subtitle files. Users can customize translation settings, such as model name and batch size, and list available models from the Gemini API. The tool requires a free API key from Google AI Studio for setup and offers features like translating subtitles to a specified target language and resuming partial translations. Users can further customize translation settings with optional parameters like gemini_api_key2, output_file, start_line, model_name, batch_size, and more.

README:

Gemini SRT Translator is a tool designed to translate subtitle files using Google Generative AI. It leverages the power of the Gemini API to provide accurate and efficient translations for your subtitle files.

- Translate subtitle files to a specified target language.

- Customize translation settings such as model name and batch size.

- List available models from the Gemini API.

To install Gemini SRT Translator, use pip:

pip install --upgrade gemini-srt-translatorTo use Gemini SRT Translator, you need to generate a free API key from Google AI Studio. Follow these steps:

- Go to Google AI Studio API Key.

- Sign in with your Google account.

- Click on "Generate API Key".

- Copy the generated API key and keep it secure.

You will use this API key for setting up the script before starting the translation process.

You can translate subtitles using the translate command:

import gemini_srt_translator as gst

gst.gemini_api_key = "your_gemini_api_key_here"

gst.target_language = "French"

gst.input_file = "subtitle.srt"

gst.translate()This will translate the subtitles in the subtitle.srt file to French.

If you want to resume a partial translation (due to interruption or failure), you can simply re-run the script with the same parameters. GST will automatically detect the existence of a partially translated file and prompt if you want to resume.

You can also specify the start_line parameter directly in the script and skip the prompt:

import gemini_srt_translator as gst

gst.gemini_api_key = "your_gemini_api_key_here"

gst.target_language = "French"

gst.input_file = "subtitle.srt"

gst.start_line = 20

gst.translate()You can further customize the translation settings by providing optional parameters:

-

gemini_api_key2: Second Gemini API key for additional quota. (for those using the free version of Pro models) -

output_file: Path and name of the translated subtitle file. -

start_line: Starting line number for translation. -

description: Description of the translation job. -

model_name: Model name to use for translation. (default: "gemini-2.0-flash") -

batch_size: Batch size for translation. (default: 30) -

free_quota: Use free quota for translation (default: True). -

skip_upgrade: Skip version upgrade check (default: False). -

use_colors: Use colors in the console output (default: True).

Example:

import gemini_srt_translator as gst

gst.gemini_api_key = "your_gemini_api_key_here"

gst.gemini_api_key2 = "your_gemini_api_key2_here"

gst.target_language = "French"

gst.input_file = "subtitle.srt"

gst.output_file = "subtitle_translated.srt"

gst.start_line = 20

gst.description = "Translation of a medical television series, use medical terms"

gst.model_name = "gemini-2.0-flash"

gst.batch_size = 30

gst.free_quota = True

gst.skip_upgrade = True

gst.translate()You can list the available models using the listmodels command:

import gemini_srt_translator as gst

gst.gemini_api_key = "your_gemini_api_key_here"

gst.listmodels()This will print a list of available models to the console.

This project is licensed under the MIT License. See the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for gemini-srt-translator

Similar Open Source Tools

gemini-srt-translator

Gemini SRT Translator is a tool that utilizes Google Generative AI to provide accurate and efficient translations for subtitle files. Users can customize translation settings, such as model name and batch size, and list available models from the Gemini API. The tool requires a free API key from Google AI Studio for setup and offers features like translating subtitles to a specified target language and resuming partial translations. Users can further customize translation settings with optional parameters like gemini_api_key2, output_file, start_line, model_name, batch_size, and more.

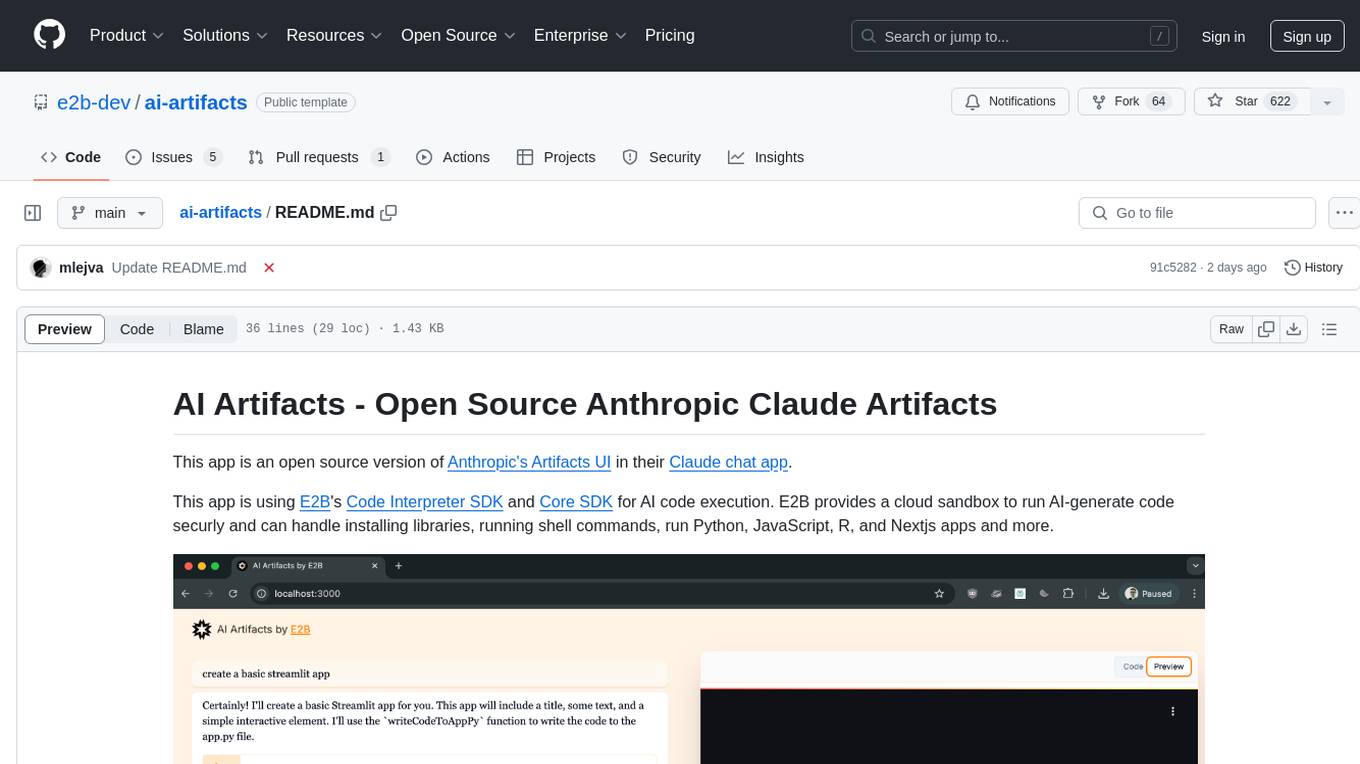

ai-artifacts

AI Artifacts is an open source tool that replicates Anthropic's Artifacts UI in the Claude chat app. It utilizes E2B's Code Interpreter SDK and Core SDK for secure AI code execution in a cloud sandbox environment. Users can run AI-generated code in various languages such as Python, JavaScript, R, and Nextjs apps. The tool also supports running AI-generated Python in Jupyter notebook, Next.js apps, and Streamlit apps. Additionally, it offers integration with Vercel AI SDK for tool calling and streaming responses from the model.

ppt2desc

ppt2desc is a command-line tool that converts PowerPoint presentations into detailed textual descriptions using vision language models. It interprets and describes visual elements, capturing the full semantic meaning of each slide in a machine-readable format. The tool supports various model providers and offers features like converting PPT/PPTX files to semantic descriptions, processing individual files or directories, visual elements interpretation, rate limiting for API calls, customizable prompts, and JSON output format for easy integration.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

Lumos

Lumos is a Chrome extension powered by a local LLM co-pilot for browsing the web. It allows users to summarize long threads, news articles, and technical documentation. Users can ask questions about reviews and product pages. The tool requires a local Ollama server for LLM inference and embedding database. Lumos supports multimodal models and file attachments for processing text and image content. It also provides options to customize models, hosts, and content parsers. The extension can be easily accessed through keyboard shortcuts and offers tools for automatic invocation based on prompts.

aiohttp-cors

The aiohttp_cors library provides Cross Origin Resource Sharing (CORS) support for aiohttp, an asyncio-powered asynchronous HTTP server. CORS allows overriding the Same-origin policy for specific resources, enabling web pages to access resources from different origins. The library helps configure CORS settings for resources and routes in aiohttp applications, allowing control over origins, credentials passing, headers, and preflight requests.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

parsera

Parsera is a lightweight Python library designed for scraping websites using LLMs. It offers simplicity and efficiency by minimizing token usage, enhancing speed, and reducing costs. Users can easily set up and run the tool to extract specific elements from web pages, generating JSON output with relevant data. Additionally, Parsera supports integration with various chat models, such as Azure, expanding its functionality and customization options for web scraping tasks.

golf

Golf is a simple command-line tool for calculating the distance between two geographic coordinates. It uses the Haversine formula to accurately determine the distance between two points on the Earth's surface. This tool is useful for developers working on location-based applications or projects that require distance calculations. With Golf, users can easily input latitude and longitude coordinates and get the precise distance in kilometers or miles. The tool is lightweight, easy to use, and can be integrated into various programming workflows.

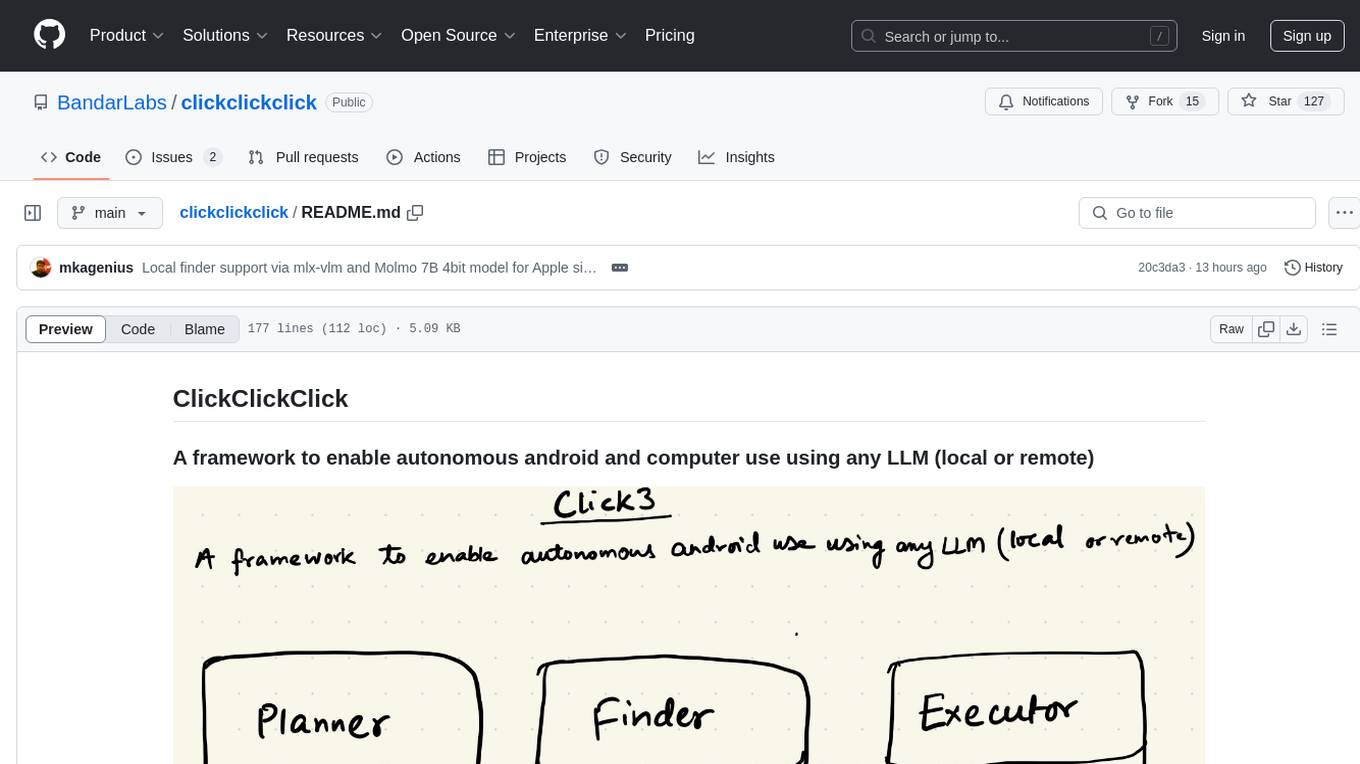

clickclickclick

ClickClickClick is a framework designed to enable autonomous Android and computer use using various LLM models, both locally and remotely. It supports tasks such as drafting emails, opening browsers, and starting games, with current support for local models via Ollama, Gemini, and GPT 4o. The tool is highly experimental and evolving, with the best results achieved using specific model combinations. Users need prerequisites like `adb` installation and USB debugging enabled on Android phones. The tool can be installed via cloning the repository, setting up a virtual environment, and installing dependencies. It can be used as a CLI tool or script, allowing users to configure planner and finder models for different tasks. Additionally, it can be used as an API to execute tasks based on provided prompts, platform, and models.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output based on a Context-Free Grammar (CFG). It supports various programming languages like Python, Go, SQL, Math, JSON, and more. Users can define custom grammars using EBNF syntax. SynCode offers fast generation, seamless integration with HuggingFace Language Models, and the ability to sample with different decoding strategies.

openedai-speech

OpenedAI Speech is a free, private text-to-speech server compatible with the OpenAI audio/speech API. It offers custom voice cloning and supports various models like tts-1 and tts-1-hd. Users can map their own piper voices and create custom cloned voices. The server provides multilingual support with XTTS voices and allows fixing incorrect sounds with regex. Recent changes include bug fixes, improved error handling, and updates for multilingual support. Installation can be done via Docker or manual setup, with usage instructions provided. Custom voices can be created using Piper or Coqui XTTS v2, with guidelines for preparing audio files. The tool is suitable for tasks like generating speech from text, creating custom voices, and multilingual text-to-speech applications.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output with respect to defined Context-Free Grammar (CFG) rules. It supports general-purpose programming languages like Python, Go, SQL, JSON, and more, allowing users to define custom grammars using EBNF syntax. The tool compares favorably to other constrained decoders and offers features like fast grammar-guided generation, compatibility with HuggingFace Language Models, and the ability to work with various decoding strategies.

hordelib

horde-engine is a wrapper around ComfyUI designed to run inference pipelines visually designed in the ComfyUI GUI. It enables users to design inference pipelines in ComfyUI and then call them programmatically, maintaining compatibility with the existing horde implementation. The library provides features for processing Horde payloads, initializing the library, downloading and validating models, and generating images based on input data. It also includes custom nodes for preprocessing and tasks such as face restoration and QR code generation. The project depends on various open source projects and bundles some dependencies within the library itself. Users can design ComfyUI pipelines, convert them to the backend format, and run them using the run_image_pipeline() method in hordelib.comfy.Comfy(). The project is actively developed and tested using git, tox, and a specific model directory structure.

For similar tasks

aimp-discord-presence

AIMP - Discord Presence is a plugin for AIMP that changes the status of Discord based on the music you are listening to. It allows users to share their detected activity with others on Discord. The plugin settings are stored in the AIMP configuration file, and users can customize various options such as application ID, timestamp, album art display, and image settings for different playback states.

ChatDev

ChatDev is a virtual software company powered by intelligent agents like CEO, CPO, CTO, programmer, reviewer, tester, and art designer. These agents collaborate to revolutionize the digital world through programming. The platform offers an easy-to-use, highly customizable, and extendable framework based on large language models, ideal for studying collective intelligence. ChatDev introduces innovative methods like Iterative Experience Refinement and Experiential Co-Learning to enhance software development efficiency. It supports features like incremental development, Docker integration, Git mode, and Human-Agent-Interaction mode. Users can customize ChatChain, Phase, and Role settings, and share their software creations easily. The project is open-source under the Apache 2.0 License and utilizes data licensed under CC BY-NC 4.0.

novelai-bot

This repository contains a drawing plugin based on NovelAI. It allows users to draw images, change models, samplers, and image sizes, use advanced request syntax, customize prohibited word lists, automatically translate Chinese keywords, automatically retract messages after a certain time, and connect to private servers. Thanks to Koishi's plugin mechanism, users can achieve more functionalities by combining it with other plugins, such as multi-platform support, rate limiting, context management, and multi-language support.

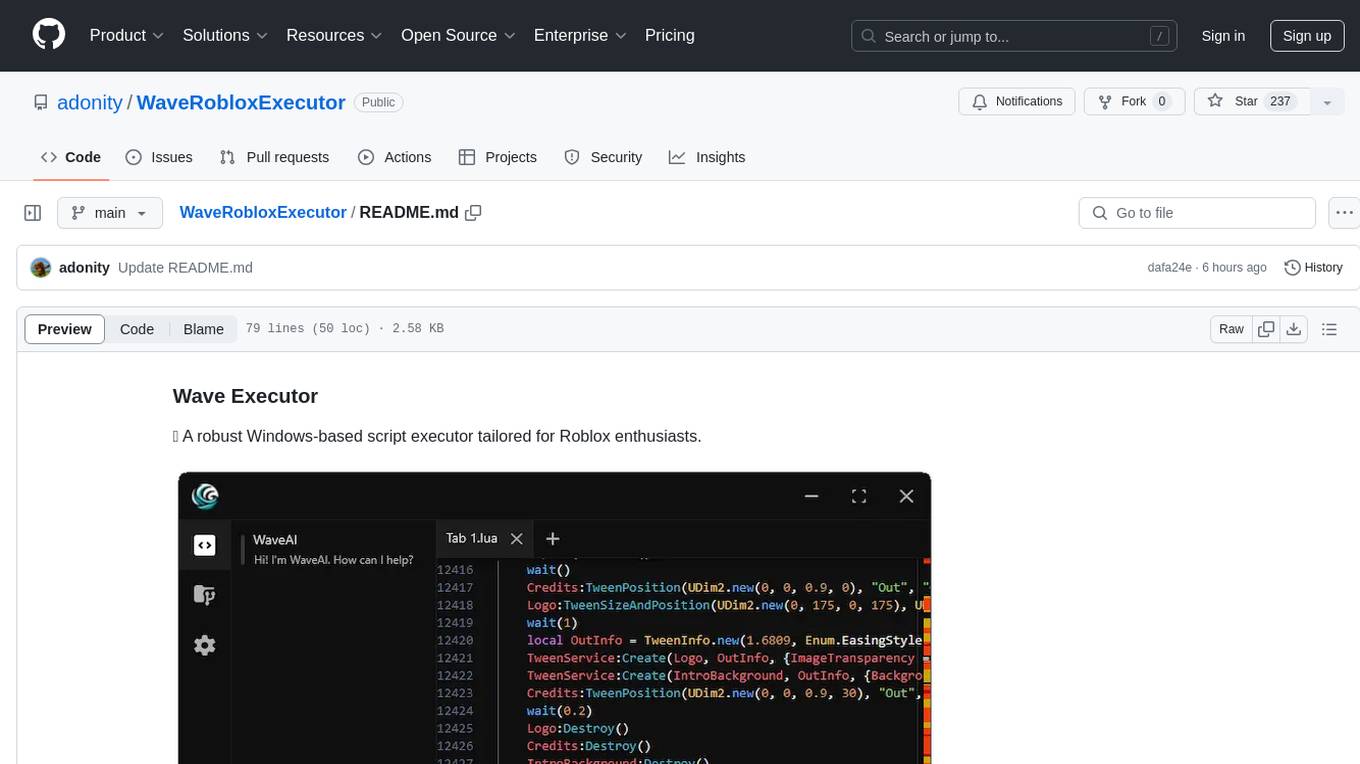

WaveRobloxExecutor

Wave Executor is a cutting-edge script executor tailored for Roblox enthusiasts, offering AI integration for seamless script development, ad-free premium features, and 24/7 customer support. It enhances your Roblox gameplay experience by providing a wide range of features to take your gameplay to new heights.

gpt_mobile

GPT Mobile is a chat assistant for Android that allows users to chat with multiple models at once. It supports various platforms such as OpenAI GPT, Anthropic Claude, and Google Gemini. Users can customize temperature, top p (Nucleus sampling), and system prompt. The app features local chat history, Material You style UI, dark mode support, and per app language setting for Android 13+. It is built using 100% Kotlin, Jetpack Compose, and follows a modern app architecture for Android developers.

hey

Hey is a free CLI-based AI assistant powered by LLMs, allowing users to connect Hey to different LLM services. It provides commands for quick usage, customization options, and integration with code editors. Hey was created for a hackathon and is licensed under the MIT License.

jiwu-mall-chat-tauri

Jiwu Chat Tauri APP is a desktop chat application based on Nuxt3 + Tauri + Element Plus framework. It provides a beautiful user interface with integrated chat and social functions. It also supports AI shopping chat and global dark mode. Users can engage in real-time chat, share updates, and interact with AI customer service through this application.

tiddlywiki-starter-kit

TiddlyWiki Starter Kit is a pre-configured setup for TiddlyWiki, utilizing Tailwind CSS for responsive design and providing multiple wiki support for different purposes. It offers quick operations with keyboard shortcuts, simplified configuration through editing the .env file, and one-click installation using npm create command.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.