fragments

Open-source Next.js template for building apps that are fully generated by AI. By E2B.

Stars: 5834

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

README:

This is an open-source version of apps like Anthropic's Claude Artifacts, Vercel v0, or GPT Engineer.

Powered by the E2B SDK.

- Based on Next.js 14 (App Router, Server Actions), shadcn/ui, TailwindCSS, Vercel AI SDK.

- Uses the E2B SDK by E2B to securely execute code generated by AI.

- Streaming in the UI.

- Can install and use any package from npm, pip.

- Supported stacks (add your own):

- 🔸 Python interpreter

- 🔸 Next.js

- 🔸 Vue.js

- 🔸 Streamlit

- 🔸 Gradio

- Supported LLM Providers (add your own):

- 🔸 OpenAI

- 🔸 Anthropic

- 🔸 Google AI

- 🔸 Mistral

- 🔸 Groq

- 🔸 Fireworks

- 🔸 Together AI

- 🔸 Ollama

- Integrates with Morph Apply model for token efficient, accurate and faster code editing.

Make sure to give us a star!

- git

- Recent version of Node.js and npm package manager

- E2B API Key

- LLM Provider API Key

In your terminal:

git clone https://github.com/e2b-dev/fragments.git

Enter the repository:

cd fragments

Run the following to install the required dependencies:

npm i

Create a .env.local file and set the following:

# Get your API key here - https://e2b.dev/

E2B_API_KEY="your-e2b-api-key"

# OpenAI API Key

OPENAI_API_KEY=

# Other providers

ANTHROPIC_API_KEY=

GROQ_API_KEY=

FIREWORKS_API_KEY=

TOGETHER_API_KEY=

GOOGLE_AI_API_KEY=

GOOGLE_VERTEX_CREDENTIALS=

MISTRAL_API_KEY=

XAI_API_KEY=

### Optional env vars

# (on by default) Get your MORPH key here - https://morphllm.com/dashboard/api-keys

MORPH_API_KEY=

# Domain of the site

NEXT_PUBLIC_SITE_URL=

# Rate limit

RATE_LIMIT_MAX_REQUESTS=

RATE_LIMIT_WINDOW=

# Vercel/Upstash KV (short URLs, rate limiting)

KV_REST_API_URL=

KV_REST_API_TOKEN=

# Supabase (auth)

SUPABASE_URL=

SUPABASE_ANON_KEY=

# PostHog (analytics)

NEXT_PUBLIC_POSTHOG_KEY=

NEXT_PUBLIC_POSTHOG_HOST=

### Disabling functionality (when uncommented)

# Disable API key and base URL input in the chat

# NEXT_PUBLIC_NO_API_KEY_INPUT=

# NEXT_PUBLIC_NO_BASE_URL_INPUT=

# Hide local models from the list of available models

# NEXT_PUBLIC_HIDE_LOCAL_MODELS=npm run dev

npm run build

-

Make sure E2B CLI is installed and you're logged in.

-

Add a new folder under sandbox-templates/

-

Initialize a new template using E2B CLI:

e2b template initThis will create a new file called

e2b.Dockerfile. -

Adjust the

e2b.DockerfileHere's an example streamlit template:

# You can use most Debian-based base images FROM python:3.19-slim RUN pip3 install --no-cache-dir streamlit pandas numpy matplotlib requests seaborn plotly # Copy the code to the container WORKDIR /home/user COPY . /home/user

-

Specify a custom start command in

e2b.toml:start_cmd = "cd /home/user && streamlit run app.py"

-

Deploy the template with the E2B CLI

e2b template build --name <template-name>After the build has finished, you should get the following message:

✅ Building sandbox template <template-id> <template-name> finished. -

Open lib/templates.json in your code editor.

Add your new template to the list. Here's an example for Streamlit:

"streamlit-developer": { "name": "Streamlit developer", "lib": [ "streamlit", "pandas", "numpy", "matplotlib", "request", "seaborn", "plotly" ], "file": "app.py", "instructions": "A streamlit app that reloads automatically.", "port": 8501 // can be null },

Provide a template id (as key), name, list of dependencies, entrypoint and a port (optional). You can also add additional instructions that will be given to the LLM.

-

Optionally, add a new logo under public/thirdparty/templates

-

Open lib/models.json in your code editor.

-

Add a new entry to the models list:

{ "id": "mistral-large", "name": "Mistral Large", "provider": "Ollama", "providerId": "ollama" }Where id is the model id, name is the model name (visible in the UI), provider is the provider name and providerId is the provider tag (see adding providers below).

-

Open lib/models.ts in your code editor.

-

Add a new entry to the

providerConfigslist:Example for fireworks:

fireworks: () => createOpenAI({ apiKey: apiKey || process.env.FIREWORKS_API_KEY, baseURL: baseURL || 'https://api.fireworks.ai/inference/v1' })(modelNameString),

-

Optionally, adjust the default structured output mode in the

getDefaultModefunction:if (providerId === 'fireworks') { return 'json' }

-

Optionally, add a new logo under public/thirdparty/logos

As an open-source project, we welcome contributions from the community. If you are experiencing any bugs or want to add some improvements, please feel free to open an issue or pull request.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for fragments

Similar Open Source Tools

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

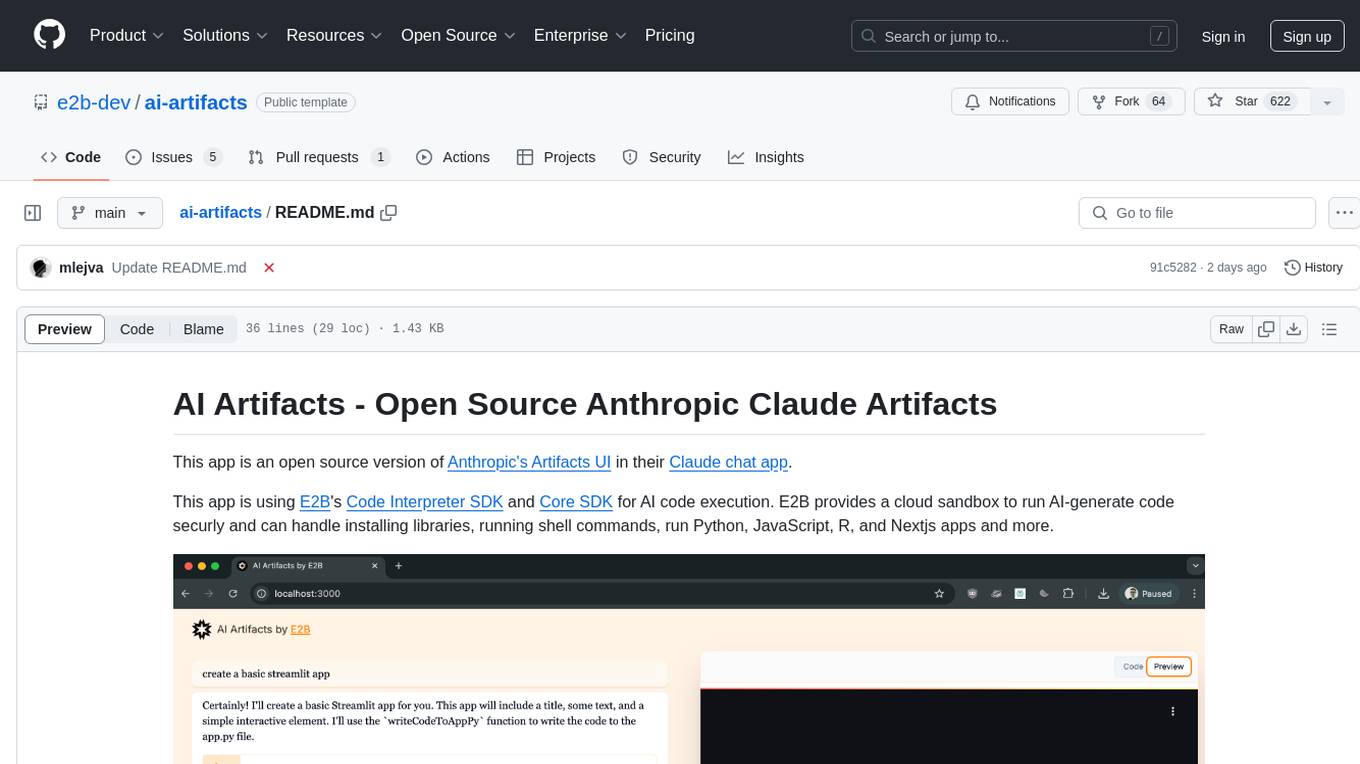

ai-artifacts

AI Artifacts is an open source tool that replicates Anthropic's Artifacts UI in the Claude chat app. It utilizes E2B's Code Interpreter SDK and Core SDK for secure AI code execution in a cloud sandbox environment. Users can run AI-generated code in various languages such as Python, JavaScript, R, and Nextjs apps. The tool also supports running AI-generated Python in Jupyter notebook, Next.js apps, and Streamlit apps. Additionally, it offers integration with Vercel AI SDK for tool calling and streaming responses from the model.

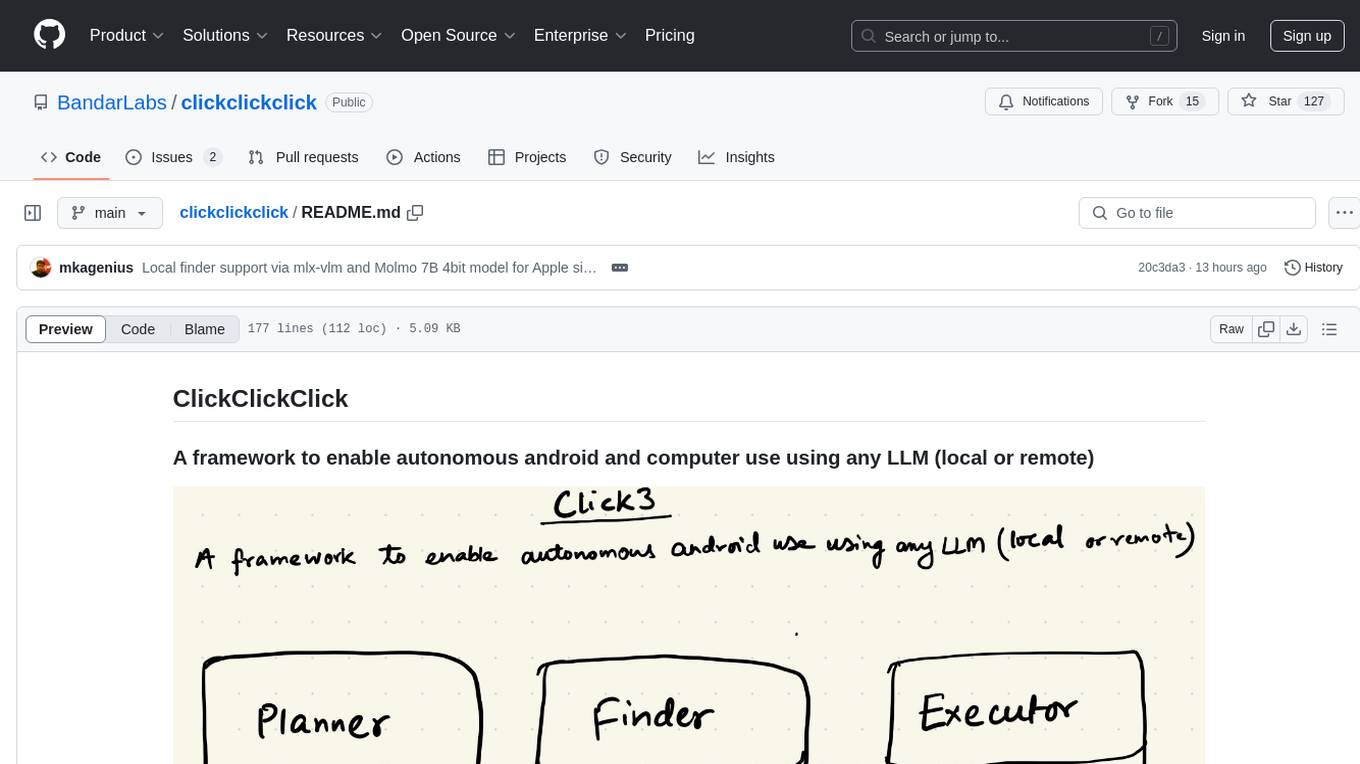

clickclickclick

ClickClickClick is a framework designed to enable autonomous Android and computer use using various LLM models, both locally and remotely. It supports tasks such as drafting emails, opening browsers, and starting games, with current support for local models via Ollama, Gemini, and GPT 4o. The tool is highly experimental and evolving, with the best results achieved using specific model combinations. Users need prerequisites like `adb` installation and USB debugging enabled on Android phones. The tool can be installed via cloning the repository, setting up a virtual environment, and installing dependencies. It can be used as a CLI tool or script, allowing users to configure planner and finder models for different tasks. Additionally, it can be used as an API to execute tasks based on provided prompts, platform, and models.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

SimplerLLM

SimplerLLM is an open-source Python library that simplifies interactions with Large Language Models (LLMs) for researchers and beginners. It provides a unified interface for different LLM providers, tools for enhancing language model capabilities, and easy development of AI-powered tools and apps. The library offers features like unified LLM interface, generic text loader, RapidAPI connector, SERP integration, prompt template builder, and more. Users can easily set up environment variables, create LLM instances, use tools like SERP, generic text loader, calling RapidAPI APIs, and prompt template builder. Additionally, the library includes chunking functions to split texts into manageable chunks based on different criteria. Future updates will bring more tools, interactions with local LLMs, prompt optimization, response evaluation, GPT Trainer, document chunker, advanced document loader, integration with more providers, Simple RAG with SimplerVectors, integration with vector databases, agent builder, and LLM server.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

raglite

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite. It offers configurable options for choosing LLM providers, database types, and rerankers. The toolkit is fast and permissive, utilizing lightweight dependencies and hardware acceleration. RAGLite provides features like PDF to Markdown conversion, multi-vector chunk embedding, optimal semantic chunking, hybrid search capabilities, adaptive retrieval, and improved output quality. It is extensible with a built-in Model Context Protocol server, customizable ChatGPT-like frontend, document conversion to Markdown, and evaluation tools. Users can configure RAGLite for various tasks like configuring, inserting documents, running RAG pipelines, computing query adapters, evaluating performance, running MCP servers, and serving frontends.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

AI-Video-Boilerplate-Simple

AI-video-boilerplate-simple is a free Live AI Video boilerplate for testing out live video AI experiments. It includes a simple Flask server that serves files, supports live video from various sources, and integrates with Roboflow for AI vision. Users can use this template for projects, research, business ideas, and homework. It is lightweight and can be deployed on popular cloud platforms like Replit, Vercel, Digital Ocean, or Heroku.

supabase-mcp

Supabase MCP Server standardizes how Large Language Models (LLMs) interact with Supabase, enabling AI assistants to manage tables, fetch config, and query data. It provides tools for project management, database operations, project configuration, branching (experimental), and development tools. The server is pre-1.0, so expect some breaking changes between versions.

fast-mcp

Fast MCP is a Ruby gem that simplifies the integration of AI models with your Ruby applications. It provides a clean implementation of the Model Context Protocol, eliminating complex communication protocols, integration challenges, and compatibility issues. With Fast MCP, you can easily connect AI models to your servers, share data resources, choose from multiple transports, integrate with frameworks like Rails and Sinatra, and secure your AI-powered endpoints. The gem also offers real-time updates and authentication support, making AI integration a seamless experience for developers.

Lumos

Lumos is a Chrome extension powered by a local LLM co-pilot for browsing the web. It allows users to summarize long threads, news articles, and technical documentation. Users can ask questions about reviews and product pages. The tool requires a local Ollama server for LLM inference and embedding database. Lumos supports multimodal models and file attachments for processing text and image content. It also provides options to customize models, hosts, and content parsers. The extension can be easily accessed through keyboard shortcuts and offers tools for automatic invocation based on prompts.

raycast_api_proxy

The Raycast AI Proxy is a tool that acts as a proxy for the Raycast AI application, allowing users to utilize the application without subscribing. It intercepts and forwards Raycast requests to various AI APIs, then reformats the responses for Raycast. The tool supports multiple AI providers and allows for custom model configurations. Users can generate self-signed certificates, add them to the system keychain, and modify DNS settings to redirect requests to the proxy. The tool is designed to work with providers like OpenAI, Azure OpenAI, Google, and more, enabling tasks such as AI chat completions, translations, and image generation.

steel-browser

Steel is an open-source browser API designed for AI agents and applications, simplifying the process of building live web agents and browser automation tools. It serves as a core building block for a production-ready, containerized browser sandbox with features like stealth capabilities, text-to-markdown session management, UI for session viewing/debugging, and full browser control through popular automation frameworks. Steel allows users to control, run, and manage a production-ready browser environment via a REST API, offering features such as full browser control, session management, proxy support, extension support, debugging tools, anti-detection mechanisms, resource management, and various browser tools. It aims to streamline complex browsing tasks programmatically, enabling users to focus on their AI applications while Steel handles the underlying complexity.

For similar tasks

spatz

Spatz is a complete, fullstack template for Svelte that includes features such as Sveltekit for building fast web apps, Pocketbase for User Auth and Database, OpenAI for chatbots, Vercel AI SDK for AI/ML models, TailwindCSS for UI development, DaisyUI for components, and Zod for schema declaration and validation. The template provides a structured project setup with components, stores, routes, and APIs. It also offers theming and styling options with pre-loaded themes from DaisyUI. Contributions are welcomed through feature requests or pull requests.

mesop

Mesop is a Python-based UI framework designed for rapid web app development, particularly for demos and internal apps. It offers an intuitive interface for UI novices, frictionless developer workflows with hot reload and IDE support, and flexibility to build custom UIs without the need for JavaScript/CSS/HTML. Mesop allows users to write UI in idiomatic Python code and compose UI into components using Python functions. It is used at Google for internal app development and provides a quick way to build delightful web apps in Python.

spatz-2

Spatz-2 is a complete, fullstack template for Svelte, utilizing technologies such as Sveltekit, Pocketbase, OpenAI, Vercel AI SDK, TailwindCSS, svelte-animations, and Zod. It offers features like user authentication, admin dashboard, dark/light mode themes, AI chatbot, guestbook, and forms with client/server validation. The project structure includes components, stores, routes, APIs, and icons. Spatz-2 aims to provide a futuristic web framework for building fast web apps with advanced functionalities and easy customization.

ryoma

Ryoma is an AI Powered Data Agent framework that offers a comprehensive solution for data analysis, engineering, and visualization. It leverages cutting-edge technologies like Langchain, Reflex, Apache Arrow, Jupyter Ai Magics, Amundsen, Ibis, and Feast to provide seamless integration of language models, build interactive web applications, handle in-memory data efficiently, work with AI models, and manage machine learning features in production. Ryoma also supports various data sources like Snowflake, Sqlite, BigQuery, Postgres, MySQL, and different engines like Apache Spark and Apache Flink. The tool enables users to connect to databases, run SQL queries, and interact with data and AI models through a user-friendly UI called Ryoma Lab.

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

lihil

Lihil is a performant, productive, and professional web framework designed to make Python the mainstream programming language for web development. It is 100% test covered and strictly typed, offering fast performance, ergonomic API, and built-in solutions for common problems. Lihil is suitable for enterprise web development, delivering robust and scalable solutions with best practices in microservice architecture and related patterns. It features dependency injection, OpenAPI docs generation, error response generation, data validation, message system, testability, and strong support for AI features. Lihil is ASGI compatible and uses starlette as its ASGI toolkit, ensuring compatibility with starlette classes and middlewares. The framework follows semantic versioning and has a roadmap for future enhancements and features.

enferno

Enferno is a modern Flask framework optimized for AI-assisted development workflows. It combines carefully crafted development patterns, smart Cursor Rules, and modern libraries to enable developers to build sophisticated web applications with unprecedented speed. Enferno's intelligent patterns and contextual guides help create production-ready SAAS applications faster than ever. It includes features like modern stack, authentication, OAuth integration, database support, task queue, frontend components, security measures, Docker readiness, and more.

mesop

Mesop is a Python-based UI framework designed for rapid web app development, particularly for demos and internal apps. It allows users to write UI in Python code, offers reactive UI paradigm, ready-to-use components, hot reload feature, rich IDE support, and the ability to build custom UIs without writing Javascript/CSS/HTML. Mesop is intuitive for UI novices, provides frictionless developer workflows, and is flexible for creating delightful demos. It is used at Google for rapid internal app development.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.