enferno

Enferno is a modern Flask framework optimized for AI-assisted development. By combining smart patterns and Cursor Rules with modern libraries, it enables developers to build sophisticated web applications with unprecedented speed.

Stars: 523

Enferno is a modern Flask framework optimized for AI-assisted development workflows. It combines carefully crafted development patterns, smart Cursor Rules, and modern libraries so that developers can build sophisticated web applications quickly. Its patterns and contextual guides are aimed at getting production-ready SaaS applications built faster. It includes a modern stack, authentication, OAuth integration, database support, a task queue, frontend components, security measures, a Docker setup, and more.

README:

Enferno is a modern Flask framework optimized for AI-assisted development workflows. By combining carefully crafted development patterns, smart Cursor Rules, and modern libraries, it enables developers to build sophisticated web applications with unprecedented speed. Whether you're using AI-powered IDEs like Cursor or traditional tools, Enferno's intelligent patterns and contextual guides help you create production-ready SaaS applications faster than ever.

- Modern Stack: Python 3.11+, Flask, Vue 3, Vuetify 3

- Authentication: Flask-Security with role-based access control

- OAuth Integration: Google and GitHub login via Flask-Dance

- Database: SQLAlchemy ORM with PostgreSQL/SQLite support

- Task Queue: Celery with Redis for background tasks

- Frontend: Client-side Vue.js with Vuetify components

- Security: CSRF protection, secure session handling

- Docker Ready: Production-grade Docker configuration

- Cursor Rules: Smart IDE-based code generation and assistance

- Package Management: Fast installation with uv

Frontend features:

- Vue.js without build tools (direct browser integration)

- Vuetify Material Design components

- Axios for API calls

- Snackbar notifications pattern

- Dialog forms pattern

- Data table server pattern

- Authentication state integration

- Material Design Icons
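The "data table server pattern" listed above generally means the browser sends a page number, a page size, and a sort key, and the server answers with one page of rows plus the total row count. The sketch below illustrates that contract in plain Python. It is not Enferno's code: `Page` and `paginate` are hypothetical names, and a real application would more likely push the sorting and slicing into the database query.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Page:
    """One page of rows plus the total count the table needs for its pager."""
    items: list[dict[str, Any]]
    total: int


def paginate(rows, page=1, per_page=10, sort_by=None, descending=False) -> Page:
    """Return the requested page of `rows` (hypothetical helper).

    `page` is 1-based; `sort_by` is an optional key present in every row.
    """
    if page < 1 or per_page < 1:
        raise ValueError("page and per_page must be positive")
    if sort_by:
        ordered = sorted(rows, key=lambda r: r[sort_by], reverse=descending)
    else:
        ordered = list(rows)
    start = (page - 1) * per_page
    return Page(items=ordered[start:start + per_page], total=len(ordered))


# Example: 25 rows, third page of 10 holds the last 5 rows.
users = [{"id": i, "name": f"user{i}"} for i in range(1, 26)]
result = paginate(users, page=3, per_page=10)
print(result.total, [r["id"] for r in result.items])  # 25 [21, 22, 23, 24, 25]
```

Returning the total alongside the items lets the table render its pager without a second request.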

Supports social login with:

- Google (profile and email scope)

- GitHub (user:email scope)

Configure in .env:

# Google OAuth

GOOGLE_AUTH_ENABLED=true

GOOGLE_OAUTH_CLIENT_ID=your_client_id

GOOGLE_OAUTH_CLIENT_SECRET=your_client_secret

# GitHub OAuth

GITHUB_AUTH_ENABLED=true

GITHUB_OAUTH_CLIENT_ID=your_client_id

GITHUB_OAUTH_CLIENT_SECRET=your_client_secret

Prerequisites:

- Python 3.11+

- Redis (for caching and sessions)

- PostgreSQL (optional, SQLite works for development)

- Git

- uv (fast Python package installer and resolver)
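A quick way to confirm the tools in this list before installing is a small check script. The following is a minimal sketch using only the standard library; `check_prerequisites` is a hypothetical helper, not part of Enferno, and it only checks the Python version and whether `git` and `uv` are on the PATH. Redis and PostgreSQL are services, so they are not probed here.

```python
import shutil
import sys

REQUIRED_PYTHON = (3, 11)
REQUIRED_TOOLS = ("git", "uv")  # command-line tools from the list above


def check_prerequisites(version=sys.version_info, which=shutil.which):
    """Return a list of human-readable problems; empty means all checks passed.

    `version` and `which` are injectable so the function can be tested
    without depending on the local machine.
    """
    problems = []
    if tuple(version[:2]) < REQUIRED_PYTHON:
        problems.append(
            f"Python {REQUIRED_PYTHON[0]}.{REQUIRED_PYTHON[1]}+ required, "
            f"found {version[0]}.{version[1]}"
        )
    for tool in REQUIRED_TOOLS:
        if which(tool) is None:
            problems.append(f"{tool} not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print(problem)
```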

- Install uv:

# Install using pip

pip install uv

# Or using the installer script

curl -sSf https://astral.sh/uv/install.sh | bash

- Clone and setup:

git clone git@github.com:level09/enferno.git

cd enferno

./setup.sh # Creates Python environment, installs requirements, and generates secure .env

- Activate environment:

source .venv/bin/activate

- Initialize application:

flask create-db # Setup database

flask install # Create admin user

- Run development server:

flask run

One-command setup with Docker:

docker compose up --build

The Docker setup includes:

- Redis for caching and session management

- PostgreSQL database

- Nginx for serving static files

- Celery for background tasks

Key environment variables (.env):

# Core

FLASK_APP=run.py

FLASK_DEBUG=1 # 0 in production

SECRET_KEY=your_secret_key

# Database (choose one)

SQLALCHEMY_DATABASE_URI=sqlite:///enferno.sqlite3

# Or for PostgreSQL:

# SQLALCHEMY_DATABASE_URI=postgresql://username:password@localhost/dbname

# Redis & Celery

REDIS_URL=redis://localhost:6379/0

CELERY_BROKER_URL=redis://localhost:6379/1

CELERY_RESULT_BACKEND=redis://localhost:6379/2

# Email Settings (optional)

MAIL_SERVER=smtp.example.com

MAIL_PORT=465

MAIL_USE_SSL=True

MAIL_USERNAME=your_email

MAIL_PASSWORD=your_password

[email protected]

# OAuth (optional)

GOOGLE_AUTH_ENABLED=true

GOOGLE_OAUTH_CLIENT_ID=your_client_id

GOOGLE_OAUTH_CLIENT_SECRET=your_client_secret

GITHUB_AUTH_ENABLED=true

GITHUB_OAUTH_CLIENT_ID=your_client_id

GITHUB_OAUTH_CLIENT_SECRET=your_client_secret

# Security Settings

SECURITY_PASSWORD_SALT=your_secure_salt

SECURITY_TOTP_SECRETS=your_totp_secrets

Security features:

- Two-factor authentication (2FA)

- WebAuthn support

- OAuth integration

- Password policies

- Session protection

- CSRF protection

- Secure cookie settings

- Rate limiting

- XSS protection
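The environment variables listed under "Key environment variables (.env)" have to be turned into typed settings somewhere in the application. The sketch below shows one way that might look using only the standard library. It is an illustration, not Enferno's actual settings module: `env_flag` and `load_settings` are hypothetical names, the set of accepted truthy strings is an assumption, and the fallback values simply reuse the examples from the listing.

```python
import os


def env_flag(name, default=False, environ=os.environ):
    """Interpret an environment variable as a boolean flag (assumed truthy set)."""
    value = environ.get(name)
    if value is None:
        return default
    return value.strip().lower() in {"1", "true", "yes", "on"}


def load_settings(environ=os.environ):
    """Collect a few of the documented variables into a plain dict."""
    settings = {
        "SECRET_KEY": environ.get("SECRET_KEY"),
        "DEBUG": env_flag("FLASK_DEBUG", environ=environ),
        "SQLALCHEMY_DATABASE_URI": environ.get(
            "SQLALCHEMY_DATABASE_URI", "sqlite:///enferno.sqlite3"
        ),
        "REDIS_URL": environ.get("REDIS_URL", "redis://localhost:6379/0"),
        # OAuth providers stay off unless explicitly enabled.
        "GOOGLE_AUTH_ENABLED": env_flag("GOOGLE_AUTH_ENABLED", environ=environ),
        "GITHUB_AUTH_ENABLED": env_flag("GITHUB_AUTH_ENABLED", environ=environ),
    }
    if not settings["SECRET_KEY"]:
        # Failing fast avoids running with an empty signing key.
        raise RuntimeError("SECRET_KEY must be set")
    return settings


# Example with an explicit mapping instead of the real process environment.
example = load_settings(
    {"SECRET_KEY": "change-me", "FLASK_DEBUG": "0", "GOOGLE_AUTH_ENABLED": "true"}
)
```

Passing the mapping in explicitly keeps the helper easy to test and makes it clear which variables it reads.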

For detailed documentation, visit docs.enferno.io

Contributions welcome! Please read our Contributing Guide.

MIT licensed.

Similar Open Source Tools

backend.ai

Backend.AI is a streamlined, container-based computing cluster platform that hosts popular computing/ML frameworks and diverse programming languages, with pluggable heterogeneous accelerator support including CUDA GPU, ROCm GPU, TPU, IPU and other NPUs. It allocates and isolates the underlying computing resources for multi-tenant computation sessions on-demand or in batches with customizable job schedulers with its own orchestrator. All its functions are exposed as REST/GraphQL/WebSocket APIs.

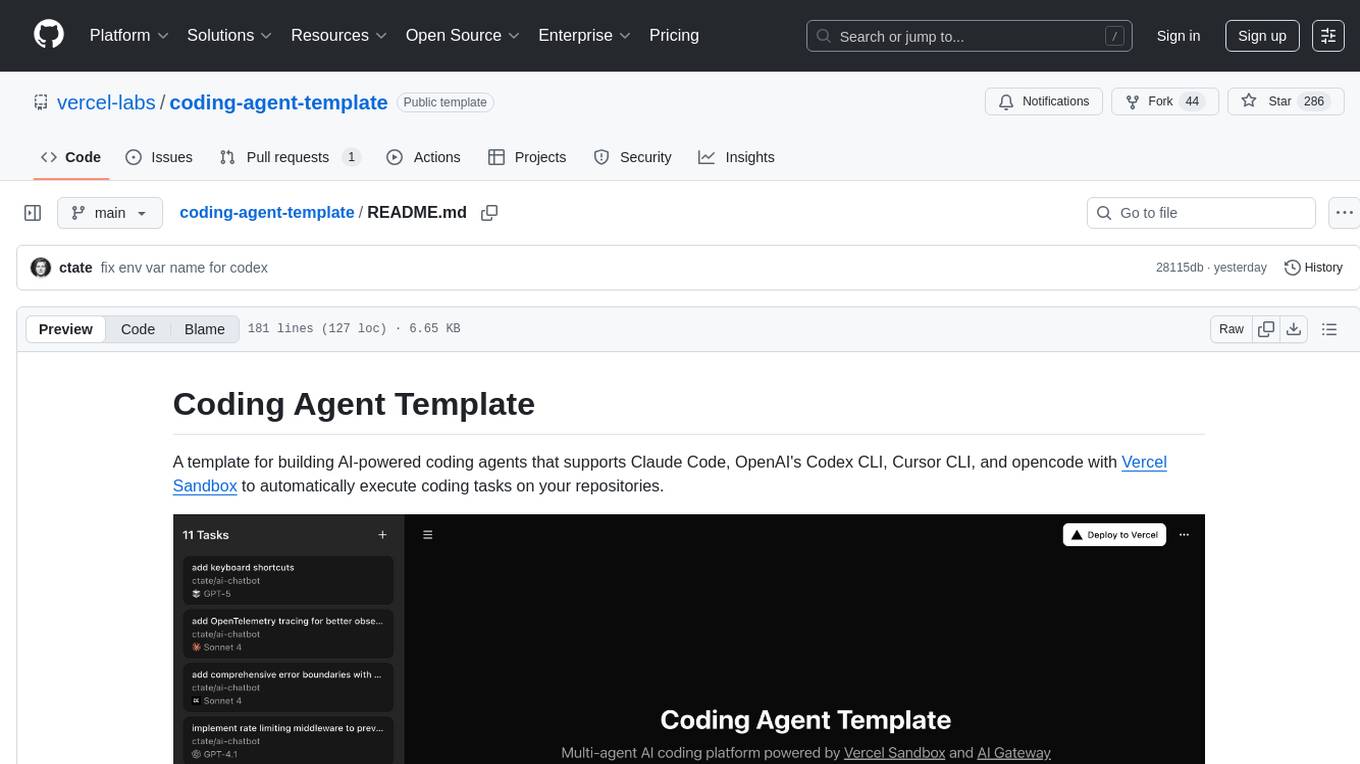

coding-agent-template

Coding Agent Template is a versatile tool for building AI-powered coding agents that support various coding tasks using Claude Code, OpenAI's Codex CLI, Cursor CLI, and opencode with Vercel Sandbox. It offers features like multi-agent support, Vercel Sandbox for secure code execution, AI Gateway integration, AI-generated branch names, task management, persistent storage, Git integration, and a modern UI built with Next.js and Tailwind CSS. Users can easily deploy their own version of the template to Vercel and set up the tool by cloning the repository, installing dependencies, configuring environment variables, setting up the database, and starting the development server. The tool simplifies the process of creating tasks, monitoring progress, reviewing results, and managing tasks, making it ideal for developers looking to automate coding tasks with AI agents.

lyraios

LYRAIOS (LLM-based Your Reliable AI Operating System) is an advanced AI assistant platform built with FastAPI and Streamlit, designed to serve as an operating system for AI applications. It offers core features such as AI process management, memory system, and I/O system. The platform includes built-in tools like Calculator, Web Search, Financial Analysis, File Management, and Research Tools. It also provides specialized assistant teams for Python and research tasks. LYRAIOS is built on a technical architecture comprising FastAPI backend, Streamlit frontend, Vector Database, PostgreSQL storage, and Docker support. It offers features like knowledge management, process control, and security & access control. The roadmap includes enhancements in core platform, AI process management, memory system, tools & integrations, security & access control, open protocol architecture, multi-agent collaboration, and cross-platform support.

fastapi-langgraph-agent-production-ready-template

A production-ready FastAPI template for building AI agent applications with LangGraph integration. This template provides a robust foundation for building scalable, secure, and maintainable AI agent services. It includes features like FastAPI for high-performance async API endpoints, LangGraph integration, structured logging, rate limiting, PostgreSQL for data persistence, Docker support, security measures like JWT-based authentication and input sanitization, developer-friendly features like environment-specific configuration and type hints, a model evaluation framework with automated metric-based evaluation and detailed JSON reports, and a configuration system with environment-specific settings.

astron-rpa

AstronRPA is an enterprise-grade Robotic Process Automation (RPA) desktop application that supports low-code/no-code development. It enables users to rapidly build workflows and automate desktop software and web pages. The tool offers comprehensive automation support for various applications, highly component-based design, enterprise-grade security and collaboration features, developer-friendly experience, native agent empowerment, and multi-channel trigger integration. It follows a frontend-backend separation architecture with components for system operations, browser automation, GUI automation, AI integration, and more. The tool is deployed via Docker and designed for complex RPA scenarios.

gemini-cli

Gemini CLI is an open-source AI agent that provides lightweight access to Gemini, offering powerful capabilities like code understanding, generation, automation, integration, and advanced features. It is designed for developers who prefer working in the command line and offers extensibility through MCP support. The tool integrates directly into GitHub workflows and offers various authentication options for individual developers, enterprise teams, and production workloads. With features like code querying, editing, app generation, debugging, and GitHub integration, Gemini CLI aims to streamline development workflows and enhance productivity.

aiogram-django-template

Aiogram & Django API Template is a robust and secure Django template with advanced features like Docker integration, Celery for asynchronous tasks, Sentry for error tracking, Django Rest Framework for building APIs, and more. It provides scalability options, up-to-date dependencies, and integration with AWS S3 for storage. The template includes configuration guides for secrets, ports, performance tuning, application settings, CORS and CSRF settings, and database configuration. Security, scalability, and monitoring are emphasized for efficient Django API development.

sim

Sim is a platform that allows users to build and deploy AI agent workflows quickly and easily. It provides cloud-hosted and self-hosted options, along with support for local AI models. Users can set up the application using Docker Compose, Dev Containers, or manual setup with PostgreSQL and pgvector extension. The platform utilizes technologies like Next.js, Bun, PostgreSQL with Drizzle ORM, Better Auth for authentication, Shadcn and Tailwind CSS for UI, Zustand for state management, ReactFlow for flow editor, Fumadocs for documentation, Turborepo for monorepo management, Socket.io for real-time communication, and Trigger.dev for background jobs.

Dive

Dive is an open-source MCP Host Desktop Application that integrates with any LLM that supports function calling. It offers universal LLM support, cross-platform compatibility, Model Context Protocol support for AI agent integration, OAP cloud integration, dual architecture for optimal performance, multi-language support, advanced API management, granular tool control, custom instructions, an auto-update mechanism, and more. Dive provides a user-friendly interface for managing multiple AI models and tools, with recent updates introducing major architecture changes, new features, improvements, and platform availability. Users can download and install Dive on Windows, macOS, and Linux, and set up MCP tools through local servers or OAP cloud services.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

simba

Simba is an open source, portable Knowledge Management System (KMS) designed to seamlessly integrate with any Retrieval-Augmented Generation (RAG) system. It features a modern UI and modular architecture, allowing developers to focus on building advanced AI solutions without the complexities of knowledge management. Simba offers a user-friendly interface to visualize and modify document chunks, supports various vector stores and embedding models, and simplifies knowledge management for developers. It is community-driven, extensible, and aims to enhance AI functionality by providing a seamless integration with RAG-based systems.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

zcf

ZCF (Zero-Config Claude-Code Flow) is a tool that provides zero-configuration, one-click setup for Claude Code with bilingual support, intelligent agent system, and personalized AI assistant. It offers an interactive menu for easy operations and direct commands for quick execution. The tool supports bilingual operation with automatic language switching and customizable AI output styles. ZCF also includes features like BMad Workflow for enterprise-grade workflow system, Spec Workflow for structured feature development, CCR (Claude Code Router) support for proxy routing, and CCometixLine for real-time usage tracking. It provides smart installation, complete configuration management, and core features like professional agents, command system, and smart configuration. ZCF is cross-platform compatible, supports Windows and Termux environments, and includes security features like dangerous operation confirmation mechanism.

codemie-code

Unified AI Coding Assistant CLI for managing multiple AI agents like Claude Code, Google Gemini, OpenCode, and custom AI agents. Supports OpenAI, Azure OpenAI, AWS Bedrock, LiteLLM, Ollama, and Enterprise SSO. Features built-in LangGraph agent with file operations, command execution, and planning tools. Cross-platform support for Windows, Linux, and macOS. Ideal for developers seeking a powerful alternative to GitHub Copilot or Cursor.

ai-doc-gen

An AI-powered code documentation generator that automatically analyzes repositories and creates comprehensive documentation using advanced language models. The system employs a multi-agent architecture to perform specialized code analysis and generate structured documentation.

For similar tasks

spatz

Spatz is a complete, fullstack template for Svelte that includes features such as Sveltekit for building fast web apps, Pocketbase for User Auth and Database, OpenAI for chatbots, Vercel AI SDK for AI/ML models, TailwindCSS for UI development, DaisyUI for components, and Zod for schema declaration and validation. The template provides a structured project setup with components, stores, routes, and APIs. It also offers theming and styling options with pre-loaded themes from DaisyUI. Contributions are welcomed through feature requests or pull requests.

mesop

Mesop is a Python-based UI framework designed for rapid web app development, particularly for demos and internal apps. It offers an intuitive interface for UI novices, frictionless developer workflows with hot reload and IDE support, and flexibility to build custom UIs without the need for JavaScript/CSS/HTML. Mesop allows users to write UI in idiomatic Python code and compose UI into components using Python functions. It is used at Google for internal app development and provides a quick way to build delightful web apps in Python.

spatz-2

Spatz-2 is a complete, fullstack template for Svelte, utilizing technologies such as Sveltekit, Pocketbase, OpenAI, Vercel AI SDK, TailwindCSS, svelte-animations, and Zod. It offers features like user authentication, admin dashboard, dark/light mode themes, AI chatbot, guestbook, and forms with client/server validation. The project structure includes components, stores, routes, APIs, and icons. Spatz-2 aims to provide a futuristic web framework for building fast web apps with advanced functionalities and easy customization.

ryoma

Ryoma is an AI Powered Data Agent framework that offers a comprehensive solution for data analysis, engineering, and visualization. It leverages cutting-edge technologies like Langchain, Reflex, Apache Arrow, Jupyter Ai Magics, Amundsen, Ibis, and Feast to provide seamless integration of language models, build interactive web applications, handle in-memory data efficiently, work with AI models, and manage machine learning features in production. Ryoma also supports various data sources like Snowflake, Sqlite, BigQuery, Postgres, MySQL, and different engines like Apache Spark and Apache Flink. The tool enables users to connect to databases, run SQL queries, and interact with data and AI models through a user-friendly UI called Ryoma Lab.

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

lihil

Lihil is a performant, productive, and professional web framework designed to make Python the mainstream programming language for web development. It is 100% test covered and strictly typed, offering fast performance, ergonomic API, and built-in solutions for common problems. Lihil is suitable for enterprise web development, delivering robust and scalable solutions with best practices in microservice architecture and related patterns. It features dependency injection, OpenAPI docs generation, error response generation, data validation, message system, testability, and strong support for AI features. Lihil is ASGI compatible and uses starlette as its ASGI toolkit, ensuring compatibility with starlette classes and middlewares. The framework follows semantic versioning and has a roadmap for future enhancements and features.

mesop

Mesop is a Python-based UI framework designed for rapid web app development, particularly for demos and internal apps. It allows users to write UI in Python code, offers reactive UI paradigm, ready-to-use components, hot reload feature, rich IDE support, and the ability to build custom UIs without writing Javascript/CSS/HTML. Mesop is intuitive for UI novices, provides frictionless developer workflows, and is flexible for creating delightful demos. It is used at Google for rapid internal app development.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual-anchor VTubers (NVIDIA GPU version). It supports knowledge-base chat through fastgpt, with a complete LLM solution built from [fastgpt] + [one-api] + [Xinference]. It connects to bilibili live streams to reply to bullet comments and to greet viewers entering the room. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expressions are controlled through Vtuber Studio. It can output stable-diffusion-webui drawings to an OBS live room, with NSFW image detection via public-NSFW-y-distinguish. Search and image search use duckduckgo (requires a VPN) or Baidu image search (no VPN required). Other features include an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, automatic idle swaying during chat and singing, multi-scene switching, background music switching, automatic day and night scene switching, and open-ended singing and drawing where the AI judges the content automatically.