moling

MoLing is a computer-use and browser-use based MCP server. It is a locally deployed, dependency-free office AI assistant.

Stars: 125

MoLing is a computer-use and browser-use MCP Server that implements system interaction through operating system APIs, enabling file system operations such as reading, writing, merging, statistics, and aggregation, as well as the ability to execute system commands. It is a dependency-free local office automation assistant. Requiring no installation of any dependencies, MoLing can be run directly and is compatible with multiple operating systems, including Windows, Linux, and macOS. This eliminates the hassle of dealing with environment conflicts involving Node.js, Python, Docker, and other development environments. Command-line operations are dangerous and should be used with caution. MoLing supports features like file system operations, command-line terminal execution, browser control powered by 'github.com/chromedp/chromedp', and future plans for personal PC data organization, document writing assistance, schedule planning, and life assistant features. MoLing has been tested on macOS but may have issues on other operating systems.

README:

MoLing is a computer-use and browser-use MCP Server that implements system interaction through operating system APIs, enabling file system operations such as reading, writing, merging, statistics, and aggregation, as well as the ability to execute system commands. It is a dependency-free local office automation assistant.

[!IMPORTANT] Requiring no installation of any dependencies, MoLing can be run directly and is compatible with multiple operating systems, including Windows, Linux, and macOS. This eliminates the hassle of dealing with environment conflicts involving Node.js, Python, Docker and other development environments.

[!CAUTION] Command-line operations are dangerous and should be used with caution.

- File System Operations: Reading, writing, merging, statistics, and aggregation

- Command-line Terminal: Execute system commands directly

- Browser Control: Powered by github.com/chromedp/chromedp

- Future Plans:

- Personal PC data organization

- Document writing assistance

- Schedule planning

- Life assistant features

[!WARNING] Currently, MoLing has only been tested on macOS, and other operating systems may have issues.

- Claude

- Cline

- Cherry Studio

- etc. (any client that supports the MCP protocol)

MoLing in Claude

The configuration file will be generated at /Users/username/.moling/config/config.json, and you can modify its contents as needed.

If the file does not exist, you can create it using moling config --init.
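The check described above can be sketched in a few lines of Python. This is only an illustration: the helper names `moling_config_path` and `needs_init` are hypothetical, and the location under the home directory is an assumption inferred from the example path /Users/username/.moling/config/config.json.

```python
from pathlib import Path
from typing import Optional


def moling_config_path(home: Optional[Path] = None) -> Path:
    """Return the assumed location of the MoLing config file.

    Assumption: the file lives at <home>/.moling/config/config.json,
    as suggested by the example path in the README.
    """
    base = home if home is not None else Path.home()
    return base / ".moling" / "config" / "config.json"


def needs_init(home: Optional[Path] = None) -> bool:
    """Return True when the config file is missing, i.e. when
    `moling config --init` would be the next step."""
    return not moling_config_path(home).is_file()


if __name__ == "__main__":
    if needs_init():
        print("Config not found; run: moling config --init")
    else:
        print(f"Config found at {moling_config_path()}")
```

The sketch only inspects the file system; it does not run moling or create the file itself.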

For example, to configure the Claude client, add the following configuration:

[!TIP]

Only 3-6 lines of configuration are needed.

Claude config path:

~/Library/Application\ Support/Claude/claude_desktop_config

{
  "mcpServers": {
    "MoLing": {
      "command": "/usr/local/bin/moling",
      "args": []
    }
  }
}

Here, /usr/local/bin/moling is the path to the MoLing server binary you downloaded.
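If you prefer to add this entry from a script rather than by hand, the merge can be sketched in Python. This is a minimal illustration, not part of MoLing: the helper name `add_moling_server` is hypothetical, and it assumes only the `mcpServers` layout shown in the configuration above.

```python
import json


def add_moling_server(config: dict, binary_path: str = "/usr/local/bin/moling") -> dict:
    """Return a copy of an MCP client config with a "MoLing" server entry added.

    Hypothetical helper: it assumes only the "mcpServers" layout from the README.
    """
    updated = dict(config)  # shallow copy so the caller's top-level dict is untouched
    servers = dict(updated.get("mcpServers", {}))  # keep any servers already configured
    servers["MoLing"] = {"command": binary_path, "args": []}
    updated["mcpServers"] = servers
    return updated


if __name__ == "__main__":
    existing = {"mcpServers": {"other": {"command": "/usr/bin/other", "args": []}}}
    print(json.dumps(add_moling_server(existing), indent=2))
```

Existing server entries are preserved, so the helper can be applied to a config that already lists other MCP servers. Reading and writing the actual client config file is left out on purpose.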

Automatic Configuration

Run moling client --install to automatically install the configuration for the MCP client.

MoLing will automatically detect installed MCP clients, including Cline, Claude, Roo Code, etc., and install the configuration for you.

- Stdio Mode: CLI-based interactive mode for user-friendly experience

- SSR Mode: Server-Side Rendering mode optimized for headless/automated environments

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/gojue/moling/HEAD/install/install.sh)"

[!WARNING] Not tested, unsure if it works.

powershell -ExecutionPolicy ByPass -c "irm https://raw.githubusercontent.com/gojue/moling/HEAD/install/install.ps1 | iex"

- Download the installation package from the releases page

- Extract the package

- Run the server:

./moling

- Clone the repository:

git clone https://github.com/gojue/moling.git

cd moling

- Build the project (requires Golang toolchain):

make build

- Run the compiled binary:

./bin/moling

After starting the server, connect using any supported MCP client by configuring it to point to your MoLing server address.
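For a client that talks to the server over stdio, the first message is typically an MCP `initialize` request encoded as JSON-RPC 2.0. The following Python sketch only builds that message; the function name `build_initialize_request`, the client name, and the protocol version string are illustrative assumptions, and the sketch neither launches nor depends on MoLing.

```python
import json


def build_initialize_request(request_id: int = 1,
                             protocol_version: str = "2024-11-05") -> str:
    """Build a newline-terminated JSON-RPC 2.0 `initialize` request for an MCP server.

    The protocol version and clientInfo values are assumptions for illustration.
    """
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": protocol_version,
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }
    # The stdio transport sends one JSON message per line.
    return json.dumps(message) + "\n"


if __name__ == "__main__":
    print(build_initialize_request(), end="")
```

In practice an MCP client such as Claude or Cline performs this handshake for you, so the sketch is mainly useful for understanding or debugging what goes over the wire.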

Apache License 2.0. See LICENSE for details.


Alternative AI tools for moling

Similar Open Source Tools


kubewall

kubewall is an open-source, single-binary Kubernetes dashboard with multi-cluster management and AI integration. It provides a simple and rich real-time interface to manage and investigate your clusters. With features like multi-cluster management, AI-powered troubleshooting, real-time monitoring, single-binary deployment, in-depth resource views, browser-based access, search and filter capabilities, privacy by default, port forwarding, live refresh, aggregated pod logs, and clean resource management, kubewall offers a comprehensive solution for Kubernetes cluster management.

mcpm.sh

MCPM is an open source CLI tool for managing MCP servers, providing a simplified global configuration approach to install servers once, organize them with profiles, and integrate them into any MCP client. Features include server discovery, direct execution, sharing capabilities, and client integration tools. It eliminates the complexity of v1's target-based system in favor of a clean global workspace model. The tool is designed to be AI agent friendly with comprehensive automation support and a rich CLI interface.

Shellsage

Shell Sage is an intelligent terminal companion and AI-powered terminal assistant that enhances the terminal experience with features like local and cloud AI support, context-aware error diagnosis, natural language to command translation, and safe command execution workflows. It offers interactive workflows, supports various API providers, and allows for custom model selection. Users can configure the tool for local or API mode, select specific models, and switch between modes easily. Currently in alpha development, Shell Sage has known limitations like limited Windows support and occasional false positives in error detection. The roadmap includes improvements like better context awareness, Windows PowerShell integration, Tmux integration, and CI/CD error pattern database.

bytebot

Bytebot is an open-source AI desktop agent that provides a virtual employee with its own computer to complete tasks for users. It can use various applications, download and organize files, log into websites, process documents, and perform complex multi-step workflows. By giving AI access to a complete desktop environment, Bytebot unlocks capabilities not possible with browser-only agents or API integrations, enabling complete task autonomy, document processing, and usage of real applications.

airstore

Airstore is a filesystem for AI agents that adds any source of data into a virtual filesystem, allowing users to connect services like Gmail, GitHub, Linear, and more, and describe data needs in plain English. Results are presented as files that can be read by Claude Code. Features include smart folders for natural language queries, integrations with various services, executable MCP servers, team workspaces, and local mode operation on user infrastructure. Users can sign up, connect integrations, create smart folders, install the CLI, mount the filesystem, and use with Claude Code to perform tasks like summarizing invoices, identifying unpaid invoices, and extracting data into CSV format.

eairp

A next-generation AI-powered ERP system. Building on core ERP functionality, it integrates GPT-3.5. Individuals or companies can fine-tune their own models through the system. It can automate business form submission from a simple description, and users can chat, interact, and look up information with GPT. It can be deployed quickly through Docker. The project is completely free.

codemie-code

Unified AI Coding Assistant CLI for managing multiple AI agents like Claude Code, Google Gemini, OpenCode, and custom AI agents. Supports OpenAI, Azure OpenAI, AWS Bedrock, LiteLLM, Ollama, and Enterprise SSO. Features built-in LangGraph agent with file operations, command execution, and planning tools. Cross-platform support for Windows, Linux, and macOS. Ideal for developers seeking a powerful alternative to GitHub Copilot or Cursor.

CrewAI-Studio

CrewAI Studio is an application with a user-friendly interface for interacting with CrewAI, offering support for multiple platforms and various backend providers. It allows users to run crews in the background, export single-page apps, and use custom tools for APIs and file writing. The roadmap includes features like better import/export, human input, chat functionality, automatic crew creation, and multiuser environment support.

llamactl

llamactl is a tool for unified management and routing of llama.cpp, MLX, and vLLM models with a web dashboard. It offers easy model management with built-in model downloader, dynamic multi-model instances, smart resource management, and a modern React UI dashboard. It provides flexible integration with API compatibility for OpenAI chat completions and resources endpoints, multi-backend support, and Docker readiness. The tool supports distributed deployment with remote instances and central management. Users can quickly start by installing a backend, downloading llamactl, creating an instance, and starting inferencing.

code2prompt

code2prompt is a command-line tool that converts your codebase into a single LLM prompt with a source tree, prompt templating, and token counting. It automates generating LLM prompts from codebases of any size, customizing prompt generation with Handlebars templates, respecting .gitignore, filtering and excluding files using glob patterns, displaying token count, including Git diff output, copying prompt to clipboard, saving prompt to an output file, excluding files and folders, adding line numbers to source code blocks, and more. It helps streamline the process of creating LLM prompts for code analysis, generation, and other tasks.

drivebase

Drivebase is a cloud-agnostic file management platform that provides a unified workspace across multiple storage providers. It offers Vault for encrypted uploads and Smart Uploads for rule-based file routing, aiming to reduce provider lock-in while maintaining storage ownership and control. The platform supports various cloud providers and can be installed using Docker Compose or manually. Users can access the Drivebase dashboard through a web browser and interact with different cloud storage services seamlessly.

Windows-Use

Windows-Use is a powerful automation agent that interacts directly with the Windows OS at the GUI layer. It bridges the gap between AI agents and Windows to perform tasks such as opening apps, clicking buttons, typing, executing shell commands, and capturing UI state without relying on traditional computer vision models. It enables any large language model (LLM) to perform computer automation instead of relying on specific models for it.

OpenAlice

Open Alice is an AI trading agent that provides users with their own research desk, quant team, trading floor, and risk management, all running on their laptop 24/7. It is file-driven, reasoning-driven, and OS-native, allowing interactions with the operating system. Features include dual AI providers, crypto trading, securities trading, market analysis, cognitive state, event log, cron scheduling, evolution mode, and a web UI. The architecture includes providers, core components, extensions, tasks, and interfaces. Users can quickly start by setting up prerequisites, AI providers, crypto trading, securities trading, environment variables, and running the tool. Configuration files, project structure, and license information are also provided.

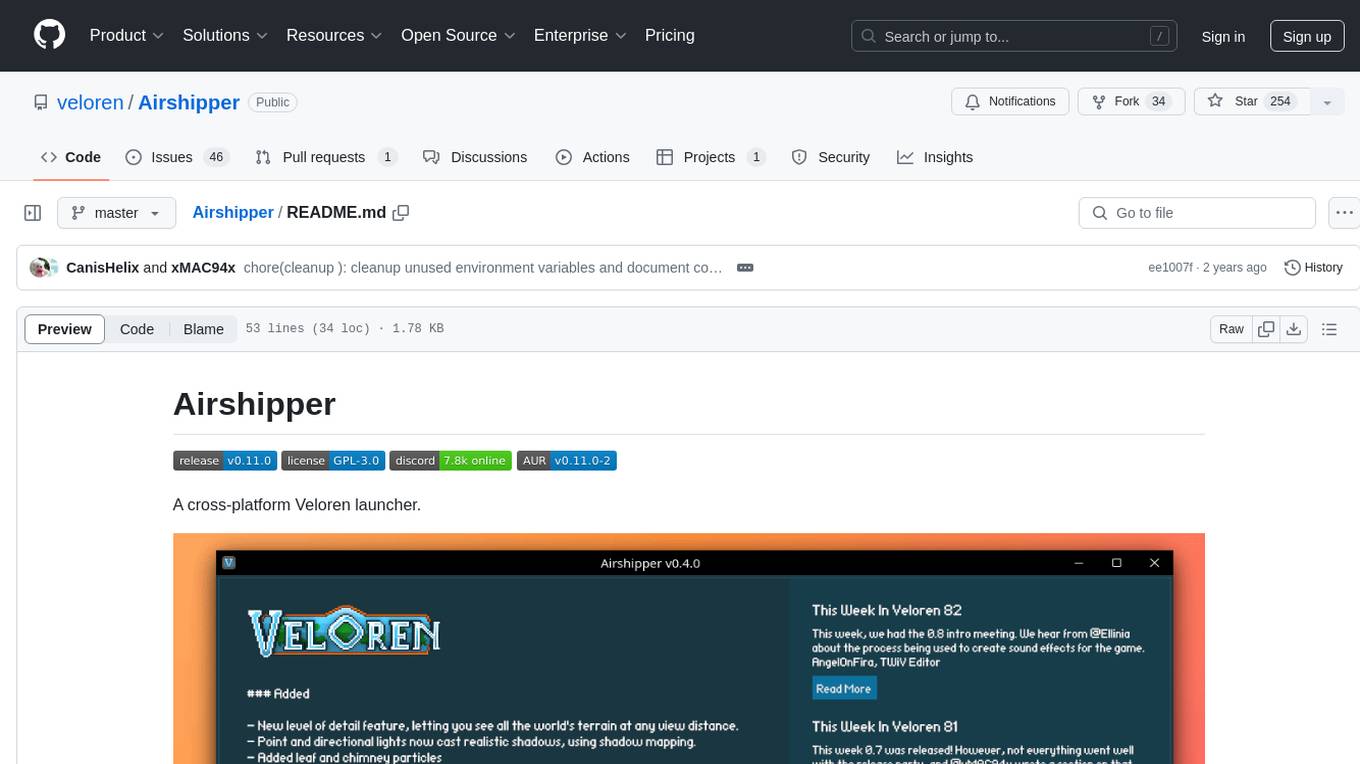

Airshipper

Airshipper is a cross-platform Veloren launcher that allows users to update/download and start nightly builds of the game. It features a fancy UI with self-updating capabilities on Windows. Users can compile it from source and also have the option to install Airshipper-Server for advanced configurations. Note that Airshipper is still in development and may not be stable for all users.

growi

GROWI is a collaborative wiki platform that allows users to create hierarchical pages with markdown, edit simultaneously with multiple people, and support authentication with LDAP/Active Directory, OAuth, and SAML. It also integrates with Slack/Mattermost, IFTTT, and allows for plugin customization. GROWI is Docker and Docker Compose ready, supports multiple sites, HTTPS with Let's Encrypt proxy integration, and offers migration guides for on-premise installations. The tool is built with Node.js, npm, pnpm, Turborepo, and requires MongoDB, with optional dependencies on Redis and ElasticSearch for full-text search functionality.

For similar tasks


vibium

Vibium is a browser automation infrastructure designed for AI agents, providing a single binary that manages browser lifecycle, WebDriver BiDi protocol, and an MCP server. It offers zero configuration, AI-native capabilities, and is lightweight with no runtime dependencies. It is suitable for AI agents, test automation, and any tasks requiring browser interaction.

clawd-cursor

Clawd Cursor is an AI Desktop Agent that operates on a Smart 3-Layer Pipeline. It can work with any AI provider, run with local models for free, and acts as a self-healing doctor. The tool offers features like Install/Uninstall commands, Auto OpenClaw registration, Dashboard favorites, Credential detection, and Doctor UX. It integrates with OpenClaw to allow desktop control through natural language. Users can interact with Clawd Cursor through a Web Dashboard, browser foreground focus, and smart task handoff. The tool's 5-layer pipeline ensures efficient task execution, with different layers handling various aspects of the tasks. Clawd Cursor also provides self-healing capabilities to adapt at runtime in case of model failures or API rate limitations.

aichat

Aichat is an AI-powered CLI chat and copilot tool that seamlessly integrates with over 10 leading AI platforms, providing a powerful combination of chat-based interaction, context-aware conversations, and AI-assisted shell capabilities, all within a customizable and user-friendly environment.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could:

- **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice.

- Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control.

- Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_.

- Automate tasks on your computer

- Improve accessibility

- ... and much more

letmedoit

LetMeDoIt AI is a virtual assistant designed to revolutionize the way you work. It goes beyond being a mere chatbot by offering a unique and powerful capability - the ability to execute commands and perform computing tasks on your behalf. With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, and Microsoft AutoGen, local LLMs, all in one place, to enhance your productivity.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

AIRAVAT

AIRAVAT is a multifunctional Android Remote Access Tool (RAT) with a GUI-based Web Panel that does not require port forwarding. It allows users to access various features on the victim's device, such as reading files, downloading media, retrieving system information, managing applications, SMS, call logs, contacts, notifications, keylogging, admin permissions, phishing, audio recording, music playback, device control (vibration, torch light, wallpaper), executing shell commands, clipboard text retrieval, URL launching, and background operation. The tool requires a Firebase account and tools like ApkEasy Tool or ApkTool M for building. Users can set up Firebase, host the web panel, modify Instagram.apk for RAT functionality, and connect the victim's device to the web panel. The tool is intended for educational purposes only, and users are solely responsible for its use.

For similar jobs

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

askui

AskUI is a reliable, automated end-to-end automation tool that only depends on what is shown on your screen instead of the technology or platform you are running on.

bots

The 'bots' repository is a collection of guides, tools, and example bots for programming bots to play video games. It provides resources on running bots live, installing the BotLab client, debugging bots, testing bots in simulated environments, and more. The repository also includes example bots for games like EVE Online, Tribal Wars 2, and Elvenar. Users can learn about developing bots for specific games, syntax of the Elm programming language, and tools for memory reading development. Additionally, there are guides on bot programming, contributing to BotLab, and exploring Elm syntax and core library.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

LaVague

LaVague is an open-source Large Action Model framework that uses advanced AI techniques to compile natural language instructions into browser automation code. It leverages Selenium or Playwright for browser actions. Users can interact with LaVague through an interactive Gradio interface to automate web interactions. The tool requires an OpenAI API key for default examples and offers a Playwright integration guide. Contributors can help by working on outlined tasks, submitting PRs, and engaging with the community on Discord. The project roadmap is available to track progress, but users should exercise caution when executing LLM-generated code using 'exec'.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.

Open-Interface

Open Interface is self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the steps. It course-corrects by sending current screenshots to the language model. The tool supports macOS, Linux, and Windows, and requires an OpenAI API key for access to GPT-4V. It can automate tasks like creating meal plans, setting up custom language model backends, and more. Open Interface currently struggles with accurate spatial reasoning, keeping track of itself in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to enhance the tool's capabilities with better models trained on video walkthroughs. The tool is cost-effective, with user requests priced between $0.05 and $0.20, and offers features like interrupting the app and primary display visibility in multi-monitor setups.

AI-Case-Sorter-CS7.1

AI-Case-Sorter-CS7.1 is a project focused on building a case sorter using machine vision and machine learning AI to sort cases by headstamp. The repository includes Arduino code and 3D models necessary for the project.