letmedoit

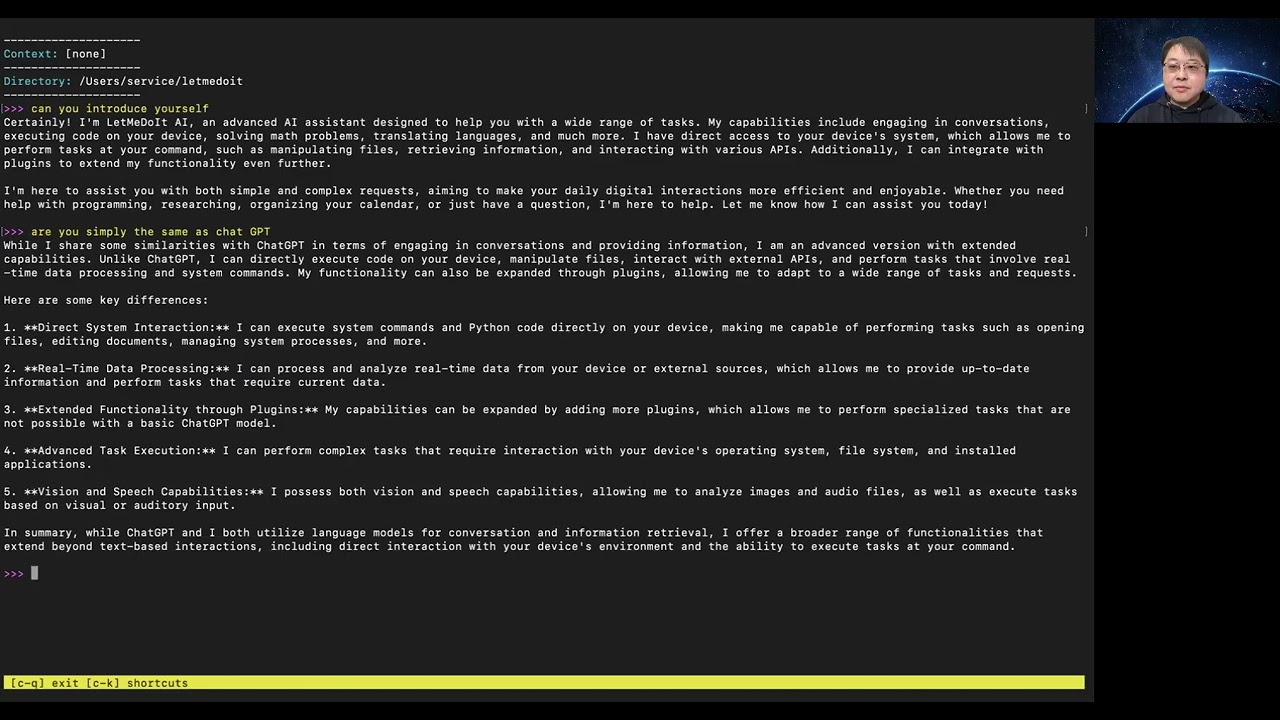

An advanced AI assistant that leverages the capabilities of ChatGPT API, Gemini Pro, AutoGen, and open-source LLMs, enabling it both to engage in conversations and to execute computing tasks on local devices.

Stars: 124

LetMeDoIt AI is a virtual assistant designed to revolutionize the way you work. It goes beyond being a mere chatbot by offering a unique and powerful capability: the ability to execute commands and perform computing tasks on your behalf. With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, Microsoft AutoGen, and local LLMs, all in one place, to enhance your productivity.

README:

LetMeDoIt AI (version 3+) is a fully automatic AI agent, built on AgentMake AI tools, to resolve complex tasks.

Version 3.0 is written entirely with the AgentMake AI SDK. The following features distinguish it from previous versions:

Fully automatic:

- Automate prompt engineering

- Automate tool instruction refinement

- Automate task resolution

- Automate action plan crafting

- Automate creation of agents tailor-made to resolve user requests

- Automate selection of multiple tools

- Automate execution of multiple steps

- Automate Quality Control

- Automate Report Generation

As version 3.0 is written entirely with the AgentMake AI SDK, it supports 14 AI backends. It runs with fewer dependencies than previous versions required, and it starts up much faster.

In response to your instructions, LetMeDoIt AI is capable of applying tools to generate files or make changes on your devices. Please use it with your sound judgment and at your own risk. We will not take any responsibility for any negative impacts, such as data loss or other issues.

pip install letmedoit

Setting up a virtual environment is recommended, e.g.

python3 -m venv tm

source tm/bin/activate

pip install --upgrade letmedoit

# setup

ai -m

Install the extra package genai to support the Vertex AI backend via the google-genai library:

python3 -m venv tm

source tm/bin/activate

pip install --upgrade "letmedoit[genai]"

# setup

ai -m

LetMeDoIt AI 3.0.2+ offers mainly two commands:

- letmedoit / lmdi to resolve complex tasks.

- letmedoitlite / lmdil to resolve simple tasks.

Below are the default tool choices:

@chat @search/google @files/extract_text @install_python_package @magic

To resolve tasks that involve multiple tools or multiple steps, e.g.:

letmedoit "Tidy up my Desktop content."

To specify additional tools for a task, e.g.:

letmedoit "@azure/deepseekr1 @perplexica/googleai Conduct a deep research on the limitations of Generative AI"
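Tool choices are written as @-prefixed names placed before the request text. The following is a minimal Python sketch of how such a prompt could be split into tool names and the remaining request. The helper `split_tools` and its pattern are hypothetical, written only for illustration; they are not taken from LetMeDoIt AI or AgentMake AI.

```python
import re
from typing import List, Tuple

# Hypothetical pattern for illustration: "@" followed by letters, digits,
# underscores, dots, slashes or hyphens, e.g. "@search/google".
TOOL_PATTERN = re.compile(r"@[\w./-]+")


def split_tools(prompt: str) -> Tuple[List[str], str]:
    """Separate leading @tool mentions from the request text."""
    words = prompt.split()
    tools: List[str] = []
    # Consume words from the front for as long as they look like tool names.
    while words and TOOL_PATTERN.fullmatch(words[0]):
        tools.append(words.pop(0))
    return tools, " ".join(words)


tools, request = split_tools(
    "@azure/deepseekr1 @perplexica/googleai Conduct a deep research "
    "on the limitations of Generative AI"
)
print(tools)
print(request)
```

Only leading mentions are treated as tools in this sketch, so an "@" that appears later in the request text is left untouched.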

To resolve a simple task, e.g.:

letmedoitlite "Create three folders, named 'test1' 'test2' 'test3', on my Desktop."

Remarks: lmdi is an alias to letmedoit whereas lmdil is an alias to letmedoitlite

To change the default tool choices persistently, locate and edit the configuration item DEFAULT_TOOL_CHOICES:

ai -ec

To make a temporary change, use CLI option --default_tool_choices or -dtc:

letmedoit -dtc "@chat @magic" "Tell me a joke"

letmedoitlite -dtc "@chat @magic" "Create a folder named 'testing'"
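If you call these commands from a script, the same invocations can be assembled programmatically. Below is a minimal sketch, assuming only the command names and the -dtc option shown above; the helper `build_command` is hypothetical and not part of the package.

```python
import shlex
from typing import List, Optional


def build_command(
    request: str, tools: Optional[List[str]] = None, lite: bool = False
) -> List[str]:
    """Build an argument list for letmedoit or letmedoitlite."""
    command = ["letmedoitlite" if lite else "letmedoit"]
    if tools:
        # -dtc takes the tool choices as one space-separated string.
        command += ["-dtc", " ".join(tools)]
    command.append(request)
    return command


cmd = build_command("Tell me a joke", tools=["@chat", "@magic"])
print(shlex.join(cmd))  # shell-quoted form of the command

# To actually run it (requires letmedoit to be installed and configured):
# import subprocess
# subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems when the request text contains quotes or other special characters.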

For more CLI options, run:

letmedoit -h

LetMeDoIt AI uses AgentMake AI configurations. The default AI backend is Ollama, but you can easily edit the default backend and other configurations. To configure, run:

ai -ec

AgentMake AI is built with a large set of tools for problem solving. To list all of them, run:

ai -lt

Limitation: As LetMeDoIt AI uses AgentMake AI tools, it can only resolve requests within the capabilities of those tools. Although numerous tools have been built for different tasks, some use cases may be out of range.

Go Beyond the limitations: AgentMake AI supports custom tools to extend its capabilities. You can create AgentMake AI custom tools to meet your own needs.

Welcome to LetMeDoIt AI, your premier virtual assistant designed to revolutionize the way you work! More than a mere chatbot, I am equipped with the capability to conduct meaningful interactions and actively carry out computing tasks as per your directives. My real-time code generation and execution prowess guarantees not only effectiveness but also efficiency in task fulfillment. With an advanced auto-correction feature, I autonomously repair any malfunctioning code segments and automatically install necessary libraries, ensuring uninterrupted workflow. My commitment to your digital safety is paramount, with inbuilt risk assessments and tailored user confirmation protocols to protect your data and device.

With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, Microsoft AutoGen, and local LLMs, all in one place, to enhance your productivity.

Developer: Eliran Wong

Website: https://LetMeDoIt.ai

Source: https://github.com/eliranwong/letmedoit

Installation: https://github.com/eliranwong/letmedoit/wiki/Installation

Quick-Guide: https://github.com/eliranwong/letmedoit/wiki/Quick-Guide

Wiki: https://github.com/eliranwong/letmedoit/wiki

Video Demo: https://www.youtube.com/watch?v=Eeat6h_ktbQ&list=PLo4xQ5NqC8SEMM71xC4NNhOHJCFlW-jaJ

Support this project: https://www.paypal.me/letmedoitai

Youtube Playlist: https://www.youtube.com/watch?v=Eeat6h_ktbQ&list=PLo4xQ5NqC8SEMM71xC4NNhOHJCFlW-jaJ

You can use Google Gemini or open-source LLMs through Ollama for chat features in LetMeDoIt AI.

If you are seeking the complete functionality of LetMeDoIt, which includes both chat and task execution features, without the need for an OpenAI API key, we offer support for Gemini Pro, Ollama, and Llama.cpp in our related project, FreeGenius AI:

https://github.com/eliranwong/freegenius

- ChatGPT API key (read https://github.com/eliranwong/letmedoit/wiki/ChatGPT-API-Key)

- Python version 3.8-3.11; read Install a Supported Python Version

- Supported OS: Windows / macOS / Linux / ChromeOS / Android (Termux)
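Before installing, you may want to confirm that your interpreter falls within the Python range stated in the requirements above. The following is a minimal sketch using only the standard library; the helper name `is_supported` and the constants are hypothetical, and the range is copied from the requirement list rather than read from the package metadata.

```python
import sys
from typing import Tuple

# Range taken from the requirement list above (3.8 to 3.11 inclusive).
MIN_VERSION = (3, 8)
MAX_VERSION = (3, 11)


def is_supported(version: Tuple[int, int] = sys.version_info[:2]) -> bool:
    """Return True if (major, minor) lies within the supported range."""
    return MIN_VERSION <= tuple(version) <= MAX_VERSION


current = ".".join(str(part) for part in sys.version_info[:2])
if is_supported():
    print(f"Python {current} is within the stated range.")
else:
    print(f"Python {current} is outside the stated range 3.8-3.11.")
```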

Talk to LetMeDoIt in Multiple Languages

Search / Analyze Financial Data

Search and Load Old Conversations

Support Android & Termux-API Commands

Work with text selection in third-party applications

Modify your images with simple words

LetMeDoIt AI just got smarter with memory retention!

Plugin - create statistical graphics

Execute code with auto-healing and risk assessment

- enhanced screening for task execution

- safety measures, such as risk assessment on code execution

- support latest OpenAI models, GPT-4 and GPT-4 Turbo, GPT-3.5, DALL·E, etc.

- highly customizable, e.g. you can even change the assistant name

- Support predefined contexts

- Key bindings for quick actions - press ctrl+k to display a full list of key bindings

- Integrated text editor for prompt editing

- developer mode available

The latest LetMeDoIt Plugins allow you to achieve a variety of tasks with natural language:

- [NEW] generate tweets

Post a short tweet about LetMeDoIt AI

- [NEW] analyze audio

transcribe "meeting_records.mp3"

- [NEW] search / analyze financial data

What was the average stock price of Apple Inc. in 2023?

Analyze Apple Inc's stock price over last 5 years.

- [NEW] search weather information

what is the current weather in New York?

- [NEW] search latest news

tell me the latest news about ChatGPT

- [NEW] search old conversations

search for "joke" in chat records

- [NEW] load old conversations

load chat records with this ID: 2024-01-20_19_21_04

- [NEW] connect a sqlite file and fetch data or make changes

connect /temp/my_database.sqlite and tell me about the tables that it contains

- [NEW] integrated Google Gemini Pro (+Vision) multiturn chat, e.g.

ask Gemini Pro to write an article about Google

- [NEW] integrated Google PaLM 2 multiturn chat, e.g.

ask PaLM 2 to write an article about Google

- [NEW] integrated Google Codey multiturn chat, e.g.

ask Codey how to use decorators in python

- [NEW] create ai assistants based on the requested task, e.g.

create a team of AI assistants to write a Christmas drama

create a team of AI assistants to build a scalable and customisable python application to remove image noise

- execute python codes with auto-healing feature and risk assessment, e.g.

join "01.mp3" and "02.mp3" into a single file

- execute system commands to achieve specific tasks, e.g.

Launch VLC player and play music in folder "music_folder"

- manipulate files, e.g.

remove all desktop files with names starting with "Screenshot"

zip "folder1"

- save memory, e.g.

Remember, my birthday is January 1st.

- send Whatsapp messages, e.g.

send Whatsapp message "come to office 9am tomorrow" to "staff" group

- retrieve memory, e.g.

When is my birthday?

- search for online information when ChatGPT lacks information, e.g.

Tell me something about LetMeDoIt AI

- add google or outlook calendar events, e.g.

I am going to London on Friday. Add it to my outlook calendar

- send google or outlook emails, e.g.

Email an appreciation letter to [email protected]

- analyze files, e.g.

Summarize 'Hello_World.docx'

- analyze web content, e.g.

Give me a summary on https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/1171397/CC3_feb20.pdf

- analyze images, e.g.

Describe the image 'Hello.png' in detail

Compare images inside folder 'images'

- create images, e.g.

Create an app icon for "LetMeDoIt AI"

- modify images, e.g.

Make a cartoon version of image "my_photo.png"

- remove image background, e.g.

Remove image background of "my_photo.png"

- create qrcode, e.g.

Create a QR code for the website: https://letmedoit.ai

- create maps, e.g.

Show me a map with Hyde Park Corner and Victoria stations pinned

- create statistical graphics, e.g.

Create a bar chart that illustrates the correlation between each of the 12 months and their respective number of days

Create a pie chart: Mary £10, Peter £8, John £15

- solve queries about dates and times, e.g.

What is the current time in Hong Kong?

- solve math problem, e.g.

You have a standard deck of 52 playing cards, which is composed of 4 suits: hearts, diamonds, clubs, and spades. Each suit has 13 cards: Ace through 10, and the face cards Jack, Queen, and King. If you draw 5 cards from the deck, in how many ways can you draw exactly 3 cards of one suit and exactly 2 cards of another suit?

- pronounce words in different dialects, e.g.

read tomato in American English

read tomato in British English

read 中文 in Mandarin

read 中文 in Cantonese

- download Youtube video files, e.g.

- download Youtube audio files and convert them into mp3 format, e.g.

Download https://www.youtube.com/watch?v=CDdvReNKKuk and convert it into mp3

- edit text with built-in or custom text editors, e.g.

Edit README.md

- improve language skills with, for instance, the British English trainer, e.g.

Improve my writing according to British English style

- convert text display, e.g. from simplified Chinese to traditional Chinese, e.g.

Translate your last response into Chinese

- create entry aliases, input suggestions, predefined contexts and instructions, e.g.

!auto

Read more about LetMeDoIt Plugins at https://github.com/eliranwong/letmedoit/wiki/Plugins-%E2%80%90-Overview
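To give a sense of what a request such as "connect /temp/my_database.sqlite and tell me about the tables that it contains" amounts to in code, here is a minimal sketch using Python's standard sqlite3 module. It is an illustration only, not the plugin's actual implementation; the helper `list_tables` and the demo database are hypothetical.

```python
import os
import sqlite3
import tempfile
from typing import List


def list_tables(database_path: str) -> List[str]:
    """Return the names of all tables in a SQLite database file."""
    connection = sqlite3.connect(database_path)
    try:
        rows = connection.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    finally:
        connection.close()
    return [name for (name,) in rows]


# Demo on a throwaway database so the sketch runs anywhere.
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "my_database.sqlite")
    connection = sqlite3.connect(path)
    connection.execute("CREATE TABLE contacts (id INTEGER PRIMARY KEY, name TEXT)")
    connection.commit()
    connection.close()
    print(list_tables(path))  # prints ['contacts']
```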

Read https://github.com/eliranwong/letmedoit/wiki

pip install --upgrade letmedoit

letmedoit

Alternatively, you may install "myhand", "cybertask" and "taskwiz":

pip install --upgrade myhand cybertask taskwiz

myhand

cybertask

taskwiz

Tips: You can change the assistant's name regardless of the package you choose to install.

pip install --upgrade letmedoit_android

letmedoit

Remarks: Please note that the name of the Android package is "letmedoit_android" but the cli command remains the same, i.e. "letmedoit"

Read more at: https://github.com/eliranwong/letmedoit/wiki/Android-Support

python3 -m venv letmedoit

source letmedoit/bin/activate

pip install --upgrade letmedoit

letmedoit

python -m venv letmedoit

.\letmedoit\Scripts\activate

pip install --upgrade letmedoit

letmedoit

cd

python -m venv --system-site-packages letmedoit

source letmedoit/bin/activate

pip install letmedoit_android

letmedoit

Read more at: https://github.com/eliranwong/letmedoit/wiki/Installation

https://github.com/eliranwong/letmedoit/wiki/Command-Line-Interface-Options

https://github.com/eliranwong/letmedoit/wiki/Quick-Guide

You can manually upgrade by running:

pip install --upgrade letmedoit

You can also enable Automatic Upgrade Option on macOS and Linux.
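To see which version is currently installed before or after upgrading, you can query the package metadata from Python. This is a minimal sketch using the standard library's importlib.metadata (Python 3.8+); the helper name `installed_version` is hypothetical.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str = "letmedoit") -> Optional[str]:
    """Return the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version()
if version is None:
    print("letmedoit is not installed in this environment.")
else:
    print(f"letmedoit {version} is installed.")
```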

LetMeDoIt is an advanced AI assistant that brings a wide range of powerful features to enhance your virtual assistance experience. Here are some key features of LetMeDoIt:

- Open source

- Cross-Platform Compatibility

- Access to Real-time Internet Information

- Versatile Task Execution

- Harnessing the Power of Python

- Customizable and Extensible

- Seamless Integration with Other Virtual Assistants

- Natural Language Support

Read more at https://github.com/eliranwong/letmedoit/wiki/Features

Developers can write their own plugins to add functionalities or to run customised tasks with LetMeDoIt.

Read more at https://github.com/eliranwong/letmedoit/wiki/Plugins-%E2%80%90-Overview

Check our built-in plugins at: https://github.com/eliranwong/letmedoit/tree/main/plugins

LetMeDoIt AI is now equipped with an auto-healing feature for Python code.
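The general idea behind auto-healing can be sketched as a retry loop: run the code, and if it raises, pass the code and the traceback to a repair step, then try again. The sketch below is an assumption about the overall shape, not LetMeDoIt's actual implementation. `run_with_healing` and `toy_repair` are hypothetical names, and `toy_repair` merely stands in for a call to a language model.

```python
import traceback
from typing import Callable, Optional


def run_with_healing(
    code: str, repair: Callable[[str, str], str], max_attempts: int = 3
) -> Optional[dict]:
    """Run code with exec(); on failure, ask `repair` for a corrected version.

    Returns the namespace of the successful run, or None if every attempt failed.
    """
    for _ in range(max_attempts):
        namespace: dict = {}
        try:
            exec(code, namespace)
            return namespace
        except Exception:
            # Hand the failing code and its traceback to the repair step.
            code = repair(code, traceback.format_exc())
    return None


def toy_repair(code: str, error: str) -> str:
    """Stand-in for an LLM call: fixes one known typo."""
    return code.replace("pritn", "print")


result = run_with_healing('pritn("hello")\nanswer = 42', toy_repair)
print(result["answer"])  # prints 42 after "hello" from the repaired run
```

Capping the number of attempts keeps a repair step that never converges from looping forever.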

Overview: Command execution enables you to:

- Retrieve the requested information from your device.

- Perform computing tasks on your device.

- Interact with third-party applications.

- Construct anything that system commands and Python libraries are capable of executing.

LetMeDoIt goes beyond just being a chatbot by offering a unique and powerful capability - the ability to execute commands and perform computing tasks on your behalf. Unlike a mere chatbot, LetMeDoIt can interact with your computer system and carry out specific commands to accomplish various computing tasks. This feature allows you to leverage the expertise and efficiency of LetMeDoIt to automate processes, streamline workflows, and perform complex tasks with ease. However, it is essential to remember that with great power comes great responsibility, and users should exercise caution and use this feature at their own risk.

Confirmation Prompt Options for Command Execution

Read more at https://github.com/eliranwong/letmedoit/wiki/Command-Execution

LetMeDoIt offers advanced features beyond standard ChatGPT, including task execution on local devices and real-time access to the internet.

Read https://github.com/eliranwong/letmedoit/wiki/Compare-with-ChatGPT

ShellGPT only supports platforms that run a shell command prompt. Therefore, ShellGPT does not support Windows.

In most cases, LetMeDoIt runs Python code for task execution, which makes it portable across platforms. LetMeDoIt was developed and tested on Windows, macOS, Linux, ChromeOS and Termux (Android).

In addition, LetMeDoIt offers more options for risk management.

Both LetMeDoIt AI and the Open Interpreter have the ability to execute code on a local device to accomplish specific tasks. Both platforms employ the same principle for code execution, which involves using ChatGPT function calls along with the Python exec() function.
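The principle described above, a function call that carries code which is then passed to exec(), can be sketched as follows. The payload shape follows the ChatGPT function-calling convention of a name plus a JSON-encoded arguments string. The function name `execute_python_code`, the `code` argument and the handler are hypothetical, not taken from either project.

```python
import contextlib
import io
import json


def handle_function_call(function_call: dict) -> str:
    """Execute the code carried by a function call and return what it printed."""
    if function_call["name"] != "execute_python_code":
        raise ValueError(f"Unsupported function: {function_call['name']}")
    # The model returns its arguments as a JSON string.
    arguments = json.loads(function_call["arguments"])
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(arguments["code"], {})  # fresh namespace for each call
    return buffer.getvalue()


call = {
    "name": "execute_python_code",
    "arguments": json.dumps({"code": "print(6 * 7)"}),
}
print(handle_function_call(call))  # prints 42
```

Because exec() runs arbitrary code with the user's privileges, a real assistant would put risk assessment and user confirmation in front of this step.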

However, LetMeDoIt AI offers additional advantages, particularly in terms of customization and extensibility through the use of plugins. These plugins allow users to tailor LetMeDoIt AI to their specific needs and enhance its functionality beyond basic code execution.

One key advantage of LetMeDoIt AI is the seamless integration with the Open Interpreter. You can conveniently launch the Open Interpreter directly from LetMeDoIt AI by running the command "!interpreter". This integration eliminates the need to choose between the two platforms; you can utilize both simultaneously.

Additionally, LetMeDoIt integrates AutoGen Assistants and Builder and Google AI tools, like Gemini Pro, Gemini Pro Vision & PaLM 2, making it convenient to have all these powerful tools in one place.

Unlike popular options such as Siri (macOS, iOS), Cortana (Windows), and Google Assistant (Android), LetMeDoIt offers enhanced power, customization, flexibility, and compatibility.

Read https://github.com/eliranwong/letmedoit/wiki/Features

Integration with Google AI Tools

Launch Open Interpreter from LetMeDoIt AI

LetMeDoIt is also tested on Termux. LetMeDoIt also integrates Termux:API for task execution.

For example, users can run on Android:

open Google Chrome and perform a search for "ChatGPT"

share text "Hello World!" on Android

Read more at: https://github.com/eliranwong/letmedoit/wiki/Android-Support

Alternative AI tools for letmedoit

Similar Open Source Tools

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub Bot for requesting changes and operates entirely in GitHub Actions. In progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

Sentient

Sentient is a personal, private, and interactive AI companion developed by Existence. The project aims to build a completely private AI companion that is deeply personalized and context-aware of the user. It utilizes automation and privacy to create a true companion for humans. The tool is designed to remember information about the user and use it to respond to queries and perform various actions. Sentient features a local and private environment, MBTI personality test, integrations with LinkedIn, Reddit, and more, self-managed graph memory, web search capabilities, multi-chat functionality, and auto-updates for the app. The project is built using technologies like ElectronJS, Next.js, TailwindCSS, FastAPI, Neo4j, and various APIs.

HeyGem.ai

Heygem is an open-source, affordable alternative to Heygen, offering a fully offline video synthesis tool for Windows systems. It enables precise appearance and voice cloning, allowing users to digitalize their image and drive virtual avatars through text and voice for video production. With core features like efficient video synthesis and multi-language support, Heygem ensures a user-friendly experience with fully offline operation and support for multiple models. The tool leverages advanced AI algorithms for voice cloning, automatic speech recognition, and computer vision technology to enhance the virtual avatar's performance and synchronization.

FunClip

FunClip is an open-source, locally deployed automated video clipping tool that leverages Alibaba TONGYI speech lab's FunASR Paraformer series models for speech recognition on videos. Users can select text segments or speakers from recognition results to obtain corresponding video clips. It integrates industrial-grade models for accurate predictions and offers hotword customization and speaker recognition features. The tool is user-friendly with Gradio interaction, supporting multi-segment clipping and providing full video and target segment subtitles. FunClip is suitable for users looking to automate video clipping tasks with advanced AI capabilities.

chatdev

ChatDev IDE is a tool for building your AI agent. Whether it's NPCs in games or powerful agent tools, you can design what you want for this platform. It accelerates prompt engineering through **JavaScript Support** that allows implementing complex prompting techniques.

OpenHands

OpenDevin is a platform for autonomous software engineers powered by AI and LLMs. It allows human developers to collaborate with agents to write code, fix bugs, and ship features. The tool operates in a secured docker sandbox and provides access to different LLM providers for advanced configuration options. Users can contribute to the project through code contributions, research and evaluation of LLMs in software engineering, and providing feedback and testing. OpenDevin is community-driven and welcomes contributions from developers, researchers, and enthusiasts looking to advance software engineering with AI.

FunClip

FunClip is an open-source, locally deployable automated video editing tool that utilizes the FunASR Paraformer series models from Alibaba DAMO Academy for speech recognition in videos. Users can select text segments or speakers from the recognition results and click the clip button to obtain the corresponding video segments. FunClip integrates advanced features such as the Paraformer-Large model for accurate Chinese ASR, SeACo-Paraformer for customized hotword recognition, CAM++ speaker recognition model, Gradio interactive interface for easy usage, support for multiple free edits with automatic SRT subtitles generation, and segment-specific SRT subtitles.

nobodywho

NobodyWho is a plugin for the Godot game engine that enables interaction with local LLMs for interactive storytelling. Users can install it from Godot editor or GitHub releases page, providing their own LLM in GGUF format. The plugin consists of `NobodyWhoModel` node for model file, `NobodyWhoChat` node for chat interaction, and `NobodyWhoEmbedding` node for generating embeddings. It offers a programming interface for sending text to LLM, receiving responses, and starting the LLM worker.

superduper

superduper.io is a Python framework that integrates AI models, APIs, and vector search engines directly with existing databases. It allows hosting of models, streaming inference, and scalable model training/fine-tuning. Key features include integration of AI with data infrastructure, inference via change-data-capture, scalable model training, model chaining, simple Python interface, Python-first approach, working with difficult data types, feature storing, and vector search capabilities. The tool enables users to turn their existing databases into centralized repositories for managing AI model inputs and outputs, as well as conducting vector searches without the need for specialized databases.

chainlit

Chainlit is an open-source async Python framework which allows developers to build scalable Conversational AI or agentic applications. It enables users to create ChatGPT-like applications, embedded chatbots, custom frontends, and API endpoints. The framework provides features such as multi-modal chats, chain of thought visualization, data persistence, human feedback, and an in-context prompt playground. Chainlit is compatible with various Python programs and libraries, including LangChain, Llama Index, Autogen, OpenAI Assistant, and Haystack. It offers a range of examples and a cookbook to showcase its capabilities and inspire users. Chainlit welcomes contributions and is licensed under the Apache 2.0 license.

agent

Xata Agent is an open source tool designed to monitor PostgreSQL databases, identify issues, and provide recommendations for improvements. It acts as an AI expert, offering proactive suggestions for configuration tuning, troubleshooting performance issues, and common database problems. The tool is extensible, supports monitoring from cloud services like RDS & Aurora, and uses preset SQL commands to ensure database safety. Xata Agent can run troubleshooting statements, notify users of issues via Slack, and supports multiple AI models for enhanced functionality. It is actively used by the Xata team to manage Postgres databases efficiently.

job-llm

ResumeFlow is an automated system utilizing Large Language Models (LLMs) to streamline the job application process. It aims to reduce human effort in various steps of job hunting by integrating LLM technology. Users can access ResumeFlow as a web tool, install it as a Python package, or download the source code. The project focuses on leveraging LLMs to automate tasks such as resume generation and refinement, making job applications smoother and more efficient.

Stellar-Chat

Stellar Chat is a multi-modal chat application that enables users to create custom agents and integrate with local language models and OpenAI models. It provides capabilities for generating images, visual recognition, text-to-speech, and speech-to-text functionalities. Users can engage in multimodal conversations, create custom agents, search messages and conversations, and integrate with various applications for enhanced productivity. The project is part of the '100 Commits' competition, challenging participants to make meaningful commits daily for 100 consecutive days.

archgw

Arch is an intelligent Layer 7 gateway designed to protect, observe, and personalize AI agents with APIs. It handles tasks related to prompts, including detecting jailbreak attempts, calling backend APIs, routing between LLMs, and managing observability. Built on Envoy Proxy, it offers features like function calling, prompt guardrails, traffic management, and observability. Users can build fast, observable, and personalized AI agents using Arch to improve speed, security, and personalization of GenAI apps.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

- An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).

- A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.

- Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.

- Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

- **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).

- **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).

- **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

sourcegraph

Sourcegraph is a code search and navigation tool that helps developers read, write, and fix code in large, complex codebases. It provides features such as code search across all repositories and branches, code intelligence for navigation and refactoring, and the ability to fix and refactor code across multiple repositories at once.

llm-verified-with-monte-carlo-tree-search

This prototype synthesizes verified code with an LLM using Monte Carlo Tree Search (MCTS). It explores the space of possible generation of a verified program and checks at every step that it's on the right track by calling the verifier. This prototype uses Dafny, Coq, Lean, Scala, or Rust. By using this technique, weaker models that might not even know the generated language all that well can compete with stronger models.

anterion

Anterion is an open-source AI software engineer that extends the capabilities of `SWE-agent` to plan and execute open-ended engineering tasks, with a frontend inspired by `OpenDevin`. It is designed to help users fix bugs and prototype ideas with ease. Anterion is equipped with easy deployment and a user-friendly interface, making it accessible to users of all skill levels.

LafTools

LafTools is a privacy-first, self-hosted, fully open source toolbox designed for programmers. It offers a wide range of tools, including code generation, translation, encryption, compression, data analysis, and more. LafTools is highly integrated with a productive UI and supports full GPT-alike functionality. It is available as Docker images and portable edition, with desktop edition support planned for the future.

ChatDBG

ChatDBG is an AI-based debugging assistant for C/C++/Python/Rust code that integrates large language models into a standard debugger (`pdb`, `lldb`, `gdb`, and `windbg`) to help debug your code. With ChatDBG, you can engage in a dialog with your debugger, asking open-ended questions about your program, like `why is x null?`. ChatDBG will _take the wheel_ and steer the debugger to answer your queries. ChatDBG can provide error diagnoses and suggest fixes. As far as we are aware, ChatDBG is the _first_ debugger to automatically perform root cause analysis and to provide suggested fixes.