rkllama

Ollama alternative for Rockchip NPU: an efficient solution for running AI and deep learning models on Rockchip devices with optimized NPU support (rkllm).


RKLLama is a server and client tool for running and interacting with LLM models optimized for Rockchip RK3588(S) and RK3576 platforms. Unlike similar tools, it runs models on the NPU. Features include partial Ollama API compatibility, pulling models from Hugging Face, a documented REST API, dynamic loading/unloading of models, inference requests with streaming modes, simplified model naming, CPU model auto-detection, and an optional debug mode. The tool supports Python 3.9 to 3.12 and has been tested on Orange Pi 5 Pro, Orange Pi 5 Plus and Orange Pi 5 Max with specific OS versions.

README:

Video demo (version 0.0.1).

Branches:

- Without Miniconda: This version runs without Miniconda.

- Rkllama Docker: A fully isolated version running in a Docker container.

- Support All Models: This branch ensures all models are tested before being merged into the main branch.

- Docker Package

A server to run and interact with LLM models optimized for Rockchip RK3588(S) and RK3576 platforms. Unlike other software of this type, such as Ollama or llama.cpp, RKLLama runs models on the NPU.

Versions:

- Lib rkllm-runtime: V 1.2.3.

- Lib rknn-runtime: V 2.3.2.

Project structure:

- ./models: contains your .rkllm models (with their .rknn models if multimodal).

- ./lib: C++ rkllm and rknn libraries used for inference, and fix_freqence_platform.

- ./app.py: REST API server.

- ./client.py: Client to interact with the server.

- Python 3.9 to 3.12

- Hardware: Orange Pi 5 Pro: (Rockchip RK3588S, NPU 6 TOPS), 16GB RAM.

- Hardware: Orange Pi 5 Plus: (Rockchip RK3588, NPU 6 TOPS), 16GB RAM.

- Hardware: Orange Pi 5 Max: (Rockchip RK3588, NPU 6 TOPS), 16GB RAM.

- OS: Ubuntu 24.04 arm64.

- OS: Armbian Linux 6.1.99-vendor-rk35xx (Debian stable bookworm), v25.2.2.

- Running models on the NPU.

- Ollama API compatibility. Supported endpoints: /api/chat, /api/generate, /api/ps, /api/tags, /api/embed (and legacy /api/embeddings), /api/version, /api/pull.

- Partial OpenAI API compatibility. Supported endpoints: /v1/completions, /v1/chat/completions, /v1/embeddings, /v1/images/generations, /v1/audio/speech, /v1/audio/transcriptions, /v1/audio/translations (to English only for now, as with OpenAI's Whisper models).

- Tool/Function Calling - Complete support for tool calls with multiple LLM formats (Qwen, Llama 3.2+, others).

- Pull models directly from Huggingface.

- Includes a REST API with documentation.

- Listing available models.

- Multiple RKLLM models can run in memory simultaneously (parallel execution across distinct models in streaming mode, FIFO in non-streaming mode).

- Dynamic loading and unloading of models:

  - Load the model on a new request (if it is not already in memory).

  - Unload a model when it expires after inactivity (default 30 min).

  - Unload the oldest model in memory if a new model must be loaded and there is not enough memory available on the server.

- Inference requests with streaming and non-streaming modes.

- Message history.

- Simplified custom model naming - Use models with familiar names like "qwen2.5:3b".

- CPU Model Auto-detection - Automatic detection of RK3588 or RK3576 platform.

- Optional Debug Mode - Detailed debugging with the --debug flag.

- Multimodal Support - Use Qwen2VL/Qwen2.5VL/Qwen3VL/MiniCPMV4/MiniCPMV4.5/InternVL3.5 vision models to ask questions about images (base64, local file or image URL). More than one image per request is allowed.

- Image Generation - Generate images with the OpenAI image generation endpoint using LCM Stable Diffusion 1.5 (or LCM SSD-1B) RKNN models.

- Text to Speech (TTS) - Generate speech with the OpenAI audio speech endpoint using Piper TTS models (encoder running on ONNX, decoder on RKNN) and MMS-TTS models (running on RKNN).

- Speech to Text (STT) - Generate transcriptions with the OpenAI audio transcriptions endpoint using omniASR-CTC or Whisper models running on RKNN.
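The dynamic loading rules in the feature list are: load on demand, expire after inactivity, and evict the oldest model when memory runs out. The sketch below models them as a small cache. It is a hypothetical illustration, not RKLLama's implementation. The `ModelCache` class is an assumption, and so is using a `capacity` model count as a stand-in for "memory available". Reading "oldest" as "loaded first" is also an assumption.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict

EXPIRY_SECONDS = 30 * 60  # default inactivity timeout from the feature list


@dataclass
class LoadedModel:
    name: str
    loaded_at: float
    last_used: float


@dataclass
class ModelCache:
    """Hypothetical sketch of the load/expire/evict rules; not RKLLama's code."""
    capacity: int  # assumption: a model-count limit standing in for free memory
    clock: Callable[[], float] = time.monotonic
    models: Dict[str, LoadedModel] = field(default_factory=dict)

    def expire_idle(self) -> None:
        # Unload every model whose last request is older than the timeout.
        now = self.clock()
        idle = [n for n, m in self.models.items() if now - m.last_used > EXPIRY_SECONDS]
        for name in idle:
            del self.models[name]

    def get(self, name: str) -> LoadedModel:
        # Load on demand; when full, unload the model that was loaded first.
        self.expire_idle()
        now = self.clock()
        if name not in self.models:
            if len(self.models) >= self.capacity:
                oldest = min(self.models.values(), key=lambda m: m.loaded_at)
                del self.models[oldest.name]
            self.models[name] = LoadedModel(name, loaded_at=now, last_used=now)
        self.models[name].last_used = now
        return self.models[name]


# Usage with a fake clock so the behaviour is deterministic.
t = [0.0]
cache = ModelCache(capacity=2, clock=lambda: t[0])
cache.get("qwen2.5:3b")
t[0] = 1.0
cache.get("tinyllama-chat:1.1b")
t[0] = 2.0
cache.get("llama3-instruct:8b")  # full: unloads qwen2.5:3b, loaded first
print(sorted(cache.models))  # ['llama3-instruct:8b', 'tinyllama-chat:1.1b']
```

Injecting the clock keeps the policy testable without waiting 30 minutes.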

- French version

- Client: Installation guide.

- REST API: English documentation

- REST API: French documentation

- Ollama API: Compatibility guide

- Model Naming: Naming convention

- Tool Calling: Tool/Function calling guide

- Clone the repository:

git clone https://github.com/notpunchnox/rkllama
cd rkllama

- Install RKLLama:

python -m pip install .

Alternatively, with Docker:

- Pull the RKLLama Docker image:

docker pull ghcr.io/notpunchnox/rkllama:main

- Run the server:

docker run -it --privileged -p 8080:8080 -v <local_models_dir>:/opt/rkllama/models ghcr.io/notpunchnox/rkllama:main

Docker setup by: ichlaffterlalu

Docker Compose takes care of many of the extra flag declarations, such as volumes:

docker compose up --detach --remove-orphans

The conda virtual environment is started automatically, as is the NPU frequency setting.

- Start the server:

rkllama_server --models <models_dir>

To enable debug mode:

rkllama_server --debug --models <models_dir>

- Start the client:

rkllama_client

or

rkllama_client help

- See the available models:

rkllama_client list

- Run a model:

rkllama_client run <model_name>

Then start chatting (verbose mode displays statistics).

In version 0.0.60, the verbose command returns this information:

RKLLama supports advanced tool/function calling for enhanced AI interactions:

# Example: Weather tool call
curl -X POST http://localhost:8080/api/chat \
  -H "Content-Type: application/json" \
  -d '{
    "model": "qwen2.5:3b",
    "messages": [{"role": "user", "content": "What is the weather in Paris?"}],
    "tools": [{
      "type": "function",
      "function": {
        "name": "get_weather",
        "description": "Get current weather",
        "parameters": {
          "type": "object",
          "properties": {
            "location": {"type": "string", "description": "City name"}
          },
          "required": ["location"]
        }
      }
    }]
  }'

Features:

- 🔧 Multiple model support (Qwen, Llama 3.2+, others)

- 🌊 Streaming & non-streaming modes

- 🎯 Robust JSON parsing with fallback methods

- 🔄 Auto format normalization

- 📋 Multiple tools in single request

For complete documentation: Tool Calling Guide
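The same tool-calling request can be built from Python with only the standard library. This is a minimal sketch under several assumptions:

- The server listens on localhost:8080, as in the curl example.
- The `"stream": False` flag is added so the reply comes back as one JSON body.
- RKLLama returns tool calls in Ollama's `/api/chat` shape, `message.tool_calls[].function.{name, arguments}`.

The `reply` dict is an illustrative response, not captured RKLLama output.

```python
import json
import urllib.request

WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get current weather",
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string", "description": "City name"}},
            "required": ["location"],
        },
    },
}


def build_chat_request(model, messages, tools, host="http://localhost:8080"):
    """Build (without sending) a POST to the Ollama-compatible /api/chat endpoint."""
    payload = {"model": model, "messages": messages, "tools": tools, "stream": False}
    return urllib.request.Request(
        f"{host}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_tool_calls(response):
    """Return (name, arguments) pairs from an Ollama-style chat response dict."""
    calls = response.get("message", {}).get("tool_calls") or []
    return [(c["function"]["name"], c["function"]["arguments"]) for c in calls]


req = build_chat_request(
    "qwen2.5:3b",
    [{"role": "user", "content": "What is the weather in Paris?"}],
    [WEATHER_TOOL],
)
# To send it: with urllib.request.urlopen(req) as resp: reply = json.load(resp)

# Illustrative response shape (assumed, not captured from RKLLama):
reply = {"message": {"role": "assistant", "content": "",
                     "tool_calls": [{"function": {"name": "get_weather",
                                                  "arguments": {"location": "Paris"}}}]}}
print(extract_tool_calls(reply))  # [('get_weather', {'location': 'Paris'})]
```

Your own code would then run `get_weather("Paris")` and send the result back as a follow-up message.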

You can download and install a model from the Hugging Face platform with the following command:

rkllama_client pull username/repo_id/model_file.rkllm/custom_model_name

Alternatively, you can run the command interactively:

rkllama_client pull
Repo ID ( example: punchnox/Tinnyllama-1.1B-rk3588-rkllm-1.1.4): <your response>
File ( example: TinyLlama-1.1B-Chat-v1.0-rk3588-w8a8-opt-0-hybrid-ratio-0.5.rkllm): <your response>
Custom Model Name ( example: tinyllama-chat:1.1b ): <your response>

This will automatically download the specified model file and prepare it for use with RKLLAMA.

Example with Qwen2.5 3b from c01zaut: https://huggingface.co/c01zaut/Qwen2.5-3B-Instruct-RK3588-1.1.4
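The single-argument form of `rkllama_client pull` packs the three interactive answers into one slash-separated string. The sketch below splits that string back into its parts. `parse_pull_spec` and `hf_file_url` are hypothetical helpers, not RKLLama's parser. Requiring all four path segments is an assumption. The URL uses Hugging Face's standard `resolve` download pattern, and RKLLama may fetch files differently.

```python
from typing import NamedTuple


class PullSpec(NamedTuple):
    repo_id: str     # Hugging Face repo, "username/repo_id"
    filename: str    # the .rkllm file inside that repo
    model_name: str  # custom name later used with `rkllama_client run`


def parse_pull_spec(spec: str) -> PullSpec:
    """Split username/repo_id/model_file.rkllm/custom_model_name (hypothetical helper)."""
    parts = spec.split("/")
    if len(parts) != 4 or not parts[2].endswith(".rkllm") or not all(parts):
        raise ValueError("expected username/repo_id/model_file.rkllm/custom_model_name")
    user, repo, filename, name = parts
    return PullSpec(f"{user}/{repo}", filename, name)


def hf_file_url(spec: PullSpec, revision: str = "main") -> str:
    # Standard Hugging Face "resolve" URL for downloading one file from a repo.
    return f"https://huggingface.co/{spec.repo_id}/resolve/{revision}/{spec.filename}"


spec = parse_pull_spec(
    "punchnox/Tinnyllama-1.1B-rk3588-rkllm-1.1.4/"
    "TinyLlama-1.1B-Chat-v1.0-rk3588-w8a8-opt-0-hybrid-ratio-0.5.rkllm/"
    "tinyllama-chat:1.1b"
)
print(spec.repo_id)     # punchnox/Tinnyllama-1.1B-rk3588-rkllm-1.1.4
print(spec.model_name)  # tinyllama-chat:1.1b
```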

- Download the model:

  - Download .rkllm models directly from Hugging Face.

  - Alternatively, convert your GGUF models into .rkllm format (conversion tool coming soon on my GitHub).

- Place the model:

  - Create the models directory on your system.

  - Make a new subdirectory with the model name.

  - Place the .rkllm files in this directory.

  - Create a Modelfile and add this:

FROM="file.rkllm"
HUGGINGFACE_PATH="huggingface_repository"
SYSTEM="Your system prompt"
TEMPERATURE=1.0

Example directory structure:

~/RKLLAMA/models/
└── TinyLlama-1.1B-Chat-v1.0
    ├── Modelfile
    └── TinyLlama-1.1B-Chat-v1.0.rkllm

You must provide a link to a Hugging Face repository to retrieve the tokenizer and chat template. An internet connection is required for tokenizer initialization (only once). You can use a repository other than the model's own, as long as the tokenizer is compatible and the chat template meets your needs. The tokenizer is downloaded into the models directory the first time it is needed.
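A Modelfile is a list of `KEY=value` lines, with optional double quotes around values. The sketch below reads one, for example to check a Modelfile before starting the server. `parse_modelfile` is a hypothetical helper, not RKLLama's own parser. It assumes one assignment per line and treats lines starting with `#` as comments. The `HUGGINGFACE_PATH` value in the example is only an illustrative repository.

```python
from pathlib import Path
from typing import Dict


def parse_modelfile(text: str) -> Dict[str, str]:
    """Parse KEY=value lines of a Modelfile (hypothetical helper, not RKLLama's parser)."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, value = line.partition("=")  # split on the FIRST "=" only
        if not sep:
            raise ValueError(f"not a KEY=value line: {raw!r}")
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] == '"':
            value = value[1:-1]  # drop surrounding double quotes
        settings[key.strip()] = value
    return settings


def load_modelfile(model_dir: Path) -> Dict[str, str]:
    # e.g. model_dir = Path.home() / "RKLLAMA/models/TinyLlama-1.1B-Chat-v1.0"
    return parse_modelfile((model_dir / "Modelfile").read_text(encoding="utf-8"))


example = '''FROM="TinyLlama-1.1B-Chat-v1.0.rkllm"
HUGGINGFACE_PATH="TinyLlama/TinyLlama-1.1B-Chat-v1.0"
SYSTEM="You are a helpful assistant."
TEMPERATURE=1.0'''
cfg = parse_modelfile(example)
print(cfg["FROM"], float(cfg["TEMPERATURE"]))  # TinyLlama-1.1B-Chat-v1.0.rkllm 1.0
```

Values are kept as strings; convert numeric keys such as TEMPERATURE where you use them.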


- For multimodal models, download the encoder model (.rknn):

  - Download .rknn models directly from Hugging Face.

  - Alternatively, convert your ONNX models into .rknn format.

  - Place the .rknn model in the model's directory, next to the .rkllm file. RKLLama detects the encoder model present in the directory.

  - Manually add the following properties to the Modelfile, matching the settings used to convert the vision encoder .rknn:

IMAGE_WIDTH=
IMAGE_HEIGHT=
N_IMAGE_TOKENS=
IMG_START=
IMG_END=
IMG_CONTENT=

# For example, for Qwen2VL/Qwen2.5VL:
IMAGE_WIDTH=392
IMAGE_HEIGHT=392
N_IMAGE_TOKENS=196
IMG_START=<|vision_start|>
IMG_END=<|vision_end|>
IMG_CONTENT=<|image_pad|>

# For example, for MiniCPMV4:
IMAGE_WIDTH=448
IMAGE_HEIGHT=448
N_IMAGE_TOKENS=64
IMG_START=<image>
IMG_END=</image>
IMG_CONTENT=<unk>

Example directory structure for multimodal:

~/RKLLAMA/models/
└── qwen2-vision:2b
    ├── Modelfile
    ├── Qwen2-VL-2B-Instruct.rkllm
    └── Qwen2-VL-2B-Instruct.rknn
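For Qwen2VL/Qwen2.5VL, N_IMAGE_TOKENS follows from the image size. Those vision encoders cut the image into 14×14-pixel patches and then merge each 2×2 group of patches into one token. The sketch below reproduces the example value of 196 for a 392×392 input. The patch size and merge factor are properties of the Qwen2-VL architecture, not RKLLama settings. The formula does not apply to MiniCPMV4, Qwen3VL or InternVL3.5; for those, use the values from your encoder conversion.

```python
PATCH_SIZE = 14  # Qwen2-VL / Qwen2.5-VL vision encoder patch size, in pixels
MERGE_SIZE = 2   # each 2x2 block of patches is merged into one LLM token


def qwen_vl_image_tokens(width: int, height: int) -> int:
    """Expected N_IMAGE_TOKENS for a fixed-size Qwen2-VL/Qwen2.5-VL encoder input."""
    unit = PATCH_SIZE * MERGE_SIZE  # 28 px per merged token along each side
    if width % unit or height % unit:
        raise ValueError(f"width and height should be multiples of {unit}")
    return (width // PATCH_SIZE) * (height // PATCH_SIZE) // MERGE_SIZE**2


print(qwen_vl_image_tokens(392, 392))  # 196, the value in the Modelfile example
```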

- In a temporary folder, clone the repository https://huggingface.co/danielferr85/lcm-sd-1.5-rknn-2.3.2-rk3588 or https://huggingface.co/danielferr85/lcm-ssd-1b-rknn-2.3.2-rk3588 from Hugging Face, depending on the desired SD model.

- Run the ONNX-to-RKNN conversion of the models you need WITH RKNN TOOLKIT LIBRARY VERSION 2.3.2. For example:

For LCM SD 1.5:

python convert-onnx-to-rknn.py --model-dir <directory_download_model> --resolutions 512x512 --components "text_encoder,unet,vae_decoder" --target_platform rk3588

or, for LCM SSD1B:

python convert-onnx-to-rknn.py --model-dir <directory_download_model> --resolutions 1024x1024 --components "text_encoder,text_encoder_2,unet,vae_decoder" --target_platform rk3588

- Create a folder inside the RKLLAMA models directory for the Stable Diffusion RKNN models, for example lcm-stable-diffusion or lcm-segmind-stable-diffusion.

- Copy the folders scheduler, text_encoder, text_encoder_2 (SSD1B only), unet and vae_decoder from the cloned repo to the new model directory created in RKLLAMA. Copy only the *.json and *.rknn files.

- The structure of the model MUST be like this:

For LCM SD 1.5:

~/RKLLAMA/models/
└── lcm-stable-diffusion
    ├── scheduler
    │   └── scheduler_config.json
    ├── text_encoder
    │   ├── config.json
    │   └── model.rknn
    ├── unet
    │   ├── config.json
    │   └── model.rknn
    └── vae_decoder
        ├── config.json
        └── model.rknn

or, for LCM SSD1B:

~/RKLLAMA/models/
└── lcm-segmind-stable-diffusion
    ├── scheduler
    │   └── scheduler_config.json
    ├── text_encoder
    │   ├── config.json
    │   └── model.rknn
    ├── text_encoder_2
    │   ├── config.json
    │   └── model.rknn
    ├── unet
    │   ├── config.json
    │   └── model.rknn
    └── vae_decoder
        ├── config.json
        └── model.rknn

Done! You are ready to test the OpenAI endpoint /v1/images/generations to generate images. You can add it to OpenWebUI in the Image Generation section.

- Available converted models for RK3588 and RKNN 2.3.2: https://huggingface.co/danielferr85/lcm-sd-1.5-rknn-2.3.2-rk3588 (512x512 resolution only) and https://huggingface.co/danielferr85/lcm-ssd-1b-rknn-2.3.2-rk3588 (1024x1024 resolution only).
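A request to /v1/images/generations can be built with the standard library. This is a sketch under several assumptions:

- The body fields (`model`, `prompt`, `size`, `n`, `response_format`) come from the OpenAI Images API; whether RKLLama honours each of them is an assumption.
- The port is 8080.
- `model` is the model folder name created above.
- `size` must match the resolution the RKNN models were converted for.

```python
import base64
import json
import urllib.request


def build_image_request(prompt, model="lcm-stable-diffusion", size="512x512",
                        host="http://localhost:8080"):
    """Build (without sending) a POST to the OpenAI-style /v1/images/generations endpoint."""
    payload = {
        "model": model,                 # assumed: the model folder name under models/
        "prompt": prompt,
        "size": size,                   # should match the conversion resolution
        "n": 1,
        "response_format": "b64_json",  # OpenAI field; RKLLama support is assumed
    }
    return urllib.request.Request(
        f"{host}/v1/images/generations",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def save_first_image(response: dict, path: str) -> None:
    """Decode the first base64 image of an OpenAI-style images response and write it."""
    with open(path, "wb") as f:
        f.write(base64.b64decode(response["data"][0]["b64_json"]))


req = build_image_request("a lighthouse at sunset, watercolor")
# To send it:
# with urllib.request.urlopen(req) as resp:
#     save_first_image(json.load(resp), "lighthouse.png")
```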

For Piper:

- Download a voice from https://huggingface.co/danielferr85/piper-checkpoints-rknn on Hugging Face. (You can convert new ones; see below.)

- Create a folder inside the RKLLAMA models directory for the Piper audio model, for example es_AR-daniela-high.

- Copy the encoder (.onnx), decoder (.rknn) and config (piper.json) files from the chosen voice to the new model directory created in RKLLAMA.

- The structure of the model MUST be like this:

~/RKLLAMA/models/
└── es_AR-daniela-high
    ├── encoder.onnx
    ├── decoder.rknn
    └── piper.json

For MMS-TTS:

- Download a voice from https://huggingface.co/danielferr85/mms-tts-rknn on Hugging Face. (You can convert new ones; see below.)

- Create a folder inside the RKLLAMA models directory for the MMS-TTS audio model, for example mms_tts_spa.

- Copy the encoder (.rknn), decoder (.rknn) and vocab (mms_tts.json) files from the chosen voice to the new model directory created in RKLLAMA.

- The structure of the model MUST be like this:

~/RKLLAMA/models/
└── mms_tts_spa
    ├── encoder/
    │   └── encoder.rknn
    ├── decoder/
    │   └── decoder.rknn
    └── mms_tts.json

Done! You are ready to test the OpenAI endpoint /v1/audio/speech to generate audio. You can add it to OpenWebUI in the Audio section for TTS.

IMPORTANT for Piper:

- Only the decoder (.rknn) needs to be converted for your specific platform (for example rk3588).

- The encoder can have any name but must end with the .onnx extension.

- The decoder can have any name but must end with the .rknn extension.

- The model config must be named piper.json.

- You must use rknn-toolkit 2.3.2 for RKNN conversion because it is the version used by RKLLAMA.

- Always CHECK THE LICENSE of the voice you are going to use.

- In the OpenAI request, the model argument is the name of the model folder you created. The voice argument is the speaker, if the voice is multi-speaker (for example 'F' (Female) or 'M' (Male); check the model config). If the model is single-speaker, voice can be omitted.

- You can convert any Piper TTS model (or create or fine-tune one) by first exporting the encoder and decoder to ONNX format and then converting the decoder to RKNN:

  - Clone this repo and check out its streaming branch: https://github.com/mush42/piper/tree/streaming

  - Create a virtual environment and install that repo.

  - Clone the repository https://huggingface.co/danielferr85/piper-checkpoints-rknn from Hugging Face.

  - Download the entire folder of the voice to convert from the dataset https://huggingface.co/datasets/rhasspy/piper-checkpoints and put it inside the piper-checkpoints-rknn repo (the structure must be, for example, es/es_MX/ald/medium/). You can also use the download_models.py script to download the model you want automatically.

  - Run the export_encoder_decoder.py script to export the encoder and decoder in ONNX format.

  - Run the export_rknn.py script to export the decoder in RKNN format (rknn-toolkit version 2.3.2 must be installed).
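The Piper naming rules above can be checked before the first request. This is a hypothetical checker, not part of RKLLama. Expecting exactly one `.onnx` and one `.rknn` file per voice folder is an assumption drawn from the example structure.

```python
from pathlib import Path
from typing import List


def check_piper_model_dir(model_dir: Path) -> List[str]:
    """List problems with a Piper model folder; an empty list means it looks OK.

    Hypothetical checker based on the naming rules above, not part of RKLLama.
    Expecting exactly one .onnx and one .rknn file is an assumption.
    """
    problems = []
    encoders = sorted(model_dir.glob("*.onnx"))
    decoders = sorted(model_dir.glob("*.rknn"))
    if len(encoders) != 1:
        problems.append(f"expected one encoder *.onnx, found {len(encoders)}")
    if len(decoders) != 1:
        problems.append(f"expected one decoder *.rknn, found {len(decoders)}")
    if not (model_dir / "piper.json").is_file():
        problems.append("missing piper.json")
    return problems


# Example: print(check_piper_model_dir(Path.home() / "RKLLAMA/models/es_AR-daniela-high"))
```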

For MMS-TTS:

- You must convert both the encoder and the decoder (.rknn) for your specific platform (for example rk3588).

- The encoder can have any name but must end with the .rknn extension and must be placed inside a folder called encoder.

- The decoder can have any name but must end with the .rknn extension and must be placed inside a folder called decoder.

- The model vocab must be named mms_tts.json.

- You must use rknn-toolkit 2.3.2 for RKNN conversion because it is the version used by RKLLAMA.

- Always CHECK THE LICENSE of the voice you are going to use.

- In the OpenAI request, the model argument is the name of the model folder you created. MMS-TTS models are always single-speaker, so the voice argument is omitted.

- You can convert more MMS-TTS models. See: https://github.com/airockchip/rknn_model_zoo/tree/main/examples/mms_tts
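A speech request combines the rules above: `model` is the folder name, and `voice` is sent only for multi-speaker Piper voices. This sketch uses the OpenAI Audio Speech field names (`model`, `input`, `voice`, `response_format`). That RKLLama accepts `response_format="wav"` is an assumption, and so is the port 8080.

```python
import json
import urllib.request
from typing import Optional


def build_speech_request(text: str, model: str, voice: Optional[str] = None,
                         response_format: str = "wav",
                         host: str = "http://localhost:8080") -> urllib.request.Request:
    """Build (without sending) a POST to the OpenAI-style /v1/audio/speech endpoint."""
    payload = {"model": model, "input": text, "response_format": response_format}
    if voice is not None:
        # Only multi-speaker Piper voices need this (e.g. "F" or "M");
        # single-speaker Piper voices and MMS-TTS models omit it.
        payload["voice"] = voice
    return urllib.request.Request(
        f"{host}/v1/audio/speech",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_speech_request("Hola, ¿cómo estás?", model="mms_tts_spa")
# To send it and save the returned audio bytes:
# with urllib.request.urlopen(req) as resp, open("hola.wav", "wb") as f:
#     f.write(resp.read())
```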

For OmniASR CTC models:

- Download a model from https://huggingface.co/danielferr85/omniASR-ctc-rknn on Hugging Face.

- Create a folder inside the RKLLAMA models directory for the model, for example omniasr-ctc:300m.

- Copy the model (.rknn) and vocabulary (omniasr.txt) files from the chosen model to the new model directory created in RKLLAMA.

- The structure of the model MUST be like this:

~/RKLLAMA/models/
└── omniasr-ctc:300m
    ├── model.rknn
    └── omniasr.txt

IMPORTANT

- The model can have any name but must end with the .rknn extension.

- The model vocabulary must be named omniasr.txt.

- You must use rknn-toolkit 2.3.2 for RKNN conversion because it is the version used by RKLLAMA.

For Whisper models:

- Download a model from https://huggingface.co/danielferr85/whisper-with_past-models-rknn on Hugging Face.

- Create a folder inside the RKLLAMA models directory for the model, for example whisper-large-v3-turbo.

- Copy the encoder model (.rknn), decoder model (.rknn), decoder-with-past model (.rknn), whisper.ini and the tokenizer folder from the chosen model to the new model directory created in RKLLAMA.

- The structure of the model MUST be like this:

~/RKLLAMA/models/
└── whisper-large-v3-turbo
    ├── encoder
    │   └── model_encoder.rknn
    ├── decoder
    │   └── model_decoder.rknn
    ├── decoder_with_past
    │   └── model_decoder_with_past.rknn
    ├── tokenizer
    │   ├── tokenizer_config.json
    │   └── tokenizer.json
    └── whisper.ini

IMPORTANT

- The models can have any name but must end with the .rknn extension and must be in the correct folder structure.

- The tokenizer folder is the same as in the original official Whisper model.

- The whisper.ini file can be modified to adjust the VAD properties if needed.

- You must use rknn-toolkit 2.3.2 for RKNN conversion because it is the version used by RKLLAMA.
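Since the Whisper folder layout is strict, a quick check can catch a misplaced file before the first transcription request. This is a hypothetical helper derived from the structure shown above, not part of RKLLama. It only checks that each required file or folder exists, not that the models are valid.

```python
from pathlib import Path
from typing import List

RKNN_SUBDIRS = ("encoder", "decoder", "decoder_with_past")
REQUIRED_FILES = ("tokenizer/tokenizer.json", "tokenizer/tokenizer_config.json", "whisper.ini")


def check_whisper_model_dir(model_dir: Path) -> List[str]:
    """List problems with a Whisper model folder; an empty list means it looks OK.

    Hypothetical checker derived from the structure above, not part of RKLLama.
    """
    problems = []
    for sub in RKNN_SUBDIRS:
        folder = model_dir / sub
        # Each model folder must exist and hold at least one *.rknn file.
        if not folder.is_dir() or not any(folder.glob("*.rknn")):
            problems.append(f"no .rknn model in {sub}/")
    for rel in REQUIRED_FILES:
        if not (model_dir / rel).is_file():
            problems.append(f"missing {rel}")
    return problems


# Example: print(check_whisper_model_dir(Path.home() / "RKLLAMA/models/whisper-large-v3-turbo"))
```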

Done! You are ready to test the OpenAI endpoint /v1/audio/transcriptions to generate transcriptions. You can add it to OpenWebUI in the Audio section for STT.

RKLLAMA uses a flexible configuration system that loads settings from multiple sources in a priority order:

See the Configuration Documentation for complete details.

- Remove the Python package rkllama:

pip uninstall rkllama

Ollama API Compatibility: RKLLAMA now implements key Ollama API endpoints, with primary focus on /api/chat and /api/generate, allowing integration with many Ollama clients. Additional endpoints are in various stages of implementation.
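With `"stream": true`, Ollama's /api/generate returns newline-delimited JSON chunks. Each chunk carries a `response` text fragment, and a final chunk has `done: true`. This sketch assumes RKLLama mirrors that format and listens on port 8080. It joins the fragments into the full answer, and the sample chunks are illustrative.

```python
import json
import urllib.request
from typing import Iterable, Union


def build_generate_request(model: str, prompt: str,
                           host: str = "http://localhost:8080") -> urllib.request.Request:
    """Build (without sending) a streaming POST to the Ollama-style /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": True}
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def collect_stream(lines: Iterable[Union[bytes, str]]) -> str:
    """Join the "response" fields of newline-delimited JSON chunks until "done" is true."""
    parts = []
    for line in lines:
        if isinstance(line, bytes):
            line = line.decode("utf-8")
        line = line.strip()
        if not line:
            continue  # skip empty lines between chunks
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


# Live use (an HTTP response can be iterated line by line):
# with urllib.request.urlopen(build_generate_request("qwen2.5:3b", "Hi")) as resp:
#     print(collect_stream(resp))
sample = [b'{"response": "Hel", "done": false}\n',
          b'{"response": "lo", "done": false}\n',
          b'{"response": "", "done": true}\n']
print(collect_stream(sample))  # Hello
```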

Enhanced Model Naming: Simplified model naming convention allows using models with familiar names like "qwen2.5:3b" or "llama3-instruct:8b" while handling the full file paths internally.

Improved Performance and Reliability: Enhanced streaming responses with better handling of completion signals and optimized token processing.

CPU Auto-detection: Automatic detection of RK3588 or RK3576 platform with fallback to interactive selection.

Debug Mode: Optional debugging tools with detailed logs that can be enabled with the --debug flag.

Simplified Model Management:

- Delete models with one command using the simplified name

- Pull models directly from Hugging Face with automatic Modelfile creation

- Custom model configurations through Modelfiles

- Smart collision handling for models with similar names

If you have already downloaded models and do not wish to reinstall everything, please follow this guide: Rebuild Architecture

- Add RKNN for onnx models (TTS, image classification/segmentation...)

- GGUF/HF to RKLLM conversion software

System Monitor:

- ichlaffterlalu: Contributed a pull request for Docker-Rkllama and fixed multiple errors.

- TomJacobsUK: Contributed pull requests for Ollama API compatibility and model naming improvements, and fixed CPU detection errors.

- Yoann Vanitou: Contributed Docker implementation improvements and fixed merge conflicts.

- Daniel Ferreira: Contributed tool support, OpenAI API compatibility, loading multiple RKLLM models in memory and the multimodal support implementation, along with other improvements and fixes.


Alternative AI tools for rkllama

Similar Open Source Tools


probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe is fully local, keeping code on the user's machine without relying on external APIs. It supports multiple languages, offers various search options, and can be used in CLI mode, MCP server mode, AI chat mode, and web interface. The tool is designed to be flexible, fast, and accurate, providing developers and AI models with full context and relevant code blocks for efficient code exploration and understanding.

ebook2audiobook

ebook2audiobook is a CPU/GPU converter tool that converts eBooks to audiobooks with chapters and metadata using tools like Calibre, ffmpeg, XTTSv2, and Fairseq. It supports voice cloning and a wide range of languages. The tool is designed to run on 4GB RAM and provides a new v2.0 Web GUI interface for user-friendly interaction. Users can convert eBooks to text format, split eBooks into chapters, and utilize high-quality text-to-speech functionalities. Supported languages include Arabic, Chinese, English, French, German, Hindi, and many more. The tool can be used for legal, non-DRM eBooks only and should be used responsibly in compliance with applicable laws.

open-webui-tools

Open WebUI Tools Collection is a set of tools for structured planning, arXiv paper search, Hugging Face text-to-image generation, prompt enhancement, and multi-model conversations. It enhances LLM interactions with academic research, image generation, and conversation management. Tools include arXiv Search Tool and Hugging Face Image Generator. Function Pipes like Planner Agent offer autonomous plan generation and execution. Filters like Prompt Enhancer improve prompt quality. Installation and configuration instructions are provided for each tool and pipe.

RealtimeSTT_LLM_TTS

RealtimeSTT is an easy-to-use, low-latency speech-to-text library for realtime applications. It listens to the microphone and transcribes voice into text, making it ideal for voice assistants and applications requiring fast and precise speech-to-text conversion. The library utilizes Voice Activity Detection, Realtime Transcription, and Wake Word Activation features. It supports GPU-accelerated transcription using PyTorch with CUDA support. RealtimeSTT offers various customization options for different parameters to enhance user experience and performance. The library is designed to provide a seamless experience for developers integrating speech-to-text functionality into their applications.

Visionatrix

Visionatrix is a project aimed at providing easy use of ComfyUI workflows. It offers simplified setup and update processes, a minimalistic UI for daily workflow use, stable workflows with versioning and update support, scalability for multiple instances and task workers, multiple user support with integration of different user backends, LLM power for integration with Ollama/Gemini, and seamless integration as a service with backend endpoints and webhook support. The project is approaching version 1.0 release and welcomes new ideas for further implementation.

Groqqle

Groqqle 2.1 is a revolutionary, free AI web search and API that instantly returns ORIGINAL content derived from source articles, websites, videos, and even foreign language sources, for ANY target market of ANY reading comprehension level! It combines the power of large language models with advanced web and news search capabilities, offering a user-friendly web interface, a robust API, and now a powerful Groqqle_web_tool for seamless integration into your projects. Developers can instantly incorporate Groqqle into their applications, providing a powerful tool for content generation, research, and analysis across various domains and languages.

OrChat

OrChat is a powerful CLI tool for chatting with AI models through OpenRouter. It offers features like universal model access, interactive chat with real-time streaming responses, rich markdown rendering, agentic shell access, security gating, performance analytics, command auto-completion, pricing display, auto-update system, multi-line input support, conversation management, auto-summarization, session persistence, web scraping, file and media support, smart thinking mode, conversation export, customizable themes, interactive input features, and more.

alexandria-audiobook

Alexandria Audiobook Generator is a tool that transforms any book or novel into a fully-voiced audiobook using AI-powered script annotation and text-to-speech. It features a built-in Qwen3-TTS engine with batch processing and a browser-based editor for fine-tuning every line before final export. The tool offers AI-powered pipeline for automatic script annotation, smart chunking, and context preservation. It also provides voice generation capabilities with built-in TTS engine, multi-language support, custom voices, voice cloning, and LoRA voice training. The web UI editor allows users to edit, preview, and export the audiobook. Export options include combined audiobook, individual voicelines, and Audacity export for DAW editing.

LlamaBarn

LlamaBarn is a macOS menu bar app designed for running local LLMs. It allows users to install models from a built-in catalog, connect various applications such as chat UIs, editors, CLI tools, and scripts, and manage the loading and unloading of models based on usage. The app ensures all processing is done locally on the user's device, with a small app footprint and zero configuration required. It offers a smart model catalog, self-contained storage for models and configurations, and is built on llama.cpp from the GGML org.

openwhispr

OpenWhispr is an open source desktop dictation application that converts speech to text using OpenAI Whisper. It features both local and cloud processing options for maximum flexibility and privacy. The application supports multiple AI providers, customizable hotkeys, agent naming, and various AI processing models. It offers a modern UI built with React 19, TypeScript, and Tailwind CSS v4, and is optimized for speed using Vite and modern tooling. Users can manage settings, view history, configure API keys, and download/manage local Whisper models. The application is cross-platform, supporting macOS, Windows, and Linux, and offers features like automatic pasting, draggable interface, global hotkeys, and compound hotkeys.

figma-console-mcp

Figma Console MCP is a Model Context Protocol server that bridges design and development, giving AI assistants complete access to Figma for extraction, creation, and debugging. It connects AI assistants like Claude to Figma, enabling plugin debugging, visual debugging, design system extraction, design creation, variable management, real-time monitoring, and three installation methods. The server offers 53+ tools for NPX and Local Git setups, while Remote SSE provides read-only access with 16 tools. Users can create and modify designs with AI, contribute to projects, or explore design data. The server supports authentication via personal access tokens and OAuth, and offers tools for navigation, console debugging, visual debugging, design system extraction, design creation, design-code parity, variable management, and AI-assisted design creation.

vibesdk

Cloudflare VibeSDK is an open source full-stack AI webapp generator built on Cloudflare's developer platform. It allows companies to build AI-powered platforms, enables internal development for non-technical teams, and supports SaaS platforms to extend product functionality. The platform features AI code generation, live previews, interactive chat, modern stack generation, one-click deploy, and GitHub integration. It is built on Cloudflare's platform with frontend in React + Vite, backend in Workers with Durable Objects, database in D1 (SQLite) with Drizzle ORM, AI integration via multiple LLM providers, sandboxed app previews and execution in containers, and deployment to Workers for Platforms with dispatch namespaces. The platform also offers an SDK for programmatic access to build apps programmatically using TypeScript SDK.

TranslateBookWithLLM

TranslateBookWithLLM is a Python application designed for large-scale text translation, such as entire books (.EPUB), subtitle files (.SRT), and plain text. It leverages local LLMs via the Ollama API or Gemini API. The tool offers both a web interface for ease of use and a command-line interface for advanced users. It supports multiple format translations, provides a user-friendly browser-based interface, CLI support for automation, multiple LLM providers including local Ollama models and Google Gemini API, and Docker support for easy deployment.

mini-sglang

Mini-SGLang is a lightweight yet high-performance inference framework for Large Language Models. With a compact codebase of ~5,000 lines of Python, it serves as both a capable inference engine and a transparent reference for researchers and developers. It achieves state-of-the-art throughput and latency with advanced optimizations such as Radix Cache, Chunked Prefill, Overlap Scheduling, Tensor Parallelism, and Optimized Kernels integrating FlashAttention and FlashInfer for maximum efficiency. Mini-SGLang is designed to demystify the complexities of modern LLM serving systems, providing a clean, modular, and fully type-annotated codebase that is easy to understand and modify.

SINQ

SINQ (Sinkhorn-Normalized Quantization) is a novel, fast, and high-quality quantization method designed to make any Large Language Models smaller while keeping their accuracy almost intact. It offers a model-agnostic quantization technique that delivers state-of-the-art performance for Large Language Models without sacrificing accuracy. With SINQ, users can deploy models that would otherwise be too big, drastically reducing memory usage while preserving LLM quality. The tool quantizes models using dual scaling for better quantization and achieves a more even error distribution, leading to stable behavior across layers and consistently higher accuracy even at very low bit-widths.

For similar tasks

neutone_sdk

The Neutone SDK is a tool designed for researchers to wrap their own audio models and run them in a DAW using the Neutone Plugin. It simplifies the process by allowing models to be built using PyTorch and minimal Python code, eliminating the need for extensive C++ knowledge. The SDK provides support for buffering inputs and outputs, sample rate conversion, and profiling tools for model performance testing. It also offers examples, notebooks, and a submission process for sharing models with the community.


lmql

LMQL is a programming language designed for large language models (LLMs) that offers a unique way of integrating traditional programming with LLM interaction. It allows users to write programs that combine algorithmic logic with LLM calls, enabling model reasoning capabilities within the context of the program. LMQL provides features such as Python syntax integration, rich control-flow options, advanced decoding techniques, powerful constraints via logit masking, runtime optimization, sync and async API support, multi-model compatibility, and extensive applications like JSON decoding and interactive chat interfaces. The tool also offers library integration, flexible tooling, and output streaming options for easy model output handling.

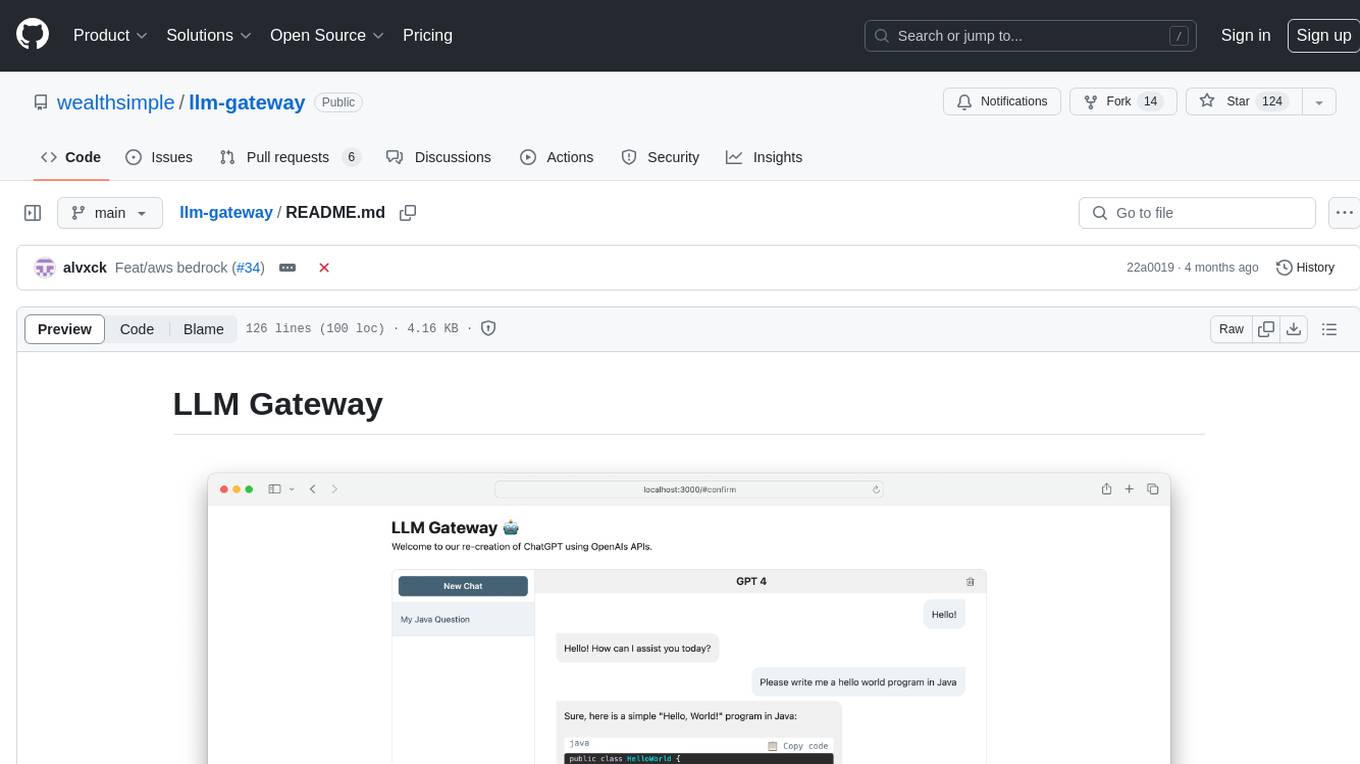

llm-gateway

llm-gateway is a gateway tool designed for interacting with third-party LLM providers such as OpenAI, Cohere, etc. It tracks data exchanged with these providers in a postgres database, applies PII scrubbing heuristics, and ensures safe communication with OpenAI's services. The tool supports various models from different providers and offers API and Python usage examples. Developers can set up the tool using Poetry, Pyenv, npm, and yarn for dependency management. The project also includes Docker setup for backend and frontend development.

Ollamac

Ollamac is a macOS app for interacting with Ollama models. Optimized for macOS, it lets users easily use any model from the Ollama library. The app features a user-friendly interface, a chat archive for saving interactions, and real-time responses via HTTP streaming. Ollamac is open source, so users can contribute to its development and extend its capabilities. It requires macOS 14 or later, with Ollama installed on the Mac and at least one Ollama model downloaded.

llmops-duke-aipi

LLMOps Duke AIPI is a course on operationalizing large language models, teaching how to develop LLM applications using software development best practices. Topics include generative AI concepts, setting up development environments, interacting with LLMs, using local LLMs, applied solutions with LLMs, extensibility with plugins and functions, retrieval-augmented generation, an introduction to Python web frameworks for APIs, DevOps principles, deploying machine learning APIs, LLM platforms, and final presentations. Students learn to build, share, and present portfolios using GitHub, YouTube, and LinkedIn, and develop non-linear, lifelong learning skills. Prerequisites are basic Linux and programming skills, and coursework is available in Python or Rust. Additional resources and references are provided for further learning and exploration.

mistral-ai-kmp

Mistral AI SDK for Kotlin Multiplatform (KMP) allows communication with Mistral API to get AI models, start a chat with the assistant, and create embeddings. The library is based on Mistral API documentation and built with Kotlin Multiplatform and Ktor client library. Sample projects like ZeChat showcase the capabilities of Mistral AI SDK. Users can interact with different Mistral AI models through ZeChat apps on Android, Desktop, and Web platforms. The library is not yet published on Maven, but users can fork the project and use it as a module dependency in their apps.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates metrics such as G-Eval, hallucination, answer relevancy, and RAGAS, and runs evaluations locally on your machine. It provides a wide range of ready-to-use evaluation metrics, supports custom metrics, integrates with any CI/CD environment, and can benchmark LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drift, and move from OpenAI to hosting their own Llama 2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). The model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, an API, and a UI. LeapfrogAI's API closely matches OpenAI's, so tools built for OpenAI/ChatGPT work seamlessly with a LeapfrogAI backend. It provides several backends for different use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI uses Chainguard's apko to harden base Python images, ensuring the other components of the stack use the latest supported Python versions. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases such as chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, an evaluation and analysis of trustworthiness for mainstream LLMs, and a discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions: truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs on TrustLLM, which consists of over 30 datasets. The document explains how to use the trustllm Python package to assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual-anchor VTubers (NVIDIA GPU version). It supports knowledge-base chat through fastgpt, using a complete LLM solution built from [fastgpt] + [one-api] + [Xinference]. Its other features include: replying to bilibili live-stream bullet comments and greeting viewers who enter the stream; speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS; expression control through Vtuber Studio; painting with stable-diffusion-webui and outputting the result to an OBS live room; NSFW filtering of generated images via public-NSFW-y-distinguish; web and image search through duckduckgo (requires a VPN or proxy) and Baidu image search (no VPN or proxy needed); an AI reply chat box and a playlist (both HTML plug-ins); AI singing via Auto-Convert-Music; dancing, expression video playback, head-pat reactions, and reactions when viewers send gifts; automatically dancing when a song starts and automatically looping swaying motions during chat and singing; multi-scene switching, background-music switching, and automatic day/night scene switching; and letting the AI decide from the content whether to sing or paint.