probe

Probe is an AI-friendly, fully local, semantic code search engine that works with large codebases. The final missing building block for the next generation of AI coding tools.

Stars: 110

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe is fully local, keeping code on the user's machine without relying on external APIs. It supports multiple languages, offers various search options, and can be used in CLI mode, MCP server mode, AI chat mode, and web interface. The tool is designed to be flexible, fast, and accurate, providing developers and AI models with full context and relevant code blocks for efficient code exploration and understanding.

README:

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. By combining the speed of ripgrep with the code-aware parsing of tree-sitter, Probe delivers precise results with complete code blocks—perfect for large codebases and AI-driven development workflows.

- Quick Start

- Features

- Installation

- Usage

- CLI Mode

- MCP Server Mode

- AI Chat Mode (Example in examples/chat)

- Web Interface

- Supported Languages

- How It Works

- Adding Support for New Languages

- Releasing New Versions

NPM Installation

The easiest way to install Probe is via npm, which also installs the binary:

npm install -g @buger/probe

Basic Search Example

Search for code containing the phrase "llm pricing" in the current directory:

probe search "llm pricing" ./

Advanced Search (with Token Limiting)

Search for "prompt injection" in the current directory but limit the total tokens to 10000 (useful for AI tools with context window constraints):

probe search "prompt injection" ./ --max-tokens 10000

Elastic Search Queries

Use advanced query syntax for more powerful searches:

# Use AND operator for terms that must appear together

probe search "error AND handling" ./

# Use OR operator for alternative terms

probe search "login OR authentication OR auth" ./src

# Group terms with parentheses for complex queries

probe search "(error OR exception) AND (handle OR process)" ./

# Use wildcards for partial matching

probe search "auth* connect*" ./

# Exclude terms with NOT operator

probe search "database NOT sqlite" ./

Extract Code Blocks

Extract a specific function or code block containing line 42 in main.rs:

probe extract src/main.rs:42

You can even pipe in failing test output, and Probe will extract the relevant files and AST blocks from it, for example:

go test | probe extract

Interactive AI Chat

Use the AI assistant to ask questions about your codebase:

# Run directly with npx (no installation needed)

npx -y @buger/probe-chat

# Set your API key first

export ANTHROPIC_API_KEY=your_api_key

# Or for OpenAI

# export OPENAI_API_KEY=your_api_key

# Specify a directory to search (optional)

npx -y @buger/probe-chat /path/to/your/project

Node.js SDK Usage

Use Probe programmatically in your Node.js applications with the Vercel AI SDK:

import { ProbeChat } from '@buger/probe-chat';
import { StreamingTextResponse } from 'ai';

// Create a chat instance
const chat = new ProbeChat({
  model: 'claude-3-sonnet-20240229',
  anthropicApiKey: process.env.ANTHROPIC_API_KEY,
  allowedFolders: ['/path/to/your/project']
});

// In an API route or Express handler
export async function POST(req) {
  const { messages } = await req.json();
  const userMessage = messages[messages.length - 1].content;

  // Get a streaming response from the AI
  const stream = await chat.chat(userMessage, { stream: true });

  // Return a streaming response
  return new StreamingTextResponse(stream);
}

// Or use it in a non-streaming way
const response = await chat.chat('How is authentication implemented?');
console.log(response);

MCP server

Integrate with any AI editor:

{
  "mcpServers": {
    "memory": {
      "command": "npx",
      "args": [
        "-y",
        "@buger/probe-mcp"
      ]
    }
  }
}

Example queries:

"Do the probe and search my codebase for implementations of the ranking algorithm"

"Using probe find all functions related to error handling in the src directory"

- AI-Friendly: Extracts entire functions, classes, or structs so AI models get full context.

- Fully Local: Keeps your code on your machine—no external APIs.

- Powered by ripgrep: Extremely fast scanning of large codebases.

- Tree-sitter Integration: Parses and understands code structure accurately.

- Re-Rankers & NLP: Uses tokenization, stemming, BM25, TF-IDF, or hybrid ranking methods for better search results.

- Code Extraction: Extract specific code blocks or entire files with the extract command.

- Multi-Language: Works with popular languages like Rust, Python, JavaScript, TypeScript, Java, Go, C/C++, Swift, C#, and more.

- Interactive AI Chat: AI assistant example in the examples directory that can answer questions about your codebase using Claude or GPT models.

- Flexible: Run as a CLI tool, an MCP server, or an interactive AI chat.

You can install Probe with a single command using npm, curl, or PowerShell:

Using npm (Recommended for Node.js users)

npm install -g @buger/probe

Using curl (For macOS and Linux)

curl -fsSL https://raw.githubusercontent.com/buger/probe/main/install.sh | bash

What the curl script does:

- Detects your operating system and architecture

- Fetches the latest release from GitHub

- Downloads the appropriate binary for your system

- Verifies the checksum for security

- Installs the binary to /usr/local/bin

Using PowerShell (For Windows)

iwr -useb https://raw.githubusercontent.com/buger/probe/main/install.ps1 | iex

What the PowerShell script does:

- Detects your system architecture (x86_64 or ARM64)

- Fetches the latest release from GitHub

- Downloads the appropriate Windows binary

- Verifies the checksum for security

- Installs the binary to your user directory (%LOCALAPPDATA%\Probe) by default

- Provides instructions to add the binary to your PATH if needed

Installation options:

The PowerShell script supports several options:

# Install for current user (default)

iwr -useb https://raw.githubusercontent.com/buger/probe/main/install.ps1 | iex

# Install system-wide (requires admin privileges)

iwr -useb https://raw.githubusercontent.com/buger/probe/main/install.ps1 | iex -args "--system"

# Install to a custom directory

iwr -useb https://raw.githubusercontent.com/buger/probe/main/install.ps1 | iex -args "--dir", "C:\Tools\Probe"

# Show help

iwr -useb https://raw.githubusercontent.com/buger/probe/main/install.ps1 | iex -args "--help"

- Operating Systems: macOS, Linux, or Windows

- Architectures: x86_64 (all platforms) or ARM64 (macOS and Windows)

- Tools:

  - For macOS/Linux: curl, bash, and sudo/root privileges

  - For Windows: PowerShell 5.1 or later

- Download the appropriate binary for your platform from the GitHub Releases page:

  - probe-x86_64-linux.tar.gz for Linux (x86_64)

  - probe-x86_64-darwin.tar.gz for macOS (Intel)

  - probe-aarch64-darwin.tar.gz for macOS (Apple Silicon)

  - probe-x86_64-windows.zip for Windows (x86_64)

  - probe-aarch64-windows.zip for Windows (ARM64)

- Extract the archive:

# For Linux/macOS
tar -xzf probe-*-*.tar.gz

# For Windows (using PowerShell)
Expand-Archive -Path probe-*-windows.zip -DestinationPath .\probe

- Move the binary to a location in your PATH:

# For Linux/macOS
sudo mv probe /usr/local/bin/

# For Windows (using PowerShell)
# Create a directory for the binary (if it doesn't exist)
$installDir = "$env:LOCALAPPDATA\Probe"
New-Item -ItemType Directory -Path $installDir -Force

# Move the binary
Move-Item -Path .\probe\probe.exe -Destination $installDir

# Add to PATH (optional)
[Environment]::SetEnvironmentVariable('PATH', [Environment]::GetEnvironmentVariable('PATH', 'User') + ";$installDir", 'User')
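Choosing the right archive from the list above can be sketched as a small lookup. This is only an illustration: the helper name archiveFor is hypothetical, and it assumes Node's process.platform and process.arch naming (linux, darwin, win32; x64, arm64). It is not taken from Probe's install scripts.

```javascript
// Hypothetical helper: maps Node-style platform/arch names to the
// release archive names listed in the manual installation steps.
const ARCHIVES = {
  "linux-x64": "probe-x86_64-linux.tar.gz",
  "darwin-x64": "probe-x86_64-darwin.tar.gz",
  "darwin-arm64": "probe-aarch64-darwin.tar.gz",
  "win32-x64": "probe-x86_64-windows.zip",
  "win32-arm64": "probe-aarch64-windows.zip",
};

function archiveFor(platform, arch) {
  const name = ARCHIVES[`${platform}-${arch}`];
  if (!name) {
    // Combinations not in the list (for example Linux on ARM64) have no listed archive.
    throw new Error(`No prebuilt archive listed for ${platform}/${arch}`);
  }
  return name;
}

console.log(archiveFor("darwin", "arm64"));
```

Passing process.platform and process.arch would pick the archive for the current machine; unlisted combinations throw rather than guess.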

- Install Rust and Cargo (if not already installed):

For macOS/Linux:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

For Windows:

# Download and run the Rust installer
Invoke-WebRequest -Uri https://win.rustup.rs/x86_64 -OutFile rustup-init.exe
.\rustup-init.exe
# Follow the on-screen instructions

- Clone this repository:

git clone https://github.com/buger/probe.git
cd probe

- Build the project:

cargo build --release

- (Optional) Install globally:

cargo install --path .

This will install the binary to your Cargo bin directory, which is typically:

  - $HOME/.cargo/bin on macOS/Linux

  - %USERPROFILE%\.cargo\bin on Windows

For macOS/Linux:

probe --version

For Windows:

probe --version

If you get a "command not found" error on Windows, make sure the installation directory is in your PATH or use the full path to the executable:

# If installed to the default user location
& "$env:LOCALAPPDATA\Probe\probe.exe" --version

# If installed to the default system location
& "$env:ProgramFiles\Probe\probe.exe" --version

- Permissions:

  - For macOS/Linux: Ensure you can write to /usr/local/bin

  - For Windows: Ensure you have write permissions to the installation directory

- System Requirements: Double-check your OS/architecture compatibility

- PATH Issues:

  - For Windows: Restart your terminal after adding the installation directory to PATH

  - For macOS/Linux: Verify that /usr/local/bin is in your PATH

- Manual Install: If the quick install script fails, try Manual Installation

- GitHub Issues: Report issues on the GitHub repository

For macOS/Linux:

# If installed via npm

npm uninstall -g @buger/probe

# If installed via curl script or manually

sudo rm /usr/local/bin/probe

For Windows:

# If installed via PowerShell script to default user location

Remove-Item -Path "$env:LOCALAPPDATA\Probe\probe.exe" -Force

# If installed via PowerShell script to system location

Remove-Item -Path "$env:ProgramFiles\Probe\probe.exe" -Force

# If you added the installation directory to PATH, you may want to remove it

# For user PATH:

$userPath = [Environment]::GetEnvironmentVariable('PATH', 'User')

$userPath = ($userPath -split ';' | Where-Object { $_ -ne "$env:LOCALAPPDATA\Probe" }) -join ';'

[Environment]::SetEnvironmentVariable('PATH', $userPath, 'User')

Probe can be used in three main modes:

- CLI Mode: Direct code search and extraction from the command line

- MCP Server Mode: Run as a server exposing search functionality via MCP

- Web Interface: Browser-based UI for code exploration

Additionally, there are example implementations in the examples directory:

- AI Chat Example: Interactive AI assistant for code exploration (in examples/chat)

- Web Interface Example: Browser-based UI for code exploration (in examples/web)

probe search <SEARCH_PATTERN> [OPTIONS]

- <SEARCH_PATTERN>: Pattern to search for (required)

- --files-only: Skip AST parsing; only list files with matches

- --ignore: Custom ignore patterns (in addition to .gitignore)

- --exclude-filenames, -n: Exclude files whose names match query words (filename matching is enabled by default)

- --reranker, -r: Choose a re-ranking algorithm (hybrid, hybrid2, bm25, tfidf)

- --frequency, -s: Frequency-based search (tokenization, stemming, stopword removal)

- --max-results: Maximum number of results to return

- --max-bytes: Maximum total bytes of code to return

- --max-tokens: Maximum total tokens of code to return (useful for AI)

- --allow-tests: Include test files and test code blocks

- --any-term: Match files containing any query terms (default behavior)

- --no-merge: Disable merging of adjacent code blocks after ranking (merging enabled by default)

- --merge-threshold: Max lines between code blocks to consider them adjacent for merging (default: 5)
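To make the --merge-threshold option concrete, here is a minimal sketch of merging adjacent blocks. It is not Probe's implementation: the block shape ({ file, start, end }), the function name mergeAdjacent, and the reading of "lines between" as the count of lines strictly between two blocks are all assumptions made for illustration. It also ignores ranking order, which the real merge step runs after.

```javascript
// Illustrative only: merges blocks from the same file when the number of
// lines strictly between them is at most `threshold` (assumed semantics).
function mergeAdjacent(blocks, threshold = 5) {
  // Sort by file, then by starting line, so neighbours are next to each other.
  const sorted = [...blocks].sort(
    (a, b) => a.file.localeCompare(b.file) || a.start - b.start
  );
  const merged = [];
  for (const block of sorted) {
    const last = merged[merged.length - 1];
    const gap = last ? block.start - last.end - 1 : Infinity;
    if (last && last.file === block.file && gap <= threshold) {
      // Extend the previous block to cover this one.
      last.end = Math.max(last.end, block.end);
    } else {
      merged.push({ ...block });
    }
  }
  return merged;
}

const blocks = [
  { file: "a.rs", start: 1, end: 10 },
  { file: "a.rs", start: 14, end: 20 }, // 3 lines after the first block
  { file: "a.rs", start: 40, end: 50 }, // far away, stays separate
  { file: "b.rs", start: 12, end: 15 }, // different file, never merged
];
console.log(mergeAdjacent(blocks));
```

With the default threshold of 5 the first two blocks collapse into one spanning lines 1 to 20; with a threshold of 2 they would stay separate.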

# 1) Search for "setTools" in the current directory with frequency-based search

probe search "setTools"

# 2) Search for "impl" in ./src

probe search "impl" ./src

# 3) Search for "keyword", limiting the output to 10000 tokens

probe search "keyword" --max-tokens 10000

# 4) Search for "function" and disable merging of adjacent code blocks

probe search "function" --no-merge

The extract command allows you to extract code blocks from files. When a line number is specified, it uses tree-sitter to find the closest suitable parent node (function, struct, class, etc.) for that line. You can also specify a symbol name to extract the code block for that specific symbol.
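The "closest suitable parent node" lookup can be sketched on a plain object tree. This is a simplified illustration rather than tree-sitter's API or Probe's code: the node shape ({ kind, startLine, endLine, children }), the set of block kinds, and the name enclosingBlock are assumptions.

```javascript
// Node kinds treated as extractable blocks (an assumed, simplified set).
const BLOCK_KINDS = new Set(["function", "struct", "class"]);

// Returns the deepest block-kind node whose line range contains `line`,
// or null if no such node exists.
function enclosingBlock(node, line, best = null) {
  if (line < node.startLine || line > node.endLine) return best;
  if (BLOCK_KINDS.has(node.kind)) best = node;
  for (const child of node.children ?? []) {
    // Children lie inside their parent, so a matching child is a closer fit.
    best = enclosingBlock(child, line, best);
  }
  return best;
}

const tree = {
  kind: "file", startLine: 1, endLine: 100,
  children: [{
    kind: "class", startLine: 10, endLine: 60,
    children: [
      { kind: "function", startLine: 20, endLine: 30,
        children: [{ kind: "statement", startLine: 22, endLine: 24 }] },
      { kind: "function", startLine: 40, endLine: 55 },
    ],
  }],
};

const hit = enclosingBlock(tree, 23);
console.log(hit.kind, hit.startLine, hit.endLine);
```

A line between the two functions (for example line 35) falls back to the surrounding class, and a line outside every block yields null.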

probe extract <FILES> [OPTIONS]

- <FILES>: Files to extract from (can include line numbers with a colon, e.g., file.rs:10, or symbol names with a hash, e.g., file.rs#function_name)

- --allow-tests: Include test files and test code blocks in results

- -c, --context <LINES>: Number of context lines to include before and after the extracted block (default: 0)

- -f, --format <FORMAT>: Output format (markdown, plain, json) (default: markdown)
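The file argument forms described above (a bare path, path:line, path:start-end, and path#symbol) can be told apart with a few regular expressions. The sketch below is illustrative: parseTarget and its return shape are hypothetical, and it does not handle Windows drive letters or paths that themselves contain a hash.

```javascript
// Hypothetical parser for the extract target forms shown in this README.
function parseTarget(spec) {
  const symbol = spec.match(/^(.+)#([^#]+)$/);
  if (symbol) return { file: symbol[1], symbol: symbol[2] };

  const range = spec.match(/^(.+):(\d+)-(\d+)$/);
  if (range) {
    return { file: range[1], startLine: Number(range[2]), endLine: Number(range[3]) };
  }

  const line = spec.match(/^(.+):(\d+)$/);
  if (line) return { file: line[1], line: Number(line[2]) };

  // No suffix: the whole file is the target.
  return { file: spec };
}

for (const spec of ["src/main.rs:42", "src/main.rs:10-20", "src/main.rs#handle_extract", "src/cli.rs"]) {
  console.log(spec, "->", JSON.stringify(parseTarget(spec)));
}
```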

# 1) Extract a function containing line 42 from main.rs

probe extract src/main.rs:42

# 2) Extract multiple files or blocks

probe extract src/main.rs:42 src/lib.rs:15 src/cli.rs

# 3) Extract with JSON output format

probe extract src/main.rs:42 --format json

# 4) Extract with 5 lines of context around the specified line

probe extract src/main.rs:42 --context 5

# 5) Extract a specific function by name (using # symbol syntax)

probe extract src/main.rs#handle_extract

# 6) Extract a specific line range (using : syntax)

probe extract src/main.rs:10-20

# 7) Extract from stdin (useful with error messages or compiler output)

cat error_log.txt | probe extract

The extract command can also read file paths from stdin, making it useful for processing compiler errors or log files:

# Extract code blocks from files mentioned in error logs

grep -r "error" ./logs/ | probe extract

Add the following to your AI editor's MCP configuration file:

{
  "mcpServers": {
    "memory": {
      "command": "npx",
      "args": [
        "-y",
        "@buger/probe-mcp"
      ]
    }
  }
}

Example Usage in AI Editors:

Once configured, you can ask your AI assistant to search your codebase with natural language queries like:

"Do the probe and search my codebase for implementations of the ranking algorithm"

"Using probe find all functions related to error handling in the src directory"

The AI chat functionality is available as a standalone npm package that can be run directly with npx.

# Run directly with npx (no installation needed)

npx -y @buger/probe-chat

# Set your API key

export ANTHROPIC_API_KEY=your_api_key

# Or for OpenAI

# export OPENAI_API_KEY=your_api_key

# Or specify a directory to search

npx -y @buger/probe-chat /path/to/your/project

# Install globally

npm install -g @buger/probe-chat

# Start the chat interface

probe-chat

# Navigate to the examples directory

cd examples/chat

# Install dependencies

npm install

# Set your API key

export ANTHROPIC_API_KEY=your_api_key

# Or for OpenAI

# export OPENAI_API_KEY=your_api_key

# Start the chat interface

node index.js

This starts an interactive CLI interface where you can ask questions about your codebase and get AI-powered responses.

- AI-Powered Search: Uses LLMs to understand your questions and search the codebase intelligently

- Multi-Model Support: Works with both Anthropic's Claude and OpenAI's GPT models

- Token Tracking: Monitors token usage for both requests and responses

- Conversation History: Maintains context across multiple interactions

- Colored Output: Provides a user-friendly terminal interface with syntax highlighting

Configure the chat using environment variables:

# Use Claude models (recommended)

export ANTHROPIC_API_KEY=your_api_key

# Or use OpenAI models

export OPENAI_API_KEY=your_api_key

# Override the default model

export MODEL_NAME=claude-3-opus-20240229

# Override API URLs (useful for proxies or enterprise deployments)

export ANTHROPIC_API_URL=https://your-anthropic-proxy.com

export OPENAI_API_URL=https://your-openai-proxy.com/v1

# Enable debug mode for detailed logging

export DEBUG=1

❯ How does the ranking algorithm work?

─ Response ─────────────────────────────────────────────────────────

I'll explain how the ranking algorithm works in the codebase.

The ranking system in Probe uses multiple algorithms to sort search results by relevance. The main ranking algorithms are:

1. TF-IDF (Term Frequency-Inverse Document Frequency)

2. BM25 (Best Matching 25)

3. Hybrid (a combination approach)

Let me break down each approach:

## TF-IDF Ranking

TF-IDF weighs terms based on how frequently they appear in a document versus how common they are across all documents.

Key implementation details:

- Term frequency (TF) measures how often a term appears in a document

- Inverse document frequency (IDF) measures how rare a term is across all documents

- Final score is calculated as TF × IDF

## BM25 Ranking

BM25 is an advanced ranking function that improves upon TF-IDF by adding document length normalization.

Key implementation details:

- Uses parameters k1 (term frequency saturation) and b (document length normalization)

- Handles edge cases like empty documents and rare terms

- Provides better results for longer documents

## Hybrid Ranking

The hybrid approach combines multiple ranking signals for better results:

1. Combines scores from both TF-IDF and BM25

2. Considers document length and term positions

3. Applies normalization to ensure fair comparison

The default reranker is "hybrid" which provides the best overall results for code search.

The ranking implementation can be found in `src/search/result_ranking.rs`.

─────────────────────────────────────────────────────────────────────

Token Usage: Request: 1245 Response: 1532 (Current message only: ~1532)

Total: 2777 tokens (Cumulative for entire session)

─────────────────────────────────────────────────────────────────────
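The TF-IDF description in the sample response above (term frequency times inverse document frequency) can be written out as a short sketch. This is a generic textbook-style version, not the code in Probe's repository: the function name tfidfScores, the pre-tokenized input, and the smoothed IDF formula are assumptions.

```javascript
// Scores each document (an array of tokens) against the query terms
// using TF x IDF. The +1 smoothing in the IDF is an assumption.
function tfidfScores(docs, queryTerms) {
  const n = docs.length;
  return docs.map((tokens) => {
    let score = 0;
    for (const term of queryTerms) {
      // TF: share of this document's tokens that equal the term.
      const tf = tokens.filter((t) => t === term).length / (tokens.length || 1);
      // DF: number of documents containing the term at least once.
      const df = docs.filter((d) => d.includes(term)).length;
      const idf = Math.log((n + 1) / (df + 1)) + 1;
      score += tf * idf;
    }
    return score;
  });
}

const docs = [
  ["rank", "score", "rank"],
  ["parse", "tree"],
  ["rank", "tree"],
];
console.log(tfidfScores(docs, ["rank"]));
```

The first document scores highest because "rank" makes up a larger share of its tokens; the second scores zero because the term never appears in it.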

Probe includes a web-based chat interface that provides a user-friendly way to interact with your codebase using AI. You can run it directly with npx or set it up manually.

# Run directly with npx (no installation needed)

npx -y @buger/probe-web

# Set your API key first

export ANTHROPIC_API_KEY=your_api_key

# Configure allowed folders (optional)

export ALLOWED_FOLDERS=/path/to/folder1,/path/to/folder2

- Navigate to the web directory:

cd web

- Install dependencies:

npm install

- Configure environment variables: Create or edit the .env file in the web directory:

ANTHROPIC_API_KEY=your_anthropic_api_key
PORT=8080
ALLOWED_FOLDERS=/path/to/folder1,/path/to/folder2

- Start the server:

npm start

- Access the web interface: Open your browser and navigate to http://localhost:8080
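ALLOWED_FOLDERS is a comma-separated list of directories. How the web server enforces it is not shown here, so the following is only a sketch of one plausible check: parse the list, then test whether a requested path resolves inside one of the allowed roots. The function names parseAllowedFolders and isAllowed are hypothetical.

```javascript
const path = require("node:path");

// Splits a comma-separated ALLOWED_FOLDERS value into absolute paths.
function parseAllowedFolders(value) {
  return (value ?? "")
    .split(",")
    .map((entry) => entry.trim())
    .filter(Boolean)
    .map((entry) => path.resolve(entry));
}

// True when `target` resolves to an allowed root or somewhere beneath it.
function isAllowed(target, allowedRoots) {
  const resolved = path.resolve(target);
  return allowedRoots.some((root) => {
    const rel = path.relative(root, resolved);
    const escapes = rel === ".." || rel.startsWith(".." + path.sep) || path.isAbsolute(rel);
    return !escapes;
  });
}

const roots = parseAllowedFolders("/path/to/folder1, /path/to/folder2");
console.log(isAllowed("/path/to/folder1/src/index.js", roots));
console.log(isAllowed("/path/to/folder1/../secrets", roots));
```

Comparing via path.relative rather than a string prefix avoids treating /path/to/folder10 as if it were inside /path/to/folder1.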

- Built with vanilla JavaScript and Node.js

- Uses the Vercel AI SDK for Claude integration

- Executes Probe commands via the probeTool.js module

- Renders markdown with Marked.js and syntax highlighting with Highlight.js

- Supports Mermaid.js for diagram generation and visualization

Probe currently supports:

- Rust (.rs)

- JavaScript / JSX (.js, .jsx)

- TypeScript / TSX (.ts, .tsx)

- Python (.py)

- Go (.go)

- C / C++ (.c, .h, .cpp, .cc, .cxx, .hpp, .hxx)

- Java (.java)

- Ruby (.rb)

- PHP (.php)

- Swift (.swift)

- C# (.cs)

- Markdown (.md, .markdown)
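The extension list above amounts to a lookup table. A minimal sketch, assuming exact, case-sensitive extension matching (the README does not say how Probe treats case) and using the hypothetical name languageFor:

```javascript
// Language names and extensions copied from the supported-languages list.
const LANGUAGES = {
  Rust: [".rs"],
  "JavaScript / JSX": [".js", ".jsx"],
  "TypeScript / TSX": [".ts", ".tsx"],
  Python: [".py"],
  Go: [".go"],
  "C / C++": [".c", ".h", ".cpp", ".cc", ".cxx", ".hpp", ".hxx"],
  Java: [".java"],
  Ruby: [".rb"],
  PHP: [".php"],
  Swift: [".swift"],
  "C#": [".cs"],
  Markdown: [".md", ".markdown"],
};

// Invert the table so each extension points at its language.
const BY_EXTENSION = new Map(
  Object.entries(LANGUAGES).flatMap(([name, exts]) => exts.map((ext) => [ext, name]))
);

// Returns the language name for a filename, or null when unsupported.
function languageFor(filename) {
  const dot = filename.lastIndexOf(".");
  if (dot < 0) return null;
  return BY_EXTENSION.get(filename.slice(dot)) ?? null;
}

console.log(languageFor("src/main.rs"));
console.log(languageFor("Makefile"));
```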

Probe combines fast file scanning with deep code parsing to provide highly relevant, context-aware results:

- Ripgrep Scanning: Probe uses ripgrep to quickly search across your files, identifying lines that match your query. Ripgrep's efficiency allows it to handle massive codebases at lightning speed.

- AST Parsing with Tree-sitter: For each file containing matches, Probe uses tree-sitter to parse the file into an Abstract Syntax Tree (AST). This process ensures that code blocks (functions, classes, structs) can be identified precisely.

- NLP & Re-Rankers: Next, Probe applies classical NLP methods (tokenization, stemming, and stopword removal) alongside re-rankers such as BM25, TF-IDF, or the hybrid approach (combining multiple ranking signals). This step elevates the most relevant code blocks to the top, which is especially helpful for AI-driven searches.

- Block Extraction: Probe identifies the smallest complete AST node containing each match (e.g., a full function or class). It extracts these code blocks and aggregates them into search results.

- Context for AI: Finally, these structured blocks can be returned directly or fed into an AI system. By providing the full context of each code segment, Probe helps AI models navigate large codebases and produce more accurate insights.
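The re-ranking step can be illustrated with a compact BM25 scorer over extracted blocks. This is a sketch of the standard Okapi BM25 formula, not Probe's ranking code: the stopword list is a tiny stand-in, stemming is left out, the parameter defaults k1 = 1.2 and b = 0.75 are the conventional ones rather than values taken from Probe, and the names tokenize and bm25Rank are hypothetical.

```javascript
// Tiny illustrative stopword list (an assumption, not Probe's list).
const STOPWORDS = new Set(["the", "a", "an", "of", "to", "in", "is", "and"]);

// Lowercases, splits on non-alphanumerics, and drops stopwords. No stemming.
function tokenize(text) {
  return text
    .toLowerCase()
    .split(/[^a-z0-9]+/)
    .filter((token) => token && !STOPWORDS.has(token));
}

// Ranks code blocks (strings) against a query with Okapi BM25.
function bm25Rank(blocks, query, k1 = 1.2, b = 0.75) {
  const docs = blocks.map(tokenize);
  const terms = tokenize(query);
  const n = docs.length;
  const avgLen = docs.reduce((sum, d) => sum + d.length, 0) / (n || 1);

  const scored = blocks.map((block, i) => {
    const doc = docs[i];
    let score = 0;
    for (const term of terms) {
      const freq = doc.filter((t) => t === term).length;
      if (freq === 0) continue;
      const df = docs.filter((d) => d.includes(term)).length;
      const idf = Math.log(1 + (n - df + 0.5) / (df + 0.5));
      // Length normalization: longer-than-average blocks are damped.
      const norm = 1 - b + b * (doc.length / avgLen);
      score += (idf * freq * (k1 + 1)) / (freq + k1 * norm);
    }
    return { block, score };
  });
  return scored.sort((x, y) => y.score - x.score);
}

const blocks = [
  "fn handle_error(err: Error) { log the error }",
  "fn parse_config(path: &str) { read the file }",
  "fn retry() { handle error and retry }",
];
for (const { block, score } of bm25Rank(blocks, "error handling")) {
  console.log(score.toFixed(3), block);
}
```

Because the sketch has no stemmer, "handling" does not match "handle"; the ranking here comes from "error" alone, which shows why the stemming step described above matters for recall.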

- Tree-sitter Grammar: In Cargo.toml, add the tree-sitter parser for the new language.

- Language Module: Create a new file in src/language/ for parsing logic.

- Implement Language Trait: Adapt the parse method for the new language constructs.

- Factory Update: Register your new language in Probe's detection mechanism.

Probe uses GitHub Actions for multi-platform builds and releases.

- Update Cargo.toml with the new version.

- Create a new Git tag:

git tag -a vX.Y.Z -m "Release vX.Y.Z"
git push origin vX.Y.Z

- GitHub Actions will build, package, and draft a new release with checksums.

Each release includes:

- Linux binary (x86_64)

- macOS binaries (x86_64 and aarch64)

- Windows binaries (x86_64 and aarch64)

- SHA256 checksums

We believe that local, privacy-focused, semantic code search is essential for the future of AI-assisted development. Probe is built to empower developers and AI alike to navigate and comprehend large codebases more effectively.

For questions or contributions, please open an issue on GitHub. Happy coding—and searching!


Similar Open Source Tools


TranslateBookWithLLM

TranslateBookWithLLM is a Python application designed for large-scale text translation, such as entire books (.EPUB), subtitle files (.SRT), and plain text. It leverages local LLMs via the Ollama API or Gemini API. The tool offers both a web interface for ease of use and a command-line interface for advanced users. It supports multiple format translations, provides a user-friendly browser-based interface, CLI support for automation, multiple LLM providers including local Ollama models and Google Gemini API, and Docker support for easy deployment.

CodeRAG

CodeRAG is an AI-powered code retrieval and assistance tool that combines Retrieval-Augmented Generation (RAG) with AI to provide intelligent coding assistance. It indexes your entire codebase for contextual suggestions based on your complete project, offering real-time indexing, semantic code search, and contextual AI responses. The tool monitors your code directory, generates embeddings for Python files, stores them in a FAISS vector database, matches user queries against the code database, and sends retrieved code context to GPT models for intelligent responses. CodeRAG also features a Streamlit web interface with a chat-like experience for easy usage.

Groqqle

Groqqle 2.1 is a revolutionary, free AI web search and API that instantly returns ORIGINAL content derived from source articles, websites, videos, and even foreign language sources, for ANY target market of ANY reading comprehension level! It combines the power of large language models with advanced web and news search capabilities, offering a user-friendly web interface, a robust API, and now a powerful Groqqle_web_tool for seamless integration into your projects. Developers can instantly incorporate Groqqle into their applications, providing a powerful tool for content generation, research, and analysis across various domains and languages.

claudian

Claudian is an Obsidian plugin that embeds Claude Code as an AI collaborator in your vault. It provides full agentic capabilities, including file read/write, search, bash commands, and multi-step workflows. Users can leverage Claude Code's power to interact with their vault, analyze images, edit text inline, add custom instructions, create reusable prompt templates, extend capabilities with skills and agents, connect external tools via Model Context Protocol servers, control models and thinking budget, toggle plan mode, ensure security with permission modes and vault confinement, and interact with Chrome. The plugin requires Claude Code CLI, Obsidian v1.8.9+, Claude subscription/API or custom model provider, and desktop platforms (macOS, Linux, Windows).

stenoai

StenoAI is an AI-powered meeting intelligence tool that allows users to record, transcribe, summarize, and query meetings using local AI models. It prioritizes privacy by processing data entirely on the user's device. The tool offers multiple AI models optimized for different use cases, making it ideal for healthcare, legal, and finance professionals with confidential data needs. StenoAI also features a macOS desktop app with a user-friendly interface, making it convenient for users to access its functionalities. The project is open-source and not affiliated with any specific company, emphasizing its focus on meeting-notes productivity and community collaboration.

rkllama

RKLLama is a server and client tool designed for running and interacting with LLM models optimized for Rockchip RK3588(S) and RK3576 platforms. It allows models to run on the NPU, with features such as running models on NPU, partial Ollama API compatibility, pulling models from Huggingface, API REST with documentation, dynamic loading/unloading of models, inference requests with streaming modes, simplified model naming, CPU model auto-detection, and optional debug mode. The tool supports Python 3.8 to 3.12 and has been tested on Orange Pi 5 Pro and Orange Pi 5 Plus with specific OS versions.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

WebAI-to-API

This project implements a web API that offers a unified interface to Google Gemini and Claude 3. It provides a self-hosted, lightweight, and scalable solution for accessing these AI models through a streaming API. The API supports both Claude and Gemini models, allowing users to interact with them in real-time. The project includes a user-friendly web UI for configuration and documentation, making it easy to get started and explore the capabilities of the API.

CyberStrikeAI

CyberStrikeAI is an AI-native security testing platform built in Go that integrates 100+ security tools, an intelligent orchestration engine, role-based testing with predefined security roles, a skills system with specialized testing skills, and comprehensive lifecycle management capabilities. It enables end-to-end automation from conversational commands to vulnerability discovery, attack-chain analysis, knowledge retrieval, and result visualization, delivering an auditable, traceable, and collaborative testing environment for security teams. The platform features an AI decision engine with OpenAI-compatible models, native MCP implementation with various transports, prebuilt tool recipes, large-result pagination, attack-chain graph, password-protected web UI, knowledge base with vector search, vulnerability management, batch task management, role-based testing, and skills system.

routilux

Routilux is a powerful event-driven workflow orchestration framework designed for building complex data pipelines and workflows effortlessly. It offers features like event queue architecture, flexible connections, built-in state management, robust error handling, concurrent execution, persistence & recovery, and simplified API. Perfect for tasks such as data pipelines, API orchestration, event processing, workflow automation, microservices coordination, and LLM agent workflows.

unity-mcp

MCP for Unity is a tool that acts as a bridge, enabling AI assistants to interact with the Unity Editor via a local MCP Client. Users can instruct their LLM to manage assets, scenes, scripts, and automate tasks within Unity. The tool offers natural language control, powerful tools for asset management, scene manipulation, and automation of workflows. It is extensible and designed to work with various MCP Clients, providing a range of functions for precise text edits, script management, GameObject operations, and more.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

mcp-pointer

MCP Pointer is a local tool that combines an MCP Server with a Chrome Extension to allow users to visually select DOM elements in the browser and make textual context available to agentic coding tools like Claude Code. It bridges between the browser and AI tools via the Model Context Protocol, enabling real-time communication and compatibility with various AI tools. The tool extracts detailed information about selected elements, including text content, CSS properties, React component detection, and more, making it a valuable asset for developers working with AI-powered web development.

AIClient-2-API

AIClient-2-API is a versatile and lightweight API proxy designed for developers, providing ample free API request quotas and comprehensive support for various mainstream large models like Gemini, Qwen Code, Claude, etc. It converts multiple backend APIs into standard OpenAI format interfaces through a Node.js HTTP server. The project adopts a modern modular architecture, supports strategy and adapter patterns, comes with complete test coverage and health check mechanisms, and is ready to use after 'npm install'. By easily switching model service providers in the configuration file, any OpenAI-compatible client or application can seamlessly access different large model capabilities through the same API address, eliminating the hassle of maintaining multiple sets of configurations for different services and dealing with incompatible interfaces.

uLoopMCP

uLoopMCP is a Unity integration tool designed to let AI drive your Unity project forward with minimal human intervention. It provides a 'self-hosted development loop' where an AI can compile, run tests, inspect logs, and fix issues using tools like compile, run-tests, get-logs, and clear-console. It also allows AI to operate the Unity Editor itself—creating objects, calling menu items, inspecting scenes, and refining UI layouts from screenshots via tools like execute-dynamic-code, execute-menu-item, and capture-window. The tool enables AI-driven development loops to run autonomously inside existing Unity projects.

For similar tasks

probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe's features include AI-friendly code extraction, fully local operation without external APIs, fast scanning of large codebases, accurate code structure parsing, re-rankers and NLP methods for better search results, multi-language support, and the flexibility to run as a CLI tool, an MCP server, or an interactive AI chat.

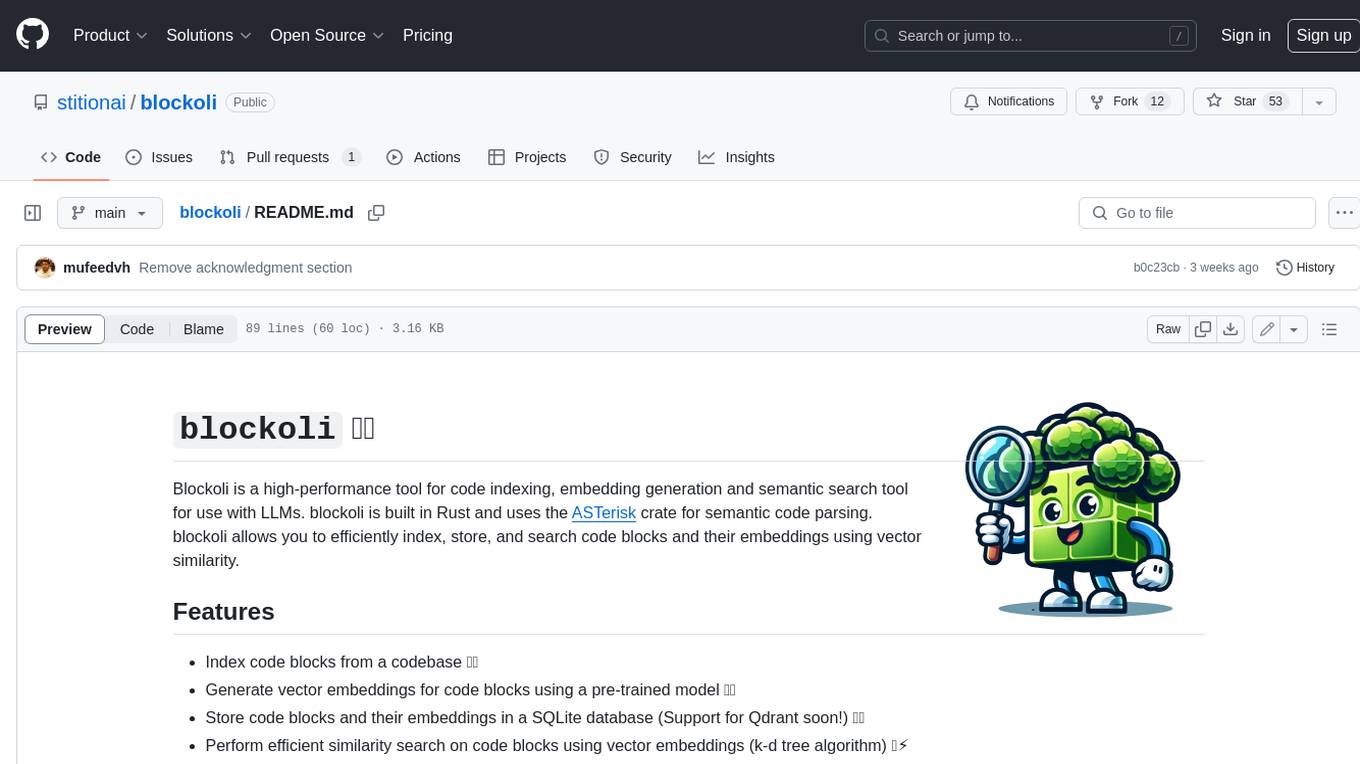

blockoli

Blockoli is a high-performance tool for code indexing, embedding generation, and semantic search, intended for use with LLMs. It is built in Rust and uses the ASTerisk crate for semantic code parsing. Blockoli lets you efficiently index, store, and search code blocks and their embeddings using vector similarity. Key features include indexing code blocks from a codebase, generating vector embeddings for code blocks using a pre-trained model, storing code blocks and their embeddings in a SQLite database, performing efficient similarity search on code blocks using vector embeddings, and providing a REST API for easy integration with other tools and platforms. Its Rust implementation makes it fast and memory-efficient.
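The index, store, and search flow described above can be sketched in a few lines of Python. This is only an illustration of the general technique, not Blockoli's implementation or API: the table layout and function names are hypothetical, and a toy bag-of-words vector stands in for the pre-trained embedding model.

```python
import json
import math
import re
import sqlite3
from collections import Counter


def embed(text: str) -> dict:
    """Toy stand-in for an embedding model: a sparse bag-of-words vector."""
    return dict(Counter(re.findall(r"[a-z_]+", text.lower())))


def cosine(a: dict, b: dict) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * b.get(token, 0) for token, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def index_blocks(conn: sqlite3.Connection, blocks: list) -> None:
    """Store each code block next to its embedding (hypothetical schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS blocks "
        "(id INTEGER PRIMARY KEY, code TEXT, embedding TEXT)"
    )
    conn.executemany(
        "INSERT INTO blocks (code, embedding) VALUES (?, ?)",
        [(block, json.dumps(embed(block))) for block in blocks],
    )
    conn.commit()


def search(conn: sqlite3.Connection, query: str, k: int = 1) -> list:
    """Return the k stored code blocks most similar to the query."""
    query_vec = embed(query)
    rows = conn.execute("SELECT code, embedding FROM blocks").fetchall()
    scored = [(cosine(query_vec, json.loads(emb)), code) for code, emb in rows]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [code for _, code in scored[:k]]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    index_blocks(conn, [
        "def add(a, b): return a + b",
        "def read_file(path): return open(path).read()",
    ])
    print(search(conn, "read_file path"))
```

A real system would swap `embed` for a learned model and replace the full-table scan with an approximate nearest-neighbor index once the number of blocks grows.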

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM, and aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries, and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.