ryoma

Common AI agent framework solving your data problems

Stars: 130

Ryoma is an AI-powered data agent framework that offers a comprehensive solution for data analysis, engineering, and visualization. It builds on Langchain, Reflex, Apache Arrow, Jupyter AI Magics, Amundsen, Ibis, and Feast to integrate language models, build interactive web applications, handle in-memory data efficiently, work with AI models, and manage machine learning features in production. Ryoma supports data sources such as Snowflake, SQLite, BigQuery, Postgres, and MySQL, and engines such as Apache Spark and Apache Flink. Users can connect to databases, run SQL queries, and interact with data and AI models through a UI called Ryoma Lab.

README:

Ryoma is an AI-powered data agent framework: a comprehensive solution for data analysis, engineering, and visualization.

Our platform leverages a combination of cutting-edge technologies and frameworks:

- Langchain: Facilitates the seamless integration of language models into application workflows, significantly enhancing AI interaction capabilities.

- Reflex: An open-source framework for quickly building interactive web applications in pure Python.

- Apache Arrow: A cross-language development platform for in-memory data that specifies a standardized language-independent columnar memory format for flat and hierarchical data, organized for efficient analytic operations on modern hardware like CPUs and GPUs.

- Jupyter AI Magics: A JupyterLab extension that provides a set of magics for working with AI models.

- Amundsen: A data discovery and metadata platform that helps users discover, understand, and trust the data they use.

- Ibis: A Python data analysis framework that provides a pandas-like API for analytics on large datasets.

- Feast: An operational feature store for managing and serving machine learning features to models in production.

Install the package using pip:

pip install ryoma_ai

Or with extra dependencies:

pip install ryoma_ai[snowflake]

Below is an example of using SqlAgent to connect to a PostgreSQL database and ask a question. You can read more details in the documentation.

from ryoma_ai.agent.sql import SqlAgent
from ryoma_ai.datasource.postgresql import PostgreSqlDataSource

# Connect to a PostgreSQL catalog
datasource = PostgreSqlDataSource("postgresql://user:password@localhost:5432/dbname")

# Create a SQL agent
sql_agent = SqlAgent("gpt-3.5-turbo").add_datasource(datasource)

# Ask the agent a question
sql_agent.stream("I want to get the top 5 customers which making the most purchases", display=True)

The SQL agent will try to run the tool as shown below:

================================ Human Message =================================

I want to get the top 5 customers which making the most purchases

================================== Ai Message ==================================

Tool Calls:

sql_database_query (call_mWCPB3GQGOTLYsvp21DGlpOb)

Call ID: call_mWCPB3GQGOTLYsvp21DGlpOb

Args:

query: SELECT C.C_NAME, SUM(L.L_EXTENDEDPRICE) AS TOTAL_PURCHASES FROM CUSTOMER C JOIN ORDERS O ON C.C_CUSTKEY = O.O_CUSTKEY JOIN LINEITEM L ON O.O_ORDERKEY = L.L_ORDERKEY GROUP BY C.C_NAME ORDER BY TOTAL_PURCHASES DESC LIMIT 5

result_format: pandas

Continue to run the tool with the following code:

sql_agent.stream(tool_mode=ToolMode.ONCE)

After the tool runs, the output will look like this:

================================== Ai Message ==================================

The top 5 customers who have made the most purchases are as follows:

1. Customer#000143500 - Total Purchases: $7,154,828.98

2. Customer#000095257 - Total Purchases: $6,645,071.02

3. Customer#000087115 - Total Purchases: $6,528,332.52

4. Customer#000134380 - Total Purchases: $6,405,556.97

5. Customer#000103834 - Total Purchases: $6,397,480.12
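To see what the generated query computes without a database server or an LLM, the sketch below runs the same SQL against a small in-memory SQLite database. This is only an illustration of the query logic and does not use Ryoma: the table contents and customer names are made-up toy data, and the column set is trimmed to the columns the query references.

```python
import sqlite3

# Toy stand-ins for the CUSTOMER, ORDERS and LINEITEM tables named in the query.
# All rows here are invented for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE CUSTOMER (C_CUSTKEY INTEGER PRIMARY KEY, C_NAME TEXT);
    CREATE TABLE ORDERS (O_ORDERKEY INTEGER PRIMARY KEY, O_CUSTKEY INTEGER);
    CREATE TABLE LINEITEM (L_ORDERKEY INTEGER, L_EXTENDEDPRICE REAL);
    INSERT INTO CUSTOMER VALUES (1, 'Customer#1'), (2, 'Customer#2'), (3, 'Customer#3');
    INSERT INTO ORDERS VALUES (10, 1), (11, 1), (20, 2), (30, 3);
    INSERT INTO LINEITEM VALUES (10, 100.0), (11, 50.0), (20, 300.0), (30, 25.0), (30, 25.0);
    """
)

# The query from the tool call above, reformatted across lines.
QUERY = """
SELECT C.C_NAME, SUM(L.L_EXTENDEDPRICE) AS TOTAL_PURCHASES
FROM CUSTOMER C
JOIN ORDERS O ON C.C_CUSTKEY = O.O_CUSTKEY
JOIN LINEITEM L ON O.O_ORDERKEY = L.L_ORDERKEY
GROUP BY C.C_NAME
ORDER BY TOTAL_PURCHASES DESC
LIMIT 5
"""

# Each row is (customer name, summed line-item price), largest total first.
top_customers = conn.execute(QUERY).fetchall()
for name, total in top_customers:
    print(f"{name}: {total:.2f}")
```

The two joins attach every line item to its customer through the order, so the sum is taken over all line items a customer has ever ordered.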

Ryoma Lab is an application that lets you interact with your data and AI models through a UI. It is built with Reflex.

- Create the Ryoma Lab configuration file `rxconfig.py` in your project:

import logging

import reflex as rx
from reflex.constants import LogLevel

config = rx.Config(
    app_name="ryoma_lab",
    loglevel=LogLevel.INFO,
)

# Set up basic configuration for logging
logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(levelname)s - %(message)s")

- You can start Ryoma Lab by running the following command:

ryoma_lab run

Ryoma Lab will be available at http://localhost:3000.

Model providers are supported through Jupyter AI Magics. Ensure the corresponding environment variables are set before using the Ryoma agent.

| Provider | Provider ID | Environment variable(s) | Python package(s) |
|---|---|---|---|
| AI21 | ai21 | AI21_API_KEY | ai21 |
| Anthropic | anthropic | ANTHROPIC_API_KEY | langchain-anthropic |
| Anthropic (playground) | anthropic-playground | ANTHROPIC_API_KEY | langchain-anthropic |
| Bedrock | bedrock | N/A | boto3 |
| Bedrock (playground) | bedrock-playground | N/A | boto3 |
| Cohere | cohere | COHERE_API_KEY | cohere |
| ERNIE-Bot | qianfan | QIANFAN_AK, QIANFAN_SK | qianfan |
| Gemini | gemini | GOOGLE_API_KEY | langchain-google-genai |
| GPT4All | gpt4all | N/A | gpt4all |
| Hugging Face Hub | huggingface_hub | HUGGINGFACEHUB_API_TOKEN | huggingface_hub, ipywidgets, pillow |
| NVIDIA | nvidia-playground | NVIDIA_API_KEY | langchain_nvidia_ai_endpoints |
| OpenAI | openai | OPENAI_API_KEY | langchain-openai |
| OpenAI (playground) | openai-playground | OPENAI_API_KEY | langchain-openai |
| SageMaker | sagemaker-endpoint | N/A | boto3 |
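A quick way to confirm the environment is ready before creating an agent is to check the variables from the table above. The sketch below is a standalone helper and not part of Ryoma or Jupyter AI Magics: the names `REQUIRED_ENV_VARS` and `missing_env_vars` are hypothetical, and the mapping is simply copied from the table, with an empty list for providers marked N/A.

```python
import os

# Environment variables per provider ID, copied from the table above.
# Providers listed as N/A in the table map to an empty list.
REQUIRED_ENV_VARS = {
    "ai21": ["AI21_API_KEY"],
    "anthropic": ["ANTHROPIC_API_KEY"],
    "anthropic-playground": ["ANTHROPIC_API_KEY"],
    "bedrock": [],
    "bedrock-playground": [],
    "cohere": ["COHERE_API_KEY"],
    "qianfan": ["QIANFAN_AK", "QIANFAN_SK"],
    "gemini": ["GOOGLE_API_KEY"],
    "gpt4all": [],
    "huggingface_hub": ["HUGGINGFACEHUB_API_TOKEN"],
    "nvidia-playground": ["NVIDIA_API_KEY"],
    "openai": ["OPENAI_API_KEY"],
    "openai-playground": ["OPENAI_API_KEY"],
    "sagemaker-endpoint": [],
}


def missing_env_vars(provider_id, environ=None):
    """Return the required variables that are unset or empty for a provider ID."""
    environ = os.environ if environ is None else environ
    required = REQUIRED_ENV_VARS[provider_id]
    return [name for name in required if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars("openai")
    if missing:
        print("Set these variables first:", ", ".join(missing))
    else:
        print("Environment looks ready for the openai provider.")
```

Passing a plain dictionary as `environ` makes the helper easy to exercise without touching the real process environment.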

- [x] Snowflake

- [x] Sqlite

- [x] BigQuery

- [x] Postgres

- [x] MySQL

- [x] File (CSV, Excel, Parquet, etc.)

- [ ] Redshift

- [ ] DynamoDB

- [x] Apache Spark

- [x] Apache Flink

- [ ] Presto

This project is licensed under the terms of the Apache Software License 2.0. See LICENSE for more details.


Similar Open Source Tools


env-doctor

Env-Doctor is a tool designed to diagnose and fix mismatched CUDA versions between NVIDIA driver, system toolkit, cuDNN, and Python libraries, providing a quick solution to the common frustration in GPU computing. It offers one-command diagnosis, safe install commands, extension library support, AI model compatibility checks, WSL2 GPU support, deep CUDA analysis, container validation, MCP server integration, and CI/CD readiness. The tool helps users identify and resolve environment issues efficiently, ensuring smooth operation of AI libraries on their GPUs.

nncase

nncase is a neural network compiler for AI accelerators that supports multiple inputs and outputs, static memory allocation, operators fusion and optimizations, float and quantized uint8 inference, post quantization from float model with calibration dataset, and flat model with zero copy loading. It can be installed via pip and supports TFLite, Caffe, and ONNX ops. Users can compile nncase from source using Ninja or make. The tool is suitable for tasks like image classification, object detection, image segmentation, pose estimation, and more.

LLaVA-OneVision-1.5

LLaVA-OneVision 1.5 is a fully open framework for democratized multimodal training, introducing a novel family of large multimodal models achieving state-of-the-art performance at lower cost through training on native resolution images. It offers superior performance across multiple benchmarks, high-quality data at scale with concept-balanced and diverse caption data, and an ultra-efficient training framework with support for MoE, FP8, and long sequence parallelization. The framework is fully open for community access and reproducibility, providing high-quality pre-training & SFT data, complete training framework & code, training recipes & configurations, and comprehensive training logs & metrics.

onnxruntime-server

ONNX Runtime Server is a server that provides TCP and HTTP/HTTPS REST APIs for ONNX inference. It aims to offer simple, high-performance ML inference and a good developer experience. Users can provide inference APIs for ONNX models without writing additional code by placing the models in the directory structure. Each session can choose between CPU or CUDA, analyze input/output, and provide Swagger API documentation for easy testing. Ready-to-run Docker images are available, making it convenient to deploy the server.

fittencode.nvim

Fitten Code AI Programming Assistant for Neovim provides fast completion using AI, asynchronous I/O, and support for various actions like document code, edit code, explain code, find bugs, generate unit test, implement features, optimize code, refactor code, start chat, and more. It offers features like accepting suggestions with Tab, accepting line with Ctrl + Down, accepting word with Ctrl + Right, undoing accepted text, automatic scrolling, and multiple HTTP/REST backends. It can run as a coc.nvim source or nvim-cmp source.

aiocron

aiocron is a Python library that provides crontab functionality for asyncio. It allows users to schedule functions to run at specific times using a decorator or as an object. Users can also await a crontab, use it as a sleep coroutine, and customize functions without decorator magic. aiocron has switched from croniter to cronsim for cron expression parsing since Dec 31, 2024.

turing

Viglet Turing is an enterprise search platform that combines semantic navigation, chatbots, and generative artificial intelligence. It offers integrations for authentication APIs, OCR, content indexing, CMS connectors, web crawling, database connectors, and file system indexing.

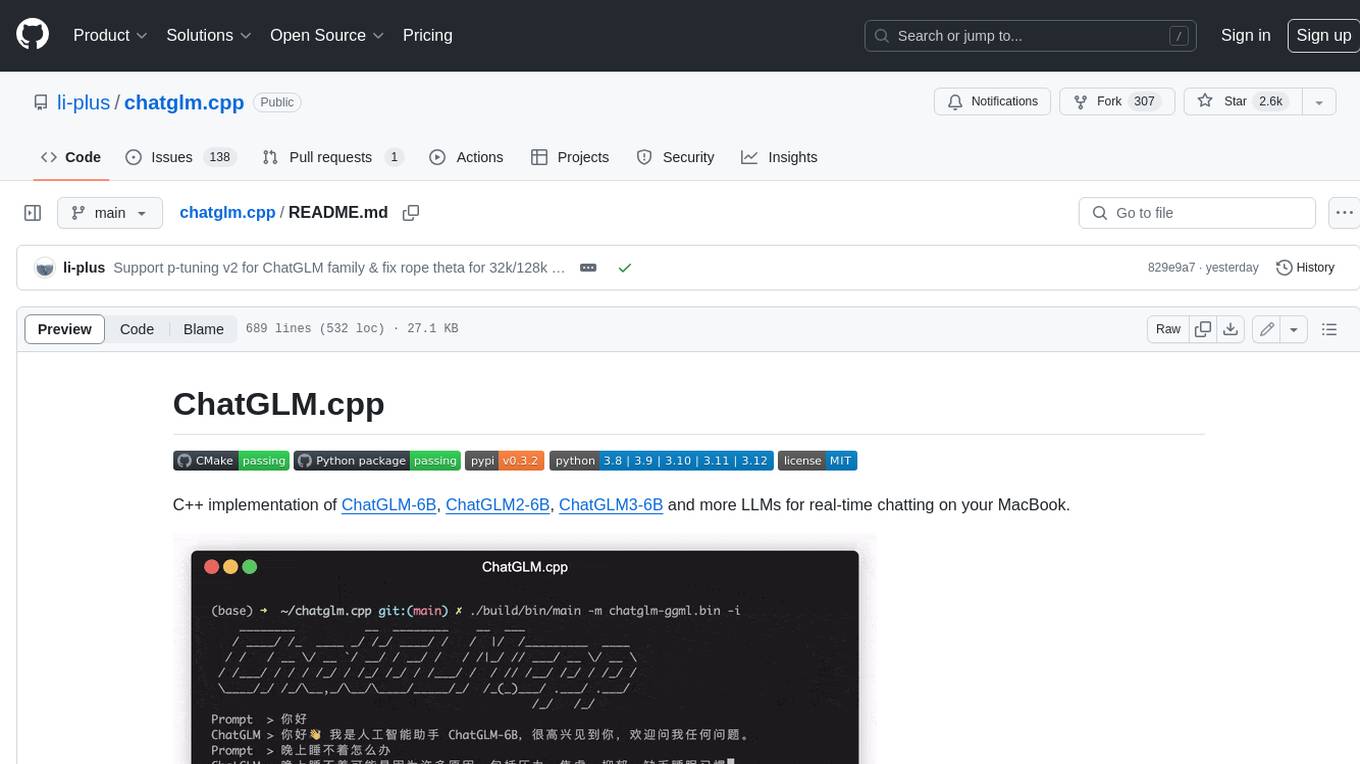

chatglm.cpp

ChatGLM.cpp is a C++ implementation of ChatGLM-6B, ChatGLM2-6B, ChatGLM3-6B and more LLMs for real-time chatting on your MacBook. It is based on ggml, working in the same way as llama.cpp. ChatGLM.cpp features accelerated memory-efficient CPU inference with int4/int8 quantization, optimized KV cache and parallel computing. It also supports P-Tuning v2 and LoRA finetuned models, streaming generation with typewriter effect, Python binding, web demo, api servers and more possibilities.

openai-scala-client

This is a no-nonsense async Scala client for OpenAI API supporting all the available endpoints and params including streaming, chat completion, vision, and voice routines. It provides a single service called OpenAIService that supports various calls such as Models, Completions, Chat Completions, Edits, Images, Embeddings, Batches, Audio, Files, Fine-tunes, Moderations, Assistants, Threads, Thread Messages, Runs, Run Steps, Vector Stores, Vector Store Files, and Vector Store File Batches. The library aims to be self-contained with minimal dependencies and supports API-compatible providers like Azure OpenAI, Azure AI, Anthropic, Google Vertex AI, Groq, Grok, Fireworks AI, OctoAI, TogetherAI, Cerebras, Mistral, Deepseek, Ollama, FastChat, and more.

openlrc

Open-Lyrics is a Python library that transcribes voice files using faster-whisper and translates/polishes the resulting text into `.lrc` files in the desired language using LLM, e.g. OpenAI-GPT, Anthropic-Claude. It offers well preprocessed audio to reduce hallucination and context-aware translation to improve translation quality. Users can install the library from PyPI or GitHub and follow the installation steps to set up the environment. The tool supports GUI usage and provides Python code examples for transcription and translation tasks. It also includes features like utilizing context and glossary for translation enhancement, pricing information for different models, and a list of todo tasks for future improvements.

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

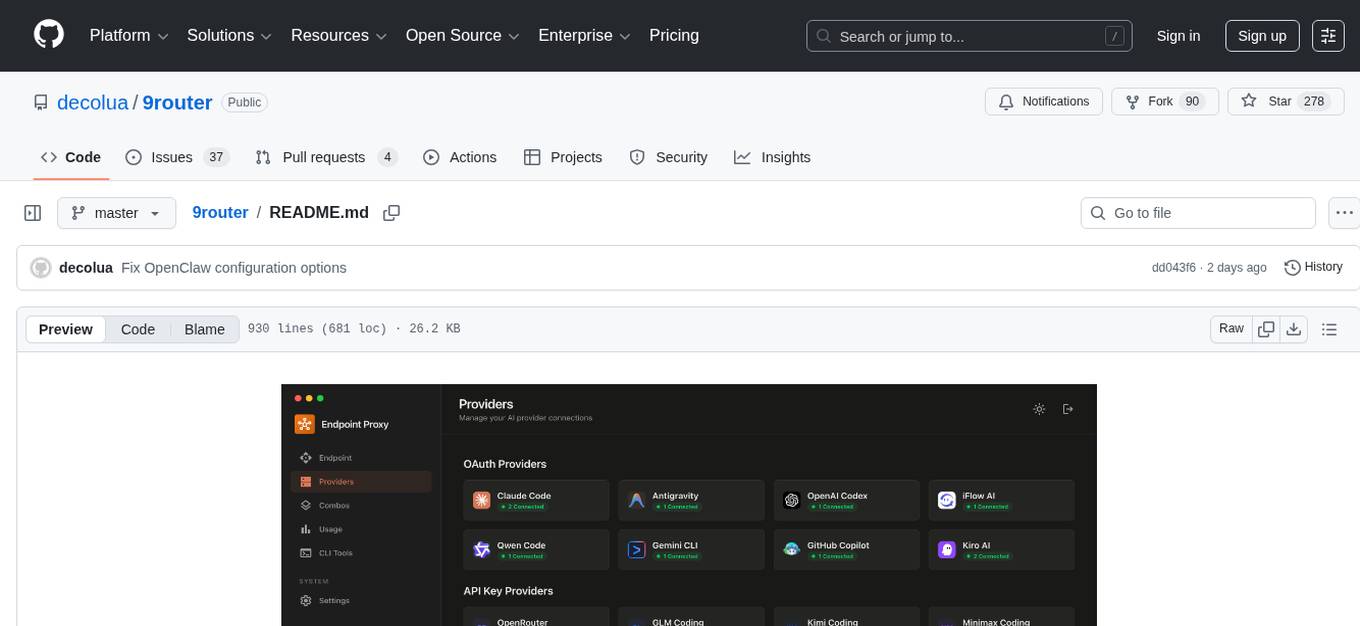

9router

9Router is a free AI router tool designed to help developers maximize their AI subscriptions, auto-route to free and cheap AI models with smart fallback, and avoid hitting limits and wasting money. It offers features like real-time quota tracking, format translation between OpenAI, Claude, and Gemini, multi-account support, auto token refresh, custom model combinations, request logging, cloud sync, usage analytics, and flexible deployment options. The tool supports various providers like Claude Code, Codex, Gemini CLI, GitHub Copilot, GLM, MiniMax, iFlow, Qwen, and Kiro, and allows users to create combos for different scenarios. Users can connect to the tool via CLI tools like Cursor, Claude Code, Codex, OpenClaw, and Cline, and deploy it on VPS, Docker, or Cloudflare Workers.

aiohttp

aiohttp is an async http client/server framework that supports both client and server side of HTTP protocol. It also supports both client and server Web-Sockets out-of-the-box and avoids Callback Hell. aiohttp provides a Web-server with middleware and pluggable routing.

mcp-context-forge

MCP Context Forge is a powerful tool for generating context-aware data for machine learning models. It provides functionalities to create diverse datasets with contextual information, enhancing the performance of AI algorithms. The tool supports various data formats and allows users to customize the context generation process easily. With MCP Context Forge, users can efficiently prepare training data for tasks requiring contextual understanding, such as sentiment analysis, recommendation systems, and natural language processing.

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. The tool includes tools for code generation and test input generation using various backends.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions
- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl
- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.