repopack

📦 Repopack is a powerful tool that packs your entire repository into a single, AI-friendly file. Perfect for when you need to feed your codebase to Large Language Models (LLMs) or other AI tools like Claude, ChatGPT, and Gemini.

Stars: 1656

Repopack is a powerful tool that packs your entire repository into a single, AI-friendly file. It optimizes your codebase for AI comprehension, is simple to use with customizable options, and respects Gitignore files for security. The tool generates a packed file with clear separators and AI-oriented explanations, making it ideal for use with Generative AI tools like Claude or ChatGPT. Repopack offers command line options, configuration settings, and multiple methods for setting ignore patterns to exclude specific files or directories during the packing process. It includes features like comment removal for supported file types and a security check using Secretlint to detect sensitive information in files.

README:


- AI-Optimized: Formats your codebase in a way that's easy for AI to understand and process.

- Token Counting: Provides token counts for each file and the entire repository, useful for LLM context limits.

- Simple to Use: You need just one command to pack your entire repository.

- Customizable: Easily configure what to include or exclude.

- Git-Aware: Automatically respects your .gitignore files.

- Security-Focused: Incorporates Secretlint for robust security checks to detect and prevent inclusion of sensitive information.

You can try Repopack instantly in your project directory without installation:

npx repopack

Or install globally for repeated use:

# Install using npm

npm install -g repopack

# Alternatively using yarn

yarn global add repopack

# Alternatively using Homebrew (macOS)

brew install repopack

# Then run in any project directory

repopack

That's it! Repopack will generate a repopack-output.txt file in your current directory, containing your entire repository in an AI-friendly format.

To pack your entire repository:

repopack

To pack a specific directory:

repopack path/to/directory

To pack specific files or directories using glob patterns:

repopack --include "src/**/*.ts,**/*.md"

To exclude specific files or directories:

repopack --ignore "**/*.log,tmp/"

To pack a remote repository:

repopack --remote https://github.com/yamadashy/repopack

# You can also use GitHub shorthand:

repopack --remote yamadashy/repopack

To initialize a new configuration file (repopack.config.json):

repopack --init

Once you have generated the packed file with Repopack, you can use it with Generative AI tools like Claude, ChatGPT, and Gemini. Here are some example prompts to get you started:

For a comprehensive code review and refactoring suggestions:

This file contains my entire codebase. Please review the overall structure and suggest any improvements or refactoring opportunities, focusing on maintainability and scalability.

To generate project documentation:

Based on the codebase in this file, please generate a detailed README.md that includes an overview of the project, its main features, setup instructions, and usage examples.

For generating test cases:

Analyze the code in this file and suggest a comprehensive set of unit tests for the main functions and classes. Include edge cases and potential error scenarios.

Evaluate code quality and adherence to best practices:

Review the codebase for adherence to coding best practices and industry standards. Identify areas where the code could be improved in terms of readability, maintainability, and efficiency. Suggest specific changes to align the code with best practices.

To get a high-level understanding of a library:

This file contains the entire codebase of a library. Please provide a comprehensive overview of the library, including its main purpose, key features, and overall architecture.

Feel free to modify these prompts based on your specific needs and the capabilities of the AI tool you're using.

Repopack generates a single file with clear separators between different parts of your codebase.

To enhance AI comprehension, the output file begins with an AI-oriented explanation, making it easier for AI models to understand the context and structure of the packed repository.

This file is a merged representation of the entire codebase, combining all repository files into a single document.

================================================================

File Summary

================================================================

(Metadata and usage AI instructions)

================================================================

Repository Structure

================================================================

src/
  cli/
    cliOutput.ts
    index.ts
  config/
    configLoader.ts

(...remaining directories)

================================================================

Repository Files

================================================================

================

File: src/index.js

================

// File contents here

================

File: src/utils.js

================

// File contents here

(...remaining files)

================================================================

Instruction

================================================================

(Custom instructions from `output.instructionFilePath`)
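The plain layout above is regular enough to split back into individual files. The following is a minimal Python sketch, not part of Repopack itself. It assumes file headers use a 16-character `=` rule and section headers a 64-character rule, as in the sample; `split_plain_output` is a hypothetical helper name.

```python
import re

# Assumption: a file header is a 16-char rule, "File: <path>", and another 16-char rule.
FILE_HEADER = re.compile(r"^={16}\nFile: (.+)\n={16}\n", re.MULTILINE)
# Assumption: top-level sections (e.g. "Instruction") are framed by 64-char rules.
SECTION_RULE = re.compile(r"^={64}$", re.MULTILINE)


def split_plain_output(text: str) -> dict[str, str]:
    """Map each packed file path to its contents (hypothetical helper)."""
    files: dict[str, str] = {}
    headers = list(FILE_HEADER.finditer(text))
    for index, header in enumerate(headers):
        # A file body runs until the next file header or the end of the text.
        end = headers[index + 1].start() if index + 1 < len(headers) else len(text)
        body = text[header.end():end]
        # Cut the body short if a new top-level section starts inside it.
        rule = SECTION_RULE.search(body)
        if rule:
            body = body[:rule.start()]
        files[header.group(1)] = body.strip("\n")
    return files
```

A file whose own contents contain a line of exactly 16 or 64 `=` characters would confuse this sketch, so treat it as a convenience for inspection rather than a reliable round trip.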

To generate output in XML format, use the --style xml option:

repopack --style xml

The XML format structures the content in a hierarchical manner:

This file is a merged representation of the entire codebase, combining all repository files into a single document.

<file_summary>

(Metadata and usage AI instructions)

</file_summary>

<repository_structure>

src/
  cli/
    cliOutput.ts
    index.ts

(...remaining directories)

</repository_structure>

<repository_files>

<file path="src/index.js">

// File contents here

</file>

(...remaining files)

</repository_files>

<instruction>

(Custom instructions from `output.instructionFilePath`)

</instruction>

For those interested in the potential of XML tags in AI contexts:

https://docs.anthropic.com/en/docs/build-with-claude/prompt-engineering/use-xml-tags

When your prompts involve multiple components like context, instructions, and examples, XML tags can be a game-changer. They help Claude parse your prompts more accurately, leading to higher-quality outputs.

This means that the XML output from Repopack is not just a different format, but potentially a more effective way to feed your codebase into AI systems for analysis, code review, or other tasks.
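The tag structure also makes the packed file easy to post-process. Below is a minimal Python sketch that pulls individual files out of XML-style output. It assumes each file is wrapped exactly as in the sample (`<file path="...">` on its own line, `</file>` on its own line) and uses a regular expression rather than an XML parser, because the packed file begins with a plain-text sentence and file contents may not be escaped. `split_xml_output` is a hypothetical helper, not a Repopack API.

```python
import re

# Assumption: the opening and closing tags each sit on their own line, as in the sample.
FILE_BLOCK = re.compile(r'<file path="([^"]+)">\n(.*?)\n</file>', re.DOTALL)


def split_xml_output(text: str) -> dict[str, str]:
    """Map each file path to its contents (hypothetical helper)."""
    return {match.group(1): match.group(2) for match in FILE_BLOCK.finditer(text)}
```

A source file that itself contains a line reading `</file>` would end its block early, so this is a sketch for quick inspection only.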

To generate output in Markdown format, use the --style markdown option:

repopack --style markdown

The Markdown format structures the content in a hierarchical manner:

This file is a merged representation of the entire codebase, combining all repository files into a single document.

# File Summary

(Metadata and usage AI instructions)

# Repository Structure

```

src/
  cli/
    cliOutput.ts
    index.ts

```

(...remaining directories)

# Repository Files

## File: src/index.js

```

// File contents here

```

(...remaining files)

# Instruction

(Custom instructions from `output.instructionFilePath`)

This format provides a clean, readable structure that is both human-friendly and easily parseable by AI systems.

- -v, --version: Show tool version

- -o, --output <file>: Specify the output file name

- --include <patterns>: List of include patterns (comma-separated)

- -i, --ignore <patterns>: Additional ignore patterns (comma-separated)

- -c, --config <path>: Path to a custom config file

- --style <style>: Specify the output style (plain, xml, markdown)

- --top-files-len <number>: Number of top files to display in the summary

- --output-show-line-numbers: Show line numbers in the output

- --remote <url>: Process a remote Git repository

- --verbose: Enable verbose logging
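When Repopack is driven from a script, the options listed above can be assembled into an argument list. The following Python sketch only builds the command; it uses the flags documented here and nothing else. `build_repopack_command` is a hypothetical helper, and the ordering of arguments is an assumption.

```python
import shlex
from typing import Optional, Sequence

VALID_STYLES = ("plain", "xml", "markdown")


def build_repopack_command(
    directory: Optional[str] = None,
    *,
    output: Optional[str] = None,
    include: Sequence[str] = (),
    ignore: Sequence[str] = (),
    style: Optional[str] = None,
    remote: Optional[str] = None,
    verbose: bool = False,
) -> list[str]:
    """Build an argv list for the repopack CLI (hypothetical helper)."""
    command = ["repopack"]
    if directory:
        command.append(directory)
    if output:
        command += ["--output", output]
    if include:
        # Patterns are passed as one comma-separated value.
        command += ["--include", ",".join(include)]
    if ignore:
        command += ["--ignore", ",".join(ignore)]
    if style:
        if style not in VALID_STYLES:
            raise ValueError(f"unknown style: {style}")
        command += ["--style", style]
    if remote:
        command += ["--remote", remote]
    if verbose:
        command.append("--verbose")
    return command


# Print a shell-quoted version for inspection.
print(shlex.join(build_repopack_command("src", include=["src/**/*.ts", "**/*.md"], style="xml")))
```

The resulting list can be handed to `subprocess.run` as-is, which avoids shell quoting problems with glob patterns.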

Examples:

repopack -o custom-output.txt

repopack -i "*.log,tmp" --verbose

repopack -c ./custom-config.json

repopack --style xml

repopack --remote https://github.com/user/repo.git

npx repopack src

To update a globally installed Repopack:

# Using npm

npm update -g repopack

# Using yarn

yarn global upgrade repopack

Using npx repopack is generally more convenient as it always uses the latest version.

Repopack supports processing remote Git repositories without the need for manual cloning. This feature allows you to quickly analyze any public Git repository with a single command.

To process a remote repository, use the --remote option followed by the repository URL:

repopack --remote https://github.com/user/repo.git

You can also use GitHub's shorthand format:

repopack --remote user/repo

Create a repopack.config.json file in your project root for custom configurations.

repopack --init

Here's an explanation of the configuration options:

| Option | Description | Default |
|---|---|---|
| `output.filePath` | The name of the output file | `"repopack-output.txt"` |
| `output.style` | The style of the output (plain, xml, markdown) | `"plain"` |
| `output.headerText` | Custom text to include in the file header | `null` |
| `output.instructionFilePath` | Path to a file containing detailed custom instructions | `null` |
| `output.removeComments` | Whether to remove comments from supported file types | `false` |
| `output.removeEmptyLines` | Whether to remove empty lines from the output | `false` |
| `output.showLineNumbers` | Whether to add line numbers to each line in the output | `false` |
| `output.topFilesLength` | Number of top files to display in the summary. If set to 0, no summary will be displayed | `5` |
| `include` | Patterns of files to include (using glob patterns) | `[]` |
| `ignore.useGitignore` | Whether to use patterns from the project's .gitignore file | `true` |
| `ignore.useDefaultPatterns` | Whether to use default ignore patterns | `true` |
| `ignore.customPatterns` | Additional patterns to ignore (using glob patterns) | `[]` |
| `security.enableSecurityCheck` | Whether to perform security checks on files | `true` |
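To illustrate how the defaults in the table combine with a partial config file, here is a minimal Python sketch. The default values are copied from the table; the recursive merge is an assumption about how a partial file could be resolved, not a description of Repopack's actual loader, and `merge_config` is a hypothetical name.

```python
import copy
import json

# Defaults as listed in the options table above.
DEFAULT_CONFIG = {
    "output": {
        "filePath": "repopack-output.txt",
        "style": "plain",
        "headerText": None,
        "instructionFilePath": None,
        "removeComments": False,
        "removeEmptyLines": False,
        "showLineNumbers": False,
        "topFilesLength": 5,
    },
    "include": [],
    "ignore": {
        "useGitignore": True,
        "useDefaultPatterns": True,
        "customPatterns": [],
    },
    "security": {"enableSecurityCheck": True},
}


def merge_config(defaults: dict, overrides: dict) -> dict:
    """Return defaults with overrides applied, merging nested objects key by key."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# A partial config, as it might appear in repopack.config.json.
user_config = json.loads('{"output": {"style": "xml"}, "ignore": {"customPatterns": ["**/*.log"]}}')
config = merge_config(DEFAULT_CONFIG, user_config)
```

Here `config["output"]["style"]` becomes `"xml"` while every option the file omits keeps its default.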

Example configuration:

{
  "output": {
    "filePath": "repopack-output.xml",
    "style": "xml",
    "headerText": "Custom header information for the packed file.",
    "removeComments": false,
    "removeEmptyLines": false,
    "showLineNumbers": false,
    "topFilesLength": 5
  },
  "include": ["**/*"],
  "ignore": {
    "useGitignore": true,
    "useDefaultPatterns": true,
    "customPatterns": ["additional-folder", "**/*.log"]
  },
  "security": {
    "enableSecurityCheck": true
  }
}

To create a global configuration file:

repopack --init --global

The global configuration file will be created in:

- Windows: `%LOCALAPPDATA%\Repopack\repopack.config.json`

- macOS/Linux: `$XDG_CONFIG_HOME/repopack/repopack.config.json` or `~/.config/repopack/repopack.config.json`

Note: Local configuration (if present) takes precedence over global configuration.
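The lookup described above can be sketched in a few lines of Python. This is an illustration under stated assumptions: the XDG variable is preferred when set and `~/.config` is the fallback, and a local file simply wins over a global one. `global_config_path` and `pick_config` are hypothetical helpers, not Repopack functions.

```python
from pathlib import PurePosixPath, PureWindowsPath
from typing import Mapping, Optional

CONFIG_NAME = "repopack.config.json"


def global_config_path(platform: str, env: Mapping[str, str], home: str) -> str:
    """Return the global config location for the given platform."""
    if platform == "win32":
        return str(PureWindowsPath(env["LOCALAPPDATA"]) / "Repopack" / CONFIG_NAME)
    # Assumption: fall back to ~/.config when XDG_CONFIG_HOME is unset or empty.
    base = env.get("XDG_CONFIG_HOME") or str(PurePosixPath(home) / ".config")
    return str(PurePosixPath(base) / "repopack" / CONFIG_NAME)


def pick_config(local_path: Optional[str], global_path: Optional[str]) -> Optional[str]:
    """Local configuration, if present, takes precedence over global configuration."""
    return local_path if local_path is not None else global_path
```

In real use the arguments would come from `sys.platform`, `os.environ`, and `str(pathlib.Path.home())`; they are passed in explicitly here so the logic can be exercised on any machine.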

Repopack now supports specifying files to include using glob patterns. This allows for more flexible and powerful file selection:

- Use `**/*.js` to include all JavaScript files in any directory

- Use `src/**/*` to include all files within the `src` directory and its subdirectories

- Combine multiple patterns like `["src/**/*.js", "**/*.md"]` to include JavaScript files in `src` and all Markdown files
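To make the pattern semantics above concrete, here is a small Python sketch that translates a glob into a regular expression and filters a list of paths. It is a simplified approximation (only `**`, `*`, and `?` are handled) and is not the matcher Repopack uses; `glob_to_regex` and `select_files` are hypothetical names.

```python
import re


def glob_to_regex(pattern: str) -> re.Pattern:
    """Translate a simplified glob into a regex anchored at both ends."""
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**/", i):
            parts.append(r"(?:.*/)?")  # zero or more leading directories
            i += 3
        elif pattern.startswith("**", i):
            parts.append(r".*")  # anything, including slashes
            i += 2
        elif pattern[i] == "*":
            parts.append(r"[^/]*")  # anything within one path segment
            i += 1
        elif pattern[i] == "?":
            parts.append(r"[^/]")  # one character within a segment
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts) + r"\Z")


def select_files(paths: list[str], include: list[str]) -> list[str]:
    """Keep the paths that match at least one include pattern."""
    regexes = [glob_to_regex(p) for p in include]
    return [path for path in paths if any(r.match(path) for r in regexes)]


paths = ["src/app.js", "README.md", "src/lib/util.js", "test/a.js", "docs/x.md"]
selected = select_files(paths, ["src/**/*.js", "**/*.md"])
```

With these inputs, `selected` keeps the two JavaScript files under `src` and both Markdown files, and drops `test/a.js`.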

Repopack offers multiple methods to set ignore patterns for excluding specific files or directories during the packing process:

- .gitignore: By default, patterns listed in your project's `.gitignore` file are used. This behavior can be controlled with the `ignore.useGitignore` setting.

- Default patterns: Repopack includes a default list of commonly excluded files and directories (e.g., node_modules, .git, binary files). This feature can be controlled with the `ignore.useDefaultPatterns` setting. Please see defaultIgnore.ts for more details.

- .repopackignore: You can create a `.repopackignore` file in your project root to define Repopack-specific ignore patterns. This file follows the same format as `.gitignore`.

- Custom patterns: Additional ignore patterns can be specified using the `ignore.customPatterns` option in the configuration file. You can overwrite this setting with the `-i, --ignore` command line option.

Priority Order (from highest to lowest):

- Custom patterns (`ignore.customPatterns`)

- `.repopackignore`

- `.gitignore` (if `ignore.useGitignore` is true)

- Default patterns (if `ignore.useDefaultPatterns` is true)

This approach allows for flexible file exclusion configuration based on your project's needs. It helps optimize the size of the generated pack file by ensuring the exclusion of security-sensitive files and large binary files, while preventing the leakage of confidential information.

Note: Binary files are not included in the packed output by default, but their paths are listed in the "Repository Structure" section of the output file. This provides a complete overview of the repository structure while keeping the packed file efficient and text-based.
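One way to picture the priority order above is as an ordered merge of the enabled sources. The Python sketch below assumes that priority only determines ordering and that the effective ignore list is the union of all enabled sources; the README does not spell out the exact semantics, so treat this as an illustration. `collect_ignore_patterns` is a hypothetical helper.

```python
def collect_ignore_patterns(
    config: dict,
    repopackignore: list[str],
    gitignore: list[str],
    default_patterns: list[str],
) -> list[str]:
    """Combine ignore sources from highest to lowest priority, without duplicates."""
    ignore = config.get("ignore", {})
    sources = [
        ignore.get("customPatterns", []),
        repopackignore,
        gitignore if ignore.get("useGitignore", True) else [],
        default_patterns if ignore.get("useDefaultPatterns", True) else [],
    ]
    seen: set[str] = set()
    ordered: list[str] = []
    for source in sources:
        for pattern in source:
            if pattern not in seen:
                seen.add(pattern)
                ordered.append(pattern)
    return ordered


patterns = collect_ignore_patterns(
    {"ignore": {"useGitignore": False, "customPatterns": ["**/*.log"]}},
    repopackignore=["tmp/"],
    gitignore=["dist/"],
    default_patterns=["node_modules/**", "**/*.log"],
)
```

Because `useGitignore` is false in this call, `dist/` is left out, and the duplicate `**/*.log` appears only once, at its highest-priority position.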

The output.instructionFilePath option allows you to specify a separate file containing detailed instructions or context about your project. This allows AI systems to understand the specific context and requirements of your project, potentially leading to more relevant and tailored analysis or suggestions.

Here's an example of how you might use this feature:

- Create a file named repopack-instruction.md in your project root:

# Coding Guidelines

- Follow the Airbnb JavaScript Style Guide

- Suggest splitting files into smaller, focused units when appropriate

- Add comments for non-obvious logic. Keep all text in English

- All new features should have corresponding unit tests

# Generate Comprehensive Output

- Include all content without abbreviation, unless specified otherwise

- Optimize for handling large codebases while maintaining output quality

- In your repopack.config.json, add the instructionFilePath option alongside your other output options:

{
  "output": {
    "instructionFilePath": "repopack-instruction.md"
  }
}

When Repopack generates the output, it will include the contents of repopack-instruction.md in a dedicated section.

Note: The instruction content is appended at the end of the output file. This placement can be particularly effective for AI systems. For those interested in understanding why this might be beneficial, Anthropic provides some insights in their documentation:

https://docs.anthropic.com/en/docs/build-with-claude/prompt-engineering/long-context-tips

Put long-form data at the top: Place your long documents and inputs (~20K+ tokens) near the top of your prompt, above your query, instructions, and examples. This can significantly improve Claude's performance across all models. Queries at the end can improve response quality by up to 30% in tests, especially with complex, multi-document inputs.

When output.removeComments is set to true, Repopack will attempt to remove comments from supported file types. This feature can help reduce the size of the output file and focus on the essential code content.

Supported languages include:

HTML, CSS, JavaScript, TypeScript, Vue, Svelte, Python, PHP, Ruby, C, C#, Java, Go, Rust, Swift, Kotlin, Dart, Shell, and YAML.

Note: The comment removal process is conservative to avoid accidentally removing code. In complex cases, some comments might be retained.
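As an illustration of what conservative comment removal can look like, the Python sketch below strips comments from Python source using the standard `tokenize` module, so that `#` characters inside string literals are never touched. This is a stand-in technique chosen for the example, not Repopack's implementation, and `strip_python_comments` is a hypothetical name.

```python
import io
import tokenize


def strip_python_comments(source: str) -> str:
    """Remove comment tokens from Python source, leaving all other tokens in place."""
    readline = io.StringIO(source).readline
    kept = [
        token
        for token in tokenize.generate_tokens(readline)
        if token.type != tokenize.COMMENT
    ]
    # untokenize preserves token positions, so trailing spaces may remain
    # where a comment used to be.
    return tokenize.untokenize(kept)


example = "x = 1  # note\n# full-line comment\ny = '# not a comment'\n"
stripped = strip_python_comments(example)
```

Working at the token level rather than with line-based text matching is what keeps the string literal on the last line intact.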

Repopack includes a security check feature that uses Secretlint to detect potentially sensitive information in your files. This feature helps you identify possible security risks before sharing your packed repository.

The security check results will be displayed in the CLI output after the packing process is complete. If any suspicious files are detected, you'll see a list of these files along with a warning message.

Example output:

🔍 Security Check:

──────────────────

2 suspicious file(s) detected:

1. src/utils/test.txt

2. tests/utils/secretLintUtils.test.ts

Please review these files for potentially sensitive information.
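To give a feel for the kind of check involved, here is a deliberately tiny Python sketch that flags files containing secret-like strings and prints a report shaped like the one above. It is not Secretlint and does not reproduce its rules; the two patterns and the names `SUSPICIOUS_PATTERNS`, `find_suspicious_files`, and `format_report` are illustrative assumptions.

```python
import re

# Illustrative patterns only; Secretlint ships its own, far more thorough rule sets.
SUSPICIOUS_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def find_suspicious_files(files: dict[str, str]) -> list[str]:
    """Return the paths whose contents match any suspicious pattern."""
    return [
        path
        for path, content in files.items()
        if any(pattern.search(content) for pattern in SUSPICIOUS_PATTERNS.values())
    ]


def format_report(paths: list[str]) -> str:
    """Render a short report similar in shape to the CLI output above."""
    if not paths:
        return "No suspicious files detected."
    lines = [f"{len(paths)} suspicious file(s) detected:"]
    lines += [f"{number}. {path}" for number, path in enumerate(paths, start=1)]
    lines.append("Please review these files for potentially sensitive information.")
    return "\n".join(lines)
```

Pattern matching of this kind produces false positives (test fixtures are a common source), which is why the result is a list for human review rather than an automatic block.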

By default, Repopack's security check feature is enabled. You can disable it by setting security.enableSecurityCheck to false in your configuration file:

{

"security": {

"enableSecurityCheck": false

}

}

We welcome contributions from the community! To get started, please refer to our Contributing Guide.

This project is licensed under the MIT License.


Similar Open Source Tools


CodeGPT

CodeGPT is a CLI tool written in Go that helps you write git commit messages or do a code review brief using ChatGPT AI (gpt-3.5-turbo, gpt-4 model) and automatically installs a git prepare-commit-msg hook. It supports Azure OpenAI Service or OpenAI API, conventional commits specification, Git prepare-commit-msg Hook, customizing the number of lines of context in diffs, excluding files from the git diff command, translating commit messages into different languages, using socks or custom network HTTP proxies, specifying model lists, and doing brief code reviews.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

dvc

DVC, or Data Version Control, is a command-line tool and VS Code extension that helps you develop reproducible machine learning projects. With DVC, you can version your data and models, iterate fast with lightweight pipelines, track experiments in your local Git repo, compare any data, code, parameters, model, or performance plots, and share experiments and automatically reproduce anyone's experiment.

llm.nvim

llm.nvim is a plugin for Neovim that enables code completion using LLM models. It supports 'ghost-text' code completion similar to Copilot and allows users to choose their model for code generation via HTTP requests. The plugin interfaces with multiple backends like Hugging Face, Ollama, Open AI, and TGI, providing flexibility in model selection and configuration. Users can customize the behavior of suggestions, tokenization, and model parameters to enhance their coding experience. llm.nvim also includes commands for toggling auto-suggestions and manually requesting suggestions, making it a versatile tool for developers using Neovim.

model.nvim

model.nvim is a tool designed for Neovim users who want to utilize AI models for completions or chat within their text editor. It allows users to build prompts programmatically with Lua, customize prompts, experiment with multiple providers, and use both hosted and local models. The tool supports features like provider agnosticism, programmatic prompts in Lua, async and multistep prompts, streaming completions, and chat functionality in 'mchat' filetype buffer. Users can customize prompts, manage responses, and context, and utilize various providers like OpenAI ChatGPT, Google PaLM, llama.cpp, ollama, and more. The tool also supports treesitter highlights and folds for chat buffers.

minuet-ai.nvim

Minuet AI is a Neovim plugin that integrates with nvim-cmp to provide AI-powered code completion using multiple AI providers such as OpenAI, Claude, Gemini, Codestral, and Huggingface. It offers customizable configuration options and streaming support for completion delivery. Users can manually invoke completion or use cost-effective models for auto-completion. The plugin requires API keys for supported AI providers and allows customization of system prompts. Minuet AI also supports changing providers, toggling auto-completion, and provides solutions for input delay issues. Integration with lazyvim is possible, and future plans include implementing RAG on the codebase and virtual text UI support.

avante.nvim

avante.nvim is a Neovim plugin that emulates the behavior of the Cursor AI IDE, providing AI-driven code suggestions and enabling users to apply recommendations to their source files effortlessly. It offers AI-powered code assistance and one-click application of suggested changes, streamlining the editing process and saving time. The plugin is still in early development, with functionalities like setting API keys, querying AI about code, reviewing suggestions, and applying changes. Key bindings are available for various actions, and the roadmap includes enhancing AI interactions, stability improvements, and introducing new features for coding tasks.

parrot.nvim

Parrot.nvim is a Neovim plugin that prioritizes a seamless out-of-the-box experience for text generation. It simplifies functionality and focuses solely on text generation, excluding integration of DALLE and Whisper. It supports persistent conversations as markdown files, custom hooks for inline text editing, multiple providers like Anthropic API, perplexity.ai API, OpenAI API, Mistral API, and local/offline serving via ollama. It allows custom agent definitions, flexible API credential support, and repository-specific instructions with a `.parrot.md` file. It does not have autocompletion or hidden requests in the background to analyze files.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

litegraph

LiteGraph is a property graph database designed for knowledge and artificial intelligence applications. It supports graph relationships, tags, labels, metadata, data, and vectors. LiteGraph can be used in-process with LiteGraphClient or as a standalone RESTful server with LiteGraph.Server. The latest version includes major internal refactor, batch APIs, enumeration APIs, statistics APIs, database caching, vector search enhancements, and bug fixes. LiteGraph allows for simple embedding into applications without user configuration. Users can create tenants, graphs, nodes, edges, and perform operations like finding routes and exporting to GEXF file. It also provides features for working with object labels, tags, data, and vectors, enabling filtering and searching based on various criteria. LiteGraph offers REST API deployment with LiteGraph.Server and Docker support with a Docker image available on Docker Hub.

clarifai-python

The Clarifai Python SDK offers a comprehensive set of tools for integrating Clarifai's AI platform into your applications, covering computer vision capabilities such as classification, detection, and segmentation and natural language capabilities such as classification, summarisation, generation, and Q&A. With just a few lines of code, you can leverage cutting-edge artificial intelligence to unlock valuable insights from visual and textual content.

deepgram-js-sdk

Deepgram JavaScript SDK. Power your apps with world-class speech and Language AI models.

instructor

Instructor is a Python library that makes it a breeze to work with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

Tutel

Tutel MoE is an optimized Mixture-of-Experts implementation that offers a parallel solution with 'No-penalty Parallism/Sparsity/Capacity/Switching' for modern training and inference. It supports Pytorch framework (version >= 1.10) and various GPUs including CUDA and ROCm. The tool enables Full Precision Inference of MoE-based Deepseek R1 671B on AMD MI300. Tutel provides features like all-to-all benchmarking, tensorcore option, NCCL timeout settings, Megablocks solution, and dynamic switchable configurations. Users can run Tutel in distributed mode across multiple GPUs and machines. The tool allows for custom MoE implementations and offers detailed usage examples and reference documentation.

litdata

LitData is a tool designed for blazingly fast, distributed streaming of training data from any cloud storage. It allows users to transform and optimize data in cloud storage environments efficiently and intuitively, supporting various data types like images, text, video, audio, geo-spatial, and multimodal data. LitData integrates smoothly with frameworks such as LitGPT and PyTorch, enabling seamless streaming of data to multiple machines. Key features include multi-GPU/multi-node support, easy data mixing, pause & resume functionality, support for profiling, memory footprint reduction, cache size configuration, and on-prem optimizations. The tool also provides benchmarks for measuring streaming speed and conversion efficiency, along with runnable templates for different data types. LitData enables infinite cloud data processing by utilizing the Lightning.ai platform to scale data processing with optimized machines.

For similar tasks


pr-agent

PR-Agent is a tool that helps to efficiently review and handle pull requests by providing AI feedback and suggestions. It supports various commands such as generating PR descriptions, providing code suggestions, answering questions about the PR, and updating the CHANGELOG.md file. PR-Agent can be used via CLI, GitHub Action, GitHub App, Docker, and supports multiple git providers and models. It emphasizes real-life practical usage, with each tool having a single GPT-4 call for quick and affordable responses. The PR Compression strategy enables effective handling of both short and long PRs, while the JSON prompting strategy allows for modular and customizable tools. PR-Agent Pro, the hosted version by CodiumAI, provides additional benefits such as full management, improved privacy, priority support, and extra features.

shell_gpt

ShellGPT is a command-line productivity tool powered by AI large language models (LLMs). It streamlines the generation of shell commands, code snippets, and documentation, eliminating the need for external resources (like Google search). It supports Linux, macOS, and Windows and is compatible with all major shells such as PowerShell, CMD, Bash, and Zsh.

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

sirji

Sirji is an agentic AI framework for software development where various AI agents collaborate via a messaging protocol to solve software problems. It uses standard or user-generated recipes to list tasks and tips for problem-solving. Agents in Sirji are modular AI components that perform specific tasks based on custom pseudo code. The framework is currently implemented as a Visual Studio Code extension, providing an interactive chat interface for problem submission and feedback. Sirji sets up local or remote development environments by installing dependencies and executing generated code.

awesome-ai-devtools

Awesome AI-Powered Developer Tools is a curated list of AI-powered developer tools that leverage AI to assist developers in tasks such as code completion, refactoring, debugging, documentation, and more. The repository includes a wide range of tools, from IDEs and Git clients to assistants, agents, app generators, UI generators, snippet generators, documentation tools, code generation tools, agent platforms, OpenAI plugins, search tools, and testing tools. These tools are designed to enhance developer productivity and streamline various development tasks by integrating AI capabilities.

doc-comments-ai

doc-comments-ai is a tool designed to automatically generate code documentation using language models. It allows users to easily create documentation comment blocks for methods in various programming languages such as Python, Typescript, Javascript, Java, Rust, and more. The tool supports both OpenAI and local LLMs, ensuring data privacy and security. Users can generate documentation comments for methods in files, inline comments in method bodies, and choose from different models like GPT-3.5-Turbo, GPT-4, and Azure OpenAI. Additionally, the tool provides support for Treesitter integration and offers guidance on selecting the appropriate model for comprehensive documentation needs.

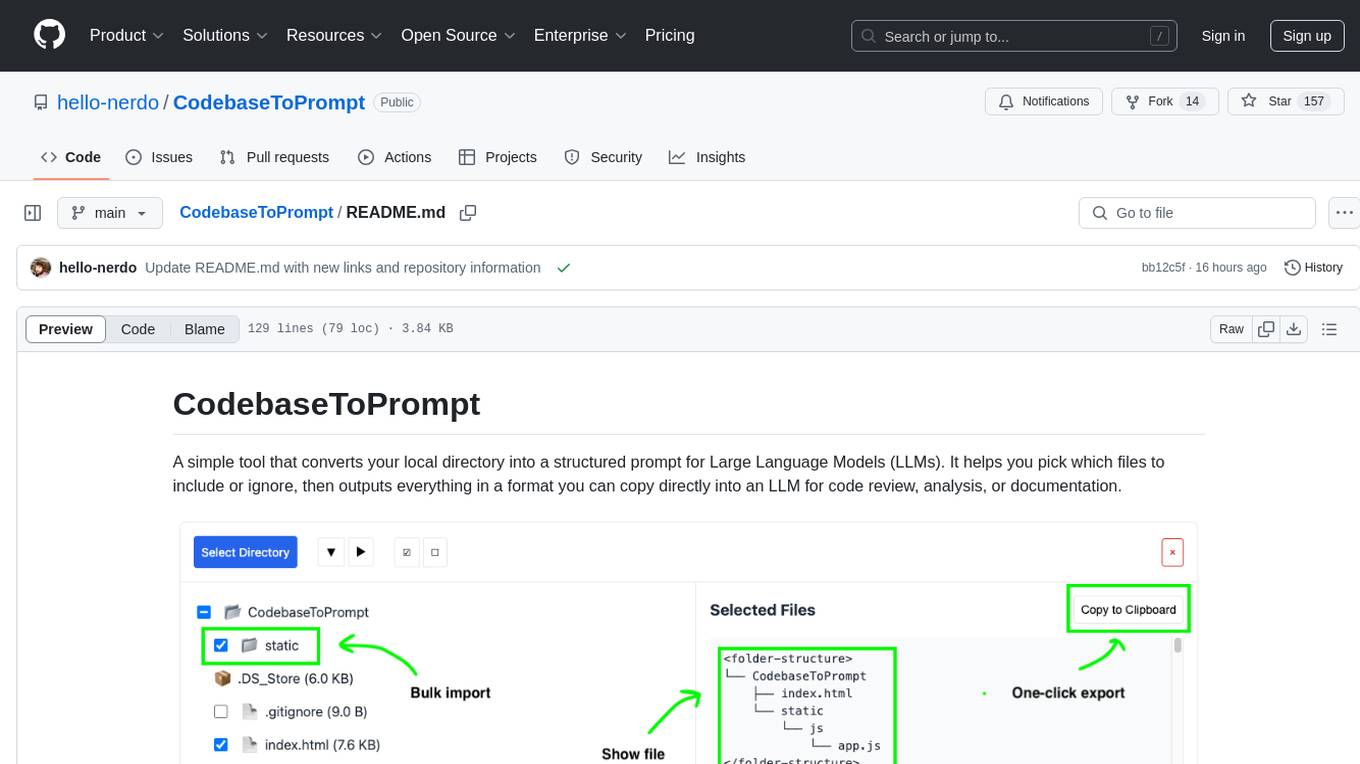

CodebaseToPrompt

CodebaseToPrompt is a tool that converts a local directory into a structured prompt for Large Language Models (LLMs). It allows users to select specific files for code review, analysis, or documentation by exploring and filtering through the file tree in an interactive interface. The tool generates a formatted output that can be directly used with LLMs, estimates token count, and supports flexible text selection. Users can deploy the tool using Docker for self-contained usage and can contribute to the project by opening issues or submitting pull requests.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: it is self-contained, with no need for a DBMS or cloud service; it has an OpenAPI interface that is easy to integrate with existing infrastructure (e.g. Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.