shell_gpt

A command-line productivity tool powered by AI large language models like GPT-4 that helps you accomplish your tasks faster and more efficiently.

Stars: 9019

ShellGPT is a command-line productivity tool powered by AI large language models (LLMs). It offers streamlined generation of shell commands, code snippets, and documentation, eliminating the need for external resources (like Google search). It supports Linux, macOS, and Windows and is compatible with all major shells such as PowerShell, CMD, Bash, Zsh, etc.

README:

https://github.com/TheR1D/shell_gpt/assets/16740832/9197283c-db6a-4b46-bfea-3eb776dd9093

pip install shell-gpt

By default, ShellGPT uses OpenAI's API and the GPT-4 model. You'll need an API key, which you can generate from your OpenAI account. You will be prompted for your key, which will then be stored in ~/.config/shell_gpt/.sgptrc. The OpenAI API is not free of charge; please refer to the OpenAI pricing for more information.

Tip: Alternatively, you can use locally hosted open source models, which are available for free. To use local models, you will need to run your own LLM backend server such as Ollama. To set up ShellGPT with Ollama, please follow the Ollama integration guide.

❗️Note that ShellGPT is not optimized for local models and may not work as expected.

ShellGPT is designed to quickly analyse and retrieve information. It's useful for straightforward requests ranging from technical configurations to general knowledge.

sgpt "What is the fibonacci sequence"

# -> The Fibonacci sequence is a series of numbers where each number ...

ShellGPT accepts prompts from both stdin and command-line arguments. Whether you prefer piping input through the terminal or specifying it directly as an argument, sgpt has you covered. For example, you can easily generate a git commit message based on a diff:

git diff | sgpt "Generate git commit message, for my changes"

# -> Added main feature details into README.md

You can analyze logs from various sources by passing them via stdin, along with a prompt. For instance, we can use it to quickly analyze logs, identify errors, and get suggestions for possible solutions:

docker logs -n 20 my_app | sgpt "check logs, find errors, provide possible solutions"

Error Detected: Connection timeout at line 7.

Possible Solution: Check network connectivity and firewall settings.

Error Detected: Memory allocation failed at line 12.

Possible Solution: Consider increasing memory allocation or optimizing application memory usage.
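As a rough illustration of how a command-line tool can combine piped input with an argument prompt, here is a minimal Python sketch. The function names and the ordering (piped text first, then the instruction) are assumptions made for illustration, not ShellGPT's actual implementation.

```python
import sys
from typing import Optional


def read_piped_stdin() -> Optional[str]:
    """Return stdin contents when input is piped or redirected, else None."""
    if sys.stdin is None or sys.stdin.isatty():
        return None
    return sys.stdin.read()


def build_prompt(argument: Optional[str], piped: Optional[str]) -> str:
    """Merge piped text and the command-line prompt into a single prompt."""
    # Assumption: piped data comes first, followed by the instruction.
    parts = [part.strip() for part in (piped, argument) if part and part.strip()]
    if not parts:
        raise ValueError("no prompt given on stdin or as an argument")
    return "\n\n".join(parts)


# Simulates: docker logs -n 20 my_app | sgpt "check logs, find errors"
print(build_prompt("check logs, find errors", "line 7: connection timeout\n"))
```

In a real tool, `read_piped_stdin()` would supply the second argument; it is kept separate here so the merging logic can be exercised without touching the terminal.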

You can also use all kinds of redirection operators to pass input:

sgpt "summarise" < document.txt

# -> The document discusses the impact...

sgpt << EOF

What is the best way to learn Golang?

Provide simple hello world example.

EOF

# -> The best way to learn Golang...

sgpt <<< "What is the best way to learn shell redirects?"

# -> The best way to learn shell redirects is through...

Have you ever found yourself forgetting common shell commands, such as find, and needing to look up the syntax online? With the --shell option or its shortcut -s, you can quickly generate and execute the commands you need right in the terminal.

sgpt --shell "find all json files in current folder"

# -> find . -type f -name "*.json"

# -> [E]xecute, [D]escribe, [A]bort: e

ShellGPT is aware of the OS and $SHELL you are using, and it will provide a shell command for your specific system. For instance, if you ask sgpt to update your system, it will return a command based on your OS. Here's an example using macOS:

sgpt -s "update my system"

# -> sudo softwareupdate -i -a

# -> [E]xecute, [D]escribe, [A]bort: e

The same prompt, when used on Ubuntu, will generate a different suggestion:

sgpt -s "update my system"

# -> sudo apt update && sudo apt upgrade -y

# -> [E]xecute, [D]escribe, [A]bort: e

Let's try it with Docker:

sgpt -s "start nginx container, mount ./index.html"

# -> docker run -d -p 80:80 -v $(pwd)/index.html:/usr/share/nginx/html/index.html nginx

# -> [E]xecute, [D]escribe, [A]bort: e

We can still use pipes or redirection to pass input to sgpt and generate shell commands:

sgpt -s "POST localhost with" < data.json

# -> curl -X POST -H "Content-Type: application/json" -d '{"a": 1, "b": 2}' http://localhost

# -> [E]xecute, [D]escribe, [A]bort: e

We can also apply additional shell magic in our prompt; in this example, we pass file names to ffmpeg:

ls

# -> 1.mp4 2.mp4 3.mp4

sgpt -s "ffmpeg combine $(ls -m) into one video file without audio."

# -> ffmpeg -i 1.mp4 -i 2.mp4 -i 3.mp4 -filter_complex "[0:v] [1:v] [2:v] concat=n=3:v=1 [v]" -map "[v]" out.mp4

# -> [E]xecute, [D]escribe, [A]bort: e

If you would like to pass the generated shell command through a pipe, you can use the --no-interaction option. This disables interactive mode and prints the generated command to stdout. In this example we are using pbcopy to copy the generated command to the clipboard:

sgpt -s "find all json files in current folder" --no-interaction | pbcopy

Shell integration is a very handy feature that allows you to use sgpt shell completions directly in your terminal, without the need to type sgpt with a prompt and arguments. It enables the use of ShellGPT with hotkeys in your terminal and is supported by both Bash and Zsh. This feature puts sgpt completions directly into the terminal buffer (input line), allowing for immediate editing of suggested commands.

https://github.com/TheR1D/shell_gpt/assets/16740832/bead0dab-0dd9-436d-88b7-6abfb2c556c1

To install shell integration, run sgpt --install-integration and restart your terminal to apply changes. This will add a few lines to your .bashrc or .zshrc file. After that, you can use Ctrl+l (by default) to invoke ShellGPT. When you press Ctrl+l, it will replace your current input line (buffer) with the suggested command. You can then edit it and press Enter to execute.

By using the --code or -c parameter, you can specifically request pure code output, for instance:

sgpt --code "solve fizz buzz problem using python"

for i in range(1, 101):
    if i % 3 == 0 and i % 5 == 0:
        print("FizzBuzz")
    elif i % 3 == 0:
        print("Fizz")
    elif i % 5 == 0:
        print("Buzz")
    else:
        print(i)

Since it is valid python code, we can redirect the output to a file:

sgpt --code "solve classic fizz buzz problem using Python" > fizz_buzz.py

python fizz_buzz.py

# 1

# 2

# Fizz

# 4

# Buzz

# ...

We can also use pipes to pass input:

cat fizz_buzz.py | sgpt --code "Generate comments for each line of my code"

# Loop through numbers 1 to 100
for i in range(1, 101):
    # Check if number is divisible by both 3 and 5
    if i % 3 == 0 and i % 5 == 0:
        # Print "FizzBuzz" if number is divisible by both 3 and 5
        print("FizzBuzz")
    # Check if number is divisible by 3
    elif i % 3 == 0:
        # Print "Fizz" if number is divisible by 3
        print("Fizz")
    # Check if number is divisible by 5
    elif i % 5 == 0:
        # Print "Buzz" if number is divisible by 5
        print("Buzz")
    # If number is not divisible by 3 or 5, print the number itself
    else:
        print(i)

Often it is important to preserve and recall a conversation. sgpt creates a conversational dialogue with each LLM completion requested. The dialogue can develop one-by-one (chat mode) or interactively, in a REPL loop (REPL mode). Both ways rely on the same underlying object, called a chat session. The session is located at the configurable CHAT_CACHE_PATH.

To start a conversation, use the --chat option followed by a unique session name and a prompt.

sgpt --chat conversation_1 "please remember my favorite number: 4"

# -> I will remember that your favorite number is 4.

sgpt --chat conversation_1 "what would be my favorite number + 4?"

# -> Your favorite number is 4, so if we add 4 to it, the result would be 8.

You can use chat sessions to iteratively improve GPT suggestions by providing additional details. It is possible to use --code or --shell options to initiate --chat:

sgpt --chat conversation_2 --code "make a request to localhost using python"

import requests

response = requests.get('http://localhost')
print(response.text)

Let's ask the LLM to add caching to our request:

sgpt --chat conversation_2 --code "add caching"

import requests
from cachecontrol import CacheControl

sess = requests.session()
cached_sess = CacheControl(sess)

response = cached_sess.get('http://localhost')
print(response.text)

The same applies to shell commands:

sgpt --chat conversation_3 --shell "what is in current folder"

# -> ls

sgpt --chat conversation_3 "Sort by name"

# -> ls | sort

sgpt --chat conversation_3 "Concatenate them using FFMPEG"

# -> ffmpeg -i "concat:$(ls | sort | tr '\n' '|')" -codec copy output.mp4

sgpt --chat conversation_3 "Convert the resulting file into an MP3"

# -> ffmpeg -i output.mp4 -vn -acodec libmp3lame -ac 2 -ab 160k -ar 48000 final_output.mp3

To list all the sessions from either conversational mode, use the --list-chats or -lc option:

sgpt --list-chats

# .../shell_gpt/chat_cache/conversation_1

# .../shell_gpt/chat_cache/conversation_2

To show all the messages related to a specific conversation, use the --show-chat option followed by the session name:

sgpt --show-chat conversation_1

# user: please remember my favorite number: 4

# assistant: I will remember that your favorite number is 4.

# user: what would be my favorite number + 4?

# assistant: Your favorite number is 4, so if we add 4 to it, the result would be 8.

There is a very handy REPL (read–eval–print loop) mode, which allows you to interactively chat with GPT models. To start a chat session in REPL mode, use the --repl option followed by a unique session name. You can also use "temp" as a session name to start a temporary REPL session. Note that --chat and --repl use the same underlying object, so you can use --chat to start a chat session and then pick it up with --repl to continue the conversation in REPL mode.

sgpt --repl temp

Entering REPL mode, press Ctrl+C to exit.

>>> What is REPL?

REPL stands for Read-Eval-Print Loop. It is a programming environment ...

>>> How can I use Python with REPL?

To use Python with REPL, you can simply open a terminal or command prompt ...
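To make the idea of a shared chat session more concrete, the following is a minimal sketch of a file-backed message history that both a one-shot caller and a REPL loop could read and append to. The class name, the JSON file layout, and the truncation rule are assumptions for illustration; the README only states that sessions live under CHAT_CACHE_PATH and are capped by CHAT_CACHE_LENGTH.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List

Message = Dict[str, str]


class ChatSession:
    """Hypothetical file-backed history: one JSON file per session name."""

    def __init__(self, cache_dir: Path, max_messages: int = 100) -> None:
        self.cache_dir = cache_dir
        self.max_messages = max_messages
        self.cache_dir.mkdir(parents=True, exist_ok=True)

    def _path(self, session_id: str) -> Path:
        return self.cache_dir / session_id

    def load(self, session_id: str) -> List[Message]:
        path = self._path(session_id)
        if not path.exists():
            return []
        return json.loads(path.read_text(encoding="utf-8"))

    def append(self, session_id: str, role: str, content: str) -> List[Message]:
        messages = self.load(session_id)
        messages.append({"role": role, "content": content})
        # Keep only the newest messages so the history stays bounded.
        messages = messages[-self.max_messages:]
        self._path(session_id).write_text(json.dumps(messages), encoding="utf-8")
        return messages

    def list_sessions(self) -> List[str]:
        return sorted(p.name for p in self.cache_dir.iterdir() if p.is_file())


with tempfile.TemporaryDirectory() as tmp:
    sessions = ChatSession(Path(tmp), max_messages=100)
    sessions.append("conversation_1", "user", "please remember my favorite number: 4")
    sessions.append("conversation_1", "assistant", "I will remember that your favorite number is 4.")
    print(sessions.list_sessions())
    print(sessions.load("conversation_1"))
```

Because the history is keyed only by session name, a conversation started in one mode can be continued in the other simply by reusing the name.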

REPL mode can work with --shell and --code options, which makes it very handy for interactive shell commands and code generation:

sgpt --repl temp --shell

Entering shell REPL mode, type [e] to execute commands or press Ctrl+C to exit.

>>> What is in current folder?

ls

>>> Show file sizes

ls -lh

>>> Sort them by file sizes

ls -lhS

>>> e (enter just e to execute commands, or d to describe them)

To provide a multiline prompt, use triple quotes """:

sgpt --repl temp

Entering REPL mode, press Ctrl+C to exit.

>>> """

... Explain following code:

... import random

... print(random.randint(1, 10))

... """

It is a Python script that uses the random module to generate and print a random integer.
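The triple-quote convention above can be sketched as a small line reader that groups everything between two `"""` lines into a single prompt. This is an illustrative sketch only; `read_prompts` is a hypothetical helper, not part of ShellGPT.

```python
from typing import Iterable, Iterator, List, Optional

DELIMITER = '"""'


def read_prompts(lines: Iterable[str]) -> Iterator[str]:
    """Yield one prompt per line, or one per triple-quoted multi-line block."""
    buffer: Optional[List[str]] = None  # None means we are outside a block
    for raw in lines:
        line = raw.rstrip("\n")
        if buffer is None:
            if line.strip() == DELIMITER:
                buffer = []  # opening delimiter: start collecting lines
            elif line.strip():
                yield line.strip()  # ordinary single-line prompt
        elif line.strip() == DELIMITER:
            yield "\n".join(buffer)  # closing delimiter: emit the block
            buffer = None
        else:
            buffer.append(line)
    # An unterminated block is silently dropped in this sketch.


typed = [
    "What is REPL?",
    '"""',
    "Explain following code:",
    "import random",
    "print(random.randint(1, 10))",
    '"""',
]
for prompt in read_prompts(typed):
    print(repr(prompt))
```

Taking an iterable of lines rather than reading the terminal directly keeps the grouping logic easy to test; a real REPL would feed it lines from `input()`.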

You can also enter REPL mode with an initial prompt by passing it as an argument, via stdin, or both:

sgpt --repl temp < my_app.py

Entering REPL mode, press Ctrl+C to exit.

──────────────────────────────────── Input ────────────────────────────────────

name = input("What is your name?")

print(f"Hello {name}")

───────────────────────────────────────────────────────────────────────────────

>>> What is this code about?

The snippet of code you've provided is written in Python. It prompts the user...

>>> Follow up questions...

Function calling is a powerful feature OpenAI provides. It allows the LLM to execute functions on your system, which can be used to accomplish a variety of tasks. To install the default functions, run:

sgpt --install-functions

ShellGPT has a convenient way to define functions and use them. In order to create your custom function, navigate to ~/.config/shell_gpt/functions and create a new .py file with the function name. Inside this file, you can define your function using the following syntax:

# execute_shell_command.py
import shlex
import subprocess

from instructor import OpenAISchema
from pydantic import Field


class Function(OpenAISchema):
    """
    Executes a shell command and returns the output (result).
    """

    shell_command: str = Field(..., example="ls -la", description="Shell command to execute.")

    class Config:
        title = "execute_shell_command"

    @classmethod
    def execute(cls, shell_command: str) -> str:
        # shlex.split keeps quoted arguments such as '.email' intact.
        result = subprocess.run(shlex.split(shell_command), capture_output=True, text=True)
        return f"Exit code: {result.returncode}, Output:\n{result.stdout}"

The docstring comment inside the class will be passed to the OpenAI API as a description for the function, along with the title attribute and parameter descriptions. The execute function will be called if the LLM decides to use your function. In this case we are allowing the LLM to execute any shell command on our system. Since we are returning the output of the command, the LLM will be able to analyze it and decide if it is a good fit for the prompt. Here is an example of how the function might be executed by the LLM:

sgpt "What are the files in /tmp folder?"

# -> @FunctionCall execute_shell_command(shell_command="ls /tmp")

# -> The /tmp folder contains the following files and directories:

# -> test.txt

# -> test.json

Note that if for some reason the function (execute_shell_command) returns an error, the LLM might try to accomplish the task based on the output. Let's say we don't have jq installed on our system, and we ask the LLM to parse a JSON file:

sgpt "parse /tmp/test.json file using jq and return only email value"

# -> @FunctionCall execute_shell_command(shell_command="jq -r '.email' /tmp/test.json")

# -> It appears that jq is not installed on the system. Let me try to install it using brew.

# -> @FunctionCall execute_shell_command(shell_command="brew install jq")

# -> jq has been successfully installed. Let me try to parse the file again.

# -> @FunctionCall execute_shell_command(shell_command="jq -r '.email' /tmp/test.json")

# -> The email value in /tmp/test.json is johndoe@example.

It is also possible to chain multiple function calls in the prompt:

sgpt "Play music and open hacker news"

# -> @FunctionCall play_music()

# -> @FunctionCall open_url(url="https://news.ycombinator.com")

# -> Music is now playing, and Hacker News has been opened in your browser. Enjoy!

This is just a simple example of how you can use function calls. It is truly a powerful feature that can be used to accomplish a variety of complex tasks. We have a dedicated category in GitHub Discussions for sharing and discussing functions. The LLM might execute destructive commands, so please use it at your own risk❗️
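One way to reduce the risk of destructive commands is to validate a command before running it. The sketch below uses only the standard library; the allow-list contents and the `parse_allowed` helper are hypothetical and would need to be adapted. It is a partial mitigation under these assumptions, not a sandbox.

```python
import shlex
from typing import FrozenSet, List

# Hypothetical allow-list: only these programs may be launched.
ALLOWED_PROGRAMS: FrozenSet[str] = frozenset({"ls", "cat", "jq", "grep"})


def parse_allowed(shell_command: str) -> List[str]:
    """Split a command shell-style and reject programs outside the allow-list."""
    argv = shlex.split(shell_command)
    if not argv:
        raise ValueError("empty command")
    if argv[0] not in ALLOWED_PROGRAMS:
        raise PermissionError(f"program not allowed: {argv[0]}")
    # The returned list is meant for subprocess.run(argv) without shell=True,
    # so characters like ';' or '&&' are never interpreted by a shell.
    return argv


print(parse_allowed("jq -r '.email' /tmp/test.json"))

try:
    parse_allowed("brew install jq")
except PermissionError as error:
    print(error)
```

A custom function's execute method could call such a check first and return the error text to the LLM instead of running the command.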

ShellGPT allows you to create custom roles, which can be utilized to generate code, shell commands, or to fulfill your specific needs. To create a new role, use the --create-role option followed by the role name. You will be prompted to provide a description for the role, along with other details. This will create a JSON file in ~/.config/shell_gpt/roles with the role name. Inside this directory, you can also edit default sgpt roles, such as shell, code, and default. Use the --list-roles option to list all available roles, and the --show-role option to display the details of a specific role. Here's an example of a custom role:

sgpt --create-role json_generator

# Enter role description: Provide only valid json as response.

sgpt --role json_generator "random: user, password, email, address"

{
  "user": "JohnDoe",
  "password": "p@ssw0rd",
  "email": "[email protected]",
  "address": {
    "street": "123 Main St",
    "city": "Anytown",
    "state": "CA",
    "zip": "12345"
  }
}

If the description of the role contains the words "APPLY MARKDOWN" (case sensitive), then chats will be displayed using markdown formatting.

Control the cache using the --cache (default) and --no-cache options. This caching applies to all sgpt requests to the OpenAI API:

sgpt "what are the colors of a rainbow"

# -> The colors of a rainbow are red, orange, yellow, green, blue, indigo, and violet.

Next time, the exact same query will get results from the local cache instantly. Note that sgpt "what are the colors of a rainbow" --temperature 0.5 will make a new request, since we didn't provide --temperature (the same applies to --top-p) on the previous request.
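The behaviour described above, where changing a parameter such as the temperature bypasses the cache, follows naturally if the cache key covers the prompt together with all request parameters. Here is a minimal in-memory sketch under that assumption; the class, its eviction rule, and the fake backend are hypothetical, and ShellGPT's own cache is stored on disk under CACHE_PATH.

```python
import hashlib
import json
from collections import OrderedDict
from typing import Any, Callable


class RequestCache:
    """Hypothetical bounded cache keyed by prompt plus request parameters."""

    def __init__(self, max_entries: int = 100) -> None:
        self.max_entries = max_entries
        self._entries: "OrderedDict[str, str]" = OrderedDict()

    @staticmethod
    def key(prompt: str, **params: Any) -> str:
        # sort_keys makes the key independent of keyword argument order.
        payload = json.dumps({"prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, call: Callable[..., str], prompt: str, **params: Any) -> str:
        cache_key = self.key(prompt, **params)
        if cache_key in self._entries:
            self._entries.move_to_end(cache_key)  # mark as recently used
            return self._entries[cache_key]
        result = call(prompt, **params)
        self._entries[cache_key] = result
        while len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # evict the oldest entry
        return result


def fake_backend(prompt: str, **params: Any) -> str:
    """Stand-in for a real API request."""
    print("backend called with", params)
    return prompt.upper()


cache = RequestCache(max_entries=100)
cache.get_or_call(fake_backend, "what are the colors of a rainbow")
cache.get_or_call(fake_backend, "what are the colors of a rainbow")  # served from cache
cache.get_or_call(fake_backend, "what are the colors of a rainbow", temperature=0.5)  # new request
```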

These are just some examples of what we can do using OpenAI GPT models. I'm sure you will find it useful for your specific use cases.

You can set up some parameters in the runtime configuration file ~/.config/shell_gpt/.sgptrc:

# API key, also it is possible to define OPENAI_API_KEY env.

OPENAI_API_KEY=your_api_key

# Base URL of the backend server. If "default" URL will be resolved based on --model.

API_BASE_URL=default

# Max amount of cached message per chat session.

CHAT_CACHE_LENGTH=100

# Chat cache folder.

CHAT_CACHE_PATH=/tmp/shell_gpt/chat_cache

# Request cache length (amount).

CACHE_LENGTH=100

# Request cache folder.

CACHE_PATH=/tmp/shell_gpt/cache

# Request timeout in seconds.

REQUEST_TIMEOUT=60

# Default OpenAI model to use.

DEFAULT_MODEL=gpt-4o

# Default color for shell and code completions.

DEFAULT_COLOR=magenta

# When in --shell mode, default to "Y" for no input.

DEFAULT_EXECUTE_SHELL_CMD=false

# Disable streaming of responses

DISABLE_STREAMING=false

# The pygment theme to view markdown (default/describe role).

CODE_THEME=default

# Path to a directory with functions.

OPENAI_FUNCTIONS_PATH=/Users/user/.config/shell_gpt/functions

# Print output of functions when LLM uses them.

SHOW_FUNCTIONS_OUTPUT=false

# Allows LLM to use functions.

OPENAI_USE_FUNCTIONS=true

# Enforce LiteLLM usage (for local LLMs).

USE_LITELLM=false

Possible options for DEFAULT_COLOR: black, red, green, yellow, blue, magenta, cyan, white, bright_black, bright_red, bright_green, bright_yellow, bright_blue, bright_magenta, bright_cyan, bright_white.

Possible options for CODE_THEME: https://pygments.org/styles/
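For readers who want to inspect such a file from their own scripts, the following is a minimal sketch of parsing KEY=VALUE lines and resolving a setting with an environment variable override. The helper names are hypothetical, and the precedence (environment first, then file, then a default) is an assumption for illustration rather than documented ShellGPT behaviour.

```python
import os
from typing import Dict, Mapping, Optional


def parse_sgptrc(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and '#' comments."""
    config: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        config[key.strip()] = value.strip()
    return config


def resolve(
    key: str,
    config: Mapping[str, str],
    env: Optional[Mapping[str, str]] = None,
    default: Optional[str] = None,
) -> Optional[str]:
    """Assumed precedence: environment variable, then file value, then default."""
    env = os.environ if env is None else env
    return env.get(key, config.get(key, default))


sample = """
# Request timeout in seconds.
REQUEST_TIMEOUT=60
# Default OpenAI model to use.
DEFAULT_MODEL=gpt-4o
"""
settings = parse_sgptrc(sample)
print(resolve("DEFAULT_MODEL", settings, env={}))
print(resolve("REQUEST_TIMEOUT", settings, env={"REQUEST_TIMEOUT": "10"}))
```

All values stay strings in this sketch; converting entries such as REQUEST_TIMEOUT to numbers or booleans is left to the caller.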

Arguments:
  prompt            [PROMPT]                   The prompt to generate completions for.

Options:
  --model           TEXT                       Large language model to use. [default: gpt-4o]
  --temperature     FLOAT RANGE [0.0<=x<=2.0]  Randomness of generated output. [default: 0.0]
  --top-p           FLOAT RANGE [0.0<=x<=1.0]  Limits highest probable tokens (words). [default: 1.0]
  --md              --no-md                    Prettify markdown output. [default: md]
  --editor                                     Open $EDITOR to provide a prompt. [default: no-editor]
  --cache                                      Cache completion results. [default: cache]
  --version                                    Show version.
  --help                                       Show this message and exit.

Assistance Options:
  --shell           -s                         Generate and execute shell commands.
  --interaction     --no-interaction           Interactive mode for --shell option. [default: interaction]
  --describe-shell  -d                         Describe a shell command.
  --code            -c                         Generate only code.
  --functions       --no-functions             Allow function calls. [default: functions]

Chat Options:
  --chat            TEXT                       Follow conversation with id, use "temp" for quick session. [default: None]
  --repl            TEXT                       Start a REPL (Read–eval–print loop) session. [default: None]
  --show-chat       TEXT                       Show all messages from provided chat id. [default: None]
  --list-chats      -lc                        List all existing chat ids.

Role Options:
  --role            TEXT                       System role for GPT model. [default: None]
  --create-role     TEXT                       Create role. [default: None]
  --show-role       TEXT                       Show role. [default: None]
  --list-roles      -lr                        List roles.

Run the container using the OPENAI_API_KEY environment variable, and a docker volume to store cache:

docker run --rm \
    --env OPENAI_API_KEY="your OPENAI API key" \
    --volume gpt-cache:/tmp/shell_gpt \
    ghcr.io/ther1d/shell_gpt --chat rainbow "what are the colors of a rainbow"

Example of a conversation, using an alias and the OPENAI_API_KEY environment variable:

alias sgpt="docker run --rm --env OPENAI_API_KEY --volume gpt-cache:/tmp/shell_gpt ghcr.io/ther1d/shell_gpt"

export OPENAI_API_KEY="your OPENAI API key"

sgpt --chat rainbow "what are the colors of a rainbow"

sgpt --chat rainbow "inverse the list of your last answer"

sgpt --chat rainbow "translate your last answer in french"

You can also use the provided Dockerfile to build your own image:

docker build -t sgpt .

Additional documentation: Azure integration, Ollama integration.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for shell_gpt

Similar Open Source Tools

shell_gpt

ShellGPT is a command-line productivity tool powered by AI large language models (LLMs). This command-line tool offers streamlined generation of shell commands, code snippets, documentation, eliminating the need for external resources (like Google search). Supports Linux, macOS, Windows and compatible with all major Shells like PowerShell, CMD, Bash, Zsh, etc.

gpt-all-star

GPT-All-Star is an AI-powered code generation tool designed for scratch development of web applications with team collaboration of autonomous AI agents. The primary focus of this research project is to explore the potential of autonomous AI agents in software development. Users can organize their team, choose leaders for each step, create action plans, and work together to complete tasks. The tool supports various endpoints like OpenAI, Azure, and Anthropic, and provides functionalities for project management, code generation, and team collaboration.

httpjail

httpjail is a cross-platform tool designed for monitoring and restricting HTTP/HTTPS requests from processes using network isolation and transparent proxy interception. It provides process-level network isolation, HTTP/HTTPS interception with TLS certificate injection, script-based and JavaScript evaluation for custom request logic, request logging, default deny behavior, and zero-configuration setup. The tool operates on Linux and macOS, creating an isolated network environment for target processes and intercepting all HTTP/HTTPS traffic through a transparent proxy enforcing user-defined rules.

blendsql

BlendSQL is a superset of SQLite designed for problem decomposition and hybrid question-answering with Large Language Models (LLMs). It allows users to blend operations over heterogeneous data sources like tables, text, and images, combining the structured and interpretable reasoning of SQL with the generalizable reasoning of LLMs. Users can oversee all calls (LLM + SQL) within a unified query language, enabling tasks such as building LLM chatbots for travel planning and answering complex questions by injecting 'ingredients' as callable functions.

distill

Distill is a reliability layer for LLM context that provides deterministic deduplication to remove redundancy before reaching the model. It aims to reduce redundant data, lower costs, provide faster responses, and offer more efficient and deterministic results. The tool works by deduplicating, compressing, summarizing, and caching context to ensure reliable outputs. It offers various installation methods, including binary download, Go install, Docker usage, and building from source. Distill can be used for tasks like deduplicating chunks, connecting to vector databases, integrating with AI assistants, analyzing files for duplicates, syncing vectors to Pinecone, querying from the command line, and managing configuration files. The tool supports self-hosting via Docker, Docker Compose, building from source, Fly.io deployment, Render deployment, and Railway integration. Distill also provides monitoring capabilities with Prometheus-compatible metrics, Grafana dashboard, and OpenTelemetry tracing.

lihil

Lihil is a performant, productive, and professional web framework designed to make Python the mainstream programming language for web development. It is 100% test covered and strictly typed, offering fast performance, ergonomic API, and built-in solutions for common problems. Lihil is suitable for enterprise web development, delivering robust and scalable solutions with best practices in microservice architecture and related patterns. It features dependency injection, OpenAPI docs generation, error response generation, data validation, message system, testability, and strong support for AI features. Lihil is ASGI compatible and uses starlette as its ASGI toolkit, ensuring compatibility with starlette classes and middlewares. The framework follows semantic versioning and has a roadmap for future enhancements and features.

mimiclaw

MimiClaw is a pocket AI assistant that runs on a $5 chip, specifically designed for the ESP32-S3 board. It operates without Linux or Node.js, using pure C language. Users can interact with MimiClaw through Telegram, enabling it to handle various tasks and learn from local memory. The tool is energy-efficient, running on USB power 24/7. With MimiClaw, users can have a personal AI assistant on a chip the size of a thumb, making it convenient and accessible for everyday use.

FinMem-LLM-StockTrading

This repository contains the Python source code for FINMEM, a Performance-Enhanced Large Language Model Trading Agent with Layered Memory and Character Design. It introduces FinMem, a novel LLM-based agent framework devised for financial decision-making, encompassing three core modules: Profiling, Memory with layered processing, and Decision-making. FinMem's memory module aligns closely with the cognitive structure of human traders, offering robust interpretability and real-time tuning. The framework enables the agent to self-evolve its professional knowledge, react agilely to new investment cues, and continuously refine trading decisions in the volatile financial environment. It presents a cutting-edge LLM agent framework for automated trading, boosting cumulative investment returns.

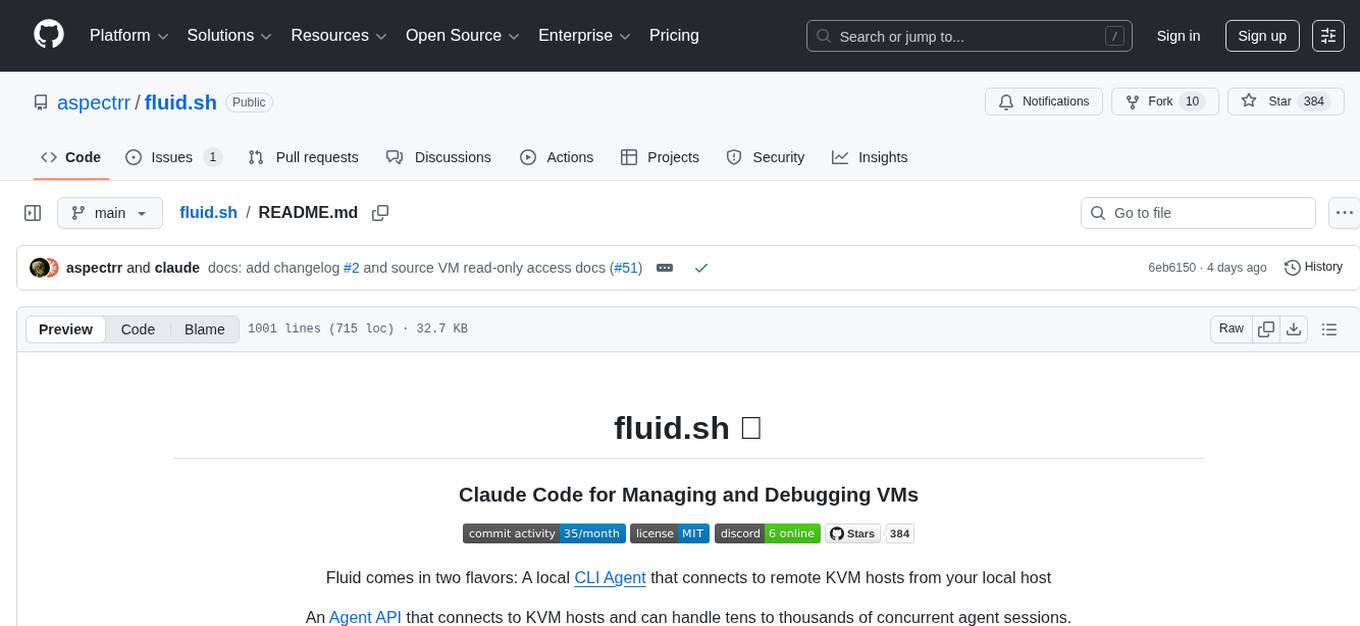

fluid.sh

fluid.sh is a tool designed to manage and debug VMs using AI agents in isolated environments before applying changes to production. It provides a workflow where AI agents work autonomously in sandbox VMs, and human approval is required before any changes are made to production. The tool offers features like autonomous execution, full VM isolation, human-in-the-loop approval workflow, Ansible export, and a Python SDK for building autonomous agents.

Shannon

Shannon is a battle-tested infrastructure for AI agents that solves problems at scale, such as runaway costs, non-deterministic failures, and security concerns. It offers features like intelligent caching, deterministic replay of workflows, time-travel debugging, WASI sandboxing, and hot-swapping between LLM providers. Shannon allows users to ship faster with zero configuration multi-agent setup, multiple AI patterns, time-travel debugging, and hot configuration changes. It is production-ready with features like WASI sandbox, token budget control, policy engine (OPA), and multi-tenancy. Shannon helps scale without breaking by reducing costs, being provider agnostic, observable by default, and designed for horizontal scaling with Temporal workflow orchestration.

solo-server

Solo Server is a lightweight server designed for managing hardware-aware inference. It provides seamless setup through a simple CLI and HTTP servers, an open model registry for pulling models from platforms like Ollama and Hugging Face, cross-platform compatibility for effortless deployment of AI models on hardware, and a configurable framework that auto-detects hardware components (CPU, GPU, RAM) and sets optimal configurations.

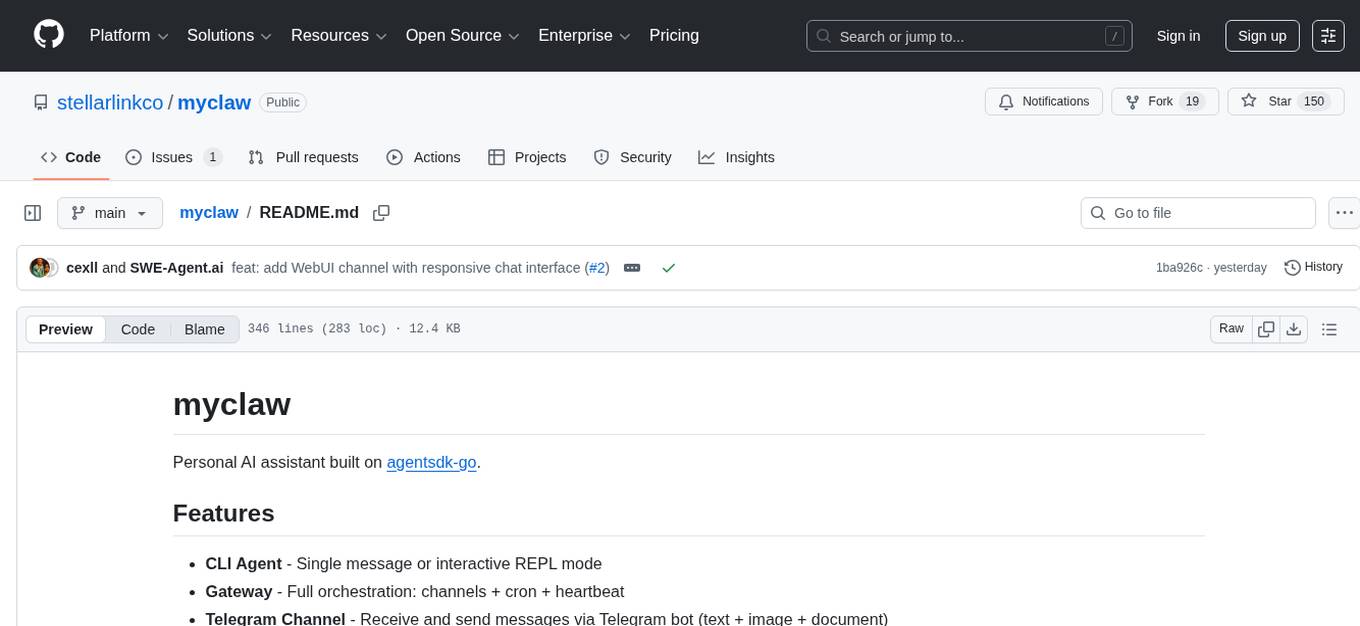

myclaw

myclaw is a personal AI assistant built on agentsdk-go that offers a CLI agent for single message or interactive REPL mode, full orchestration with channels, cron, and heartbeat, support for various messaging channels like Telegram, Feishu, WeCom, WhatsApp, and a web UI, multi-provider support for Anthropic and OpenAI models, image recognition and document processing, scheduled tasks with JSON persistence, long-term and daily memory storage, custom skill loading, and more. It provides a comprehensive solution for interacting with AI models and managing tasks efficiently.

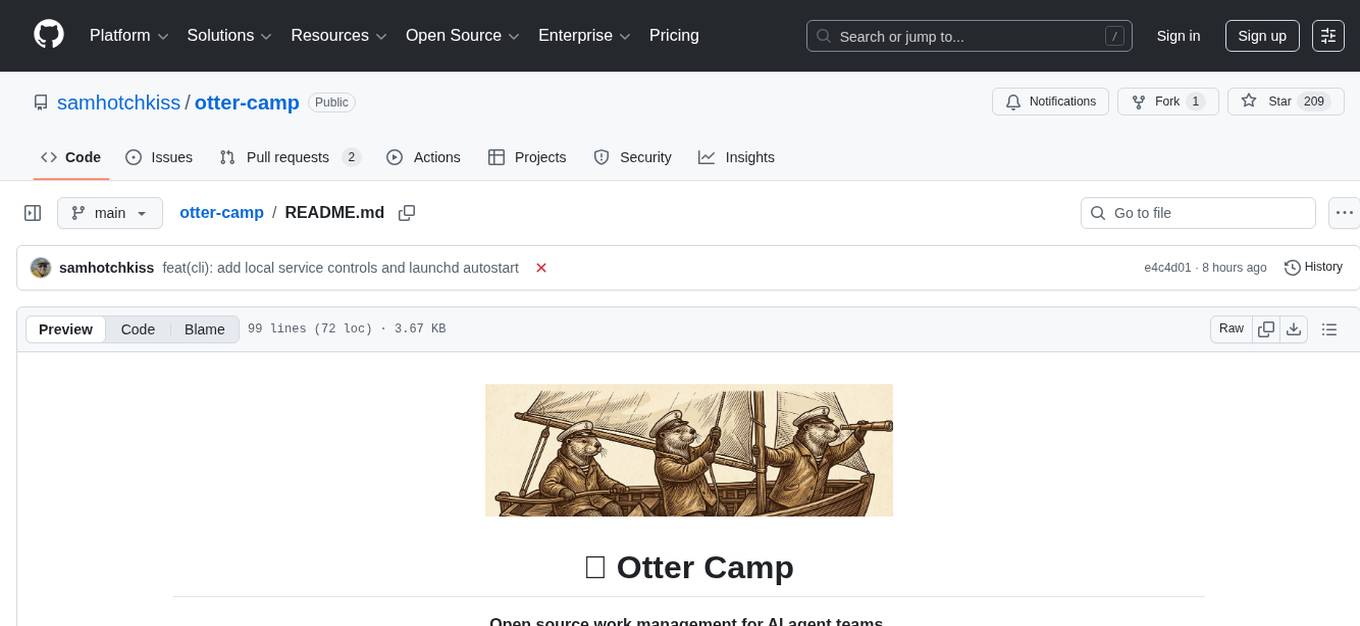

otter-camp

Otter Camp is an open source work management tool designed for AI agent teams. It provides a centralized platform for managing AI agents, ensuring that important context is not lost, enabling quick hiring and firing of agents, maintaining a single pipeline for all work types, keeping context organized within projects, facilitating work review processes, tracking team activities, and offering self-hosted data security. The tool integrates with OpenClaw to run agents and provides a user-friendly interface for managing agent teams efficiently.

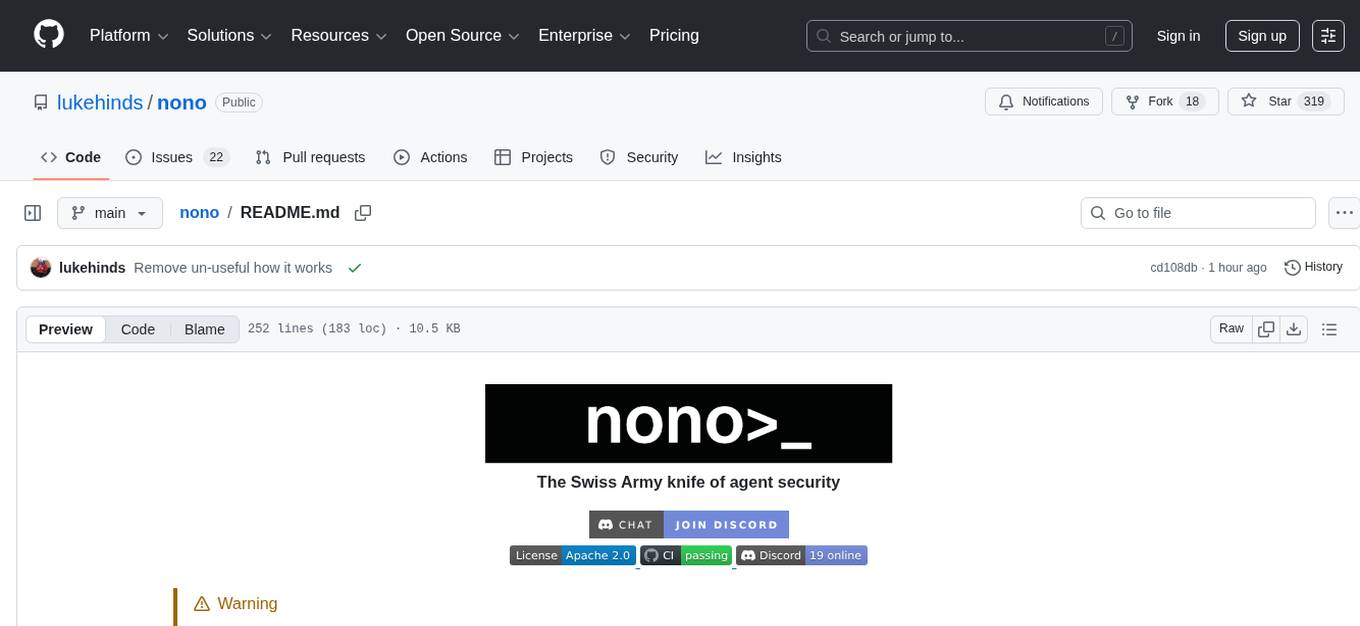

nono

nono is a secure, kernel-enforced capability shell for running AI agents and any POSIX style process. It leverages OS security primitives to create an environment where unauthorized operations are structurally impossible. It provides protections against destructive commands and securely stores API keys, tokens, and secrets. The tool is agent-agnostic, works with any AI agent or process, and blocks dangerous commands by default. It follows a capability-based security model with defense-in-depth, ensuring secure execution of commands and protecting sensitive data.

claudex

Claudex is an open-source, self-hosted Claude Code UI that runs entirely on your machine. It provides multiple sandboxes, allows users to use their own plans, offers a full IDE experience with VS Code in the browser, and is extensible with skills, agents, slash commands, and MCP servers. Users can run AI agents in isolated environments, view and interact with a browser via VNC, switch between multiple AI providers, automate tasks with Celery workers, and enjoy various chat features and preview capabilities. Claudex also supports marketplace plugins, secrets management, integrations like Gmail, and custom instructions. The tool is configured through providers and supports various providers like Anthropic, OpenAI, OpenRouter, and Custom. It has a tech stack consisting of React, FastAPI, Python, PostgreSQL, Celery, Redis, and more.

cordum

Cordum is a control plane for AI agents designed to close the Trust Gap by providing safety, observability, and control features. It allows teams to deploy autonomous agents with built-in governance mechanisms, including safety policies, workflow orchestration, job routing, observability, and human-in-the-loop approvals. The tool aims to address the challenges of deploying AI agents in production by offering visibility, safety rails, audit trails, and approval mechanisms for sensitive operations.

For similar tasks

shell_gpt

ShellGPT is a command-line productivity tool powered by AI large language models (LLMs). This command-line tool offers streamlined generation of shell commands, code snippets, documentation, eliminating the need for external resources (like Google search). Supports Linux, macOS, Windows and compatible with all major Shells like PowerShell, CMD, Bash, Zsh, etc.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

WatchAlert

WatchAlert is a lightweight monitoring and alerting engine tailored for cloud-native environments, with a focus on observability and stability. It provides comprehensive monitoring and alerting support, including AI-powered alert analysis for efficient troubleshooting. WatchAlert integrates with data sources such as Prometheus, VictoriaMetrics, Loki, Elasticsearch, AliCloud SLS, Jaeger, and Kubernetes, and can monitor over several network protocols. It sends alert notifications through multiple channels, including Feishu, DingTalk, WeChat Work, email, and custom hooks. It is easy to use, offers flexible alert rule configuration, and specializes in stability scenarios, helping users quickly identify and resolve issues, improve operational efficiency, and reduce maintenance costs.

gonzo

Gonzo is a powerful, real-time log analysis terminal UI inspired by k9s. It allows users to analyze log streams with charts, AI-powered insights, and advanced filtering directly from the terminal. Features include live streaming log processing, OTLP support, an interactive dashboard with real-time charts, advanced filtering options including regex support, and AI-powered insights such as pattern detection, anomaly analysis, and root cause suggestions. Users can also configure AI models from providers like OpenAI, LM Studio, and Ollama for intelligent log analysis. Gonzo is built with Bubble Tea, Lipgloss, Cobra, Viper, and OpenTelemetry, following a clean architecture with separate modules for the TUI, log analysis, frequency tracking, OTLP handling, and AI integration.

claude-container

Claude Container is a Docker container pre-installed with Claude Code, providing an isolated environment for running Claude Code with optional API request logging to a local SQLite database. It includes three images: a main container with the Claude Code CLI, an optional HTTP proxy for logging requests, and a web UI for visualizing and querying logs. The tool offers compatibility with different versions of Claude Code, quick-start guides using a helper script or Docker Compose, an authentication process, integration with existing projects, an API request logging proxy setup, and data visualization with Datasette.

code-review-gpt

Code Review GPT uses Large Language Models to review code in your CI/CD pipeline. It helps streamline the code review process by providing feedback on code that may have issues or areas for improvement. It should pick up on common issues such as exposed secrets, slow or inefficient code, and unreadable code. It can also be run locally in your command line to review staged files. Code Review GPT is in alpha and should be used for fun only. It may provide useful feedback but please check any suggestions thoroughly.

syncode

SynCode is a novel framework for grammar-guided generation with Large Language Models (LLMs) that ensures syntactically valid output with respect to defined Context-Free Grammar (CFG) rules. It supports languages and formats such as Python, Go, SQL, and JSON, and allows users to define custom grammars using EBNF syntax. The tool compares favorably to other constrained decoders and offers features like fast grammar-guided generation, compatibility with HuggingFace language models, and the ability to work with various decoding strategies.

llm.nvim

llm.nvim is a plugin for Neovim that enables code completion using LLMs. It supports 'ghost-text' code completion similar to Copilot and allows users to choose their model for code generation via HTTP requests. The plugin interfaces with multiple backends like Hugging Face, Ollama, OpenAI, and TGI, providing flexibility in model selection and configuration. Users can customize the behavior of suggestions, tokenization, and model parameters. llm.nvim also includes commands for toggling auto-suggestions and manually requesting suggestions, making it a versatile tool for developers using Neovim.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLMs) and benchmarks them via the `OSS-Fuzz` platform. It has successfully used LLMs to generate valid fuzz targets (those that produce a non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase over the existing human-written targets is 29%.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open-access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI red teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance in future iterations.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.