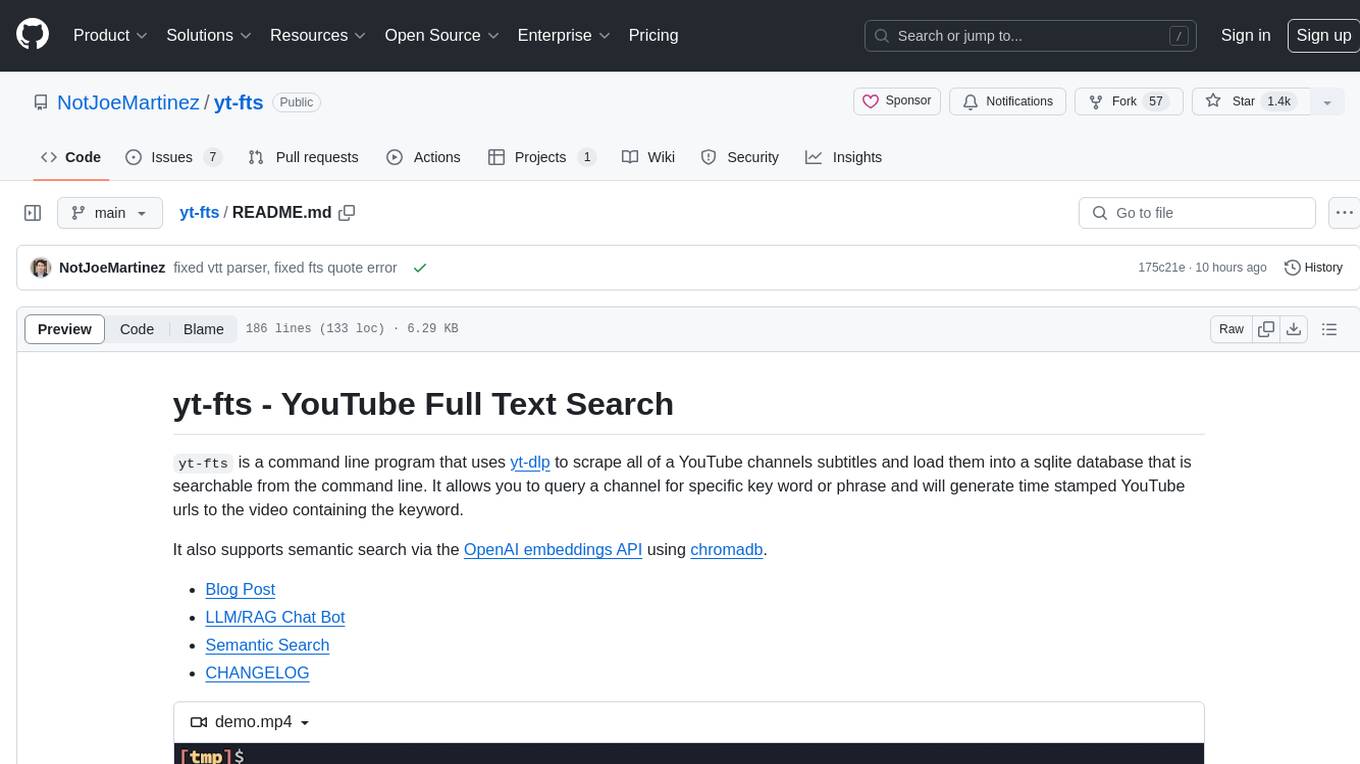

yt-fts

YouTube Full Text Search - Search all of a YouTube channel from the command line

Stars: 1552

yt-fts is a command line program that uses yt-dlp to scrape all of a YouTube channel's subtitles and load them into a sqlite database for full text search. It allows users to query a channel for specific keywords or phrases and generates timestamped YouTube URLs to the videos containing the keyword. Additionally, it supports semantic search via the OpenAI embeddings API using chromadb.

README:

yt-fts is a command line program that uses yt-dlp to scrape all of a YouTube channel's subtitles and load them into a sqlite database that is searchable from the command line. It allows you to query a channel for a specific keyword or phrase and will generate timestamped YouTube URLs to the videos containing the keyword.

It also supports semantic search via the OpenAI embeddings API using chromadb.
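As a rough illustration of what a timestamped URL is, the sketch below turns a subtitle start time into a link that opens the video at that moment. The `youtu.be/<id>?t=<seconds>` form is a standard YouTube link format, but the exact URLs yt-fts prints are not shown here, and the function names and the `HH:MM:SS.mmm` input format are assumptions made for this example.

```python
def timestamp_to_seconds(timestamp: str) -> int:
    """Convert an 'HH:MM:SS.mmm' subtitle timestamp to whole seconds."""
    hours, minutes, seconds = timestamp.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + int(float(seconds))


def timestamped_url(video_id: str, start: str) -> str:
    """Build a link that starts playback at the given subtitle timestamp."""
    # Hypothetical helper: yt-fts may format its URLs differently.
    return f"https://youtu.be/{video_id}?t={timestamp_to_seconds(start)}"


print(timestamped_url("VIDEO_ID", "00:01:23.450"))
```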

https://github.com/NotJoeMartinez/yt-fts/assets/39905973/6ffd8962-d060-490f-9e73-9ab179402f14

pip

pip install yt-fts

Download subtitles for a channel.

Takes a channel url or id as an argument. Specify the number of jobs to parallelize the download with the --number-of-jobs option.

yt-fts download --number-of-jobs 5 "https://www.youtube.com/@3blue1brown"

List saved channels.

The (ss) next to the channel name indicates that the channel has semantic search enabled.

yt-fts list

┏━━━━┳━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━┓
┃ ID ┃ Name                  ┃ Count ┃ Channel ID               ┃
┡━━━━╇━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━┩
│ 1  │ ChessPage1 (ss)       │ 19    │ UCO2QPmnJFjdvJ6ch-pe27dQ │
│ 2  │ 3Blue1Brown           │ 127   │ UCYO_jab_esuFRV4b17AJtAw │
│ 3  │ george hotz archive   │ 410   │ UCwgKmJM4ZJQRJ-U5NjvR2dg │
│ 4  │ The Tim Dillon Show   │ 288   │ UC4woSp8ITBoYDmjkukhEhxg │
│ 5  │ Academy of Ideas (ss) │ 190   │ UCiRiQGCHGjDLT9FQXFW0I3A │
└────┴───────────────────────┴───────┴──────────────────────────┘

Full text search for a string in saved channels.

- The search string does not have to be a word-for-word match.

- Search strings are limited to 40 characters.

# search in all channels

yt-fts search "[search query]"

# search in channel

yt-fts search "[search query]" --channel "[channel name or id]"

# search in specific video

yt-fts search "[search query]" --video "[video id]"

# limit results

yt-fts search "[search query]" --limit "[number of results]" --channel "[channel name or id]"

# export results to csv

yt-fts search "[search query]" --export --channel "[channel name or id]"

Advanced Search Syntax:

The search string supports the sqlite Enhanced Query Syntax, which includes features such as prefix queries that let you match parts of a word.
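To get a feel for how AND, OR, and prefix queries behave in SQLite itself, here is a minimal sketch using Python's built-in sqlite3 module. It assumes an FTS5 virtual table; the table name, column name, and sample rows are invented for illustration and are not yt-fts's actual schema. The guard covers SQLite builds that lack FTS5.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# FTS5 is an optional SQLite extension, so probe for it first.
try:
    conn.execute("CREATE VIRTUAL TABLE subtitles USING fts5(text)")
    HAS_FTS5 = True
except sqlite3.OperationalError:
    HAS_FTS5 = False


def search(query: str) -> list:
    """Return the rowids of subtitle lines matching a full text query."""
    rows = conn.execute(
        "SELECT rowid FROM subtitles WHERE subtitles MATCH ? ORDER BY rowid",
        (query,),
    )
    return [row[0] for row in rows]


if HAS_FTS5:
    # Hypothetical subtitle lines; rowids are assigned 1..4 in order.
    conn.executemany(
        "INSERT INTO subtitles(text) VALUES (?)",
        [
            ("he pulled a knife in Malibu",),
            ("the knife was dull",),
            ("Malibu real estate is expensive",),
            ("a really sharp knife from Malibu",),
        ],
    )
    print(search("knife AND Malibu"))  # both terms must appear
    print(search("knife OR Malibu"))   # either term may appear
    print(search("rea* kni* Mali*"))   # every prefix must match some word
```

Terms separated by spaces are combined with an implicit AND, which is why the prefix query only matches lines containing a word for each of the three prefixes.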

# AND search

yt-fts search "knife AND Malibu" --channel "The Tim Dillon Show"

# OR search

yt-fts search "knife OR Malibu" --channel "The Tim Dillon Show"

# wildcards

yt-fts search "rea* kni* Mali*" --channel "The Tim Dillon Show"

You can enable semantic search for a channel by using the embeddings command. This requires an OpenAI API key set in the environment variable OPENAI_API_KEY, or you can pass the key with the --openai-api-key flag.

Fetches OpenAI embeddings for the specified channel.

# make sure the OpenAI key is set

# export OPENAI_API_KEY="[yourOpenAIKey]"

yt-fts embeddings --channel "3Blue1Brown"

# specify the time interval, in seconds, to split the text by (default is 30)

# the larger the interval, the more accurate the llm response,

# but semantic search will return more text for you to read.

yt-fts embeddings --interval 60 --channel "3Blue1Brown"

After the embeddings are saved, you will see (ss) next to the channel name when you list channels, and you will be able to use the vsearch command for that channel.
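The effect of the interval option can be pictured with a small sketch that groups subtitle segments into fixed time windows before they would be embedded. This is only an assumption about the general approach, not yt-fts's actual implementation; the function name and sample segments are hypothetical.

```python
def chunk_by_interval(segments, interval=30):
    """Group (start_seconds, text) pairs into windows of `interval` seconds."""
    chunks = {}
    for start, text in segments:
        # Round the start time down to the beginning of its window.
        window_start = int(start // interval) * interval
        chunks.setdefault(window_start, []).append(text)
    return [(start, " ".join(texts)) for start, texts in sorted(chunks.items())]


# Hypothetical subtitle segments: (start time in seconds, text).
segments = [
    (0.0, "welcome back"),
    (12.5, "today we cover"),
    (31.0, "gradient descent"),
    (65.2, "and backpropagation"),
]

print(chunk_by_interval(segments, 30))  # three shorter chunks
print(chunk_by_interval(segments, 60))  # two longer chunks
```

A larger interval yields fewer, longer chunks, which matches the trade-off described in the comments: more context per result, but more text to read.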

Starts an interactive chat session with the gpt-4o OpenAI model, using the semantic search results of your initial prompt as the context to answer questions. If it can't answer your question, it has a mechanism to update the context by running a targeted query based on the conversation. The channel must have semantic search enabled.

yt-fts llm --channel "3Blue1Brown" "How does back propagation work?"

vsearch stands for "vector search". This requires that you enable semantic search for a channel with embeddings. It has the same options as search, but output is sorted by similarity to the search string and the default return limit is 10.
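Sorting by similarity can be sketched in a few lines of plain Python. The example below ranks hand-made toy vectors by cosine similarity and keeps the top results; in yt-fts the vectors come from the OpenAI embeddings API and are stored in chromadb, so the metric, the vectors, and the function names here are assumptions for illustration only.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def vector_search(query_vec, docs, limit=10):
    """Return up to `limit` (score, text) pairs, most similar first."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:limit]


# Toy 3-dimensional "embeddings"; real embeddings have many more dimensions.
docs = [
    ("life in the big city", [0.9, 0.1, 0.0]),
    ("baking sourdough bread", [0.0, 0.2, 0.9]),
    ("commuting downtown", [0.7, 0.6, 0.1]),
]
query = [1.0, 0.2, 0.0]

for score, text in vector_search(query, docs):
    print(f"{score:.3f}  {text}")
```

Unlike full text search, a result can rank highly without sharing any words with the query, as long as its vector points in a similar direction.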

# search by channel name

yt-fts vsearch "[search query]" --channel "[channel name or id]"

# search in specific video

yt-fts vsearch "[search query]" --video "[video id]"

# limit results

yt-fts vsearch "[search query]" --limit "[number of results]" --channel "[channel name or id]"

# export results to csv

yt-fts vsearch "[search query]" --export --channel "[channel name or id]"

Export search results:

For both the search and vsearch commands, you can export the results with the --export flag, which saves them to a csv file in the current directory.

yt-fts search "life in the big city" --export

yt-fts vsearch "existing in large metropolitan center" --export

Delete a channel:

You can delete a channel with the delete command.

yt-fts delete --channel "3Blue1Brown"

Update a channel:

The update command currently only works for full text search and will not update the semantic search embeddings.

yt-fts update --channel "3Blue1Brown"

Export all of a channel's transcript:

This command will create a directory in the current working directory, named with the YouTube channel ID of the specified channel.

# export to vtt or txt

yt-fts export --channel "[id/name]" --format "[vtt/txt]"

Alternative AI tools for yt-fts

Similar Open Source Tools


gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

rlama

RLAMA is a powerful AI-driven question-answering tool that seamlessly integrates with local Ollama models. It enables users to create, manage, and interact with Retrieval-Augmented Generation (RAG) systems tailored to their documentation needs. RLAMA follows a clean architecture pattern with clear separation of concerns, focusing on lightweight and portable RAG capabilities with minimal dependencies. The tool processes documents, generates embeddings, stores RAG systems locally, and provides contextually-informed responses to user queries. Supported document formats include text, code, and various document types, with troubleshooting steps available for common issues like Ollama accessibility, text extraction problems, and relevance of answers.

symbolicai

SymbolicAI is a neuro-symbolic framework that combines classical Python programming with the differentiable, programmable nature of Large Language Models (LLMs). It allows for easy extensibility and customization, enabling users to write their own engines, host local engines, or interface with tools like web search and image generation. The framework introduces two key concepts: 'primitives' and 'contracts'. Primitives include syntactic and semantic Symbol objects, while contracts bring Design by Contract principles to LLMs, ensuring correctness in code design. SymbolicAI also features a configuration management system with priority-based loading for settings. The tool supports various engines for text, speech, and image processing, along with search engine access. Users can install SymbolicAI via pip and set up optional features and dependencies as needed.

code-graph-rag

Graph-Code is an accurate Retrieval-Augmented Generation (RAG) system that analyzes multi-language codebases using Tree-sitter. It builds comprehensive knowledge graphs, enabling natural language querying of codebase structure and relationships, along with editing capabilities. The system supports various languages, uses Tree-sitter for parsing, Memgraph for storage, and AI models for natural language to Cypher translation. It offers features like code snippet retrieval, advanced file editing, shell command execution, interactive code optimization, reference-guided optimization, dependency analysis, and more. The architecture consists of a multi-language parser and an interactive CLI for querying the knowledge graph.

AI-Agent-Starter-Kit

AI Agent Starter Kit is a modern full-stack AI-enabled template using Next.js for frontend and Express.js for backend, with Telegram and OpenAI integrations. It offers AI-assisted development, smart environment variable setup assistance, intelligent error resolution, context-aware code completion, and built-in debugging helpers. The kit provides a structured environment for developers to interact with AI tools seamlessly, enhancing the development process and productivity.

connectonion

ConnectOnion is a simple, elegant open-source framework for production-ready AI agents. It provides a platform for creating and using AI agents with a focus on simplicity and efficiency. The framework allows users to easily add tools, debug agents, make them production-ready, and enable multi-agent capabilities. ConnectOnion offers a simple API, is production-ready with battle-tested models, and is open-source under the MIT license. It features a plugin system for adding reflection and reasoning capabilities, interactive debugging for easy troubleshooting, and no boilerplate code for seamless scaling from prototypes to production systems.

LongRecipe

LongRecipe is a tool designed for efficient long context generalization in large language models. It provides a recipe for extending the context window of language models while maintaining their original capabilities. The tool includes data preprocessing steps, model training stages, and a process for merging fine-tuned models to enhance foundational capabilities. Users can follow the provided commands and scripts to preprocess data, train models in multiple stages, and merge models effectively.

orbiton

Orbiton is a text editor and simple IDE designed with minimal annoyance in mind, not highly configurable to help users stay focused, and supports rapid edit-format-compile cycles. It is suitable for writing git commit messages, editing README.md and TODO.md files, writing Markdown and exporting to HTML or PDF, learning programming languages, editing files within larger projects, solving Advent of Code tasks, and providing a distraction-free environment for writing. The tool offers unique features like smart cursor movement, paste and copy shortcuts, portal for copying lines across files, code building and formatting shortcuts, and more.

paperbanana

PaperBanana is an automated academic illustration tool designed for AI scientists. It implements an agentic framework for generating publication-quality academic diagrams and statistical plots from text descriptions. The tool utilizes a two-phase multi-agent pipeline with iterative refinement, Gemini-based VLM planning, and image generation. It offers a CLI, Python API, and MCP server for IDE integration, along with Claude Code skills for generating diagrams, plots, and evaluating diagrams. PaperBanana is not affiliated with or endorsed by the original authors or Google Research, and it may differ from the original system described in the paper.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

vue-markdown-render

vue-renderer-markdown is a high-performance tool designed for streaming and rendering Markdown content in real-time. It is optimized for handling incomplete or rapidly changing Markdown blocks, making it ideal for scenarios like AI model responses, live content updates, and real-time Markdown rendering. The tool offers features such as ultra-high performance, streaming-first design, Monaco integration, progressive Mermaid rendering, custom components integration, complete Markdown support, real-time updates, TypeScript support, and zero configuration setup. It solves challenges like incomplete syntax blocks, rapid content changes, cursor positioning complexities, and graceful handling of partial tokens with a streaming-optimized architecture.

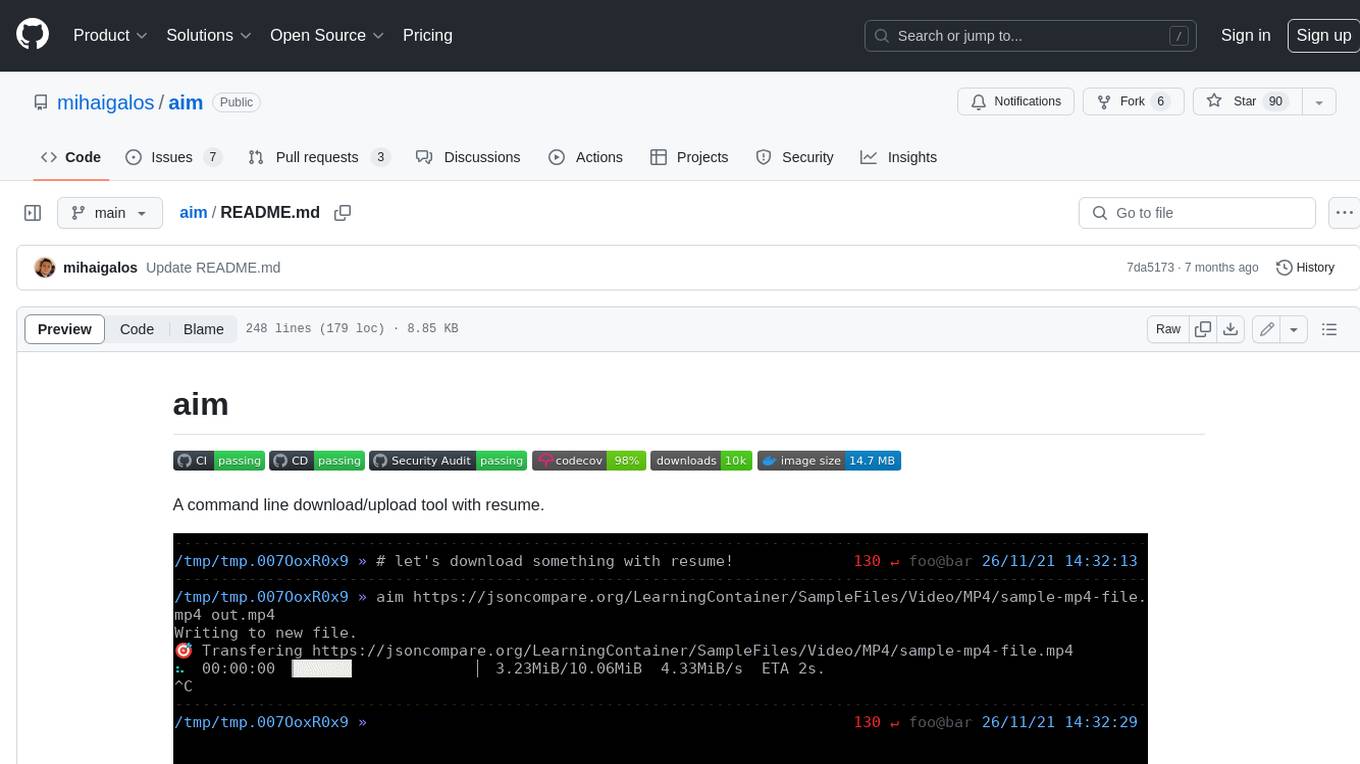

aim

Aim is a command-line tool for downloading and uploading files with resume support. It supports various protocols including HTTP, FTP, SFTP, SSH, and S3. Aim features an interactive mode for easy navigation and selection of files, as well as the ability to share folders over HTTP for easy access from other devices. Additionally, it offers customizable progress indicators and output formats, and can be integrated with other commands through piping. Aim can be installed via pre-built binaries or by compiling from source, and is also available as a Docker image for platform-independent usage.

hud-python

hud-python is a Python library for creating interactive heads-up displays (HUDs) in video games. It provides a simple and flexible way to overlay information on the screen, such as player health, score, and notifications. The library is designed to be easy to use and customizable, allowing game developers to enhance the user experience by adding dynamic elements to their games. With hud-python, developers can create engaging HUDs that improve gameplay and provide important feedback to players.

llm-rankers

llm-rankers is a repository that provides implementations for Pointwise, Listwise, Pairwise, and Setwise Document Ranking using Large Language Models. It includes various methods for reranking documents retrieved by a first-stage retriever, such as BM25. The repository offers examples and code snippets for using LLMs to improve document ranking performance in information retrieval tasks. Additionally, it introduces a new setwise reranker called Rank-R1 with reasoning ability.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

For similar tasks


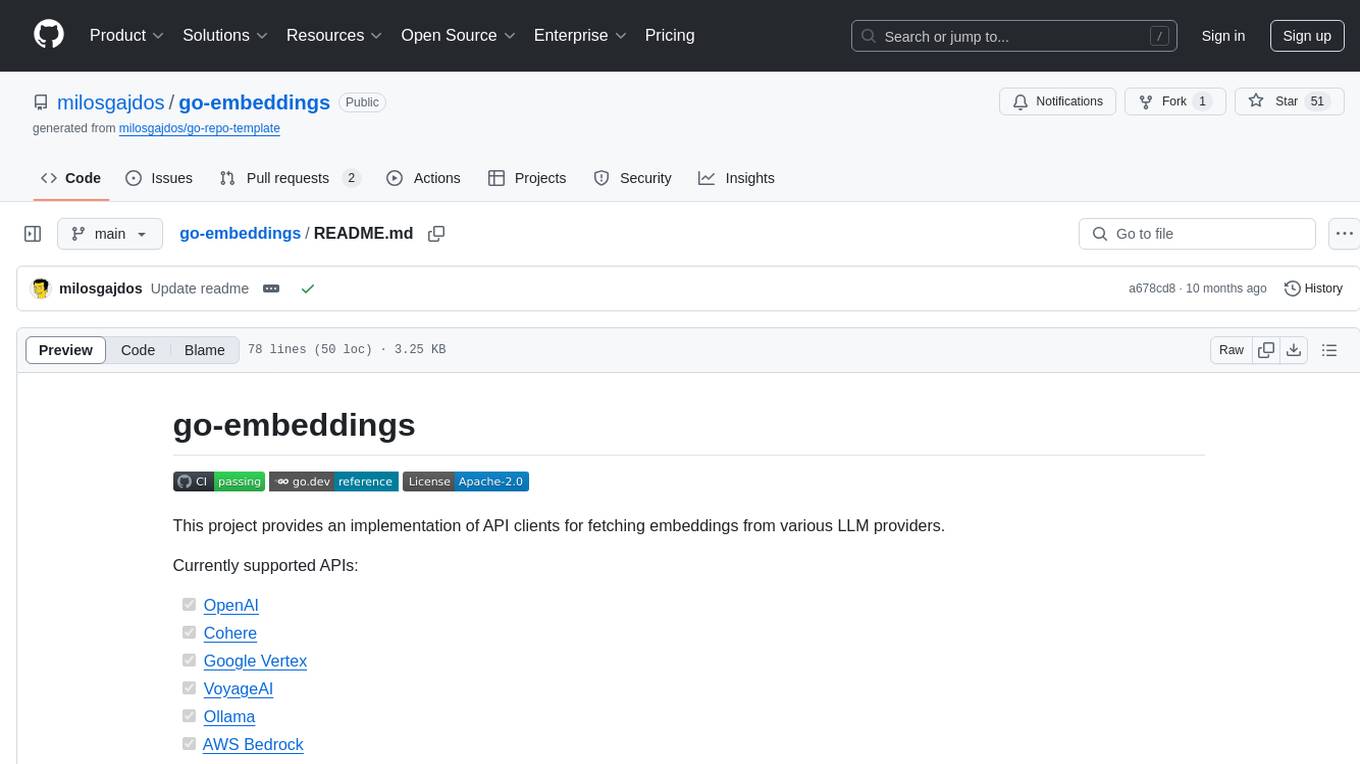

go-embeddings

This project provides API clients for fetching embeddings from various LLM providers. It includes implementations for OpenAI, Cohere, Google Vertex, VoyageAI, Ollama, and AWS Bedrock. Sample programs demonstrate how to use the client packages. The 'document' package offers text splitters inspired by Langchain framework. Environment variables are used to initialize API clients for each provider. Contributions are welcome.

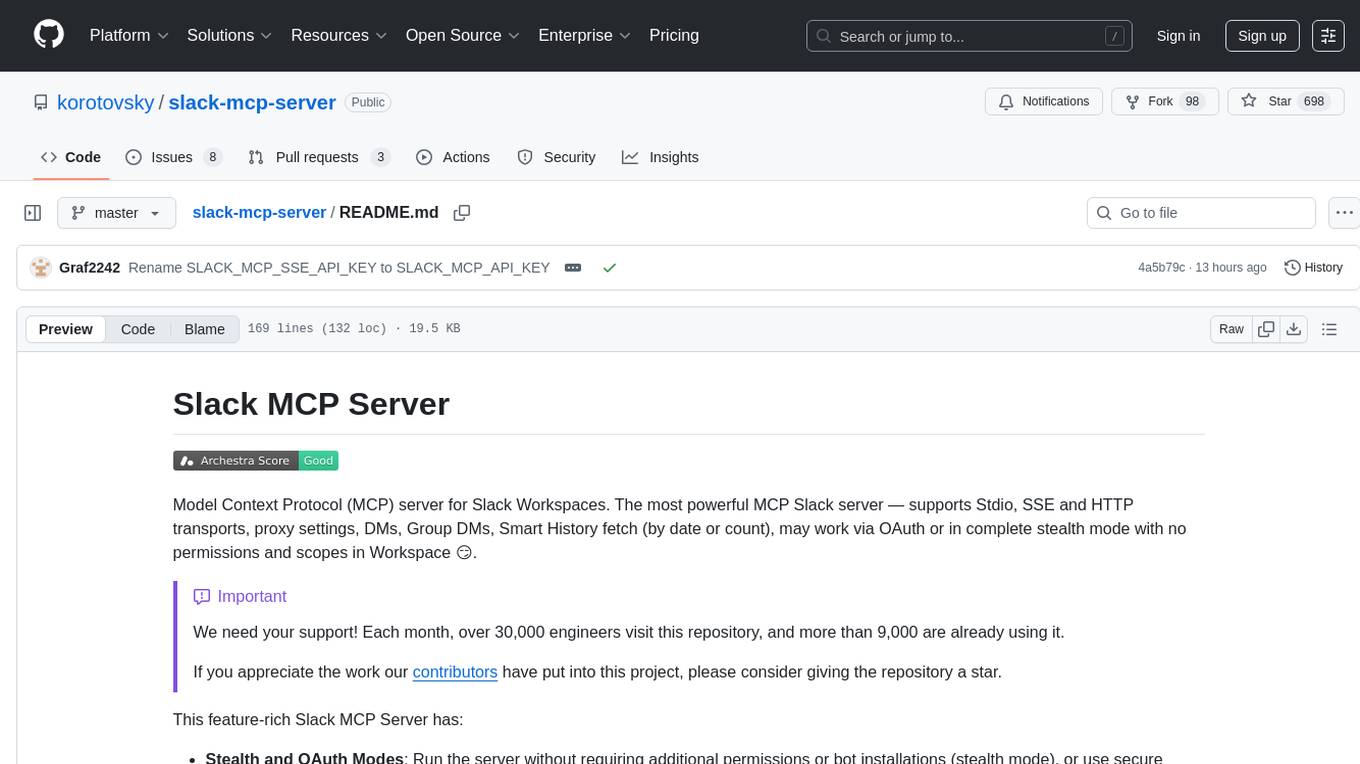

slack-mcp-server

Slack MCP Server is a Model Context Protocol server for Slack Workspaces, offering powerful features like Stealth and OAuth Modes, Enterprise Workspaces Support, Channel and Thread Support, Smart History, Search Messages, Safe Message Posting, DM and Group DM support, Embedded user information, Cache support, and multiple transport options. It provides tools like conversations_history, conversations_replies, conversations_add_message, conversations_search_messages, and channels_list for managing messages, threads, adding messages, searching messages, and listing channels. The server also exposes directory resources for workspace metadata access. The tool is designed to enhance Slack workspace functionality and improve user experience.

MyScaleDB

MyScaleDB is a SQL vector database optimized for AI applications, enabling developers to manage and process massive volumes of data efficiently. It offers fast and powerful vector search, filtered search, and SQL-vector join queries, making it fully SQL-compatible. MyScaleDB provides unmatched performance and scalability by leveraging cutting-edge OLAP database architecture and advanced vector algorithms. It is production-ready for AI applications, supporting structured data, text, vector, JSON, geospatial, and time-series data. MyScale Cloud offers fully-managed MyScaleDB with premium features on billion-scale data, making it cost-effective and simpler to use compared to specialized vector databases. Built on top of ClickHouse, MyScaleDB combines structured and vector search efficiently, ensuring high accuracy and performance in filtered search operations.

redis-vl-python

The Python Redis Vector Library (RedisVL) is a tailor-made client for AI applications leveraging Redis. It enhances applications with Redis' speed, flexibility, and reliability, incorporating capabilities like vector-based semantic search, full-text search, and geo-spatial search. The library bridges the gap between the emerging AI-native developer ecosystem and the capabilities of Redis by providing a lightweight, elegant, and intuitive interface. It abstracts the features of Redis into a grammar that is more aligned to the needs of today's AI/ML Engineers or Data Scientists.

NornicDB

NornicDB is a high-performance graph database designed for AI agents and knowledge systems. It is Neo4j-compatible, GPU-accelerated, and features memory that evolves. The database automatically discovers and manages relationships in the data, allowing meaning to emerge from the knowledge graph. NornicDB is suitable for AI agent memory, knowledge graphs, RAG systems, session context, and research tools. It offers features like intelligent memory, auto-relationships, performance benchmarks, vector search, Heimdall AI assistant, APOC functions, and various Docker images for different platforms. The tool is built with Neo4j Bolt protocol, Cypher query engine, memory decay system, GPU acceleration, vector search, auto-relationship engine, and more.

MaterialSearch

MaterialSearch is a tool for searching local images and videos using natural language. It provides functionalities such as text search for images, image search for images, text search for videos (providing matching video clips), image search for videos (searching for the segment in a video through a screenshot), image-text similarity calculation, and Pexels video search. The tool can be deployed through the source code or Docker image, and it supports GPU acceleration. Users can configure the tool through environment variables or a .env file. The tool is still under development, and configurations may change frequently. Users can report issues or suggest improvements through issues or pull requests.

ai-video-search-engine

AI Video Search Engine (AVSE) is a video search engine powered by the latest tools in AI. It allows users to search for specific answers within millions of videos by indexing video content. The tool extracts video transcription, elements like thumbnail and description, and generates vector embeddings using AI models. Users can search for relevant results based on questions, view timestamped transcripts, and get video summaries. AVSE requires a paid Supabase & Fly.io account for hosting and can handle millions of videos with the current setup.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.