yek

A fast Rust-based tool to serialize text-based files in a repository or directory for LLM consumption

Stars: 2332

Yek is a fast Rust-based tool that reads text-based files in a repository or directory, chunks them, and serializes them for Large Language Model (LLM) consumption. It uses .gitignore rules to skip unwanted files, Git history to infer which files are important, and additional inferred ignore patterns. Yek splits content into chunks based on token count or byte size, supports processing multiple directories, and streams content when output is piped. It is configurable via a yek.yaml file (yek.toml or yek.json also work) and places the most important files at the end of the output.

README:

A fast Rust-based tool to serialize text-based files in a repository or directory for LLM consumption. [1]

By default:

- Uses .gitignore rules to skip unwanted files.

- Uses the Git history to infer what files are more important.

- Infers additional ignore patterns (binary, large, etc.).

- Automatically detects if output is being piped and streams content instead of writing to files.

- Supports processing multiple directories in a single command.

- Supports glob patterns and individual file selection.

- Configurable via a yek.yaml file.

Yek يک means "One" in Farsi/Persian.

Consider having a simple repo like this:

.
├── README.md
├── src
│   ├── main.rs
│   └── utils.rs
└── tests
    └── test.rs

Running yek in this directory will produce a single file and write it to the temp directory with the following content:

>>>> README.md

... content ...

>>>> tests/test.rs

... content ...

>>>> src/utils.rs

... content ...

>>>> src/main.rs

... content ...

[!NOTE] yek will prioritize more important files to come last in the output. This is useful for LLM consumption since LLMs tend to pay more attention to content that appears later in the context.
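To make the ordering concrete, here is a minimal Python sketch (not yek's actual implementation) that sorts files by a priority score and renders them with the default template `>>>> FILE_PATH\nFILE_CONTENT`. The `render` and `fill` helpers and the numeric priorities are hypothetical and exist only for illustration.

```python
import re

DEFAULT_TEMPLATE = ">>>> FILE_PATH\nFILE_CONTENT"


def fill(template, path, content):
    # Substitute both placeholders in a single pass so that file content
    # containing the word FILE_PATH is never rewritten by accident.
    values = {"FILE_PATH": path, "FILE_CONTENT": content}
    return re.sub(r"FILE_PATH|FILE_CONTENT", lambda m: values[m.group(0)], template)


def render(files, template=DEFAULT_TEMPLATE):
    """files: list of (path, content, priority); priorities are made-up numbers."""
    # Sort ascending so the highest-priority file is emitted last.
    ordered = sorted(files, key=lambda f: f[2])
    return "\n".join(fill(template, path, content) for path, content, _ in ordered)


if __name__ == "__main__":
    demo = [
        ("src/main.rs", "fn main() {}", 30),
        ("README.md", "# demo", 0),
        ("src/utils.rs", "pub fn helper() {}", 20),
        ("tests/test.rs", "#[test] fn ok() {}", 10),
    ]
    print(render(demo))
```

With these illustrative scores the output lists README.md first and src/main.rs last, matching the order shown above.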

Choose the installation method for your platform:

curl -fsSL https://bodo.run/yek.sh | bash

For Windows (PowerShell):

irm https://bodo.run/yek.ps1 | iex

Build from Source

git clone https://github.com/bodo-run/yek
cd yek
cargo install --path .

yek has sensible defaults, so you can simply run yek in a directory to serialize the entire repository. It will serialize all files in the repository and write them into a temporary file. The path to the file will be printed to the console.

Process current directory and write to temp directory:

yek

Pipe output to clipboard (macOS):

yek src/ | pbcopy

Cap the max output size to 128K tokens:

yek --tokens 128k

[!NOTE] yek will remove any files that won't fit in the capped context size. It will try to fit in more important files.
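The capping behavior in the note can be pictured as a greedy selection. The Python sketch below is an illustration under stated assumptions, not yek's real algorithm: `count_tokens` is a stand-in that counts whitespace-separated words, a trailing "k" is assumed to mean 1000, and the priorities are hypothetical.

```python
def parse_count(text):
    """Parse a budget such as "128k" or "100" (assumes k = 1000)."""
    text = text.strip().lower()
    if text.endswith("k"):
        return int(float(text[:-1]) * 1000)
    return int(text)


def count_tokens(content):
    # Stand-in tokenizer: whitespace-separated words.
    # A real tokenizer would give different counts.
    return len(content.split())


def fit_to_budget(files, budget):
    """files: list of (path, content, priority). Returns kept files, least important first."""
    kept, used = [], 0
    # Visit the most important files first so they claim the budget before the rest.
    for path, content, priority in sorted(files, key=lambda f: f[2], reverse=True):
        cost = count_tokens(content)
        if used + cost <= budget:
            kept.append((path, content, priority))
            used += cost
    # Emit in ascending priority so important files still come last.
    return sorted(kept, key=lambda f: f[2])
```

For example, with a budget of 100 and files costing 50, 60, and 30 tokens at priorities 1, 5, and 3, the sketch keeps the 60-token and 30-token files and drops the least important one.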

yek --max-size 100KB --output-dir /tmp/yek src/

Process multiple directories:

yek src/ tests/

Process multiple files:

yek file1.txt file2.txt file3.txt

Use glob patterns:

yek "src/**/*.ts"

yek "src/main.rs" "tests/*.rs" "docs/README.md"

[!NOTE] When using glob patterns, make sure to quote them to prevent shell expansion.
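Quoting matters because the pattern then reaches the tool unchanged and the tool, not the shell, decides what `**` means. As a rough illustration of that expansion step, the Python sketch below resolves quoted patterns against a root directory using the standard `glob` module. The `expand` helper is hypothetical and yek's own matching rules may differ.

```python
import glob
import os


def expand(patterns, root):
    """Expand glob patterns relative to root; return unique file paths in match order."""
    matches = []
    for pattern in patterns:
        # recursive=True lets "**" match zero or more directory levels.
        for hit in sorted(glob.glob(os.path.join(root, pattern), recursive=True)):
            rel = os.path.relpath(hit, root).replace(os.sep, "/")
            if os.path.isfile(hit) and rel not in matches:
                matches.append(rel)
    return matches
```

Given a tree containing src/c.ts and src/a/b.ts, `expand(["src/**/*.ts"], root)` returns both files, whereas a shell without recursive globbing enabled would typically expand the unquoted pattern to fewer paths before the tool ever sees it.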

yek --help

Usage: yek [OPTIONS] [input-paths]...

Arguments:
  [input-paths]...
          Input files and/or directories to process

Options:
      --no-config
          Do not use a config file
      --config-file <CONFIG_FILE>
          Path to the config file
  -V, --version
          Print version of yek
      --max-size <MAX_SIZE>
          Max size per chunk. e.g. "10MB" or "128K" or when using token counting mode, "100" or "128K" [default: 10MB]
      --tokens <TOKENS>
          Use token mode instead of byte mode
      --json
          Enable JSON output
      --debug
          Enable debug output
      --line-numbers
          Include line numbers in output
      --output-dir [<OUTPUT_DIR>]
          Output directory. If none is provided & stdout is a TTY, we pick a temp dir
      --output-name [<OUTPUT_NAME>]
          Output filename. If provided, write output to this file in current directory
      --output-template [<OUTPUT_TEMPLATE>]
          Output template. Defaults to ">>>> FILE_PATH\nFILE_CONTENT"
      --ignore-patterns <IGNORE_PATTERNS>...
          Ignore patterns
      --unignore-patterns <UNIGNORE_PATTERNS>...
          Unignore patterns. Yek has some built-in ignore patterns, but you can override them here.
  -t, --tree-header
          Include directory tree header in output (incompatible with JSON output)
      --tree-only
          Show only the directory tree (no file contents, incompatible with JSON output)
  -h, --help
          Print help

- [input-paths]... - Files or directories to process. Supports glob patterns (quote them to prevent shell expansion)

- --no-config - Skip loading any configuration file

- --config-file <CONFIG_FILE> - Use a specific configuration file path instead of searching for default config files

- -V, --version - Print version information and exit

- --max-size <MAX_SIZE> - Maximum size limit per output (e.g., "10MB", "128K"). Used in byte mode

- --tokens <TOKENS> - Use token-based counting instead of bytes (e.g., "128k", "100"). Enables token mode

- --json - Output results in JSON format instead of text

- --debug - Enable debug logging for troubleshooting

- --line-numbers - Include line numbers in the output for each file

- --output-dir [<OUTPUT_DIR>] - Directory to write output files. If not specified and not streaming, uses temp directory

- --output-name [<OUTPUT_NAME>] - Specific filename for output. If specified, writes to current directory with this name

- --output-template [<OUTPUT_TEMPLATE>] - Template for formatting output. Use FILE_PATH and FILE_CONTENT placeholders

- --ignore-patterns <IGNORE_PATTERNS>... - Additional patterns to ignore (extends .gitignore and defaults)

- --unignore-patterns <UNIGNORE_PATTERNS>... - Patterns to override built-in ignore rules

- -t, --tree-header - Include a directory tree at the beginning of output (incompatible with JSON)

- --tree-only - Show only the directory tree structure without file contents (incompatible with JSON)
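The interplay between piping, --output-dir, and --output-name can be summarized as a small decision function. The Python sketch below is one plausible reading of the option descriptions, not a transcription of yek's code. The precedence between an explicit output name, an explicit output directory, and a piped stdout is an assumption, and `choose_destination` is a hypothetical name.

```python
import os
import tempfile


def choose_destination(stdout_is_tty, output_dir=None, output_name=None):
    """Return ("stdout", None) when streaming, else ("file", directory_or_path)."""
    if output_name is not None:
        # Assumed: an explicit filename is written into the current directory.
        return ("file", os.path.join(os.getcwd(), output_name))
    if output_dir is not None:
        # Assumed: an explicit directory wins over TTY detection.
        return ("file", output_dir)
    if stdout_is_tty:
        # No destination given and output goes to a terminal: use a temp dir.
        return ("file", tempfile.gettempdir())
    # Output is piped or redirected: stream the content.
    return ("stdout", None)
```

A caller would typically pass `sys.stdout.isatty()` as the first argument, so `yek src/ | pbcopy` corresponds to `choose_destination(False)` and results in streaming.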

You can place a file called yek.yaml at your project root or pass a custom path via --config-file. The configuration file allows you to:

- Add custom ignore patterns

- Define file priority rules for processing order

- Add additional binary file extensions to ignore (extends the built-in list)

- Configure Git-based priority boost

- Define output directory and output filename

- Define output template and other output options
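As a rough picture of how priority rules and the Git boost might combine, the Python sketch below scores a path against (score, regex pattern) pairs and adds a capped boost. It is a sketch under assumptions rather than yek's implementation: summing the scores of all matching rules is an assumed policy, and `git_boost` is a hypothetical value standing in for whatever yek derives from commit history.

```python
import re

# Illustrative rules in the (score, pattern) shape used by priority_rules.
PRIORITY_RULES = [
    (100, r"^src/lib/"),
    (90, r"^src/"),
    (80, r"^docs/"),
]


def rule_score(path, rules=PRIORITY_RULES):
    # Assumption: scores of all matching rules are added together.
    return sum(score for score, pattern in rules if re.search(pattern, path))


def total_priority(path, git_boost, git_boost_max=50, rules=PRIORITY_RULES):
    # git_boost is a hypothetical history-based value; it is capped at git_boost_max.
    return rule_score(path, rules) + min(git_boost, git_boost_max)
```

Under these assumptions a file in src/lib/ matches two rules and scores 190 before any boost, while a file that matches no rule relies on the Git boost alone.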

Most CLI options can be configured in the config file. The following options can be set:

File Processing:

- max_size - Size limit (same as --max-size)

- tokens - Token count limit (same as --tokens)

- ignore_patterns - Additional ignore patterns (same as --ignore-patterns)

- unignore_patterns - Override built-in ignores (same as --unignore-patterns)

Output Configuration:

- json - Enable JSON output (same as --json)

- debug - Enable debug mode (same as --debug)

- line_numbers - Include line numbers (same as --line-numbers)

- output_dir - Output directory (same as --output-dir)

- output_name - Output filename (same as --output-name)

- output_template - Output template (same as --output-template)

- tree_header - Include directory tree header (same as --tree-header)

- tree_only - Show only directory tree (same as --tree-only)

Config-only Options:

- priority_rules - File priority rules (config file only)

- binary_extensions - Additional binary file extensions (config file only)

- git_boost_max - Maximum Git-based priority boost (config file only)

[!NOTE] Some CLI options like --no-config, --config-file, and --version are CLI-only and cannot be set in config files.

You can also use yek.toml or yek.json instead of yek.yaml.

Configuration is optional. If you want it, place a yek.yaml file at the root of your project, for example:

# Add patterns to ignore (in addition to .gitignore)
ignore_patterns:
  - "ai-prompts/**"
  - "__generated__/**"

# Configure Git-based priority boost (optional)
git_boost_max: 50 # Maximum score boost based on Git history (default: 100)

# Define priority rules for processing order
# Higher scores are processed first
priority_rules:
  - score: 100
    pattern: "^src/lib/"
  - score: 90
    pattern: "^src/"
  - score: 80
    pattern: "^docs/"

# Add additional binary file extensions to ignore
# These extend the built-in list (.jpg, .png, .exe, etc.)
binary_extensions:
  - ".blend" # Blender files
  - ".fbx" # 3D model files
  - ".max" # 3ds Max files
  - ".psd" # Photoshop files

# Output configuration
max_size: "128K" # Size limit (can also use tokens: "100k")
json: false # Enable JSON output
debug: false # Enable debug logging
line_numbers: false # Include line numbers in output
tree_header: false # Include directory tree at start

# Define output directory
output_dir: /tmp/yek

# Define output filename (writes to current directory with this name)
output_name: yek-output.txt

# Define output template.
# FILE_PATH and FILE_CONTENT are expected to be present in the template.
output_template: "FILE_PATH\n\nFILE_CONTENT"

yek is fast. It's written in Rust and does many things in parallel to speed up processing.

Here is a benchmark comparing it to Repomix serializing the Next.js project:

time yek

Executed in    5.19 secs    fish           external
   usr time    2.85 secs   54.00 micros    2.85 secs
   sys time    6.31 secs  629.00 micros    6.31 secs

time repomix

Executed in   22.24 mins    fish           external
   usr time   21.99 mins    0.18 millis   21.99 mins
   sys time    0.23 mins    1.72 millis    0.23 mins

By wall-clock time, yek is roughly 250x faster than repomix in this run (22.24 mins vs. 5.19 secs).

See proposed features. I am open to accepting new feature requests. Please write a detailed proposal to discuss new features.

- Repomix: A tool to serialize a repository into a single file in a similar way to yek.

- Aider: A full IDE-like experience for coding using AI

[1] yek is not "blazingly" fast. It's just fast, as fast as your computer can be.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for yek

Similar Open Source Tools

yek

Yek is a fast Rust-based tool designed to read text-based files in a repository or directory, chunk them, and serialize them for Large Language Models (LLM) consumption. It utilizes .gitignore rules to skip unwanted files, Git history to infer important files, and additional ignore patterns. Yek splits content into chunks based on token count or byte size, supports processing multiple directories, and can stream content when output is piped. It is configurable via a 'yek.toml' file and prioritizes important files at the end of the output.

sycamore

Sycamore is a conversational search and analytics platform for complex unstructured data, such as documents, presentations, transcripts, embedded tables, and internal knowledge repositories. It retrieves and synthesizes high-quality answers through bringing AI to data preparation, indexing, and retrieval. Sycamore makes it easy to prepare unstructured data for search and analytics, providing a toolkit for data cleaning, information extraction, enrichment, summarization, and generation of vector embeddings that encapsulate the semantics of data. Sycamore uses your choice of generative AI models to make these operations simple and effective, and it enables quick experimentation and iteration. Additionally, Sycamore uses OpenSearch for indexing, enabling hybrid (vector + keyword) search, retrieval-augmented generation (RAG) pipelining, filtering, analytical functions, conversational memory, and other features to improve information retrieval.

embedJs

EmbedJs is a NodeJS framework that simplifies RAG application development by efficiently processing unstructured data. It segments data, creates relevant embeddings, and stores them in a vector database for quick retrieval.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

oio-sds

OpenIO SDS is a software solution for object storage, targeting very large-scale unstructured data volumes.

public

This public repository contains API, tools, and packages for Datagrok, a web-based data analytics platform. It offers support for scientific domains, applications, connectors to web services, visualizations, file importing, scientific methods in R, Python, or Julia, file metadata extractors, custom predictive models, platform enhancements, and more. The open-source packages are free to use, with restrictions on server computational capacities for the public environment. Academic institutions can use Datagrok for research and education, benefiting from reproducible and scalable computations and data augmentation capabilities. Developers can contribute by creating visualizations, scientific methods, file editors, connectors to web services, and more.

llm-search

pyLLMSearch is an advanced RAG system that offers a convenient question-answering system with a simple YAML-based configuration. It enables interaction with multiple collections of local documents, with improvements in document parsing, hybrid search, chat history, deep linking, re-ranking, customizable embeddings, and more. The package is designed to work with custom Large Language Models (LLMs) from OpenAI or installed locally. It supports various document formats, incremental embedding updates, dense and sparse embeddings, multiple embedding models, 'Retrieve and Re-rank' strategy, HyDE (Hypothetical Document Embeddings), multi-querying, chat history, and interaction with embedded documents using different models. It also offers simple CLI and web interfaces, deep linking, offline response saving, and an experimental API.

doris

Doris is a lightweight and user-friendly data visualization tool designed for quick and easy exploration of datasets. It provides a simple interface for users to upload their data and generate interactive visualizations without the need for coding. With Doris, users can easily create charts, graphs, and dashboards to analyze and present their data in a visually appealing way. The tool supports various data formats and offers customization options to tailor visualizations to specific needs. Whether you are a data analyst, researcher, or student, Doris simplifies the process of data exploration and presentation.

document-ai-samples

The Google Cloud Document AI Samples repository contains code samples and Community Samples demonstrating how to analyze, classify, and search documents using Google Cloud Document AI. It includes various projects showcasing different functionalities such as integrating with Google Drive, processing documents using Python, content moderation with Dialogflow CX, fraud detection, language extraction, paper summarization, tax processing pipeline, and more. The repository also provides access to test document files stored in a publicly-accessible Google Cloud Storage Bucket. Additionally, there are codelabs available for optical character recognition (OCR), form parsing, specialized processors, and managing Document AI processors. Community samples, like the PDF Annotator Sample, are also included. Contributions are welcome, and users can seek help or report issues through the repository's issues page. Please note that this repository is not an officially supported Google product and is intended for demonstrative purposes only.

RAGFoundry

RAG Foundry is a library designed to enhance Large Language Models (LLMs) by fine-tuning models on RAG-augmented datasets. It helps create training data, train models using parameter-efficient finetuning (PEFT), and measure performance using RAG-specific metrics. The library is modular, customizable using configuration files, and facilitates prototyping with various RAG settings and configurations for tasks like data processing, retrieval, training, inference, and evaluation.

ShadowCrawl

ShadowCrawl is a web scraping tool designed to extract data from websites. It provides a user-friendly interface to easily configure scraping tasks and obtain structured data. With ShadowCrawl, users can automate the process of collecting information from various websites without the need for manual intervention. The tool supports various data formats and allows users to schedule scraping tasks for regular updates. Whether you need to gather pricing information, monitor competitor websites, or extract contact details, ShadowCrawl offers a versatile solution for web data extraction.

dagger

Dagger is an open-source runtime for composable workflows, ideal for systems requiring repeatability, modularity, observability, and cross-platform support. It features a reproducible execution engine, a universal type system, a powerful data layer, native SDKs for multiple languages, an open ecosystem, an interactive command-line environment, batteries-included observability, and seamless integration with various platforms and frameworks. It also offers LLM augmentation for connecting to LLM endpoints. Dagger is suitable for AI agents and CI/CD workflows.

cube

Cube is a semantic layer for building data applications, helping data engineers and application developers access data from modern data stores, organize it into consistent definitions, and deliver it to every application. It works with SQL-enabled data sources, providing sub-second latency and high concurrency for API requests. Cube addresses SQL code organization, performance, and access control issues in data applications, enabling efficient data modeling, access control, and performance optimizations for various tools like embedded analytics, dashboarding, reporting, and data notebooks.

n8n-docs

n8n is an extendable workflow automation tool that enables you to connect anything to everything. It is open-source and can be self-hosted or used as a service. n8n provides a visual interface for creating workflows, which can be used to automate tasks such as data integration, data transformation, and data analysis. n8n also includes a library of pre-built nodes that can be used to connect to a variety of applications and services. This makes it easy to create complex workflows without having to write any code.

unified-cache-management

Unified Cache Manager (UCM) is a tool designed to persist the LLM KVCache and replace redundant computations through various retrieval mechanisms. It supports prefix caching and offers training-free sparse attention retrieval methods, enhancing performance for long sequence inference tasks. UCM also provides a PD disaggregation solution based on a storage-compute separation architecture, enabling easier management of heterogeneous computing resources. When integrated with vLLM, UCM significantly reduces inference latency in scenarios like multi-turn dialogue and long-context reasoning tasks.

For similar tasks

yek

Yek is a fast Rust-based tool designed to read text-based files in a repository or directory, chunk them, and serialize them for Large Language Models (LLM) consumption. It utilizes .gitignore rules to skip unwanted files, Git history to infer important files, and additional ignore patterns. Yek splits content into chunks based on token count or byte size, supports processing multiple directories, and can stream content when output is piped. It is configurable via a 'yek.toml' file and prioritizes important files at the end of the output.

emigo

Emigo is an AI-powered development tool for Emacs that integrates large language models to interact with projects, read files, write code, execute commands, and more. It acts as an agentic AI assistant, leveraging tool use to enhance development workflows within Emacs. Emigo is actively developed, offering features like agentic tool use, Emacs integration, flexible LLM support, and context-aware interactions. Users can install Emigo with Python dependencies and configure it within Emacs for seamless integration. The tool's core strength lies in its agentic tool use, where the AI analyzes requests, selects appropriate tools, executes actions, and provides feedback, enabling users to accomplish complex tasks efficiently.

Code

A3S Code is an embeddable AI coding agent framework in Rust that allows users to build agents capable of reading, writing, and executing code with tool access, planning, and safety controls. It is production-ready with features like permission system, HITL confirmation, skill-based tool restrictions, and error recovery. The framework is extensible with 19 trait-based extension points and supports lane-based priority queue for scalable multi-machine task distribution.

atomic_agents

Atomic Agents is a modular and extensible framework designed for creating powerful applications. It follows the principles of Atomic Design, emphasizing small and single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined to build AI applications. It depends on the Instructor package and supports various APIs like OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents is suitable for developers looking to create AI agents with a focus on modularity and flexibility.

atomic-agents

The Atomic Agents framework is a modular and extensible tool designed for creating powerful applications. It leverages Pydantic for data validation and serialization. The framework follows the principles of Atomic Design, providing small and single-purpose components that can be combined. It integrates with Instructor for AI agent architecture and supports various APIs like Cohere, Anthropic, and Gemini. The tool includes documentation, examples, and testing features to ensure smooth development and usage.

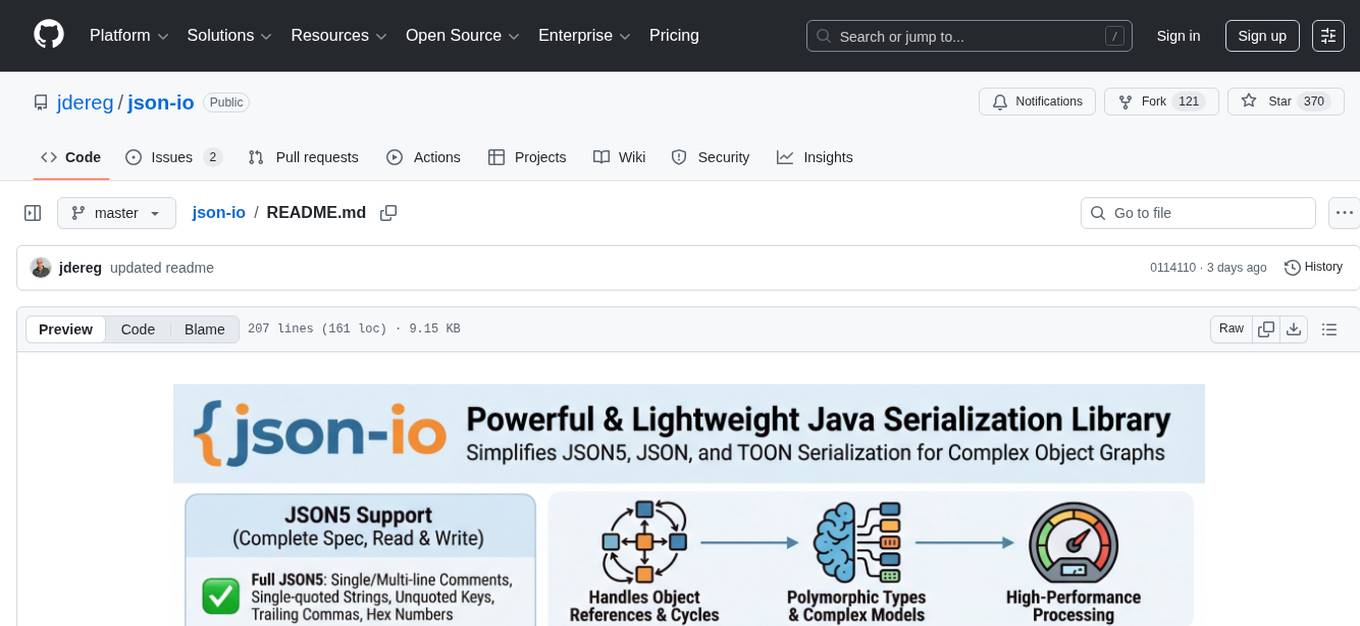

json-io

json-io is a powerful and lightweight Java library that simplifies JSON5, JSON, and TOON serialization and deserialization while handling complex object graphs with ease. It preserves object references, handles polymorphic types, and maintains cyclic relationships in data structures. It offers full JSON5 support, TOON read/write capabilities, and is compatible with JDK 1.8 through JDK 24. The library is built with a focus on correctness over speed, providing extensive configuration options and two modes for data representation. json-io is designed for developers who require advanced serialization features and support for various Java types without external dependencies.

worker-vllm

The worker-vLLM repository provides a serverless endpoint for deploying OpenAI-compatible vLLM models with blazing-fast performance. It supports deploying various model architectures, such as Aquila, Baichuan, BLOOM, ChatGLM, Command-R, DBRX, DeciLM, Falcon, Gemma, GPT-2, GPT BigCode, GPT-J, GPT-NeoX, InternLM, Jais, LLaMA, MiniCPM, Mistral, Mixtral, MPT, OLMo, OPT, Orion, Phi, Phi-3, Qwen, Qwen2, Qwen2MoE, StableLM, Starcoder2, Xverse, and Yi. Users can deploy models using pre-built Docker images or build custom images with specified arguments. The repository also supports OpenAI compatibility for chat completions, completions, and models, with customizable input parameters. Users can modify their OpenAI codebase to use the deployed vLLM worker and access a list of available models for deployment.

open-assistant-api

Open Assistant API is an open-source, self-hosted AI intelligent assistant API compatible with the official OpenAI interface. It supports integration with more commercial and private models, R2R RAG engine, internet search, custom functions, built-in tools, code interpreter, multimodal support, LLM support, and message streaming output. Users can deploy the service locally and expand existing features. The API provides user isolation based on tokens for SaaS deployment requirements and allows integration of various tools to enhance its capability to connect with the external world.

For similar jobs

db2rest

DB2Rest is a modern low-code REST DATA API platform that simplifies the development of intelligent applications. It seamlessly integrates existing and new databases with language models (LMs/LLMs) and vector stores, enabling the rapid delivery of context-aware, reasoning applications without vendor lock-in.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

airflow

Apache Airflow (or simply Airflow) is a platform to programmatically author, schedule, and monitor workflows. When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative. Use Airflow to author workflows as directed acyclic graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.

airbyte-platform

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's low-code Connector Development Kit (CDK). Airbyte is used by data engineers and analysts at companies of all sizes to move data for a variety of purposes, including data warehousing, data analysis, and machine learning.

chronon

Chronon is a platform that simplifies and improves ML workflows by providing a central place to define features, ensuring point-in-time correctness for backfills, simplifying orchestration for batch and streaming pipelines, offering easy endpoints for feature fetching, and guaranteeing and measuring consistency. It offers benefits over other approaches by enabling the use of a broad set of data for training, handling large aggregations and other computationally intensive transformations, and abstracting away the infrastructure complexity of data plumbing.