exif-photo-blog

Photo blog, reporting 🤓 EXIF camera details (aperture, shutter speed, ISO) for each image.

Stars: 1656

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

README:

https://github.com/sambecker/exif-photo-blog/assets/169298/4253ea54-558a-4358-8834-89943cfbafb4

- Built-in auth

- Photo upload with EXIF extraction

- Organize photos by tag

- Infinite scroll

- Light/dark mode

- Automatic OG image generation

- CMD-K menu with photo search

- AI-generated text descriptions

- RSS/JSON feeds

- Support for Fujifilm recipes and film simulations

- Click Deploy

- Add required storage (Vercel Postgres + Vercel Blob) as part of template installation

- Configure environment variable for production domain in project settings:

  - NEXT_PUBLIC_DOMAIN (e.g., photos.domain.com; used in absolute URLs and seen in navigation if no explicit nav title is set)

- Generate auth secret and add to environment variables:

  - AUTH_SECRET

- Add admin user to environment variables:

  - ADMIN_EMAIL
  - ADMIN_PASSWORD

- Trigger redeploy

  - Visit project on Vercel, navigate to the "Deployments" tab, click the ••• button next to the most recent deployment, and select "Redeploy"

- Visit /admin

- Sign in with the admin credentials supplied above (ADMIN_EMAIL and ADMIN_PASSWORD)

- Click "Upload Photos"

- Add optional title

- Click "Create"
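Before redeploying, it can help to generate the secret and double-check the variables named in the steps above. The sketch below assumes the app accepts any sufficiently random string as AUTH_SECRET; generateAuthSecret and missingEnv are hypothetical helpers, not part of the template.

```typescript
import { randomBytes } from "node:crypto";

// Hypothetical helper: 32 random bytes, base64-encoded
// (similar in spirit to `openssl rand -base64 32`).
export function generateAuthSecret(): string {
  return randomBytes(32).toString("base64");
}

// Variables the installation steps ask you to set by hand
// (storage variables are added by the template installer).
const REQUIRED_ENV = [
  "NEXT_PUBLIC_DOMAIN",
  "AUTH_SECRET",
  "ADMIN_EMAIL",
  "ADMIN_PASSWORD",
] as const;

// Hypothetical pre-deploy check: returns names that are unset or blank.
export function missingEnv(env: Record<string, string | undefined>): string[] {
  return REQUIRED_ENV.filter((name) => !env[name]?.trim());
}

// Print a fresh secret, then list anything still missing in this shell.
console.log(generateAuthSecret());
console.log(missingEnv(process.env));
```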

If you don't plan to change the code, or don't mind making your updates public, consider forking this repo to easily receive future updates. If you've already set up your project on Vercel, see the FAQ for migration instructions.

- Clone code

- Run pnpm i to install dependencies

- If necessary, install Vercel CLI and authenticate by running vercel login

- Run vercel link to connect CLI to your project

- Run vercel dev to start dev server with Vercel-managed environment variables

See FAQ for limitations of local development

- NEXT_PUBLIC_META_TITLE (seen in search results and browser tab)

- NEXT_PUBLIC_META_DESCRIPTION (seen in search results)

- NEXT_PUBLIC_NAV_TITLE (seen in top-right navigation, defaults to domain when not configured)

- NEXT_PUBLIC_NAV_CAPTION (seen in top-right navigation, beneath title)

- NEXT_PUBLIC_PAGE_ABOUT (seen in grid sidebar; accepts rich formatting tags: <b>, <strong>, <i>, <em>, <u>, <br>)

- NEXT_PUBLIC_DOMAIN_SHARE (seen in share modals where a shorter URL may be desirable)

⚠️ Enabling may result in increased project usage. See FAQ for static optimization troubleshooting hints.

- NEXT_PUBLIC_STATICALLY_OPTIMIZE_PHOTOS = 1 enables static optimization for photo pages (p/[photoId]), i.e., renders pages at build time

- NEXT_PUBLIC_STATICALLY_OPTIMIZE_PHOTO_OG_IMAGES = 1 enables static optimization for OG images, i.e., renders images at build time

- NEXT_PUBLIC_STATICALLY_OPTIMIZE_PHOTO_CATEGORIES = 1 enables static optimization for photo categories (tag/[tag], shot-on/[make]/[model], etc.), i.e., renders pages at build time

- NEXT_PUBLIC_STATICALLY_OPTIMIZE_PHOTO_CATEGORY_OG_IMAGES = 1 enables static optimization for photo category OG images (tag/[tag], shot-on/[make]/[model], etc.), i.e., renders images at build time

- NEXT_PUBLIC_PRESERVE_ORIGINAL_UPLOADS = 1 prevents photo uploads from being compressed before storing

- NEXT_PUBLIC_IMAGE_QUALITY = 1-100 controls the quality of large photos

- NEXT_PUBLIC_BLUR_DISABLED = 1 prevents image blur data from being stored and displayed (potentially useful for limiting Postgres usage)
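The switches above follow two patterns: "= 1" flags and a bounded number. A minimal sketch of reading them, assuming a flag counts as enabled only when its value is exactly "1". The helpers flag and imageQuality are hypothetical, and the fallback quality is a caller-supplied assumption because the template's real default is not stated here.

```typescript
type Env = Record<string, string | undefined>;

// "= 1" switches: only the exact string "1" enables a flag,
// so values like "true" or "yes" are treated as off.
export function flag(env: Env, name: string): boolean {
  return env[name] === "1";
}

// NEXT_PUBLIC_IMAGE_QUALITY: accepts integers 1-100; anything else
// (unset, out of range, non-numeric) falls back to the given default.
export function imageQuality(env: Env, fallback: number): number {
  const raw = env.NEXT_PUBLIC_IMAGE_QUALITY;
  const n = raw === undefined ? NaN : Number(raw);
  return Number.isInteger(n) && n >= 1 && n <= 100 ? n : fallback;
}
```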

To auto-generate text descriptions of photos:

- Set up OpenAI

  - Create OpenAI account and fund it (see FAQ if you're having issues)
  - Set up usage limits to avoid unexpected charges (recommended)
  - Set OPENAI_MODEL to choose a specific model (set to 'compatible' to use gpt-4o)
  - Set OPENAI_BASE_URL to use alternate OpenAI-compatible providers (experimental)

- Generate API key and store in environment variable OPENAI_SECRET_KEY (enable Responses API write access if customizing permissions)

- Add rate limiting (recommended)

- Configure auto-generated fields (optional)

  - Set which text fields auto-generate when uploading a photo by storing a comma-separated list, e.g., AI_TEXT_AUTO_GENERATED_FIELDS = title,semantic
  - Accepted values: all, title (default), caption, tags (default), semantic (default), none
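To make the accepted values concrete, here is a sketch of parsing AI_TEXT_AUTO_GENERATED_FIELDS. The parser name and its edge-case rules are assumptions, not the template's code: "none" wins over "all", unknown entries are ignored, and results come back in a fixed canonical order rather than input order.

```typescript
// Field names accepted besides the "all" and "none" keywords.
const AI_FIELDS = ["title", "caption", "tags", "semantic"] as const;
type AiField = (typeof AI_FIELDS)[number];

// Entries marked "(default)" in the list above.
const DEFAULT_AI_FIELDS: AiField[] = ["title", "tags", "semantic"];

// Hypothetical parser for the comma-separated variable.
export function parseAiFields(raw: string | undefined): AiField[] {
  if (raw === undefined || raw.trim() === "") return [...DEFAULT_AI_FIELDS];
  const entries = raw.split(",").map((s) => s.trim().toLowerCase());
  if (entries.includes("none")) return [];
  if (entries.includes("all")) return [...AI_FIELDS];
  // Keep only recognized field names, in canonical order.
  return AI_FIELDS.filter((field) => entries.includes(field));
}
```

For the example above, parseAiFields("title,semantic") returns ["title", "semantic"], so captions and tags would not be generated on upload.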

To add location meta to entities like albums:

- Set up Google Places API

  - Create Google Cloud project if necessary
  - Select "Create credentials" and choose "API key"
  - Choose "Restrict key" and select "Places API (new)"
  - Store API key in GOOGLE_PLACES_API_KEY

- Add rate limiting (recommended)

Create Upstash Redis store from storage tab of Vercel dashboard and link to your project (if required, add environment variable prefix EXIF) in order to enable rate limiting—no further configuration necessary.

- NEXT_PUBLIC_CATEGORY_VISIBILITY: comma-separated value controlling which photo sets appear in grid sidebar and CMD-K menu, and in what order. For example, you could move cameras above tags, and hide film simulations, by updating to cameras,tags,lenses,recipes. Values left out of the list are hidden.

  - Accepted values: recents (default), years, tags (default), cameras (default), lenses (default), recipes (default), films (default), focal-lengths
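Because this variable controls both visibility and order, a sketch may help. It assumes the "(default)" markers above describe the default set in list order. The parser is hypothetical: it keeps the order you wrote and drops unknown or repeated entries, and anything unlisted is hidden.

```typescript
const CATEGORIES = [
  "recents", "years", "tags", "cameras",
  "lenses", "recipes", "films", "focal-lengths",
] as const;
type Category = (typeof CATEGORIES)[number];

// Values marked "(default)" above, in the listed order.
const DEFAULT_CATEGORIES: Category[] = [
  "recents", "tags", "cameras", "lenses", "recipes", "films",
];

const isCategory = (s: string): s is Category =>
  (CATEGORIES as readonly string[]).includes(s);

// Hypothetical parser: preserves user order, skips unknown values and duplicates.
export function parseCategoryVisibility(raw: string | undefined): Category[] {
  if (!raw?.trim()) return [...DEFAULT_CATEGORIES];
  const result: Category[] = [];
  for (const entry of raw.split(",").map((s) => s.trim())) {
    if (isCategory(entry) && !result.includes(entry)) result.push(entry);
  }
  return result;
}

// The example above returns ["cameras", "tags", "lenses", "recipes"]:
// cameras now precede tags, and films (plus recents) are hidden.
console.log(parseCategoryVisibility("cameras,tags,lenses,recipes"));
```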

- NEXT_PUBLIC_HIDE_CATEGORIES_ON_MOBILE = 1 prevents categories from displaying on mobile grid view

- NEXT_PUBLIC_HIDE_CATEGORY_IMAGE_HOVERS = 1 prevents images from displaying when hovering over category links

- NEXT_PUBLIC_EXHAUSTIVE_SIDEBAR_CATEGORIES = 1 always shows expanded sidebar content

- NEXT_PUBLIC_HIDE_TAGS_WITH_ONE_PHOTO = 1 only shows tags with 2 or more photos

- NEXT_PUBLIC_DEFAULT_SORT: sets default sort on grid/full homepages

  - Accepted values: taken-at (default), taken-at-oldest-first, uploaded-at, uploaded-at-oldest-first

- NEXT_PUBLIC_NAV_SORT_CONTROL: controls sort UI on grid/full homepages

  - Accepted values: none, toggle (default), menu
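The four NEXT_PUBLIC_DEFAULT_SORT values differ only in which timestamp is compared and in which direction. A sketch, assuming taken-at and uploaded-at mean newest first (only the "-oldest-first" variants say otherwise); the Photo shape and comparePhotos are hypothetical.

```typescript
type SortKey =
  | "taken-at"
  | "taken-at-oldest-first"
  | "uploaded-at"
  | "uploaded-at-oldest-first";

// Hypothetical photo shape with only the timestamps that matter here.
interface Photo {
  id: string;
  takenAt: Date;
  uploadedAt: Date;
}

// Picks the timestamp from the key's prefix and the direction from its suffix:
// newest first by default, ascending for the "-oldest-first" variants.
export function comparePhotos(sort: SortKey): (a: Photo, b: Photo) => number {
  const field = sort.startsWith("taken-at") ? "takenAt" : "uploadedAt";
  const direction = sort.endsWith("oldest-first") ? 1 : -1;
  return (a, b) => direction * (a[field].getTime() - b[field].getTime());
}
```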

- Color-based sorting (experimental)

  - NEXT_PUBLIC_SORT_BY_COLOR = 1 enables color-based sorting (forces nav sort control to "menu" and flags photos missing color data in admin dashboard); color identification benefits greatly from AI being enabled
  - NEXT_PUBLIC_COLOR_SORT_STARTING_HUE controls which colors start first (accepts a hue of 0 to 360, default: 80)
  - NEXT_PUBLIC_COLOR_SORT_CHROMA_CUTOFF controls which colors are considered sufficiently vibrant (accepts a chroma of 0 to 0.37, default: 0.05)

- NEXT_PUBLIC_PRIORITY_BASED_SORTING = 1 takes priority field into account when sorting photos (⚠️ enabling may have performance consequences)
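To illustrate what the starting hue and chroma cutoff mean, here is a toy sketch. It is not the template's sorting code. It assumes OKLCH-style hue and chroma values (the 0 to 0.37 chroma range matches OKLCH) and that muted photos below the cutoff are placed after the vibrant ones. The PhotoColor shape and sortByColor are hypothetical.

```typescript
// Hypothetical per-photo color data.
interface PhotoColor {
  id: string;
  hue: number; // degrees, 0-360
  chroma: number; // 0-0.37
}

// Vibrant photos (chroma >= cutoff) are ordered by hue, rotated so that
// startingHue comes first; muted photos follow in their original order.
export function sortByColor(
  photos: PhotoColor[],
  startingHue = 80,
  chromaCutoff = 0.05,
): PhotoColor[] {
  // Distance from the starting hue going around the color wheel, in [0, 360).
  const rotated = (hue: number) => (((hue - startingHue) % 360) + 360) % 360;
  const vibrant = photos.filter((p) => p.chroma >= chromaCutoff);
  const muted = photos.filter((p) => p.chroma < chromaCutoff);
  vibrant.sort((a, b) => rotated(a.hue) - rotated(b.hue));
  return [...vibrant, ...muted];
}
```

With the default starting hue of 80, a yellow at hue 90 sorts before a blue at 260, and a red at 30 wraps around to the end of the vibrant group.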

- NEXT_PUBLIC_HIDE_KEYBOARD_SHORTCUT_TOOLTIPS = 1 hides keyboard shortcut hints in areas like the main nav and previous/next photo links

- NEXT_PUBLIC_HIDE_EXIF_DATA = 1 hides EXIF data in photo details and OG images (potentially useful for portfolios that don't focus on photography)

- NEXT_PUBLIC_HIDE_ZOOM_CONTROLS = 1 hides fullscreen photo zoom controls

- NEXT_PUBLIC_HIDE_TAKEN_AT_TIME = 1 hides taken-at time from photo meta

- NEXT_PUBLIC_HIDE_REPO_LINK = 1 removes footer link to repo

- NEXT_PUBLIC_GRID_HOMEPAGE = 1 shows grid layout on homepage

- NEXT_PUBLIC_GRID_ASPECT_RATIO = 1.5 sets aspect ratio for grid tiles (defaults to 1; setting to 0 removes the constraint)

- NEXT_PUBLIC_SHOW_LARGE_THUMBNAILS = 1 ensures large thumbnails on photo grid views (if not configured, density is based on aspect ratio)

- NEXT_PUBLIC_DEFAULT_THEME = light | dark sets preferred initial theme (defaults to system when not configured)

- NEXT_PUBLIC_MATTE_PHOTOS = 1 constrains the size of each photo and displays a surrounding border, potentially useful for photos with tall aspect ratios (colors can be customized via NEXT_PUBLIC_MATTE_COLOR + NEXT_PUBLIC_MATTE_COLOR_DARK)

- NEXT_PUBLIC_GEO_PRIVACY = 1 disables collection/display of location-based data (⚠️ re-compresses uploaded images in order to remove GPS information)

- NEXT_PUBLIC_ALLOW_PUBLIC_DOWNLOADS = 1 enables public photo downloads for all visitors (⚠️ may result in increased bandwidth usage)

- NEXT_PUBLIC_SOCIAL_NETWORKS: comma-separated list of share modal options

  - Accepted values: x (default), threads, facebook, linkedin, qrcode, all, none

- NEXT_PUBLIC_SITE_FEEDS = 1 enables feeds at /feed.json and /rss.xml

- NEXT_PUBLIC_OG_TEXT_ALIGNMENT = BOTTOM keeps OG image text bottom aligned (default is top)

- Web Analytics

  - Open project on Vercel
  - Click "Analytics" tab
  - Follow "Enable Web Analytics" instructions (@vercel/analytics already included)

- Speed Insights

  - Open project on Vercel
  - Click "Speed Insights" tab
  - Follow "Enable Speed Insights" instructions (@vercel/speed-insights already included)

- PAGE_SCRIPT_URLS: comma-separated list of URLs to be added to the bottom of the body tag via "next/script"

  - URLs must begin with 'https'

⚠️ This runs arbitrary scripts on every page; use with caution
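Since only https URLs are allowed, it can be worth validating the list before deploying. The sketch below is a hypothetical validator, not the template's implementation. It splits the comma-separated value and keeps only entries that parse as absolute URLs with the https: protocol.

```typescript
// Hypothetical validator for PAGE_SCRIPT_URLS.
export function parseScriptUrls(raw: string | undefined): string[] {
  if (!raw) return [];
  return raw
    .split(",")
    .map((s) => s.trim())
    .filter((s) => {
      try {
        // Rejects http:, other schemes, and relative paths.
        return new URL(s).protocol === "https:";
      } catch {
        return false; // not a parseable absolute URL
      }
    });
}
```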

- DISABLE_DEBUG_OUTPUTS = 1

  - removes build identifier in <head />
  - disables /admin/configuration/export.json

Only one storage adapter—Vercel Blob, Cloudflare R2, AWS S3, or MinIO—can be used at a time. Ideally, this is configured before photos are uploaded (see Issue #34 for migration considerations). If you have multiple adapters, you can set one as preferred by storing aws-s3, cloudflare-r2, minio, or vercel-blob in NEXT_PUBLIC_STORAGE_PREFERENCE. See FAQ regarding unsupported providers.

To use Cloudflare R2:

- Set up bucket

  - Create R2 bucket with default settings
  - Set up CORS under bucket settings:

        [{
          "AllowedHeaders": ["*"],
          "AllowedMethods": ["GET", "PUT"],
          "AllowedOrigins": [
            "http://localhost:3000",
            "https://{VERCEL_PROJECT_NAME}*.vercel.app",
            "{PRODUCTION_DOMAIN}"
          ]
        }]

  - Enable public hosting by doing one of the following:
    - Select "Connect Custom Domain" and choose a Cloudflare domain
    - OR select "Allow Access" from R2.dev subdomain
  - Store public configuration:
    - NEXT_PUBLIC_CLOUDFLARE_R2_BUCKET: bucket name
    - NEXT_PUBLIC_CLOUDFLARE_R2_ACCOUNT_ID: account id (found on R2 overview page)
    - NEXT_PUBLIC_CLOUDFLARE_R2_PUBLIC_DOMAIN: either "your-custom-domain.com" or "pub-jf90908...s0d9f8s0s9df.r2.dev"

- Set up private credentials

  - Create API token by selecting "Manage R2 API Tokens" and clicking "Create API Token"
  - Select "Object Read & Write," choose "Apply to specific buckets only," and select the bucket created above
  - Store credentials (⚠️ ensure access keys are not prefixed with NEXT_PUBLIC):
    - CLOUDFLARE_R2_ACCESS_KEY
    - CLOUDFLARE_R2_SECRET_ACCESS_KEY

To use AWS S3:

- Set up bucket

  - Create S3 bucket with "ACLs enabled" and "Block all public access" turned off
  - Set up CORS under bucket permissions:

        [{
          "AllowedHeaders": ["*"],
          "AllowedMethods": ["GET", "PUT"],
          "AllowedOrigins": [
            "http://localhost:*",
            "https://{VERCEL_PROJECT_NAME}*.vercel.app",
            "{PRODUCTION_DOMAIN}"
          ],
          "ExposeHeaders": []
        }]

  - Store public configuration:
    - NEXT_PUBLIC_AWS_S3_BUCKET: bucket name
    - NEXT_PUBLIC_AWS_S3_REGION: bucket region, e.g., "us-east-1"

- Set up private credentials

  - Create IAM policy using JSON editor:

        {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Effect": "Allow",
              "Action": [
                "s3:PutObject",
                "s3:PutObjectACL",
                "s3:GetObject",
                "s3:ListBucket",
                "s3:DeleteObject"
              ],
              "Resource": [
                "arn:aws:s3:::{BUCKET_NAME}",
                "arn:aws:s3:::{BUCKET_NAME}/*"
              ]
            }
          ]
        }

  - Create IAM user by choosing "Attach policies directly," and selecting the policy created above. Create "Access key" under "Security credentials," choose "Application running outside AWS," and store credentials (⚠️ ensure access keys are not prefixed with NEXT_PUBLIC):
    - AWS_S3_ACCESS_KEY
    - AWS_S3_SECRET_ACCESS_KEY
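The only per-project value in the IAM policy is the bucket name, which appears in two ARNs. The hypothetical helper s3PhotoPolicy fills it in and prints JSON you could paste into the editor. The bucket-level ARN is what s3:ListBucket applies to. The "/*" ARN covers the object-level actions.

```typescript
// Hypothetical helper: the IAM policy above with {BUCKET_NAME} filled in.
export function s3PhotoPolicy(bucketName: string) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: [
          "s3:PutObject",
          "s3:PutObjectACL",
          "s3:GetObject",
          "s3:ListBucket",
          "s3:DeleteObject",
        ],
        // Bucket ARN (for ListBucket) and object ARN pattern (for object actions).
        Resource: [`arn:aws:s3:::${bucketName}`, `arn:aws:s3:::${bucketName}/*`],
      },
    ],
  };
}

// "my-photo-bucket" is a placeholder; substitute your own bucket name.
console.log(JSON.stringify(s3PhotoPolicy("my-photo-bucket"), null, 2));
```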

MinIO is a self-hosted S3-compatible object storage server.

First, install and deploy the MinIO server, then create a bucket with public read access.

- Install MinIO: follow the official documentation to install and deploy MinIO.

- Create bucket:

      mc mb myminio/{BUCKET_NAME}

- Set public read policy: create a file named bucket-policy.json with the following content to allow read-only access:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Principal": { "AWS": ["*"] },
            "Action": ["s3:GetObject"],
            "Resource": ["arn:aws:s3:::{BUCKET_NAME}/*"]
          }
        ]
      }

  Next, apply this policy to your bucket:

      mc policy set-json bucket-policy.json myminio/{BUCKET_NAME}

- Store public configuration: set the following public environment variables for your application:
  - NEXT_PUBLIC_MINIO_BUCKET: bucket name
  - NEXT_PUBLIC_MINIO_DOMAIN: MinIO server endpoint, e.g., "minio.yourdomain.com"
  - NEXT_PUBLIC_MINIO_PORT: server port (optional)
  - NEXT_PUBLIC_MINIO_DISABLE_SSL: set to 1 to disable SSL (defaults to HTTPS)

Next, create a dedicated user and a policy that grants permission to manage objects within {BUCKET_NAME}:

- Define user policy: create a file named user-policy.json. This policy allows the user to list the bucket contents and to get, put, and delete objects within it.

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Action": [
              "s3:DeleteObject",
              "s3:GetObject",
              "s3:ListBucket",
              "s3:PutObject"
            ],
            "Resource": [
              "arn:aws:s3:::{BUCKET_NAME}/*",
              "arn:aws:s3:::{BUCKET_NAME}"
            ]
          }
        ]
      }

- Create policy: add the named policy to MinIO.

      mc admin policy add myminio photos-manager-policy user-policy.json

- Create user: create a new user with an access key and secret key.

      mc admin user add myminio {MINIO_ACCESS_KEY} {MINIO_SECRET_ACCESS_KEY}

- Attach policy to user: assign photos-manager-policy to the user.

      mc admin policy set myminio photos-manager-policy user={MINIO_ACCESS_KEY}

- Store private credentials: set the following private environment variables for your application (⚠️ ensure these access keys are not prefixed with NEXT_PUBLIC):
  - MINIO_ACCESS_KEY: your MinIO access key
  - MINIO_SECRET_ACCESS_KEY: your MinIO secret key

Vercel Postgres can be switched to another Postgres-compatible, pooling provider by updating POSTGRES_URL. Some providers only work when SSL is disabled, which can be configured by setting DISABLE_POSTGRES_SSL = 1.

For Supabase:

- Ensure connection string is set to "Transaction Mode" via port 6543

- Disable SSL by setting DISABLE_POSTGRES_SSL = 1
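When switching providers, a quick sanity check of the connection string can save a failed deploy. The sketch below uses POSTGRES_URL and DISABLE_POSTGRES_SSL from the text above. The helper postgresSettings and its return shape are hypothetical, loosely modeled on node-postgres's connectionString and ssl pool options. Nothing is imported and no connection is opened.

```typescript
interface PostgresSettings {
  connectionString: string;
  ssl: boolean;
  transactionModePort: boolean; // true when the URL targets port 6543
}

// Hypothetical helper deriving connection settings from environment variables.
export function postgresSettings(
  env: Record<string, string | undefined>,
): PostgresSettings {
  const connectionString = env.POSTGRES_URL;
  if (!connectionString) throw new Error("POSTGRES_URL is not set");
  // WHATWG URL parsing also exposes the port for the non-special "postgres:" scheme.
  const { port } = new URL(connectionString);
  return {
    connectionString,
    ssl: env.DISABLE_POSTGRES_SSL !== "1",
    transactionModePort: port === "6543",
  };
}
```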

Partial internationalization (for non-admin, user-facing text) is provided for a handful of languages. Configure locale by setting environment variable NEXT_PUBLIC_LOCALE.

Supported locales: bd-bn, en-gb, en-us, hi-in, id-id, pt-br, pt-pt, tr-tr, vi-vn, zh-cn

To add support for a new language, open a PR following instructions in /src/i18n/index.ts, using en-us.ts as reference.

Thank you ❤️ translators: @sconetto (pt-br, pt-pt), @brandnholl (id-id), @TongEc (zh-cn), @xahidex (bd-bn, hi-in), @mehmetabak (tr-tr), @simondeeley (en-gb), @jasonquache (vi-vn)

For forked repos, click "Code," then "Update branch" from the main repo page. If you originally cloned the code, you can create a fork from GitHub, then update your Git connection from your Vercel project settings. Once you've done this, you may need to go to your project deployments page, click •••, select "Create deployment," and choose main.

In the admin menu, select "Batch edit ..." From there, you can perform bulk tag, favorite, and delete actions.

This template statically optimizes core views such as / and /grid to minimize visitor load times. Consequently, when photos are added, edited, or removed, it might take several minutes for those changes to propagate. If it seems like a change is not taking effect, try navigating to /admin/configuration and clicking "Clear Cache."

There have been reports (#184 + #185) that large photos (over 30MB), or a CDN such as Cloudflare in front of Vercel, may destabilize static optimization.

As the template has evolved, EXIF fields (such as lenses) have been added, blur data is generated through a different method, and AI/privacy features have been added. In order to bring older photos up to date, either click the 'sync' button next to a photo or go to photo updates (/admin/photos/updates) to sync all photos that need updates.

Many services such as iMessage, Slack, and X require near-instant responses when unfurling link-based content. To guarantee sufficient responsiveness, consider rendering pages and image assets ahead of time with static optimization by setting NEXT_PUBLIC_STATICALLY_OPTIMIZE_PHOTOS = 1 and NEXT_PUBLIC_STATICALLY_OPTIMIZE_PHOTO_OG_IMAGES = 1. Keep in mind that this will increase platform usage.

By default, all photos are shown full-width, regardless of orientation. Enable matting to showcase horizontal and vertical photos at similar scales by setting NEXT_PUBLIC_MATTE_PHOTOS = 1.

Thumbnail grid density (seen on /grid, tag overviews, and other photo sets) depends on aspect ratio configuration (ratios of 1 or less have more photos per row). This can be overridden by setting NEXT_PUBLIC_SHOW_LARGE_THUMBNAILS = 1.

While all private paths (/tag/private/*) require authentication, raw links to individual photo assets remain publicly accessible. Randomly generated URLs from storage providers are only secure via obscurity. Use with caution.

My images/content have fallen out of sync with my database and/or my production site no longer matches local development. What do I do?


Navigate to /admin/configuration and click "Clear Cache." If this doesn't help, open an issue.

This template relies on sharp to manipulate images and next/image to serve them, neither of which currently supports HEIC (https://github.com/vercel/next.js/discussions/30043 + https://github.com/lovell/sharp/issues/3981). Fortunately, you can still upload HEIC files directly from native share controls on Apple platforms and they will automatically be converted to JPG upon upload. If you think you have a viable HEIC strategy, feel free to open a PR. See https://github.com/sambecker/exif-photo-blog/issues/229 for discussion.

Absent configuration, the default grid aspect ratio is 1. NEXT_PUBLIC_GRID_ASPECT_RATIO can be set to any number (for instance, 1.5 for 3:2 images) or ignored by setting to 0.
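A worked example of what the ratio does to tile size. It assumes the ratio is width ÷ height (consistent with 1.5 for 3:2 images) and that 0 falls back to each photo's own aspect ratio. The tileHeight helper is hypothetical.

```typescript
// Tile height = tile width / aspect ratio (width ÷ height).
// A grid ratio of 0 is treated as "no constraint", using the photo's own ratio.
export function tileHeight(
  tileWidth: number,
  gridAspectRatio: number,
  photoAspectRatio: number,
): number {
  const ratio = gridAspectRatio === 0 ? photoAspectRatio : gridAspectRatio;
  return tileWidth / ratio;
}
```

For a 300px-wide tile, a ratio of 1.5 gives a 200px-tall tile, the default of 1 gives a 300px square, and 0 with a 3:4 portrait (0.75) gives 400px.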

Fujifilm simulation data is stored in vendor-specific Makernote binaries embedded in EXIF data. Under certain circumstances an intermediary may strip out this data. For instance, there is a known issue on iOS where editing an image, e.g., cropping it, causes Makernote data loss. If simulation data appears to be missing, try importing the original file as it was stored by the camera. Additionally, if you can confirm the simulation mode, you can edit the photo and manually select it.

If you don't see a recipe, first try syncing your photo from the ••• menu, or from /admin/photos. If the data looks incorrect, open an issue with the file in question attached in order for it to be investigated. Fujifilm file specifications have evolved over time and recipe parsing may need to be adjusted based on camera model/vintage.

To hide Fujifilm recipes and film simulations, set NEXT_PUBLIC_CATEGORY_VISIBILITY (whose default value is recents,tags,cameras,lenses,recipes,films) to recents,tags,cameras,lenses.

For a number of reasons, only EXIF orientations 1, 3, 6, and 8 are supported. Orientations 2, 4, 5, and 7, which make use of mirroring, are not supported.
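The supported orientations are exactly the ones that a plain rotation can correct. The sketch below maps each one to the clockwise rotation needed to display the photo upright, following the standard EXIF orientation values. The table and rotationFor are hypothetical names, not the template's code. The mirrored values (2, 4, 5, 7) have no rotation-only equivalent, so they map to undefined.

```typescript
// Clockwise degrees needed to display a photo upright, per EXIF orientation.
const ROTATION_FOR_ORIENTATION: Record<number, number | undefined> = {
  1: 0, // already upright
  3: 180, // upside down
  6: 90, // rotate 90° clockwise
  8: 270, // rotate 90° counter-clockwise
};

// Returns undefined for mirrored (2, 4, 5, 7) or invalid orientations.
export function rotationFor(orientation: number): number | undefined {
  return ROTATION_FOR_ORIENTATION[orientation];
}
```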

Earlier versions of this template generated blur data on the client, which varied visually from browser to browser. Data is now generated consistently on the server. If you wish to update blur data for a particular photo, edit the photo in question, make no changes, and choose "Update."

The default timeout for processing multiple uploads is 60 seconds (the limit for Hobby accounts). This can be extended to 5 minutes on Pro accounts by setting maxDuration = 300 in src/app/admin/uploads/page.tsx.
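Per the note above, the change is one exported constant in the uploads page file. maxDuration is a Next.js route segment config option measured in seconds. This sketch assumes the page's existing exports stay as they are and that the project is on a Vercel Pro plan.

```typescript
// In src/app/admin/uploads/page.tsx, alongside the page's existing exports.
// Route segment config: allow up to 300 seconds (5 minutes) for this route.
export const maxDuration = 300;
```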

You may need to pre-purchase credits before accessing the OpenAI API. See #110 for discussion. If you've customized key permissions, make sure write access to the Responses API is enabled.

Once AI text generation is configured, photos missing text will show up in photo updates (/admin/photos/updates).

At this time, there are no plans to introduce support for new storage providers. The template now supports Vercel Blob, AWS S3, Cloudflare R2, and MinIO (self-hosted S3-compatible storage). While configuring other AWS-compatible providers should not be too difficult, there's nuance to consider surrounding details like IAM, CORS, and domain configuration, which can differ slightly from platform to platform. If you'd like to contribute an implementation for a new storage provider, please open a PR.

At this time, an external storage provider is necessary in order to develop locally. If you have a strategy to propose which allows files to be locally uploaded and served to next/image in a way that mirrors an external storage provider for debugging purposes, please open a PR.

Running the template outside Vercel, e.g., with Docker, is possibly workable. See #116 and #132 for discussion around image hosting and Docker usage.

Previous versions of this template stored Next.js "App Router" files in /src, and app-level functionality in /src/site. If you've made customizations and are having difficulty merging updates, consider moving /src/app files to /, and renaming src/site to /src/app. Other structural changes include moving tailwind.css and middleware.ts to /. Additionally, it may be helpful to review PR #195 for an overview of the most significant changes.


Alternative AI tools for exif-photo-blog

Similar Open Source Tools

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

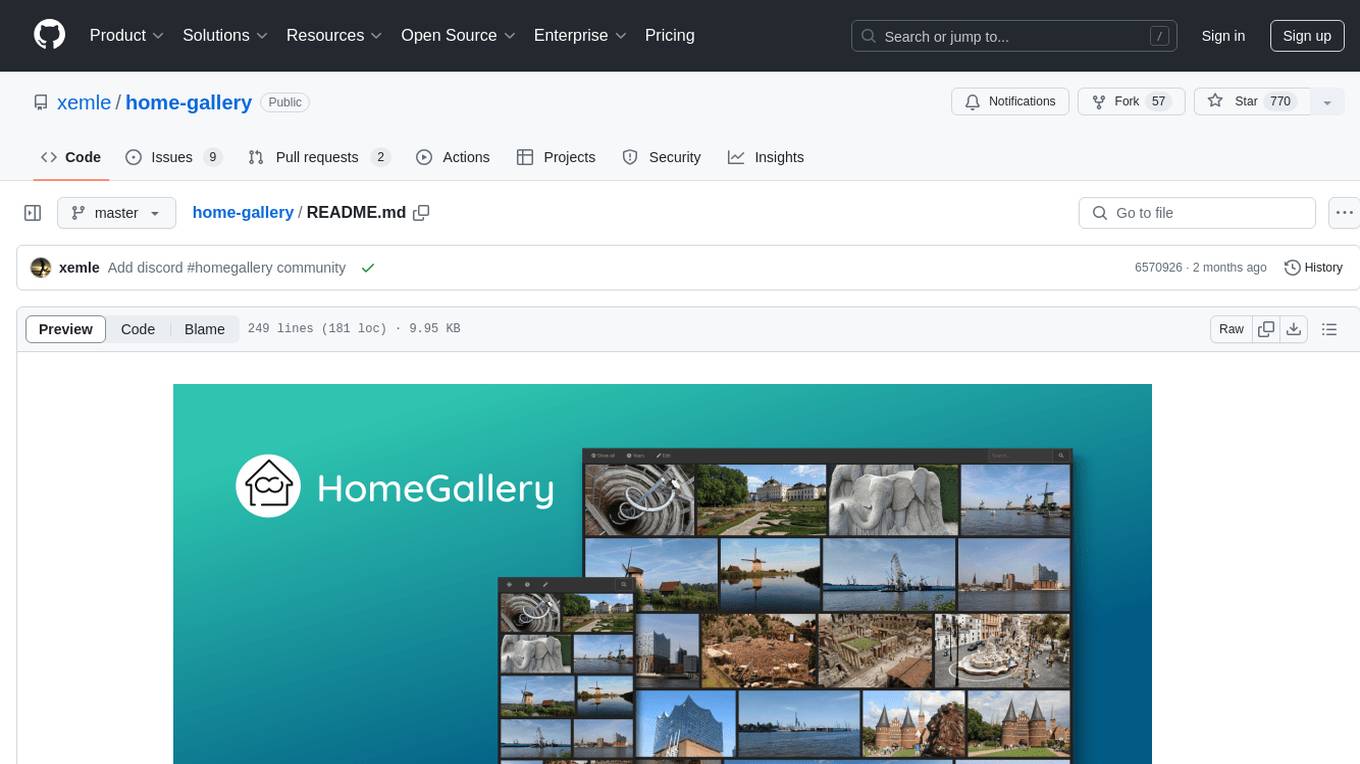

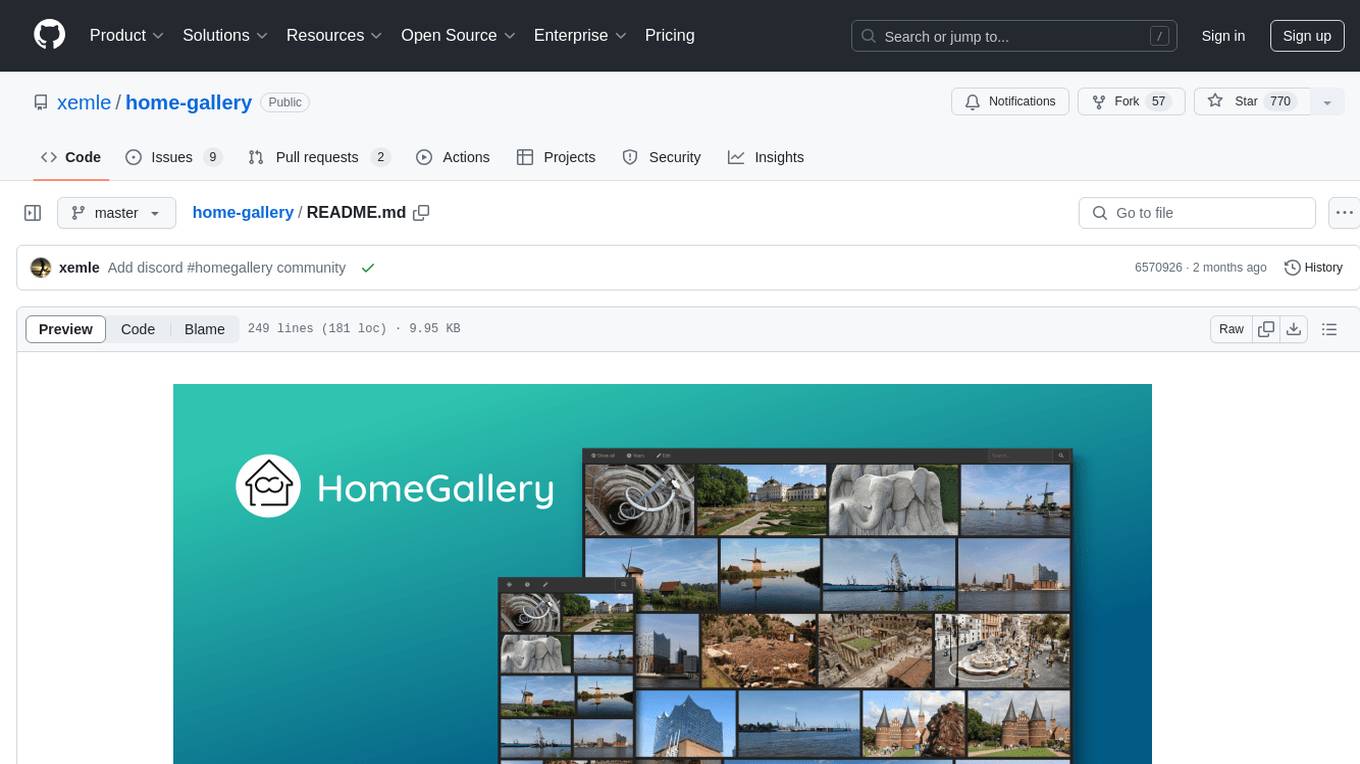

home-gallery

Home-Gallery.org is a self-hosted open-source web gallery for browsing personal photos and videos with tagging, mobile-friendly interface, and AI-powered image and face discovery. It aims to provide a fast user experience on mobile phones and help users browse and rediscover memories from their media archive. The tool allows users to serve their local data without relying on cloud services, view photos and videos from mobile phones, and manage images from multiple media source directories. Features include endless photo stream, video transcoding, reverse image lookup, face detection, GEO location reverse lookups, tagging, and more. The tool runs on NodeJS and supports various platforms like Linux, Mac, and Windows.

ComfyUI-GigapixelAI

ComfyUI-GigapixelAI is a repository that provides custom nodes for using GigapixelAI in ComfyUI. It requires a licensed installation of Gigapixel 8 with the path of gigapixel.exe in the installation folder of Topaz Gigapixel AI. Users need GigapixelAI version 7.3.0 or higher to use this tool effectively.

chatwise-releases

ChatWise is an offline tool that supports various AI models such as OpenAI, Anthropic, Google AI, Groq, and Ollama. It is multi-modal, allowing text-to-speech powered by OpenAI and ElevenLabs. The tool supports text files, PDFs, audio, and images across different models. ChatWise is currently available for macOS (Apple Silicon & Intel) with Windows support coming soon.

pocketpaw

PocketPaw is a lightweight and user-friendly tool designed for managing and organizing your digital assets. It provides a simple interface for users to easily categorize, tag, and search for files across different platforms. With PocketPaw, you can efficiently organize your photos, documents, and other files in a centralized location, making it easier to access and share them. Whether you are a student looking to organize your study materials, a professional managing project files, or a casual user wanting to declutter your digital space, PocketPaw is the perfect solution for all your file management needs.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

memfree

MemFree is an open-source hybrid AI search engine that allows users to simultaneously search their personal knowledge base (bookmarks, notes, documents, etc.) and the Internet. It features a self-hosted super fast serverless vector database, local embedding and rerank service, one-click Chrome bookmarks index, and full code open source. Users can contribute by opening issues for bugs or making pull requests for new features or improvements.

file-organizer-2000

AI File Organizer 2000 is an Obsidian Plugin that uses AI to transcribe audio, annotate images, and automatically organize files by moving them to the most likely folders. It supports text, audio, and images, with upcoming local-first LLM support. Users can simply place unorganized files into the 'Inbox' folder for automatic organization. The tool renames and moves files quickly, providing a seamless file organization experience. Self-hosting is also possible by running the server and enabling the 'Self-hosted' option in the plugin settings. Join the community Discord server for more information and use the provided iOS shortcut for easy access on mobile devices.

dbeaver

DBeaver is a free multi-platform database tool designed for developers, SQL programmers, database administrators, and analysts. It offers a wide range of features including schema editor, SQL editor, data editor, AI integration, ER diagrams, data export/import/migration, SQL execution plans, database administration tools, database dashboards, Spatial data viewer, proxy and SSH tunnelling, custom database drivers editor, etc. It supports over 100 database drivers out of the box and is compatible with any database that has a JDBC or ODBC driver. DBeaver also supports smart AI completion and code generation with OpenAI or Copilot.

opensearch-ai

OpenSearch GPT is a personalized AI search engine that adapts to user interests while browsing the web. It utilizes advanced technologies like Mem0 for automatic memory collection, Vercel AI ADK for AI applications, Next.js for React framework, Tailwind CSS for styling, Shadcn UI for UI components, Cobe for globe animation, GPT-4o-mini for AI capabilities, and Cloudflare Pages for web application deployment. Developed by Supermemory.ai team.

amazon-sagemaker-generativeai

Repository for training and deploying Generative AI models, including text-text, text-to-image generation, prompt engineering playground and chain of thought examples using SageMaker Studio. The tool provides a platform for users to experiment with generative AI techniques, enabling them to create text and image outputs based on input data. It offers a range of functionalities for training and deploying models, as well as exploring different generative AI applications.

LayaAir

LayaAir engine, under the Layabox brand, is a 3D engine that supports full-platform publishing. It can be applied in various fields such as games, education, advertising, marketing, digital twins, metaverse, AR guides, VR scenes, architectural design, industrial design, etc.

NanoBanana-AI-Pose-Transfer

NanoBanana-AI-Pose-Transfer is a lightweight tool for transferring poses between images using artificial intelligence. It leverages advanced AI algorithms to accurately map and transfer poses from a source image to a target image. This tool is designed to be user-friendly and efficient, allowing users to easily manipulate and transfer poses for various applications such as image editing, animation, and virtual reality. With NanoBanana-AI-Pose-Transfer, users can seamlessly transfer poses between images with high precision and quality.

facefusion-pinokio

FaceFusion Pinokio is an industry leading face manipulation platform. It provides advanced tools for manipulating and blending faces in images and videos. The platform offers a user-friendly interface and powerful features to create stunning visual effects. With FaceFusion Pinokio, users can seamlessly blend faces, swap facial features, and create unique and creative compositions. Whether you are a professional graphic designer, a social media influencer, or just someone looking to have fun with face manipulation, FaceFusion Pinokio has everything you need to bring your ideas to life.

emgucv

Emgu CV is a cross-platform .Net wrapper for the OpenCV image-processing library. It allows OpenCV functions to be called from .NET compatible languages. The wrapper can be compiled by Visual Studio, Unity, and "dotnet" command, and it can run on Windows, Mac OS, Linux, iOS, and Android.

img-prompt

IMGPrompt is an AI prompt editor tailored for image and video generation tools like Stable Diffusion, Midjourney, DALL·E, FLUX, and Sora. It offers a clean interface for viewing and combining prompts with translations in multiple languages. The tool includes features like smart recommendations, translation, random color generation, prompt tagging, interactive editing, categorized tag display, character count, and localization. Users can enhance their creative workflow by simplifying prompt creation and boosting efficiency.

For similar tasks

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

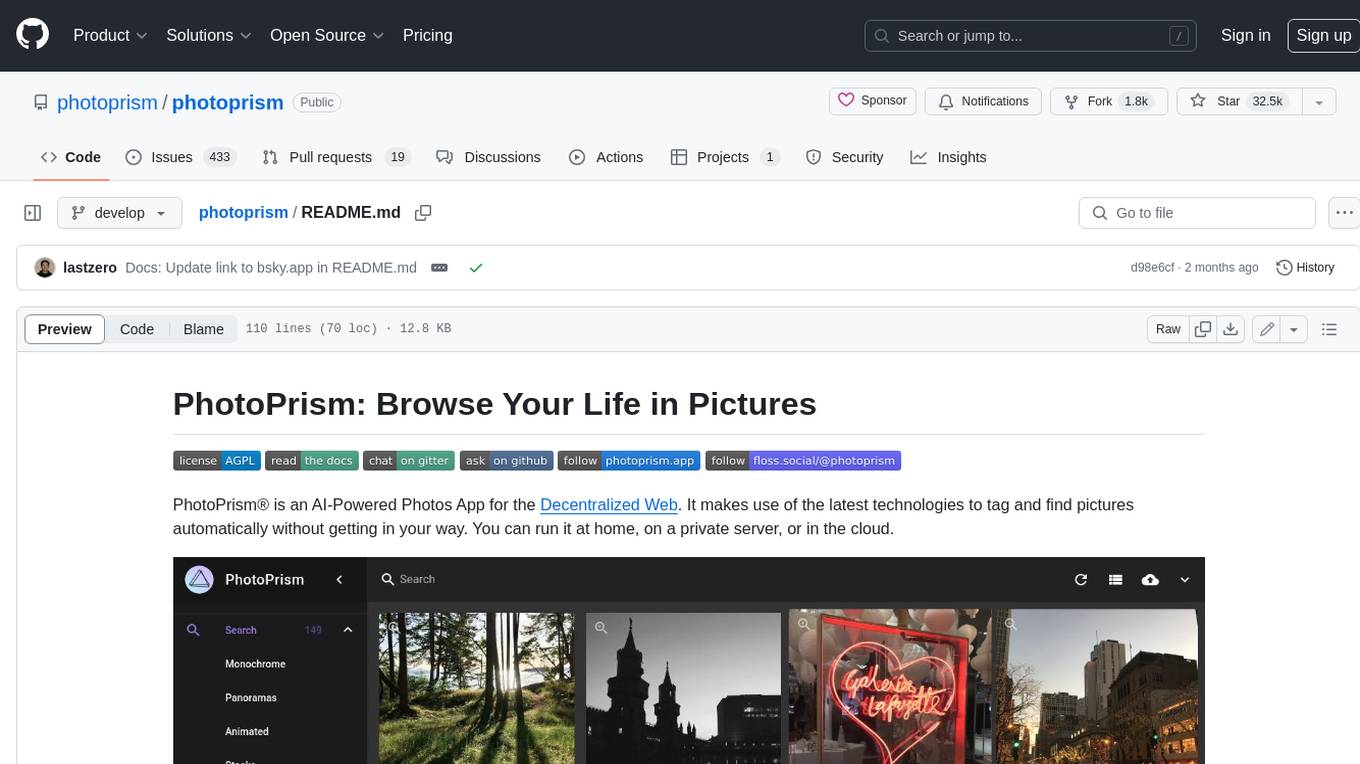

photoprism

PhotoPrism is an AI-powered photos app for the decentralized web. It uses the latest technologies to tag and find pictures automatically without getting in your way. You can run it at home, on a private server, or in the cloud.

album-ai

Album AI is an experimental project that uses GPT-4o-mini to automatically identify metadata from image files in the album. It leverages RAG technology to enable conversations with the album, serving as a photo album or image knowledge base to assist in content generation. The tool provides APIs for search and chat functionalities, supports one-click deployment to platforms like Render, and allows for integration and modification under a permissive open-source license.

home-gallery

Home-Gallery.org is a self-hosted open-source web gallery for browsing personal photos and videos with tagging, mobile-friendly interface, and AI-powered image and face discovery. It aims to provide a fast user experience on mobile phones and help users browse and rediscover memories from their media archive. The tool allows users to serve their local data without relying on cloud services, view photos and videos from mobile phones, and manage images from multiple media source directories. Features include endless photo stream, video transcoding, reverse image lookup, face detection, GEO location reverse lookups, tagging, and more. The tool runs on NodeJS and supports various platforms like Linux, Mac, and Windows.

spec-kit

Spec Kit is a toolkit for Spec-Driven Development, which lets organizations focus on product scenarios rather than writing undifferentiated code. It inverts traditional software development by making specifications executable, so that they directly generate working implementations. Its structured process emphasizes intent-driven development, rich specification creation, and multi-step refinement, and relies heavily on advanced AI models to interpret specifications. Spec Kit supports several development phases, including 0-to-1 development, creative exploration, and iterative enhancement, and its experimental goals cover technology independence, enterprise constraints, user-centric development, and creative and iterative processes. The tool requires Linux/macOS (or WSL2 on Windows), an AI coding agent (Claude Code, GitHub Copilot, Gemini CLI, or Cursor), uv for package management, Python 3.11+, and Git.

lap

Lap is a lightning-fast, cross-platform photo manager that prioritizes user privacy and local AI processing. It allows users to organize and browse their photos efficiently, with features like natural language search, smart face recognition, and similar image search. Lap does not require importing photos, syncs seamlessly with the file system, and supports multiple libraries. Built for performance with a Rust core and lazy loading, Lap offers a delightful user experience with beautiful design, customization options, and multi-language support. It is a great alternative to cloud-based photo services, offering excellent organization, performance, and no vendor lock-in.

pocketpaw

PocketPaw is a lightweight, user-friendly tool for managing and organizing digital assets. It provides a simple interface for categorizing, tagging, and searching for files across different platforms. With PocketPaw, you can organize your photos, documents, and other files in a central location, making them easier to access and share. It is aimed at students organizing study materials, professionals managing project files, and casual users who want to declutter their digital space.

TrailSnap

TrailSnap is an AI photo album app that helps users record, organize, and review their travel experiences. Its AI processing turns every photo and journey into a lasting memory. The app converts moments captured in the album into keepsakes: it can quietly keep a record of tickets and attractions, automatically organize photos for social media posts, draft captions, create short videos, and more. The project envisions a future in which every individual, or at least every family, has its own AI data center, with the photo album as a major data source preserving many of life's moments.

For similar jobs

obsidian-textgenerator-plugin

Text Generator is an open-source AI assistant that uses generative AI to enhance knowledge creation and organization in Obsidian. It lets users generate ideas, titles, summaries, outlines, and paragraphs based on their own knowledge base. The plugin is free, open source, and runs inside Obsidian as part of a Personal Knowledge Management system. It provides flexible prompts, a template engine for repetitive tasks, community templates for shared use cases, and highly flexible configuration with services such as Google Generative AI, OpenAI, and HuggingFace.

video2blog

video2blog is an open-source project that converts videos into text notes. Its pipeline extracts video information with yt-dlp, downloads the video, downloads subtitles if available (translating them if they are not in Chinese), generates Chinese subtitles with Whisper if none exist, converts the subtitles into an article with Gemini, and finally has the user manually insert images from the video into the article. The tool offers a way to create blog content from video resources, making that content more accessible and content creation more efficient.
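The branching in that workflow, where the subtitle situation decides between translation and transcription, can be made explicit with a small planner. This is a hypothetical Python sketch, not video2blog's code: the step names are illustrative labels rather than real functions, and `"zh"` as the language code for Chinese subtitles is an assumption.

```python
from typing import List, Optional


def plan_pipeline(has_subtitles: bool, subtitle_lang: Optional[str] = None) -> List[str]:
    """Return the ordered steps for one video (hypothetical step labels)."""
    steps = ["extract_info_with_yt_dlp", "download_video"]
    if has_subtitles:
        steps.append("download_subtitles")
        # Assumption: "zh" marks Chinese subtitles; anything else gets translated.
        if subtitle_lang != "zh":
            steps.append("translate_subtitles_to_chinese")
    else:
        # No subtitles at all: produce Chinese subtitles with Whisper instead.
        steps.append("generate_chinese_subtitles_with_whisper")
    steps.append("convert_subtitles_to_article_with_gemini")
    # Image insertion is a manual step in the described workflow.
    steps.append("insert_images_manually")
    return steps


print(plan_pipeline(has_subtitles=True, subtitle_lang="en"))
```

Keeping the plan separate from execution makes it easy to see which expensive steps (translation or transcription) a given video will trigger.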

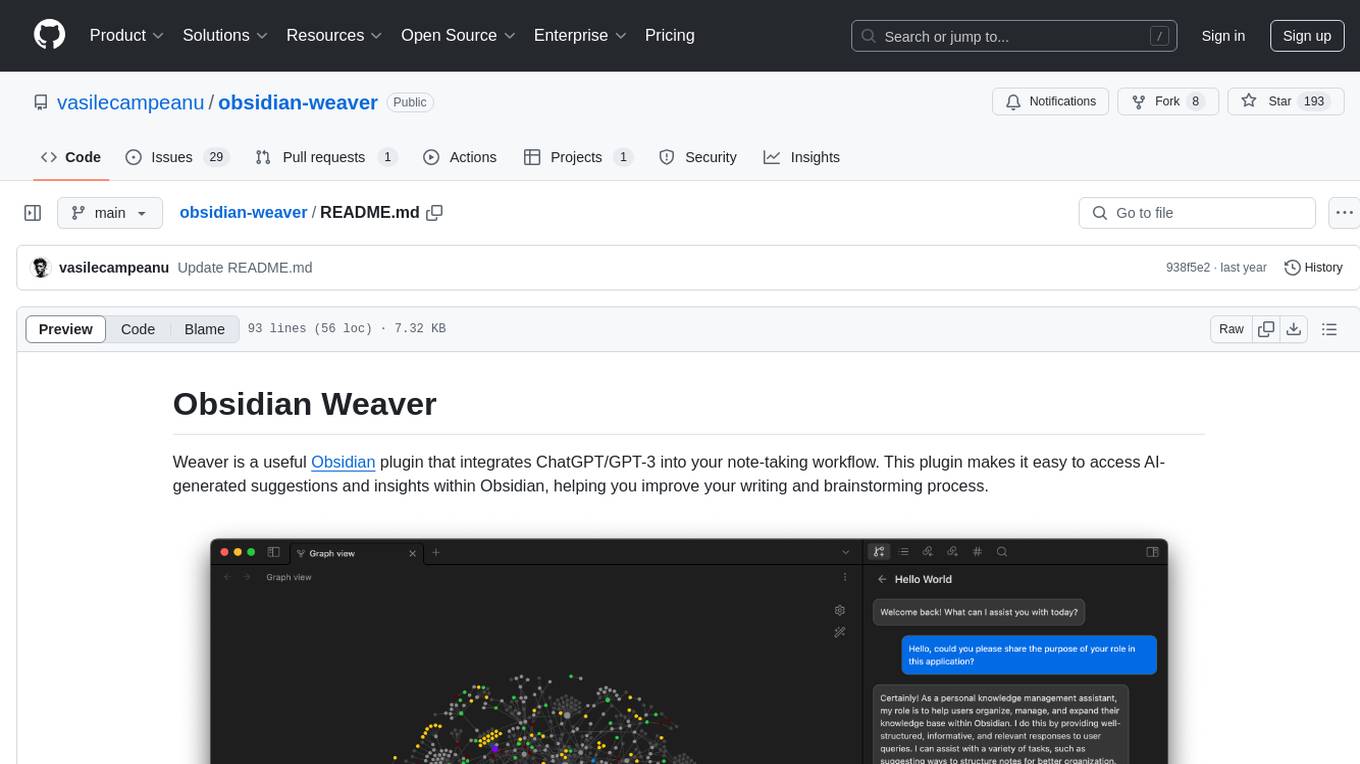

obsidian-weaver

Obsidian Weaver is a plugin that integrates ChatGPT/GPT-3 into the note-taking workflow of Obsidian. It allows users to easily access AI-generated suggestions and insights within Obsidian, enhancing the writing and brainstorming process. The plugin respects Obsidian's philosophy of storing notes locally, ensuring data security and privacy. Weaver offers features like creating new chat sessions with the AI assistant and receiving instant responses, all within the Obsidian environment. It provides a seamless integration with Obsidian's interface, making the writing process efficient and helping users stay focused. The plugin is constantly being improved with new features and updates to enhance the note-taking experience.

wordlift-plugin

WordLift is a plugin that helps online content creators organize posts and pages by adding facts, links, and media to build beautifully structured websites for both humans and search engines. It allows users to create, own, and publish their own knowledge graph, and publishes content as Linked Open Data following Tim Berners-Lee's Linked Data Principles. The plugin supports writers by providing trustworthy and contextual facts, enriching content with images, links, and interactive visualizations, keeping readers engaged with relevant content recommendations, and producing content compatible with schema.org markup for better indexing and display on search engines. It also offers features like creating a personal Wikipedia, publishing metadata to share and distribute content, and supporting content tagging for better SEO.

AI-Writing-Assistant

DeepWrite AI is an AI writing assistant created with the help of ChatGPT-3. It is designed to generate clear, well-structured blog posts. The tool is currently at version 1.0, with further improvements planned. It is an open-source project that welcomes contributions. An extension lets users run the tool directly in a notepad-style writing app; it currently supports only Calmly Writer. The tool must be installed and set up, and it is built with React, Next.js, TailwindCSS, Node, and Express. For support, users can message the creator on Instagram. The creator, Sabir Khan, is an undergraduate Computer Science student from Mumbai who frequently builds new projects.

AI-Assistant-ChatGPT

AI Assistant ChatGPT is a web client for writing and chatting with ChatGPT or Claude. It can generate long texts and conversations while giving users control over quality and content direction. The tool supports a custom reverse proxy address, conversation management, content editing, Markdown document export, JSON backup, context customization, session-topic management, role customization, dynamic content navigation, and more. Users can access the tool directly at https://eaias.com or deploy it themselves. It does not offer cloud storage or synchronization of data, but provides guidelines for independent deployment. It is a frontend project that can be deployed with Cloudflare Pages and extended with backend modifications. The project is open source under the MIT license.

MarkFlowy

MarkFlowy is a lightweight, feature-rich Markdown editor with built-in AI capabilities. It supports one-click export of conversations, article translation, and article summarization. Users can use large AI models such as DeepSeek and ChatGPT as intelligent assistants. The editor offers multiple editing modes and custom themes. Available for Linux, macOS, and Windows, MarkFlowy aims to provide an efficient, attractive, and data-safe Markdown editing experience.