AI-Assistant-ChatGPT

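This is an AI tool for creative writing or simulated chat. It makes complex settings simple to configure, and settings can be modified, disabled, or enabled at any time, giving you more convenient and effective control over AI-generated content. Combined with an API relay service, it can use large models such as ChatGPT, Claude, ChatGLM, and Tongyi Qianwen.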

Stars: 57

AI Assistant ChatGPT is a web client tool that allows users to create or chat using ChatGPT or Claude. It enables generating long texts and conversations with efficient control over quality and content direction. The tool supports customization of reverse proxy address, conversation management, content editing, markdown document export, JSON backup, context customization, session-topic management, role customization, dynamic content navigation, and more. Users can access the tool directly at https://eaias.com or deploy it independently. It offers features for dialogue management, assistant configuration, session configuration, and more. The tool lacks data cloud storage and synchronization but provides guidelines for independent deployment. It is a frontend project that can be deployed using Cloudflare Pages and customized with backend modifications. The project is open-source under the MIT license.

README:

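This project provides a tool for writing or chatting with ChatGPT or Claude. Through context control it can generate very long texts and very long conversations while efficiently controlling quality and content direction.

This project is a pure web client with no login and no usage limits; API access works through a self-hosted, free API proxy that you configure.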

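- You can switch to your own reverse proxy address at any time; a configuration code example appears later in this document.

- It works as a conversation: you can start a new request at any position, as many times as you like, which makes it easy to ask repeatedly or pick out the best output.

- All content can be edited freely, and content can be inserted at any position under any role, which is convenient for writing.

- Content can be exported as a Markdown document, for quickly producing blog posts, novels, or dialogues.

- Content can be exported as JSON for backup or for transfer between devices; since there is no backend, there is no online sync.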

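- The number of context messages is configurable, and any message or prompt can be added to the context.

- Conversations are organized as sessions and topics: a session contains multiple topics, and most settings are scoped per session.

- Each session can have its own independent settings, so work and leisure can be kept separate.

- The avatar and name of each conversation role can be changed freely, and a page background image can be set (almost all content blocks are semi-transparent, so they look better over a background image).

- Headings in the content are extracted dynamically to build a navigation pane, making it easy to jump around in long content.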

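- A working executor is available at https://github.com/viyiviyi/aias-executor.git

- The page shows the executor's call parameters and return values in full.

- Because of HTTPS restrictions, the executor can only be reached from the https://eaias.com page through a reverse proxy tool, or by running your own HTTPS service on your local network.

- You can use the tool directly at https://eaias.com.

- To deploy it yourself, see the deployment notes below.

- Chub character settings are now supported: enable the related feature under the setting's extensions. This makes role-play work better, although it is still not on par with dedicated "tavern"-style clients such as SillyTavern.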

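- You can add any API service compatible with the ChatGPT interface, such as Alibaba Cloud Bailian, Kimi, or DeepSeek, each with its own independently configured and switchable token.

- Image generation through the Stable-Diffusion-Webui API has been added: when text is selected, a popup lets you configure the API address and parameters and generate an image.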
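Under the hood, the proxy and ChatGPT-compatible service options above come down to sending an OpenAI-style Chat Completions request to a configurable base URL. The following is a minimal sketch, not the project's code. The `services` config shape, the round-robin key rotation, and the placeholder keys and proxy host are all assumptions. When a proxy is set, the full target URL is appended to the proxy origin, which is the URL format the Worker example later in this document expects.

```javascript
// Hypothetical per-service config: each service keeps its own base URL,
// its own keys (placeholders here), and an optional reverse proxy origin.
const services = {
  openai: { baseUrl: 'https://api.openai.com/v1', keys: ['KEY_1', 'KEY_2'], proxy: '' },
  deepseek: { baseUrl: 'https://api.deepseek.com/v1', keys: ['KEY_3'], proxy: 'https://proxy.example.com' },
};

// Round-robin over a service's keys to spread calls across per-key rate limits.
const keyCursor = new Map();
function nextKey(name) {
  const { keys } = services[name];
  const i = keyCursor.get(name) ?? 0;
  keyCursor.set(name, (i + 1) % keys.length);
  return keys[i];
}

// Build the URL and fetch() options for an OpenAI-style chat completion.
function buildChatRequest(name, model, messages) {
  const service = services[name];
  const target = `${service.baseUrl}/chat/completions`;
  // With a proxy, the full target URL is appended to the proxy origin.
  const url = service.proxy ? `${service.proxy}/${target}` : target;
  return {
    url,
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${nextKey(name)}`,
      },
      body: JSON.stringify({ model, messages }),
    },
  };
}

const req = buildChatRequest('deepseek', 'deepseek-chat', [{ role: 'user', content: 'Hi' }]);
console.log(req.url); // https://proxy.example.com/https://api.deepseek.com/v1/chat/completions
// In a browser you would then call: fetch(req.url, req.init)
```

Rotating keys on every request is only one simple policy. The project may instead switch keys manually or only after an error.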

The descriptions in this document may lag behind the site; the live website is authoritative.

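- Hover over any message (tap it on mobile) to reveal the buttons for inserting a message and asking again.

- At the top of a topic you can export it, delete it, or insert a message at the top.

- Double-click a message, or click the edit button below it, to edit it; while editing you can change the message's role freely.

- A new message can be assigned any role, and can be added to the context only, without triggering a request.

- The avatars and nicknames of the assistant and the user are configurable.

- Prompts (assistant settings, persona settings) can be managed in groups, and the order of the groups can be adjusted freely.

- Each group can contain any number of prompts, and their order can also be adjusted.

- Groups can be enabled and disabled at any time, which helps control content generation.

- Post-prompts are supported. They are placed at the end of the context, so the instruction for generating the next message does not have to be inserted into the conversation itself; this works better for continuous generation.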

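- The configuration screen has three parts: the settings list, the setting details, and a preview. Settings can be ticked or unticked at any time during use to adjust the generated content.

- From More > Import tavern character card JSON at the top of the configuration screen, you can import a Chub.ai character card JSON file as a setting.

- Session configuration is independent for each session.

- Multiple keys can be configured to work around the call-frequency limit of a single key.

- You can switch models, and switch between different large language models.

- Each session can be assigned its own model.

- Parameters supported by the AI can be configured if needed; normally the defaults are fine.

- The context count can be specified. For AIs that support context, it sets how many records from the current topic are loaded and sent to the AI with each request. For simulated chat, 10 or more is recommended; for questions or writing, 1 is recommended, and when a question temporarily needs earlier content, you can tick those messages before sending.

- The API proxy address is configurable. Requests are sent directly from the browser rather than forwarded through a server, so the proxy must allow cross-origin requests from this site. Many users accessing the API from the same IP may risk account bans, so you can use your own proxy address here. See chatgptProxyAPI for reference.

- Except for the few settings marked otherwise, all settings apply only to the current session.

- No cloud storage or sync: because this is only a static web page with no server, all data is stored in the browser and is deleted when the browser's data is cleared.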
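The prompt-group, post-prompt, and context-count options above together decide what is sent with each request. Below is a minimal sketch of that assembly. It assumes hypothetical data shapes: groups with `enabled` and `prompts`, and topic messages with a `checked` flag for messages ticked into the context. The project's actual ordering rules may differ.

```javascript
// Assemble the messages sent to the model (hypothetical data shapes).
function buildContext({ groups, postPrompts, messages, contextCount }) {
  // 1. Prompts from enabled groups, in group order, then prompt order.
  const settings = groups
    .filter(g => g.enabled)
    .flatMap(g => g.prompts);

  // 2. The last `contextCount` topic messages, plus any earlier messages
  //    the user ticked for this request; chronological order is kept.
  const start = Math.max(0, messages.length - contextCount);
  const history = messages.filter((m, i) => i >= start || m.checked);

  // 3. Post-prompts go last, so the "what to write next" instruction
  //    never has to be stored in the topic itself.
  const toApi = ({ role, content }) => ({ role, content });
  return [...settings, ...history, ...postPrompts].map(toApi);
}

const ctx = buildContext({
  groups: [
    { enabled: true, prompts: [{ role: 'system', content: 'You are a novelist.' }] },
    { enabled: false, prompts: [{ role: 'system', content: 'Reply in French.' }] },
  ],
  postPrompts: [{ role: 'system', content: 'Continue the story.' }],
  messages: [
    { role: 'user', content: 'Chapter 1 outline', checked: true },
    { role: 'assistant', content: 'Chapter 1 text' },
    { role: 'user', content: 'Chapter 2 outline' },
  ],
  contextCount: 1,
});
console.log(ctx.map(m => m.content).join(' | '));
// You are a novelist. | Chapter 1 outline | Chapter 2 outline | Continue the story.
```

With a context count of 1, only the newest message plus the ticked outline are sent. The disabled group and the unticked assistant reply are left out.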

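This project is now purely frontend and does not inherently need its own deployment. If you want to modify it and use your own backend, refer to the following.

- This is just a Next.js project, so it can be deployed quickly with a service such as Cloudflare Pages (use static-site mode, which is also how the public site above is deployed):

  - Fork the repository.
  - Connect your GitHub account to Cloudflare.
  - In Pages, create a project from the GitHub repository.
  - Wait for the first build to finish.
  - Open the default Pages domain.
  - If you have your own domain, bind it instead, because the default domain is blocked in mainland China.

- If you want to use your own backend (for example, switching to a free service or adding ads):

  - Change the default backend address in AiService/ServiceProvider.ts, and remove the custom proxy address from the settings.
  - To add a new AI service (for example, a Chinese domestic one), implement the AiService/IAiService.ts interface, then add its type name and corresponding initialization in AiService/ServiceProvider.ts.
  - If you need a login feature, you will have to write it yourself.

- Feel free to modify the project further. It is licensed under the MIT open-source license.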
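The backend notes above describe a registry pattern. Each AI service implements a shared interface (`AiService/IAiService.ts`), and `AiService/ServiceProvider.ts` maps a type name to its initializer. The sketch below illustrates that pattern in plain JavaScript only. `EchoService`, `UpperService`, `sendMessage`, and `serviceFactories` are hypothetical and do not reproduce the project's TypeScript interface.

```javascript
// Hypothetical stand-ins for IAiService implementations: every service
// exposes the same method, so callers do not care which backend they use.
class EchoService {
  constructor(config) { this.config = config; }
  async sendMessage(messages) {
    const last = messages[messages.length - 1];
    return `echo(${this.config.baseUrl}): ${last.content}`;
  }
}

class UpperService {
  constructor(config) { this.config = config; }
  async sendMessage(messages) {
    return messages[messages.length - 1].content.toUpperCase();
  }
}

// Hypothetical stand-in for ServiceProvider: type name -> initializer.
// Adding a new AI service means adding one entry here.
const serviceFactories = {
  Echo: config => new EchoService(config),
  Upper: config => new UpperService(config),
};

function createService(type, config) {
  const factory = serviceFactories[type];
  if (!factory) throw new Error(`Unknown AI service type: ${type}`);
  return factory(config);
}

createService('Echo', { baseUrl: 'https://example.com' })
  .sendMessage([{ role: 'user', content: 'hello' }])
  .then(console.log); // echo(https://example.com): hello
```

Keeping construction behind one lookup means the rest of the UI only ever calls the shared method. That is why a new backend needs just an interface implementation plus one registry entry.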

Reverse proxy example (a Cloudflare Worker script). Clients call it as `https://<worker-host>/<full target URL>`:

```javascript
// Cloudflare Worker (service-worker syntax): a CORS-enabled reverse proxy.
// Request format: https://<worker-host>/https://api.openai.com/v1/chat/completions
addEventListener('fetch', event => {
  event.respondWith(handleRequest(event.request));
});

async function handleRequest(request) {
  let url = new URL(request.url);
  if (url.pathname === '/') {
    return new Response('not found', { status: 404 });
  }

  // Origin of this proxy; the target URL starts at the next "http" after the scheme.
  const lastHost = url.origin;
  const start = url.href.indexOf('http', 4);
  // Without a target URL, substring(-1) would return the proxy's own URL and loop.
  if (start < 0) {
    return new Response('not found', { status: 404 });
  }
  url = new URL(url.href.substring(start));

  // Present the upstream's own host/origin and blank out forwarding headers.
  const newRequestHeaders = new Headers(request.headers);
  newRequestHeaders.set('Host', url.host);
  newRequestHeaders.set('Origin', url.origin); // was misspelled 'Orgin'
  newRequestHeaders.set('Referer', '');
  newRequestHeaders.set('X-Forwarded-For', '');
  newRequestHeaders.set('X-Real-IP', '');
  // Strip the proxy prefix from any header value that still contains it.
  for (const key of [...newRequestHeaders.keys()]) {
    newRequestHeaders.set(key, newRequestHeaders.get(key).replace(lastHost + '/', ''));
  }

  const modifiedRequest = new Request(url.toString(), {
    headers: newRequestHeaders,
    method: request.method,
    body: request.body,
    redirect: 'follow'
  });

  // Answer CORS preflight requests directly; forward everything else upstream.
  const response = request.method === 'OPTIONS'
    ? new Response(null, { status: 200 })
    : await fetch(modifiedRequest);

  // Copy the response so its headers are mutable, then add cross-origin headers.
  const modifiedResponse = new Response(response.body, response);
  modifiedResponse.headers.set('Access-Control-Allow-Origin', '*');
  modifiedResponse.headers.set('cache-control', 'public, max-age=14400');
  modifiedResponse.headers.set('Access-Control-Allow-Methods', 'GET, POST, PUT, DELETE, OPTIONS');
  // A bare '*' does not cover the Authorization header, so echo the headers
  // the preflight asked for and fall back to '*' otherwise.
  modifiedResponse.headers.set('Access-Control-Allow-Headers',
    request.headers.get('Access-Control-Request-Headers') || '*');
  modifiedResponse.headers.set('access-control-allow-credentials', 'true');
  modifiedResponse.headers.delete('content-security-policy');
  modifiedResponse.headers.delete('content-security-policy-report-only');
  modifiedResponse.headers.delete('clear-site-data');
  return modifiedResponse;
}
```
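The Worker finds the target URL by taking everything from the next occurrence of `http` after the proxy's own scheme. The helpers below isolate that mapping so it can be checked outside Cloudflare. The names `toProxyUrl` and `toTargetUrl` and the placeholder host `proxy.example.com` are hypothetical. The helpers also make one limitation visible: a proxy hostname that itself contains `http` would be cut at the wrong place.

```javascript
// Build the URL a client calls: proxy origin + "/" + full target URL.
function toProxyUrl(proxyOrigin, targetUrl) {
  return `${proxyOrigin}/${targetUrl}`;
}

// Same extraction as the Worker: skip the proxy's own "http" scheme
// (search from index 4) and parse the rest as the target URL.
function toTargetUrl(proxyHref) {
  const href = new URL(proxyHref).href; // normalized, like request.url
  const start = href.indexOf('http', 4);
  if (start < 0) throw new Error('No target URL in the path');
  return new URL(href.substring(start)).href;
}

const proxied = toProxyUrl('https://proxy.example.com', 'https://api.openai.com/v1/chat/completions?x=1');
console.log(proxied);
// https://proxy.example.com/https://api.openai.com/v1/chat/completions?x=1
console.log(toTargetUrl(proxied));
// https://api.openai.com/v1/chat/completions?x=1
```

If you name your Worker route, pick a hostname without `http` in it. Alternatively, the extraction could be changed to search from the start of `url.pathname` instead of the whole href.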

Alternative AI tools for AI-Assistant-ChatGPT

Similar Open Source Tools

qianfan-starter

WenXin-Starter is a spring-boot-starter for Baidu's 'WenXin Workshop' large model, facilitating quick integration of Baidu's AI capabilities. It provides complete integration with WenXin Workshop's official API documentation, and supports WenShengTu (text-to-image), built-in conversation memory, and conversation streaming. It also supports per-model QPS control and a queuing mechanism, with plugin support planned.

wenxin-starter

WenXin-Starter is a spring-boot-starter for Baidu's "Wenxin Qianfan WENXINWORKSHOP" large model, which can help you quickly access Baidu's AI capabilities. It fully integrates the official API documentation of Wenxin Qianfan. Supports text-to-image generation, built-in dialogue memory, and supports streaming return of dialogue. Supports QPS control of a single model and supports queuing mechanism. Plugins will be added soon.

herc.ai

Herc.ai is a powerful library for interacting with the Herc.ai API. It offers free access to users and supports all languages. Users can benefit from Herc.ai's features unlimitedly with a one-time subscription and API key. The tool provides functionalities for question answering and text-to-image generation, with support for various models and customization options. Herc.ai can be easily integrated into CLI, CommonJS, TypeScript, and supports beta models for advanced usage. Developed by FiveSoBes and Luppux Development.

AutoAgents

AutoAgents is a cutting-edge multi-agent framework built in Rust that enables the creation of intelligent, autonomous agents powered by Large Language Models (LLMs) and Ractor. Designed for performance, safety, and scalability. AutoAgents provides a robust foundation for building complex AI systems that can reason, act, and collaborate. With AutoAgents you can create Cloud Native Agents, Edge Native Agents and Hybrid Models as well. It is so extensible that other ML Models can be used to create complex pipelines using Actor Framework.

Rankify

Rankify is a Python toolkit designed for unified retrieval, re-ranking, and retrieval-augmented generation (RAG) research. It integrates 40 pre-retrieved benchmark datasets and supports 7 retrieval techniques, 24 state-of-the-art re-ranking models, and multiple RAG methods. Rankify provides a modular and extensible framework, enabling seamless experimentation and benchmarking across retrieval pipelines. It offers comprehensive documentation, open-source implementation, and pre-built evaluation tools, making it a powerful resource for researchers and practitioners in the field.

rust-genai

genai is a multi-AI providers library for Rust that aims to provide a common and ergonomic single API to various generative AI providers such as OpenAI, Anthropic, Cohere, Ollama, and Gemini. It focuses on standardizing chat completion APIs across major AI services, prioritizing ergonomics and commonality. The library initially focuses on text chat APIs and plans to expand to support images, function calling, and more in the future versions. Version 0.1.x will have breaking changes in patches, while version 0.2.x will follow semver more strictly. genai does not provide a full representation of a given AI provider but aims to simplify the differences at a lower layer for ease of use.

polyfire-js

Polyfire is an all-in-one managed backend for AI apps that allows users to build AI apps directly from the frontend, eliminating the need for a separate backend. It simplifies the process by providing most backend services in just a few lines of code. With Polyfire, users can easily create chatbots, transcribe audio files to text, generate simple text, create a long-term memory, and generate images with Dall-E. The tool also offers starter guides and tutorials to help users get started quickly and efficiently.

auto-round

AutoRound is an advanced weight-only quantization algorithm for low-bits LLM inference. It competes impressively against recent methods without introducing any additional inference overhead. The method adopts sign gradient descent to fine-tune rounding values and minmax values of weights in just 200 steps, often significantly outperforming SignRound with the cost of more tuning time for quantization. AutoRound is tailored for a wide range of models and consistently delivers noticeable improvements.

acte

Acte is a framework designed to build GUI-like tools for AI Agents. It aims to address the issues of cognitive load and degrees of freedom when interacting with multiple APIs in complex scenarios. By providing a graphical user interface (GUI) for Agents, Acte helps reduce cognitive load and constrains interaction, similar to how humans interact with computers through GUIs. The tool offers APIs for starting new sessions, executing actions, and displaying screens, accessible via HTTP requests or the SessionManager class.

botserver

General Bots is a self-hosted AI automation platform and LLM conversational platform focused on convention over configuration and code-less approaches. It serves as the core API server handling LLM orchestration, business logic, database operations, and multi-channel communication. The platform offers features like multi-vendor LLM API, MCP + LLM Tools Generation, Semantic Caching, Web Automation Engine, Enterprise Data Connectors, and Git-like Version Control. It enforces a ZERO TOLERANCE POLICY for code quality and security, with strict guidelines for error handling, performance optimization, and code patterns. The project structure includes modules for core functionalities like Rhai BASIC interpreter, security, shared types, tasks, auto task system, file operations, learning system, and LLM assistance.

actor-core

Actor-core is a lightweight and flexible library for building actor-based concurrent applications in Java. It provides a simple API for creating and managing actors, as well as handling message passing between actors. With actor-core, developers can easily implement scalable and fault-tolerant systems using the actor model.

node-sdk

The ChatBotKit Node SDK is a JavaScript-based platform for building conversational AI bots and agents. It offers easy setup, serverless compatibility, modern framework support, customizability, and multi-platform deployment. With capabilities like multi-modal and multi-language support, conversation management, chat history review, custom datasets, and various integrations, this SDK enables users to create advanced chatbots for websites, mobile apps, and messaging platforms.

openai-scala-client

This is a no-nonsense async Scala client for OpenAI API supporting all the available endpoints and params including streaming, chat completion, vision, and voice routines. It provides a single service called OpenAIService that supports various calls such as Models, Completions, Chat Completions, Edits, Images, Embeddings, Batches, Audio, Files, Fine-tunes, Moderations, Assistants, Threads, Thread Messages, Runs, Run Steps, Vector Stores, Vector Store Files, and Vector Store File Batches. The library aims to be self-contained with minimal dependencies and supports API-compatible providers like Azure OpenAI, Azure AI, Anthropic, Google Vertex AI, Groq, Grok, Fireworks AI, OctoAI, TogetherAI, Cerebras, Mistral, Deepseek, Ollama, FastChat, and more.

x

Ant Design X is a tool for crafting AI-driven interfaces effortlessly. It is built on the best practices of enterprise-level AI products, offering flexible and diverse atomic components for various AI dialogue scenarios. The tool provides out-of-the-box model integration with inference services compatible with OpenAI standards. It also enables efficient management of conversation data flows, supports rich template options, complete TypeScript support, and advanced theme customization. Ant Design X is designed to enhance development efficiency and deliver exceptional AI interaction experiences.

aiotieba

Aiotieba is an asynchronous Python library for interacting with the Tieba API. It provides a comprehensive set of features for working with Tieba, including support for authentication, thread and post management, and image and file uploading. Aiotieba is well-documented and easy to use, making it a great choice for developers who want to build applications that interact with Tieba.

For similar tasks

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

react-native-vercel-ai

Run the Vercel AI package on React Native, Expo, web, and universal apps. React Native's fetch API currently does not support streaming, which Vercel AI uses by default. This package lets you use the AI library on React Native, and it works best in Expo universal native apps. On mobile you get responses without streaming through the same `useChat` and `useCompletion` API, and on the web it falls back to `ai/react`.

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙LLaMA/LLaVA model (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLM (Large Language Model) in your application with LLamaSharp.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

For similar jobs

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

obsidian-textgenerator-plugin

Text Generator is an open-source AI Assistant Tool that leverages Generative Artificial Intelligence to enhance knowledge creation and organization in Obsidian. It allows users to generate ideas, titles, summaries, outlines, and paragraphs based on their knowledge database, offering endless possibilities. The plugin is free and open source, compatible with Obsidian for a powerful Personal Knowledge Management system. It provides flexible prompts, template engine for repetitive tasks, community templates for shared use cases, and highly flexible configuration with services like Google Generative AI, OpenAI, and HuggingFace.

video2blog

video2blog is an open-source project aimed at converting videos into textual notes. The tool follows a process of extracting video information using yt-dlp, downloading the video, downloading subtitles if available, translating subtitles if not in Chinese, generating Chinese subtitles using whisper if no subtitles exist, converting subtitles to articles using gemini, and manually inserting images from the video into the article. The tool provides a solution for creating blog content from video resources, enhancing accessibility and content creation efficiency.

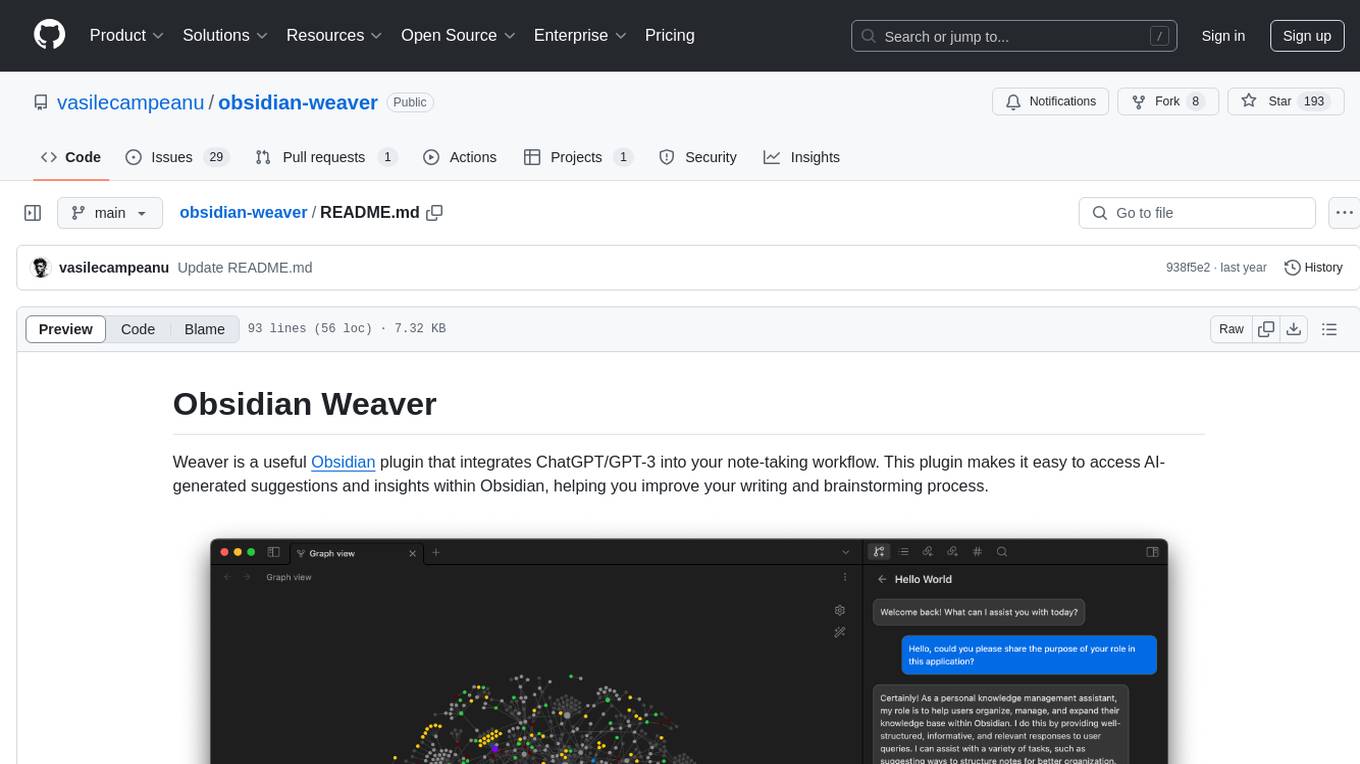

obsidian-weaver

Obsidian Weaver is a plugin that integrates ChatGPT/GPT-3 into the note-taking workflow of Obsidian. It allows users to easily access AI-generated suggestions and insights within Obsidian, enhancing the writing and brainstorming process. The plugin respects Obsidian's philosophy of storing notes locally, ensuring data security and privacy. Weaver offers features like creating new chat sessions with the AI assistant and receiving instant responses, all within the Obsidian environment. It provides a seamless integration with Obsidian's interface, making the writing process efficient and helping users stay focused. The plugin is constantly being improved with new features and updates to enhance the note-taking experience.

wordlift-plugin

WordLift is a plugin that helps online content creators organize posts and pages by adding facts, links, and media to build beautifully structured websites for both humans and search engines. It allows users to create, own, and publish their own knowledge graph, and publishes content as Linked Open Data following Tim Berners-Lee's Linked Data Principles. The plugin supports writers by providing trustworthy and contextual facts, enriching content with images, links, and interactive visualizations, keeping readers engaged with relevant content recommendations, and producing content compatible with schema.org markup for better indexing and display on search engines. It also offers features like creating a personal Wikipedia, publishing metadata to share and distribute content, and supporting content tagging for better SEO.

AI-Writing-Assistant

DeepWrite AI is an AI writing assistant tool created with the help of ChatGPT3. It is designed to generate perfect blog posts with utmost clarity. The tool is currently at version 1.0 with plans for further improvements. It is an open-source project, welcoming contributions. An extension has been developed for using the tool directly in Notepad, currently supported only on Calmly Writer. The tool requires installation and setup, utilizing technologies like React, Next, TailwindCSS, Node, and Express. For support, users can message the creator on Instagram. The creator, Sabir Khan, is an undergraduate student of Computer Science from Mumbai, known for frequently creating innovative projects.

MarkFlowy

MarkFlowy is a lightweight and feature-rich Markdown editor with built-in AI capabilities. It supports one-click export of conversations, translation of articles, and obtaining article abstracts. Users can leverage large AI models like DeepSeek and Chatgpt as intelligent assistants. The editor provides high availability with multiple editing modes and custom themes. Available for Linux, macOS, and Windows, MarkFlowy aims to offer an efficient, beautiful, and data-safe Markdown editing experience for users.