BrowserAI

Run local LLMs like llama, deepseek-distill, kokoro and more inside your browser

Stars: 856

BrowserAI is a production-ready tool that allows users to run AI models directly in the browser, offering simplicity, speed, privacy, and open-source capabilities. It provides WebGPU acceleration for fast inference, zero server costs, offline capability, and developer-friendly features. Perfect for web developers, companies seeking privacy-conscious AI solutions, researchers experimenting with browser-based AI, and hobbyists exploring AI without infrastructure overhead. The tool supports various AI tasks like text generation, speech recognition, and text-to-speech, with pre-configured popular models ready to use. It offers a simple SDK with multiple engine support and seamless switching between MLC and Transformers engines.

README:

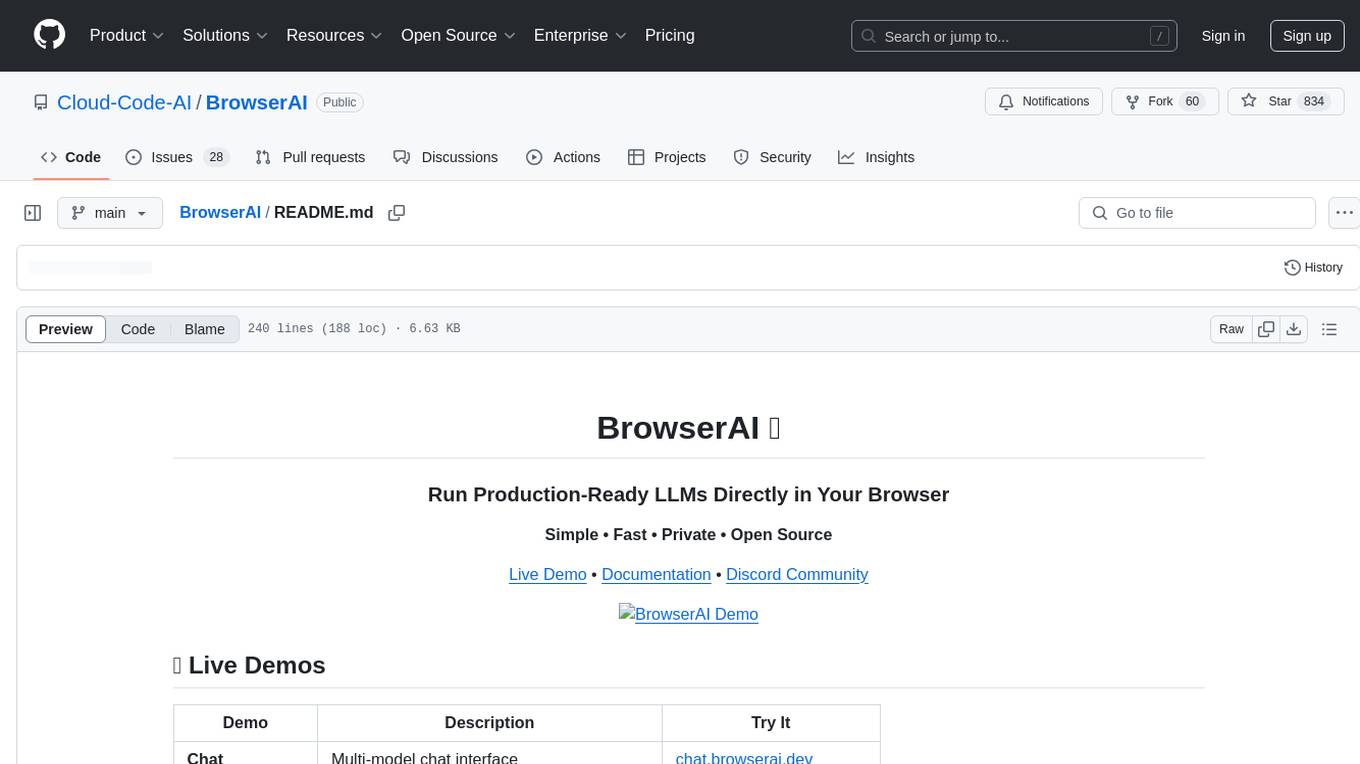

| Demo | Description | Try It |
|---|---|---|
| Chat | Multi-model chat interface | chat.browserai.dev |
| Voice Chat | Full-featured with speech recognition & TTS | voice-demo.browserai.dev |
| Text-to-Speech | Powered by Kokoro 82M | tts-demo.browserai.dev |

- 🔒 100% Private: All processing happens locally in your browser

- 🚀 WebGPU Accelerated: Near-native performance

- 💰 Zero Server Costs: No complex infrastructure needed

- 🌐 Offline Capable: Works without internet after initial download

- 🎯 Developer Friendly: Simple SDK with multiple engine support

- 📦 Production Ready: Pre-optimized popular models

- Web developers building AI-powered applications

- Companies needing privacy-conscious AI solutions

- Researchers experimenting with browser-based AI

- Hobbyists exploring AI without infrastructure overhead

- 🎯 Run AI models directly in the browser - no server required!

- ⚡ WebGPU acceleration for blazing fast inference

- 🔄 Seamless switching between MLC and Transformers engines

- 📦 Pre-configured popular models ready to use

- 🛠️ Easy-to-use API for text generation and more

- 🔧 Web Worker support for non-blocking UI performance

- 📊 Structured output generation with JSON schemas

- 🎙️ Speech recognition and text-to-speech capabilities

- 💾 Built-in database support for storing conversations and embeddings
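Structured output is only useful if the caller checks what comes back. The following is a minimal sketch, assuming the model's reply arrives as a JSON string and that the requested schema is an object with a `colors` array of `{ name, hex }` entries. Both the shape and the `parseColors` helper are hypothetical illustrations and are not part of the BrowserAI SDK.

```javascript
// Hypothetical helper, not part of the BrowserAI SDK: parse a reply that is
// expected to look like { colors: [{ name: string, hex: string }] }.
function parseColors(reply) {
  let data;
  try {
    data = JSON.parse(reply);
  } catch {
    throw new Error('Reply is not valid JSON');
  }
  if (!data || !Array.isArray(data.colors)) {
    throw new Error('Reply has no "colors" array');
  }
  // Keep only entries whose fields have the types the schema asks for.
  return data.colors.filter(
    (c) => c && typeof c.name === 'string' && typeof c.hex === 'string'
  );
}
```

Entries that do not match the expected field types are dropped rather than repaired, so the caller can compare the result length with the number of items it asked for and retry if needed.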

npm install @browserai/browserai

OR

yarn add @browserai/browserai

import { BrowserAI } from '@browserai/browserai';

const browserAI = new BrowserAI();

// Load model with progress tracking

await browserAI.loadModel('llama-3.2-1b-instruct', {

quantization: 'q4f16_1',

onProgress: (progress) => console.log('Loading:', progress.progress + '%')

});

// Generate text

const response = await browserAI.generateText('Hello, how are you?');

console.log(response);

// Text generation with custom parameters

const response = await browserAI.generateText('Write a short poem about coding', {

temperature: 0.8,

max_tokens: 100,

system_prompt: "You are a creative poet specialized in technology themes."

});

// Chat with a system prompt

const ai = new BrowserAI();

await ai.loadModel('gemma-2b-it');

const response = await ai.generateText([

{ role: 'system', content: 'You are a helpful assistant.' },

{ role: 'user', content: 'What is WebGPU?' }

]);

// Structured output constrained by a JSON schema

const response = await browserAI.generateText('List 3 colors', {

json_schema: {

type: "object",

properties: {

colors: {

type: "array",

items: {

type: "object",

properties: {

name: { type: "string" },

hex: { type: "string" }

}

}

}

}

},

response_format: { type: "json_object" }

});

// Speech recognition

const browserAI = new BrowserAI();

await browserAI.loadModel('whisper-tiny-en');

// Using the built-in recorder

await browserAI.startRecording();

const audioBlob = await browserAI.stopRecording();

const transcription = await browserAI.transcribeAudio(audioBlob, {

return_timestamps: true,

language: 'en'

});

// Text-to-speech

const ai = new BrowserAI();

await ai.loadModel('kokoro-tts');

const audioBuffer = await ai.textToSpeech('Hello, how are you today?', {

voice: 'af_bella',

speed: 1.0

});

// Play the audio using Web Audio API

const audioContext = new AudioContext();

const source = audioContext.createBufferSource();

audioContext.decodeAudioData(audioBuffer, (buffer) => {

source.buffer = buffer;

source.connect(audioContext.destination);

source.start(0);

});

More models will be added soon. Request a model by creating an issue.

- Llama-3.2-1b-Instruct

- Llama-3.2-3b-Instruct

- Hermes-Llama-3.2-3b

- SmolLM2-135M-Instruct

- SmolLM2-360M-Instruct

- SmolLM2-1.7B-Instruct

- Qwen-0.5B-Instruct

- Gemma-2B-IT

- TinyLlama-1.1B-Chat-v0.4

- Phi-3.5-mini-instruct

- Qwen2.5-1.5B-Instruct

- DeepSeek-R1-Distill-Qwen-7B

- DeepSeek-R1-Distill-Llama-8B

- Snowflake-Arctic-Embed-M-B32

- Snowflake-Arctic-Embed-S-B32

- Snowflake-Arctic-Embed-M-B4

- Snowflake-Arctic-Embed-S-B4

- Llama-3.2-1b-Instruct

- Whisper-tiny-en (Speech Recognition)

- Whisper-base-all (Speech Recognition)

- Whisper-small-all (Speech Recognition)

- Kokoro-TTS (Text-to-Speech)

- 🎯 Simplified model initialization

- 📊 Basic monitoring and metrics

- 🔍 Simple RAG implementation

- 🛠️ Developer tools integration

- 📚 Enhanced RAG capabilities
  - Hybrid search
  - Auto-chunking
  - Source tracking

- 📊 Advanced observability
  - Performance dashboards
  - Memory profiling
  - Error tracking

- 🔐 Security features

- 📈 Advanced analytics

- 🤝 Multi-model orchestration

We welcome contributions! Feel free to:

- Fork the repository

- Create your feature branch (`git checkout -b feature/amazing-feature`)

- Commit your changes (`git commit -m 'Add amazing feature'`)

- Push to the branch (`git push origin feature/amazing-feature`)

- Open a Pull Request

This project is licensed under the MIT License - see the LICENSE file for details.

- MLC AI for their incredible model compilation library and support for the WebGPU runtime and XGrammar

- Hugging Face and Xenova for their Transformers.js library, licensed under Apache License 2.0. The original code has been modified to work in a browser environment and converted to TypeScript.

- All our contributors and supporters!

Made with ❤️ for the AI community

- Modern browser with WebGPU support (Chrome 113+, Edge 113+, or equivalent)

- For models with a `shader-f16` requirement, hardware must support 16-bit floating point operations
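The requirements above can be checked at runtime before a model is loaded. The following is a minimal sketch using the standard WebGPU entry points (`navigator.gpu.requestAdapter()` and the adapter's `features` set). The helper names `summarizeAdapter` and `detectWebGPU` are hypothetical and are not part of the BrowserAI SDK.

```javascript
// Summarize what a WebGPU adapter can do. `adapter` is the value resolved by
// navigator.gpu.requestAdapter(); it is null when no suitable GPU is found.
function summarizeAdapter(adapter) {
  if (!adapter) {
    return { webgpu: false, shaderF16: false };
  }
  // adapter.features is a set-like collection of supported feature names.
  return { webgpu: true, shaderF16: adapter.features.has('shader-f16') };
}

// Hypothetical helper (not part of the BrowserAI SDK): resolves to the summary
// for the current environment, reporting "unsupported" when navigator.gpu is absent.
async function detectWebGPU() {
  const gpu = globalThis.navigator?.gpu;
  if (!gpu) {
    return summarizeAdapter(null);
  }
  return summarizeAdapter(await gpu.requestAdapter());
}
```

A page could call `detectWebGPU()` on startup and, when `shaderF16` is false, steer users away from models that need 16-bit floating point shaders.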


Alternative AI tools for BrowserAI

Similar Open Source Tools


BrowserAI

BrowserAI is a tool that allows users to run large language models (LLMs) directly in the browser, providing a simple, fast, and open-source solution. It prioritizes privacy by processing data locally, is cost-effective with no server costs, works offline after initial download, and offers WebGPU acceleration for high performance. It is developer-friendly with a simple API, supports multiple engines, and comes with pre-configured models for easy use. Ideal for web developers, companies needing privacy-conscious AI solutions, researchers experimenting with browser-based AI, and hobbyists exploring AI without infrastructure overhead.

R2R

R2R (RAG to Riches) is a fast and efficient framework for serving high-quality Retrieval-Augmented Generation (RAG) to end users. The framework is designed with customizable pipelines and a feature-rich FastAPI implementation, enabling developers to quickly deploy and scale RAG-based applications. R2R was conceived to bridge the gap between local LLM experimentation and scalable production solutions. **R2R is to LangChain/LlamaIndex what NextJS is to React**. A JavaScript client for R2R deployments is also available.

Key features:

- **🚀 Deploy**: Instantly launch production-ready RAG pipelines with streaming capabilities.
- **🧩 Customize**: Tailor your pipeline with intuitive configuration files.
- **🔌 Extend**: Enhance your pipeline with custom code integrations.
- **⚖️ Autoscale**: Scale your pipeline effortlessly in the cloud using SciPhi.
- **🤖 OSS**: Benefit from a framework developed by the open-source community, designed to simplify RAG deployment.

quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

alphora

Alphora is a full-stack framework for building production AI agents, providing agent orchestration, prompt engineering, tool execution, memory management, streaming, and deployment with an async-first, OpenAI-compatible design. It offers features like agent derivation, reasoning-action loop, async streaming, visual debugger, OpenAI compatibility, multimodal support, tool system with zero-config tools and type safety, prompt engine with dynamic prompts, memory and storage management, sandbox for secure execution, deployment as API, and more. Alphora allows users to build sophisticated AI agents easily and efficiently.

mem0

Mem0 is a tool that provides a smart, self-improving memory layer for Large Language Models, enabling personalized AI experiences across applications. It offers persistent memory for users, sessions, and agents, self-improving personalization, a simple API for easy integration, and cross-platform consistency. Users can store memories, retrieve memories, search for related memories, update memories, get the history of a memory, and delete memories using Mem0. It is designed to enhance AI experiences by enabling long-term memory storage and retrieval.

agentscope

AgentScope is an agent-oriented programming tool for building LLM (Large Language Model) applications. It provides transparent development, realtime steering, agentic tools management, model agnostic programming, LEGO-style agent building, multi-agent support, and high customizability. The tool supports async invocation, reasoning models, streaming returns, async/sync tool functions, user interruption, group-wise tools management, streamable transport, stateful/stateless mode MCP client, distributed and parallel evaluation, multi-agent conversation management, and fine-grained MCP control. AgentScope Studio enables tracing and visualization of agent applications. The tool is highly customizable and encourages customization at various levels.

dexto

Dexto is a lightweight runtime for creating and running AI agents that turn natural language into real-world actions. It serves as the missing intelligence layer for building AI applications, standalone chatbots, or as the reasoning engine inside larger products. Dexto features a powerful CLI and Web UI for running AI agents, supports multiple interfaces, allows hot-swapping of LLMs from various providers, connects to remote tool servers via the Model Context Protocol, is config-driven with version-controlled YAML, offers production-ready core features, extensibility for custom services, and enables multi-agent collaboration via MCP and A2A.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. It supports inference on a variety of devices, quantization, and easy-to-use applications through an OpenAI API-compatible HTTP server and Python bindings.

executorch

ExecuTorch is an end-to-end solution for enabling on-device inference capabilities across mobile and edge devices including wearables, embedded devices and microcontrollers. It is part of the PyTorch Edge ecosystem and enables efficient deployment of PyTorch models to edge devices. Key value propositions of ExecuTorch are:

- **Portability:** Compatibility with a wide variety of computing platforms, from high-end mobile phones to highly constrained embedded systems and microcontrollers.
- **Productivity:** Enabling developers to use the same toolchains and SDK from PyTorch model authoring and conversion, to debugging and deployment to a wide variety of platforms.
- **Performance:** Providing end users with a seamless and high-performance experience due to a lightweight runtime and utilizing full hardware capabilities such as CPUs, NPUs, and DSPs.

ai-counsel

AI Counsel is a true deliberative consensus MCP server where AI models engage in actual debate, refine positions across multiple rounds, and converge with voting and confidence levels. It features two modes (quick and conference), mixed adapters (CLI tools and HTTP services), auto-convergence, structured voting, semantic grouping, model-controlled stopping, evidence-based deliberation, local model support, data privacy, context injection, semantic search, fault tolerance, and full transcripts. Users can run local and cloud models to deliberate on various questions, ground decisions in reality by querying code and files, and query past decisions for analysis. The tool is designed for critical technical decisions requiring multi-model deliberation and consensus building.

trpc-agent-go

A powerful Go framework for building intelligent agent systems with large language models (LLMs), hierarchical planners, memory, telemetry, and a rich tool ecosystem. tRPC-Agent-Go enables the creation of autonomous or semi-autonomous agents that reason, call tools, collaborate with sub-agents, and maintain long-term state. The framework provides detailed documentation, examples, and tools for accelerating the development of AI applications.

ai

A TypeScript toolkit for building AI-driven video workflows on the server, powered by Mux! @mux/ai provides purpose-driven workflow functions and primitive functions that integrate with popular AI/LLM providers like OpenAI, Anthropic, and Google. It offers pre-built workflows for tasks like generating summaries and tags, content moderation, chapter generation, and more. The toolkit is cost-effective, supports multi-modal analysis, tone control, and configurable thresholds, and provides full TypeScript support. Users can easily configure credentials for Mux and AI providers, as well as cloud infrastructure like AWS S3 for certain workflows. @mux/ai is production-ready, offers composable building blocks, and supports universal language detection.

flutter_gen_ai_chat_ui

A modern, high-performance Flutter chat UI kit for building beautiful messaging interfaces. Features streaming text animations, markdown support, file attachments, and extensive customization options. Perfect for AI assistants, customer support, team chat, social messaging, and any conversational application. Production Ready, Cross-Platform, High Performance, Fully Customizable. Core features include dark/light mode, word-by-word streaming with animations, enhanced markdown support, speech-to-text integration, responsive layout, RTL language support, high performance message handling, improved pagination support. AI-specific features include customizable welcome message, example questions component, persistent example questions, AI typing indicators, streaming markdown rendering. New AI Actions System with function calling support, generative UI, human-in-the-loop confirmation dialogs, real-time status updates, type-safe parameters, event streaming, error handling. UI components include customizable message bubbles, custom bubble builder, multiple input field styles, loading indicators, smart scroll management, enhanced theme customization, better code block styling.

logicstamp-context

LogicStamp Context is a static analyzer that extracts deterministic component contracts from TypeScript codebases, providing structured architectural context for AI coding assistants. It helps AI assistants understand architecture by extracting props, hooks, and dependencies without implementation noise. The tool works with React, Next.js, Vue, Express, and NestJS, and is compatible with various AI assistants like Claude, Cursor, and MCP agents. It offers features like watch mode for real-time updates, breaking change detection, and dependency graph creation. LogicStamp Context is a security-first tool that protects sensitive data, runs locally, and is non-opinionated about architectural decisions.

For similar tasks


LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

- An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).
- A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.
- Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.
- Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

- **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).
- **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).
- **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.