quantalogic

Quantalogic ReAct Agent - Coding Agent Framework - Give a ⭐️ if you like the project

Stars: 376

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

README:

Hey there, welcome to QuantaLogic—your cosmic toolkit for crafting AI agents and workflows that shine! Whether you’re coding up a storm, automating a business process, chatting with a clever assistant, or dreaming up something wild, QuantaLogic is here to make it happen. We’re talking large language models (LLMs) fused with a stellar toolset, featuring three powerhouse approaches: the ReAct framework for dynamic problem-solving, the dazzling new Flow module for structured brilliance, and a shiny Chat mode for conversational magic with tool-calling capabilities.

Picture this: a CLI that’s as easy as a snap, a Python API that’s pure magic, and a framework that scales from quick hacks to galactic enterprises. Ready to launch? Let’s blast off!


At QuantaLogic, we spotted a black hole: amazing AI models from OpenAI, Anthropic, and DeepSeek weren’t fully lighting up real-world tasks. Our mission? Ignite that spark! We’re here to make generative AI a breeze for developers, businesses, and dreamers alike—turning ideas into action, one brilliant solution at a time, whether through task-solving, structured workflows, or natural conversation.

"AI should be your co-pilot, not a puzzle. QuantaLogic makes it happen—fast, fun, and fearless!"

- ReAct Framework: Reasoning + action = unstoppable agents!

- Flow Module: Structured workflows that flow like a river.

- Chat Mode: Conversational brilliance with tool-calling powers.

- LLM Galaxy: Tap into OpenAI, DeepSeek, and more via LiteLLM.

- Secure Tools: Docker-powered safety for code and files.

- Live Monitoring: Watch it unfold with a web interface and SSE.

- Memory Magic: Smart context keeps things snappy.

- Enterprise-Ready: Logs, error handling, and validation—rock solid.

- Why QuantaLogic?

- Key Features

- Installation

- Quick Start

- ReAct Framework: Dynamic Agents

- Flow Module: Structured Workflows

- 📘 Workflow YAML DSL Specification: Comprehensive guide to defining powerful, structured workflows using our Domain-Specific Language

- 📚 Flow YAML Documentation: Dive into the official documentation for a deeper understanding of Flow YAML and its applications

- Chat Mode: Conversational Power

- ReAct vs. Flow vs. Chat: Pick Your Power

- Using the CLI

- Examples That Spark Joy

- Core Components

- Developing with QuantaLogic

- Contributing

- License

- Project Growth

- API Keys and Environment Configuration

Let’s get QuantaLogic orbiting your system—it’s as easy as 1-2-3!

- Python 3.12+: The fuel for our rocket.

- Docker (optional): Locks down code execution in a safe pod.

# Option 1: pip
pip install quantalogic

# Option 2: pipx
pipx install quantalogic

# Option 3: from source
git clone https://github.com/quantalogic/quantalogic.git
cd quantalogic
python -m venv .venv
source .venv/bin/activate # Windows: .venv\Scripts\activate
poetry install

Tip: No Poetry? Grab it with `pip install poetry` and join the crew!

Ready to see the magic? Here’s your launchpad:

quantalogic task "Write a Python function for Fibonacci numbers"

Boom! ReAct whips up a solution in seconds.

quantalogic chat --persona "You are a witty space explorer" "Tell me about Mars with a search"

Chat mode engages, uses tools if needed, and delivers a conversational response!

from quantalogic import Agent

agent = Agent(model_name="deepseek/deepseek-chat")
result = agent.solve_task("Code a Fibonacci function")
print(result)
# Output: "def fib(n): return [0, 1] if n <= 2 else fib(n-1) + [fib(n-1)[-1] + fib(n-1)[-2]]"

from quantalogic import Agent

agent = Agent(model_name="gpt-4o", chat_system_prompt="You are a friendly guide")
response = agent.chat("What's the weather like in Tokyo?")
print(response)
# Engages in conversation, potentially calling a weather tool if configured

from quantalogic import Agent

# Create a synchronous agent
agent = Agent(model_name="gpt-4o")

# Solve a task synchronously
result = agent.solve_task(
    task="Write a Python function to calculate Fibonacci numbers",
    max_iterations=10  # Optional: limit iterations
)
print(result)

import asyncio

from quantalogic import Agent

async def main():
    # Create an async agent
    agent = Agent(model_name="gpt-4o")

    # Solve a task asynchronously with streaming
    result = await agent.async_solve_task(
        task="Write a Python script to scrape top GitHub repositories",
        max_iterations=15,  # Optional: limit iterations
        streaming=True  # Optional: stream the response
    )
    print(result)

# Run the async function
asyncio.run(main())

from quantalogic import Agent

from quantalogic.console_print_events import console_print_events
from quantalogic.console_print_token import console_print_token
from quantalogic.tools import (
    DuckDuckGoSearchTool,
    TechnicalAnalysisTool,
    YFinanceTool
)

# Create an agent with finance-related tools
agent = Agent(
    model_name="gpt-4o",
    tools=[
        DuckDuckGoSearchTool(),  # Web search tool
        TechnicalAnalysisTool(),  # Stock technical analysis
        YFinanceTool()  # Stock data retrieval
    ]
)

# Set up comprehensive event listeners
agent.event_emitter.on(
    event=[
        "task_complete",
        "task_think_start",
        "task_think_end",
        "tool_execution_start",
        "tool_execution_end",
        "error_max_iterations_reached",
        "memory_full",
        "memory_compacted"
    ],
    listener=console_print_events
)

# Optional: Monitor streaming tokens
agent.event_emitter.on(
    event=["stream_chunk"],
    listener=console_print_token
)

# Execute a multi-step financial analysis task
result = agent.solve_task(
    "1. Find the top 3 tech stocks for Q3 2024 "
    "2. Retrieve historical stock data for each "
    "3. Calculate 50-day and 200-day moving averages "
    "4. Provide a brief investment recommendation",
    streaming=True  # Enable streaming for detailed output
)
print(result)

import asyncio

from quantalogic import Agent
from quantalogic.console_print_events import console_print_events
from quantalogic.console_print_token import console_print_token
from quantalogic.tools import (
    DuckDuckGoSearchTool,
    TechnicalAnalysisTool,
    YFinanceTool
)

async def main():
    # Create an async agent with finance-related tools
    agent = Agent(
        model_name="gpt-4o",
        tools=[
            DuckDuckGoSearchTool(),  # Web search tool
            TechnicalAnalysisTool(),  # Stock technical analysis
            YFinanceTool()  # Stock data retrieval
        ]
    )

    # Set up comprehensive event listeners
    agent.event_emitter.on(
        event=[
            "task_complete",
            "task_think_start",
            "task_think_end",
            "tool_execution_start",
            "tool_execution_end",
            "error_max_iterations_reached",
            "memory_full",
            "memory_compacted"
        ],
        listener=console_print_events
    )

    # Optional: Monitor streaming tokens
    agent.event_emitter.on(
        event=["stream_chunk"],
        listener=console_print_token
    )

    # Execute a multi-step financial analysis task asynchronously
    result = await agent.async_solve_task(
        "1. Find emerging AI technology startups "
        "2. Analyze their recent funding rounds "
        "3. Compare market potential and growth indicators "
        "4. Provide an investment trend report",
        streaming=True  # Enable streaming for detailed output
    )
    print(result)

# Run the async function
asyncio.run(main())

from quantalogic.flow import Workflow, Nodes

@Nodes.define(output="greeting")
def greet(name: str) -> str:
    return f"Hello, {name}!"

workflow = Workflow("greet").build()
# Note: `await` needs an async context (for example inside an `async def main()` run with `asyncio.run`)
result = await workflow.run({"name": "Luna"})
print(result["greeting"])  # "Hello, Luna!"

The ReAct framework is your AI sidekick: think fast, act smart. It pairs LLM reasoning with tool-powered action, perfect for tasks that need a bit of improvisation.

- You Say: "Write me a script."

- It Thinks: LLM plots the course.

- It Acts: Tools like `PythonTool` get to work.

- It Loops: Keeps going until it’s done.

Check this out:

graph TD

A[You: 'Write a script'] --> B[ReAct Agent]

B --> C{Reason with LLM}

C --> D[Call Tools]

D --> E[Get Results]

E --> F{Task Done?}

F -->|No| C

F -->|Yes| G[Deliver Answer]

G --> H[You: Happy!]

style A fill:#f9f,stroke:#333

style H fill:#bbf,stroke:#333

quantalogic task "Create a Python script to sort a list"

ReAct figures it out, writes the code, and hands it over, smooth as silk!

Perfect for coding, debugging, or answering wild questions on the fly.
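The reason, act, and loop cycle described above can be sketched in a few lines of plain Python. This is a minimal illustration and not QuantaLogic's implementation: `fake_llm`, `TOOLS`, and `react_loop` are hypothetical names, and the "LLM" is a stub that asks for one tool call and then finishes.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tool registry: tool name -> callable taking one string argument.
TOOLS: Dict[str, Callable[[str], str]] = {
    "sort_list": lambda arg: str(sorted(int(x) for x in arg.split(","))),
}


def fake_llm(task: str, observations: List[str]) -> Tuple[str, str]:
    """Stand-in for an LLM call: returns (action, argument).

    A real agent would send the task and past observations to a model.
    This stub requests one tool call, then finishes with the result.
    """
    if not observations:
        return "sort_list", "3,1,2"
    return "finish", f"Sorted result: {observations[-1]}"


def react_loop(task: str, max_iterations: int = 5) -> str:
    observations: List[str] = []
    for _ in range(max_iterations):
        action, argument = fake_llm(task, observations)  # reason
        if action == "finish":  # task done?
            return argument
        observations.append(TOOLS[action](argument))  # act, then observe
    return "Stopped: max iterations reached"


answer = react_loop("Sort the list 3,1,2")
print(answer)
```

The iteration cap plays the same role as the `max_iterations` argument shown in the Python API examples: it keeps a confused agent from looping forever.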

The Flow module is your architect—building workflows that hum with precision. It's all about nodes, transitions, and a steady rhythm, ideal for repeatable missions.

🔍 Want to dive deeper? Check out our comprehensive Workflow YAML DSL Specification, a detailed guide that walks you through defining powerful, structured workflows. From basic node configurations to complex transition logic, this documentation is your roadmap to mastering workflow design with QuantaLogic.

📚 For a deeper understanding of Flow YAML and its applications, please refer to the official Flow YAML Documentation.

The Flow YAML documentation provides a comprehensive overview of the Flow YAML language, including its syntax, features, and best practices. It's a valuable resource for anyone looking to create complex workflows with QuantaLogic.

Additionally, the Flow YAML documentation includes a number of examples and tutorials to help you get started with creating your own workflows. These examples cover a range of topics, from simple workflows to more complex scenarios, and are designed to help you understand how to use Flow YAML to create powerful and flexible workflows.

- Nodes: Tasks like functions or LLM calls.

- Transitions: Paths with optional conditions.

- Engine: Runs the show with flair.

- Observers: Peek at progress with events.
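To make the four concepts above concrete, here is a toy engine written from scratch. It is a sketch under stated assumptions, not the `quantalogic.flow` implementation: `ToyWorkflow`, `node`, and `transition` are hypothetical names, and only the event names `NODE_STARTED` and `NODE_COMPLETED` are borrowed from this README.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List, Optional, Tuple

Context = Dict[str, Any]
NodeFunc = Callable[[Context], Awaitable[Any]]
Condition = Optional[Callable[[Context], bool]]


class ToyWorkflow:
    """Illustrative only: nodes, conditional transitions, observers."""

    def __init__(self, start: str) -> None:
        self.start = start
        self.nodes: Dict[str, Tuple[NodeFunc, str]] = {}
        self.transitions: Dict[str, List[Tuple[str, Condition]]] = {}
        self.observers: List[Callable[[str, str], None]] = []

    def node(self, name: str, func: NodeFunc, output: str) -> "ToyWorkflow":
        self.nodes[name] = (func, output)
        return self

    def transition(self, src: str, dst: str, condition: Condition = None) -> "ToyWorkflow":
        self.transitions.setdefault(src, []).append((dst, condition))
        return self

    async def run(self, ctx: Context) -> Context:
        current: Optional[str] = self.start
        while current is not None:
            func, output = self.nodes[current]
            for observer in self.observers:
                observer("NODE_STARTED", current)
            ctx[output] = await func(ctx)  # store the node result in the context
            for observer in self.observers:
                observer("NODE_COMPLETED", current)
            # Follow the first transition whose condition holds; stop if none does.
            current = next(
                (dst for dst, cond in self.transitions.get(current, [])
                 if cond is None or cond(ctx)),
                None,
            )
        return ctx


async def add_chapter(ctx: Context) -> List[str]:
    chapters = ctx.get("chapters", [])
    return chapters + [f"Chapter {len(chapters) + 1}"]


async def compile_book(ctx: Context) -> str:
    return "\n".join(ctx["chapters"])


events: List[Tuple[str, str]] = []
wf = (
    ToyWorkflow("add_chapter")
    .node("add_chapter", add_chapter, output="chapters")
    .node("compile_book", compile_book, output="manuscript")
    .transition("add_chapter", "add_chapter",
                lambda c: len(c["chapters"]) < c["num_chapters"])
    .transition("add_chapter", "compile_book",
                lambda c: len(c["chapters"]) >= c["num_chapters"])
)
wf.observers.append(lambda event, node: events.append((event, node)))

result = asyncio.run(wf.run({"num_chapters": 3}))
print(result["manuscript"])
```

The self-transition on `add_chapter` shows how a conditional transition can express a loop: the node repeats until the context says enough chapters exist, then control moves on.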

from quantalogic.flow import Workflow, Nodes

@Nodes.llm_node(model="openai/gpt-4o-mini", output="chapter")
async def write_chapter(ctx: dict) -> str:
    return f"Chapter 1: {ctx['theme']}"

workflow = (
    Workflow("write_chapter")
    .then("end", condition="lambda ctx: True")
    .add_observer(lambda e: print(f" {e.event_type}"))
)

engine = workflow.build()
result = await engine.run({"theme": "Cosmic Quest"})
print(result["chapter"])

from typing import List

import anyio
from loguru import logger
from quantalogic.flow import Nodes, Workflow

# Define node functions with decorators
@Nodes.validate_node(output="validation_result")
async def validate_input(genre: str, num_chapters: int) -> str:
    """Validate input parameters."""
    if not (1 <= num_chapters <= 20 and genre.lower() in ["science fiction", "fantasy", "mystery", "romance"]):
        raise ValueError("Invalid input: genre must be one of science fiction, fantasy, mystery, romance")
    return "Input validated"

@Nodes.llm_node(
    model="gemini/gemini-2.0-flash",
    system_prompt="You are a creative writer specializing in story titles.",
    prompt_template="Generate a creative title for a {{ genre }} story. Output only the title.",
    output="title",
)
async def generate_title(genre: str) -> str:
    """Generate a title based on the genre (handled by llm_node)."""
    pass  # Logic handled by llm_node decorator

@Nodes.define(output="manuscript")
async def compile_book(title: str, outline: str, chapters: List[str]) -> str:
    """Compile the full manuscript from title, outline, and chapters."""
    return f"Title: {title}\n\nOutline:\n{outline}\n\n" + "\n\n".join(
        f"Chapter {i}:\n{chap}" for i, chap in enumerate(chapters, 1)
    )

# Define the workflow with conditional branching
workflow = (
    Workflow("validate_input")
    .then("generate_title")
    .then("generate_outline")
    .then("generate_chapter")
    .then("update_chapter_progress")
    .then("generate_chapter", condition=lambda ctx: ctx["completed_chapters"] < ctx["num_chapters"])
    .then("compile_book", condition=lambda ctx: ctx["completed_chapters"] >= ctx["num_chapters"])
    .then("quality_check")
    .then("end")
)

# Run the workflow
async def main():
    initial_context = {
        "genre": "science fiction",
        "num_chapters": 3,
        "chapters": [],
        "completed_chapters": 0,
    }
    engine = workflow.build()
    result = await engine.run(initial_context)

This example demonstrates:

- Input validation with `@Nodes.validate_node`

- LLM integration with `@Nodes.llm_node`

- Custom processing with `@Nodes.define`

- Conditional branching for iterative chapter generation

- Context management for tracking progress

The full example is available at examples/flow/story_generator/story_generator_agent.py.

graph LR

A[Start] --> B[WriteChapter]

B -->|Condition: True| C[End]

subgraph WriteChapter

D[Call LLM] --> E[Save Chapter]

end

A -->|Observer| F[Log: NODE_STARTED]

B -->|Observer| G[Log: NODE_COMPLETED]

style A fill:#dfd,stroke:#333

style C fill:#dfd,stroke:#333

style B fill:#ffb,stroke:#333

@Nodes.define(output="processed")
def clean_data(data: str) -> str:
    return data.strip().upper()

workflow = Workflow("clean_data").build()
result = await workflow.run({"data": " hello "})
print(result["processed"])  # "HELLO"

Think content pipelines, automation flows, or any multi-step task that needs order.

The Chat mode is your conversational companion—engaging, flexible, and tool-savvy. Built on the same robust ReAct foundation, it lets you chat naturally with an AI persona while seamlessly integrating tool calls when needed. Perfect for interactive dialogues or quick queries with a dash of utility.

- You Chat: "What’s the weather like today?"

- It Responds: Engages conversationally, deciding if a tool (like a weather lookup) is needed.

- Tool Magic: If required, it calls tools using the same XML-based system as ReAct, then weaves the results into the conversation.

- Keeps Going: Maintains context for a smooth, flowing chat.

quantalogic chat --persona "You are a helpful travel guide" "Find me flights to Paris"

The agent chats back: "Looking up flights to Paris… Here are some options from a search tool: [flight details]. Anything else I can help with?"

from quantalogic import Agent
from quantalogic.tools import DuckDuckGoSearchTool

agent = Agent(
    model_name="gpt-4o",
    chat_system_prompt="You are a curious explorer",
    tools=[DuckDuckGoSearchTool()]
)

response = agent.chat("Tell me about the tallest mountain")
print(response)
# Might output: "I’ll look that up! The tallest mountain is Mount Everest, standing at 8,848 meters, according to a quick search."

Chat mode uses the same tool-calling mechanism as ReAct:

<action>
  <duckduckgo_tool>
    <query>tallest mountain</query>
    <max_results>5</max_results>
  </duckduckgo_tool>
</action>

- Tools are auto-executed (configurable with `--auto-tool-call`) and results are formatted naturally.

- Prioritize specific tools with `--tool-mode` (e.g., `search` or `code`).
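To show what a tool call in this XML shape carries, here is a small sketch that extracts the tool name and its arguments with the standard library. `parse_action` is a hypothetical helper, not a QuantaLogic function, and it assumes the block is well-formed XML; the framework's own parser may be more forgiving of raw LLM output.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Tuple


def parse_action(xml_text: str) -> Tuple[str, Dict[str, str]]:
    """Return (tool_name, arguments) from an <action> block."""
    root = ET.fromstring(xml_text.strip())
    if root.tag != "action" or len(root) != 1:
        raise ValueError("expected <action> with exactly one tool element")
    tool = root[0]
    # Each child element of the tool is one named argument.
    arguments = {child.tag: (child.text or "").strip() for child in tool}
    return tool.tag, arguments


sample = """
<action>
  <duckduckgo_tool>
    <query>tallest mountain</query>
    <max_results>5</max_results>
  </duckduckgo_tool>
</action>
"""

tool_name, tool_args = parse_action(sample)
print(tool_name, tool_args)
```

All argument values arrive as strings, so a caller would still need to convert `max_results` to an integer before passing it to a tool.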

Ideal for casual chats, quick info lookups, or interactive assistance with tool-powered precision—without the rigid task-solving structure of ReAct.

All three modes are stellar, but here’s the scoop:

| Feature | ReAct Framework | Flow Module | Chat Mode |
|---|---|---|---|
| Vibe | Free-spirited, adaptive | Organized, predictable | Conversational, flexible |
| Flow | Loops ‘til it’s solved | Follows a roadmap | Flows with the chat |
| Sweet Spot | Creative chaos (coding, Q&A) | Steady workflows (pipelines) | Casual chats, quick queries |
| State | Memory keeps it loose | Nodes lock it down | Context keeps it flowing |
| Tools | Grabbed as needed | Baked into nodes | Called when relevant |
| Watch It | Events like `task_complete` | Observers like `NODE_STARTED` | Events like `chat_response` |

- ReAct: Code on-the-fly, explore answers, debug like a pro.

- Flow: Build a pipeline, automate a process, keep it tight.

- Chat: Converse naturally, get quick answers, use tools on demand.

The CLI is your command center—fast, flexible, and fun!

quantalogic [OPTIONS] COMMAND [ARGS]...

QuantaLogic AI Assistant - A powerful AI tool for various tasks.

- OpenAI: Set `OPENAI_API_KEY` to your OpenAI API key

- Anthropic: Set `ANTHROPIC_API_KEY` to your Anthropic API key

- DeepSeek: Set `DEEPSEEK_API_KEY` to your DeepSeek API key

Use a .env file or export these variables in your shell for seamless integration.

- `task`: Kick off a mission. Example: `quantalogic task "Summarize this file" --file notes.txt`

- `chat`: Start a conversation. Example: `quantalogic chat --persona "You are a tech guru" "What’s new in AI?"`

- `list-models`: List supported LiteLLM models with optional fuzzy search. Example: `quantalogic list-models --search "gpt"`

- `--model-name TEXT`: Specify the model to use (litellm format). Examples: `openai/gpt-4o-mini`, `openai/gpt-4o`, `anthropic/claude-3.5-sonnet`, `deepseek/deepseek-chat`, `deepseek/deepseek-reasoner`, `openrouter/deepseek/deepseek-r1`, `openrouter/openai/gpt-4o`

- `--mode [code|basic|interpreter|full|code-basic|search|search-full|chat]`: Agent mode

- `--vision-model-name TEXT`: Specify the vision model to use (litellm format)

- `--log [info|debug|warning]`: Set logging level

- `--verbose`: Enable verbose output

- `--max-iterations INTEGER`: Maximum number of iterations (default: 30, task mode only)

- `--max-tokens-working-memory INTEGER`: Set the maximum tokens allowed in working memory

- `--compact-every-n-iteration INTEGER`: Set the frequency of memory compaction

- `--thinking-model TEXT`: The thinking model to use

- `--persona TEXT`: Set the chat persona (chat mode only)

- `--tool-mode TEXT`: Prioritize a tool or toolset (chat mode only)

- `--auto-tool-call`: Enable/disable auto tool execution (chat mode only, default: True)

- `--version`: Show version information

Tip: Run `quantalogic --help` for the complete command reference!

Explore our collection of examples to see QuantaLogic in action:

- Flow Examples: Discover practical workflows showcasing Quantalogic Flow capabilities

- Agent Examples: See dynamic agents in action with the ReAct framework

- Tool Examples: Explore our powerful tool integrations

Each example comes with detailed documentation and ready-to-run code.

| Name | What’s It Do? | File |
|---|---|---|
| Simple Agent | Basic ReAct agent demo | 01-simple-agent.py |
| Event Monitoring | Agent with event tracking | 02-agent-with-event-monitoring.py |
| Interpreter Mode | Agent with interpreter | 03-agent-with-interpreter.py |
| Agent Summary | Task summary generation | 04-agent-summary-task.py |
| Code Generation | Basic code generation | 05-code.py |
| Code Screen | Advanced code generation | 06-code-screen.py |
| Tutorial Writer | Write technical tutorials | 07-write-tutorial.py |
| PRD Writer | Product Requirements Document | 08-prd-writer.py |
| Story Generator | Flow-based story creation | story_generator_agent.py |
| SQL Query | Database query generation | 09-sql-query.py |
| Finance Agent | Financial analysis and tasks | 10-finance-agent.py |
| Textual Interface | Agent with textual UI | 11-textual-agent-interface.py |
| Composio Test | Composio integration demo | 12-composio-test.py |
| Synchronous Agent | Synchronous agent demo | 13-synchronous-agent.py |
| Async Agent | Async agent demo | 14-async-agent.py |

quantalogic task "Solve 2x + 5 = 15"

Output: "Let’s solve it! 2x + 5 = 15 → 2x = 10 → x = 5. Done!"

quantalogic chat "Search for the latest AI breakthroughs"

Output: "I’ll dig into that! Here’s what I found with a search: [latest AI news]. Pretty cool, right?"

- Brain: LLMs power the thinking.

- Hands: Tools like `PythonTool` do the work.

- Memory: Ties it all together.

- Nodes: Your task blocks.

- Engine: The maestro of execution.

- Persona: Customizable conversational style.

- Tools: Integrated seamlessly via ReAct’s system.

- Context: Keeps the conversation flowing.

- Code: `PythonTool`, `NodeJsTool`.

- Files: `ReadFileTool`, `WriteFileTool`.

- More in REFERENCE_TOOLS.md.

git clone https://github.com/quantalogic/quantalogic.git
cd quantalogic
python -m venv venv
source venv/bin/activate
poetry install

pytest --cov=quantalogic

ruff format # Shine that code
mypy quantalogic # Check types
ruff check quantalogic # Lint it

The `create_tool()` function transforms any Python function into a reusable Tool:

from quantalogic.tools import create_tool

def weather_lookup(city: str, country: str = "US") -> dict:
    """Retrieve current weather for a given location.

    Args:
        city: Name of the city to look up
        country: Two-letter country code (default: US)

    Returns:
        Dictionary with weather information
    """
    # Implement weather lookup logic here
    return {"temperature": 22, "condition": "Sunny"}

# Convert the function to a Tool
weather_tool = create_tool(weather_lookup)

# Now you can use it as a Tool
print(weather_tool.to_markdown())  # Generate tool documentation
result = weather_tool.execute(city="New York")  # Execute as a tool

from quantalogic import Agent

from quantalogic.tools import create_tool, PythonTool

# Create a custom stock price lookup tool
def get_stock_price(symbol: str) -> str:
    """Get the current price of a stock by its ticker symbol.

    Args:
        symbol: Stock ticker symbol (e.g., AAPL, MSFT)

    Returns:
        Current stock price information
    """
    # In a real implementation, you would fetch from an API
    prices = {"AAPL": 185.92, "MSFT": 425.27, "GOOGL": 175.43}
    if symbol in prices:
        return f"{symbol} is currently trading at ${prices[symbol]}"
    return f"Could not find price for {symbol}"

# Create an agent with standard and custom tools
agent = Agent(
    model_name="gpt-4o",
    tools=[
        PythonTool(),  # Standard Python execution tool
        create_tool(get_stock_price)  # Custom stock price tool
    ]
)

# The agent can now use both tools to solve tasks
result = agent.solve_task(
    "Write a Python function to calculate investment growth, "
    "then analyze Apple stock's current price"
)
print(result)

from quantalogic import Agent

from quantalogic.tools import create_tool

# Reuse the stock price tool
stock_tool = create_tool(get_stock_price)

# Create a chat agent
agent = Agent(
    model_name="gpt-4o",
    chat_system_prompt="You are a financial advisor",
    tools=[stock_tool]
)

# Chat with tool usage
response = agent.chat("What’s the price of Microsoft stock?")
print(response)
# Might output: "Let me check that for you! MSFT is currently trading at $425.27."

Key features of `create_tool()`:

- 🔧 Automatically converts functions to Tools

- 📝 Extracts metadata from function signature and docstring

- 🔍 Supports both synchronous and asynchronous functions

- 🛠️ Generates tool documentation and validation
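For intuition about how metadata can be pulled from a signature and docstring, the sketch below uses only the standard `inspect` module. It is an assumption about the general technique, not the actual internals of `create_tool()`; `describe_function` is a hypothetical helper.

```python
import inspect
from typing import Any, Callable, Dict, List


def describe_function(func: Callable[..., Any]) -> Dict[str, Any]:
    """Collect name, summary line, sync/async flag, and parameters of a function."""
    signature = inspect.signature(func)
    parameters: List[Dict[str, Any]] = []
    for name, param in signature.parameters.items():
        has_default = param.default is not inspect.Parameter.empty
        annotation = param.annotation
        parameters.append({
            "name": name,
            # Use the class name for plain types such as str or int.
            "type": getattr(annotation, "__name__", str(annotation)),
            "required": not has_default,
            "default": param.default if has_default else None,
        })
    doc = inspect.getdoc(func) or ""
    return {
        "name": func.__name__,
        "description": doc.splitlines()[0] if doc else "",
        "is_async": inspect.iscoroutinefunction(func),
        "parameters": parameters,
    }


def weather_lookup(city: str, country: str = "US") -> dict:
    """Retrieve current weather for a given location."""
    return {"temperature": 22, "condition": "Sunny"}


meta = describe_function(weather_lookup)
print(meta["name"], [p["name"] for p in meta["parameters"]])
```

A parameter without a default is reported as required, which is the kind of information a tool wrapper needs in order to validate arguments before calling the function.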

Join the QuantaLogic galaxy!

- Fork it.

- Branch: `git checkout -b feature/epic-thing`.

- Code + test.

- PR it!

See CONTRIBUTING.md for the full scoop.

© 2024 QuantaLogic Contributors. Apache 2.0, free and open. Check LICENSE.

Dreamed up by Raphaël MANSUY, founder of QuantaLogic.

QuantaLogic links to LLMs via API keys—here’s your guide to unlocking the universe!

Store keys in a .env file or export them:

echo "OPENAI_API_KEY=sk-your-openai-key" > .env

echo "DEEPSEEK_API_KEY=ds-your-deepseek-key" >> .env

source .env

| Model Name | Key Variable | What’s It Good For? |
|---|---|---|
| `openai/gpt-4o-mini` | `OPENAI_API_KEY` | Speedy, budget-friendly tasks |
| `openai/gpt-4o` | `OPENAI_API_KEY` | Heavy-duty reasoning |
| `anthropic/claude-3.5-sonnet` | `ANTHROPIC_API_KEY` | Balanced brilliance |
| `deepseek/deepseek-chat` | `DEEPSEEK_API_KEY` | Chatty and versatile |
| `deepseek/deepseek-reasoner` | `DEEPSEEK_API_KEY` | Deep problem-solving |
| `openrouter/deepseek/deepseek-r1` | `OPENROUTER_API_KEY` | Research-grade via OpenRouter |
| `mistral/mistral-large-2407` | `MISTRAL_API_KEY` | Multilingual mastery |
| `dashscope/qwen-max` | `DASHSCOPE_API_KEY` | Alibaba’s power player |
| `lm_studio/mistral-small-24b-instruct-2501` | `LM_STUDIO_API_KEY` | Local LLM action |

export LM_STUDIO_API_BASE="http://localhost:1234/v1"
export LM_STUDIO_API_KEY="lm-your-key"

- Security: Keep keys in `.env`, not code!

- Extras: Add `OPENROUTER_REFERRER` for OpenRouter flair.

- More: Dig into LiteLLM Docs.
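A quick pre-flight check can catch a missing key before an agent starts. The sketch below is a hypothetical helper, not part of QuantaLogic: it maps the provider prefix of a model name to the key variable listed in the model table in this section and reports which variable is unset.

```python
import os
from typing import Dict, Mapping, Optional

# Provider prefix -> environment variable, copied from the model table in this section.
KEY_FOR_PROVIDER: Dict[str, str] = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "deepseek": "DEEPSEEK_API_KEY",
    "openrouter": "OPENROUTER_API_KEY",
    "mistral": "MISTRAL_API_KEY",
    "dashscope": "DASHSCOPE_API_KEY",
    "lm_studio": "LM_STUDIO_API_KEY",
}


def required_key(model_name: str) -> Optional[str]:
    """Return the key variable for a 'provider/model' name, or None if unknown."""
    provider = model_name.split("/", 1)[0]
    return KEY_FOR_PROVIDER.get(provider)


def missing_key(model_name: str, env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the name of the unset variable, or None if it is set or unknown."""
    key = required_key(model_name)
    if key is not None and not env.get(key):
        return key
    return None


print(required_key("openrouter/deepseek/deepseek-r1"))
print(missing_key("openai/gpt-4o", env={}))
```

Model names without a provider prefix, such as the bare `gpt-4o` used in some examples above, are not covered by this mapping and return `None`.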

QuantaLogic is your ticket to AI awesomeness. Install it, play with it—whether solving tasks, crafting workflows, or chatting up a storm—and let’s build something unforgettable together!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for quantalogic

Similar Open Source Tools

quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

sgr-deep-research

This repository contains a deep learning research project focused on natural language processing tasks. It includes implementations of various state-of-the-art models and algorithms for text classification, sentiment analysis, named entity recognition, and more. The project aims to provide a comprehensive resource for researchers and developers interested in exploring deep learning techniques for NLP applications.

core

CORE is an open-source unified, persistent memory layer for all AI tools, allowing developers to maintain context across different tools like Cursor, ChatGPT, and Claude. It aims to solve the issue of context switching and information loss between sessions by creating a knowledge graph that remembers conversations, decisions, and insights. With features like unified memory, temporal knowledge graph, browser extension, chat with memory, auto-sync from apps, and MCP integration hub, CORE provides a seamless experience for managing and recalling context. The tool's ingestion pipeline captures evolving context through normalization, extraction, resolution, and graph integration, resulting in a dynamic memory that grows and changes with the user. When recalling from memory, CORE utilizes search, re-ranking, filtering, and output to provide relevant and contextual answers. Security measures include data encryption, authentication, access control, and vulnerability reporting.

code_puppy

Code Puppy is an AI-powered code generation agent designed to understand programming tasks, generate high-quality code, and explain its reasoning. It supports multi-language code generation, interactive CLI, and detailed code explanations. The tool requires Python 3.9+ and API keys for various models like GPT, Google's Gemini, Cerebras, and Claude. It also integrates with MCP servers for advanced features like code search and documentation lookups. Users can create custom JSON agents for specialized tasks and access a variety of tools for file management, code execution, and reasoning sharing.

pocketgroq

PocketGroq is a tool that provides advanced functionalities for text generation, web scraping, web search, and AI response evaluation. It includes features like an Autonomous Agent for answering questions, web crawling and scraping capabilities, enhanced web search functionality, and flexible integration with Ollama server. Users can customize the agent's behavior, evaluate responses using AI, and utilize various methods for text generation, conversation management, and Chain of Thought reasoning. The tool offers comprehensive methods for different tasks, such as initializing RAG, error handling, and tool management. PocketGroq is designed to enhance development processes and enable the creation of AI-powered applications with ease.

open-responses

OpenResponses API provides enterprise-grade AI capabilities through a powerful API, simplifying development and deployment while ensuring complete data control. It offers automated tracing, integrated RAG for contextual information retrieval, pre-built tool integrations, self-hosted architecture, and an OpenAI-compatible interface. The toolkit addresses development challenges like feature gaps and integration complexity, as well as operational concerns such as data privacy and operational control. Engineering teams can benefit from improved productivity, production readiness, compliance confidence, and simplified architecture by choosing OpenResponses.

Acontext

Acontext is a context data platform designed for production AI agents, offering unified storage, built-in context management, and observability features. It helps agents scale from local demos to production without the need to rebuild context infrastructure. The platform provides solutions for challenges like scattered context data, long-running agents requiring context management, and tracking states from multi-modal agents. Acontext offers core features such as context storage, session management, disk storage, agent skills management, and sandbox for code execution and analysis. Users can connect to Acontext, install SDKs, initialize clients, store and retrieve messages, perform context engineering, and utilize agent storage tools. The platform also supports building agents using end-to-end scripts in Python and Typescript, with various templates available. Acontext's architecture includes client layer, backend with API and core components, infrastructure with PostgreSQL, S3, Redis, and RabbitMQ, and a web dashboard. Join the Acontext community on Discord and follow updates on GitHub.

claude-task-master

Claude Task Master is a task management system designed for AI-driven development with Claude, seamlessly integrating with Cursor AI. It allows users to configure tasks through environment variables, parse PRD documents, generate structured tasks with dependencies and priorities, and manage task status. The tool supports task expansion, complexity analysis, and smart task recommendations. Users can interact with the system through CLI commands for task discovery, implementation, verification, and completion. It offers features like task breakdown, dependency management, and AI-driven task generation, providing a structured workflow for efficient development.

UnrealGenAISupport

The Unreal Engine Generative AI Support Plugin is a tool designed to integrate various cutting-edge LLM/GenAI models into Unreal Engine for game development. It aims to simplify the process of using AI models for game development tasks, such as controlling scene objects, generating blueprints, running Python scripts, and more. The plugin currently supports models from organizations like OpenAI, Anthropic, XAI, Google Gemini, Meta AI, Deepseek, and Baidu. It provides features like API support, model control, generative AI capabilities, UI generation, project file management, and more. The plugin is still under development but offers a promising solution for integrating AI models into game development workflows.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

factorio-learning-environment

Factorio Learning Environment is an open-source framework for developing and evaluating LLM agents in the game Factorio. It provides two settings: Lab-play, with structured tasks, and Open-play, for building large factories. Reported results show limitations in agents' spatial reasoning and automation strategies. Agents interact with the environment through code synthesis, and operate in episodes of observation, planning, and action execution, receiving feedback after each step. Tools are provided for game actions and state representation. Tasks specify agent goals and are defined in JSON files. The project structure includes directories for agents, environment, cluster, data, docs, eval, and more. A database is used for checkpointing agent steps, and benchmarks report performance metrics for different configurations.

aider-desk

AiderDesk is a desktop application that enhances coding workflows with AI capabilities. It offers an intuitive GUI, management of multiple projects, IDE integration, MCP server integration for external tools and context, settings management, cost tracking, structured messages, visual management of context files, model switching, chat mode selection, question answering, a code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and disabling auto-updates. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Community contributions are welcome and should follow the project's guidelines.

LLMVoX

LLMVoX is a lightweight, 30M-parameter, LLM-agnostic, autoregressive streaming Text-to-Speech (TTS) system that converts text output from Large Language Models into high-fidelity streaming speech with low latency. It achieves a significantly lower Word Error Rate than speech-enabled LLMs while operating at comparable latency and speech quality. Key features include its small size and speed, LLM-agnostic design for easy integration with existing models, multi-queue streaming for continuous speech generation, and multilingual support that allows adaptation to new languages.

ai

A TypeScript toolkit for building AI-driven video workflows on the server, powered by Mux! @mux/ai provides purpose-driven workflow functions and primitive functions that integrate with popular AI/LLM providers like OpenAI, Anthropic, and Google. It offers pre-built workflows for tasks like generating summaries and tags, content moderation, chapter generation, and more. The toolkit is cost-effective, supports multi-modal analysis, tone control, and configurable thresholds, and provides full TypeScript support. Users can easily configure credentials for Mux and AI providers, as well as cloud infrastructure like AWS S3 for certain workflows. @mux/ai is production-ready, offers composable building blocks, and supports universal language detection.

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

For similar tasks

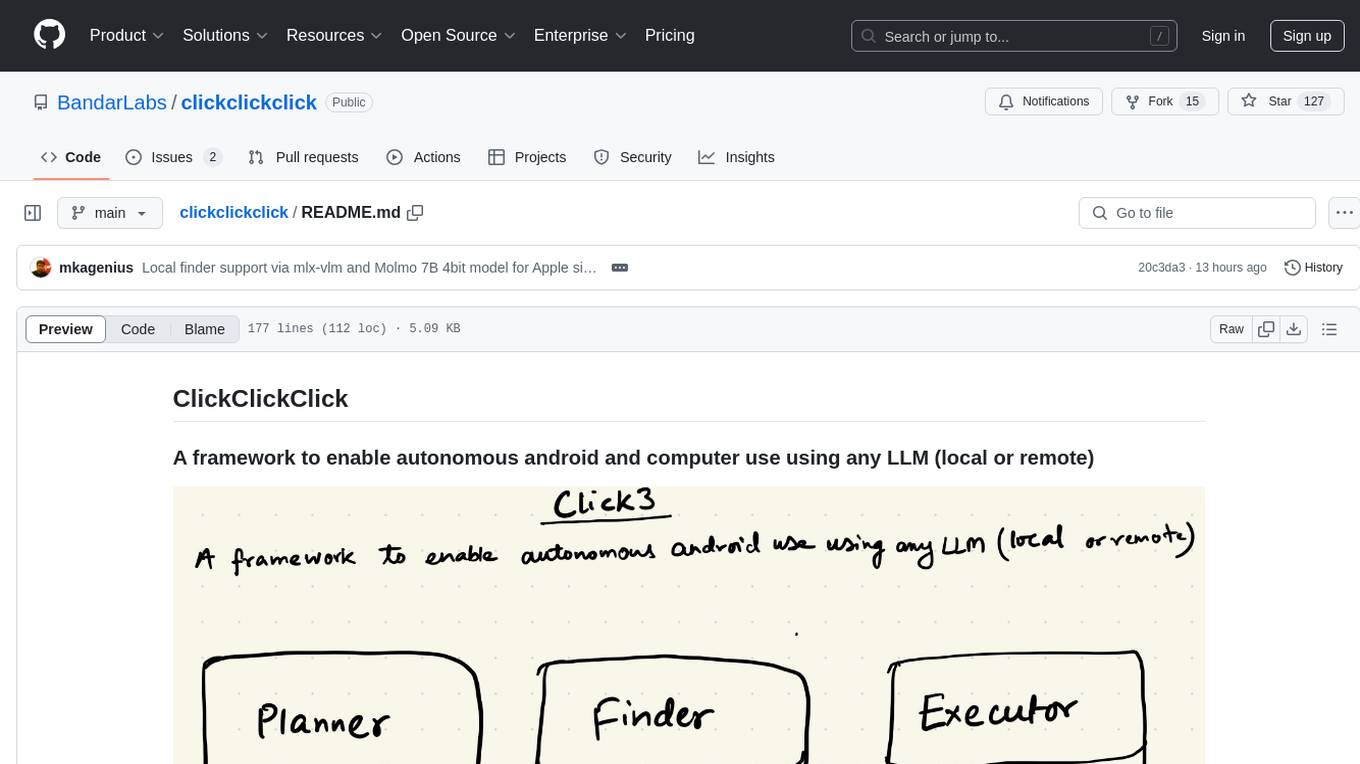

clickclickclick

ClickClickClick is a framework for autonomous Android and computer use driven by LLMs, run either locally or remotely. It supports tasks such as drafting emails, opening browsers, and starting games, and currently works with local models via Ollama as well as Gemini and GPT-4o. The tool is highly experimental and evolving, with the best results achieved using specific model combinations. Prerequisites include an `adb` installation and USB debugging enabled on the Android phone. To install it, clone the repository, set up a virtual environment, and install the dependencies. It can be used as a CLI tool or script, with configurable planner and finder models for different tasks. It can also be used as an API to execute tasks based on a provided prompt, platform, and models.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

invariant

Invariant Analyzer is an open-source scanner designed for LLM-based AI agents to find bugs, vulnerabilities, and security threats. It scans agent execution traces to identify issues like looping behavior, data leaks, prompt injections, and unsafe code execution. The tool offers a library of built-in checkers, an expressive policy language, data flow analysis, real-time monitoring, and extensible architecture for custom checkers. It helps developers debug AI agents, scan for security violations, and prevent security issues and data breaches during runtime. The analyzer leverages deep contextual understanding and a purpose-built rule matching engine for security policy enforcement.
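
As a rough illustration of what scanning an execution trace for looping behavior could look like, here is a standard-library Python sketch. It does not use Invariant's policy language or API. The trace format (a list of dicts with `tool` and `arguments` keys), the function name `find_repeated_calls`, and the `threshold` parameter are assumptions made for this example.

```python
import json


def find_repeated_calls(trace, threshold=3):
    """Flag tool calls repeated with identical arguments `threshold` times in a row.

    Each run of identical consecutive calls is reported once, at the moment
    its length reaches `threshold`.
    """
    findings = []
    run_key, run_len = None, 0
    for step in trace:
        # Serialize arguments with sorted keys so equal dicts compare equal
        key = (step["tool"], json.dumps(step["arguments"], sort_keys=True))
        if key == run_key:
            run_len += 1
        else:
            run_key, run_len = key, 1
        if run_len == threshold:
            findings.append({"tool": step["tool"], "arguments": step["arguments"]})
    return findings
```

A checker like this only catches exact repetition; near-duplicate calls or longer cycles would need a different comparison.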

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries, and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.