supergateway

Run MCP stdio servers over SSE and SSE over stdio. AI gateway.

Stars: 599

Supergateway is a tool that allows running MCP stdio-based servers over SSE (Server-Sent Events) with one command. It is useful for remote access, debugging, or connecting to SSE-based clients when your MCP server only speaks stdio. The tool supports running in SSE to Stdio mode as well, where it connects to a remote SSE server and exposes a local stdio interface for downstream clients. Supergateway can be used with ngrok to share local MCP servers with remote clients and can also be run in a Docker containerized deployment. It is designed with modularity in mind, ensuring compatibility and ease of use for AI tools exchanging data.

README:

Supergateway runs MCP stdio-based servers over SSE (Server-Sent Events) or WebSockets (WS) with one command. This is useful for remote access, debugging, or connecting to clients when your MCP server only supports stdio.

Supported by Supermachine (hosted MCPs), Superinterface, and Supercorp.

Run Supergateway via npx:

npx -y supergateway --stdio "uvx mcp-server-git"-

--stdio "command": Command that runs an MCP server over stdio -

--sse "https://mcp-server-ab71a6b2-cd55-49d0-adba-562bc85956e3.supermachine.app": SSE URL to connect to (SSE→stdio mode) -

--outputTransport stdio | sse | ws: Output MCP transport (default:ssewith--stdio,stdiowith--sse) -

--port 8000: Port to listen on (stdio→SSE or stdio→WS mode, default:8000) -

--baseUrl "http://localhost:8000": Base URL for SSE or WS clients (stdio→SSE mode; optional) -

--ssePath "/sse": Path for SSE subscriptions (stdio→SSE mode, default:/sse) -

--messagePath "/message": Path for messages (stdio→SSE or stdio→WS mode, default:/message) -

--header "Authorization: Bearer 123": Add one or more headers (stdio→SSE or SSE→stdio mode; can be used multiple times) -

--logLevel info | none: Controls logging level (default:info). Usenoneto suppress all logs. -

--cors: Enable CORS (stdio→SSE or stdio→WS mode) -

--healthEndpoint /healthz: Register one or more endpoints (stdio→SSE or stdio→WS mode; can be used multiple times) that respond with"ok"

Expose an MCP stdio server as an SSE server:

npx -y supergateway \

--stdio "npx -y @modelcontextprotocol/server-filesystem ./my-folder" \

--port 8000 --baseUrl http://localhost:8000 \

--ssePath /sse --messagePath /message-

Subscribe to events:

GET http://localhost:8000/sse -

Send messages:

POST http://localhost:8000/message

Connect to a remote SSE server and expose locally via stdio:

npx -y supergateway --sse "https://mcp-server-ab71a6b2-cd55-49d0-adba-562bc85956e3.supermachine.app"Useful for integrating remote SSE MCP servers into local command-line environments.

You can also pass headers when sending requests. This is useful for authentication:

npx -y supergateway \

--sse "https://mcp-server-ab71a6b2-cd55-49d0-adba-562bc85956e3.supermachine.app" \

--header "Authorization: Bearer some-token" \

--header "X-My-Header: another-value"Expose an MCP stdio server as a WebSocket server:

npx -y supergateway \

--stdio "npx -y @modelcontextprotocol/server-filesystem ./my-folder" \

--port 8000 --outputTransport ws --messagePath /message-

WebSocket endpoint:

ws://localhost:8000/message

- Run Supergateway:

npx -y supergateway --port 8000 \

--stdio "npx -y @modelcontextprotocol/server-filesystem /Users/MyName/Desktop"- Use MCP Inspector:

npx @modelcontextprotocol/inspectorYou can now list tools, resources, or perform MCP actions via Supergateway.

Use ngrok to share your local MCP server publicly:

npx -y supergateway --port 8000 \

--stdio "npx -y @modelcontextprotocol/server-filesystem ."

# In another terminal:

ngrok http 8000ngrok provides a public URL for remote access.

A Docker-based workflow avoids local Node.js setup. A ready-to-run Docker image is available here: supercorp/supergateway. Also on GHCR: ghcr.io/supercorp-ai/supergateway

docker run -it --rm -p 8000:8000 supercorp/supergateway \

--stdio "npx -y @modelcontextprotocol/server-filesystem /" \

--port 8000Docker pulls the image automatically. The MCP server runs in the container’s root directory (/). You can mount host directories if needed.

Use provided Dockerfile:

docker build -t supergateway .

docker run -it --rm -p 8000:8000 supergateway \

--stdio "npx -y @modelcontextprotocol/server-filesystem /" \

--port 8000Claude Desktop can use Supergateway’s SSE→stdio mode.

{

"mcpServers": {

"supermachineExampleNpx": {

"command": "npx",

"args": [

"-y",

"supergateway",

"--sse",

"https://mcp-server-ab71a6b2-cd55-49d0-adba-562bc85956e3.supermachine.app"

]

}

}

}{

"mcpServers": {

"supermachineExampleDocker": {

"command": "docker",

"args": [

"run",

"-i",

"--rm",

"supercorp/supergateway",

"--sse",

"https://mcp-server-ab71a6b2-cd55-49d0-adba-562bc85956e3.supermachine.app"

]

}

}

}Model Context Protocol standardizes AI tool interactions. Supergateway converts MCP stdio servers into SSE or WS services, simplifying integration and debugging with web-based or remote clients.

Supergateway emphasizes modularity:

- Automatically manages JSON-RPC versioning.

- Retransmits package metadata where possible.

- stdio→SSE or stdio→WS mode logs via standard output; SSE→stdio mode logs via stderr.

- Superargs - provide arguments to MCP servers during runtime.

Issues and PRs welcome. Please open one if you encounter problems or have feature suggestions.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for supergateway

Similar Open Source Tools

supergateway

Supergateway is a tool that allows running MCP stdio-based servers over SSE (Server-Sent Events) with one command. It is useful for remote access, debugging, or connecting to SSE-based clients when your MCP server only speaks stdio. The tool supports running in SSE to Stdio mode as well, where it connects to a remote SSE server and exposes a local stdio interface for downstream clients. Supergateway can be used with ngrok to share local MCP servers with remote clients and can also be run in a Docker containerized deployment. It is designed with modularity in mind, ensuring compatibility and ease of use for AI tools exchanging data.

open-edison

OpenEdison is a secure MCP control panel that connects AI to data/software with additional security controls to reduce data exfiltration risks. It helps address the lethal trifecta problem by providing visibility, monitoring potential threats, and alerting on data interactions. The tool offers features like data leak monitoring, controlled execution, easy configuration, visibility into agent interactions, a simple API, and Docker support. It integrates with LangGraph, LangChain, and plain Python agents for observability and policy enforcement. OpenEdison helps gain observability, control, and policy enforcement for AI interactions with systems of records, existing company software, and data to reduce risks of AI-caused data leakage.

TermNet

TermNet is an AI-powered terminal assistant that connects a Large Language Model (LLM) with shell command execution, browser search, and dynamically loaded tools. It streams responses in real-time, executes tools one at a time, and maintains conversational memory across steps. The project features terminal integration for safe shell command execution, dynamic tool loading without code changes, browser automation powered by Playwright, WebSocket architecture for real-time communication, a memory system to track planning and actions, streaming LLM output integration, a safety layer to block dangerous commands, dual interface options, a notification system, and scratchpad memory for persistent note-taking. The architecture includes a multi-server setup with servers for WebSocket, browser automation, notifications, and web UI. The project structure consists of core backend files, various tools like web browsing and notification management, and servers for browser automation and notifications. Installation requires Python 3.9+, Ollama, and Chromium, with setup steps provided in the README. The tool can be used via the launcher for managing components or directly by starting individual servers. Additional tools can be added by registering them in `toolregistry.json` and implementing them in Python modules. Safety notes highlight the blocking of dangerous commands, allowed risky commands with warnings, and the importance of monitoring tool execution and setting appropriate timeouts.

unity-mcp

MCP for Unity is a tool that acts as a bridge, enabling AI assistants to interact with the Unity Editor via a local MCP Client. Users can instruct their LLM to manage assets, scenes, scripts, and automate tasks within Unity. The tool offers natural language control, powerful tools for asset management, scene manipulation, and automation of workflows. It is extensible and designed to work with various MCP Clients, providing a range of functions for precise text edits, script management, GameObject operations, and more.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

mcp-redis

The Redis MCP Server is a natural language interface designed for agentic applications to efficiently manage and search data in Redis. It integrates seamlessly with MCP (Model Content Protocol) clients, enabling AI-driven workflows to interact with structured and unstructured data in Redis. The server supports natural language queries, seamless MCP integration, full Redis support for various data types, search and filtering capabilities, scalability, and lightweight design. It provides tools for managing data stored in Redis, such as string, hash, list, set, sorted set, pub/sub, streams, JSON, query engine, and server management. Installation can be done from PyPI or GitHub, with options for testing, development, and Docker deployment. Configuration can be via command line arguments or environment variables. Integrations include OpenAI Agents SDK, Augment, Claude Desktop, and VS Code with GitHub Copilot. Use cases include AI assistants, chatbots, data search & analytics, and event processing. Contributions are welcome under the MIT License.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

ocode

OCode is a sophisticated terminal-native AI coding assistant that provides deep codebase intelligence and autonomous task execution. It seamlessly works with local Ollama models, bringing enterprise-grade AI assistance directly to your development workflow. OCode offers core capabilities such as terminal-native workflow, deep codebase intelligence, autonomous task execution, direct Ollama integration, and an extensible plugin layer. It can perform tasks like code generation & modification, project understanding, development automation, data processing, system operations, and interactive operations. The tool includes specialized tools for file operations, text processing, data processing, system operations, development tools, and integration. OCode enhances conversation parsing, offers smart tool selection, and provides performance improvements for coding tasks.

AI-Agent-Starter-Kit

AI Agent Starter Kit is a modern full-stack AI-enabled template using Next.js for frontend and Express.js for backend, with Telegram and OpenAI integrations. It offers AI-assisted development, smart environment variable setup assistance, intelligent error resolution, context-aware code completion, and built-in debugging helpers. The kit provides a structured environment for developers to interact with AI tools seamlessly, enhancing the development process and productivity.

git-mcp-server

A secure and scalable Git MCP server providing AI agents with powerful version control capabilities for local and serverless environments. It offers 28 comprehensive Git operations organized into seven functional categories, resources for contextual information about the Git environment, and structured prompt templates for guiding AI agents through complex workflows. The server features declarative tools, robust error handling, pluggable authentication, abstracted storage, full-stack observability, dependency injection, and edge-ready architecture. It also includes specialized features for Git integration such as cross-runtime compatibility, provider-based architecture, optimized Git execution, working directory management, configurable Git identity, safety features, and commit signing.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

oxylabs-mcp

The Oxylabs MCP Server acts as a bridge between AI models and the web, providing clean, structured data from any site. It enables scraping of URLs, rendering JavaScript-heavy pages, content extraction for AI use, bypassing anti-scraping measures, and accessing geo-restricted web data from 195+ countries. The implementation utilizes the Model Context Protocol (MCP) to facilitate secure interactions between AI assistants and web content. Key features include scraping content from any site, automatic data cleaning and conversion, bypassing blocks and geo-restrictions, flexible setup with cross-platform support, and built-in error handling and request management.

mcp-devtools

MCP DevTools is a high-performance server written in Go that replaces multiple Node.js and Python-based servers. It provides access to essential developer tools through a unified, modular interface. The server is efficient, with minimal memory footprint and fast response times. It offers a comprehensive tool suite for agentic coding, including 20+ essential developer agent tools. The tool registry allows for easy addition of new tools. The server supports multiple transport modes, including STDIO, HTTP, and SSE. It includes a security framework for multi-layered protection and a plugin system for adding new tools.

mediasoup-client-aiortc

mediasoup-client-aiortc is a handler for the aiortc Python library, allowing Node.js applications to connect to a mediasoup server using WebRTC for real-time audio, video, and DataChannel communication. It facilitates the creation of Worker instances to manage Python subprocesses, obtain audio/video tracks, and create mediasoup-client handlers. The tool supports features like getUserMedia, handlerFactory creation, and event handling for subprocess closure and unexpected termination. It provides custom classes for media stream and track constraints, enabling diverse audio/video sources like devices, files, or URLs. The tool enhances WebRTC capabilities in Node.js applications through seamless Python subprocess communication.

ruler

Ruler is a tool designed to centralize AI coding assistant instructions, providing a single source of truth for managing instructions across multiple AI coding tools. It helps in avoiding inconsistent guidance, duplicated effort, context drift, onboarding friction, and complex project structures by automatically distributing instructions to the right configuration files. With support for nested rule loading, Ruler can handle complex project structures with context-specific instructions for different components. It offers features like centralised rule management, nested rule loading, automatic distribution, targeted agent configuration, MCP server propagation, .gitignore automation, and a simple CLI for easy configuration management.

For similar tasks

supergateway

Supergateway is a tool that allows running MCP stdio-based servers over SSE (Server-Sent Events) with one command. It is useful for remote access, debugging, or connecting to SSE-based clients when your MCP server only speaks stdio. The tool supports running in SSE to Stdio mode as well, where it connects to a remote SSE server and exposes a local stdio interface for downstream clients. Supergateway can be used with ngrok to share local MCP servers with remote clients and can also be run in a Docker containerized deployment. It is designed with modularity in mind, ensuring compatibility and ease of use for AI tools exchanging data.

mcpdotnet

mcpdotnet is a .NET implementation of the Model Context Protocol (MCP), facilitating connections and interactions between .NET applications and MCP clients and servers. It aims to provide a clean, specification-compliant implementation with support for various MCP capabilities and transport types. The library includes features such as async/await pattern, logging support, and compatibility with .NET 8.0 and later. Users can create clients to use tools from configured servers and also create servers to register tools and interact with clients. The project roadmap includes expanding documentation, increasing test coverage, adding samples, performance optimization, SSE server support, and authentication.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

Helios

Helios is a powerful open-source tool for managing and monitoring your Kubernetes clusters. It provides a user-friendly interface to easily visualize and control your cluster resources, including pods, deployments, services, and more. With Helios, you can efficiently manage your containerized applications and ensure high availability and performance of your Kubernetes infrastructure.

Ivy-Framework

Ivy-Framework is a powerful tool for building internal applications with AI assistance using C# codebase. It provides a CLI for project initialization, authentication integrations, database support, LLM code generation, secrets management, container deployment, hot reload, dependency injection, state management, routing, and external widget framework. Users can easily create data tables for sorting, filtering, and pagination. The framework offers a seamless integration of front-end and back-end development, making it ideal for developing robust internal tools and dashboards.

onnxruntime-server

ONNX Runtime Server is a server that provides TCP and HTTP/HTTPS REST APIs for ONNX inference. It aims to offer simple, high-performance ML inference and a good developer experience. Users can provide inference APIs for ONNX models without writing additional code by placing the models in the directory structure. Each session can choose between CPU or CUDA, analyze input/output, and provide Swagger API documentation for easy testing. Ready-to-run Docker images are available, making it convenient to deploy the server.

aiohttp-devtools

aiohttp-devtools provides dev tools for developing applications with aiohttp and associated libraries. It includes CLI commands for running a local server with live reloading and serving static files. The tools aim to simplify the development process by automating tasks such as setting up a new application and managing dependencies. Developers can easily create and run aiohttp applications, manage static files, and utilize live reloading for efficient development.

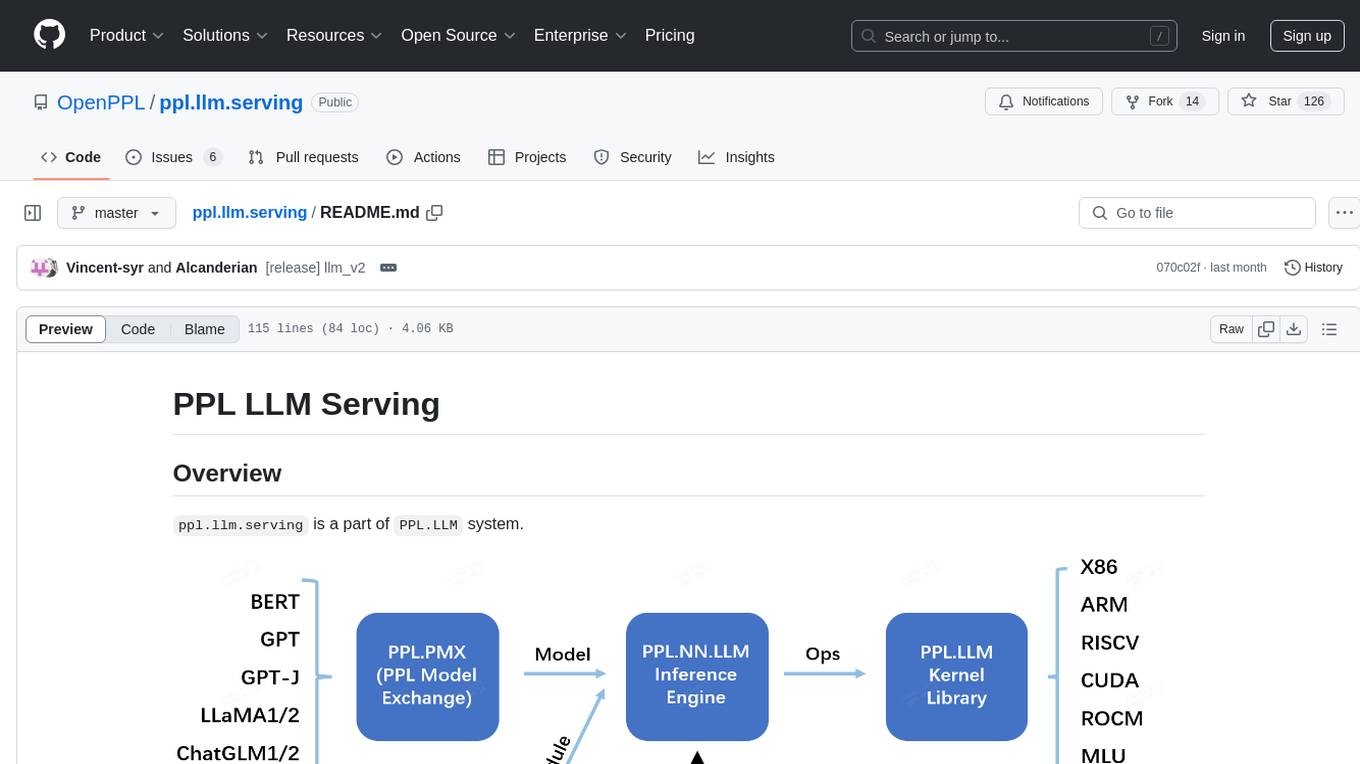

ppl.llm.serving

ppl.llm.serving is a serving component for Large Language Models (LLMs) within the PPL.LLM system. It provides a server based on gRPC and supports inference for LLaMA. The repository includes instructions for prerequisites, quick start guide, model exporting, server setup, client usage, benchmarking, and offline inference. Users can refer to the LLaMA Guide for more details on using this serving component.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.