UnrealGenAISupport

An Unreal Engine plugin for LLM/GenAI models & MCP UE5 server. Supports Claude Desktop App, Windsurf & Cursor, also includes OpenAI's GPT 5, Deepseek V3.1, Claude Sonnet 4 APIs and Grok 4, with plans to add Gemini, audio & realtime APIs soon. UnrealMCP is also here!! Automatic blueprint and scene generation from AI!!

Stars: 263

The Unreal Engine Generative AI Support Plugin is a tool designed to integrate various cutting-edge LLM/GenAI models into Unreal Engine for game development. It aims to simplify the process of using AI models for game development tasks, such as controlling scene objects, generating blueprints, running Python scripts, and more. The plugin currently supports models from organizations like OpenAI, Anthropic, XAI, Google Gemini, Meta AI, Deepseek, and Baidu. It provides features like API support, model control, generative AI capabilities, UI generation, project file management, and more. The plugin is still under development but offers a promising solution for integrating AI models into game development workflows.

README:

Claude spawning scene objects and controlling their transformations and materials, generating blueprints, functions, variables, adding components, running python scripts etc.

A project called become human, where NPCs are OpenAI agentic instances. Built using this plugin.

Every month, hundreds of new AI models are released by various organizations, making it hard to keep up with the latest advancements.

The Unreal Engine Generative AI Support Plugin allows you to focus on game development without worrying about the LLM/GenAI integration layer.

Currently integrating Model Context Protocol (MCP) with Unreal Engine 5.5.

This project aims to build a long-term support (LTS) plugin for various cutting-edge LLM/GenAI models and foster a community around it. It currently includes OpenAI's GPT-4o, Deepseek R1, Claude Sonnet 4, Claude Opus 4, and GPT-4o-mini for Unreal Engine 5.1 or higher, with plans to add real-time APIs, Gemini, MCP, and Grok 3 APIs soon. The plugin will focus exclusively on APIs useful for game development, evals, and interactive experiences. All suggestions and contributions are welcome. The plugin can also be used for setting up new evals and ways to compare models in game battlefields.

[!WARNING]

This plugin is still under rapid development.

- Do not use it in production environments. ⚠️

- Do not use it without version control. ⚠️

I will continue to keep this repo updated with the latest features and models as they become available. Contributions are welcome. If you want a production-ready plugin with more features and guaranteed stability, please check out the Gen AI Pro plugin; it costs me a lot of API credits to test different models per feature and per engine version to make sure everything works and stays compatible. Otherwise, I feel this plugin is good enough for many use cases (including the examples shown at the beginning), and you can use it for free, forever.

Currently working on fixing the issues with MCP (especially the node generation) and adding more features to it.

- OpenAI API Support:
  - OpenAI Chat API ✅ (models-ref)
    - `gpt-5` ✅
    - `gpt-4.1`, `gpt-4.1-mini`, `gpt-4.1-nano` Model ✅
    - `gpt-4o`, `gpt-4o-mini` Model ✅
    - `o4-mini` Model ✅
    - `o3`, `o3-pro`, `o3-mini`, `o1` Model ✅
  - OpenAI DALL-E API (available in pro) ☑️
  - Responses API 🛠️
  - OpenAI Vision API (available in pro) ☑️
  - OpenAI Realtime API
    - `gpt-4o-realtime-preview`, `gpt-4o-mini-realtime-preview` Model (available in pro) ☑️
  - OpenAI Structured Outputs ✅
  - OpenAI Whisper & TTS API (available in pro) ☑️
  - Multimodal API Support (available in pro) ☑️
  - Text Streaming (available in pro) ☑️

- Anthropic Claude API Support:
  - Claude Chat API ✅
    - `claude-4-latest`, `claude-3-7-sonnet-latest`, `claude-3-5-sonnet`, `claude-3-5-haiku-latest`, `claude-3-opus-latest` Model ✅
  - Multimodal/Vision API Support (available in pro) ☑️

- XAI (Grok 3) API Support:
  - XAI Chat Completions API ✅
    - `grok-3-latest`, `grok-3-mini-beta` Model ✅
    - `grok-3`, `grok-3-mini`, `grok-3-fast`, `grok-3-mini-fast`, `grok-2-vision-1212`, `grok-2-1212`.
    - `grok-4` Reasoning API (available in pro) ☑️
  - XAI Image API 🚧
  - Text Streaming API (available in pro) ☑️
  - Multimodal API Support (available in pro) ☑️

- Google Gemini API Support:
  - Gemini Chat API
    - `gemini-2.0-flash-lite`, `gemini-2.0-flash`, `gemini-1.5-flash` (available in pro) ☑️
    - Gemini 2.5 Pro Model (available in pro) ☑️
  - Gemini Imagen API:
    - `imagen-3.0-generate-002` (available in pro) ☑️
    - `nano-banana` (available in pro) ☑️
  - Google TTS & Transcription API: (available in pro) ☑️
  - Multimodal API Support (available in pro) ☑️

- Meta AI API Support:
  - Llama 4 herd: ❌
  - Llama 4 Behemoth, Llama 4 Maverick, Llama 4 Scout ❌
  - llama3.3-70b, llama3.1-8b Model ❌
  - Local Llama API 🚧🤝

- Deepseek API Support:
  - Deepseek Chat API ✅
    - `deepseek-chat` (DeepSeek-V3.1) Model ✅
  - Deepseek Reasoning API, R1 ✅
    - `deepseek-reasoning-r1` Model ✅
    - `deepseek-reasoning-r1` CoT Streaming ❌
  - Independently Hosted Deepseek Models ❌

- Baidu API Support:
  - Baidu Chat API ❌
    - `baidu-chat` Model ❌

- 3D generative model APIs:
  - TripoSR by StabilityAI 🚧
- Plugin Documentation 🛠️🤝
- Plugin Example Project 🛠️ here
- Version Control Support
  - Perforce Support 🛠️
  - Git Submodule Support ✅
- LTS Branching 🚧
  - Stable Branch with Bug Fixes 🚧
  - Dedicated Contributor for LTS 🚧
- Lightweight Plugin (In Builds)
  - No External Dependencies ✅
  - Build Flags to enable/disable APIs 🚧
  - Submodules per API Organization 🚧
  - Exclude MCP from build 🚧
- Testing
  - Automated Testing (available in pro) ☑️
  - Different Platforms (available in pro) ☑️
  - Different Engine Versions (available in pro) ☑️
- Clients Support ✅
  - Claude Desktop App Support ✅
  - Cursor IDE Support ✅
  - OpenAI Operator API Support 🚧
- Blueprints Auto Generation 🛠️
  - Creating new blueprint of types ✅
  - Adding new functions, function/blueprint variables ✅
  - Adding nodes and connections 🛠️ (buggy, issues open)
  - Advanced Blueprints Generation 🛠️
- Level/Scene Control for LLMs 🛠️
  - Spawning Objects and Shapes ✅
  - Moving, rotating and scaling objects ✅
  - Changing materials and color ✅
  - Advanced scene features 🛠️
- Generative AI:
  - Prompt to 3D model fetch and spawn 🛠️
- Control:
  - Ability to run Python scripts ✅
  - Ability to run Console Commands ✅
- UI:
  - Widgets generation 🛠️
  - UI Blueprint generation 🛠️
- Project Files:
  - Create/Edit project files/folders ✅
  - Delete existing project files ❌
- Others:
  - Project Cleanup 🛠️

Where,

- ✅ - Completed

- ☑️ - (available in pro)

- 🛠️ - In Progress

- 🚧 - Planned

- 🤝 - Need Contributors

- ❌ - Won't Support For Now

- Setting API Keys

- Setting up MCP

- Adding the plugin to your project

- Fetching the Latest Plugin Changes

- Usage

- Known Issues

- Config Window

- Contribution Guidelines

- References

Setting API Keys

[!NOTE]

There is no need to set an API key for testing the MCP features in the Claude app. The Anthropic key is only needed for the Claude API.

Set the environment variable PS_<ORGNAME> to your API key.

On Windows:

    setx PS_<ORGNAME> "your api key"

On Linux/macOS:

1. Run the following command in your terminal, replacing yourkey with your API key.

       echo "export PS_<ORGNAME>='yourkey'" >> ~/.zshrc

2. Update the shell with the new variable:

       source ~/.zshrc

PS: Don't forget to restart the Editor and ALSO the connected IDE after setting the environment variable.

Where PS_<ORGNAME> can be:

PS_OPENAIAPIKEY, PS_DEEPSEEKAPIKEY, PS_ANTHROPICAPIKEY, PS_METAAPIKEY, PS_GOOGLEAPIKEY etc.
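A quick way to confirm that the variables are visible to newly started processes is a small Python check like the sketch below. The helper name `find_missing_keys` is hypothetical and not part of the plugin; only the variable names come from the list above.

```python
import os

# Variable names taken from the list above; extend as needed.
KEY_VARS = [
    "PS_OPENAIAPIKEY",
    "PS_DEEPSEEKAPIKEY",
    "PS_ANTHROPICAPIKEY",
    "PS_METAAPIKEY",
    "PS_GOOGLEAPIKEY",
]


def find_missing_keys(env=None):
    """Return the PS_* variable names that are unset or blank.

    `env` defaults to the current process environment; passing a dict
    makes the function easy to try out without touching real settings.
    """
    env = os.environ if env is None else env
    return [name for name in KEY_VARS if not env.get(name, "").strip()]


# Report which keys this process cannot see.
for name in find_missing_keys():
    print(f"{name} is not set in this environment")
```

Run it from a new terminal after setting the variables; a name that is still reported as missing usually means the shell or IDE has not been restarted yet.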

Storing API keys in packaged builds is a security risk. This is what the OpenAI API documentation says about it:

"Exposing your OpenAI API key in client-side environments like browsers or mobile apps allows malicious users to take that key and make requests on your behalf – which may lead to unexpected charges or compromise of certain account data. Requests should always be routed through your own backend server where you can keep your API key secure."

Read more about it here.

For test builds, you can call the GenSecureKey::SetGenAIApiKeyRuntime function, from either C++ or Blueprints, with your API key in the packaged build.

Setting up MCP

[!NOTE]

If your project only uses the LLM APIs and not the MCP, you can skip this section.

[!CAUTION]

Disclaimer: If you are using the MCP feature of the plugin, it will directly let the Claude Desktop App control your Unreal Engine project. Make sure you are aware of the security risks and only use it in a controlled environment. Please back up your project before using the MCP feature and use version control to track changes.

For Claude Desktop: set up the `claude_desktop_config.json` file in the Claude Desktop App's installation directory (you can ask Claude where it is located on your platform). The file will look something like this:

    {
        "mcpServers": {
            "unreal-handshake": {
                "command": "python",
                "args": ["<your_project_directory_path>/Plugins/GenerativeAISupport/Content/Python/mcp_server.py"],
                "env": {
                    "UNREAL_HOST": "localhost",
                    "UNREAL_PORT": "9877"
                }
            }
        }
    }

For Cursor: set up the `.cursor/mcp.json` file in your project directory. The file will look something like this:

    {
        "mcpServers": {
            "unreal-handshake": {
                "command": "python",
                "args": ["<your_project_directory_path>/Plugins/GenerativeAISupport/Content/Python/mcp_server.py"],
                "env": {
                    "UNREAL_HOST": "localhost",
                    "UNREAL_PORT": "9877"
                }
            }
        }
    }

Install the `mcp[cli]` Python package:

    pip install mcp[cli]
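Since both client configs share the same shape, the JSON can also be generated rather than typed by hand. The following is a minimal sketch, assuming the server name, script path, and environment values shown above; the function `build_mcp_config` is a hypothetical helper, not something the plugin ships.

```python
import json
from pathlib import Path


def build_mcp_config(project_dir, host="localhost", port=9877):
    """Build the mcpServers entry for the plugin's MCP server script.

    The path layout and env names mirror the sample config in this
    README; the defaults are the values shown there.
    """
    server_script = (
        Path(project_dir)
        / "Plugins"
        / "GenerativeAISupport"
        / "Content"
        / "Python"
        / "mcp_server.py"
    )
    return {
        "mcpServers": {
            "unreal-handshake": {
                "command": "python",
                # Forward slashes keep the JSON free of escaped backslashes.
                "args": [server_script.as_posix()],
                "env": {"UNREAL_HOST": host, "UNREAL_PORT": str(port)},
            }
        }
    }


# Print a config for a placeholder project path.
print(json.dumps(build_mcp_config("/path/to/MyProject"), indent=2))
```

Paste the printed JSON into `claude_desktop_config.json` or `.cursor/mcp.json`, replacing the placeholder path with your own project directory.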

Adding the plugin to your project

1. Add the Plugin Repository as a Submodule in your project's repository.

       git submodule add https://github.com/prajwalshettydev/UnrealGenAISupport Plugins/GenerativeAISupport

2. Regenerate Project Files: Right-click your .uproject file and select Generate Visual Studio project files.

3. Enable the Plugin in Unreal Editor: Open your project in Unreal Editor. Go to Edit > Plugins. Search for the Plugin in the list and enable it.

4. For Unreal C++ Projects, include the Plugin's module in your project's Build.cs file:

       PrivateDependencyModuleNames.AddRange(new string[] { "GenerativeAISupport" });

Still in development..

Coming soon, for free, in the Unreal Engine Marketplace.

Fetching the Latest Plugin Changes

You can pull the latest changes with:

    cd Plugins/GenerativeAISupport
    git pull origin main

Or update all submodules in the project:

    git submodule update --recursive --remote

Still in development..

There is an example Unreal project that already implements the plugin. You can find it here.

Currently the plugin supports Chat and Structured Outputs from OpenAI API. Both for C++ and Blueprints.

Tested models are gpt-4o, gpt-4o-mini, gpt-4.5, o1-mini, o1, o3-mini-high.

    void SomeDebugSubsystem::CallGPT(const FString& Prompt,
        const TFunction<void(const FString&, const FString&, bool)>& Callback)
    {
        FGenChatSettings ChatSettings;
        ChatSettings.Model = TEXT("gpt-4o-mini");
        ChatSettings.MaxTokens = 500;
        ChatSettings.Messages.Add(FGenChatMessage{ TEXT("system"), Prompt });

        FOnChatCompletionResponse OnComplete = FOnChatCompletionResponse::CreateLambda(
            [Callback](const FString& Response, const FString& ErrorMessage, bool bSuccess)
        {
            Callback(Response, ErrorMessage, bSuccess);
        });

        UGenOAIChat::SendChatRequest(ChatSettings, OnComplete);
    }

Sending a custom schema JSON directly to the function call:

    // "required" lists only the properties that the schema actually defines.
    FString MySchemaJson = R"({
        "type": "object",
        "properties": {
            "count": {
                "type": "integer",
                "description": "The total number of users."
            },
            "users": {
                "type": "array",
                "items": {
                    "type": "object",
                    "properties": {
                        "name": { "type": "string", "description": "The user's name." },
                        "heading_to": { "type": "string", "description": "The user's destination." }
                    },
                    "required": ["name", "heading_to"]
                }
            }
        },
        "required": ["count", "users"]
    })";

    UGenAISchemaService::RequestStructuredOutput(
        TEXT("Generate a list of users and their details"),
        MySchemaJson,
        [](const FString& Response, const FString& Error, bool Success) {
            if (Success)
            {
                UE_LOG(LogTemp, Log, TEXT("Structured Output: %s"), *Response);
            }
            else
            {
                UE_LOG(LogTemp, Error, TEXT("Error: %s"), *Error);
            }
        }
    );

Sending a custom schema JSON from a file:

    #include "Misc/FileHelper.h"
    #include "Misc/Paths.h"

    FString SchemaFilePath = FPaths::Combine(
        FPaths::ProjectDir(),
        TEXT("Source/:ProjectName/Public/AIPrompts/SomeSchema.json")
    );

    FString MySchemaJson;
    if (FFileHelper::LoadFileToString(MySchemaJson, *SchemaFilePath))
    {
        UGenAISchemaService::RequestStructuredOutput(
            TEXT("Generate a list of users and their details"),
            MySchemaJson,
            [](const FString& Response, const FString& Error, bool Success) {
                if (Success)
                {
                    UE_LOG(LogTemp, Log, TEXT("Structured Output: %s"), *Response);
                }
                else
                {
                    UE_LOG(LogTemp, Error, TEXT("Error: %s"), *Error);
                }
            }
        );
    }
    else
    {
        // Report a missing or unreadable schema file instead of failing silently.
        UE_LOG(LogTemp, Error, TEXT("Failed to load schema file: %s"), *SchemaFilePath);
    }

Currently the plugin supports Chat and Reasoning from the DeepSeek API, for both C++ and Blueprints. Points to note:

- System messages are currently mandatory for the reasoning model. Otherwise the API seems to return null.

- Also, from the documentation: "Please note that if the reasoning_content field is included in the sequence of input messages, the API will return a 400 error." Read more about it here.
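The second point matters when a previous reasoning response is fed back as conversation history. The Python sketch below shows the general idea on plain message dictionaries; `strip_reasoning_content` is a hypothetical helper used only for illustration, and the plugin's own C++ message types may handle this differently.

```python
def strip_reasoning_content(messages):
    """Return a copy of the chat history without `reasoning_content` fields.

    The input list and its dictionaries are left unmodified, so the
    reasoning text stays available for logging or display.
    """
    return [
        {key: value for key, value in message.items() if key != "reasoning_content"}
        for message in messages
    ]


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "9.11 and 9.8, which is greater?"},
    {
        "role": "assistant",
        "content": "9.8 is greater.",
        "reasoning_content": "Compare 9.11 with 9.80 digit by digit...",
    },
]

# Safe to send as input for the next turn.
next_request_messages = strip_reasoning_content(history)
```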

[!WARNING]

While using the R1 reasoning model, make sure Unreal's HTTP timeouts are not left at the default of 30 seconds, as these API calls can take longer than 30 seconds to respond. Simply calling `HttpRequest->SetTimeout(<N Seconds>);` is not enough, so the following lines need to be added to your project's `DefaultEngine.ini` file:

    [HTTP]
    HttpConnectionTimeout=180
    HttpReceiveTimeout=180
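To double-check that a project's `DefaultEngine.ini` actually carries these settings, a small text scan is enough. This is a rough sketch under stated assumptions: the function names are hypothetical, the 180-second threshold simply mirrors the values above, and the parser only understands plain `Key=Value` lines inside the `[HTTP]` section.

```python
TIMEOUT_KEYS = ("HttpConnectionTimeout", "HttpReceiveTimeout")


def read_http_timeouts(ini_text):
    """Collect the two timeout values from the [HTTP] section of ini text."""
    section = None
    found = {}
    for raw_line in ini_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(";"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1]
        elif section == "HTTP" and "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            if key in TIMEOUT_KEYS:
                found[key] = float(value)
    return found


def timeouts_ok(ini_text, minimum=180):
    """True when both timeouts are present and at least `minimum` seconds."""
    found = read_http_timeouts(ini_text)
    return all(found.get(key, 0) >= minimum for key in TIMEOUT_KEYS)


sample = "[HTTP]\nHttpConnectionTimeout=180\nHttpReceiveTimeout=180\n"
print(timeouts_ok(sample))
```

In practice, read the text from your project's `Config/DefaultEngine.ini` and pass it to `timeouts_ok`.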

    FGenDSeekChatSettings ReasoningSettings;
    ReasoningSettings.Model = EDeepSeekModels::Reasoner; // or EDeepSeekModels::Chat for Chat API
    ReasoningSettings.MaxTokens = 100;
    ReasoningSettings.Messages.Add(FGenChatMessage{TEXT("system"), TEXT("You are a helpful assistant.")});
    ReasoningSettings.Messages.Add(FGenChatMessage{TEXT("user"), TEXT("9.11 and 9.8, which is greater?")});
    ReasoningSettings.bStreamResponse = false;

    UGenDSeekChat::SendChatRequest(
        ReasoningSettings,
        FOnDSeekChatCompletionResponse::CreateLambda(
            [this](const FString& Response, const FString& ErrorMessage, bool bSuccess)
            {
                if (!UTHelper::IsContextStillValid(this))
                {
                    return;
                }

                // Log response details regardless of success
                UE_LOG(LogTemp, Warning, TEXT("DeepSeek Reasoning Response Received - Success: %d"), bSuccess);
                UE_LOG(LogTemp, Warning, TEXT("Response: %s"), *Response);
                if (!ErrorMessage.IsEmpty())
                {
                    UE_LOG(LogTemp, Error, TEXT("Error Message: %s"), *ErrorMessage);
                }
            })
    );

Currently the plugin supports Chat from the Anthropic API, for both C++ and Blueprints.

Tested models are claude-sonnet-4-20250514, claude-opus-4-20250514, claude-3-7-sonnet-latest, claude-3-5-sonnet, claude-3-5-haiku-latest, claude-3-opus-latest.

    // ---- Claude Chat Test ----
    FGenClaudeChatSettings ChatSettings;
    ChatSettings.Model = EClaudeModels::Claude_3_7_Sonnet; // Use Claude 3.7 Sonnet model
    ChatSettings.MaxTokens = 4096;
    ChatSettings.Temperature = 0.7f;
    ChatSettings.Messages.Add(FGenChatMessage{TEXT("system"), TEXT("You are a helpful assistant.")});
    ChatSettings.Messages.Add(FGenChatMessage{TEXT("user"), TEXT("What is the capital of France?")});

    UGenClaudeChat::SendChatRequest(
        ChatSettings,
        FOnClaudeChatCompletionResponse::CreateLambda(
            [this](const FString& Response, const FString& ErrorMessage, bool bSuccess)
            {
                if (!UTHelper::IsContextStillValid(this))
                {
                    return;
                }

                if (bSuccess)
                {
                    UE_LOG(LogTemp, Warning, TEXT("Claude Chat Response: %s"), *Response);
                }
                else
                {
                    UE_LOG(LogTemp, Error, TEXT("Claude Chat Error: %s"), *ErrorMessage);
                }
            })
    );

Currently the plugin supports Chat from XAI's Grok 3 API, for both C++ and Blueprints.

    FGenXAIChatSettings ChatSettings;
    ChatSettings.Model = TEXT("grok-3-latest");
    ChatSettings.Messages.Add(FGenXAIMessage{
        TEXT("system"),
        TEXT("You are a helpful AI assistant for a game. Please provide concise responses.")
    });
    ChatSettings.Messages.Add(FGenXAIMessage{TEXT("user"), TEXT("Create a brief description for a forest level in a fantasy game")});
    ChatSettings.MaxTokens = 1000;

    UGenXAIChat::SendChatRequest(
        ChatSettings,
        FOnXAIChatCompletionResponse::CreateLambda(
            [this](const FString& Response, const FString& ErrorMessage, bool bSuccess)
            {
                if (!UTHelper::IsContextStillValid(this))
                {
                    return;
                }

                UE_LOG(LogTemp, Warning, TEXT("XAI Chat response: %s"), *Response);
                if (!bSuccess)
                {
                    UE_LOG(LogTemp, Error, TEXT("XAI Chat error: %s"), *ErrorMessage);
                }
            })
    );

This is currently a work in progress. The plugin supports various clients like Claude Desktop App, Cursor etc.

That's it! You can now use the MCP features of the plugin.

Run the MCP server script:

    python <your_project_directory>/Plugins/GenerativeAISupport/Content/Python/mcp_server.py

3. Open a new Unreal Engine project and run the below Python script from the plugin's Python directory: Tools -> Run Python Script -> select the `Plugins/GenerativeAISupport/Content/Python/unreal_socket_server.py` file.

Known Issues

- Nodes fail to connect properly with MCP

- No undo redo support for MCP

- No streaming support for Deepseek reasoning model

- No complex material generation support for the create material tool

- Issues with running some llm generated valid python scripts

- When LLM compiles a blueprint no proper error handling in its response

- Issues spawning certain nodes, especially with getters and setters

- Doesn't open the right context window during scene and project files edit.

- Doesn't dock the window properly in the editor for blueprints.

- Install the `unreal` Python package and set up the IDE's Python interpreter for proper IntelliSense.

      pip install unreal

More details will be added soon.

More details will be added soon.

- Env Var set logic from: OpenAI-Api-Unreal by KellanM

- MCP Server inspiration from: Blender-MCP by ahujasid

- OpenAI API Documentation

- Anthropic API Documentation

- XAI API Documentation

- Google Gemini API Documentation

- Meta AI API Documentation

- Deepseek API Documentation

- Model Context Protocol (MCP) Documentation

- TripoSR Documentation

If you find UnrealGenAISupport helpful, consider sponsoring me to keep the project going! Click the "Sponsor" button above to contribute.


Alternative AI tools for UnrealGenAISupport

Similar Open Source Tools


ai-dial-sdk

AI DIAL Python SDK is a framework designed to create applications and model adapters for AI DIAL API, which is based on Azure OpenAI API. It provides a user-friendly interface for routing requests to applications. The SDK includes features for chat completions, response generation, and API interactions. Developers can easily build and deploy AI-powered applications using this SDK, ensuring compatibility with the AI DIAL platform.

NextChat

NextChat is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like self-deployed LLMs compatibility, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level privatization and customization deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

obsei

Obsei is an open-source, low-code, AI powered automation tool that consists of an Observer to collect unstructured data from various sources, an Analyzer to analyze the collected data with various AI tasks, and an Informer to send analyzed data to various destinations. The tool is suitable for scheduled jobs or serverless applications as all Observers can store their state in databases. Obsei is still in alpha stage, so caution is advised when using it in production. The tool can be used for social listening, alerting/notification, automatic customer issue creation, extraction of deeper insights from feedbacks, market research, dataset creation for various AI tasks, and more based on creativity.

catai

CatAI is a tool that allows users to run GGUF models on their computer with a chat UI. It serves as a local AI assistant inspired by Node-Llama-Cpp and Llama.cpp. The tool provides features such as auto-detecting programming language, showing original messages by clicking on user icons, real-time text streaming, and fast model downloads. Users can interact with the tool through a CLI that supports commands for installing, listing, setting, serving, updating, and removing models. CatAI is cross-platform and supports Windows, Linux, and Mac. It utilizes node-llama-cpp and offers a simple API for asking model questions. Additionally, developers can integrate the tool with node-llama-cpp@beta for model management and chatting. The configuration can be edited via the web UI, and contributions to the project are welcome. The tool is licensed under Llama.cpp's license.

ai-microcore

MicroCore is a collection of python adapters for Large Language Models and Vector Databases / Semantic Search APIs. It allows convenient communication with these services, easy switching between models & services, and separation of business logic from implementation details. Users can keep applications simple and try various models & services without changing application code. MicroCore connects MCP tools to language models easily, supports text completion and chat completion models, and provides features for configuring, installing vendor-specific packages, and using vector databases.

maclocal-api

MacLocalAPI is a macOS server application that exposes Apple's Foundation Models through OpenAI-compatible API endpoints. It allows users to run Apple Intelligence locally with full OpenAI API compatibility. The tool supports MLX local models, API gateway mode, LoRA adapter support, Vision OCR, built-in WebUI, privacy-first processing, fast and lightweight operation, easy integration with existing OpenAI client libraries, and provides token consumption metrics. Users can install MacLocalAPI using Homebrew or pip, and it requires macOS 26 or later, an Apple Silicon Mac, and Apple Intelligence enabled in System Settings. The tool is designed for easy integration with Python, JavaScript, and open-webui applications.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

llm.rb

llm.rb is a zero-dependency Ruby toolkit for Large Language Models that includes various providers like OpenAI, Gemini, Anthropic, xAI (Grok), zAI, DeepSeek, Ollama, and LlamaCpp. It provides full support for chat, streaming, tool calling, audio, images, files, and structured outputs. The toolkit offers features like unified API across providers, pluggable JSON adapters, tool calling, JSON Schema structured output, streaming responses, TTS, transcription, translation, image generation, files API, multimodal prompts, embeddings, models API, OpenAI vector stores, and more.

claude-task-master

Claude Task Master is a task management system designed for AI-driven development with Claude, seamlessly integrating with Cursor AI. It allows users to configure tasks through environment variables, parse PRD documents, generate structured tasks with dependencies and priorities, and manage task status. The tool supports task expansion, complexity analysis, and smart task recommendations. Users can interact with the system through CLI commands for task discovery, implementation, verification, and completion. It offers features like task breakdown, dependency management, and AI-driven task generation, providing a structured workflow for efficient development.

pocketgroq

PocketGroq is a tool that provides advanced functionalities for text generation, web scraping, web search, and AI response evaluation. It includes features like an Autonomous Agent for answering questions, web crawling and scraping capabilities, enhanced web search functionality, and flexible integration with Ollama server. Users can customize the agent's behavior, evaluate responses using AI, and utilize various methods for text generation, conversation management, and Chain of Thought reasoning. The tool offers comprehensive methods for different tasks, such as initializing RAG, error handling, and tool management. PocketGroq is designed to enhance development processes and enable the creation of AI-powered applications with ease.

agentpress

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. It includes core components for managing threads, registering tools, processing responses, state management, and utilizing LLMs. The tool provides a modular architecture for handling messages, LLM API calls, response processing, tool execution, and results management. Users can easily set up the environment, create custom tools with OpenAPI or XML schema, and manage conversation threads with real-time interaction. AgentPress aims to be agnostic, simple, and flexible, allowing users to customize and extend functionalities as needed.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

req_llm

ReqLLM is a Req-based library for LLM interactions, offering a unified interface to AI providers through a plugin-based architecture. It brings composability and middleware advantages to LLM interactions, with features like auto-synced providers/models, typed data structures, ergonomic helpers, streaming capabilities, usage & cost extraction, and a plugin-based provider system. Users can easily generate text, structured data, embeddings, and track usage costs. The tool supports various AI providers like Anthropic, OpenAI, Groq, Google, and xAI, and allows for easy addition of new providers. ReqLLM also provides API key management, detailed documentation, and a roadmap for future enhancements.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

For similar tasks


generative-ai-dart

The Google Generative AI SDK for Dart enables developers to utilize cutting-edge Large Language Models (LLMs) for creating language applications. It provides access to the Gemini API for generating content using state-of-the-art models. Developers can integrate the SDK into their Dart or Flutter applications to leverage powerful AI capabilities. It is recommended to use the SDK for server-side API calls to ensure the security of API keys and protect against potential key exposure in mobile or web apps.

SemanticKernel.Assistants

This repository contains an assistant proposal for the Semantic Kernel, allowing the usage of assistants without relying on OpenAI Assistant APIs. It runs locally planners and plugins for the assistants, providing scenarios like Assistant with Semantic Kernel plugins, Multi-Assistant conversation, and AutoGen conversation. The Semantic Kernel is a lightweight SDK enabling integration of AI Large Language Models with conventional programming languages, offering functions like semantic functions, native functions, and embeddings-based memory. Users can bring their own model for the assistants and host them locally. The repository includes installation instructions, usage examples, and information on creating new conversation threads with the assistant.

ezlocalai

ezlocalai is an artificial intelligence server that simplifies running multimodal AI models locally. It handles model downloading and server configuration based on hardware specs. It offers OpenAI Style endpoints for integration, voice cloning, text-to-speech, voice-to-text, and offline image generation. Users can modify environment variables for customization. Supports NVIDIA GPU and CPU setups. Provides demo UI and workflow visualization for easy usage.

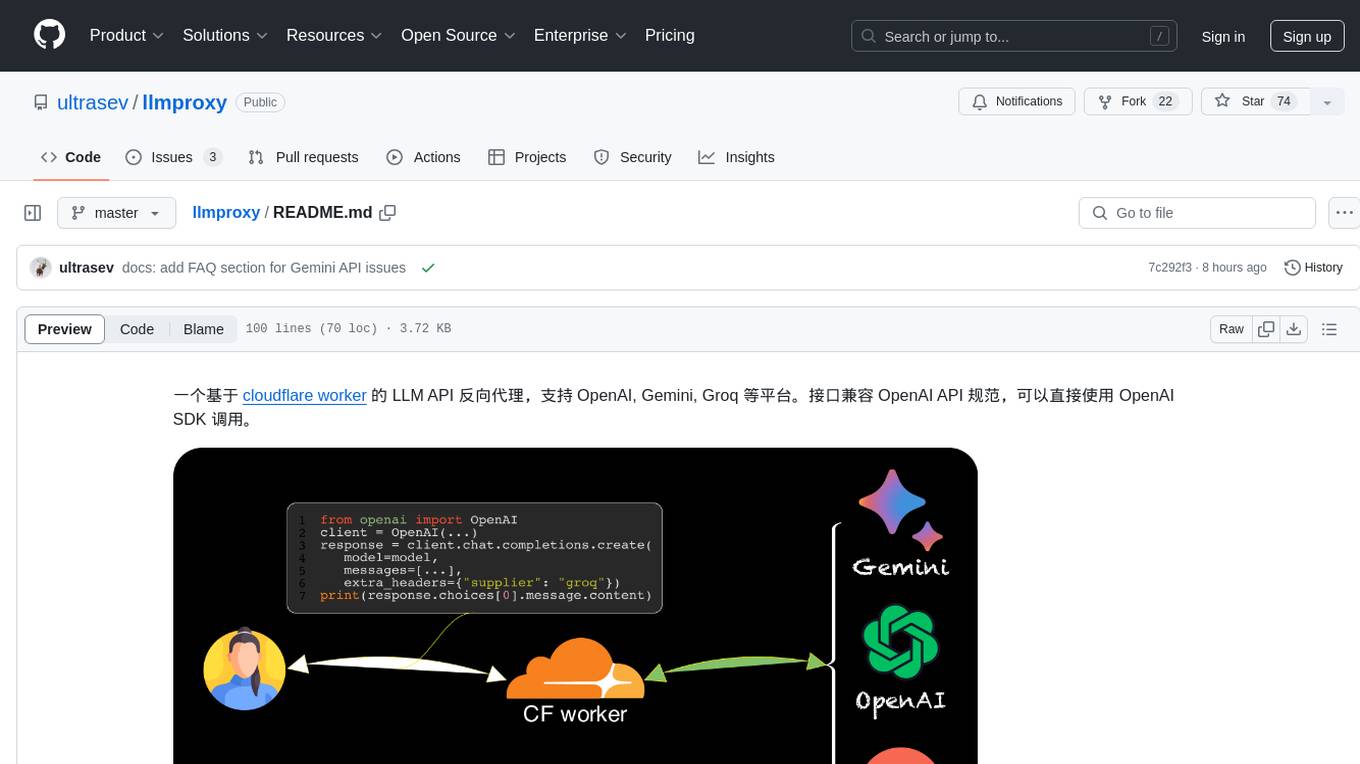

llmproxy

llmproxy is a reverse proxy for LLM API based on Cloudflare Worker, supporting platforms like OpenAI, Gemini, and Groq. The interface is compatible with the OpenAI API specification and can be directly accessed using the OpenAI SDK. It provides a convenient way to interact with various AI platforms through a unified API endpoint, enabling seamless integration and usage in different applications.

gemini-api-quickstart

This repository contains a simple Python Flask App utilizing the Google AI Gemini API to explore multi-modal capabilities. It provides a basic UI and Flask backend for easy integration and testing. The app allows users to interact with the AI model through chat messages, making it a great starting point for developers interested in AI-powered applications.

KaibanJS

KaibanJS is a JavaScript-native framework for building multi-agent AI systems. It enables users to create specialized AI agents with distinct roles and goals, manage tasks, and coordinate teams efficiently. The framework provides role-based agent design, tool integration, support for multiple LLMs, robust state management, observability and monitoring features, and a real-time agentic Kanban board for visualizing AI workflows. KaibanJS aims to give JavaScript developers an AI framework tailored to the JavaScript ecosystem, closing the gap for non-Python developers.

FFAIVideo

FFAIVideo is a lightweight Node.js project that uses popular LLMs to generate short videos. It supports multiple LLM providers such as OpenAI, Moonshot, Azure, g4f, and Google Gemini. Users can input text to automatically synthesize video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses the Pexels website for video resources. FFmpeg must be installed for it to work. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers, with minimal dependencies and simple usage.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.