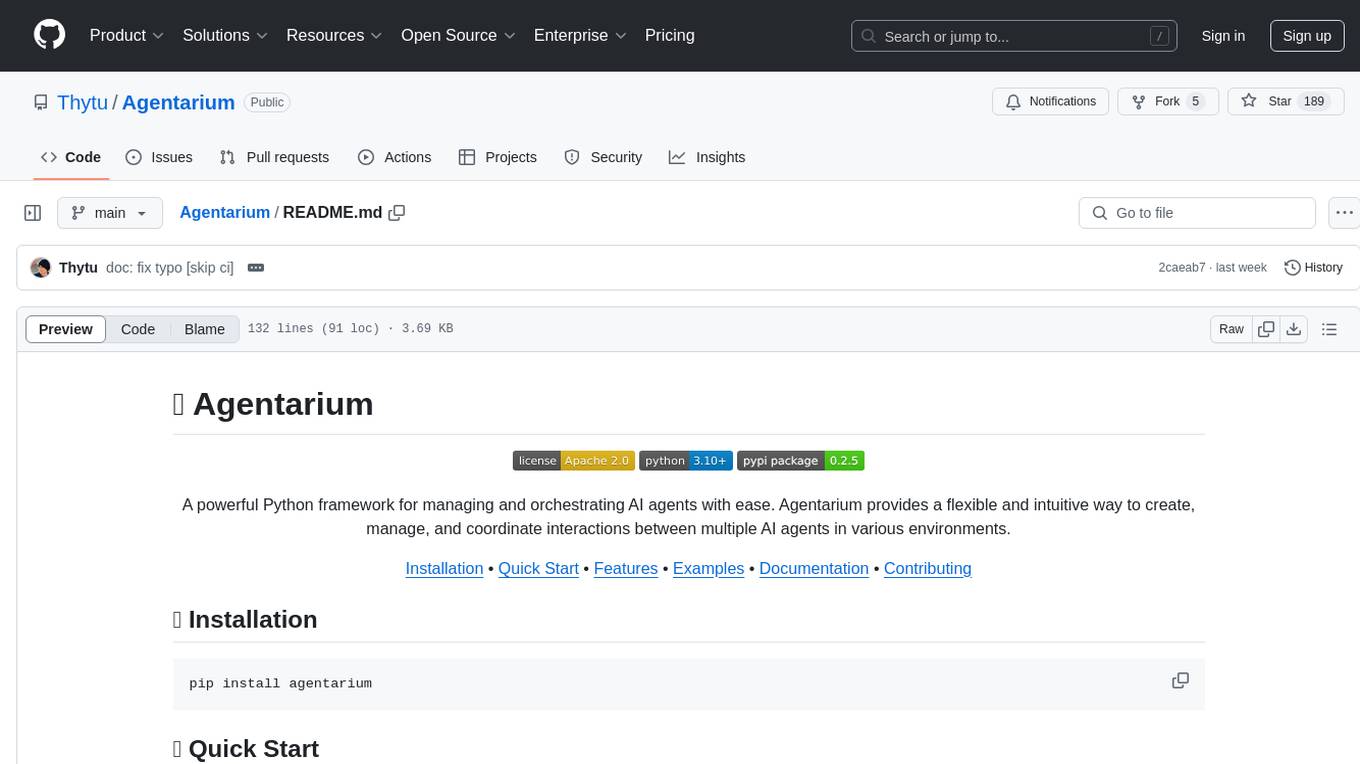

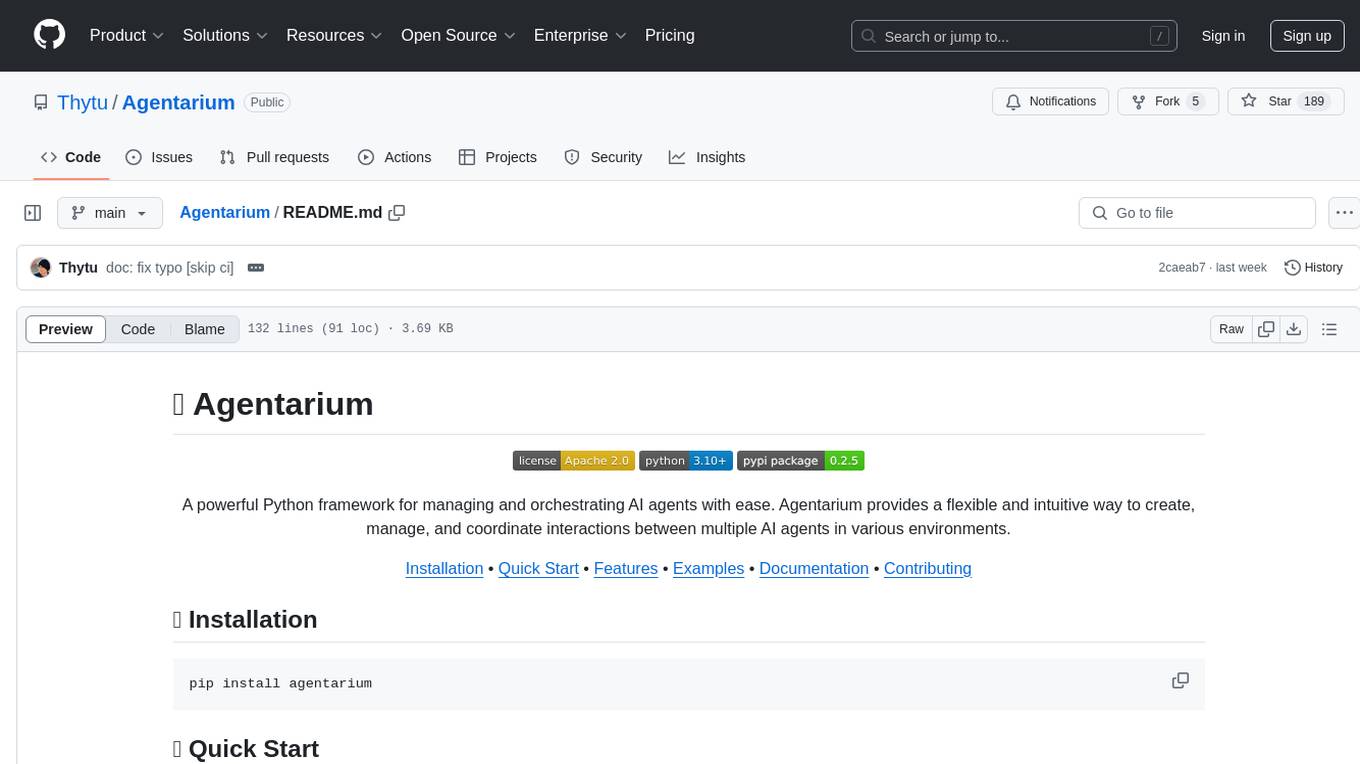

Agentarium

open-source framework for creating and managing simulations populated with AI-powered agents. It provides an intuitive platform for designing complex, interactive environments where agents can act, learn, and evolve.

Stars: 854

Agentarium is a powerful Python framework for managing and orchestrating AI agents with ease. It provides a flexible and intuitive way to create, manage, and coordinate interactions between multiple AI agents in various environments. The framework offers advanced agent management, robust interaction management, a checkpoint system for saving and restoring agent states, data generation through agent interactions, performance optimization, flexible environment configuration, and an extensible architecture for customization.

README:

A powerful Python framework for managing and orchestrating AI agents with ease. Agentarium provides a flexible and intuitive way to create, manage, and coordinate interactions between multiple AI agents in various environments.

Installation • Quick Start • Features • Examples • Documentation • Contributing

pip install agentariumfrom agentarium import Agent

# Create agents

agent1 = Agent(name="agent1")

agent2 = Agent(name="agent2")

# Direct communication between agents

alice.talk_to(bob, "Hello Bob! I heard you're working on some interesting ML projects.")

# Agent autonomously decides its next action based on context

bob.act()- 🤖 Advanced Agent Management: Create and orchestrate multiple AI agents with different roles and capabilities

- 🔄 Autonomous Decision Making: Agents can make decisions and take actions based on their context

- 💾 Checkpoint System: Save and restore agent states and interactions for reproducibility

- 🎭 Customizable Actions: Define custom actions beyond the default talk/think capabilities

- 🧠 Memory & Context: Agents maintain memory of past interactions for contextual responses

- ⚡ AI Integration: Seamless integration with various AI providers through aisuite

- ⚡ Performance Optimized: Built for efficiency and scalability

- 🛠️ Extensible Architecture: Easy to extend and customize for your specific needs

Create a simple chat interaction between agents:

from agentarium import Agent

# Create agents with specific characteristics

alice = Agent.create_agent(name="Alice", occupation="Software Engineer")

bob = Agent.create_agent(name="Bob", occupation="Data Scientist")

# Direct communication

alice.talk_to(bob, "Hello Bob! I heard you're working on some interesting projects.")

# Let Bob autonomously decide how to respond

bob.act()Add new capabilities to your agents:

from agentarium import Agent, Action

# Define a simple greeting action

def greet(name: str, **kwargs) -> str:

return f"Hello, {name}!"

# Create an agent and add the greeting action

agent = Agent.create_agent(name="Alice")

agent.add_action(

Action(

name="GREET",

description="Greet someone by name",

parameters=["name"],

function=greet

)

)

# Use the custom action

agent.execute_action("GREET", "Bob")Save and restore agent states:

from agentarium import Agent

from agentarium.CheckpointManager import CheckpointManager

# Initialize checkpoint manager

checkpoint = CheckpointManager("demo")

# Create and interact with agents

alice = Agent.create_agent(name="Alice")

bob = Agent.create_agent(name="Bob")

alice.talk_to(bob, "What a beautiful day!")

checkpoint.update(step="interaction_1")

# Save the current state

checkpoint.save()More examples can be found in the examples/ directory.

Create agents with custom characteristics:

agent = Agent.create_agent(

name="Alice",

age=28,

occupation="Software Engineer",

location="San Francisco",

bio="A passionate developer who loves AI"

)Configure your LLM provider and credentials using a YAML file:

llm:

provider: "openai" # The LLM provider to use (any provider supported by aisuite)

model: "gpt-4" # The specific model to use from the provider

aisuite: # (optional) Credentials for aisuite

openai: # Provider-specific configuration

api_key: "sk-..." # Your API key- Agent: Core class for creating AI agents with personalities and autonomous behavior

- CheckpointManager: Handles saving and loading of agent states and interactions

- Action: Base class for defining custom agent actions

- AgentInteractionManager: Manages and tracks all agent interactions

Contributions are welcome! Here's how you can help:

- Fork the repository

- Create a new branch (

git checkout -b feature/amazing-feature) - Make your changes

- Commit your changes (

git commit -m 'feat: add amazing feature') - Push to the branch (

git push origin feature/amazing-feature) - Open a Pull Request

This project is licensed under the Apache 2.0 License - see the LICENSE file for details.

Thanks to all contributors who have helped shape Agentarium 🫶

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Agentarium

Similar Open Source Tools

Agentarium

Agentarium is a powerful Python framework for managing and orchestrating AI agents with ease. It provides a flexible and intuitive way to create, manage, and coordinate interactions between multiple AI agents in various environments. The framework offers advanced agent management, robust interaction management, a checkpoint system for saving and restoring agent states, data generation through agent interactions, performance optimization, flexible environment configuration, and an extensible architecture for customization.

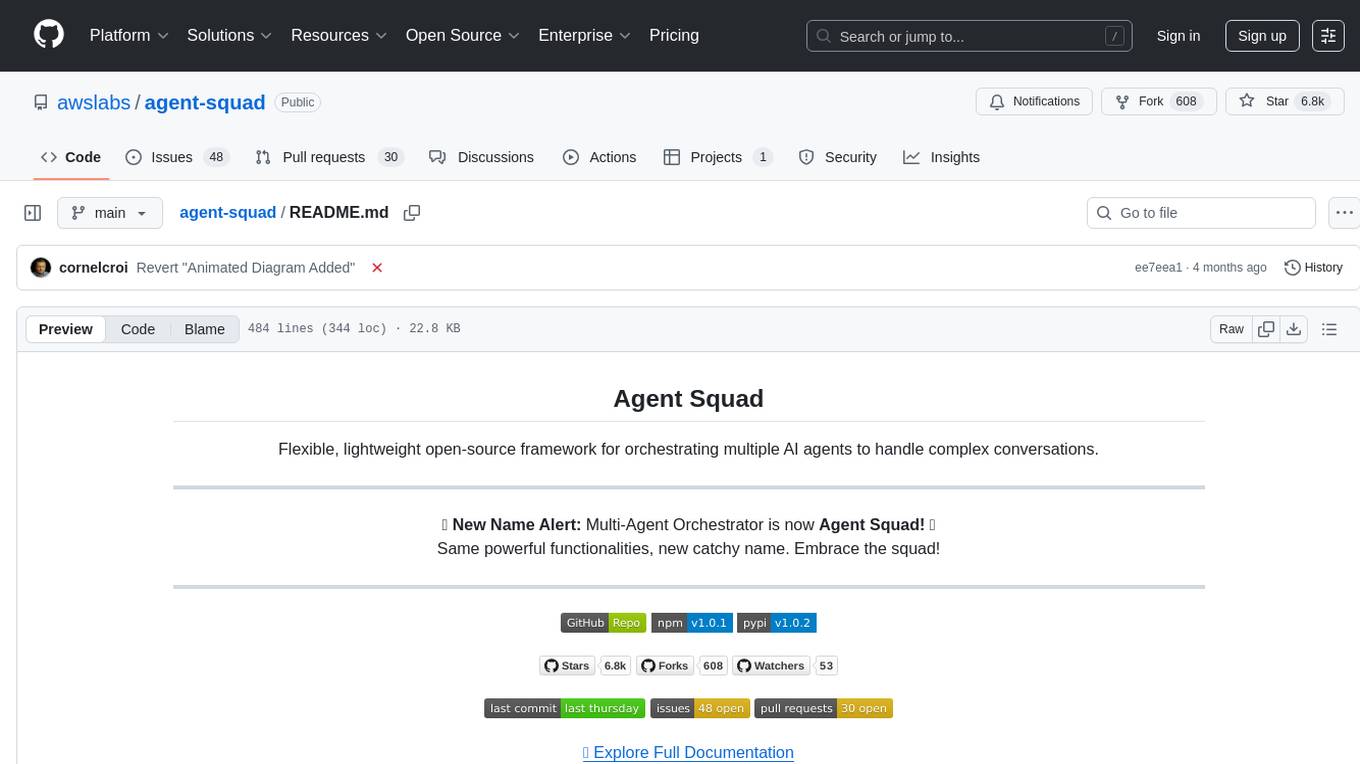

agent-squad

Agent Squad is a flexible, lightweight open-source framework for orchestrating multiple AI agents to handle complex conversations. It intelligently routes queries, maintains context across interactions, and offers pre-built components for quick deployment. The system allows easy integration of custom agents and conversation messages storage solutions, making it suitable for various applications from simple chatbots to sophisticated AI systems, scaling efficiently.

multi-agent-orchestrator

Multi-Agent Orchestrator is a flexible and powerful framework for managing multiple AI agents and handling complex conversations. It intelligently routes queries to the most suitable agent based on context and content, supports dual language implementation in Python and TypeScript, offers flexible agent responses, context management across agents, extensible architecture for customization, universal deployment options, and pre-built agents and classifiers. It is suitable for various applications, from simple chatbots to sophisticated AI systems, accommodating diverse requirements and scaling efficiently.

agents

The LiveKit Agent Framework is designed for building real-time, programmable participants that run on servers. Easily tap into LiveKit WebRTC sessions and process or generate audio, video, and data streams. The framework includes plugins for common workflows, such as voice activity detection and speech-to-text. Agents integrates seamlessly with LiveKit server, offloading job queuing and scheduling responsibilities to it. This eliminates the need for additional queuing infrastructure. Agent code developed on your local machine can scale to support thousands of concurrent sessions when deployed to a server in production.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they develop to production.

embodied-agents

Embodied Agents is a toolkit for integrating large multi-modal models into existing robot stacks with just a few lines of code. It provides consistency, reliability, scalability, and is configurable to any observation and action space. The toolkit is designed to reduce complexities involved in setting up inference endpoints, converting between different model formats, and collecting/storing datasets. It aims to facilitate data collection and sharing among roboticists by providing Python-first abstractions that are modular, extensible, and applicable to a wide range of tasks. The toolkit supports asynchronous and remote thread-safe agent execution for maximal responsiveness and scalability, and is compatible with various APIs like HuggingFace Spaces, Datasets, Gymnasium Spaces, Ollama, and OpenAI. It also offers automatic dataset recording and optional uploads to the HuggingFace hub.

sdialog

SDialog is an MIT-licensed open-source toolkit for building, simulating, and evaluating LLM-based conversational agents end-to-end. It aims to bridge agent construction, user simulation, dialog generation, and evaluation in a single reproducible workflow, enabling the generation of reliable, controllable dialog systems or data at scale. The toolkit standardizes a Dialog schema, offers persona-driven multi-agent simulation with LLMs, provides composable orchestration for precise control over behavior and flow, includes built-in evaluation metrics, and offers mechanistic interpretability. It allows for easy creation of user-defined components and interoperability across various AI platforms.

LightAgent

LightAgent is a lightweight, open-source Agentic AI development framework with memory, tools, and a tree of thought. It supports multi-agent collaboration, autonomous learning, tool integration, complex task handling, and multi-model support. It also features a streaming API, tool generator, agent self-learning, adaptive tool mechanism, and more. LightAgent is designed for intelligent customer service, data analysis, automated tools, and educational assistance.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

AgentFly

AgentFly is an extensible framework for building LLM agents with reinforcement learning. It supports multi-turn training by adapting traditional RL methods with token-level masking. It features a decorator-based interface for defining tools and reward functions, enabling seamless extension and ease of use. To support high-throughput training, it implemented asynchronous execution of tool calls and reward computations, and designed a centralized resource management system for scalable environment coordination. A suite of prebuilt tools and environments are provided.

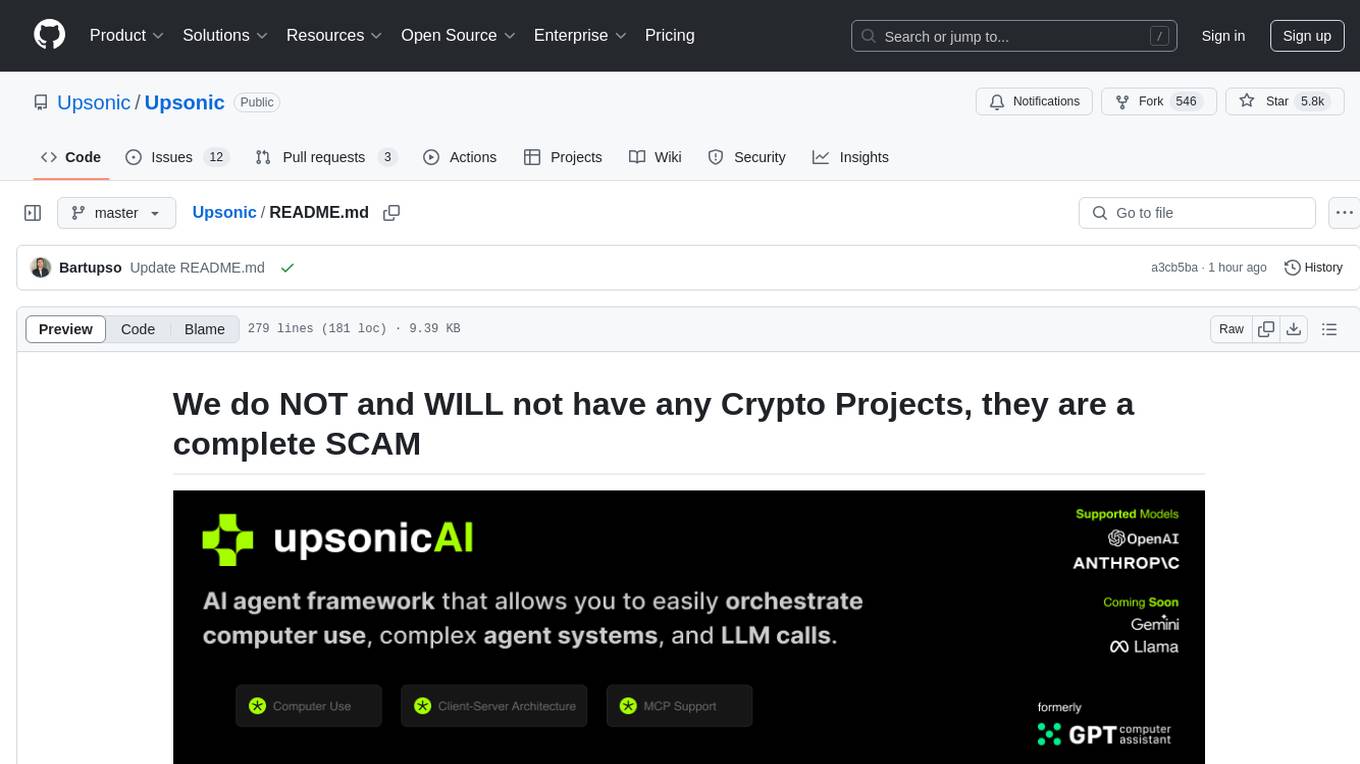

gpt-computer-assistant

GPT Computer Assistant (GCA) is an open-source framework designed to build vertical AI agents that can automate tasks on Windows, macOS, and Ubuntu systems. It leverages the Model Context Protocol (MCP) and its own modules to mimic human-like actions and achieve advanced capabilities. With GCA, users can empower themselves to accomplish more in less time by automating tasks like updating dependencies, analyzing databases, and configuring cloud security settings.

axar

AXAR AI is a lightweight framework designed for building production-ready agentic applications using TypeScript. It aims to simplify the process of creating robust, production-grade LLM-powered apps by focusing on familiar coding practices without unnecessary abstractions or steep learning curves. The framework provides structured, typed inputs and outputs, familiar and intuitive patterns like dependency injection and decorators, explicit control over agent behavior, real-time logging and monitoring tools, minimalistic design with little overhead, model agnostic compatibility with various AI models, and streamed outputs for fast and accurate results. AXAR AI is ideal for developers working on real-world AI applications who want a tool that gets out of the way and allows them to focus on shipping reliable software.

lionagi

LionAGI is a robust framework for orchestrating multi-step AI operations with precise control. It allows users to bring together multiple models, advanced reasoning, tool integrations, and custom validations in a single coherent pipeline. The framework is structured, expandable, controlled, and transparent, offering features like real-time logging, message introspection, and tool usage tracking. LionAGI supports advanced multi-step reasoning with ReAct, integrates with Anthropic's Model Context Protocol, and provides observability and debugging tools. Users can seamlessly orchestrate multiple models, integrate with Claude Code CLI SDK, and leverage a fan-out fan-in pattern for orchestration. The framework also offers optional dependencies for additional functionalities like reader tools, local inference support, rich output formatting, database support, and graph visualization.

GraphRAG-SDK

Build fast and accurate GenAI applications with GraphRAG SDK, a specialized toolkit for building Graph Retrieval-Augmented Generation (GraphRAG) systems. It integrates knowledge graphs, ontology management, and state-of-the-art LLMs to deliver accurate, efficient, and customizable RAG workflows. The SDK simplifies the development process by automating ontology creation, knowledge graph agent creation, and query handling, enabling users to interact and query their knowledge graphs effectively. It supports multi-agent systems and orchestrates agents specialized in different domains. The SDK is optimized for FalkorDB, ensuring high performance and scalability for large-scale applications. By leveraging knowledge graphs, it enables semantic relationships and ontology-driven queries that go beyond standard vector similarity, enhancing retrieval-augmented generation capabilities.

For similar tasks

Agentarium

Agentarium is a powerful Python framework for managing and orchestrating AI agents with ease. It provides a flexible and intuitive way to create, manage, and coordinate interactions between multiple AI agents in various environments. The framework offers advanced agent management, robust interaction management, a checkpoint system for saving and restoring agent states, data generation through agent interactions, performance optimization, flexible environment configuration, and an extensible architecture for customization.

MeeseeksAI

MeeseeksAI is a framework designed to orchestrate AI agents using a mermaid graph and networkx. It provides a structured approach to managing and coordinating multiple AI agents within a system. The framework allows users to define the interactions and dependencies between agents through a visual representation, making it easier to understand and modify the behavior of the AI system. By leveraging the power of networkx, MeeseeksAI enables efficient graph-based computations and optimizations, enhancing the overall performance of AI workflows. With its intuitive design and flexible architecture, MeeseeksAI simplifies the process of building and deploying complex AI systems, empowering users to create sophisticated agent interactions with ease.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

sagentic-af

Sagentic.ai Agent Framework is a tool for creating AI agents with hot reloading dev server. It allows users to spawn agents locally by calling specific endpoint. The framework comes with detailed documentation and supports contributions, issues, and feature requests. It is MIT licensed and maintained by Ahyve Inc.

tinyllm

tinyllm is a lightweight framework designed for developing, debugging, and monitoring LLM and Agent powered applications at scale. It aims to simplify code while enabling users to create complex agents or LLM workflows in production. The core classes, Function and FunctionStream, standardize and control LLM, ToolStore, and relevant calls for scalable production use. It offers structured handling of function execution, including input/output validation, error handling, evaluation, and more, all while maintaining code readability. Users can create chains with prompts, LLM models, and evaluators in a single file without the need for extensive class definitions or spaghetti code. Additionally, tinyllm integrates with various libraries like Langfuse and provides tools for prompt engineering, observability, logging, and finite state machine design.

council

Council is an open-source platform designed for the rapid development and deployment of customized generative AI applications using teams of agents. It extends the LLM tool ecosystem by providing advanced control flow and scalable oversight for AI agents. Users can create sophisticated agents with predictable behavior by leveraging Council's powerful approach to control flow using Controllers, Filters, Evaluators, and Budgets. The framework allows for automated routing between agents, comparing, evaluating, and selecting the best results for a task. Council aims to facilitate packaging and deploying agents at scale on multiple platforms while enabling enterprise-grade monitoring and quality control.

mentals-ai

Mentals AI is a tool designed for creating and operating agents that feature loops, memory, and various tools, all through straightforward markdown syntax. This tool enables you to concentrate solely on the agent’s logic, eliminating the necessity to compose underlying code in Python or any other language. It redefines the foundational frameworks for future AI applications by allowing the creation of agents with recursive decision-making processes, integration of reasoning frameworks, and control flow expressed in natural language. Key concepts include instructions with prompts and references, working memory for context, short-term memory for storing intermediate results, and control flow from strings to algorithms. The tool provides a set of native tools for message output, user input, file handling, Python interpreter, Bash commands, and short-term memory. The roadmap includes features like a web UI, vector database tools, agent's experience, and tools for image generation and browsing. The idea behind Mentals AI originated from studies on psychoanalysis executive functions and aims to integrate 'System 1' (cognitive executor) with 'System 2' (central executive) to create more sophisticated agents.

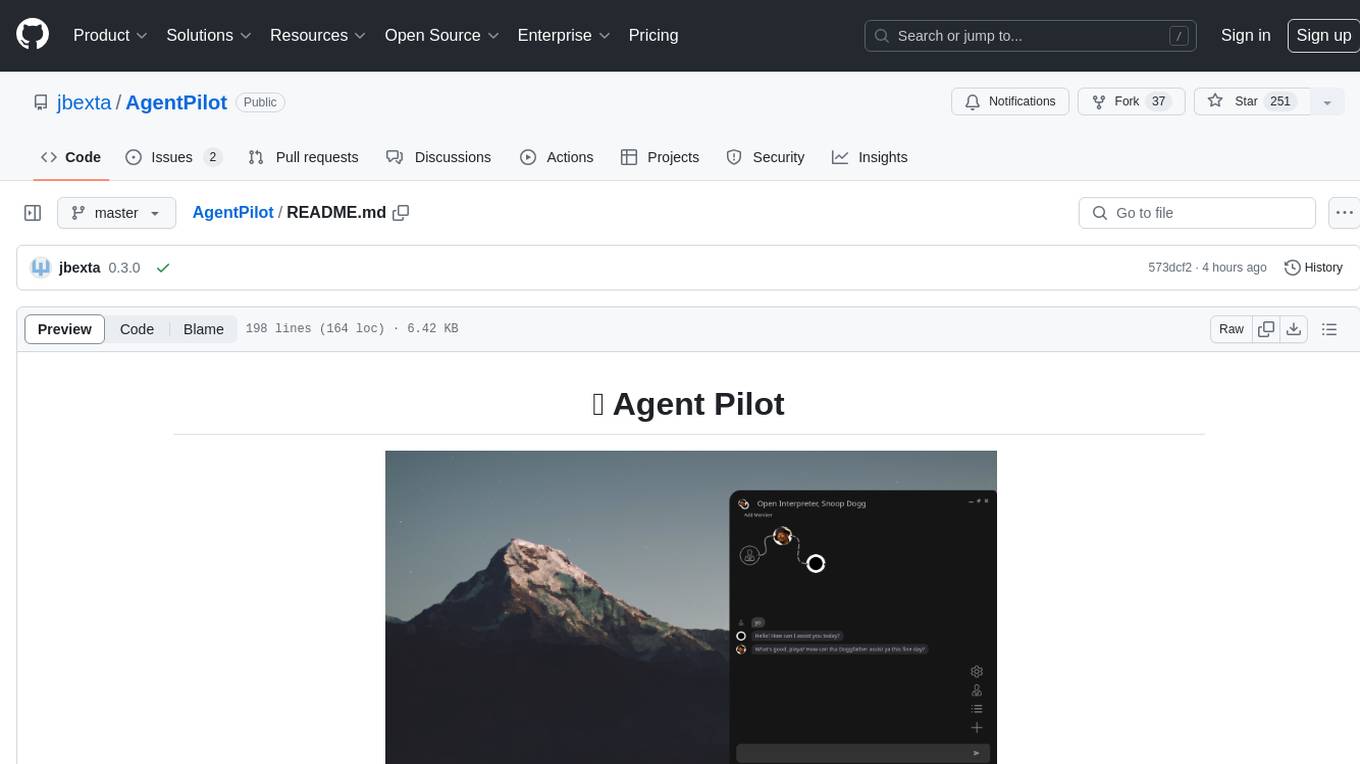

AgentPilot

Agent Pilot is an open source desktop app for creating, managing, and chatting with AI agents. It features multi-agent, branching chats with various providers through LiteLLM. Users can combine models from different providers, configure interactions, and run code using the built-in Open Interpreter. The tool allows users to create agents, manage chats, work with multi-agent workflows, branching workflows, context blocks, tools, and plugins. It also supports a code interpreter, scheduler, voice integration, and integration with various AI providers. Contributions to the project are welcome, and users can report known issues for improvement.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.