client-python

Python client library for Mistral AI platform

Stars: 570

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

README:

This documentation is for Mistral AI SDK v1. You can find more details on how to migrate from v0 to v1 here

Before you begin, you will need a Mistral AI API key.

- Get your own Mistral API Key: https://docs.mistral.ai/#api-access

- Set your Mistral API Key as an environment variable. You only need to do this once.

# set Mistral API Key (using zsh for example)
$ echo 'export MISTRAL_API_KEY=[your_key_here]' >> ~/.zshenv

# reload the environment (or just quit and open a new terminal)
$ source ~/.zshenv

Mistral AI API: Our Chat Completion and Embeddings APIs specification. Create your account on La Plateforme to get access and read the docs to learn how to use it.
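Once the variable is exported, a script can confirm that the key is visible before it constructs the client. The following is a minimal sketch using only the standard library; the helper name `require_api_key` is hypothetical and not part of the SDK.

```python
import os


def require_api_key(env_var: str = "MISTRAL_API_KEY") -> str:
    """Return the API key from the environment or fail with a clear message.

    `require_api_key` is a hypothetical helper, not part of the SDK.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set; export it in your shell profile first"
        )
    return key
```

Failing early like this tends to give a clearer error than an authentication failure returned by the API later on.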

[!NOTE] Python version upgrade policy

Once a Python version reaches its official end of life date, a 3-month grace period is provided for users to upgrade. Following this grace period, the minimum Python version supported in the SDK will be updated.

The SDK can be installed with either pip or poetry package managers.

PIP is the default package installer for Python, enabling easy installation and management of packages from PyPI via the command line.

pip install mistralai

Poetry is a modern tool that simplifies dependency management and package publishing by using a single pyproject.toml file to handle project metadata and dependencies.

poetry add mistralai

You can use this SDK in a Python shell with uv and the uvx command that comes with it like so:

uvx --from mistralai python

It's also possible to write a standalone Python script without needing to set up a whole project like so:

#!/usr/bin/env -S uv run --script
# /// script
# requires-python = ">=3.9"
# dependencies = [
#     "mistralai",
# ]
# ///

from mistralai import Mistral

sdk = Mistral(
    # SDK arguments
)

# Rest of script here...

Once that is saved to a file, you can run it with uv run script.py where script.py can be replaced with the actual file name.

This example shows how to create chat completions.

# Synchronous Example
from mistralai import Mistral
import os

with Mistral(
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.chat.complete(model="mistral-small-latest", messages=[
        {
            "content": "Who is the best French painter? Answer in one short sentence.",
            "role": "user",
        },
    ])

    # Handle response
    print(res)

The same SDK client can also be used to make asynchronous requests by importing asyncio.

# Asynchronous Example
import asyncio
from mistralai import Mistral
import os

async def main():
    async with Mistral(
        api_key=os.getenv("MISTRAL_API_KEY", ""),
    ) as mistral:
        res = await mistral.chat.complete_async(model="mistral-small-latest", messages=[
            {
                "content": "Who is the best French painter? Answer in one short sentence.",
                "role": "user",
            },
        ])

        # Handle response
        print(res)

asyncio.run(main())

This example shows how to upload a file.

# Synchronous Example
from mistralai import Mistral
import os

with Mistral(
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.files.upload(file={
        "file_name": "example.file",
        "content": open("example.file", "rb"),
    })

    # Handle response
    print(res)

The same SDK client can also be used to make asynchronous requests by importing asyncio.

# Asynchronous Example
import asyncio
from mistralai import Mistral
import os

async def main():
    async with Mistral(
        api_key=os.getenv("MISTRAL_API_KEY", ""),
    ) as mistral:
        res = await mistral.files.upload_async(file={
            "file_name": "example.file",
            "content": open("example.file", "rb"),
        })

        # Handle response
        print(res)

asyncio.run(main())

This example shows how to create agents completions.

# Synchronous Example
from mistralai import Mistral
import os

with Mistral(
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.agents.complete(messages=[
        {
            "content": "Who is the best French painter? Answer in one short sentence.",
            "role": "user",
        },
    ], agent_id="<id>")

    # Handle response
    print(res)

The same SDK client can also be used to make asynchronous requests by importing asyncio.

# Asynchronous Example
import asyncio
from mistralai import Mistral
import os

async def main():
    async with Mistral(
        api_key=os.getenv("MISTRAL_API_KEY", ""),
    ) as mistral:
        res = await mistral.agents.complete_async(messages=[
            {
                "content": "Who is the best French painter? Answer in one short sentence.",
                "role": "user",
            },
        ], agent_id="<id>")

        # Handle response
        print(res)

asyncio.run(main())

This example shows how to create an embedding request.

# Synchronous Example
from mistralai import Mistral
import os

with Mistral(
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.embeddings.create(model="mistral-embed", inputs=[
        "Embed this sentence.",
        "As well as this one.",
    ])

    # Handle response
    print(res)

The same SDK client can also be used to make asynchronous requests by importing asyncio.

# Asynchronous Example
import asyncio
from mistralai import Mistral
import os

async def main():
    async with Mistral(
        api_key=os.getenv("MISTRAL_API_KEY", ""),
    ) as mistral:
        res = await mistral.embeddings.create_async(model="mistral-embed", inputs=[
            "Embed this sentence.",
            "As well as this one.",
        ])

        # Handle response
        print(res)

asyncio.run(main())

You can run the examples in the examples/ directory using poetry run or by entering the virtual environment using poetry shell.

Prerequisites

Before you begin, ensure you have an Azure AI endpoint and an API key; the example below reads them from the AZURE_ENDPOINT and AZURE_API_KEY environment variables. To obtain these, you will need to deploy Mistral on Azure AI.

See instructions for deploying Mistral on Azure AI here.

Here's a basic example to get you started. You can also run the example in the examples directory.

import asyncio
import os

from mistralai_azure import MistralAzure

client = MistralAzure(
    azure_api_key=os.getenv("AZURE_API_KEY", ""),
    azure_endpoint=os.getenv("AZURE_ENDPOINT", "")
)

async def main() -> None:
    res = await client.chat.complete_async(
        max_tokens=100,
        temperature=0.5,
        messages=[
            {
                "content": "Hello there!",
                "role": "user"
            }
        ]
    )
    print(res)

asyncio.run(main())

The documentation for the Azure SDK is available here.

Prerequisites

Before you begin, you will need to create a Google Cloud project and enable the Mistral API. To do this, follow the instructions here.

To run this locally you will also need to ensure you are authenticated with Google Cloud. You can do this by running

gcloud auth application-default login

Step 1: Install

Install the extras dependencies specific to Google Cloud:

pip install mistralai[gcp]

Step 2: Example Usage

Here's a basic example to get you started.

import asyncio

from mistralai_gcp import MistralGoogleCloud

client = MistralGoogleCloud()

async def main() -> None:
    res = await client.chat.complete_async(
        model="mistral-small-2402",
        messages=[
            {
                "content": "Hello there!",
                "role": "user"
            }
        ]
    )
    print(res)

asyncio.run(main())

The documentation for the GCP SDK is available here.

Available methods

- moderate - Moderations

- moderate_chat - Chat Moderations

- create - Embeddings

- upload - Upload File

- list - List Files

- retrieve - Retrieve File

- delete - Delete File

- download - Download File

- get_signed_url - Get Signed Url

- list - Get Fine Tuning Jobs

- create - Create Fine Tuning Job

- get - Get Fine Tuning Job

- cancel - Cancel Fine Tuning Job

- start - Start Fine Tuning Job

- list - List Models

- retrieve - Retrieve Model

- delete - Delete Model

- update - Update Fine Tuned Model

- archive - Archive Fine Tuned Model

- unarchive - Unarchive Fine Tuned Model

- process - OCR

Server-sent events are used to stream content from certain operations. These operations expose the stream as a Generator that can be consumed using a simple for loop. The loop terminates when the server no longer has any events to send and closes the underlying connection.

The stream is also a Context Manager: it can be used with the with statement, and it closes the underlying connection when the context is exited.
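To make the two behaviours concrete, here is a minimal sketch of an object that is both iterable and a context manager. `EventStream` is a hypothetical stand-in written for illustration; it is not the class the SDK returns, and the string events are placeholders.

```python
from typing import Iterator, List


class EventStream:
    """Hypothetical stand-in for a streamed response; not the SDK's class."""

    def __init__(self, events: List[str]) -> None:
        self._events = events
        self.closed = False

    def __iter__(self) -> Iterator[str]:
        # Yield events one at a time until the source is exhausted or closed.
        for event in self._events:
            if self.closed:
                return
            yield event

    def __enter__(self) -> "EventStream":
        return self

    def __exit__(self, exc_type, exc, tb) -> None:
        # Leaving the `with` block releases the underlying connection.
        self.closed = True


received = []
with EventStream(["chunk-1", "chunk-2", "chunk-3"]) as stream:
    for event in stream:
        received.append(event)
```

Using the with statement ensures the stream is closed even if the loop body raises or breaks out early.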

from mistralai import Mistral
import os

with Mistral(
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.chat.stream(model="mistral-small-latest", messages=[
        {
            "content": "Who is the best French painter? Answer in one short sentence.",
            "role": "user",
        },
    ])

    with res as event_stream:
        for event in event_stream:
            # handle event
            print(event, flush=True)

Certain SDK methods accept file objects as part of a request body or multi-part request. It is possible and typically recommended to upload files as a stream rather than reading the entire contents into memory. This avoids excessive memory consumption and potentially crashing with out-of-memory errors when working with very large files. The following example demonstrates how to attach a file stream to a request.

[!TIP]

For endpoints that handle file uploads, byte arrays can also be used. However, using streams is recommended for large files.
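The memory argument can be illustrated with a short sketch that reads a binary stream in fixed-size chunks, so that only one chunk is held in memory at a time. The helper `iter_chunks` and the 64 KiB chunk size are assumptions chosen for illustration; this is not a description of how the SDK reads files internally.

```python
import io
from typing import BinaryIO, Iterator


def iter_chunks(stream: BinaryIO, chunk_size: int = 64 * 1024) -> Iterator[bytes]:
    """Yield successive chunks so only `chunk_size` bytes are held at a time."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


# An in-memory buffer stands in for open("example.file", "rb").
payload = io.BytesIO(b"x" * 200_000)
sizes = [len(chunk) for chunk in iter_chunks(payload)]
```

Calling `stream.read()` with no argument would instead load the whole file at once, which is the pattern the paragraph above advises against for large files.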

from mistralai import Mistral
import os

with Mistral(
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.files.upload(file={
        "file_name": "example.file",
        "content": open("example.file", "rb"),
    })

    # Handle response
    print(res)

Some of the endpoints in this SDK support retries. If you use the SDK without any configuration, it will fall back to the default retry strategy provided by the API. However, the default retry strategy can be overridden on a per-operation basis, or across the entire SDK.
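For readers unfamiliar with backoff retries, the sketch below computes the waiting intervals that an exponential backoff schedule produces. It illustrates the general technique only and is not the SDK's internal implementation; the function name, and the reading of the four parameters as initial interval, maximum interval, exponent, and maximum elapsed time (all in milliseconds), are assumptions.

```python
from typing import List


def backoff_intervals(
    initial_ms: float, max_interval_ms: float, exponent: float, max_elapsed_ms: float
) -> List[float]:
    """Return the waits (in ms) taken before the elapsed-time budget runs out."""
    intervals: List[float] = []
    elapsed = 0.0
    attempt = 0
    while True:
        # Each wait grows geometrically and is capped at max_interval_ms.
        wait = min(initial_ms * exponent ** attempt, max_interval_ms)
        if elapsed + wait > max_elapsed_ms:
            break
        intervals.append(wait)
        elapsed += wait
        attempt += 1
    return intervals


# Hypothetical values chosen so the growth is easy to follow.
schedule = backoff_intervals(1, 50, 2.0, 100)
```

Real implementations usually add random jitter to each wait so that many clients do not retry in lockstep; the sketch omits it to keep the schedule deterministic.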

To change the default retry strategy for a single API call, simply provide a RetryConfig object to the call:

from mistralai import Mistral
from mistralai.utils import BackoffStrategy, RetryConfig
import os

with Mistral(
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    # Pass the per-call retry configuration through the retries keyword argument
    res = mistral.models.list(
        retries=RetryConfig("backoff", BackoffStrategy(1, 50, 1.1, 100), False))

    # Handle response
    print(res)

If you'd like to override the default retry strategy for all operations that support retries, you can use the retry_config optional parameter when initializing the SDK:

from mistralai import Mistral
from mistralai.utils import BackoffStrategy, RetryConfig
import os

with Mistral(
    retry_config=RetryConfig("backoff", BackoffStrategy(1, 50, 1.1, 100), False),
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.models.list()

    # Handle response
    print(res)

Handling errors in this SDK should largely match your expectations. All operations return a response object or raise an exception.

By default, an API error will raise a models.SDKError exception, which has the following properties:

| Property | Type | Description |
|---|---|---|
| .status_code | int | The HTTP status code |
| .message | str | The error message |
| .raw_response | httpx.Response | The raw HTTP response |
| .body | str | The response content |

When custom error responses are specified for an operation, the SDK may also raise their associated exceptions. You can refer to respective Errors tables in SDK docs for more details on possible exception types for each operation. For example, the list_async method may raise the following exceptions:

| Error Type | Status Code | Content Type |
|---|---|---|
| models.HTTPValidationError | 422 | application/json |
| models.SDKError | 4XX, 5XX | */* |

from mistralai import Mistral, models
import os

with Mistral(
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = None
    try:
        res = mistral.models.list()

        # Handle response
        print(res)

    except models.HTTPValidationError as e:
        # handle e.data: models.HTTPValidationErrorData
        raise
    except models.SDKError as e:
        # handle exception
        raise

You can override the default server globally by passing a server name to the server: str optional parameter when initializing the SDK client instance. The selected server will then be used as the default on the operations that use it. This table lists the names associated with the available servers:

| Name | Server | Description |
|---|---|---|
| eu | https://api.mistral.ai | EU Production server |

from mistralai import Mistral
import os

with Mistral(
    server="eu",
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.models.list()

    # Handle response
    print(res)

The default server can also be overridden globally by passing a URL to the server_url: str optional parameter when initializing the SDK client instance. For example:

from mistralai import Mistral
import os

with Mistral(
    server_url="https://api.mistral.ai",
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.models.list()

    # Handle response
    print(res)

The Python SDK makes API calls using the httpx HTTP library. In order to provide a convenient way to configure timeouts, cookies, proxies, custom headers, and other low-level configuration, you can initialize the SDK client with your own HTTP client instance.

Depending on whether you are using the sync or async version of the SDK, you can pass an instance of HttpClient or AsyncHttpClient respectively. Both are Protocols that ensure the client has the methods needed to make API calls.

This allows you to wrap the client with your own custom logic, such as adding custom headers, logging, or error handling, or you can just pass an instance of httpx.Client or httpx.AsyncClient directly.
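As background, a Protocol describes the methods an object must have without requiring inheritance, which is what makes wrapping possible. The sketch below shows the idea with the standard library only; `Sender`, `PlainSender`, and `LoggingSender` are hypothetical names for illustration and are much simpler than the SDK's HttpClient protocols.

```python
from typing import List, Protocol, runtime_checkable


@runtime_checkable
class Sender(Protocol):
    """Hypothetical protocol: anything with a matching `send` method qualifies."""

    def send(self, request: str) -> str: ...


class PlainSender:
    """Satisfies Sender structurally, without inheriting from it."""

    def send(self, request: str) -> str:
        return f"sent:{request}"


class LoggingSender:
    """Wraps another sender and records each request before delegating."""

    def __init__(self, inner: Sender) -> None:
        self.inner = inner
        self.log: List[str] = []

    def send(self, request: str) -> str:
        self.log.append(request)
        return self.inner.send(request)


wrapped = LoggingSender(PlainSender())
result = wrapped.send("GET /v1/models")
```

Because the wrapper exposes the same method as the object it wraps, code that accepts a `Sender` can use either one interchangeably.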

For example, you could specify a header for every request that this SDK makes as follows:

from mistralai import Mistral
import httpx

http_client = httpx.Client(headers={"x-custom-header": "someValue"})
s = Mistral(client=http_client)

Or you could wrap the client with your own custom logic:

from typing import Any, Optional, Union

from mistralai import Mistral
from mistralai.httpclient import AsyncHttpClient
import httpx

class CustomClient(AsyncHttpClient):
    client: AsyncHttpClient

    def __init__(self, client: AsyncHttpClient):
        self.client = client

    async def send(
        self,
        request: httpx.Request,
        *,
        stream: bool = False,
        auth: Union[
            httpx._types.AuthTypes, httpx._client.UseClientDefault, None
        ] = httpx.USE_CLIENT_DEFAULT,
        follow_redirects: Union[
            bool, httpx._client.UseClientDefault
        ] = httpx.USE_CLIENT_DEFAULT,
    ) -> httpx.Response:
        # Add a header to every outgoing request before delegating
        request.headers["Client-Level-Header"] = "added by client"

        return await self.client.send(
            request, stream=stream, auth=auth, follow_redirects=follow_redirects
        )

    def build_request(
        self,
        method: str,
        url: httpx._types.URLTypes,
        *,
        content: Optional[httpx._types.RequestContent] = None,
        data: Optional[httpx._types.RequestData] = None,
        files: Optional[httpx._types.RequestFiles] = None,
        json: Optional[Any] = None,
        params: Optional[httpx._types.QueryParamTypes] = None,
        headers: Optional[httpx._types.HeaderTypes] = None,
        cookies: Optional[httpx._types.CookieTypes] = None,
        timeout: Union[
            httpx._types.TimeoutTypes, httpx._client.UseClientDefault
        ] = httpx.USE_CLIENT_DEFAULT,
        extensions: Optional[httpx._types.RequestExtensions] = None,
    ) -> httpx.Request:
        return self.client.build_request(
            method,
            url,
            content=content,
            data=data,
            files=files,
            json=json,
            params=params,
            headers=headers,
            cookies=cookies,
            timeout=timeout,
            extensions=extensions,
        )

s = Mistral(async_client=CustomClient(httpx.AsyncClient()))

This SDK supports the following security scheme globally:

| Name | Type | Scheme | Environment Variable |
|---|---|---|---|
| api_key | http | HTTP Bearer | MISTRAL_API_KEY |
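Under the HTTP Bearer scheme listed above, the key travels in the Authorization header of each request. The sketch below builds such a request with the standard library without sending it; the helper name `build_authorized_request` and the placeholder key are hypothetical, and the SDK attaches this header for you.

```python
import urllib.request


def build_authorized_request(url: str, api_key: str) -> urllib.request.Request:
    """Attach the key as an HTTP Bearer token; nothing is sent over the network."""
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {api_key}"}
    )


# "example-key" is a placeholder, not a real credential.
req = build_authorized_request("https://api.mistral.ai", "example-key")
```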

To authenticate with the API the api_key parameter must be set when initializing the SDK client instance. For example:

from mistralai import Mistral
import os

with Mistral(
    api_key=os.getenv("MISTRAL_API_KEY", ""),
) as mistral:
    res = mistral.models.list()

    # Handle response
    print(res)

The Mistral class implements the context manager protocol and registers a finalizer function to close the underlying sync and async HTTPX clients it uses under the hood. This will close HTTP connections, release memory and free up other resources held by the SDK. In short-lived Python programs and notebooks that make a few SDK method calls, resource management may not be a concern. However, in longer-lived programs, it is beneficial to create a single SDK instance via a context manager and reuse it across the application.
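The combination of a context manager and a finalizer described above can be sketched with the standard library's `weakref.finalize`. `Connection` and `Client` are hypothetical classes for illustration; how the SDK wires this up internally may differ.

```python
import weakref


class Connection:
    """Hypothetical resource standing in for an HTTP client."""

    def __init__(self) -> None:
        self.open = True

    def close(self) -> None:
        self.open = False


class Client:
    """Hypothetical client: closes its connection on `with` exit or finalization."""

    def __init__(self) -> None:
        self.connection = Connection()
        # The finalizer runs at most once, whether it is called explicitly,
        # triggered by garbage collection, or run at interpreter exit.
        self._finalizer = weakref.finalize(self, self.connection.close)

    def __enter__(self) -> "Client":
        return self

    def __exit__(self, exc_type, exc, tb) -> None:
        self._finalizer()


with Client() as client:
    conn = client.connection
    was_open_inside = conn.open
```

The with statement gives deterministic cleanup, while the finalizer acts as a safety net for instances that are never used as a context manager.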

from mistralai import Mistral
import os

def main():
    with Mistral(
        api_key=os.getenv("MISTRAL_API_KEY", ""),
    ) as mistral:
        # Rest of application here...
        ...

# Or when using async:
async def amain():
    async with Mistral(
        api_key=os.getenv("MISTRAL_API_KEY", ""),
    ) as mistral:
        # Rest of application here...
        ...

You can set up your SDK to emit debug logs for SDK requests and responses.

You can pass your own logger class directly into your SDK.

from mistralai import Mistral
import logging

logging.basicConfig(level=logging.DEBUG)
s = Mistral(debug_logger=logging.getLogger("mistralai"))

You can also enable a default debug logger by setting an environment variable MISTRAL_DEBUG to true.
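If you prefer to control verbosity from your own application code, one option is to derive the logger level from the same environment variable. This is a minimal sketch: `make_debug_logger` is a hypothetical helper, and the SDK's own handling of MISTRAL_DEBUG may differ.

```python
import logging
import os


def make_debug_logger(env_var: str = "MISTRAL_DEBUG") -> logging.Logger:
    """Return a logger set to DEBUG when the variable is "true", else WARNING.

    Hypothetical helper for application code, not part of the SDK.
    """
    logger = logging.getLogger("mistralai")
    enabled = os.environ.get(env_var, "").strip().lower() == "true"
    logger.setLevel(logging.DEBUG if enabled else logging.WARNING)
    return logger
```

The returned logger could then be passed as the debug_logger argument shown earlier.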

Generally, the SDK will work well with most IDEs out of the box. However, when using PyCharm, you can enjoy much better integration with Pydantic by installing an additional plugin.

While we value open-source contributions to this SDK, this library is generated programmatically. Any manual changes added to internal files will be overwritten on the next generation. We look forward to hearing your feedback. Feel free to open a PR or an issue with a proof of concept and we'll do our best to include it in a future release.

Alternative AI tools for client-python

Similar Open Source Tools

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

matchlock

Matchlock is a CLI tool designed for running AI agents in isolated and disposable microVMs with network allowlisting and secret injection capabilities. It ensures that your secrets never enter the VM, providing a secure environment for AI agents to execute code without risking access to your machine. The tool offers features such as sealing the network to only allow traffic to specified hosts, injecting real credentials in-flight by the host, and providing a full Linux environment for the agent's operations while maintaining isolation from the host machine. Matchlock supports quick booting of Linux environments, sandbox lifecycle management, image building, and SDKs for Go and Python for embedding sandboxes in applications.

swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

raglite

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite. It offers configurable options for choosing LLM providers, database types, and rerankers. The toolkit is fast and permissive, utilizing lightweight dependencies and hardware acceleration. RAGLite provides features like PDF to Markdown conversion, multi-vector chunk embedding, optimal semantic chunking, hybrid search capabilities, adaptive retrieval, and improved output quality. It is extensible with a built-in Model Context Protocol server, customizable ChatGPT-like frontend, document conversion to Markdown, and evaluation tools. Users can configure RAGLite for various tasks like configuring, inserting documents, running RAG pipelines, computing query adapters, evaluating performance, running MCP servers, and serving frontends.

instructor

Instructor is a Python library that makes it a breeze to work with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

UnrealGenAISupport

The Unreal Engine Generative AI Support Plugin is a tool designed to integrate various cutting-edge LLM/GenAI models into Unreal Engine for game development. It aims to simplify the process of using AI models for game development tasks, such as controlling scene objects, generating blueprints, running Python scripts, and more. The plugin currently supports models from organizations like OpenAI, Anthropic, XAI, Google Gemini, Meta AI, Deepseek, and Baidu. It provides features like API support, model control, generative AI capabilities, UI generation, project file management, and more. The plugin is still under development but offers a promising solution for integrating AI models into game development workflows.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

llm.rb

llm.rb is a zero-dependency Ruby toolkit for Large Language Models that includes various providers like OpenAI, Gemini, Anthropic, xAI (Grok), zAI, DeepSeek, Ollama, and LlamaCpp. It provides full support for chat, streaming, tool calling, audio, images, files, and structured outputs. The toolkit offers features like unified API across providers, pluggable JSON adapters, tool calling, JSON Schema structured output, streaming responses, TTS, transcription, translation, image generation, files API, multimodal prompts, embeddings, models API, OpenAI vector stores, and more.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

For similar tasks

Bavarder

Bavarder is an AI-powered chit-chat tool designed for informal conversations about unimportant matters. Users can engage in light-hearted discussions with the AI, simulating casual chit-chat scenarios. The tool provides a platform for users to interact with AI in a fun and entertaining way, offering a unique experience of engaging with artificial intelligence in a conversational manner.

ChaKt-KMP

ChaKt is a multiplatform app built using Kotlin and Compose Multiplatform to demonstrate the use of Generative AI SDK for Kotlin Multiplatform to generate content using Google's Generative AI models. It features a simple chat based user interface and experience to interact with AI. The app supports mobile, desktop, and web platforms, and is built with Kotlin Multiplatform, Kotlin Coroutines, Compose Multiplatform, Generative AI SDK, Calf - File picker, and BuildKonfig. Users can contribute to the project by following the guidelines in CONTRIBUTING.md. The app is licensed under the MIT License.

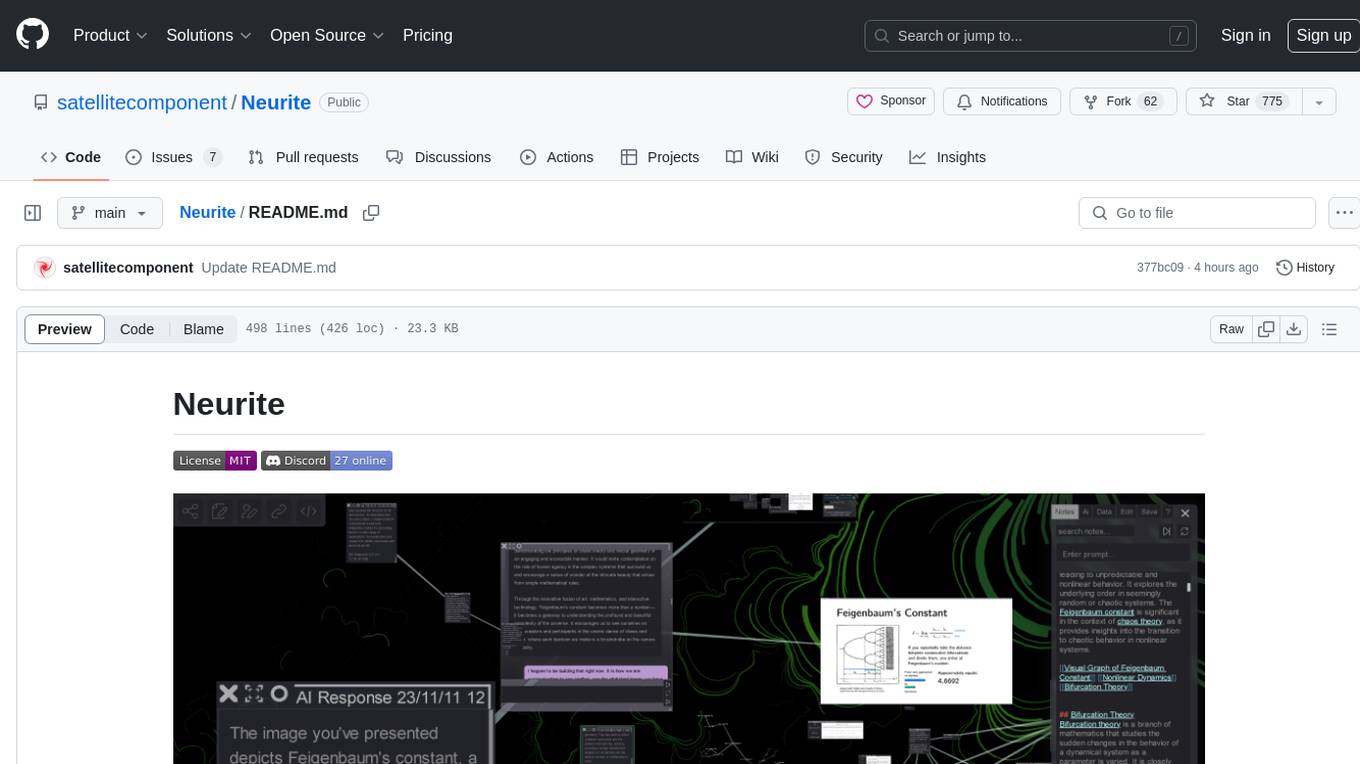

Neurite

Neurite is an innovative project that combines chaos theory and graph theory to create a digital interface that explores hidden patterns and connections for creative thinking. It offers a unique workspace blending fractals with mind mapping techniques, allowing users to navigate the Mandelbrot set in real-time. Nodes in Neurite represent various content types like text, images, videos, code, and AI agents, enabling users to create personalized microcosms of thoughts and inspirations. The tool supports synchronized knowledge management through bi-directional synchronization between mind-mapping and text-based hyperlinking. Neurite also features FractalGPT for modular conversation with AI, local AI capabilities for multi-agent chat networks, and a Neural API for executing code and sequencing animations. The project is actively developed with plans for deeper fractal zoom, advanced control over node placement, and experimental features.

weixin-dyh-ai

WeiXin-Dyh-AI is a backend management system that supports integrating WeChat subscription accounts with AI services. It currently supports integration with Ali AI, Moonshot, and Tencent Hyunyuan. Users can configure different AI models to simulate and interact with AI in multiple modes: text-based knowledge Q&A, text-to-image drawing, image description, text-to-voice conversion, enabling human-AI conversations on WeChat. The system allows hierarchical AI prompt settings at system, subscription account, and WeChat user levels. Users can configure AI model types, providers, and specific instances. The system also supports rules for allocating models and keys at different levels. It addresses limitations of WeChat's messaging system and offers features like text-based commands and voice support for interactions with AI.

Senparc.AI

Senparc.AI is an AI extension package for the Senparc ecosystem, focusing on LLM (Large Language Models) interaction. It provides modules for standard interfaces and basic functionalities, as well as interfaces using SemanticKernel for plug-and-play capabilities. The package also includes a library for supporting the 'PromptRange' ecosystem, compatible with various systems and frameworks. Users can configure different AI platforms and models, define AI interface parameters, and run AI functions easily. The package offers examples and commands for dialogue, embedding, and DallE drawing operations.

catai

CatAI is a tool that allows users to run GGUF models on their computer with a chat UI. It serves as a local AI assistant inspired by Node-Llama-Cpp and Llama.cpp. The tool provides features such as auto-detecting programming language, showing original messages by clicking on user icons, real-time text streaming, and fast model downloads. Users can interact with the tool through a CLI that supports commands for installing, listing, setting, serving, updating, and removing models. CatAI is cross-platform and supports Windows, Linux, and Mac. It utilizes node-llama-cpp and offers a simple API for asking model questions. Additionally, developers can integrate the tool with node-llama-cpp@beta for model management and chatting. The configuration can be edited via the web UI, and contributions to the project are welcome. The tool is licensed under Llama.cpp's license.

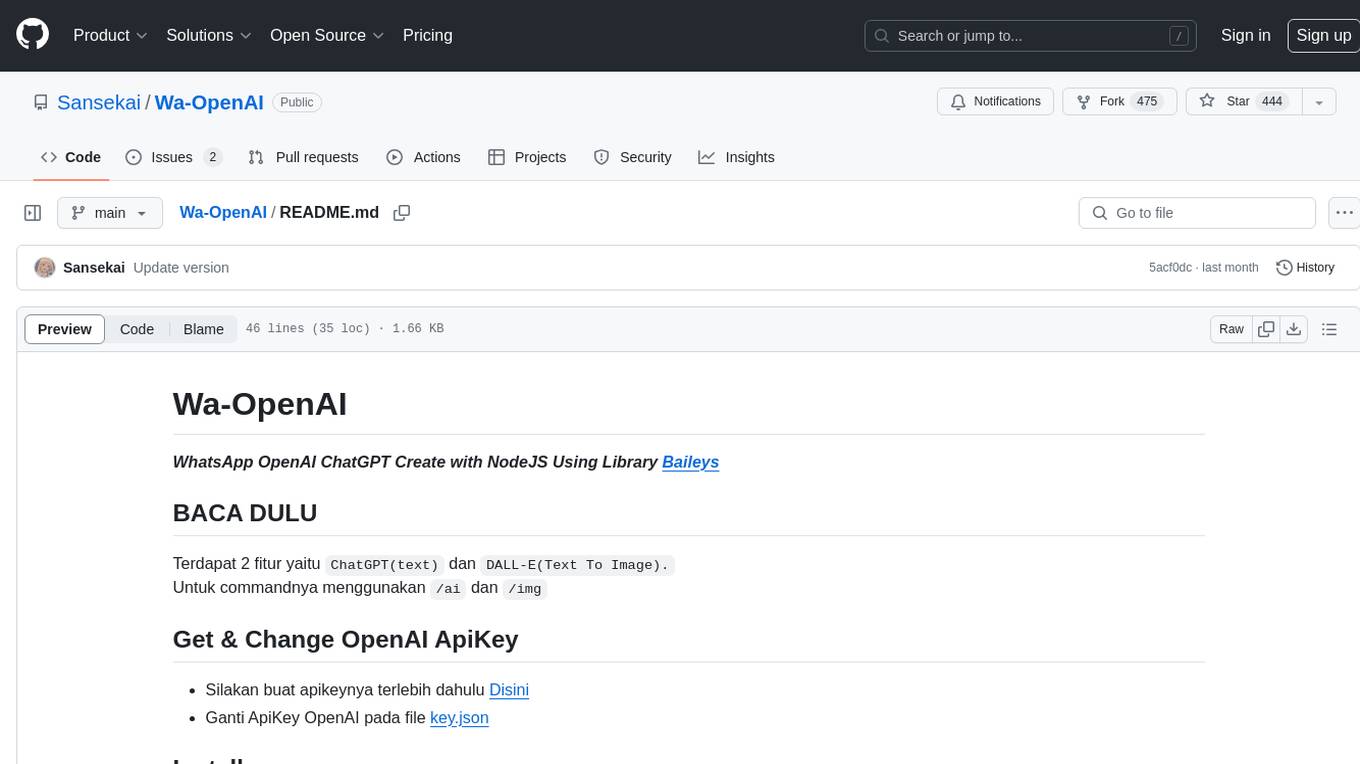

Wa-OpenAI

Wa-OpenAI is a WhatsApp chatbot powered by OpenAI's ChatGPT and DALL-E models, allowing users to interact with AI for text generation and image creation. Users can easily integrate the bot into their WhatsApp conversations using commands like '/ai' and '/img'. The tool requires setting up an OpenAI API key and can be installed on RDP/Windows or Termux environments. It provides a convenient way to leverage AI capabilities within WhatsApp chats, offering a seamless experience for generating text and images.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per user and per organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.