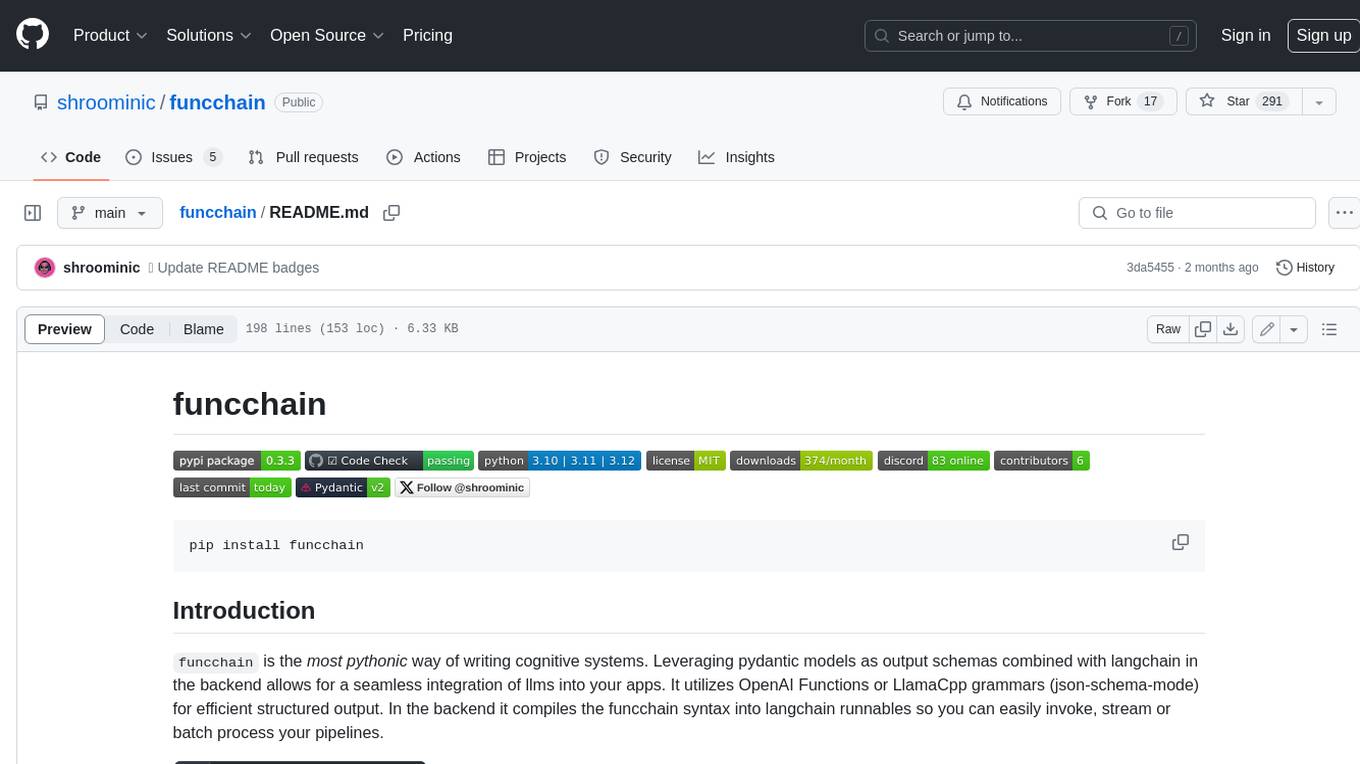

funcchain

⛓️ build cognitive systems, pythonic

Stars: 291

Funcchain is a Python library that allows you to easily write cognitive systems by leveraging Pydantic models as output schemas and LangChain in the backend. It provides a seamless integration of LLMs into your apps, utilizing OpenAI Functions or LlamaCpp grammars (json-schema-mode) for efficient structured output. Funcchain compiles the Funcchain syntax into LangChain runnables, enabling you to invoke, stream, or batch process your pipelines effortlessly.

README:

pip install funcchainfuncchain is the most pythonic way of writing cognitive systems. Leveraging pydantic models as output schemas combined with langchain in the backend allows for a seamless integration of llms into your apps.

It utilizes OpenAI Functions or LlamaCpp grammars (json-schema-mode) for efficient structured output.

In the backend it compiles the funcchain syntax into langchain runnables so you can easily invoke, stream or batch process your pipelines.

from funcchain import chain

from pydantic import BaseModel

# define your output shape

class Recipe(BaseModel):

ingredients: list[str]

instructions: list[str]

duration: int

# write prompts utilising all native python features

def generate_recipe(topic: str) -> Recipe:

"""

Generate a recipe for a given topic.

"""

return chain() # <- this is doing all the magic

# generate llm response

recipe = generate_recipe("christmas dinner")

# recipe is automatically converted as pydantic model

print(recipe.ingredients)from pydantic import BaseModel, Field

from funcchain import chain

# define nested models

class Item(BaseModel):

name: str = Field(description="Name of the item")

description: str = Field(description="Description of the item")

keywords: list[str] = Field(description="Keywords for the item")

class ShoppingList(BaseModel):

items: list[Item]

store: str = Field(description="The store to buy the items from")

class TodoList(BaseModel):

todos: list[Item]

urgency: int = Field(description="The urgency of all tasks (1-10)")

# support for union types

def extract_list(user_input: str) -> TodoList | ShoppingList:

"""

The user input is either a shopping List or a todo list.

"""

return chain()

# the model will choose the output type automatically

lst = extract_list(

input("Enter your list: ")

)

# custom handler based on type

match lst:

case ShoppingList(items=items, store=store):

print("Here is your Shopping List: ")

for item in items:

print(f"{item.name}: {item.description}")

print(f"You need to go to: {store}")

case TodoList(todos=todos, urgency=urgency):

print("Here is your Todo List: ")

for item in todos:

print(f"{item.name}: {item.description}")

print(f"Urgency: {urgency}")from funcchain import Image

from pydantic import BaseModel, Field

from funcchain import chain, settings

# set global llm using model identifiers (see MODELS.md)

settings.llm = "openai/gpt-4-vision-preview"

# everything defined is part of the prompt

class AnalysisResult(BaseModel):

"""The result of an image analysis."""

theme: str = Field(description="The theme of the image")

description: str = Field(description="A description of the image")

objects: list[str] = Field(description="A list of objects found in the image")

# easy use of images as input with structured output

def analyse_image(image: Image) -> AnalysisResult:

"""

Analyse the image and extract its

theme, description and objects.

"""

return chain()

result = analyse_image(Image.open("examples/assets/old_chinese_temple.jpg"))

print("Theme:", result.theme)

print("Description:", result.description)

for obj in result.objects:

print("Found this object:", obj)from pydantic import BaseModel, Field

from funcchain import chain, settings

# auto-download the model from huggingface

settings.llm = "ollama/openchat"

class SentimentAnalysis(BaseModel):

analysis: str

sentiment: bool = Field(description="True for Happy, False for Sad")

def analyze(text: str) -> SentimentAnalysis:

"""

Determines the sentiment of the text.

"""

return chain()

# generates using the local model

poem = analyze("I really like when my dog does a trick!")

# promised structured output (for local models!)

print(poem.analysis)- 🐍 pythonic

- 🔀 easy swap between openai or local models

- 🔄 dynamic output types (pydantic models, or primitives)

- 👁️ vision llm support

- 🧠 langchain_core as backend

- 📝 jinja templating for prompts

- 🏗️ reliable structured output

- 🔁 auto retry parsing

- 🔧 langsmith support

- 🔄 sync, async, streaming, parallel, fallbacks

- 📦 gguf download from huggingface

- ✅ type hints for all functions and mypy support

- 🗣️ chat router component

- 🧩 composable with langchain LCEL

- 🛠️ easy error handling

- 🚦 enums and literal support

- 📐 custom parsing types

Also highly recommend to try and run the examples in the ./examples folder.

You want to contribute? Thanks, that's great! For more information checkout the Contributing Guide. Please run the dev setup to get started:

git clone https://github.com/shroominic/funcchain.git && cd funcchain

./dev_setup.shFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for funcchain

Similar Open Source Tools

funcchain

Funcchain is a Python library that allows you to easily write cognitive systems by leveraging Pydantic models as output schemas and LangChain in the backend. It provides a seamless integration of LLMs into your apps, utilizing OpenAI Functions or LlamaCpp grammars (json-schema-mode) for efficient structured output. Funcchain compiles the Funcchain syntax into LangChain runnables, enabling you to invoke, stream, or batch process your pipelines effortlessly.

aioshelly

Aioshelly is an asynchronous library designed to control Shelly devices. It is currently under development and requires Python version 3.11 or higher, along with dependencies like bluetooth-data-tools, aiohttp, and orjson. The library provides examples for interacting with Gen1 devices using CoAP protocol and Gen2/Gen3 devices using RPC and WebSocket protocols. Users can easily connect to Shelly devices, retrieve status information, and perform various actions through the provided APIs. The repository also includes example scripts for quick testing and usage guidelines for contributors to maintain consistency with the Shelly API.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

continuous-eval

Open-Source Evaluation for LLM Applications. `continuous-eval` is an open-source package created for granular and holistic evaluation of GenAI application pipelines. It offers modularized evaluation, a comprehensive metric library covering various LLM use cases, the ability to leverage user feedback in evaluation, and synthetic dataset generation for testing pipelines. Users can define their own metrics by extending the Metric class. The tool allows running evaluation on a pipeline defined with modules and corresponding metrics. Additionally, it provides synthetic data generation capabilities to create user interaction data for evaluation or training purposes.

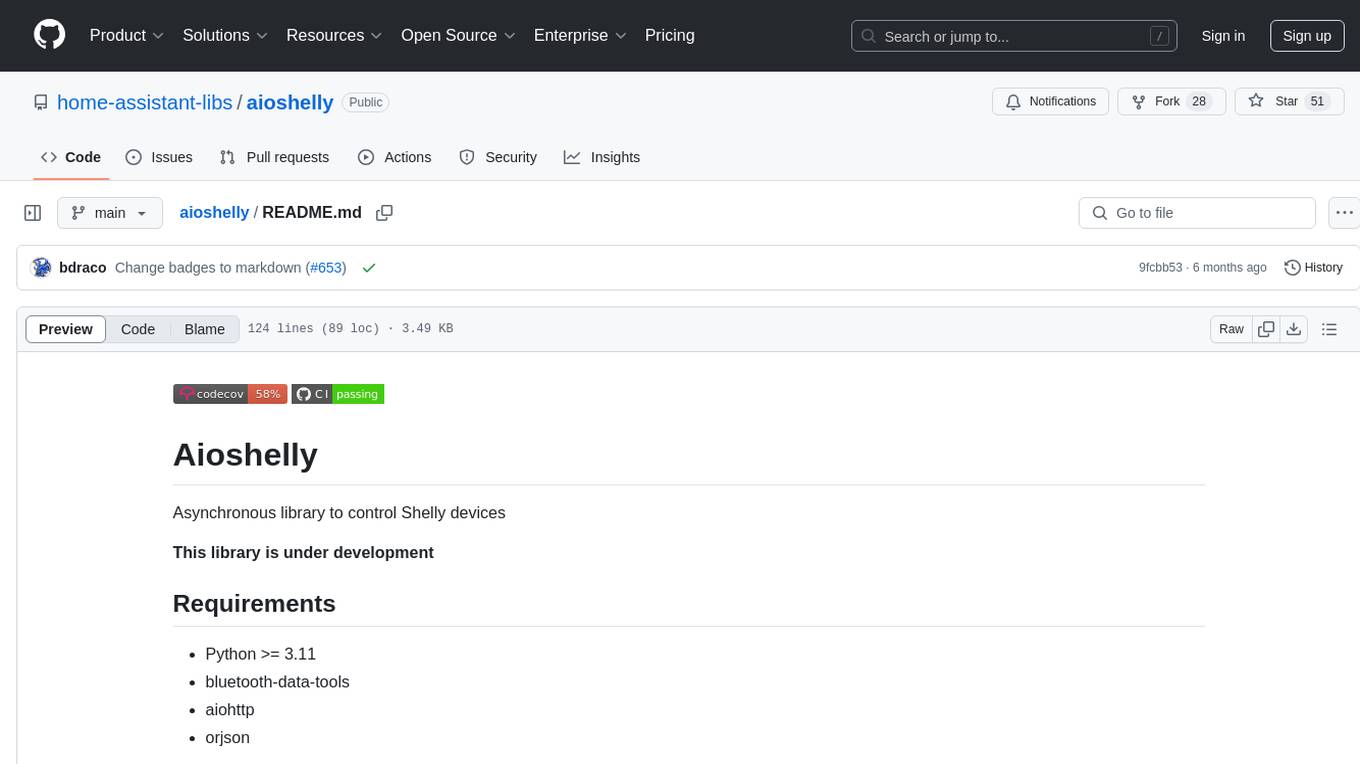

vecs

vecs is a Python client for managing and querying vector stores in PostgreSQL with the pgvector extension. It allows users to create collections of vectors with associated metadata, index the collections for fast search performance, and query the collections based on specified filters. The tool simplifies the process of working with vector data in a PostgreSQL database, making it easier to store, retrieve, and analyze vector information.

educhain

Educhain is a powerful Python package that leverages Generative AI to create engaging and personalized educational content. It enables users to generate multiple-choice questions, create lesson plans, and support various LLM models. Users can export questions to JSON, PDF, and CSV formats, customize prompt templates, and generate questions from text, PDF, URL files, youtube videos, and images. Educhain outperforms traditional methods in content generation speed and quality. It offers advanced configuration options and has a roadmap for future enhancements, including integration with popular Learning Management Systems and a mobile app for content generation on-the-go.

notte

Notte is a web browser designed specifically for LLM agents, providing a language-first web navigation experience without the need for DOM/HTML parsing. It transforms websites into structured, navigable maps described in natural language, enabling users to interact with the web using natural language commands. By simplifying browser complexity, Notte allows LLM policies to focus on conversational reasoning and planning, reducing token usage, costs, and latency. The tool supports various language model providers and offers a reinforcement learning style action space and controls for full navigation control.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

openvino.genai

The GenAI repository contains pipelines that implement image and text generation tasks. The implementation uses OpenVINO capabilities to optimize the pipelines. Each sample covers a family of models and suggests certain modifications to adapt the code to specific needs. It includes the following pipelines: 1. Benchmarking script for large language models 2. Text generation C++ samples that support most popular models like LLaMA 2 3. Stable Diffuison (with LoRA) C++ image generation pipeline 4. Latent Consistency Model (with LoRA) C++ image generation pipeline

redisvl

Redis Vector Library (RedisVL) is a Python client library for building AI applications on top of Redis. It provides a high-level interface for managing vector indexes, performing vector search, and integrating with popular embedding models and providers. RedisVL is designed to make it easy for developers to build and deploy AI applications that leverage the speed, flexibility, and reliability of Redis.

unitxt

Unitxt is a customizable library for textual data preparation and evaluation tailored to generative language models. It natively integrates with common libraries like HuggingFace and LM-eval-harness and deconstructs processing flows into modular components, enabling easy customization and sharing between practitioners. These components encompass model-specific formats, task prompts, and many other comprehensive dataset processing definitions. The Unitxt-Catalog centralizes these components, fostering collaboration and exploration in modern textual data workflows. Beyond being a tool, Unitxt is a community-driven platform, empowering users to build, share, and advance their pipelines collaboratively.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting, and observing with agents. Users can access Letta via REST API, Python, Typescript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

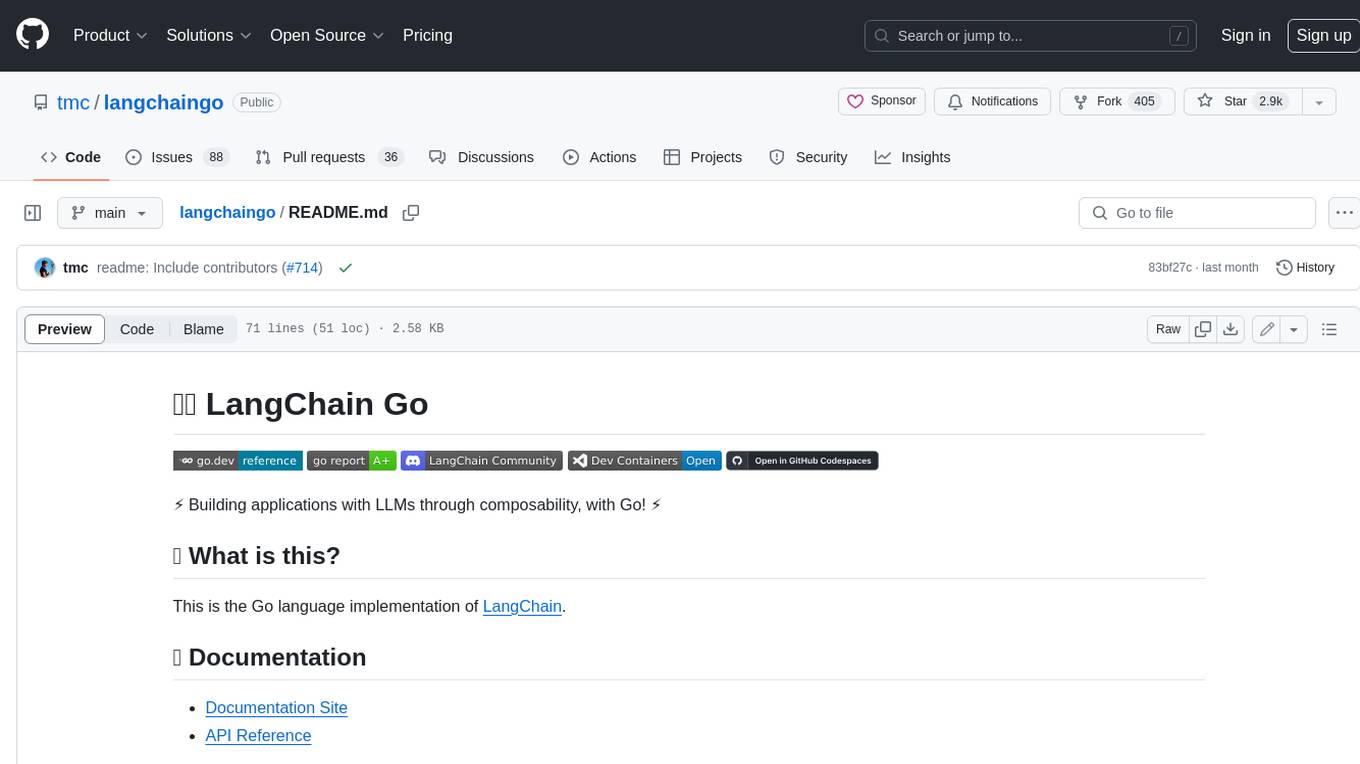

langchaingo

LangChain Go is a Go language implementation of LangChain, a framework for building applications with LLMs through composability. It provides a simple and easy-to-use API for interacting with LLMs, making it easy to add language-based features to your applications.

UniChat

UniChat is a pipeline tool for creating online and offline chat-bots in Unity. It leverages Unity.Sentis and text vector embedding technology to enable offline mode text content search based on vector databases. The tool includes a chain toolkit for embedding LLM and Agent in games, along with middleware components for Text to Speech, Speech to Text, and Sub-classifier functionalities. UniChat also offers a tool for invoking tools based on ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. The tool provides a comprehensive solution for designing flexible conversations in games while maintaining developer's ideas.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.