mcp-agent

Build effective agents using Model Context Protocol and simple workflow patterns

Stars: 7412

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

README:

Build effective agents with Model Context Protocol using simple, composable patterns.


mcp-agent is a simple, composable framework to build agents using Model Context Protocol.

Inspiration: Anthropic announced two foundational updates for AI application developers:

- Model Context Protocol - a standardized interface to let any software be accessible to AI assistants via MCP servers.

- Building Effective Agents - a seminal writeup on simple, composable patterns for building production-ready AI agents.

mcp-agent puts these two foundational pieces into an AI application framework:

- It handles the pesky business of managing the lifecycle of MCP server connections so you don't have to.

- It implements every pattern described in Building Effective Agents, and does so in a composable way, allowing you to chain these patterns together.

- Bonus: It implements OpenAI's Swarm pattern for multi-agent orchestration, but in a model-agnostic way.

Altogether, this is the simplest and easiest way to build robust agent applications. Much like MCP, this project is in early development. We welcome all kinds of contributions, feedback and your help in growing this to become a new standard.

We recommend using uv to manage your Python projects:

uv add "mcp-agent"

Alternatively:

pip install mcp-agent

[!TIP] The examples directory has several example applications to get started with. To run an example, clone this repo, then:

cd examples/basic/mcp_basic_agent # Or any other example
# Option A: secrets YAML
# cp mcp_agent.secrets.yaml.example mcp_agent.secrets.yaml && edit mcp_agent.secrets.yaml
# Option B: .env
cp .env.example .env && edit .env
uv run main.py

Here is a basic "finder" agent that uses the fetch and filesystem servers to look up a file, read a blog and write a tweet. Example link:

finder_agent.py

import asyncio
import os

from mcp_agent.app import MCPApp
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM

app = MCPApp(name="hello_world_agent")


async def example_usage():
    async with app.run() as mcp_agent_app:
        logger = mcp_agent_app.logger

        # This agent can read the filesystem or fetch URLs
        finder_agent = Agent(
            name="finder",
            instruction="""You can read local files or fetch URLs.
            Return the requested information when asked.""",
            server_names=["fetch", "filesystem"],  # MCP servers this Agent can use
        )

        async with finder_agent:
            # Automatically initializes the MCP servers and adds their tools for LLM use
            tools = await finder_agent.list_tools()
            logger.info(f"Tools available:", data=tools)

            # Attach an OpenAI LLM to the agent (defaults to GPT-4o)
            llm = await finder_agent.attach_llm(OpenAIAugmentedLLM)

            # This will perform a file lookup and read using the filesystem server
            result = await llm.generate_str(
                message="Show me what's in README.md verbatim"
            )
            logger.info(f"README.md contents: {result}")

            # Uses the fetch server to fetch the content from URL
            result = await llm.generate_str(
                message="Print the first two paragraphs from https://www.anthropic.com/research/building-effective-agents"
            )
            logger.info(f"Blog intro: {result}")

            # Multi-turn interactions by default
            result = await llm.generate_str("Summarize that in a 128-char tweet")
            logger.info(f"Tweet: {result}")


if __name__ == "__main__":
    asyncio.run(example_usage())

mcp_agent.config.yaml

execution_engine: asyncio
logger:
  transports: [console] # You can use [file, console] for both
  level: debug
  path: "logs/mcp-agent.jsonl" # Used for file transport
  # For dynamic log filenames:
  # path_settings:
  #   path_pattern: "logs/mcp-agent-{unique_id}.jsonl"
  #   unique_id: "timestamp" # Or "session_id"
  #   timestamp_format: "%Y%m%d_%H%M%S"

mcp:
  servers:
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]
    filesystem:
      command: "npx"
      args:
        [
          "-y",
          "@modelcontextprotocol/server-filesystem",
          "<add_your_directories>",
        ]

openai:
  # Secrets (API keys, etc.) are stored in an mcp_agent.secrets.yaml file which can be gitignored
  default_model: gpt-4o

- Why use mcp-agent?

- Example Applications

- Core Concepts

- Workflows Patterns

- Advanced

- Contributing

- Roadmap

- FAQs

There are too many AI frameworks out there already. But mcp-agent is the only one that is purpose-built for a shared protocol - MCP. It is also the most lightweight, and is closer to an agent pattern library than a framework.

As more services become MCP-aware, you can use mcp-agent to build robust and controllable AI agents that can leverage those services out-of-the-box.

Before we go into the core concepts of mcp-agent, let's show what you can build with it.

In short, you can build any kind of AI application with mcp-agent: multi-agent collaborative workflows, human-in-the-loop workflows, RAG pipelines and more.

You can integrate mcp-agent apps into MCP clients like Claude Desktop.

This app wraps an mcp-agent application inside an MCP server and exposes that server to Claude Desktop. The app exposes agents and workflows that Claude Desktop can invoke in service of the user's request.

https://github.com/user-attachments/assets/7807cffd-dba7-4f0c-9c70-9482fd7e0699

This demo shows a multi-agent evaluation task where each agent evaluates aspects of an input poem, and then an aggregator summarizes their findings into a final response.

Details: Starting from a user's request over text, the application:

- dynamically defines agents to do the job

- uses the appropriate workflow to orchestrate those agents (in this case the Parallel workflow)

Link to code: examples/basic/mcp_server_aggregator

[!NOTE] Huge thanks to Jerron Lim (@StreetLamb) for developing and contributing this example!

You can deploy mcp-agent apps using Streamlit.

This app can perform read and write actions on Gmail using text prompts, e.g. read, delete, and send emails, mark them as read/unread, etc. It uses an MCP server for Gmail.

https://github.com/user-attachments/assets/54899cac-de24-4102-bd7e-4b2022c956e3

Link to code: gmail-mcp-server

[!NOTE] Huge thanks to Jason Summer (@jasonsum) for developing and contributing this example!

This app uses a Qdrant vector database (via an MCP server) to do Q&A over a corpus of text.

https://github.com/user-attachments/assets/f4dcd227-cae9-4a59-aa9e-0eceeb4acaf4

Link to code: examples/usecases/streamlit_mcp_rag_agent

[!NOTE] Huge thanks to Jerron Lim (@StreetLamb) for developing and contributing this example!

Marimo is a reactive Python notebook that replaces Jupyter and Streamlit. Here's the "file finder" agent from Quickstart implemented in Marimo:

Link to code: examples/usecases/marimo_mcp_basic_agent

[!NOTE] Huge thanks to Akshay Agrawal (@akshayka) for developing and contributing this example!

You can write mcp-agent apps as Python scripts or Jupyter notebooks.

This example demonstrates a multi-agent setup for handling different customer service requests in an airline context using the Swarm workflow pattern. The agents can triage requests, handle flight modifications, cancellations, and lost baggage cases.

https://github.com/user-attachments/assets/b314d75d-7945-4de6-965b-7f21eb14a8bd

Link to code: examples/workflows/workflow_swarm

The following are the building blocks of the mcp-agent framework:

- MCPApp: global state and app configuration

- MCP server management: gen_client and MCPConnectionManager to easily connect to MCP servers.

- Agent: An Agent is an entity that has access to a set of MCP servers and exposes them to an LLM as tool calls. It has a name and purpose (instruction).

- AugmentedLLM: An LLM that is enhanced with tools provided from a collection of MCP servers. Every Workflow pattern described below is an AugmentedLLM itself, allowing you to compose and chain them together.

Everything in the framework is a derivative of these core capabilities.

mcp-agent provides implementations for every pattern in Anthropic’s Building Effective Agents, as well as the OpenAI Swarm pattern.

Each pattern is model-agnostic, and exposed as an AugmentedLLM, making everything very composable.

AugmentedLLM is an LLM that has access to MCP servers and functions via Agents.

LLM providers implement the AugmentedLLM interface to expose 3 functions:

- generate: Generate message(s) given a prompt, possibly over multiple iterations and making tool calls as needed.

- generate_str: Calls generate and returns the result as a string output.

- generate_structured: Uses Instructor to return the generated result as a Pydantic model.

Additionally, AugmentedLLM has memory to keep track of long- or short-term history.
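To make the three-method contract concrete, here is a minimal, self-contained sketch. It is not mcp-agent's implementation: `SketchAugmentedLLM`, `EchoLLM` and `Answer` are hypothetical names, the "model" simply echoes its prompt, and a stdlib dataclass stands in for the Pydantic model that the real `generate_structured` produces via Instructor.

```python
import asyncio
import json
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Any, Type, TypeVar

T = TypeVar("T")


class SketchAugmentedLLM(ABC):
    """Hypothetical stand-in for the AugmentedLLM contract (not the real class)."""

    def __init__(self) -> None:
        # Conversation memory: each turn is appended, which enables multi-turn use.
        self.history: list[dict[str, str]] = []

    @abstractmethod
    async def generate(self, message: str) -> list[str]:
        """Return response message(s); a real provider may loop over tool calls here."""

    async def generate_str(self, message: str) -> str:
        # Calls generate() and flattens the messages into one string.
        return "\n".join(await self.generate(message))

    async def generate_structured(self, message: str, response_model: Type[T]) -> T:
        # Parses JSON text into the given type (the real method uses Instructor + Pydantic).
        data: dict[str, Any] = json.loads(await self.generate_str(message))
        return response_model(**data)


class EchoLLM(SketchAugmentedLLM):
    """Toy provider: echoes the prompt back and records both turns in history."""

    async def generate(self, message: str) -> list[str]:
        self.history.append({"role": "user", "content": message})
        reply = message  # a real provider would call a model API here
        self.history.append({"role": "assistant", "content": reply})
        return [reply]


@dataclass
class Answer:
    title: str
    words: int


async def demo() -> tuple[str, Answer, int]:
    llm = EchoLLM()
    text = await llm.generate_str("hello")
    # EchoLLM echoes its prompt, so passing JSON text exercises the parsing path.
    answer = await llm.generate_structured('{"title": "README", "words": 42}', Answer)
    return text, answer, len(llm.history)


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Because `generate_str` and `generate_structured` are both built on `generate`, a provider in this sketch only has to implement one method.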

Example

from mcp_agent.agents.agent import Agent

from mcp_agent.workflows.llm.augmented_llm_anthropic import AnthropicAugmentedLLM

finder_agent = Agent(

name="finder",

instruction="You are an agent with filesystem + fetch access. Return the requested file or URL contents.",

server_names=["fetch", "filesystem"],

)

async with finder_agent:

llm = await finder_agent.attach_llm(AnthropicAugmentedLLM)

result = await llm.generate_str(

message="Print the first 2 paragraphs of https://www.anthropic.com/research/building-effective-agents",

# Can override model, tokens and other defaults

)

logger.info(f"Result: {result}")

# Multi-turn conversation

result = await llm.generate_str(

message="Summarize those paragraphs in a 128 character tweet",

)

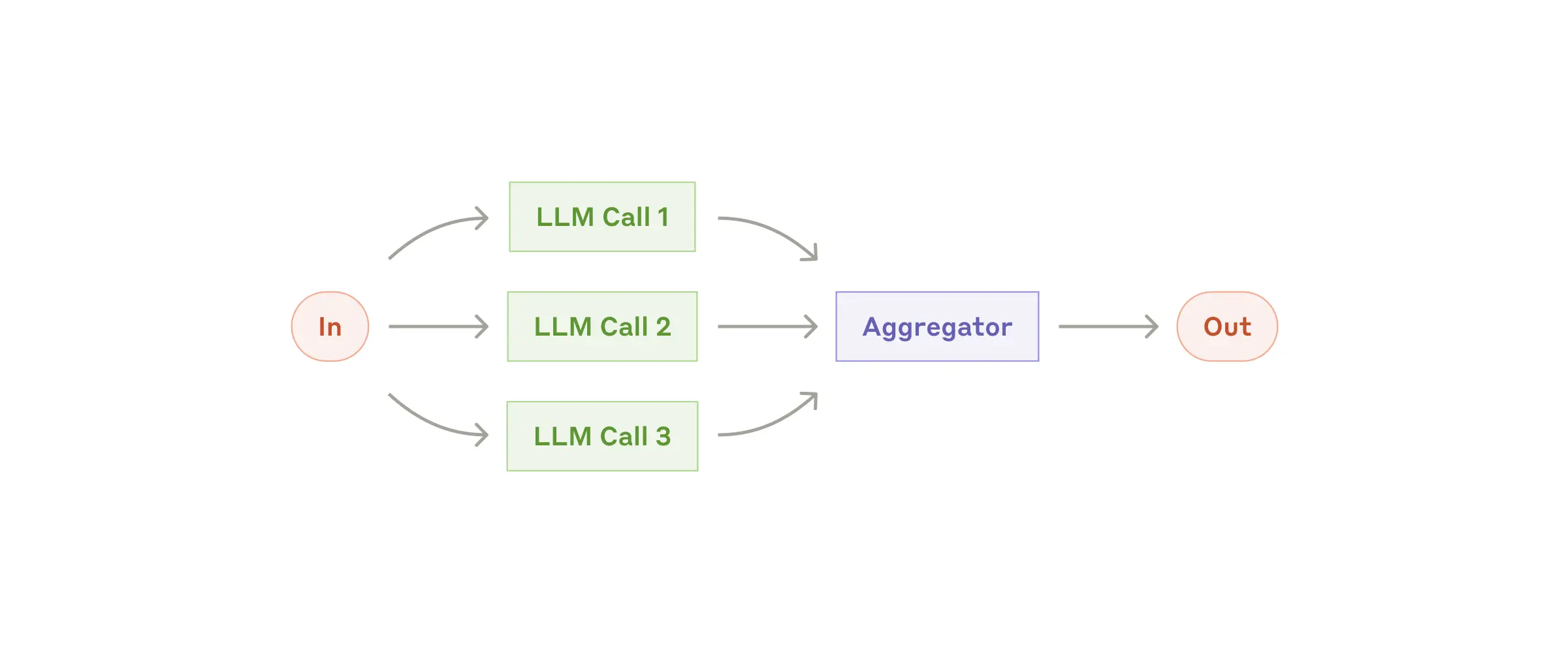

logger.info(f"Result: {result}")Fan-out tasks to multiple sub-agents and fan-in the results. Each subtask is an AugmentedLLM, as is the overall Parallel workflow, meaning each subtask can optionally be a more complex workflow itself.
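The fan-out/fan-in shape can be illustrated without any LLM. In this plain-asyncio sketch, each hypothetical sub-agent is just an async function, `asyncio.gather` runs them concurrently on the same input, and a fan-in function merges their outputs. `ParallelLLM` plays the equivalent role with AugmentedLLMs; the function names here are illustrative only.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical sub-agent type: takes the input text, returns feedback text.
SubAgent = Callable[[str], Awaitable[str]]


async def proofreader(text: str) -> str:
    return f"proofreader: {len(text.split())} words checked"


async def fact_checker(text: str) -> str:
    return "fact_checker: no issues"


async def style_enforcer(text: str) -> str:
    return "style_enforcer: ok"


async def fan_in(results: list[str]) -> str:
    # Stand-in for the aggregator ("grader") agent: combine all feedback.
    return " | ".join(results)


async def parallel(text: str, fan_out: list[SubAgent]) -> str:
    # Fan out: run every sub-agent concurrently on the same input.
    results = await asyncio.gather(*(agent(text) for agent in fan_out))
    # Fan in: gather preserves order, so results line up with fan_out.
    return await fan_in(list(results))


if __name__ == "__main__":
    report = asyncio.run(
        parallel("Once upon a time", [proofreader, fact_checker, style_enforcer])
    )
    print(report)
```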


Example

proofreader = Agent(name="proofreader", instruction="Review grammar...")

fact_checker = Agent(name="fact_checker", instruction="Check factual consistency...")

style_enforcer = Agent(name="style_enforcer", instruction="Enforce style guidelines...")

grader = Agent(name="grader", instruction="Combine feedback into a structured report.")

parallel = ParallelLLM(

fan_in_agent=grader,

fan_out_agents=[proofreader, fact_checker, style_enforcer],

llm_factory=OpenAIAugmentedLLM,

)

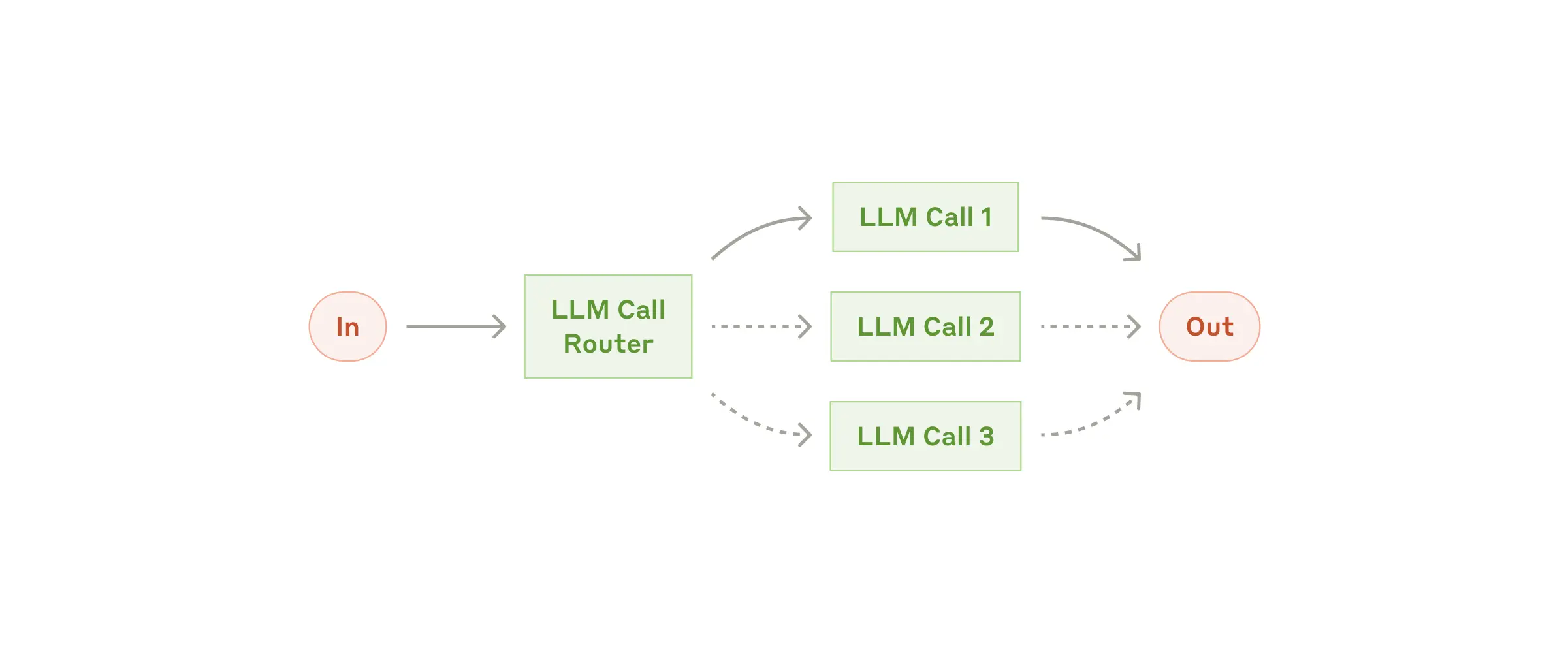

result = await parallel.generate_str("Student short story submission: ...", RequestParams(model="gpt4-o"))Given an input, route to the top_k most relevant categories. A category can be an Agent, an MCP server or a regular function.

mcp-agent provides several router implementations, including:

- EmbeddingRouter: uses embedding models for classification

- LLMRouter: uses LLMs for classification
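As a rough illustration of embedding-based routing, the sketch below scores each category by cosine similarity between a request vector and a category vector and returns the `top_k` best. The three-dimensional vectors are made-up toy values standing in for real embeddings, and `route` is a hypothetical helper, not `EmbeddingRouter`'s API. An LLM-based router would instead ask a model to pick the categories.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def route(request_vec: list[float], categories: dict[str, list[float]], top_k: int = 1) -> list[tuple[str, float]]:
    # Score every category (agent, server or function) and keep the best top_k.
    scored = [(name, cosine(request_vec, vec)) for name, vec in categories.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Toy "embeddings" (hypothetical values, not produced by a real model).
CATEGORIES = {
    "finder": [0.9, 0.1, 0.0],
    "writer": [0.1, 0.9, 0.0],
    "print_hello_world": [0.0, 0.0, 1.0],
}

if __name__ == "__main__":
    # A request vector that points mostly in the "finder" direction.
    print(route([0.8, 0.2, 0.1], CATEGORIES, top_k=2))
```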


Example

# Assumes an async context inside app.run(); import paths may vary between mcp-agent versions
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM
from mcp_agent.workflows.router.router_llm import LLMRouter


def print_hello_world():
    print("Hello, world!")


finder_agent = Agent(name="finder", server_names=["fetch", "filesystem"])
writer_agent = Agent(name="writer", server_names=["filesystem"])

llm = OpenAIAugmentedLLM()
router = LLMRouter(
    llm=llm,
    agents=[finder_agent, writer_agent],
    functions=[print_hello_world],
)

results = await router.route(  # Also available: route_to_agent, route_to_server
    request="Find and print the contents of README.md verbatim",
    top_k=1,
)

chosen_agent = results[0].result
async with chosen_agent:
    ...

A close sibling of Router, the Intent Classifier pattern identifies the top_k Intents that most closely match a given input.

Just like a Router, mcp-agent provides both an embedding and LLM-based intent classifier.

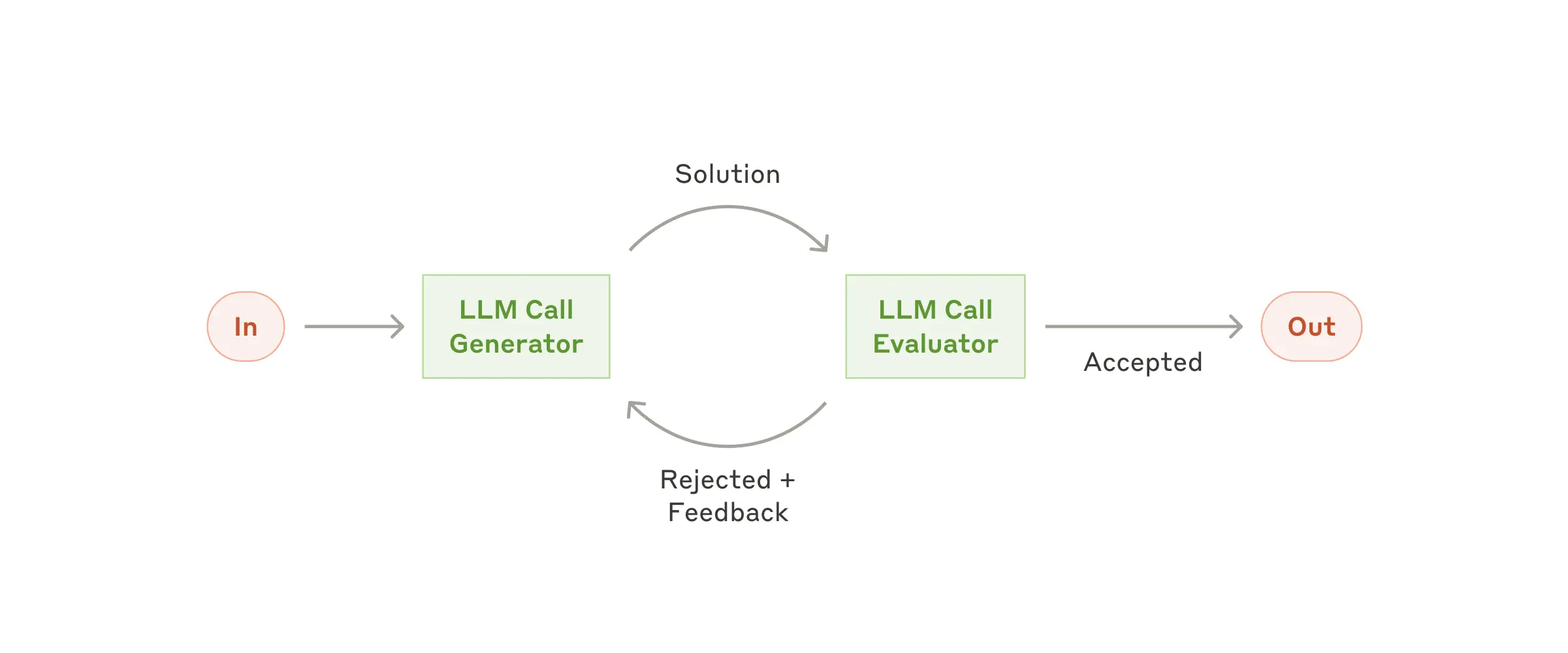

One LLM (the “optimizer”) refines a response while another (the “evaluator”) critiques it, and the loop repeats until the response meets a quality criterion.
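The control flow behind this pattern is a bounded loop: generate, rate, feed the critique back, and stop once the rating reaches the bar. The sketch below is a plain-Python illustration under stated assumptions: `Rating` is a hypothetical ordered enum (only the `EXCELLENT` level appears in the example below), the optimizer and evaluator are deterministic stubs instead of LLM calls, and `max_refinements` is a hypothetical safety cap.

```python
from enum import IntEnum
from typing import Callable


class Rating(IntEnum):
    # Hypothetical ordered scale; of these levels only EXCELLENT appears in the source.
    POOR = 0
    FAIR = 1
    GOOD = 2
    EXCELLENT = 3


def evaluator_optimizer(
    task: str,
    optimize: Callable[[str, str, str], str],
    evaluate: Callable[[str], tuple[Rating, str]],
    min_rating: Rating = Rating.EXCELLENT,
    max_refinements: int = 5,
) -> tuple[str, Rating, int]:
    draft, critique, rating = "", "", Rating.POOR
    for attempt in range(1, max_refinements + 1):
        draft = optimize(task, draft, critique)  # optimizer writes or refines a draft
        rating, critique = evaluate(draft)  # evaluator rates it and explains why
        if rating >= min_rating:  # quality bar met: stop iterating
            return draft, rating, attempt
    return draft, rating, max_refinements  # safety cap reached: return best effort


def optimize(task: str, previous: str, critique: str) -> str:
    # Stub optimizer: each pass appends one "+" to the previous draft (critique ignored).
    return (previous or task) + "+"


def evaluate(draft: str) -> tuple[Rating, str]:
    # Stub evaluator: the rating grows with the number of refinement passes.
    score = min(draft.count("+"), int(Rating.EXCELLENT))
    return Rating(score), f"needs {int(Rating.EXCELLENT) - score} more passes"


if __name__ == "__main__":
    print(evaluator_optimizer("cover letter", optimize, evaluate))
```

A cap like `max_refinements` keeps the loop from running forever if the evaluator never awards the minimum rating.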


Example

optimizer = Agent(name="cover_letter_writer", server_names=["fetch"], instruction="Generate a cover letter ...")

evaluator = Agent(name="critiquer", instruction="Evaluate clarity, specificity, relevance...")

eo_llm = EvaluatorOptimizerLLM(

optimizer=optimizer,

evaluator=evaluator,

llm_factory=OpenAIAugmentedLLM,

min_rating=QualityRating.EXCELLENT, # Keep iterating until the minimum quality bar is reached

)

result = await eo_llm.generate_str("Write a job cover letter for an AI framework developer role at LastMile AI.")

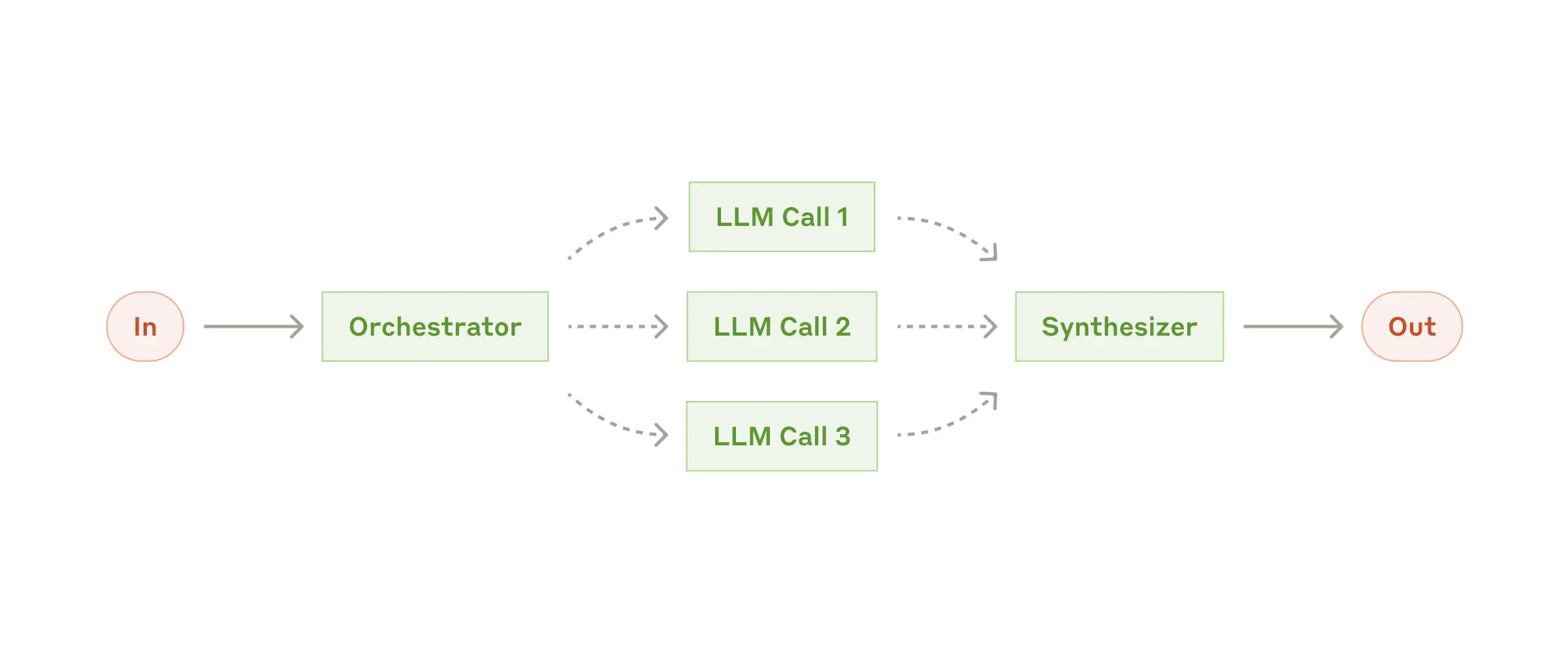

print("Final refined cover letter:", result)A higher-level LLM generates a plan, then assigns them to sub-agents, and synthesizes the results. The Orchestrator workflow automatically parallelizes steps that can be done in parallel, and blocks on dependencies.
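How can steps run in parallel yet still block on dependencies? One common approach, sketched here in plain asyncio, is to group plan steps into layers: every step whose dependencies are done runs concurrently, and the next layer starts only after the current one finishes. This is an illustrative assumption, not necessarily how mcp-agent's `Orchestrator` schedules work; the `PLAN` dict and `run_step` helper are hypothetical.

```python
import asyncio


async def run_step(name: str) -> str:
    # Hypothetical stand-in for dispatching one plan step to a sub-agent.
    await asyncio.sleep(0)
    return f"{name} done"


async def execute_plan(plan: dict[str, set[str]]) -> list[list[str]]:
    """Run a plan that maps each step to the set of steps it depends on."""
    done: set[str] = set()
    layers: list[list[str]] = []
    while len(done) < len(plan):
        # Every pending step whose dependencies are all done can run now.
        ready = sorted(s for s, deps in plan.items() if s not in done and deps <= done)
        if not ready:
            raise ValueError("plan has a cycle or an unknown dependency")
        # Independent steps run concurrently; the next layer waits for this one.
        await asyncio.gather(*(run_step(s) for s in ready))
        done.update(ready)
        layers.append(ready)
    return layers


PLAN = {
    "load_story": set(),
    "proofread": {"load_story"},
    "fact_check": {"load_story"},
    "style_check": {"load_story"},
    "write_report": {"proofread", "fact_check", "style_check"},
}

if __name__ == "__main__":
    print(asyncio.run(execute_plan(PLAN)))
```

Layering is the simplest correct scheme; a finer-grained scheduler could start a step the moment its own dependencies finish rather than waiting for the whole layer.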


Example

# Assumes an async context inside app.run(); import paths may vary between mcp-agent versions
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm import RequestParams
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM
from mcp_agent.workflows.orchestrator.orchestrator import Orchestrator

finder_agent = Agent(name="finder", server_names=["fetch", "filesystem"])
writer_agent = Agent(name="writer", server_names=["filesystem"])
proofreader = Agent(name="proofreader", ...)
fact_checker = Agent(name="fact_checker", ...)
style_enforcer = Agent(name="style_enforcer", instruction="Use APA style guide from ...", server_names=["fetch"])

orchestrator = Orchestrator(
    llm_factory=OpenAIAugmentedLLM,  # OpenAI factory, to match the gpt-4o model requested below
    available_agents=[finder_agent, writer_agent, proofreader, fact_checker, style_enforcer],
)

task = "Load short_story.md, evaluate it, produce a graded_report.md with multiple feedback aspects."
result = await orchestrator.generate_str(task, RequestParams(model="gpt-4o"))
print(result)

OpenAI has an experimental multi-agent pattern called Swarm, for which mcp-agent provides a model-agnostic reference implementation.

The mcp-agent Swarm pattern works seamlessly with MCP servers, and is exposed as an AugmentedLLM, allowing for composability with other patterns above.
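The core Swarm idea is the handoff: an agent can return another agent, and control passes to it (in the example below, functions such as `transfer_to_triage` play this role). The sketch models only that handoff loop, with hypothetical rule-based agents and no LLM or MCP involved; `SwarmSketchAgent`, `run_swarm` and the keyword matching are illustrative assumptions, not the `SwarmAgent` API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SwarmSketchAgent:
    """Hypothetical agent: `decide` returns the agent to hand off to, or None."""

    name: str
    reply: str = ""
    decide: Callable[[str], Optional[SwarmSketchAgent]] = lambda _msg: None


def run_swarm(start: SwarmSketchAgent, message: str, max_turns: int = 5) -> tuple[str, list[str]]:
    agent, path = start, [start.name]
    for _ in range(max_turns):
        nxt = agent.decide(message)
        if nxt is None:  # this agent answers the request itself
            return agent.reply, path
        agent = nxt  # handoff: the next agent takes over
        path.append(agent.name)
    raise RuntimeError("too many handoffs")


lost_baggage = SwarmSketchAgent("lost_baggage", reply="Starting a baggage search.")
flight_mod = SwarmSketchAgent("flight_mod", reply="Let's look at changing your flight.")
# The triage agent only routes; it never answers directly.
triage = SwarmSketchAgent(
    "triage",
    decide=lambda msg: lost_baggage if "bag" in msg.lower() else flight_mod,
)

if __name__ == "__main__":
    print(run_swarm(triage, "My bag was not delivered!"))
```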


Example

# Assumes an async context inside app.run(); import paths may vary between mcp-agent versions
from mcp_agent.workflows.swarm.swarm import SwarmAgent
from mcp_agent.workflows.swarm.swarm_anthropic import AnthropicSwarm

triage_agent = SwarmAgent(...)
flight_mod_agent = SwarmAgent(...)
lost_baggage_agent = SwarmAgent(...)

# The triage agent decides whether to route to flight_mod_agent or lost_baggage_agent
swarm = AnthropicSwarm(agent=triage_agent, context_variables={...})

test_input = "My bag was not delivered!"
result = await swarm.generate_str(test_input)
print("Result:", result)

An example of composability is using an Evaluator-Optimizer workflow as the planner LLM inside the Orchestrator workflow. Generating a high-quality plan to execute is important for robust behavior, and an evaluator-optimizer can help ensure that.

Doing so is seamless in mcp-agent, because each workflow is implemented as an AugmentedLLM.
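Why does sharing one interface make composition seamless? Any component that only needs "something with `generate_str`" will accept a whole workflow just as readily as a single LLM. The plain-Python sketch below shows this with a `typing.Protocol`; `TextGenerator`, `UpperLLM`, `Refine` and `PlanConsumer` are hypothetical names, and the string transformations stand in for real model calls.

```python
import asyncio
from typing import Protocol


class TextGenerator(Protocol):
    async def generate_str(self, message: str) -> str: ...


class UpperLLM:
    """Leaf 'LLM' stub: upper-cases its input."""

    async def generate_str(self, message: str) -> str:
        return message.upper()


class Refine:
    """A 'workflow' that wraps any TextGenerator and is itself a TextGenerator."""

    def __init__(self, inner: TextGenerator, suffix: str) -> None:
        self.inner = inner
        self.suffix = suffix

    async def generate_str(self, message: str) -> str:
        draft = await self.inner.generate_str(message)
        return draft + self.suffix


class PlanConsumer:
    """Like an orchestrator: it only needs a TextGenerator to act as its planner."""

    def __init__(self, planner: TextGenerator) -> None:
        self.planner = planner

    async def plan(self, objective: str) -> str:
        return await self.planner.generate_str(f"plan: {objective}")


async def demo() -> str:
    # Nest a workflow inside a workflow, then plug the result in as a planner.
    nested = Refine(Refine(UpperLLM(), " [checked]"), " [approved]")
    return await PlanConsumer(nested).plan("grade story")


if __name__ == "__main__":
    print(asyncio.run(demo()))
```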

Example

optimizer = Agent(name="plan_optimizer", server_names=[...], instruction="Generate a plan given an objective ...")
evaluator = Agent(name="plan_evaluator", instruction="Evaluate logic, ordering and precision of plan...")

planner_llm = EvaluatorOptimizerLLM(
    optimizer=optimizer,
    evaluator=evaluator,
    llm_factory=OpenAIAugmentedLLM,
    min_rating=QualityRating.EXCELLENT,
)

orchestrator = Orchestrator(
    llm_factory=AnthropicAugmentedLLM,
    available_agents=[finder_agent, writer_agent, proofreader, fact_checker, style_enforcer],
    planner=planner_llm,  # It's that simple
)

...

Signaling: The framework can pause/resume tasks. The agent or LLM might “signal” that it needs user input, so the workflow awaits. A developer may also signal during a workflow to seek approval or review before continuing.

Human Input: If an Agent has a human_input_callback, the LLM can call a __human_input__ tool to request user input mid-workflow.
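To illustrate how a request for user input can surface as an ordinary tool call, here is a hypothetical dispatcher: when the model "calls" the `__human_input__` tool, the dispatcher awaits a callback instead of forwarding the call to an MCP server. The tool name comes from the text above; `dispatch_tool_call`, the `{"prompt": ...}` argument shape and `scripted_callback` are assumptions made for illustration. A console-based callback would read from stdin rather than return a scripted answer.

```python
import asyncio
from typing import Awaitable, Callable, Optional

HumanInputCallback = Callable[[str], Awaitable[str]]
Tool = Callable[[dict], Awaitable[str]]


async def dispatch_tool_call(
    name: str,
    arguments: dict,
    human_input_callback: Optional[HumanInputCallback],
    tools: dict[str, Tool],
) -> str:
    if name == "__human_input__":
        if human_input_callback is None:
            raise RuntimeError("agent has no human_input_callback")
        # The workflow step pauses here until the human responds.
        return await human_input_callback(arguments.get("prompt", ""))
    # Any other name is treated as a regular (e.g. MCP-provided) tool.
    return await tools[name](arguments)


async def scripted_callback(prompt: str) -> str:
    # Stand-in for console input; a real handler would prompt the user.
    return "Yes, approve the refund."


async def fetch_tool(arguments: dict) -> str:
    return f"fetched {arguments['url']}"


async def demo() -> tuple[str, str]:
    tools = {"fetch": fetch_tool}
    answer = await dispatch_tool_call(
        "__human_input__", {"prompt": "Approve refund?"}, scripted_callback, tools
    )
    fetched = await dispatch_tool_call(
        "fetch", {"url": "https://example.com"}, scripted_callback, tools
    )
    return answer, fetched


if __name__ == "__main__":
    print(asyncio.run(demo()))
```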

Example

The Swarm example shows this in action.

from mcp_agent.human_input.console_handler import console_input_callback

lost_baggage = SwarmAgent(
    name="Lost baggage traversal",
    instruction=lambda context_variables: f"""
{
    FLY_AIR_AGENT_PROMPT.format(
        customer_context=context_variables.get("customer_context", "None"),
        flight_context=context_variables.get("flight_context", "None"),
    )
}\n Lost baggage policy: policies/lost_baggage_policy.md""",
    functions=[
        escalate_to_agent,
        initiate_baggage_search,
        transfer_to_triage,
        case_resolved,
    ],
    server_names=["fetch", "filesystem"],
    human_input_callback=console_input_callback,  # Request input from the console
)

Create an mcp_agent.config.yaml and define secrets via either a gitignored mcp_agent.secrets.yaml or a local .env. In production, prefer MCP_APP_SETTINGS_PRELOAD to avoid writing plaintext secrets to disk.

mcp-agent makes it trivial to connect to MCP servers. Create an mcp_agent.config.yaml to define server configuration under the mcp section:

mcp:
  servers:
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]
      description: "Fetch content at URLs from the world wide web"

Manage the lifecycle of an MCP server within an async context manager:

from mcp_agent.mcp.gen_client import gen_client

async with gen_client("fetch") as fetch_client:
    # Fetch server is initialized and ready to use
    result = await fetch_client.list_tools()

# Fetch server is automatically disconnected/shutdown

The gen_client function makes it easy to spin up connections to MCP servers.
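The guarantee that the server is shut down when the `async with` block exits, even if the body raises, is exactly what `contextlib.asynccontextmanager` provides. The sketch below reproduces that lifecycle with a fake in-memory client; `FakeClient` and `gen_fake_client` are hypothetical and do not start a real MCP server.

```python
import asyncio
from contextlib import asynccontextmanager
from typing import AsyncIterator


class FakeClient:
    def __init__(self, server_name: str) -> None:
        self.server_name = server_name
        self.connected = False

    async def list_tools(self) -> list[str]:
        if not self.connected:
            raise RuntimeError("not connected")
        return [f"{self.server_name}.fetch"]


@asynccontextmanager
async def gen_fake_client(server_name: str) -> AsyncIterator[FakeClient]:
    client = FakeClient(server_name)
    client.connected = True  # start/initialize the server
    try:
        yield client  # the body of the `async with` block runs here
    finally:
        client.connected = False  # always shut down, even if the body raised


async def demo() -> tuple[list[str], bool]:
    async with gen_fake_client("fetch") as client:
        tools = await client.list_tools()
    return tools, client.connected


if __name__ == "__main__":
    print(asyncio.run(demo()))
```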

In many cases, you want an MCP server to stay online for persistent use (e.g. in a multi-step tool use workflow). For persistent connections, use:

- connect and disconnect

from mcp_agent.mcp.gen_client import connect, disconnect

fetch_client = None
try:
    # connect/disconnect are coroutines, so they must be awaited
    fetch_client = await connect("fetch")
    result = await fetch_client.list_tools()
finally:
    await disconnect("fetch")

- MCPConnectionManager: For even more fine-grained control over server connections, you can use the MCPConnectionManager.

Example

from mcp_agent.context import get_current_context
from mcp_agent.mcp.mcp_connection_manager import MCPConnectionManager

context = get_current_context()
connection_manager = MCPConnectionManager(context.server_registry)

async with connection_manager:
    fetch_client = await connection_manager.get_server("fetch")  # Initializes fetch server
    result = await fetch_client.list_tools()
    fetch_client2 = await connection_manager.get_server("fetch")  # Reuses same server connection

# All servers managed by connection manager are automatically disconnected/shut down

MCPAggregator acts as a "server-of-servers".

It provides a single MCP server interface for interacting with multiple MCP servers.

This allows you to expose tools from multiple servers to LLM applications.
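The tool-name resolution described in the example's comments (a namespaced name such as `fetch-fetch` targets one server; a bare name goes to the first server that exposes it) can be sketched as a lookup table. This is a hypothetical in-memory illustration: `ToolIndex` is not part of mcp-agent, and the `filesystem` tool list is made up apart from `read_file`, which the example calls.

```python
class ToolIndex:
    """Hypothetical server-of-servers tool lookup (illustration only)."""

    def __init__(self, servers: dict[str, list[str]]) -> None:
        # dicts preserve insertion order, so this order decides "first server wins".
        self.servers = servers

    def list_tools(self) -> list[str]:
        # Combined, namespaced list: "<server>-<tool>".
        return [f"{server}-{tool}" for server, tools in self.servers.items() for tool in tools]

    def resolve(self, name: str) -> tuple[str, str]:
        # Namespaced form: split on the first "-" (assumes server names contain no "-").
        server, sep, tool = name.partition("-")
        if sep and tool in self.servers.get(server, []):
            return server, tool
        # Bare name: the first server exposing the tool wins.
        for server, tools in self.servers.items():
            if name in tools:
                return server, name
        raise KeyError(f"unknown tool: {name}")


if __name__ == "__main__":
    index = ToolIndex({"fetch": ["fetch"], "filesystem": ["read_file", "write_file"]})
    print(index.resolve("fetch-fetch"), index.resolve("read_file"))
```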

Example

from mcp_agent.mcp.mcp_aggregator import MCPAggregator

aggregator = await MCPAggregator.create(server_names=["fetch", "filesystem"])

async with aggregator:
    # combined list of tools exposed by 'fetch' and 'filesystem' servers
    tools = await aggregator.list_tools()

    # namespacing -- invokes the 'fetch' server to call the 'fetch' tool
    fetch_result = await aggregator.call_tool(name="fetch-fetch", arguments={"url": "https://www.anthropic.com/research/building-effective-agents"})

    # no namespacing -- first server in the aggregator exposing that tool wins
    read_file_result = await aggregator.call_tool(name="read_file", arguments={})

We welcome any and all kinds of contributions. Please see the CONTRIBUTING guidelines to get started.

There have already been incredible community contributors who are driving this project forward:

- Shaun Smith (@evalstate) -- who has been leading the charge on countless complex improvements, both to mcp-agent and generally to the MCP ecosystem.

- Jerron Lim (@StreetLamb) -- who has contributed countless hours and excellent examples, and great ideas to the project.

- Jason Summer (@jasonsum) -- for identifying several issues and adapting his Gmail MCP server to work with mcp-agent

We will be adding a detailed roadmap (ideally driven by your feedback). The current set of priorities include:

- Durable Execution -- allow workflows to pause/resume and serialize state so they can be replayed or be paused indefinitely. We are working on integrating Temporal for this purpose.

- Memory -- adding support for long-term memory

- Streaming -- Support streaming listeners for iterative progress

- Additional MCP capabilities -- Expand beyond tool calls to support:
  - Resources
  - Prompts
  - Notifications

mcp-agent provides a streamlined approach to building AI agents using capabilities exposed by MCP (Model Context Protocol) servers.

MCP is quite low-level, and this framework handles the mechanics of connecting to servers, working with LLMs, handling external signals (like human input) and supporting persistent state via durable execution. That lets you, the developer, focus on the core business logic of your AI application.

Core benefits:

- 🤝 Interoperability: ensures that any tool exposed by any number of MCP servers can seamlessly plug in to your agents.

- ⛓️ Composability & Customizability: Implements well-defined workflows, but in a composable way that enables compound workflows, and allows full customization across model provider, logging, orchestrator, etc.

- 💻 Programmatic control flow: Keeps things simple as developers just write code instead of thinking in graphs, nodes and edges. For branching logic, you write if statements. For cycles, use while loops.

- 🖐️ Human Input & Signals: Supports pausing workflows for external signals, such as human input, which are exposed as tool calls an Agent can make.

You don't need an MCP client to use mcp-agent: it handles MCPClient creation for you, so you can use it anywhere. This allows you to leverage MCP servers outside of MCP hosts like Claude Desktop.

Here are all the ways you can set up your mcp-agent application:

You can expose mcp-agent applications as MCP servers themselves (see example), allowing MCP clients to interface with sophisticated AI workflows using the standard tools API of MCP servers. This is effectively a server-of-servers.

You can embed mcp-agent in an MCP client directly to manage the orchestration across multiple MCP servers.

You can use mcp-agent applications in a standalone fashion (i.e. they aren't part of an MCP client). The examples are all standalone applications.

I debated naming this project silsila (سلسلہ), which means chain of events in Urdu. mcp-agent is more matter-of-fact, but there's still an easter egg in the project paying homage to silsila.


Alternative AI tools for mcp-agent

Similar Open Source Tools


GraphRAG-SDK

Build fast and accurate GenAI applications with GraphRAG SDK, a specialized toolkit for building Graph Retrieval-Augmented Generation (GraphRAG) systems. It integrates knowledge graphs, ontology management, and state-of-the-art LLMs to deliver accurate, efficient, and customizable RAG workflows. The SDK simplifies the development process by automating ontology creation, knowledge graph agent creation, and query handling, enabling users to interact and query their knowledge graphs effectively. It supports multi-agent systems and orchestrates agents specialized in different domains. The SDK is optimized for FalkorDB, ensuring high performance and scalability for large-scale applications. By leveraging knowledge graphs, it enables semantic relationships and ontology-driven queries that go beyond standard vector similarity, enhancing retrieval-augmented generation capabilities.

AgentFly

AgentFly is an extensible framework for building LLM agents with reinforcement learning. It supports multi-turn training by adapting traditional RL methods with token-level masking. It features a decorator-based interface for defining tools and reward functions, enabling seamless extension and ease of use. To support high-throughput training, it implements asynchronous execution of tool calls and reward computations, along with a centralized resource management system for scalable environment coordination. A suite of prebuilt tools and environments is provided.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug-and-play nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_, however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

sdialog

SDialog is an MIT-licensed open-source toolkit for building, simulating, and evaluating LLM-based conversational agents end-to-end. It aims to bridge agent construction, user simulation, dialog generation, and evaluation in a single reproducible workflow, enabling the generation of reliable, controllable dialog systems or data at scale. The toolkit standardizes a Dialog schema, offers persona-driven multi-agent simulation with LLMs, provides composable orchestration for precise control over behavior and flow, includes built-in evaluation metrics, and offers mechanistic interpretability. It allows for easy creation of user-defined components and interoperability across various AI platforms.

bee-agent-framework

The Bee Agent Framework is an open-source tool for building, deploying, and serving powerful agentic workflows at scale. It provides AI agents, tools for creating workflows in Javascript/Python, a code interpreter, memory optimization strategies, serialization for pausing/resuming workflows, traceability features, production-level control, and upcoming features like model-agnostic support and a chat UI. The framework offers various modules for agents, llms, memory, tools, caching, errors, adapters, logging, serialization, and more, with a roadmap including MLFlow integration, JSON support, structured outputs, chat client, base agent improvements, guardrails, and evaluation.

notte

Notte is a web browser designed specifically for LLM agents, providing a language-first web navigation experience without the need for DOM/HTML parsing. It transforms websites into structured, navigable maps described in natural language, enabling users to interact with the web using natural language commands. By simplifying browser complexity, Notte allows LLM policies to focus on conversational reasoning and planning, reducing token usage, costs, and latency. The tool supports various language model providers and offers a reinforcement learning style action space and controls for full navigation control.

agentica

Agentica is a specialized Agentic AI library focused on LLM Function Calling. Users can provide Swagger/OpenAPI documents or TypeScript class types to Agentica for seamless functionality. The library simplifies AI development by handling various tasks effortlessly.

refact-lsp

Refact Agent is a small executable written in Rust as part of the Refact Agent project. It lives inside your IDE to keep AST and VecDB indexes up to date, supporting connection graphs between definitions and usages in popular programming languages. It functions as an LSP server, offering code completion, chat functionality, and integration with various tools like browsers, databases, and debuggers. Users can interact with it through a Text UI in the command line.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

lionagi

LionAGI is a robust framework for orchestrating multi-step AI operations with precise control. It allows users to bring together multiple models, advanced reasoning, tool integrations, and custom validations in a single coherent pipeline. The framework is structured, expandable, controlled, and transparent, offering features like real-time logging, message introspection, and tool usage tracking. LionAGI supports advanced multi-step reasoning with ReAct, integrates with Anthropic's Model Context Protocol, and provides observability and debugging tools. Users can seamlessly orchestrate multiple models, integrate with Claude Code CLI SDK, and leverage a fan-out fan-in pattern for orchestration. The framework also offers optional dependencies for additional functionalities like reader tools, local inference support, rich output formatting, database support, and graph visualization.

langroid

Langroid is a Python framework that makes it easy to build LLM-powered applications. It uses a multi-agent paradigm inspired by the Actor Framework, where you set up Agents, equip them with optional components (LLM, vector-store and tools/functions), assign them tasks, and have them collaboratively solve a problem by exchanging messages. Langroid is a fresh take on LLM app-development, where considerable thought has gone into simplifying the developer experience; it does not use Langchain.

rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides pytorch and **python-first**, low and high level abstractions for RL that are intended to be **efficient**, **modular**, **documented** and properly **tested**. The code is aimed at supporting research in RL. Most of it is written in python in a highly modular way, such that researchers can easily swap components, transform them or write new ones with little effort.

DB-GPT

DB-GPT is a personal database administrator that can solve database problems by reading documents, using various tools, and writing analysis reports. It is currently undergoing an upgrade.

Features:
- Online Demo:
  - Import documents into the knowledge base
  - Utilize the knowledge base for well-founded Q&A and diagnosis analysis of abnormal alarms
  - Send feedback to refine the intermediate diagnosis results
  - Edit the diagnosis result
  - Browse all historical diagnosis results, used metrics, and detailed diagnosis processes
- Language Support:
  - English (default)
  - Chinese (add "language: zh" in config.yaml)
- New Frontend:
  - Knowledgebase + Chat Q&A + Diagnosis + Report Replay
- Extreme Speed Version for localized LLMs:
  - 4-bit quantized LLM (reducing inference time by 1/3)
  - vllm for fast inference (qwen)
  - Tiny LLM
- Multi-path extraction of document knowledge:
  - Vector database (ChromaDB)
  - RESTful Search Engine (Elasticsearch)
- Expert prompt generation using document knowledge
- Upgrade the LLM-based diagnosis mechanism:
  - Task Dispatching -> Concurrent Diagnosis -> Cross Review -> Report Generation
  - Synchronous Concurrency Mechanism during LLM inference
- Support monitoring and optimization tools in multiple levels:
  - Monitoring metrics (Prometheus)
  - Flame graph in code level
  - Diagnosis knowledge retrieval (dbmind)
  - Logical query transformations (Calcite)
  - Index optimization algorithms (for PostgreSQL)
  - Physical operator hints (for PostgreSQL)
  - Backup and Point-in-time Recovery (Pigsty)
- Continuously updated papers and experimental reports

This project is constantly evolving with new features. Don't forget to star ⭐ and watch 👀 to stay up to date.

typedai

TypedAI is a TypeScript-first AI platform designed for developers to create and run autonomous AI agents, LLM-based workflows, and chatbots. It offers advanced autonomous agents, software developer agents, a pull request code review agent, an AI chat interface, a Slack chatbot, and support for various LLM services. The platform features configurable human-in-the-loop settings, functional callable tools/integrations, CLI and Web UI interfaces, and can be run locally or deployed to the cloud with multi-user/SSO support. It leverages the Python AI ecosystem by executing Python scripts/packages and provides flexible run/deploy options such as single-user mode, Firestore & Cloud Run deployment, and multi-user SSO enterprise deployment. TypedAI also includes UI examples, code examples, and automated LLM function schemas for seamless development and execution of AI workflows.

For similar tasks

OpenAGI

OpenAGI is an AI agent creation package designed for researchers and developers to create intelligent agents using advanced machine learning techniques. The package provides tools and resources for building and training AI models, enabling users to develop sophisticated AI applications. With a focus on collaboration and community engagement, OpenAGI aims to facilitate the integration of AI technologies into various domains, fostering innovation and knowledge sharing among experts and enthusiasts.

GPTSwarm

GPTSwarm is a graph-based framework for LLM-based agents. It lets users build agents from graphs and supports the customized, automatic self-organization of agent swarms with self-improvement capabilities. The library includes components for domain-specific operations, graph-related functions, LLM backend selection, memory management, and optimization algorithms that improve agent performance and swarm efficiency. Users can quickly run predefined swarms or use tools such as the file analyzer. GPTSwarm supports local inference via LM Studio, so it can run against a locally hosted LLM. The framework was accepted at ICML 2024 and offers advanced features for experimentation and customization.
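The idea of building agents from graphs can be illustrated without GPTSwarm itself. The sketch below is not GPTSwarm's API; it assumes each node is a hypothetical agent operation (a plain function here, where a real node would call an LLM), that edges state whose outputs a node consumes, and that the graph runs once in topological order using Python's standard `graphlib`. GPTSwarm's optimization of the graph structure is not shown.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# A node is a hypothetical agent operation: it takes its predecessors' outputs
# (or the task itself, for a source node) and returns a string.
Node = Callable[[List[str]], str]


def run_graph(nodes: Dict[str, Node], edges: Dict[str, Set[str]], task: str) -> Dict[str, str]:
    """Run every node once, in dependency order.

    `edges` maps a node name to the set of nodes whose outputs it consumes.
    """
    sorter = TopologicalSorter()
    for name in nodes:
        sorter.add(name, *edges.get(name, set()))
    outputs: Dict[str, str] = {}
    for name in sorter.static_order():  # raises graphlib.CycleError on a cycle
        preds = sorted(edges.get(name, set()))  # sorted for a deterministic input order
        inputs = [outputs[p] for p in preds] if preds else [task]
        outputs[name] = nodes[name](inputs)
    return outputs


if __name__ == "__main__":
    demo_nodes = {
        "draft": lambda xs: f"draft({xs[0]})",
        "critique": lambda xs: f"critique({xs[0]})",
        "final": lambda xs: "final(" + ", ".join(xs) + ")",
    }
    demo_edges = {"critique": {"draft"}, "final": {"draft", "critique"}}
    print(run_graph(demo_nodes, demo_edges, "2+2")["final"])
```

Representing the swarm as data (nodes plus edges) rather than hard-coded calls is what makes it possible, in principle, to rewire or optimize the connections automatically.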

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLMs and can run different models for different agents as needed. The framework is designed for seamless extensibility and database flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

atomic_agents

Atomic Agents is a modular and extensible framework for building AI applications. It follows the principles of Atomic Design, emphasizing small, single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined into larger AI applications. It depends on the Instructor package and supports APIs such as OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents suits developers who want to build AI agents with a focus on modularity and flexibility.
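The core idea, a small component with a validated input schema and a typed output schema, can be sketched without any dependencies. This is not Atomic Agents' API: to keep the example self-contained it substitutes standard-library dataclasses with hand-written checks for Pydantic models, and `SummaryInput`, `SummaryOutput`, and `TruncatingSummarizer` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SummaryInput:
    text: str
    max_words: int = 20

    def __post_init__(self) -> None:
        # Checks that a Pydantic model would express declaratively.
        if not self.text.strip():
            raise ValueError("text must be non-empty")
        if self.max_words <= 0:
            raise ValueError("max_words must be positive")


@dataclass(frozen=True)
class SummaryOutput:
    summary: str
    word_count: int


class TruncatingSummarizer:
    """One single-purpose component: SummaryInput in, SummaryOutput out.

    A real agent would call an LLM here; this stub keeps the first max_words words.
    """

    def run(self, params: SummaryInput) -> SummaryOutput:
        words = params.text.split()[: params.max_words]
        return SummaryOutput(summary=" ".join(words), word_count=len(words))


if __name__ == "__main__":
    print(TruncatingSummarizer().run(SummaryInput("one two three four", max_words=2)))
```

Because the output is itself a typed schema, a downstream component can declare `SummaryOutput` as its input, which is how small components chain into larger ones.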

LongRoPE

LongRoPE is a method that extends the context window of large language models (LLMs) beyond 2 million tokens. It identifies and exploits non-uniformities in positional embeddings to achieve an 8x context extension without fine-tuning. It then uses a progressive extension strategy: the model is first fine-tuned at a 256k context length, and the window is then extended further to 2048k. Finally, it readjusts the embeddings on short contexts to maintain performance within the original window size. LongRoPE has been shown to maintain performance across various tasks at context lengths from 4k to 2048k.
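To make "non-uniform" concrete, here is a minimal sketch of rotary position embedding (RoPE) with one rescale factor per frequency pair, plus a threshold `n_hat` below which positions keep their original angles. It assumes the standard RoPE frequencies theta_i = base^(-2i/d) with base 10000; the factor values are illustrative only (LongRoPE searches for them), and this is not the authors' implementation.

```python
import math
from typing import List, Optional


def rope_angles(pos: int, dim: int, base: float = 10000.0,
                rescale: Optional[List[float]] = None, n_hat: int = 0) -> List[float]:
    """Rotation angle of each of the dim // 2 frequency pairs at position `pos`.

    rescale: one factor per pair (lambda_i); None means plain RoPE.
    n_hat:   positions below this keep the original, un-rescaled angles.
    """
    half = dim // 2
    thetas = [base ** (-2 * i / dim) for i in range(half)]
    if rescale is None or pos < n_hat:
        return [pos * t for t in thetas]
    if len(rescale) != half:
        raise ValueError("need one rescale factor per frequency pair")
    return [pos * t / lam for t, lam in zip(thetas, rescale)]


def apply_rope(x: List[float], pos: int, **kwargs) -> List[float]:
    """Rotate each pair (x[2i], x[2i+1]) by its angle; len(x) must be even."""
    out: List[float] = []
    for i, a in enumerate(rope_angles(pos, len(x), **kwargs)):
        x0, x1 = x[2 * i], x[2 * i + 1]
        out += [x0 * math.cos(a) - x1 * math.sin(a), x0 * math.sin(a) + x1 * math.cos(a)]
    return out


if __name__ == "__main__":
    # Illustrative, made-up factors: each frequency pair is stretched differently.
    print(rope_angles(4096, 8, rescale=[1.0, 2.0, 4.0, 8.0], n_hat=4))
```

With every factor set to 8, position 16 receives the angles of original position 2, which is ordinary uniform position interpolation; the non-uniform variant lets each dimension (and the first `n_hat` positions) be treated differently.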

ax

Ax is a TypeScript library for building intelligent agents inspired by agentic workflows and the Stanford DSP paper. It integrates with multiple Large Language Models (LLMs) and vector databases to create RAG pipelines or collaborative agents capable of solving complex problems. The library offers advanced features such as streaming validation, multi-modal DSP, and automatic prompt tuning using optimizers. Users can convert documents of any format to text, perform smart chunking, embedding, and querying, and validate output while it streams. Ax is production-ready, written in TypeScript, and has zero dependencies.
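The chunk, embed, and query steps can be illustrated in a few lines. This sketch is written in Python rather than Ax's TypeScript and does not use Ax's API. It assumes fixed-size word windows with overlap as the chunking rule (not necessarily what Ax's "smart chunking" does) and substitutes a bag-of-words term-count vector for a real embedding model, ranking chunks by cosine similarity.

```python
import math
import re
from collections import Counter
from typing import List


def chunk(text: str, size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Stand-in 'embedding': lowercase term counts (a real pipeline would call a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)  # missing keys count as 0
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def query(chunks: List[str], question: str, k: int = 1) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(embed(c), q), reverse=True)[:k]


if __name__ == "__main__":
    docs = chunk("the cat sat on the mat while stock prices rose sharply today", size=6, overlap=2)
    print(query(docs, "where did the cat sit"))
```

The overlap keeps a sentence that straddles a window boundary retrievable from at least one chunk, at the cost of some duplicated text.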

Awesome-AI-Agents

Awesome-AI-Agents is a curated list of projects, frameworks, benchmarks, platforms, and related resources focused on autonomous AI agents powered by Large Language Models (LLMs). The repository showcases a wide range of applications, multi-agent task solver projects, agent society simulations, and advanced components for building and customizing AI agents. It also includes frameworks for orchestrating role-playing, evaluating LLM-as-Agent performance, and connecting LLMs with real-world applications through platforms and APIs. Additionally, the repository features surveys, paper lists, and blogs related to LLM-based autonomous agents, making it a valuable resource for researchers, developers, and enthusiasts in the field of AI.

CodeFuse-muAgent

CodeFuse-muAgent is a Multi-Agent framework designed to streamline Standard Operating Procedure (SOP) orchestration for agents. It integrates toolkits, code libraries, knowledge bases, and sandbox environments for rapid construction of complex Multi-Agent interactive applications. The framework enables efficient execution and handling of multi-layered and multi-dimensional tasks.

For similar jobs

goat

GOAT (Great Onchain Agent Toolkit) is an open-source framework designed to simplify the process of making AI agents perform onchain actions by providing a provider-agnostic solution that abstracts away the complexities of interacting with blockchain tools such as wallets, token trading, and smart contracts. It offers a catalog of ready-made blockchain actions for agent developers and allows dApp/smart contract developers to develop plugins for easy access by agents. With compatibility across popular agent frameworks, support for multiple blockchains and wallet providers, and customizable onchain functionalities, GOAT aims to streamline the integration of blockchain capabilities into AI agents.

typedai

TypedAI is a TypeScript-first AI platform designed for developers to create and run autonomous AI agents, LLM-based workflows, and chatbots. It offers advanced autonomous agents, software developer agents, a pull request code review agent, an AI chat interface, a Slack chatbot, and support for various LLM services. The platform features configurable human-in-the-loop settings, functional callable tools/integrations, CLI and Web UI interfaces, and can be run locally or deployed to the cloud with multi-user/SSO support. It leverages the Python AI ecosystem by executing Python scripts/packages and provides flexible run/deploy options such as single-user mode, Firestore & Cloud Run deployment, and multi-user SSO enterprise deployment. TypedAI also includes UI examples, code examples, and automated LLM function schemas for seamless development and execution of AI workflows.

appworld

AppWorld is a high-fidelity execution environment of 9 day-to-day apps, operable via 457 APIs, populated with digital activities of ~100 people living in a simulated world. It provides a benchmark of natural, diverse, and challenging autonomous agent tasks requiring rich and interactive coding. The repository includes implementations of AppWorld apps and APIs, along with tests. It also introduces safety features for code execution and provides guides for building agents and extending the benchmark.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.
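At minimum, managing the lifecycle of several server connections means opening each configured server once, handing out live sessions, and guaranteeing that every one is closed, even when an error occurs. The sketch below shows that bookkeeping with the standard library's `contextlib.AsyncExitStack`. It is not mcp-agent's API: `FakeServerConnection`, `ServerRegistry`, and the server names are hypothetical stand-ins for real MCP client sessions.

```python
import asyncio
from contextlib import AsyncExitStack
from typing import Dict, List


class FakeServerConnection:
    """Hypothetical stand-in for a client session with one MCP server."""

    def __init__(self, name: str, log: List[str]):
        self.name, self.log = name, log

    async def __aenter__(self) -> "FakeServerConnection":
        self.log.append(f"open {self.name}")  # e.g. start the server and initialize the session
        return self

    async def __aexit__(self, exc_type, exc, tb) -> bool:
        self.log.append(f"close {self.name}")  # e.g. end the session and stop the server
        return False  # never swallow exceptions

    async def call_tool(self, tool: str, **args) -> str:
        await asyncio.sleep(0)  # placeholder for a real tool call
        return f"{self.name}.{tool}({args})"


class ServerRegistry:
    """Opens every named server on entry; closes all of them, in reverse order, on exit."""

    def __init__(self, names: List[str], log: List[str]):
        self.names, self.log = names, log
        self.connections: Dict[str, FakeServerConnection] = {}
        self._stack = AsyncExitStack()

    async def __aenter__(self) -> "ServerRegistry":
        try:
            for name in self.names:
                conn = FakeServerConnection(name, self.log)
                self.connections[name] = await self._stack.enter_async_context(conn)
        except BaseException:
            await self._stack.aclose()  # close whatever was already opened
            raise
        return self

    async def __aexit__(self, exc_type, exc, tb) -> bool:
        return await self._stack.__aexit__(exc_type, exc, tb)


async def main() -> None:
    log: List[str] = []
    async with ServerRegistry(["fetch", "filesystem"], log) as servers:
        print(await servers.connections["fetch"].call_tool("get", url="https://example.com"))
    print(log)


if __name__ == "__main__":
    asyncio.run(main())
```

Delegating cleanup to an exit stack means application code never tracks which servers were opened; it only works inside the `async with` block.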

openrouter-kit

OpenRouter Kit is a powerful TypeScript/JavaScript library for interacting with the OpenRouter API. It simplifies working with LLMs by providing a high-level API for chats, dialogue history management, tool calls with error handling, a security module, and cost tracking. It is well suited to building chatbots and AI agents and to integrating LLMs into applications.
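Cost tracking generally reduces to pricing each call from its token usage and keeping a running total. The sketch below is a generic illustration in Python, not OpenRouter Kit's TypeScript API; the model name and per-million-token prices are made up, and real prices should be taken from the provider.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical prices in USD per one million tokens.
EXAMPLE_PRICES: Dict[str, Dict[str, float]] = {
    "example/model-a": {"prompt": 0.50, "completion": 1.50},
}


@dataclass
class CostTracker:
    prices: Dict[str, Dict[str, float]]
    total_usd: float = 0.0
    calls: List[Tuple[str, int, int, float]] = field(default_factory=list)

    def record(self, model: str, prompt_tokens: int, completion_tokens: int) -> float:
        """Price one call from its token usage and add it to the running total."""
        p = self.prices[model]  # KeyError for unknown models rather than silently pricing at 0
        cost = (prompt_tokens * p["prompt"] + completion_tokens * p["completion"]) / 1_000_000
        self.total_usd += cost
        self.calls.append((model, prompt_tokens, completion_tokens, cost))
        return cost


if __name__ == "__main__":
    tracker = CostTracker(EXAMPLE_PRICES)
    tracker.record("example/model-a", prompt_tokens=2000, completion_tokens=500)
    print(f"${tracker.total_usd:.5f} over {len(tracker.calls)} call(s)")
```

Prompt and completion tokens are priced separately because providers commonly charge different rates for them.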

starknet-agentic

Open-source stack for giving AI agents wallets, identity, reputation, and execution rails on Starknet. `starknet-agentic` is a monorepo containing Cairo smart contracts for agent wallets, identity, reputation, and validation; TypeScript packages for MCP tools, A2A integration, and payment signing; reusable skills for common Starknet agent capabilities; and examples and docs for integration. It provides contract primitives and runtime tooling in one place for integrating agents. The repo is organized into layers: Agent Frameworks / Apps, Integration + Runtime Layer, Packages / Tooling Layer, Cairo Contract Layer, and Starknet L2. It aims to keep agent integrations portable without giving up Starknet's strengths, with a cross-chain interop strategy and a skills marketplace. The repository contains directories for contracts, packages, skills, examples, docs, and website.

SwiftAgent

SwiftAgent is a type-safe, declarative framework for building AI agents in Swift, built on Apple FoundationModels. Users compose agents by combining Steps in a declarative syntax similar to SwiftUI. The framework provides compile-time checked input/output types, native Apple AI integration, structured output generation, and built-in security features such as permission, sandbox, and guardrail systems. SwiftAgent is extensible with MCP integration, distributed agents, and a skills system. It is installed with Swift Package Manager and requires Swift 6.2+ and Xcode 26+, targeting iOS 26+ and macOS 26+.

agent-device

A CLI tool that lets AI agents control iOS and Android devices, with core commands including open, back, home, and press. It has minimal dependencies, executes TypeScript on Node 22+, and is in early development. The tool supports automation flows, session management, semantic finding, assertions, replay updates, and settings helpers for simulators. It also covers iOS snapshot backends, app resolution, iOS-specific notes, testing, and building. Contributions are welcome, and the project is maintained by Callstack, a group of React and React Native enthusiasts.