ChatRex

Code for ChatRex: Taming Multimodal LLM for Joint Perception and Understanding

Stars: 124

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

README:

- Contents

- 1. Introduction 📚

- 2. Installation 🛠️

- 3. Usage 🚀

- 4. Gradio Demos 🎨

- 5. RexVerse-2M Dataset

- 6. LICENSE

- BibTeX 📚

- 2025-1-23: RexVerse-2M dataset is now available at https://huggingface.co/datasets/IDEA-Research/Rexverse-2M

TL;DR: ChatRex is an MLLM skilled in perception that can respond to questions while simultaneously grounding its answers to the referenced objects.

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning and region understanding.

conda install -n chatrex python=3.9

pip install torch==2.1.2 torchvision==0.16.2 --index-url https://download.pytorch.org/whl/cu121

pip install -v -e .

# install deformable attention for universal proposal network

cd chatrex/upn/ops

pip install -v -e .We provide model checkpoints for both the Universal Proposal Network (UPN) and the ChatRex model. You can download the pre-trained models from the following links:

Or you can also using the following command to download the pre-trained models:

mkdir checkpoints

mkdir checkpoints/upn

# download UPN checkpoint

wget -O checkpoints/upn/upn_large.pth https://github.com/IDEA-Research/ChatRex/releases/download/upn-large/upn_large.pth

# Download ChatRex checkpoint from Hugging Face

git lfs install

git clone https://huggingface.co/IDEA-Research/ChatRex-7B checkpoints/chatrexTo verify the installation of the Universal Proposal Network (UPN), run the following command:

python tests/test_upn_install.pyIf the installation is successful, you will get two visualization images of both fine-grained proposal and coarse-grained proposal in tests folder.

To verify the installation of the ChatRex model, run the following command:

python tests/test_chatrex_install.pyIf the installation is successful, you will get an output like this:

prediction: <obj0> shows a brown dog lying on a bed. The dog is resting comfortably, possibly sleeping, and is positioned on the left side of the bed

Universal Proposal Network (UPN) is a robust object proposal model designed as part of ChatRex to enable comprehensive and accurate object detection across diverse granularities and domains. Built upon T-Rex2, UPN is a DETR-based model with a dual-granularity prompt tuning strategy, combining fine-grained (e.g., part-level) and coarse-grained (e.g., instance-level) detection.

Example Code for UPN

import torch

from PIL import Image

from tools.visualize import plot_boxes_to_image

from chatrex.upn import UPNWrapper

ckpt_path = "checkpoints/upn_checkpoints/upn_large.pth"

test_image_path = "tests/images/test_upn.jpeg"

model = UPNWrapper(ckpt_path)

# fine-grained prompt

fine_grained_proposals = model.inference(

test_image_path, prompt_type="fine_grained_prompt"

)

# filter by score (default: 0.3) and nms (default: 0.8)

fine_grained_filtered_proposals = model.filter(

fine_grained_proposals, min_score=0.3, nms_value=0.8

)

## output is a dict with keys: "original_xyxy_boxes", "scores"

## - "original_xyxy_boxes": list of boxes in xyxy format in shape (B, N, 4)

## - "scores": list of scores for each box in shape (B, N)

# coarse-grained prompt

coarse_grained_proposals = model.inference(

test_image_path, prompt_type="coarse_grained_prompt"

)

coarse_grained_filtered_proposals = model.filter(

coarse_grained_proposals, min_score=0.3, nms_value=0.8

)

## output is a dict with keys: "original_xyxy_boxes", "scores"

## - "original_xyxy_boxes": list of boxes in xyxy format in shape (B, N, 4)

## - "scores": list of scores for each box in shape (B, N)We also provide a visualization tool to visualize the object proposals generated by UPN. You can use the following code to visualize the object proposals:

Example Code for UPN Visualization

from chatrex.tools.visualize import plot_boxes_to_image

image = Image.open(test_image_path)

fine_grained_vis_image, _ = plot_boxes_to_image(

image.copy(),

fine_grained_filtered_proposals["original_xyxy_boxes"][0],

fine_grained_filtered_proposals["scores"][0],

)

fine_grained_vis_image.save("tests/test_image_fine_grained.jpeg")

print(f"fine-grained proposal is saved at tests/test_image_fine_grained.jpeg")

coarse_grained_vis_image, _ = plot_boxes_to_image(

image.copy(),

coarse_grained_filtered_proposals["original_xyxy_boxes"][0],

coarse_grained_filtered_proposals["scores"][0],

)

coarse_grained_vis_image.save("tests/test_image_coarse_grained.jpeg")

print(f"coarse-grained proposal is saved at tests/test_image_coarse_grained.jpeg")ChatRex takes three inputs: image, text prompt, and box input. For the box input, you can either use the object proposals generated by UPN or provide your own box input (user drawn boxes). We have wrapped the ChatRex model to huggingface transformers format for easy usage. ChatRex can be used for various tasks and we provide example code for each task below.

Example Prompt for detection, grounding, referring tasks:

# Single Object Detection

Please detect dog in this image. Answer the question with object indexes.

Please detect the man in yellow shirt in this image. Answer the question with object indexes.

# multiple object detection, use ; to separate the objects

Please detect person; pigeon in this image. Answer the question with object indexes.

Please detect person in the car; cat below the table in this image. Answer the question with object indexes.

Example Code

import torch

from PIL import Image

from transformers import AutoModelForCausalLM, AutoProcessor, GenerationConfig

from chatrex.tools.visualize import visualize_chatrex_output

from chatrex.upn import UPNWrapper

if __name__ == "__main__":

# load the processor

processor = AutoProcessor.from_pretrained(

"IDEA-Research/ChatRex-7B",

trust_remote_code=True,

device_map="cuda",

)

print(f"loading chatrex model...")

# load chatrex model

model = AutoModelForCausalLM.from_pretrained(

"IDEA-Research/ChatRex-7B",

trust_remote_code=True,

use_safetensors=True,

).to("cuda")

# load upn model

print(f"loading upn model...")

ckpt_path = "checkpoints/upn_checkpoints/upn_large.pth"

model_upn = UPNWrapper(ckpt_path)

test_image_path = "tests/images/test_chatrex_detection.jpg"

# get upn predictions

fine_grained_proposals = model_upn.inference(

test_image_path, prompt_type="fine_grained_prompt"

)

fine_grained_filtered_proposals = model_upn.filter(

fine_grained_proposals, min_score=0.3, nms_value=0.8

)

inputs = processor.process(

image=Image.open(test_image_path),

question="Please detect person; pigeon in this image. Answer the question with object indexes.",

bbox=fine_grained_filtered_proposals["original_xyxy_boxes"][

0

], # box in xyxy format

)

inputs = {k: v.to("cuda") for k, v in inputs.items()}

# perform inference

gen_config = GenerationConfig(

max_new_tokens=512,

do_sample=False,

eos_token_id=processor.tokenizer.eos_token_id,

pad_token_id=(

processor.tokenizer.pad_token_id

if processor.tokenizer.pad_token_id is not None

else processor.tokenizer.eos_token_id

),

)

with torch.autocast(device_type="cuda", enabled=True, dtype=torch.bfloat16):

prediction = model.generate(

inputs, gen_config=gen_config, tokenizer=processor.tokenizer

)

print(f"prediction:", prediction)

# visualize the prediction

vis_image = visualize_chatrex_output(

Image.open(test_image_path),

fine_grained_filtered_proposals["original_xyxy_boxes"][0],

prediction,

font_size=15,

draw_width=5,

)

vis_image.save("tests/test_chatrex_detection.jpeg")

print(f"prediction is saved at tests/test_chatrex_detection.jpeg")The output from LLM is like:

<ground>person</ground><objects><obj10><obj14><obj15><obj27><obj28><obj32><obj33><obj35><obj38><obj47><obj50></objects>

<ground>pigeon</ground><objects><obj0><obj1><obj2><obj3><obj4><obj5><obj6><obj7><obj8><obj9><obj11><obj12><obj13><obj16><obj17><obj18><obj19><obj20><obj21><obj22><obj23><obj24><obj25><obj26><obj29><obj31><obj37><obj39><obj40><obj41><obj44><obj49></objects>

The visualization of the output is like:

Example Prompt for Region Caption tasks:

# Single Object Detection

## caption in category name

What is the category name of <obji>? Answer the question with its category name in free format.

## caption in short phrase

Can you provide me with a short phrase to describe <obji>? Answer the question with a short phrase.

## caption in referring style

Can you provide me with a brief description of <obji>? Answer the question with brief description.

## caption in one sentence

Can you provide me with a one sentence of <obji>? Answer the question with one sentence description.

# multiple object detection, use ; to separate the objects

Example Code

import torch

from PIL import Image

from transformers import AutoModelForCausalLM, AutoProcessor, GenerationConfig

from chatrex.tools.visualize import visualize_chatrex_output

from chatrex.upn import UPNWrapper

if __name__ == "__main__":

# load the processor

processor = AutoProcessor.from_pretrained(

"IDEA-Research/ChatRex-7B",

trust_remote_code=True,

device_map="cuda",

)

print(f"loading chatrex model...")

# load chatrex model

model = AutoModelForCausalLM.from_pretrained(

"IDEA-Research/ChatRex-7B",

trust_remote_code=True,

use_safetensors=True,

).to("cuda")

test_image_path = "tests/images/test_chatrex_install.jpg"

inputs = processor.process(

image=Image.open(test_image_path),

question="Can you provide a one sentence description of <obj0> in the image? Answer the question with a one sentence description.",

bbox=[[73.88417, 56.62228, 227.69223, 216.34338]],

)

inputs = {k: v.to("cuda") for k, v in inputs.items()}

# perform inference

gen_config = GenerationConfig(

max_new_tokens=512,

do_sample=False,

eos_token_id=processor.tokenizer.eos_token_id,

pad_token_id=(

processor.tokenizer.pad_token_id

if processor.tokenizer.pad_token_id is not None

else processor.tokenizer.eos_token_id

),

)

with torch.autocast(device_type="cuda", enabled=True, dtype=torch.bfloat16):

prediction = model.generate(

inputs, gen_config=gen_config, tokenizer=processor.tokenizer

)

print(f"prediction:", prediction)

# visualize the prediction

vis_image = visualize_chatrex_output(

Image.open(test_image_path),

[[73.88417, 56.62228, 227.69223, 216.34338]],

prediction,

font_size=15,

draw_width=5,

)

vis_image.save("tests/test_chatrex_region_caption.jpeg")

print(f"prediction is saved at tests/test_chatrex_region_caption.jpeg")The output from LLM is like:

<ground>A brown dog is lying on a bed, appearing relaxed and comfortable</ground><objects><obj0></objects>

The visualization of the output is like:

Example Prompt for Region Caption tasks:

# Brief Grounded Imager Caption

Please breifly describe this image in one sentence and detect all the mentioned objects. Answer the question with grounded answer.

# Detailed Grounded Image Caption

Please provide a detailed description of the image and detect all the mentioned objects. Answer the question with grounded object indexes.

Example Code

import torch

from PIL import Image

from transformers import AutoModelForCausalLM, AutoProcessor, GenerationConfig

from chatrex.tools.visualize import visualize_chatrex_output

from chatrex.upn import UPNWrapper

if __name__ == "__main__":

# load the processor

processor = AutoProcessor.from_pretrained(

"IDEA-Research/ChatRex-7B",

trust_remote_code=True,

device_map="cuda",

)

print(f"loading chatrex model...")

# load chatrex model

model = AutoModelForCausalLM.from_pretrained(

"IDEA-Research/ChatRex-7B",

trust_remote_code=True,

use_safetensors=True,

).to("cuda")

# load upn model

print(f"loading upn model...")

ckpt_path = "checkpoints/upn_checkpoints/upn_large.pth"

model_upn = UPNWrapper(ckpt_path)

test_image_path = "tests/images/test_chatrex_grounded_caption.jpg"

# get upn predictions

fine_grained_proposals = model_upn.inference(

test_image_path, prompt_type="fine_grained_prompt"

)

fine_grained_filtered_proposals = model_upn.filter(

fine_grained_proposals, min_score=0.3, nms_value=0.8

)

inputs = processor.process(

image=Image.open(test_image_path),

question="Please breifly describe this image in one sentence and detect all the mentioned objects. Answer the question with grounded answer.",

bbox=fine_grained_filtered_proposals["original_xyxy_boxes"][

0

], # box in xyxy format

)

inputs = {k: v.to("cuda") for k, v in inputs.items()}

# perform inference

gen_config = GenerationConfig(

max_new_tokens=512,

do_sample=False,

eos_token_id=processor.tokenizer.eos_token_id,

pad_token_id=(

processor.tokenizer.pad_token_id

if processor.tokenizer.pad_token_id is not None

else processor.tokenizer.eos_token_id

),

)

with torch.autocast(device_type="cuda", enabled=True, dtype=torch.bfloat16):

prediction = model.generate(

inputs, gen_config=gen_config, tokenizer=processor.tokenizer

)

print(f"prediction:", prediction)

# visualize the prediction

vis_image = visualize_chatrex_output(

Image.open(test_image_path),

fine_grained_filtered_proposals["original_xyxy_boxes"][0],

prediction,

font_size=15,

draw_width=5,

)

vis_image.save("tests/test_chatrex_grounded_image_caption.jpeg")

print(f"prediction is saved at tests/test_chatrex_grounded_image_caption.jpeg")The output from LLM is like:

The image depicts a cozy living room with a <ground>plaid couch,</ground><objects><obj2></objects> a <ground>wooden TV stand</ground><objects><obj3></objects>holding a <ground>black television,</ground><objects><obj1></objects> a <ground>red armchair,</ground><objects><obj4></objects> and a <ground>whiteboard</ground><objects><obj0></objects>with writing on the wall, accompanied by a <ground>framed poster</ground><objects><obj6></objects>of a <ground>couple.</ground><objects><obj9><obj11></objects>

The visualization of the output is like:

Example Prompt for Region Caption tasks:

Answer the question in Grounded format. Question

Example Code

import torch

from PIL import Image

from transformers import AutoModelForCausalLM, AutoProcessor, GenerationConfig

from chatrex.tools.visualize import visualize_chatrex_output

from chatrex.upn import UPNWrapper

if __name__ == "__main__":

# load the processor

processor = AutoProcessor.from_pretrained(

"IDEA-Research/ChatRex-7B",

trust_remote_code=True,

device_map="cuda",

)

print(f"loading chatrex model...")

# load chatrex model

model = AutoModelForCausalLM.from_pretrained(

"IDEA-Research/ChatRex-7B",

trust_remote_code=True,

use_safetensors=True,

).to("cuda")

# load upn model

print(f"loading upn model...")

ckpt_path = "checkpoints/upn_checkpoints/upn_large.pth"

model_upn = UPNWrapper(ckpt_path)

test_image_path = "tests/images/test_grounded_conversation.jpg"

# get upn predictions

fine_grained_proposals = model_upn.inference(

test_image_path, prompt_type="coarse_grained_prompt"

)

fine_grained_filtered_proposals = model_upn.filter(

fine_grained_proposals, min_score=0.3, nms_value=0.8

)

inputs = processor.process(

image=Image.open(test_image_path),

question="Answer the question in grounded format. This is a photo of my room, and can you tell me what kind of person I am? ",

bbox=fine_grained_filtered_proposals["original_xyxy_boxes"][

0

], # box in xyxy format

)

inputs = {k: v.to("cuda") for k, v in inputs.items()}

# perform inference

gen_config = GenerationConfig(

max_new_tokens=512,

do_sample=False,

eos_token_id=processor.tokenizer.eos_token_id,

pad_token_id=(

processor.tokenizer.pad_token_id

if processor.tokenizer.pad_token_id is not None

else processor.tokenizer.eos_token_id

),

)

with torch.autocast(device_type="cuda", enabled=True, dtype=torch.bfloat16):

prediction = model.generate(

inputs, gen_config=gen_config, tokenizer=processor.tokenizer

)

print(f"prediction:", prediction)

# visualize the prediction

vis_image = visualize_chatrex_output(

Image.open(test_image_path),

fine_grained_filtered_proposals["original_xyxy_boxes"][0],

prediction,

font_size=30,

draw_width=10,

)

vis_image.save("tests/test_chatrex_grounded_conversation.jpeg")

print(f"prediction is saved at tests/test_chatrex_grounded_conversation.jpeg")The output from LLM is like:

Based on the items in the image, it can be inferred that the <ground>person</ground><objects><obj1></objects> who owns this room has an interest in fitness and possibly enjoys reading. The presence of the <ground>dumbbell</ground><objects><obj2></objects> suggests a commitment to physical activity, while the <ground>book</ground><objects><obj3></objects> indicates a liking for literature or reading. The <ground>sneaker</ground><objects><obj0></objects>s and the <ground>plush toy</ground><objects><obj1></objects> add a personal touch, suggesting that the <ground>person</ground><objects><obj1></objects> might also value comfort and perhaps has a playful or nostalgic side. However, without more context, it is not possible to accurately determine the individual's specific traits or <ground>person</ground><objects><obj1></objects>ality.

The visualization of the output is like:

Here are Workflow Readme you can follow to run the gradio demos.

We provide a gradio demo for UPN to visualize the object proposals generated by UPN. You can run the following command to start the gradio demo:

python gradio_demos/upn_demo.py

# if there is permission error, please run the following command

mkdir tmp

TMPDIR='/tmp' python gradio_demos/upn_demo.pyWe also provide a gradio demo for ChatRex. Before you use, we highly recommend you to watch the following video to understand how to use this demo:

python gradio_demos/chatrex_demo.py

# if there is permission error, please run the following command

mkdir tmp

TMPDIR='/tmp' python gradio_demos/upn_demo.py- We have released 500K data samples of RexVerse-2M dataset. You can download the dataset from Hugging Face

ChatRex is licensed under the IDEA License 1.0, Copyright (c) IDEA. All Rights Reserved. Note that this project utilizes certain datasets and checkpoints that are subject to their respective original licenses. Users must comply with all terms and conditions of these original licenses including but not limited to the:

- OpenAI Terms of Use for the dataset.

- For the LLM used in this project, the model is lmsys/vicuna-7b-v1.5, which is licensed under Llama 2 Community License Agreement.

- For the high resolution vision encoder, we are using laion/CLIP-convnext_large_d.laion2B-s26B-b102K-augreg which is licensed under MIT LICENSE.

- For the low resolution vision encoder, we are using openai/clip-vit-large-patch14 which is licensed under MIT LICENSE

@misc{jiang2024chatrextamingmultimodalllm,

title={ChatRex: Taming Multimodal LLM for Joint Perception and Understanding},

author={Qing Jiang and Gen luo and Yuqin Yang and Yuda Xiong and Yihao Chen and Zhaoyang Zeng and Tianhe Ren and Lei Zhang},

year={2024},

eprint={2411.18363},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2411.18363},

}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ChatRex

Similar Open Source Tools

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

cappr

CAPPr is a tool for text classification that does not require training or post-processing. It allows users to have their language models pick from a list of choices or compute the probability of a completion given a prompt. The tool aims to help users get more out of open source language models by simplifying the text classification process. CAPPr can be used with GGUF models, Hugging Face models, models from the OpenAI API, and for tasks like caching instructions, extracting final answers from step-by-step completions, and running predictions in batches with different sets of completions.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

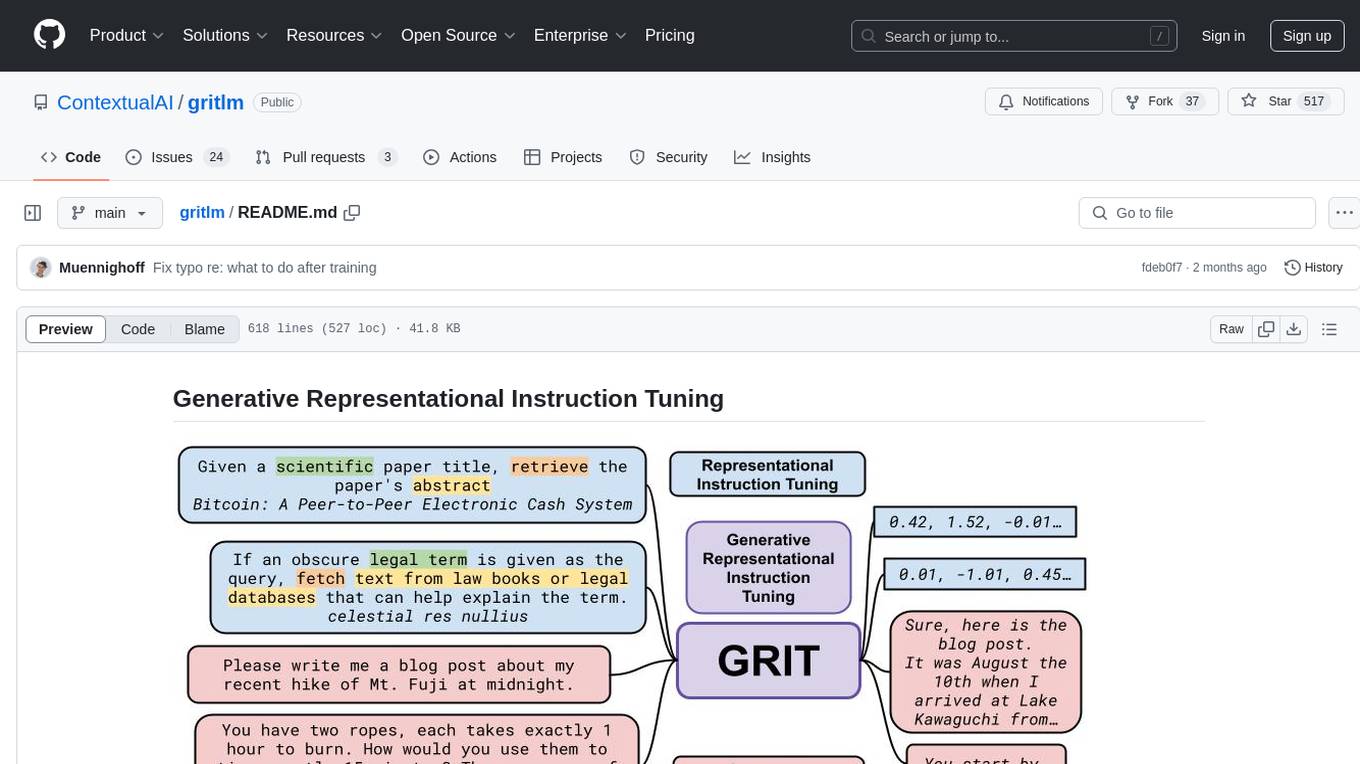

gritlm

The 'gritlm' repository provides all materials for the paper Generative Representational Instruction Tuning. It includes code for inference, training, evaluation, and known issues related to the GritLM model. The repository also offers models for embedding and generation tasks, along with instructions on how to train and evaluate the models. Additionally, it contains visualizations, acknowledgements, and a citation for referencing the work.

continuous-eval

Open-Source Evaluation for LLM Applications. `continuous-eval` is an open-source package created for granular and holistic evaluation of GenAI application pipelines. It offers modularized evaluation, a comprehensive metric library covering various LLM use cases, the ability to leverage user feedback in evaluation, and synthetic dataset generation for testing pipelines. Users can define their own metrics by extending the Metric class. The tool allows running evaluation on a pipeline defined with modules and corresponding metrics. Additionally, it provides synthetic data generation capabilities to create user interaction data for evaluation or training purposes.

zeta

Zeta is a tool designed to build state-of-the-art AI models faster by providing modular, high-performance, and scalable building blocks. It addresses the common issues faced while working with neural nets, such as chaotic codebases, lack of modularity, and low performance modules. Zeta emphasizes usability, modularity, and performance, and is currently used in hundreds of models across various GitHub repositories. It enables users to prototype, train, optimize, and deploy the latest SOTA neural nets into production. The tool offers various modules like FlashAttention, SwiGLUStacked, RelativePositionBias, FeedForward, BitLinear, PalmE, Unet, VisionEmbeddings, niva, FusedDenseGELUDense, FusedDropoutLayerNorm, MambaBlock, Film, hyper_optimize, DPO, and ZetaCloud for different tasks in AI model development.

LLM-Blender

LLM-Blender is a framework for ensembling large language models (LLMs) to achieve superior performance. It consists of two modules: PairRanker and GenFuser. PairRanker uses pairwise comparisons to distinguish between candidate outputs, while GenFuser merges the top-ranked candidates to create an improved output. LLM-Blender has been shown to significantly surpass the best LLMs and baseline ensembling methods across various metrics on the MixInstruct benchmark dataset.

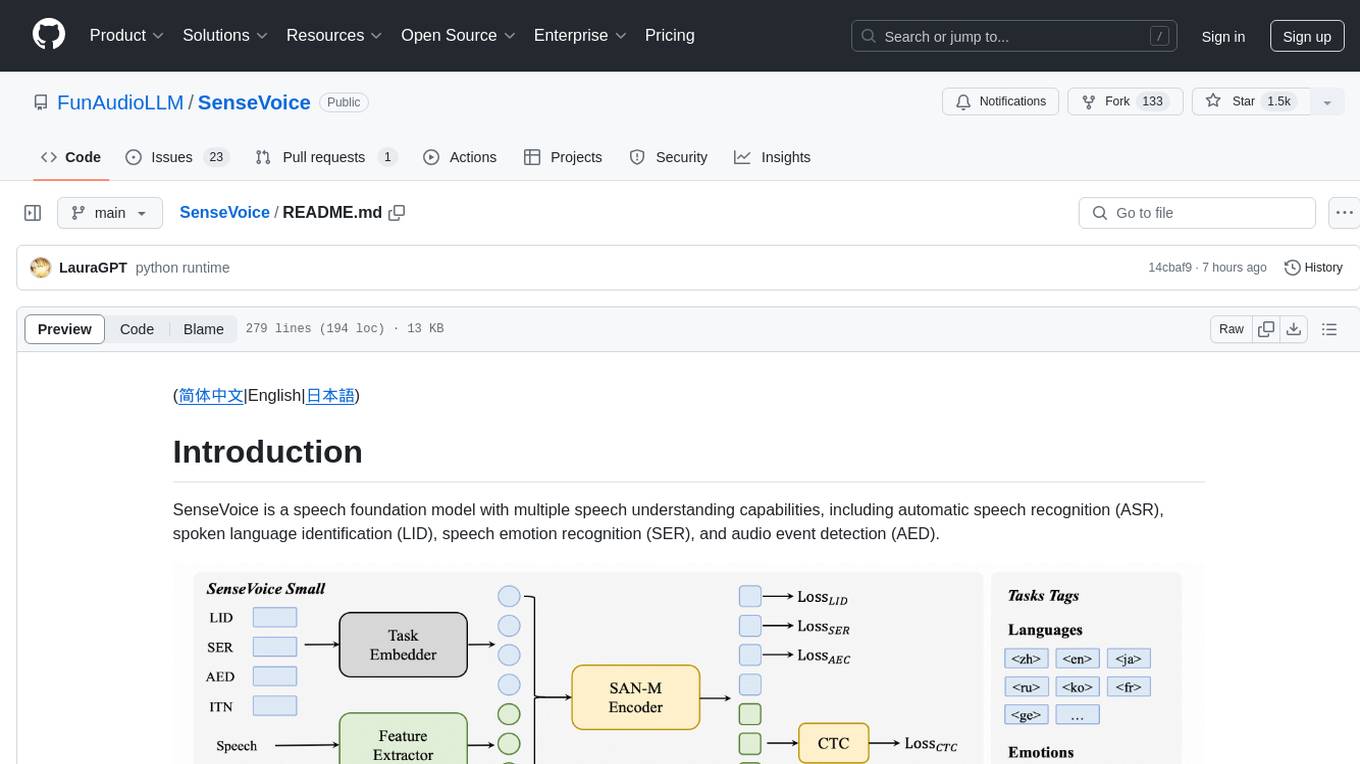

SenseVoice

SenseVoice is a speech foundation model focusing on high-accuracy multilingual speech recognition, speech emotion recognition, and audio event detection. Trained with over 400,000 hours of data, it supports more than 50 languages and excels in emotion recognition and sound event detection. The model offers efficient inference with low latency and convenient finetuning scripts. It can be deployed for service with support for multiple client-side languages. SenseVoice-Small model is open-sourced and provides capabilities for Mandarin, Cantonese, English, Japanese, and Korean. The tool also includes features for natural speech generation and fundamental speech recognition tasks.

UniChat

UniChat is a pipeline tool for creating online and offline chat-bots in Unity. It leverages Unity.Sentis and text vector embedding technology to enable offline mode text content search based on vector databases. The tool includes a chain toolkit for embedding LLM and Agent in games, along with middleware components for Text to Speech, Speech to Text, and Sub-classifier functionalities. UniChat also offers a tool for invoking tools based on ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. The tool provides a comprehensive solution for designing flexible conversations in games while maintaining developer's ideas.

GraphRAG-SDK

Build fast and accurate GenAI applications with GraphRAG SDK, a specialized toolkit for building Graph Retrieval-Augmented Generation (GraphRAG) systems. It integrates knowledge graphs, ontology management, and state-of-the-art LLMs to deliver accurate, efficient, and customizable RAG workflows. The SDK simplifies the development process by automating ontology creation, knowledge graph agent creation, and query handling, enabling users to interact and query their knowledge graphs effectively. It supports multi-agent systems and orchestrates agents specialized in different domains. The SDK is optimized for FalkorDB, ensuring high performance and scalability for large-scale applications. By leveraging knowledge graphs, it enables semantic relationships and ontology-driven queries that go beyond standard vector similarity, enhancing retrieval-augmented generation capabilities.

chromem-go

chromem-go is an embeddable vector database for Go with a Chroma-like interface and zero third-party dependencies. It enables retrieval augmented generation (RAG) and similar embeddings-based features in Go apps without the need for a separate database. The focus is on simplicity and performance for common use cases, allowing querying of documents with minimal memory allocations. The project is in beta and may introduce breaking changes before v1.0.0.

rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides pytorch and **python-first** , low and high level abstractions for RL that are intended to be **efficient** , **modular** , **documented** and properly **tested**. The code is aimed at supporting research in RL. Most of it is written in python in a highly modular way, such that researchers can easily swap components, transform them or write new ones with little effort.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

simple-openai

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

Jlama

Jlama is a modern Java inference engine designed for large language models. It supports various model types such as Gemma, Llama, Mistral, GPT-2, BERT, and more. The tool implements features like Flash Attention, Mixture of Experts, and supports different model quantization formats. Built with Java 21 and utilizing the new Vector API for faster inference, Jlama allows users to add LLM inference directly to their Java applications. The tool includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

ragoon

RAGoon is a high-level library designed for batched embeddings generation, fast web-based RAG (Retrieval-Augmented Generation) processing, and quantized indexes processing. It provides NLP utilities for multi-model embedding production, high-dimensional vector visualization, and enhancing language model performance through search-based querying, web scraping, and data augmentation techniques.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.