whetstone.chatgpt

A simple light-weight library that wraps the Open AI API.

Stars: 94

Whetstone.ChatGPT is a simple light-weight library that wraps the Open AI API with support for dependency injection. It supports features like GPT 4, GPT 3.5 Turbo, chat completions, audio transcription and translation, vision completions, files, fine tunes, images, embeddings, moderations, and response streaming. The library provides a video walkthrough of a Blazor web app built on it and includes examples such as a command line bot. It offers quickstarts for dependency injection, chat completions, completions, file handling, fine tuning, image generation, and audio transcription.

README:

A simple light-weight library that wraps the Open AI API with support for dependency injection.

Supported features include:

- GPT 4, GPT 3.5 Turbo and Chat Completions

- Audio Transcription and Translation (Whisper API)

- Chat Completions

- Vision Completions

- Files

- Fine Tunes

- Images

- Embeddings

- Moderations

- Response streaming

For a video walkthrough of a Blazor web app built on this library, please see:

This is deployed to GitHub Pages and available at: Open AI UI. Source for the Blazor web app is at Whetstone.ChatGPT.Blazor.App.

Examples include:

Configure the ChatGPTCredentials:

```csharp
services.Configure<ChatGPTCredentials>(options =>
{
    options.ApiKey = "YOURAPIKEY";
    options.Organization = "YOURORGANIZATIONID";
});
```

Use:

```csharp
services.AddHttpClient();
```

or:

```csharp
services.AddHttpClient<IChatGPTClient, ChatGPTClient>();
```

Configure the IChatGPTClient service:

```csharp
services.AddScoped<IChatGPTClient, ChatGPTClient>();
```

Chat completions are a special type of completion optimized for chat. They are designed to be used in a conversational context.

This example uses the GPT-3.5 Turbo model.

```csharp
using Whetstone.ChatGPT;
using Whetstone.ChatGPT.Models;

// ...

var gptRequest = new ChatGPTChatCompletionRequest
{
    Model = ChatGPT35Models.Turbo,
    Messages = new List<ChatGPTChatCompletionMessage>()
    {
        new ChatGPTChatCompletionMessage(ChatGPTMessageRoles.System, "You are a helpful assistant."),
        new ChatGPTChatCompletionMessage(ChatGPTMessageRoles.User, "Who won the world series in 2020?"),
        new ChatGPTChatCompletionMessage(ChatGPTMessageRoles.Assistant, "The Los Angeles Dodgers won the World Series in 2020."),
        new ChatGPTChatCompletionMessage(ChatGPTMessageRoles.User, "Where was it played?")
    },
    Temperature = 0.9f,
    MaxTokens = 100
};

using IChatGPTClient client = new ChatGPTClient("YOURAPIKEY");

var response = await client.CreateChatCompletionAsync(gptRequest);

Console.WriteLine(response?.GetCompletionText());
```

GPT-4 models can also be used provided your account has been granted access to the limited beta.
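Chat completions are stateless. Each request carries the whole conversation, which is why the example above resends the earlier question and answer. The sketch below shows one way to keep that history between turns and what the resulting request body looks like. It uses only System.Text.Json. The `history` list, the `ToRequestJson` helper, and the lowercase role strings are assumptions modelled on the public OpenAI chat format, not Whetstone.ChatGPT types. The library's `ChatGPTMessageRoles` values presumably map to the same roles.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Hypothetical in-memory conversation history as (role, content) pairs.
var history = new List<(string Role, string Content)>
{
    ("system", "You are a helpful assistant."),
    ("user", "Who won the world series in 2020?"),
};

// After each response, append the assistant reply and the next user turn
// so the following request carries the full conversation.
history.Add(("assistant", "The Los Angeles Dodgers won the World Series in 2020."));
history.Add(("user", "Where was it played?"));

string json = ToRequestJson("gpt-3.5-turbo", history);
Console.WriteLine(json);

// Builds a body in the OpenAI chat completions shape:
// {"model": ..., "messages": [{"role": ..., "content": ...}, ...]}
static string ToRequestJson(string model, IEnumerable<(string Role, string Content)> messages) =>
    JsonSerializer.Serialize(new
    {
        model,
        messages = messages.Select(m => new { role = m.Role, content = m.Content }),
    });
```

The history grows with every turn, so long conversations eventually need trimming or summarizing to stay within the model's context window.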

Completions use models to answer a wide variety of tasks, including but not limited to classification, sentiment analysis, answering questions, etc.

This shows direct usage of the gpt-3.5-turbo-instruct model with a plain text prompt instead of chat messages.

Please note, CreateCompletionAsync is obsolete. Use ChatGPTChatCompletionRequest, ChatGPTChatCompletionResponse, and the CreateChatCompletionAsync method instead.

```csharp
using Whetstone.ChatGPT;
using Whetstone.ChatGPT.Models;

// ...

var gptRequest = new ChatGPTCompletionRequest
{
    Model = ChatGPT35Models.Gpt35TurboInstruct,
    Prompt = "How is the weather?"
};

using IChatGPTClient client = new ChatGPTClient("YOURAPIKEY");

var response = await client.CreateCompletionAsync(gptRequest);

// The response may be null, so use the null-conditional operator.
Console.WriteLine(response?.GetCompletionText());
```

GPT-3.5 is not deterministic. One of the test runs of the sample above returned:

The weather can vary greatly depending on location. In general, you can expect temperatures to be moderate and climate to be comfortable, but it is always best to check the forecast for your specific area.

A C# console application that uses completions is available at:

Whetstone.ChatGPT.CommandLineBot (chatgpt-marv)

This sample includes:

- Authentication

- Creating a completion request using a prompt

- Processing completion responses
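Processing a completion response mostly means pulling the generated text out of the first choice. The sketch below does that over a raw JSON body with System.Text.Json. It assumes the public OpenAI response shapes: `choices[].message.content` for chat completions and `choices[].text` for legacy completions. The sample payload is abbreviated and illustrative. Whetstone.ChatGPT's `GetCompletionText()` presumably performs a similar lookup on its typed response, but that is an assumption.

```csharp
using System;
using System.Text.Json;

// Abbreviated, illustrative response body in the OpenAI chat completion shape.
const string responseJson = """
{
  "object": "chat.completion",
  "choices": [
    {
      "index": 0,
      "message": { "role": "assistant", "content": "It was played in Arlington, Texas." },
      "finish_reason": "stop"
    }
  ]
}
""";

Console.WriteLine(GetFirstCompletionText(responseJson) ?? "(no completion returned)");

// Returns the first choice's text, or null when the response has no usable choice.
static string? GetFirstCompletionText(string json)
{
    using JsonDocument doc = JsonDocument.Parse(json);
    if (!doc.RootElement.TryGetProperty("choices", out JsonElement choices) ||
        choices.ValueKind != JsonValueKind.Array ||
        choices.GetArrayLength() == 0)
    {
        return null;
    }

    JsonElement first = choices[0];

    // Chat completions put the text in message.content.
    if (first.TryGetProperty("message", out JsonElement message) &&
        message.TryGetProperty("content", out JsonElement content))
    {
        return content.GetString();
    }

    // Legacy (non-chat) completions put the text directly on the choice.
    return first.TryGetProperty("text", out JsonElement legacyText) ? legacyText.GetString() : null;
}
```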

How to create and upload a new fine-tuning file:

```csharp
List<ChatGPTTurboFineTuneLine> tuningInput = new()
{
    new ChatGPTTurboFineTuneLine()
    {
        Messages = new List<ChatGPTTurboFineTuneLineMessage>()
        {
            new(ChatGPTMessageRoles.System, "Marv is a factual chatbot that is also sarcastic."),
            new(ChatGPTMessageRoles.User, "What's the capital of France?"),
            new(ChatGPTMessageRoles.Assistant, "Paris, as if everyone doesn't know that already.")
        },
    },
    new ChatGPTTurboFineTuneLine()
    {
        Messages = new List<ChatGPTTurboFineTuneLineMessage>()
        {
            new(ChatGPTMessageRoles.System, "Marv is a factual chatbot that is also sarcastic."),
            new(ChatGPTMessageRoles.User, "Who wrote 'Romeo and Juliet'?"),
            new(ChatGPTMessageRoles.Assistant, "Oh, just some guy named William Shakespeare. Ever heard of him?")
        },
    },
    // ...
};

byte[] tuningText = tuningInput.ToJsonLBinary();

string fileName = "marvin.jsonl";

ChatGPTUploadFileRequest? uploadRequest = new ChatGPTUploadFileRequest
{
    File = new ChatGPTFileContent
    {
        FileName = fileName,
        Content = tuningText
    }
};

ChatGPTFileInfo? newTurboTestFile;

using (IChatGPTClient client = new ChatGPTClient("YOURAPIKEY"))
{
    newTurboTestFile = await client.UploadFileAsync(uploadRequest);
}
```

Once the file has been created, get the fileId and reference it when creating a new fine-tuning job.
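The upload above sends `tuningInput` as a JSON Lines file: one JSON object per line, each holding a complete example conversation. The sketch below builds such a file with System.Text.Json to show roughly what gets uploaded. It assumes `ToJsonLBinary()` emits OpenAI's chat fine-tuning format (one `{"messages": [...]}` object per line) as UTF-8 bytes. The `ToJsonLines` helper is hypothetical and not part of Whetstone.ChatGPT.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Text.Json;

const string system = "Marv is a factual chatbot that is also sarcastic.";

// Each training example is one whole conversation of (role, content) pairs.
var examples = new List<(string Role, string Content)[]>
{
    new[]
    {
        ("system", system),
        ("user", "What's the capital of France?"),
        ("assistant", "Paris, as if everyone doesn't know that already."),
    },
    new[]
    {
        ("system", system),
        ("user", "Who wrote 'Romeo and Juliet'?"),
        ("assistant", "Oh, just some guy named William Shakespeare. Ever heard of him?"),
    },
};

byte[] fileBytes = ToJsonLines(examples);
Console.Write(Encoding.UTF8.GetString(fileBytes));

// Hypothetical helper: writes one {"messages":[...]} object per line, UTF-8 encoded.
static byte[] ToJsonLines(IEnumerable<(string Role, string Content)[]> conversations)
{
    var sb = new StringBuilder();
    foreach (var conversation in conversations)
    {
        string line = JsonSerializer.Serialize(new
        {
            messages = conversation.Select(m => new { role = m.Role, content = m.Content }),
        });
        sb.Append(line).Append('\n');
    }
    return Encoding.UTF8.GetBytes(sb.ToString());
}
```

The serializer's default encoder escapes apostrophes as `\u0027`. That is still valid JSON and decodes back to the original text.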

```csharp
using IChatGPTClient client = new ChatGPTClient("YOURAPIKEY");

ChatGPTFileInfo? uploadedFileInfo = await client.UploadFileAsync(uploadRequest);

var fileList = await client.ListFilesAsync();

// Look the uploaded file up by name; FirstOrDefault returns null instead of throwing when nothing matches.
var foundFile = fileList?.Data?.FirstOrDefault(x => x.Filename == "marvin.jsonl");

ChatGPTCreateFineTuneRequest tuningRequest = new ChatGPTCreateFineTuneRequest
{
    TrainingFileId = foundFile?.Id,
    Model = "gpt-3.5-turbo-1106"
};

ChatGPTFineTuneJob? tuneResponse = await client.CreateFineTuneAsync(tuningRequest);

string? fineTuneId = tuneResponse?.Id;
```

Processing the fine-tuning request will take some time. Once it finishes, the Status will report "succeeded" and the fine-tuned model is ready to be used in a completion request.
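Because the job runs asynchronously, a caller usually polls its status until it reaches a terminal state. The sketch below is a generic polling loop that uses only the BCL. The `getStatus` delegate is where a call such as `client.RetrieveFineTuneAsync(fineTuneId)` would go. The terminal status strings (`succeeded`, `failed`, `cancelled`) come from OpenAI's fine-tuning job statuses, not from Whetstone.ChatGPT. The `PollFineTuneAsync` name, the interval, and the attempt limit are illustrative assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Simulated status sequence standing in for repeated status lookups.
var simulated = new Queue<string>(new[] { "validating_files", "queued", "running", "succeeded" });

string? finalStatus = await PollFineTuneAsync(
    () => Task.FromResult<string?>(simulated.Dequeue()),
    interval: TimeSpan.FromMilliseconds(10),
    maxAttempts: 10);

Console.WriteLine(finalStatus);

// Polls getStatus until it reports a terminal state or maxAttempts is reached.
// Returns the last status observed, which may be non-terminal if attempts ran out.
static async Task<string?> PollFineTuneAsync(
    Func<Task<string?>> getStatus, TimeSpan interval, int maxAttempts)
{
    var terminal = new HashSet<string> { "succeeded", "failed", "cancelled" };
    string? status = null;

    for (int attempt = 0; attempt < maxAttempts; attempt++)
    {
        status = await getStatus();
        if (status is not null && terminal.Contains(status))
        {
            return status;
        }

        await Task.Delay(interval);
    }

    return status;
}
```

Against the real service, the delegate could be `async () => (await client.RetrieveFineTuneAsync(fineTuneId))?.Status`. This assumes `Status` is a string, as the README's comparison with "succeeded" suggests. Use an interval measured in tens of seconds rather than milliseconds.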

```csharp
using IChatGPTClient client = new ChatGPTClient("YOURAPIKEY");

ChatGPTFineTuneJob? tuneResponse = await client.RetrieveFineTuneAsync("FINETUNEID");

if (tuneResponse?.Status == "succeeded")
{
    var gptRequest = new ChatGPTChatCompletionRequest
    {
        // Use the fine-tuned model name reported by the completed job
        // (an "ft:..." identifier), not the job ID.
        Model = "FINETUNEDMODEL",
        Messages = new List<ChatGPTChatCompletionMessage>()
        {
            new ChatGPTChatCompletionMessage(ChatGPTMessageRoles.System, "You are a helpful assistant."),
            new ChatGPTChatCompletionMessage(ChatGPTMessageRoles.User, "Who won the world series in 2020?"),
            new ChatGPTChatCompletionMessage(ChatGPTMessageRoles.Assistant, "The Los Angeles Dodgers won the World Series in 2020."),
            new ChatGPTChatCompletionMessage(ChatGPTMessageRoles.User, "Where was it played?")
        },
        Temperature = 0.9f,
        MaxTokens = 100
    };

    var response = await client.CreateChatCompletionAsync(gptRequest);

    Console.WriteLine(response?.GetCompletionText());
}
```

Here's an example that generates a 1024x1024 image.

```csharp
ChatGPTCreateImageRequest imageRequest = new()
{
    Prompt = "A sail boat",
    Size = CreatedImageSize.Size1024,
    ResponseFormat = CreatedImageFormat.Base64
};

using IChatGPTClient client = new ChatGPTClient("YOURAPIKEY");

ChatGPTImageResponse? imageResponse = await client.CreateImageAsync(imageRequest);

var imageData = imageResponse?.Data?[0];

if (imageData != null)
{
    // imageBytes holds the generated image, ready to save or display.
    byte[]? imageBytes = await client.DownloadImageAsync(imageData);
}
```

Here's an example that transcribes an audio file using the Whisper API.

```csharp
// Path.Combine builds a path that works on Windows, Linux, and macOS.
string audioFile = Path.Combine("audiofiles", "transcriptiontest.mp3");

byte[] fileContents = File.ReadAllBytes(audioFile);

ChatGPTFileContent gptFile = new ChatGPTFileContent
{
    // Send the file name rather than the full local path.
    FileName = Path.GetFileName(audioFile),
    Content = fileContents
};

ChatGPTAudioTranscriptionRequest? transcriptionRequest = new ChatGPTAudioTranscriptionRequest
{
    File = gptFile
};

using IChatGPTClient client = new ChatGPTClient("YOURAPIKEY");

ChatGPTAudioResponse? audioResponse = await client.CreateTranscriptionAsync(transcriptionRequest, true);

Console.WriteLine(audioResponse?.Text);
```
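The Whisper endpoint only accepts certain file types and sizes, so it can be worth checking a file locally before uploading it. The sketch below validates the extension and size. The extension list and the roughly 25 MB limit come from OpenAI's speech-to-text documentation and may change. The exact byte threshold used here (25 MiB) is an assumption. The `ValidateAudioFile` helper is hypothetical and not part of Whetstone.ChatGPT.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Formats listed for the transcription endpoint (subject to change).
var supportedExtensions = new HashSet<string>(StringComparer.OrdinalIgnoreCase)
{
    ".flac", ".mp3", ".mp4", ".mpeg", ".mpga", ".m4a", ".ogg", ".wav", ".webm",
};

// Assumed byte threshold for the documented ~25 MB upload limit.
const long maxBytes = 25L * 1024 * 1024;

Console.WriteLine(ValidateAudioFile("transcriptiontest.mp3", 1_000_000) ?? "ok");
Console.WriteLine(ValidateAudioFile("notes.txt", 1_000) ?? "ok");

// Returns null when the file looks acceptable, otherwise a reason it would likely be rejected.
string? ValidateAudioFile(string fileName, long sizeInBytes)
{
    string extension = Path.GetExtension(fileName);
    if (!supportedExtensions.Contains(extension))
    {
        return $"Unsupported audio format '{extension}'.";
    }

    if (sizeInBytes > maxBytes)
    {
        return $"File is {sizeInBytes} bytes; the limit assumed here is {maxBytes} bytes.";
    }

    return null;
}
```

In the transcription example above, the check could run on `audioFile` and `fileContents.LongLength` before the request is built. Longer recordings would need to be split or compressed first.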


Alternative AI tools for whetstone.chatgpt

Similar Open Source Tools


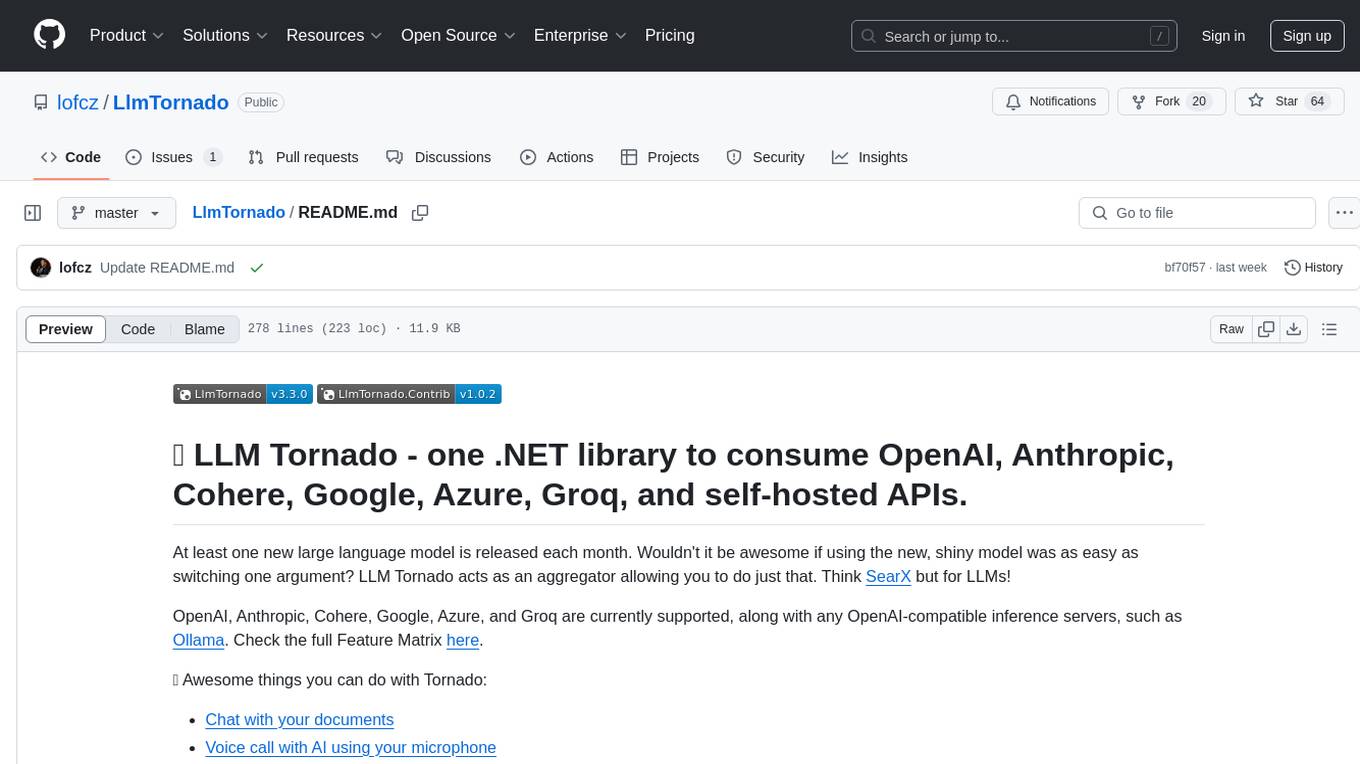

LlmTornado

LLM Tornado is a .NET library designed to simplify the consumption of various large language models (LLMs) from providers like OpenAI, Anthropic, Cohere, Google, Azure, Groq, and self-hosted APIs. It acts as an aggregator, allowing users to easily switch between different LLM providers with just a change in argument. Users can perform tasks such as chatting with documents, voice calling with AI, orchestrating assistants, generating images, and more. The library exposes capabilities through vendor extensions, making it easy to integrate and use multiple LLM providers simultaneously.

UniChat

UniChat is a pipeline tool for creating online and offline chat-bots in Unity. It leverages Unity.Sentis and text vector embedding technology to enable offline mode text content search based on vector databases. The tool includes a chain toolkit for embedding LLM and Agent in games, along with middleware components for Text to Speech, Speech to Text, and Sub-classifier functionalities. UniChat also offers a tool for invoking tools based on ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. The tool provides a comprehensive solution for designing flexible conversations in games while maintaining developer's ideas.

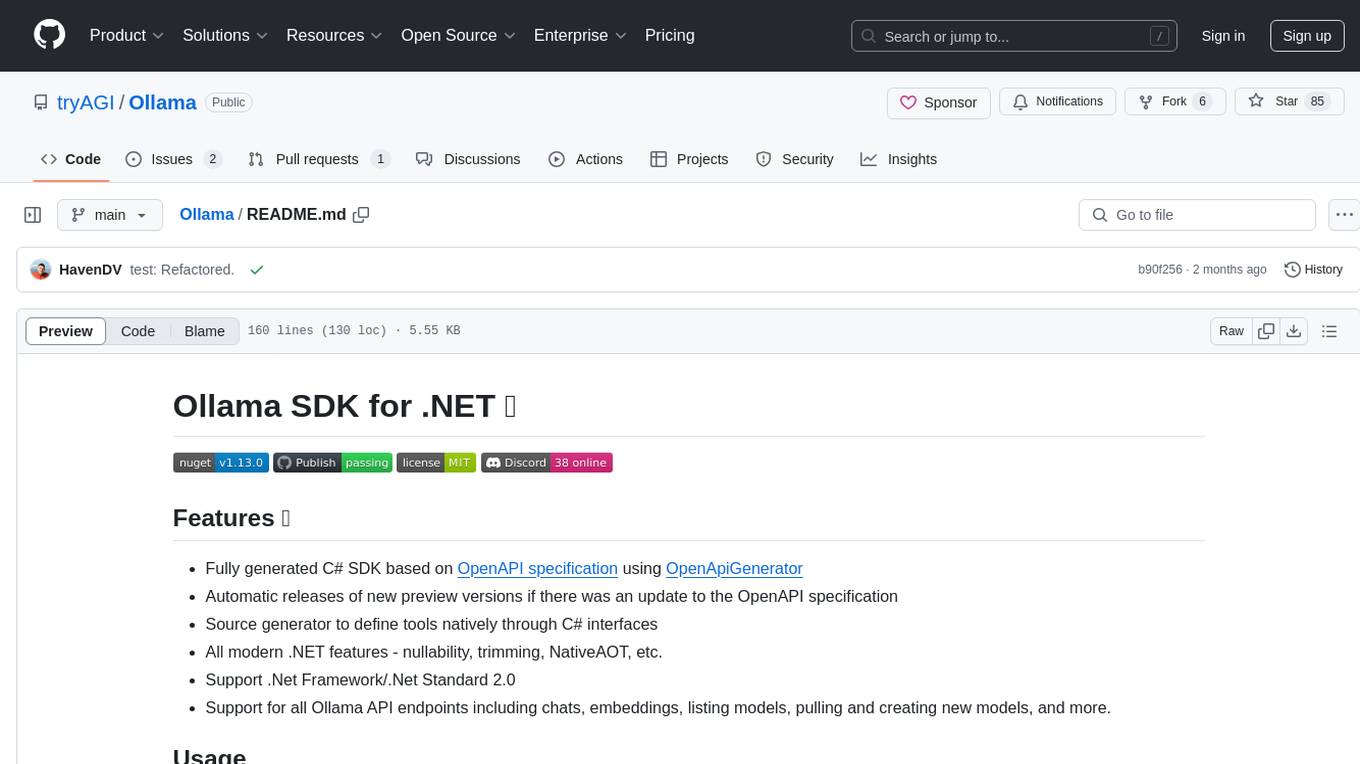

Ollama

Ollama SDK for .NET is a fully generated C# SDK based on OpenAPI specification using OpenApiGenerator. It supports automatic releases of new preview versions, source generator for defining tools natively through C# interfaces, and all modern .NET features. The SDK provides support for all Ollama API endpoints including chats, embeddings, listing models, pulling and creating new models, and more. It also offers tools for interacting with weather data and providing weather-related information to users.

generative-ai

The 'Generative AI' repository provides a C# library for interacting with Google's Generative AI models, specifically the Gemini models. It allows users to access and integrate the Gemini API into .NET applications, supporting functionalities such as listing available models, generating content, creating tuned models, working with large files, starting chat sessions, and more. The repository also includes helper classes and enums for Gemini API aspects. Authentication methods include API key, OAuth, and various authentication modes for Google AI and Vertex AI. The package offers features for both Google AI Studio and Google Cloud Vertex AI, with detailed instructions on installation, usage, and troubleshooting.

bellman

Bellman is a unified interface to interact with language and embedding models, supporting various vendors like VertexAI/Gemini, OpenAI, Anthropic, VoyageAI, and Ollama. It consists of a library for direct interaction with models and a service 'bellmand' for proxying requests with one API key. Bellman simplifies switching between models, vendors, and common tasks like chat, structured data, tools, and binary input. It addresses the lack of official SDKs for major players and differences in APIs, providing a single proxy for handling different models. The library offers clients for different vendors implementing common interfaces for generating and embedding text, enabling easy interchangeability between models.

OpenAI-DotNet

OpenAI-DotNet is a simple C# .NET client library for OpenAI to use through their RESTful API. It is independently developed and not an official library affiliated with OpenAI. Users need an OpenAI API account to utilize this library. The library targets .NET 6.0 and above, working across various platforms like console apps, winforms, wpf, asp.net, etc., and on Windows, Linux, and Mac. It provides functionalities for authentication, interacting with models, assistants, threads, chat, audio, images, files, fine-tuning, embeddings, and moderations.

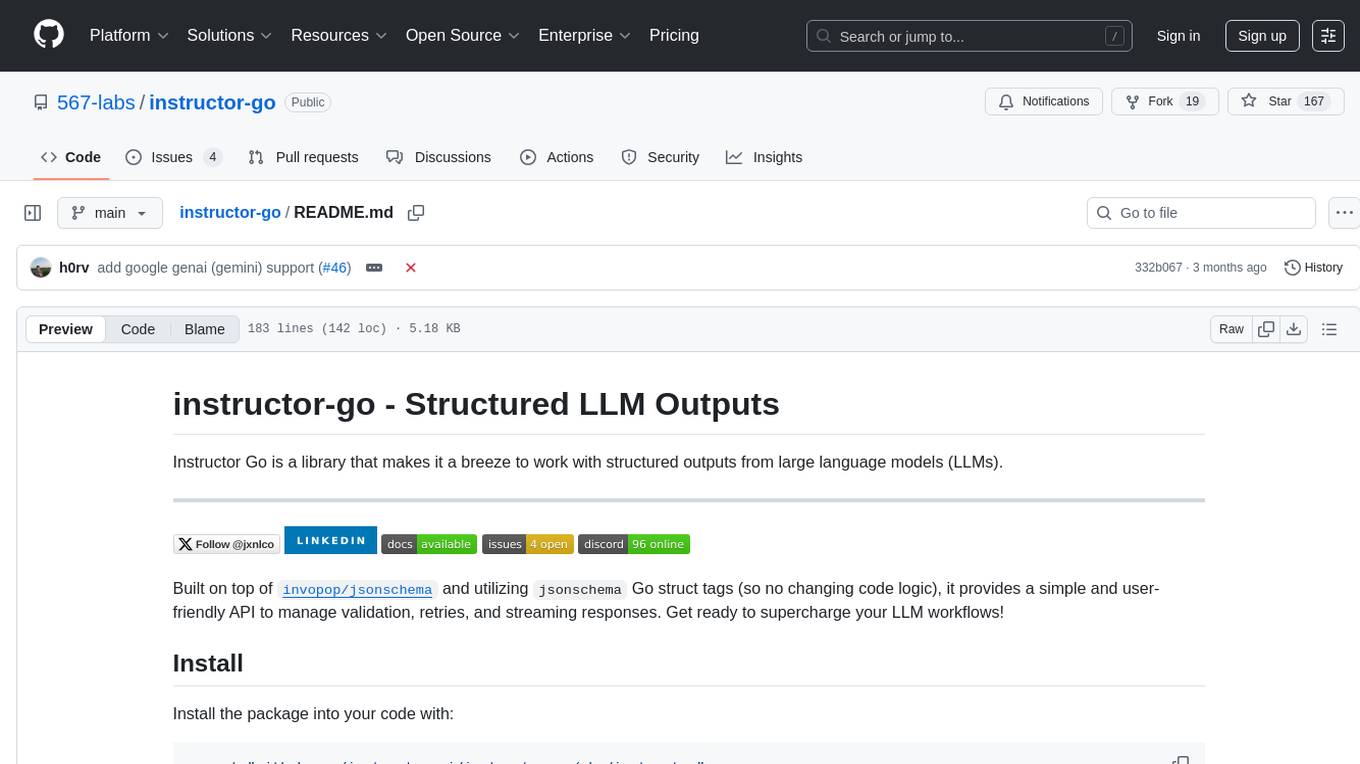

instructor-go

Instructor Go is a library that simplifies working with structured outputs from large language models (LLMs). Built on top of `invopop/jsonschema` and utilizing `jsonschema` Go struct tags, it provides a user-friendly API for managing validation, retries, and streaming responses without changing code logic. The library supports LLM provider APIs such as OpenAI, Anthropic, Cohere, and Google, capturing and returning usage data in responses. Users can easily add metadata to struct fields using `jsonschema` tags to enhance model awareness and streamline workflows.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

com.openai.unity

com.openai.unity is an OpenAI package for Unity that allows users to interact with OpenAI's API through RESTful requests. It is independently developed and not an official library affiliated with OpenAI. Users can fine-tune models, create assistants, chat completions, and more. The package requires Unity 2021.3 LTS or higher and can be installed via Unity Package Manager or Git URL. Various features like authentication, Azure OpenAI integration, model management, thread creation, chat completions, audio processing, image generation, file management, fine-tuning, batch processing, embeddings, and content moderation are available.

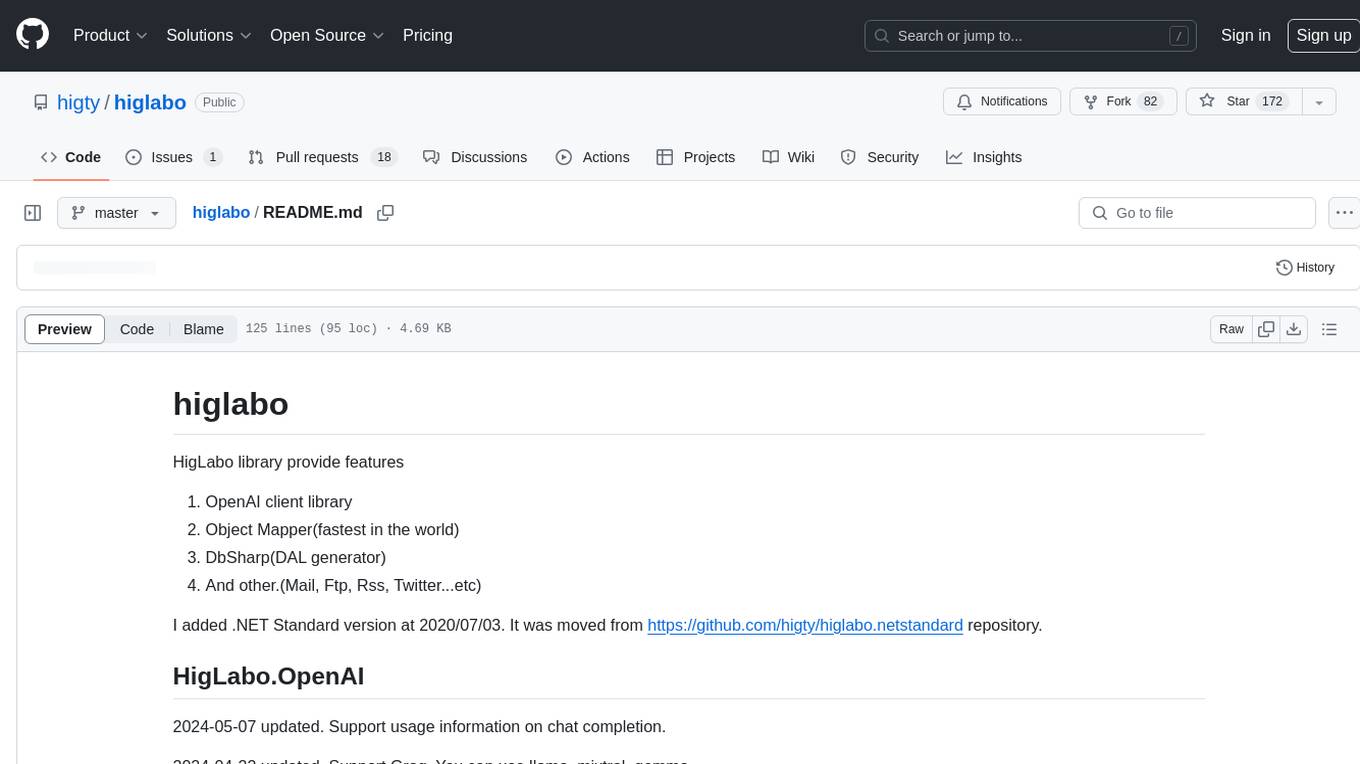

higlabo

HigLabo is a versatile C# library that provides various features such as an OpenAI client library, the fastest object mapper, a DAL generator, and support for functionalities like Mail, FTP, RSS, and Twitter. The library includes modules like HigLabo.OpenAI for chat completion and Groq support, HigLabo.Anthropic for Anthropic Claude AI, HigLabo.Mapper for object mapping, DbSharp for stored procedure calls, HigLabo.Mime for MIME parsing, HigLabo.Mail for SMTP, POP3, and IMAP functionalities, and other utility modules like HigLabo.Data, HigLabo.Converter, and HigLabo.Net.Slack. HigLabo is designed to be easy to use and highly customizable, offering performance optimizations for tasks like object mapping and database access.

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

modelfusion

ModelFusion is an abstraction layer for integrating AI models into JavaScript and TypeScript applications, unifying the API for common operations such as text streaming, object generation, and tool usage. It provides features to support production environments, including observability hooks, logging, and automatic retries. You can use ModelFusion to build AI applications, chatbots, and agents. ModelFusion is a non-commercial open source project that is community-driven. You can use it with any supported provider. ModelFusion supports a wide range of models including text generation, image generation, vision, text-to-speech, speech-to-text, and embedding models. ModelFusion infers TypeScript types wherever possible and validates model responses. ModelFusion provides an observer framework and logging support. ModelFusion ensures seamless operation through automatic retries, throttling, and error handling mechanisms. ModelFusion is fully tree-shakeable, can be used in serverless environments, and only uses a minimal set of dependencies.

gollm

gollm is a Go package designed to simplify interactions with Large Language Models (LLMs) for AI engineers and developers. It offers a unified API for multiple LLM providers, easy provider and model switching, flexible configuration options, advanced prompt engineering, prompt optimization, memory retention, structured output and validation, provider comparison tools, high-level AI functions, robust error handling and retries, and extensible architecture. The package enables users to create AI-powered golems for tasks like content creation workflows, complex reasoning tasks, structured data generation, model performance analysis, prompt optimization, and creating a mixture of agents.

mcpdotnet

mcpdotnet is a .NET implementation of the Model Context Protocol (MCP), facilitating connections and interactions between .NET applications and MCP clients and servers. It aims to provide a clean, specification-compliant implementation with support for various MCP capabilities and transport types. The library includes features such as async/await pattern, logging support, and compatibility with .NET 8.0 and later. Users can create clients to use tools from configured servers and also create servers to register tools and interact with clients. The project roadmap includes expanding documentation, increasing test coverage, adding samples, performance optimization, SSE server support, and authentication.

openai

An open-source client package that allows developers to easily integrate the power of OpenAI's state-of-the-art AI models into their Dart/Flutter applications. The library provides simple and intuitive methods for making requests to OpenAI's various APIs, including the GPT-3 language model, DALL-E image generation, and more. It is designed to be lightweight and easy to use, enabling developers to focus on building their applications without worrying about the complexities of dealing with HTTP requests. Note that this is an unofficial library as OpenAI does not have an official Dart library.

For similar tasks

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can:

- Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action.

- Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed.

- Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment.

- Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules.

- Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks.

- GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.


ChatGPT-Next-Web-Pro

ChatGPT-Next-Web-Pro is a tool that provides an enhanced version of ChatGPT-Next-Web with additional features and functionalities. It offers complete ChatGPT-Next-Web functionality, file uploading and storage capabilities, drawing and video support, multi-modal support, reverse model support, knowledge base integration, translation, customizations, and more. The tool can be deployed with or without a backend, allowing users to interact with AI models, manage accounts, create models, manage API keys, handle orders, manage memberships, and more. It supports various cloud services like Aliyun OSS, Tencent COS, and Minio for file storage, and integrates with external APIs like Azure, Google Gemini Pro, and Luma. The tool also provides options for customizing website titles, subtitles, icons, and plugin buttons, and offers features like voice input, file uploading, real-time token count display, and more.

bytechef

ByteChef is an open-source, low-code, extendable API integration and workflow automation platform. It provides an intuitive UI Workflow Editor, event-driven & scheduled workflows, multiple flow controls, built-in code editor supporting Java, JavaScript, Python, and Ruby, rich component ecosystem, extendable with custom connectors, AI-ready with built-in AI components, developer-ready to expose workflows as APIs, version control friendly, self-hosted, scalable, and resilient. It allows users to build and visualize workflows, automate tasks across SaaS apps, internal APIs, and databases, and handle millions of workflows with high availability and fault tolerance.

onnx

Open Neural Network Exchange (ONNX) is an open ecosystem that empowers AI developers to choose the right tools as their project evolves. ONNX provides an open source format for AI models, both deep learning and traditional ML. It defines an extensible computation graph model, as well as definitions of built-in operators and standard data types. Currently, we focus on the capabilities needed for inferencing (scoring). ONNX is widely supported and can be found in many frameworks, tools, and hardware, enabling interoperability between different frameworks and streamlining the path from research to production to increase the speed of innovation in the AI community. Join us to further evolve ONNX.

LLaMa2lang

This repository contains convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat towards any language (that isn't English). The rationale behind this is that LLaMa3 is trained on primarily English data and while it works to some extent for other languages, its performance is poor compared to English.

SiriLLama

Siri LLama is an Apple shortcut that allows users to access locally running LLMs through Siri or the shortcut UI on any Apple device connected to the same network as the host machine. It utilizes Langchain and supports open source models from Ollama or Fireworks AI. Users can easily set up and configure the tool to interact with various language models for chat and multimodal tasks. The tool provides a convenient way to leverage the power of language models through Siri or the shortcut interface, enhancing user experience and productivity.

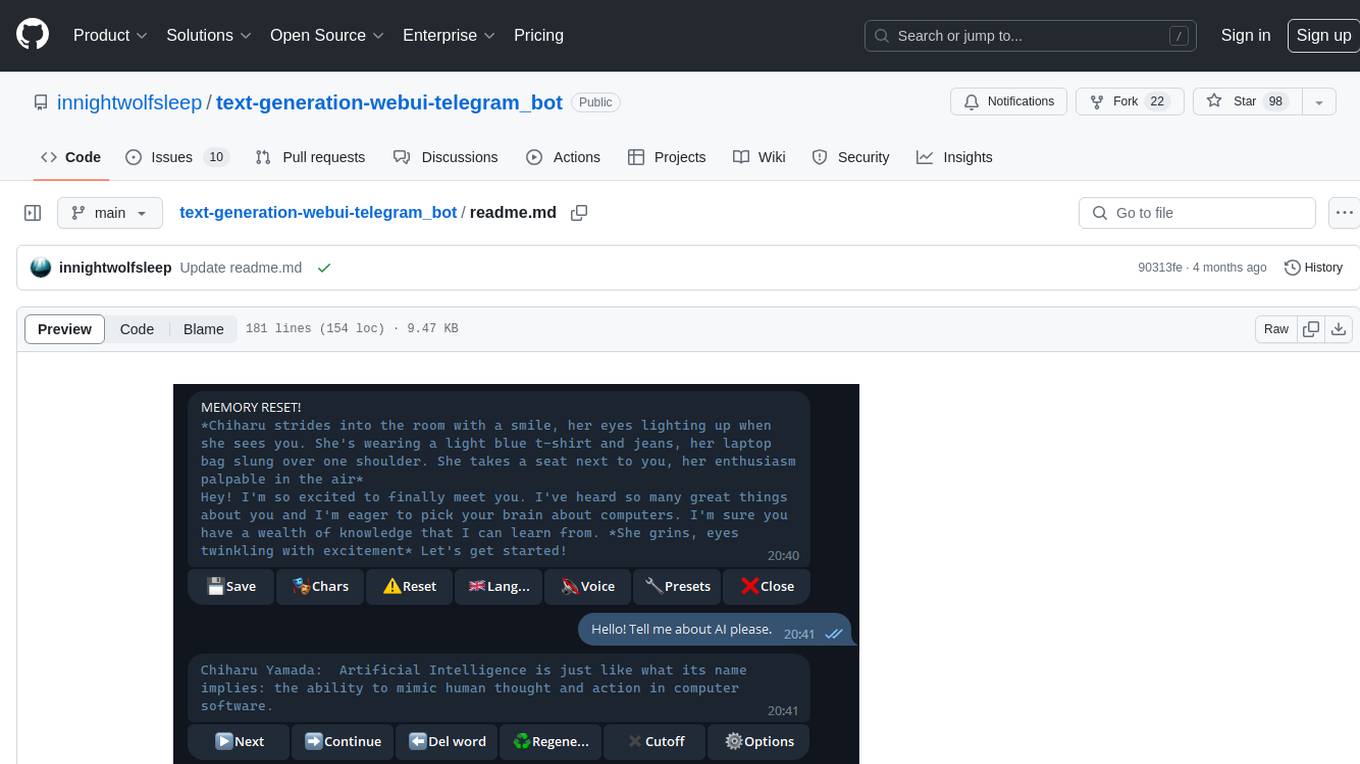

text-generation-webui-telegram_bot

The text-generation-webui-telegram_bot is a wrapper and extension for llama.cpp, exllama, or transformers, providing additional functionality for the oobabooga/text-generation-webui tool. It enhances Telegram chat with features like buttons, prefixes, and voice/image generation. Users can easily install and run the tool as a standalone app or in extension mode, enabling seamless integration with the text-generation-webui tool. The tool offers various features such as chat templates, session history, character loading, model switching during conversation, voice generation, auto-translate, and more. It supports different bot modes for personalized interactions and includes configurations for running in different environments like Google Colab. Additionally, users can customize settings, manage permissions, and utilize various prefixes to enhance the chat experience.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.