Jlama

Jlama is a modern LLM inference engine for Java

Stars: 987

Jlama is a modern Java inference engine for large language models. It supports model families such as Gemma, Llama, Mistral, GPT-2, and BERT, implements features like Paged Attention and Mixture of Experts, and handles several model quantization formats. Built with Java 21 and using the new Vector API for faster inference, Jlama lets you add LLM inference directly to a Java application. It includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

README:

Model Support:

- Gemma & Gemma 2 Models

- Llama & Llama2 & Llama3 Models

- Mistral & Mixtral Models

- Qwen2 Models

- IBM Granite Models

- GPT-2 Models

- BERT Models

- BPE Tokenizers

- WordPiece Tokenizers

Implements:

- Paged Attention

- Mixture of Experts

- Tool Calling

- Generate Embeddings

- Classifier Support

- Huggingface SafeTensors model and tokenizer format

- Support for F32, F16, BF16 types

- Support for Q8, Q4 model quantization

- Fast GEMM operations

- Distributed Inference!
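To make the quantization entries in the list above more concrete, here is a minimal sketch of block-wise symmetric 8-bit quantization. It is not Jlama's actual Q8 or Q4 format: the class name `Q8Sketch`, the block size of 32, and the one-scale-per-block layout are all assumptions chosen for illustration.

```java
/** Illustrative block-wise int8 quantization. NOT Jlama's on-disk or in-memory format. */
final class Q8Sketch {
    // Hypothetical block size, chosen only for this example.
    static final int BLOCK_SIZE = 32;

    /** One signed byte per value plus one float scale per block. */
    static final class Quantized {
        final byte[] values;
        final float[] scales;

        Quantized(byte[] values, float[] scales) {
            this.values = values;
            this.scales = scales;
        }
    }

    static Quantized quantize(float[] x) {
        int blocks = (x.length + BLOCK_SIZE - 1) / BLOCK_SIZE;
        byte[] q = new byte[x.length];
        float[] scales = new float[blocks];
        for (int b = 0; b < blocks; b++) {
            int start = b * BLOCK_SIZE;
            int end = Math.min(start + BLOCK_SIZE, x.length);
            // The largest magnitude in the block maps to +/-127.
            float maxAbs = 0f;
            for (int i = start; i < end; i++) {
                maxAbs = Math.max(maxAbs, Math.abs(x[i]));
            }
            float scale = (maxAbs == 0f) ? 1f : maxAbs / 127f;
            scales[b] = scale;
            for (int i = start; i < end; i++) {
                q[i] = (byte) Math.round(x[i] / scale);
            }
        }
        return new Quantized(q, scales);
    }

    static float[] dequantize(Quantized z) {
        float[] out = new float[z.values.length];
        for (int i = 0; i < out.length; i++) {
            out[i] = z.values[i] * z.scales[i / BLOCK_SIZE];
        }
        return out;
    }

    public static void main(String[] args) {
        float[] weights = {0.0f, 1.0f, -1.0f, 0.25f};
        Quantized z = quantize(weights);
        float[] restored = dequantize(z);
        for (int i = 0; i < weights.length; i++) {
            System.out.println(weights[i] + " -> " + z.values[i] + " -> " + restored[i]);
        }
    }
}
```

Each value is stored in one byte instead of four, at the cost of a rounding error bounded by roughly half the block's scale.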

Jlama requires Java 20 or later and utilizes the new Vector API for faster inference.

Add LLM Inference directly to your Java application.

Jlama includes a command line tool that makes it easy to use.

The CLI can be run with jbang.

#Install jbang (or https://www.jbang.dev/download/)

curl -Ls https://sh.jbang.dev | bash -s - app setup

#Install Jlama CLI (will ask if you trust the source)

jbang app install --force jlama@tjake

Now that you have Jlama installed, you can download a model from Hugging Face and chat with it. Note: I have pre-quantized models available at https://hf.co/tjake

# Run the openai chat api and UI on a model

jlama restapi tjake/Llama-3.2-1B-Instruct-JQ4 --auto-download

Open a browser to http://localhost:8080/
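Because `restapi` serves an OpenAI-style chat API, a Java client can talk to it over plain HTTP as well as through the browser UI. The sketch below uses the JDK's built-in `java.net.http` client. The `/chat/completions` path is an assumption based on the OpenAI convention and is not confirmed by the text above, so check it against the running server; `ChatApiSketch` and its helper methods are hypothetical names.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

/** Hypothetical client sketch for an OpenAI-style chat endpoint. */
final class ChatApiSketch {

    /** Minimal JSON string escaping for quotes, backslashes, and control characters. */
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            if (c == '"') sb.append("\\\"");
            else if (c == '\\') sb.append("\\\\");
            else if (c == '\n') sb.append("\\n");
            else if (c == '\r') sb.append("\\r");
            else if (c == '\t') sb.append("\\t");
            else if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
            else sb.append(c);
        }
        return sb.toString();
    }

    /** Builds an OpenAI-style chat completion body with a single user message. */
    static String buildBody(String model, String userMessage) {
        return "{\"model\":\"" + jsonEscape(model) + "\","
            + "\"messages\":[{\"role\":\"user\",\"content\":\"" + jsonEscape(userMessage) + "\"}]}";
    }

    /** Builds the POST request; the path is passed in because it is an assumption. */
    static HttpRequest buildRequest(String baseUrl, String path, String body) {
        return HttpRequest.newBuilder(URI.create(baseUrl + path))
            .timeout(Duration.ofSeconds(60))
            .header("Content-Type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(body))
            .build();
    }

    public static void main(String[] args) throws InterruptedException {
        String body = buildBody("tjake/Llama-3.2-1B-Instruct-JQ4",
            "What is the best season to plant avocados?");
        HttpRequest request = buildRequest("http://localhost:8080", "/chat/completions", body);
        try {
            HttpResponse<String> response =
                HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode());
            System.out.println(response.body());
        } catch (IOException e) {
            // The server is not running, or the assumed path is wrong.
            System.err.println("Could not reach the server: " + e.getMessage());
        }
    }
}
```

For anything beyond a quick check, a JSON library is a better choice than hand-built strings.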

Usage:

    jlama [COMMAND]

Description:

    Jlama is a modern LLM inference engine for Java!
    Quantized models are maintained at https://hf.co/tjake

Choose from the available commands:

Inference:

    chat                 Interact with the specified model
    restapi              Starts a openai compatible rest api for interacting with this model
    complete             Completes a prompt using the specified model

Distributed Inference:

    cluster-coordinator  Starts a distributed rest api for a model using cluster workers
    cluster-worker       Connects to a cluster coordinator to perform distributed inference

Other:

    download             Downloads a HuggingFace model - use owner/name format
    list                 Lists local models
    quantize             Quantize the specified model
    rm                   Removes local model
    version              Display JLama version information

The main purpose of Jlama is to provide a simple way to use large language models in Java.

The simplest way to embed Jlama in your app is with the Langchain4j Integration.

If you would like to embed Jlama without langchain4j, add the following Maven dependencies to your project:

<dependency>
    <groupId>com.github.tjake</groupId>
    <artifactId>jlama-core</artifactId>
    <version>${jlama.version}</version>
</dependency>

<dependency>
    <groupId>com.github.tjake</groupId>
    <artifactId>jlama-native</artifactId>
    <!-- supports linux-x86_64, macos-x86_64/aarch_64, windows-x86_64
         Use https://github.com/trustin/os-maven-plugin to detect os and arch -->
    <classifier>${os.detected.name}-${os.detected.arch}</classifier>
    <version>${jlama.version}</version>
</dependency>

Jlama uses Java 21 preview features. You can enable the features globally with:

export JDK_JAVA_OPTIONS="--add-modules jdk.incubator.vector --enable-preview"

or enable the preview features by configuring the Maven compiler and failsafe plugins.
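As a rough sketch of the Maven route, the fragment below passes the same two flags to the compiler and to the JVM that runs integration tests. It is not taken from the Jlama build: the `<release>21</release>` value is an assumption (`--enable-preview` has to be paired with a release that matches your JDK), and plugin versions are left to your own build.

```xml
<build>
  <plugins>
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-compiler-plugin</artifactId>
      <configuration>
        <!-- Assumption: match this to the JDK you build with -->
        <release>21</release>
        <compilerArgs>
          <arg>--add-modules</arg>
          <arg>jdk.incubator.vector</arg>
          <arg>--enable-preview</arg>
        </compilerArgs>
      </configuration>
    </plugin>
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-failsafe-plugin</artifactId>
      <configuration>
        <!-- Flags for the JVM that executes the integration tests -->
        <argLine>--add-modules jdk.incubator.vector --enable-preview</argLine>
      </configuration>
    </plugin>
  </plugins>
</build>
```

If you run unit tests with the surefire plugin, it would likely need the same `argLine`.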

Then you can use the Model classes to run models:

public void sample() throws IOException {
    String model = "tjake/Llama-3.2-1B-Instruct-JQ4";
    String workingDirectory = "./models";

    String prompt = "What is the best season to plant avocados?";

    // Downloads the model or just returns the local path if it's already downloaded
    File localModelPath = new Downloader(workingDirectory, model).huggingFaceModel();

    // Loads the quantized model and specifies the use of quantized memory
    AbstractModel m = ModelSupport.loadModel(localModelPath, DType.F32, DType.I8);

    PromptContext ctx;
    // Checks if the model supports chat prompting and adds the prompt in the expected format for this model
    if (m.promptSupport().isPresent()) {
        ctx = m.promptSupport()
                .get()
                .builder()
                .addSystemMessage("You are a helpful chatbot who writes short responses.")
                .addUserMessage(prompt)
                .build();
    } else {
        ctx = PromptContext.of(prompt);
    }

    System.out.println("Prompt: " + ctx.getPrompt() + "\n");

    // Generates a response to the prompt and prints it
    // The API allows for streaming or non-streaming responses
    // The response is generated with a temperature of 0.0 and a max token length of 256
    Generator.Response r = m.generate(UUID.randomUUID(), ctx, 0.0f, 256, (s, f) -> {});
    System.out.println(r.responseText);
}

Or you can use a Builder API:

public void sample() throws IOException {
    String model = "tjake/Llama-3.2-1B-Instruct-JQ4";
    String workingDirectory = "./models";

    String prompt = "What is the best season to plant avocados?";

    // Downloads the model or just returns the local path if it's already downloaded
    File localModelPath = new Downloader(workingDirectory, model).huggingFaceModel();

    // Loads the quantized model and specifies the use of quantized memory
    AbstractModel m = ModelSupport.loadModel(localModelPath, DType.F32, DType.I8);

    PromptContext ctx;
    // Checks if the model supports chat prompting and adds the prompt in the expected format for this model
    if (m.promptSupport().isPresent()) {
        ctx = m.promptSupport()
                .get()
                .builder()
                .addSystemMessage("You are a helpful chatbot who writes short responses.")
                .addUserMessage(prompt)
                .build();
    } else {
        ctx = PromptContext.of(prompt);
    }

    System.out.println("Prompt: " + ctx.getPrompt() + "\n");

    // Generates a response to the prompt and prints it
    // The API allows for streaming or non-streaming responses
    // The response is generated with a temperature of 0.0 and a max token length of 256
    Generator.Response r = m.generateBuilder()
            .session(UUID.randomUUID()) // By default, UUID.randomUUID()
            .promptContext(ctx) // Required, or use prompt(String text)
            .ntokens(256) // By default, 256
            .temperature(0.0f) // By default, 0.0f
            .onTokenWithTimings((s, aFloat) -> {}) // By default, (s, aFloat) -> {}, which does nothing
            .generate();

    System.out.println(r.responseText);
}

You can simplify promptSupport using:

public void sample() throws IOException {
    String model = "tjake/Llama-3.2-1B-Instruct-JQ4";
    String workingDirectory = "./models";

    String prompt = "What is the best season to plant avocados?";

    // Downloads the model or just returns the local path if it's already downloaded
    File localModelPath = new Downloader(workingDirectory, model).huggingFaceModel();

    // Loads the quantized model and specifies the use of quantized memory
    AbstractModel m = ModelSupport.loadModel(localModelPath, DType.F32, DType.I8);

    var systemPrompt = "You are a helpful chatbot who writes short responses.";

    // build() creates a PromptContext; if the model doesn't support chat prompts,
    // a simple PromptContext object is created instead
    PromptContext ctx = m.prompt()
            .addUserMessage(prompt)
            .addSystemMessage(systemPrompt)
            .build();

    System.out.println("Prompt: " + ctx.getPrompt() + "\n");

    // Generates a response to the prompt and prints it
    // The API allows for streaming or non-streaming responses
    // The response is generated with a temperature of 0.0 and a max token length of 256
    Generator.Response r = m.generateBuilder()
            .session(UUID.randomUUID()) // By default, UUID.randomUUID()
            .promptContext(ctx) // Required, or use prompt(String text)
            .ntokens(256) // By default, 256
            .temperature(0.0f) // By default, 0.0f
            .onTokenWithTimings((s, aFloat) -> {}) // By default, (s, aFloat) -> {}, which does nothing
            .generate();

    System.out.println(r.responseText);
}

If you like or are using this project to build your own, please give us a star. It's a free way to show your support.

Roadmap:

- Support more and more models

- Add pure java tokenizers

- Support Quantization (e.g. k-quantization)

- Add LoRA support

- GraalVM support

- Add distributed inference

The code is available under the Apache License.

If you find this project helpful in your research, please cite this work as:

@misc{jlama2024,
    title = {Jlama: A modern Java inference engine for large language models},
    url = {https://github.com/tjake/jlama},
    author = {T Jake Luciani},
    month = {January},
    year = {2024}
}


Similar Open Source Tools


simple-openai

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

IntelliNode

IntelliNode is a javascript module that integrates cutting-edge AI models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable diffusion into projects. It offers functions for generating text, speech, and images, as well as semantic search, multi-model evaluation, and chatbot capabilities. The module provides a wrapper layer for low-level model access, a controller layer for unified input handling, and a function layer for abstract functionality tailored to various use cases.

UniChat

UniChat is a pipeline tool for creating online and offline chat-bots in Unity. It leverages Unity.Sentis and text vector embedding technology to enable offline mode text content search based on vector databases. The tool includes a chain toolkit for embedding LLM and Agent in games, along with middleware components for Text to Speech, Speech to Text, and Sub-classifier functionalities. UniChat also offers a tool for invoking tools based on ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. The tool provides a comprehensive solution for designing flexible conversations in games while maintaining developer's ideas.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

generative-ai

The 'Generative AI' repository provides a C# library for interacting with Google's Generative AI models, specifically the Gemini models. It allows users to access and integrate the Gemini API into .NET applications, supporting functionalities such as listing available models, generating content, creating tuned models, working with large files, starting chat sessions, and more. The repository also includes helper classes and enums for Gemini API aspects. Authentication methods include API key, OAuth, and various authentication modes for Google AI and Vertex AI. The package offers features for both Google AI Studio and Google Cloud Vertex AI, with detailed instructions on installation, usage, and troubleshooting.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

LlmTornado

LLM Tornado is a .NET library designed to simplify the consumption of various large language models (LLMs) from providers like OpenAI, Anthropic, Cohere, Google, Azure, Groq, and self-hosted APIs. It acts as an aggregator, allowing users to easily switch between different LLM providers with just a change in argument. Users can perform tasks such as chatting with documents, voice calling with AI, orchestrating assistants, generating images, and more. The library exposes capabilities through vendor extensions, making it easy to integrate and use multiple LLM providers simultaneously.

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

Google_GenerativeAI

Google GenerativeAI (Gemini) is an unofficial C# .NET SDK based on REST APIs for accessing Google Gemini models. It offers a complete rewrite of the previous SDK with improved performance, flexibility, and ease of use. The SDK integrates with LangChain.net, providing easy methods for JSON-based interactions and function calling with Google Gemini models. Its features include enhanced JSON mode handling, function calling with a code generator, multimodal functionality, Vertex AI support, a multimodal live API, image generation and captioning, retrieval-augmented generation with Vertex RAG Engine and Google AQA, and more.

chromem-go

chromem-go is an embeddable vector database for Go with a Chroma-like interface and zero third-party dependencies. It enables retrieval augmented generation (RAG) and similar embeddings-based features in Go apps without the need for a separate database. The focus is on simplicity and performance for common use cases, allowing querying of documents with minimal memory allocations. The project is in beta and may introduce breaking changes before v1.0.0.

rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides PyTorch- and Python-first, low- and high-level abstractions for RL that are intended to be efficient, modular, documented, and properly tested. The code is aimed at supporting research in RL. Most of it is written in Python in a highly modular way, so that researchers can easily swap components, transform them, or write new ones with little effort.

mcpdotnet

mcpdotnet is a .NET implementation of the Model Context Protocol (MCP), facilitating connections and interactions between .NET applications and MCP clients and servers. It aims to provide a clean, specification-compliant implementation with support for various MCP capabilities and transport types. The library includes features such as async/await pattern, logging support, and compatibility with .NET 8.0 and later. Users can create clients to use tools from configured servers and also create servers to register tools and interact with clients. The project roadmap includes expanding documentation, increasing test coverage, adding samples, performance optimization, SSE server support, and authentication.

zeta

Zeta is a tool designed to build state-of-the-art AI models faster by providing modular, high-performance, and scalable building blocks. It addresses the common issues faced while working with neural nets, such as chaotic codebases, lack of modularity, and low performance modules. Zeta emphasizes usability, modularity, and performance, and is currently used in hundreds of models across various GitHub repositories. It enables users to prototype, train, optimize, and deploy the latest SOTA neural nets into production. The tool offers various modules like FlashAttention, SwiGLUStacked, RelativePositionBias, FeedForward, BitLinear, PalmE, Unet, VisionEmbeddings, niva, FusedDenseGELUDense, FusedDropoutLayerNorm, MambaBlock, Film, hyper_optimize, DPO, and ZetaCloud for different tasks in AI model development.

uzu-swift

Swift package for uzu, a high-performance inference engine for AI models on Apple Silicon. Deploy AI directly in your app with zero latency, full data privacy, and no inference costs. Key features include a simple, high-level API, specialized configurations for performance boosts, broad model support, and an observable model manager. Easily set up projects, obtain an API key, choose a model, and run it with corresponding identifiers. Examples include chat, speedup with speculative decoding, chat with dynamic context, chat with static context, summarization, classification, cloud, and structured output. Troubleshooting available via Discord or email. Licensed under MIT.

axar

AXAR AI is a lightweight framework designed for building production-ready agentic applications using TypeScript. It aims to simplify the process of creating robust, production-grade LLM-powered apps by focusing on familiar coding practices without unnecessary abstractions or steep learning curves. The framework provides structured, typed inputs and outputs, familiar and intuitive patterns like dependency injection and decorators, explicit control over agent behavior, real-time logging and monitoring tools, minimalistic design with little overhead, model agnostic compatibility with various AI models, and streamed outputs for fast and accurate results. AXAR AI is ideal for developers working on real-world AI applications who want a tool that gets out of the way and allows them to focus on shipping reliable software.

For similar tasks

Jlama

Jlama is a modern Java inference engine designed for large language models. It supports various model types such as Gemma, Llama, Mistral, GPT-2, BERT, and more. The tool implements features like Flash Attention, Mixture of Experts, and supports different model quantization formats. Built with Java 21 and utilizing the new Vector API for faster inference, Jlama allows users to add LLM inference directly to their Java applications. The tool includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

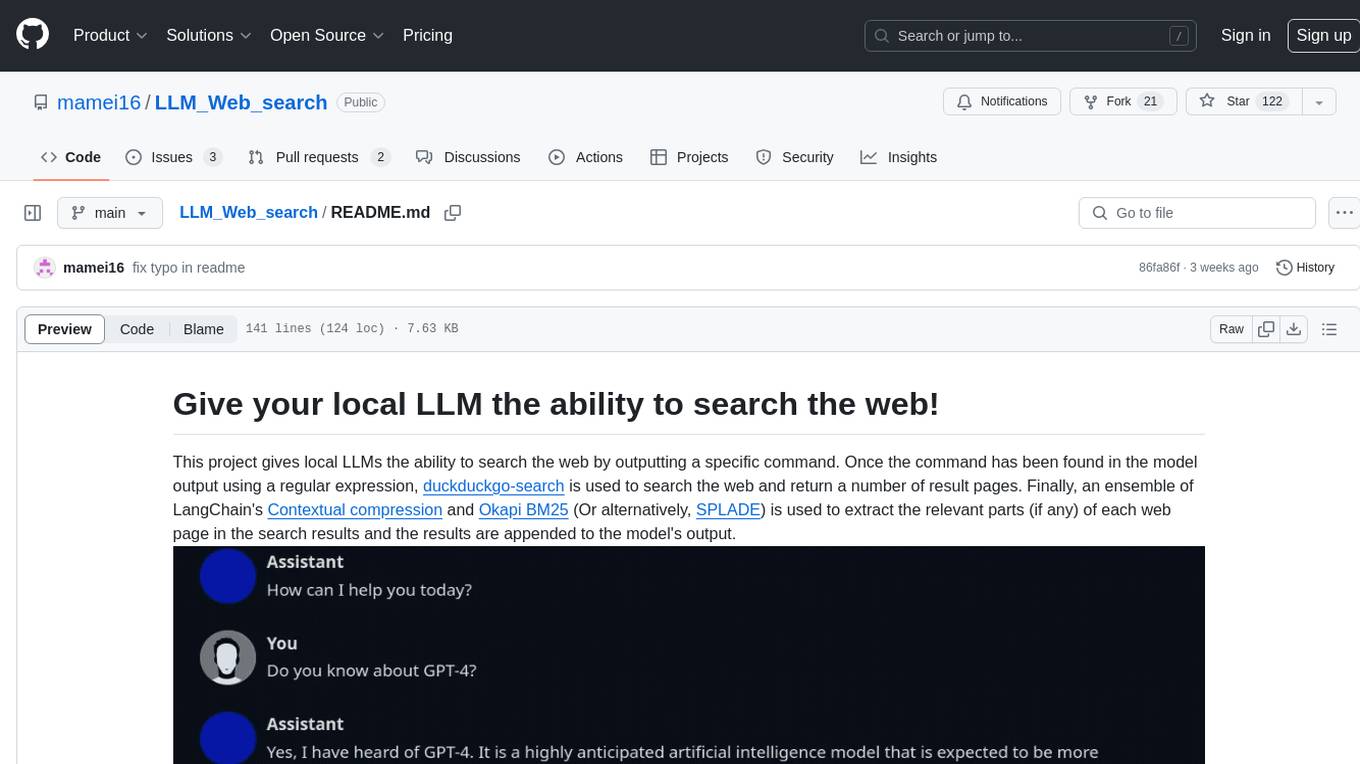

LLM_Web_search

LLM_Web_search project gives local LLMs the ability to search the web by outputting a specific command. It uses regular expressions to extract search queries from model output and then utilizes duckduckgo-search to search the web. LangChain's Contextual compression and Okapi BM25 or SPLADE are used to extract relevant parts of web pages in search results. The extracted results are appended to the model's output.

node-llama-cpp

node-llama-cpp is a tool that allows users to run AI models locally on their machines. It provides pre-built bindings with the option to build from source using cmake. Users can interact with text generation models, chat with models using a chat wrapper, and force models to generate output in a parseable format like JSON. The tool supports Metal and CUDA, offers CLI functionality for chatting with models without coding, and ensures up-to-date compatibility with the latest version of llama.cpp. Installation includes pre-built binaries for macOS, Linux, and Windows, with the option to build from source if binaries are not available for the platform.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

elmer

Elmer is a user-friendly wrapper over common APIs for calling LLMs, with support for streaming and easy registration and calling of R functions. Users can interact with Elmer in various ways, such as an interactive chat console, interactive method calls, programmatic chat, and streaming results. Elmer also supports async usage for running multiple chat sessions concurrently, which is useful for Shiny applications. The tool calling feature allows users to define external tools that Elmer can request to execute, enhancing the capabilities of the chat model.

mlx-lm

MLX LM is a Python package designed for generating text and fine-tuning large language models on Apple silicon using MLX. It offers integration with the Hugging Face Hub for easy access to thousands of LLMs, support for quantizing and uploading models to the Hub, low-rank and full model fine-tuning capabilities, and distributed inference and fine-tuning with `mx.distributed`. Users can interact with the package through command line options or the Python API, enabling tasks such as text generation, chatting with language models, model conversion, streaming generation, and sampling. MLX LM supports various Hugging Face models and provides tools for efficient scaling to long prompts and generations, including a rotating key-value cache and prompt caching. It requires macOS 15.0 or higher for optimal performance.

mini-sglang

Mini-SGLang is a lightweight yet high-performance inference framework for Large Language Models. With a compact codebase of ~5,000 lines of Python, it serves as both a capable inference engine and a transparent reference for researchers and developers. It achieves state-of-the-art throughput and latency with advanced optimizations such as Radix Cache, Chunked Prefill, Overlap Scheduling, Tensor Parallelism, and Optimized Kernels integrating FlashAttention and FlashInfer for maximum efficiency. Mini-SGLang is designed to demystify the complexities of modern LLM serving systems, providing a clean, modular, and fully type-annotated codebase that is easy to understand and modify.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.