simple-openai

A Java library to use the OpenAI API in the simplest possible way.

Stars: 289

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

README:


- Description

- Supported Services

- Installation

- How to Use

- Creating a SimpleOpenAI Object

- Using HttpClient or OkHttp

- Using Realtime Feature

- Audio Example

- Image Example

- Chat Completion Example

- Chat Completion with Streaming Example

- Chat Completion with Functions Example

- Chat Completion with Vision Example

- Chat Completion with Audio Example

- Chat Completion with Structured Outputs

- Chat Completion Conversation Example

- Assistant v2 Conversation Example

- Realtime Conversation Example

- Exception Handling

- Retrying Requests

- Instructions for Android

- Support for OpenAI-compatible API Providers

- Run Examples

- Contributing

- License

- Who is Using Simple-OpenAI?

- Show Us Your Love

Simple-OpenAI is a Java HTTP client library for sending requests to and receiving responses from the OpenAI API. It exposes a consistent interface across all the services that is as simple as what you can find in other languages like Python or Node.js. It is an unofficial library.

Simple-OpenAI uses the CleverClient library for HTTP communication, Jackson for JSON parsing, and Lombok to minimize boilerplate code, among other libraries.

Simple-OpenAI seeks to stay up to date with the most recent changes in OpenAI. Currently, it supports most of the existing features, and it will continue to be updated with future changes.

Full support for most of the OpenAI services:

- Audio (Speech, Transcription, Translation)

- Batch (Batches of Chat Completion)

- Chat Completion (Text Generation, Streaming, Function Calling, Vision, Structured Outputs, Audio)

- Completion (Legacy Text Generation)

- Embedding (Vectoring Text)

- Files (Upload Files)

- Fine Tuning (Customize Models)

- Image (Generate, Edit, Variation)

- Models (List)

- Moderation (Check Harmful Text)

- Realtime Beta (Speech-to-Speech Conversation, Multimodality, Function Calling)

- Session Token (Create Ephemeral Tokens)

- Upload (Upload Large Files in Parts)

- Assistants Beta v2 (Assistants, Threads, Messages, Runs, Steps, Vector Stores, Streaming, Function Calling, Vision, Structured Outputs)

NOTES:

- The methods' responses are CompletableFuture<ResponseObject>, which means they are asynchronous, but you can call the join() method to wait for the result value and return it when complete.

- The exceptions to the above point are the methods whose names end with the suffix AndPoll(). These methods are synchronous and block until a Predicate function that you provide returns false.
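The two calling styles in the notes above can be illustrated with the JDK alone. In this sketch, fakeServiceCall and pollWhile are hypothetical stand-ins, not simple-openai methods: the first returns a CompletableFuture the way the service methods do, and the second is only an assumption about how an ...AndPoll() method behaves from the caller's side (it keeps fetching while the predicate returns true and returns once it returns false).

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Predicate;
import java.util.function.Supplier;

class AsyncPatternsSketch {

    // Hypothetical stand-in for a service method that returns CompletableFuture<ResponseObject>.
    static CompletableFuture<String> fakeServiceCall(String input) {
        return CompletableFuture.supplyAsync(() -> "echo: " + input);
    }

    // Hypothetical polling helper: fetch, then keep fetching while keepPolling returns true.
    // Returns the first value for which keepPolling returns false.
    static <T> T pollWhile(Supplier<T> fetch, Predicate<T> keepPolling, long intervalMs) {
        T value = fetch.get();
        while (keepPolling.test(value)) {
            try {
                Thread.sleep(intervalMs);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Polling was interrupted", e);
            }
            value = fetch.get();
        }
        return value;
    }

    public static void main(String[] args) {
        // Non-blocking: attach a transformation that runs when the result arrives.
        CompletableFuture<Integer> length = fakeServiceCall("hi").thenApply(String::length);

        // Blocking: join() waits for the result (and throws CompletionException on failure).
        System.out.println(fakeServiceCall("hello").join()); // echo: hello
        System.out.println(length.join()); // 8

        // Simulated job whose status becomes "completed" on the third fetch.
        AtomicInteger fetches = new AtomicInteger();
        String status = pollWhile(
                () -> fetches.incrementAndGet() < 3 ? "in_progress" : "completed",
                s -> !s.equals("completed"),
                10);
        System.out.println(status + " after " + fetches.get() + " fetches"); // completed after 3 fetches
    }
}
```

The blocking loop is the price of a synchronous API: the calling thread sleeps between fetches, so such calls are best kept off threads that must stay responsive.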

You can install Simple-OpenAI by adding the following dependencies to your Maven project:

<dependency>

<groupId>io.github.sashirestela</groupId>

<artifactId>simple-openai</artifactId>

<version>[simple-openai_latest_version]</version>

</dependency>

<!-- OkHttp dependency is optional if you decide to use it with simple-openai -->

<dependency>

<groupId>com.squareup.okhttp3</groupId>

<artifactId>okhttp</artifactId>

<version>[okhttp_latest_version]</version>

</dependency>

Or alternatively, using Gradle:

dependencies {

implementation 'io.github.sashirestela:simple-openai:[simple-openai_latest_version]'

/* OkHttp dependency is optional if you decide to use it with simple-openai */

implementation 'com.squareup.okhttp3:okhttp:[okhttp_latest_version]'

}

Note that you need Java 11 or greater.

This is the first step you need to take before using the services. You must provide at least your OpenAI API key (see here for more details). In the following example, we get the API key from an environment variable called OPENAI_API_KEY, which we created to store it:

var openAI = SimpleOpenAI.builder()

.apiKey(System.getenv("OPENAI_API_KEY"))

.build();

Optionally, you can pass your OpenAI organization ID if you belong to multiple organizations and want to identify usage by organization, and/or your OpenAI project ID if you want to provide access to a single project. In the following example, we read those IDs from environment variables:

var openAI = SimpleOpenAI.builder()

.apiKey(System.getenv("OPENAI_API_KEY"))

.organizationId(System.getenv("OPENAI_ORGANIZATION_ID"))

.projectId(System.getenv("OPENAI_PROJECT_ID"))

.build();

After you have created a SimpleOpenAI object, you are ready to call its services to communicate with the OpenAI API.
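Since the examples above read credentials with System.getenv, a missing variable is easier to diagnose when it is detected up front. The sketch below uses a hypothetical OpenAIEnv helper (not part of simple-openai) that fails fast when OPENAI_API_KEY is absent and treats the organization and project IDs as optional; the resulting values are what you would pass to apiKey, organizationId and projectId on the builder.

```java
import java.util.Map;
import java.util.Optional;

class OpenAIEnv {

    // Returns the variable's value, or fails fast with a clear message if it is missing or blank.
    static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException("Environment variable " + name + " is not set");
        }
        return value;
    }

    // Returns the variable's value if present; blank values are treated as absent.
    static Optional<String> optional(Map<String, String> env, String name) {
        return Optional.ofNullable(env.get(name)).filter(value -> !value.isBlank());
    }

    public static void main(String[] args) {
        Map<String, String> env = System.getenv();
        String apiKey = require(env, "OPENAI_API_KEY");
        Optional<String> organizationId = optional(env, "OPENAI_ORGANIZATION_ID");
        Optional<String> projectId = optional(env, "OPENAI_PROJECT_ID");
        // These values would go to SimpleOpenAI.builder().apiKey(...), and to
        // .organizationId(...) / .projectId(...) only when they are present.
        System.out.println("API key loaded: " + !apiKey.isEmpty()
                + ", organization ID set: " + organizationId.isPresent()
                + ", project ID set: " + projectId.isPresent());
    }
}
```

Taking the environment as a Map parameter, rather than calling System.getenv inside the helpers, keeps them easy to test with Map.of(...).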

Simple-OpenAI uses one of the following HTTP client components: Java's HttpClient (by default) or Square's OkHttp (by adding its dependency). You can use the clientAdapter attribute to indicate which one to use. In the following example, we provide a custom Java HttpClient:

var httpClient = HttpClient.newBuilder()

.version(Version.HTTP_1_1)

.followRedirects(Redirect.NORMAL)

.connectTimeout(Duration.ofSeconds(20))

.executor(Executors.newFixedThreadPool(3))

.proxy(ProxySelector.of(new InetSocketAddress("proxy.example.com", 80)))

.build();

var openAI = SimpleOpenAI.builder()

.apiKey(System.getenv("OPENAI_API_KEY"))

.clientAdapter(new JavaHttpClientAdapter(httpClient)) // To use a custom Java HttpClient

//.clientAdapter(new JavaHttpClientAdapter()) // To use a default Java HttpClient

//.clientAdapter(new OkHttpClientAdapter(okHttpClient)) // To use a custom OkHttpClient

//.clientAdapter(new OkHttpClientAdapter()) // To use a default OkHttpClient

.build();

If you want to use the Realtime feature, you need to set the realtimeConfig attribute. For this feature, you set another HTTP client (similar to clientAdapter) for WebSocket communication: Java's HttpClient (by default) or Square's OkHttp.

var openAI = SimpleOpenAI.builder()

.apiKey(System.getenv("OPENAI_API_KEY"))

// -- To use a default Java HttpClient for WebSocket

.realtimeConfig(RealtimeConfig.of("model"))

// -- To use a default Java HttpClient for WebSocket (explicit form)

//.realtimeConfig(RealtimeConfig.of("model", new JavaHttpWebSocketAdapter()))

// -- To use a custom Java HttpClient for WebSocket

//.realtimeConfig(RealtimeConfig.of("model", new JavaHttpWebSocketAdapter(httpClient)))

// -- To use a default OkHttpClient for WebSocket

//.realtimeConfig(RealtimeConfig.of("model", new OkHttpWebSocketAdapter()))

// -- To use a custom OkHttpClient for WebSocket

//.realtimeConfig(RealtimeConfig.of("model", new OkHttpWebSocketAdapter(okHttpClient)))

.build();

Example of calling the Audio service to turn text into speech. We request the audio in binary format (InputStream):

var speechRequest = SpeechRequest.builder()

.model("tts-1")

.input("Hello world, welcome to the AI universe!")

.voice(Voice.ALLOY)

.responseFormat(SpeechResponseFormat.MP3)

.speed(1.0)

.build();

var futureSpeech = openAI.audios().speak(speechRequest);

var speechResponse = futureSpeech.join();

var speechFileName = "speech.mp3"; // Hypothetical output path, use any file name

// try-with-resources closes both the response stream and the file, even if writing fails

try (var audioStream = speechResponse; var audioFile = new FileOutputStream(speechFileName)) {

audioFile.write(audioStream.readAllBytes());

System.out.println(audioFile.getChannel().size() + " bytes");

} catch (IOException e) {

e.printStackTrace();

}

Example of calling the Audio service to transcribe audio into text. We request the transcription in verbose JSON format, with both word-level and segment-level timestamps:

var audioRequest = TranscriptionRequest.builder()

.file(Paths.get("hello_audio.mp3"))

.model("whisper-1")

.responseFormat(AudioResponseFormat.VERBOSE_JSON)

.temperature(0.2)

.timestampGranularity(TimestampGranularity.WORD)

.timestampGranularity(TimestampGranularity.SEGMENT)

.build();

var futureAudio = openAI.audios().transcribe(audioRequest);

var audioResponse = futureAudio.join();

System.out.println(audioResponse);

Example of calling the Image service to generate two images in response to a prompt. We request the images' URLs and print them to the console:

var imageRequest = ImageRequest.builder()

.prompt("A cartoon of a hummingbird that is flying around a flower.")

.n(2)

.size(Size.X256)

.responseFormat(ImageResponseFormat.URL)

.model("dall-e-2")

.build();

var futureImage = openAI.images().create(imageRequest);

var imageResponse = futureImage.join();

imageResponse.stream().forEach(img -> System.out.println("\n" + img.getUrl()));

Example of calling the Chat Completion service to ask a question and wait for the full answer, which we print to the console:

var chatRequest = ChatRequest.builder()

.model("gpt-4o-mini")

.message(SystemMessage.of("You are an expert in AI."))

.message(UserMessage.of("Write a technical article about ChatGPT, no more than 100 words."))

.temperature(0.0)

.maxCompletionTokens(300)

.build();

var futureChat = openAI.chatCompletions().create(chatRequest);

var chatResponse = futureChat.join();

System.out.println(chatResponse.firstContent());

Example of calling the Chat Completion service to ask a question and receive the answer as partial message deltas, which we print to the console as each delta arrives:

var chatRequest = ChatRequest.builder()

.model("gpt-4o-mini")

.message(SystemMessage.of("You are an expert in AI."))

.message(UserMessage.of("Write a technical article about ChatGPT, no more than 100 words."))

.temperature(0.0)

.maxCompletionTokens(300)

.build();

var futureChat = openAI.chatCompletions().createStream(chatRequest);

var chatResponse = futureChat.join();

chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)

.map(Chat::firstContent)

.forEach(System.out::print);

System.out.println();

Function calling lets the Chat Completion service solve problems specific to your context. In this example, we define three functions and enter a prompt that requires calling one of them (the product function). Each function is backed by a class that implements the Functional interface: the class declares one field per function argument, annotated to describe it, and overrides the execute method with the function's logic. We use the FunctionExecutor utility class to enroll the functions and to execute the function that the model selects in the openAI.chatCompletions() response:

public void demoCallChatWithFunctions() {

var functionExecutor = new FunctionExecutor();

functionExecutor.enrollFunction(

FunctionDef.builder()

.name("get_weather")

.description("Get the current weather of a location")

.functionalClass(Weather.class)

.strict(Boolean.TRUE)

.build());

functionExecutor.enrollFunction(

FunctionDef.builder()

.name("product")

.description("Get the product of two numbers")

.functionalClass(Product.class)

.strict(Boolean.TRUE)

.build());

functionExecutor.enrollFunction(

FunctionDef.builder()

.name("run_alarm")

.description("Run an alarm")

.functionalClass(RunAlarm.class)

.strict(Boolean.TRUE)

.build());

var messages = new ArrayList<ChatMessage>();

messages.add(UserMessage.of("What is the product of 123 and 456?"));

var chatRequest = ChatRequest.builder()

.model("gpt-4o-mini")

.messages(messages)

.tools(functionExecutor.getToolFunctions())

.build();

var futureChat = openAI.chatCompletions().create(chatRequest);

var chatResponse = futureChat.join();

var chatMessage = chatResponse.firstMessage();

var chatToolCall = chatMessage.getToolCalls().get(0);

var result = functionExecutor.execute(chatToolCall.getFunction());

messages.add(chatMessage);

messages.add(ToolMessage.of(result.toString(), chatToolCall.getId()));

chatRequest = ChatRequest.builder()

.model("gpt-4o-mini")

.messages(messages)

.tools(functionExecutor.getToolFunctions())

.build();

futureChat = openAI.chatCompletions().create(chatRequest);

chatResponse = futureChat.join();

System.out.println(chatResponse.firstContent());

}

public static class Weather implements Functional {

@JsonPropertyDescription("City and state, for example: León, Guanajuato")

@JsonProperty(required = true)

public String location;

@JsonPropertyDescription("The temperature unit, can be 'celsius' or 'fahrenheit'")

@JsonProperty(required = true)

public String unit;

@Override

public Object execute() {

return Math.random() * 45;

}

}

public static class Product implements Functional {

@JsonPropertyDescription("The multiplicand part of a product")

@JsonProperty(required = true)

public double multiplicand;

@JsonPropertyDescription("The multiplier part of a product")

@JsonProperty(required = true)

public double multiplier;

@Override

public Object execute() {

return multiplicand * multiplier;

}

}

public static class RunAlarm implements Functional {

@Override

public Object execute() {

return "DONE";

}

}

Example of calling the Chat Completion service so that the model can take in external images and answer questions about them:

var chatRequest = ChatRequest.builder()

.model("gpt-4o-mini")

.messages(List.of(

UserMessage.of(List.of(

ContentPartText.of(

"What do you see in the image? Give in details in no more than 100 words."),

ContentPartImageUrl.of(ImageUrl.of(

"https://upload.wikimedia.org/wikipedia/commons/e/eb/Machu_Picchu%2C_Peru.jpg"))))))

.temperature(0.0)

.maxCompletionTokens(500)

.build();

var chatResponse = openAI.chatCompletions().createStream(chatRequest).join();

chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)

.map(Chat::firstContent)

.forEach(System.out::print);

System.out.println();

Example of calling the Chat Completion service so that the model can take in local images and answer questions about them (check the Base64Util class in this repository):

var chatRequest = ChatRequest.builder()

.model("gpt-4o-mini")

.messages(List.of(

UserMessage.of(List.of(

ContentPartText.of(

"What do you see in the image? Give in details in no more than 100 words."),

ContentPartImageUrl.of(ImageUrl.of(

Base64Util.encode("src/demo/resources/machupicchu.jpg", MediaType.IMAGE)))))))

.temperature(0.0)

.maxCompletionTokens(500)

.build();

var chatResponse = openAI.chatCompletions().createStream(chatRequest).join();

chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)

.map(Chat::firstContent)

.forEach(System.out::print);

System.out.println();

Example of calling the Chat Completion service to generate a spoken audio response to a prompt, using audio inputs to prompt the model (check the Base64Util class in this repository):

var messages = new ArrayList<ChatMessage>();

messages.add(SystemMessage.of("Respond in a short and concise way."));

messages.add(UserMessage.of(List.of(ContentPartInputAudio.of(InputAudio.of(

Base64Util.encode("src/demo/resources/question1.mp3", null), InputAudioFormat.MP3)))));

var chatRequest = ChatRequest.builder()

.model("gpt-4o-audio-preview")

.modality(Modality.TEXT)

.modality(Modality.AUDIO)

.audio(Audio.of(Voice.ALLOY, AudioFormat.MP3))

.messages(messages)

.build();

var chatResponse = openAI.chatCompletions().create(chatRequest).join();

var audio = chatResponse.firstMessage().getAudio();

Base64Util.decode(audio.getData(), "src/demo/resources/answer1.mp3");

System.out.println("Answer 1: " + audio.getTranscript());

messages.add(AssistantMessage.builder().audioId(audio.getId()).build());

messages.add(UserMessage.of(List.of(ContentPartInputAudio.of(InputAudio.of(

Base64Util.encode("src/demo/resources/question2.mp3", null), InputAudioFormat.MP3)))));

chatRequest = ChatRequest.builder()

.model("gpt-4o-audio-preview")

.modality(Modality.TEXT)

.modality(Modality.AUDIO)

.audio(Audio.of(Voice.ALLOY, AudioFormat.MP3))

.messages(messages)

.build();

chatResponse = openAI.chatCompletions().create(chatRequest).join();

audio = chatResponse.firstMessage().getAudio();

Base64Util.decode(audio.getData(), "src/demo/resources/answer2.mp3");

System.out.println("Answer 2: " + audio.getTranscript());

Example of calling the Chat Completion service so that the model always generates responses that adhere to a JSON Schema defined through Java classes:

public void demoCallChatWithStructuredOutputs() {

var chatRequest = ChatRequest.builder()

.model("gpt-4o-mini")

.message(SystemMessage

.of("You are a helpful math tutor. Guide the user through the solution step by step."))

.message(UserMessage.of("How can I solve 8x + 7 = -23"))

.responseFormat(ResponseFormat.jsonSchema(JsonSchema.builder()

.name("MathReasoning")

.schemaClass(MathReasoning.class)

.build()))

.build();

var chatResponse = openAI.chatCompletions().createStream(chatRequest).join();

chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)

.map(Chat::firstContent)

.forEach(System.out::print);

System.out.println();

}

public static class MathReasoning {

public List<Step> steps;

public String finalAnswer;

public static class Step {

public String explanation;

public String output;

}

}

This example simulates a chat conversation in the command console and demonstrates the use of Chat Completion with streaming and function calling.

You can see the full demo code as well as the results from running the demo code:

Demo Results

Welcome! Write any message: Hi, can you help me with some quetions about Lima, Peru?

Of course! What would you like to know about Lima, Peru?

Write any message (or write 'exit' to finish): Tell me something brief about Lima Peru, then tell me how's the weather there right now. Finally give me three tips to travel there.

### Brief About Lima, Peru

Lima, the capital city of Peru, is a bustling metropolis that blends modernity with rich historical heritage. Founded by Spanish conquistador Francisco Pizarro in 1535, Lima is known for its colonial architecture, vibrant culture, and delicious cuisine, particularly its world-renowned ceviche. The city is also a gateway to exploring Peru's diverse landscapes, from the coastal deserts to the Andean highlands and the Amazon rainforest.

### Current Weather in Lima, Peru

I'll check the current temperature and the probability of rain in Lima for you.### Current Weather in Lima, Peru

- **Temperature:** Approximately 11.8°C

- **Probability of Rain:** Approximately 97.8%

### Three Tips for Traveling to Lima, Peru

1. **Explore the Historic Center:**

- Visit the Plaza Mayor, the Government Palace, and the Cathedral of Lima. These landmarks offer a glimpse into Lima's colonial past and are UNESCO World Heritage Sites.

2. **Savor the Local Cuisine:**

- Don't miss out on trying ceviche, a traditional Peruvian dish made from fresh raw fish marinated in citrus juices. Also, explore the local markets and try other Peruvian delicacies.

3. **Visit the Coastal Districts:**

- Head to Miraflores and Barranco for stunning ocean views, vibrant nightlife, and cultural experiences. These districts are known for their beautiful parks, cliffs, and bohemian atmosphere.

Enjoy your trip to Lima! If you have any more questions, feel free to ask.

Write any message (or write 'exit' to finish): exit

This example simulates a chat conversation in the command console and demonstrates the use of the latest Assistants API v2 features:

- Vector Stores to upload files and incorporate them as a new knowledge base.

- Function Tools to use internal business services to answer questions.

- File Search Tools to use vectorized files to do semantic search.

- Thread Run Streaming to answer with chunks of tokens in real time.

You can see the full demo code as well as the results from running the demo code:

Demo Results

File was created with id: file-oDFIF7o4SwuhpwBNnFIILhMK

Vector Store was created with id: vs_lG1oJmF2s5wLhqHUSeJpELMr

Assistant was created with id: asst_TYS5cZ05697tyn3yuhDrCCIv

Thread was created with id: thread_33n258gFVhZVIp88sQKuqMvg

Welcome! Write any message: Hello

=====>> Thread Run: id=run_nihN6dY0uyudsORg4xyUvQ5l, status=QUEUED

Hello! How can I assist you today?

=====>> Thread Run: id=run_nihN6dY0uyudsORg4xyUvQ5l, status=COMPLETED

Write any message (or write 'exit' to finish): Tell me something brief about Lima Peru, then tell me how's the weather there right now. Finally give me three tips to travel there.

=====>> Thread Run: id=run_QheimPyP5UK6FtmH5obon0fB, status=QUEUED

Lima, the capital city of Peru, is located on the country's arid Pacific coast. It's known for its vibrant culinary scene, rich history, and as a cultural hub with numerous museums, colonial architecture, and remnants of pre-Columbian civilizations. This bustling metropolis serves as a key gateway to visiting Peru’s more famous attractions, such as Machu Picchu and the Amazon rainforest.

Let me find the current weather conditions in Lima for you, and then I'll provide three travel tips.

=====>> Thread Run: id=run_QheimPyP5UK6FtmH5obon0fB, status=REQUIRES_ACTION

### Current Weather in Lima, Peru:

- **Temperature:** 12.8°C

- **Rain Probability:** 82.7%

### Three Travel Tips for Lima, Peru:

1. **Best Time to Visit:** Plan your trip during the dry season, from May to September, which offers clearer skies and milder temperatures. This period is particularly suitable for outdoor activities and exploring the city comfortably.

2. **Local Cuisine:** Don't miss out on tasting the local Peruvian dishes, particularly the ceviche, which is renowned worldwide. Lima is also known as the gastronomic capital of South America, so indulge in the wide variety of dishes available.

3. **Cultural Attractions:** Allocate enough time to visit Lima's rich array of museums, such as the Larco Museum, which showcases pre-Columbian art, and the historical center which is a UNESCO World Heritage Site. Moreover, exploring districts like Miraflores and Barranco can provide insights into the modern and bohemian sides of the city.

Enjoy planning your trip to Lima! If you need more information or help, feel free to ask.

=====>> Thread Run: id=run_QheimPyP5UK6FtmH5obon0fB, status=COMPLETED

Write any message (or write 'exit' to finish): Tell me something about the Mistral company

=====>> Thread Run: id=run_5u0t8kDQy87p5ouaTRXsCG8m, status=QUEUED

Mistral AI is a French company that specializes in selling artificial intelligence products. It was established in April 2023 by former employees of Meta Platforms and Google DeepMind. Notably, the company secured a significant amount of funding, raising €385 million in October 2023, and achieved a valuation exceeding $2 billion by December of the same year.

The prime focus of Mistral AI is on developing and producing open-source large language models. This approach underscores the foundational role of open-source software as a counter to proprietary models. As of March 2024, Mistral AI has published two models, which are available in terms of weights, while three more models—categorized as Small, Medium, and Large—are accessible only through an API[1].

=====>> Thread Run: id=run_5u0t8kDQy87p5ouaTRXsCG8m, status=COMPLETED

Write any message (or write 'exit' to finish): exit

File was deleted: true

Vector Store was deleted: true

Assistant was deleted: true

Thread was deleted: true

In this example, you can see the code to establish a speech-to-speech conversation between you and the model using your microphone and speaker. Here you can see the following events in action:

- ClientEvent.SessionUpdate

- ClientEvent.InputAudioBufferAppend

- ClientEvent.ResponseCreate

- ServerEvent.ResponseAudioDelta

- ServerEvent.ResponseAudioDone

- ServerEvent.ResponseAudioTranscriptDone

- ServerEvent.ConversationItemAudioTransCompleted
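The audio events above carry audio as Base64 text. Assuming the session uses the Realtime API's pcm16 format (raw signed 16-bit, little-endian, mono samples), the JDK-only sketch below shows how a chunk of samples could be packed for an InputAudioBufferAppend event and how a ResponseAudioDelta payload could be unpacked again. Pcm16Codec is a hypothetical helper, not a simple-openai class, and the sample rate is left to your session configuration.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.Arrays;
import java.util.Base64;

class Pcm16Codec {

    // Packs signed 16-bit samples as little-endian bytes and Base64-encodes them.
    static String encode(short[] samples) {
        ByteBuffer buffer = ByteBuffer.allocate(samples.length * 2).order(ByteOrder.LITTLE_ENDIAN);
        for (short sample : samples) {
            buffer.putShort(sample);
        }
        return Base64.getEncoder().encodeToString(buffer.array());
    }

    // Reverses encode(): Base64-decodes the payload and reads little-endian 16-bit samples.
    static short[] decode(String base64Audio) {
        ByteBuffer buffer = ByteBuffer.wrap(Base64.getDecoder().decode(base64Audio))
                .order(ByteOrder.LITTLE_ENDIAN);
        short[] samples = new short[buffer.remaining() / 2];
        for (int i = 0; i < samples.length; i++) {
            samples[i] = buffer.getShort();
        }
        return samples;
    }

    public static void main(String[] args) {
        short[] chunk = {0, 1, -1, Short.MAX_VALUE, Short.MIN_VALUE};
        String payload = encode(chunk); // text suitable for an audio field in a JSON event
        System.out.println(payload);
        System.out.println(Arrays.toString(decode(payload))); // same samples as chunk
    }
}
```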

You can see the full code on:

Simple-OpenAI provides an exception handling mechanism through the OpenAIExceptionConverter class. This converter maps HTTP errors to specific OpenAI exceptions, making it easier to handle different types of API errors:

- BadRequestException (400)

- AuthenticationException (401)

- PermissionDeniedException (403)

- NotFoundException (404)

- UnprocessableEntityException (422)

- RateLimitException (429)

- InternalServerException (500+)

- UnexpectedStatusCodeException (other status codes)

Here's a minimalist example of handling OpenAI exceptions:

try {

// Your code to call the OpenAI API using simple-openai goes here;

} catch (Exception e) {

try {

OpenAIExceptionConverter.rethrow(e);

} catch (AuthenticationException ae) {

// Handle this exception

} catch (NotFoundException ne) {

// Handle this exception

// Catching other exceptions

} catch (RuntimeException re) {

// Handle default exceptions

}

}

Each exception provides access to OpenAIResponseInfo, which contains detailed information about the error, including:

- HTTP status code

- Error message and type

- Request and response headers

- API endpoint URL and HTTP method

This exception handling mechanism allows you to handle API errors and provide feedback in your applications.
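Because the service methods return CompletableFuture, a failure observed through join() arrives wrapped in a CompletionException, so the underlying HTTP error has to be unwrapped before it can be classified. The JDK-only sketch below models that unwrapping together with a status-to-name lookup that mirrors the status-code list above. HttpStatusException and ExceptionMappingSketch are hypothetical; this illustrates the behavior described here and is not how OpenAIExceptionConverter is implemented.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;

class ExceptionMappingSketch {

    // Hypothetical stand-in for an HTTP error raised inside an asynchronous call.
    static class HttpStatusException extends RuntimeException {
        final int status;

        HttpStatusException(int status) {
            super("HTTP " + status);
            this.status = status;
        }
    }

    // join() reports failures wrapped in CompletionException; unwrap to reach the real cause.
    static Throwable rootCause(Throwable error) {
        Throwable current = error;
        while (current instanceof CompletionException && current.getCause() != null) {
            current = current.getCause();
        }
        return current;
    }

    // Mirrors the status-code list above (exception names only).
    static String exceptionNameFor(int status) {
        switch (status) {
            case 400: return "BadRequestException";
            case 401: return "AuthenticationException";
            case 403: return "PermissionDeniedException";
            case 404: return "NotFoundException";
            case 422: return "UnprocessableEntityException";
            case 429: return "RateLimitException";
            default: return status >= 500 ? "InternalServerException" : "UnexpectedStatusCodeException";
        }
    }

    public static void main(String[] args) {
        CompletableFuture<String> call = CompletableFuture.supplyAsync(() -> {
            throw new HttpStatusException(429);
        });
        try {
            call.join();
        } catch (CompletionException e) {
            Throwable cause = rootCause(e);
            if (cause instanceof HttpStatusException) {
                int status = ((HttpStatusException) cause).status;
                System.out.println(status + " -> " + exceptionNameFor(status)); // 429 -> RateLimitException
            }
        }
    }
}
```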

Simple-OpenAI provides automatic request retries using exponential backoff with optional jitter. You can configure retries using the RetryConfig class.

| Attribute | Description | Default Value |
|---|---|---|
| maxAttempts | Maximum number of retry attempts | 3 |
| initialDelayMs | Initial delay before retrying (in milliseconds) | 1000 |
| maxDelayMs | Maximum delay between retries (in milliseconds) | 10000 |
| backoffMultiplier | Multiplier for exponential backoff | 2.0 |
| jitterFactor | Proportion of jitter to apply to delay values (0.2 = 20%) | 0.2 |
| retryableExceptions | List of exception types that should trigger a retry | IOException, ConnectException, SocketTimeoutException |
| retryableStatusCodes | List of HTTP status codes that should trigger a retry | 408, 409, 429, 500-599 |

var retryConfig = RetryConfig.builder()

.maxAttempts(4)

.initialDelayMs(500)

.maxDelayMs(8000)

.backoffMultiplier(1.5)

.jitterFactor(0.1)

.build();

var openAI = SimpleOpenAI.builder()

.apiKey(System.getenv("OPENAI_API_KEY"))

.retryConfig(retryConfig)

.build();

With this configuration, failed requests matching the criteria are retried automatically with increasing delays based on exponential backoff. If you do not set the retryConfig attribute, the default values are used for retrying.
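The delay schedule implied by the RetryConfig attributes can be sketched as follows. This is an illustration under stated assumptions, not the library's code: it assumes the delay before retry n is initialDelayMs * backoffMultiplier^(n - 1), capped at maxDelayMs, and that jitterFactor spreads each delay uniformly within plus or minus that fraction. BackoffSketch is a hypothetical class.

```java
import java.util.Random;

class BackoffSketch {

    // Assumed base delay before retry number `retry` (1-based), capped at maxDelayMs.
    static long baseDelayMs(int retry, long initialDelayMs, double backoffMultiplier, long maxDelayMs) {
        double raw = initialDelayMs * Math.pow(backoffMultiplier, retry - 1);
        return (long) Math.min(raw, maxDelayMs);
    }

    // Assumed jitter: a uniform offset within +/- jitterFactor of the base delay.
    static long withJitter(long baseMs, double jitterFactor, Random random) {
        double offset = (random.nextDouble() * 2 - 1) * jitterFactor * baseMs;
        return Math.max(0L, Math.round(baseMs + offset));
    }

    // Default retryable status codes from the table above: 408, 409, 429 and 500-599.
    static boolean isRetryableStatus(int status) {
        return status == 408 || status == 409 || status == 429 || (status >= 500 && status <= 599);
    }

    public static void main(String[] args) {
        Random random = new Random(42);
        // Values from the RetryConfig example above: 500 ms initial delay, x1.5, 8000 ms cap, 10% jitter.
        for (int retry = 1; retry <= 4; retry++) {
            long base = baseDelayMs(retry, 500, 1.5, 8000);
            System.out.println("retry " + retry + ": base " + base + " ms, with jitter "
                    + withJitter(base, 0.1, random) + " ms");
        }
        // Base delays printed: 500, 750, 1125, 1687 ms
    }
}
```

Jitter keeps many clients that failed at the same moment (for example, on a 429) from retrying in lockstep.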

Follow these instructions to run Simple-OpenAI on Android devices:

android {

//...

defaultConfig {

//...

minSdk 24

//...

}

//...

compileOptions {

sourceCompatibility JavaVersion.VERSION_11

targetCompatibility JavaVersion.VERSION_11

}

kotlinOptions {

jvmTarget = '11'

}

}

dependencies {

//...

implementation 'io.github.sashirestela:simple-openai:[simple-openai_version]'

implementation 'com.squareup.okhttp3:okhttp:[okhttp_version]'

}

In Java:

SimpleOpenAI openAI = SimpleOpenAI.builder()

.apiKey(API_KEY)

.clientAdapter(new OkHttpClientAdapter()) // Optionally you could add a custom OkHttpClient

.build();

In Kotlin:

val openAI = SimpleOpenAI.builder()

.apiKey(API_KEY)

.clientAdapter(OkHttpClientAdapter()) // Optionally you could add a custom OkHttpClient

.build()

Simple-OpenAI can be used with additional providers that are compatible with the OpenAI API. At this moment, there is support for the following additional providers:

Gemini Vertex API is supported by Simple-OpenAI. We can use the class SimpleOpenAIGeminiVertex to start using this provider.

Note that SimpleOpenAIGeminiVertex depends on the following library, which you must add to your project:

<dependency>

<groupId>com.google.auth</groupId>

<artifactId>google-auth-library-oauth2-http</artifactId>

<version>1.15.0</version>

</dependency>

var openai = SimpleOpenAIGeminiVertex.builder()

.baseUrl(System.getenv("GEMINI_VERTEX_BASE_URL"))

.apiKeyProvider(<a function that returns a valid API key that refreshes every hour>)

//.clientAdapter(...) Optionally you could pass a custom clientAdapter

.build();

Currently we are supporting the following service:

- chatCompletionService (text generation, streaming, function calling, vision, structured outputs)
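The apiKeyProvider placeholder above calls for a function that returns a valid key and refreshes it before it expires. A minimal JDK-only sketch of such a function follows, assuming the provider can be expressed as a Supplier<String> (check the builder's actual parameter type). The token fetcher is a hypothetical placeholder for your real credential call (for example, one made through google-auth-library-oauth2-http), and the injectable time source exists only to make the refresh logic testable.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.function.Supplier;

// Caches a token and fetches a new one once the cached token is older than refreshEvery.
class RefreshingTokenSupplier implements Supplier<String> {

    private final Supplier<String> fetchToken; // hypothetical: your real credential call
    private final Duration refreshEvery;
    private final Supplier<Instant> now; // time source, injectable for tests
    private String cachedToken;
    private Instant fetchedAt;

    RefreshingTokenSupplier(Supplier<String> fetchToken, Duration refreshEvery, Supplier<Instant> now) {
        this.fetchToken = fetchToken;
        this.refreshEvery = refreshEvery;
        this.now = now;
    }

    // synchronized so that concurrent requests do not trigger duplicate refreshes
    @Override
    public synchronized String get() {
        Instant current = now.get();
        if (cachedToken == null || !current.isBefore(fetchedAt.plus(refreshEvery))) {
            cachedToken = fetchToken.get();
            fetchedAt = current;
        }
        return cachedToken;
    }

    public static void main(String[] args) {
        // Refresh a little earlier than the one-hour lifetime mentioned above.
        Supplier<String> apiKeyProvider = new RefreshingTokenSupplier(
                () -> "token-" + Instant.now().getEpochSecond(), // hypothetical fetcher
                Duration.ofMinutes(50),
                Instant::now);
        System.out.println(apiKeyProvider.get());
        System.out.println(apiKeyProvider.get()); // same cached token within 50 minutes
    }
}
```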

Gemini Google API is supported by Simple-OpenAI. We can use the class SimpleOpenAIGeminiGoogle to start using this provider.

var openai = SimpleOpenAIGeminiGoogle.builder()

.apiKey(System.getenv("GEMINIGOOGLE_API_KEY"))

//.baseUrl(customUrl) Optionally you could pass a custom baseUrl

//.clientAdapter(...) Optionally you could pass a custom clientAdapter

.build();

Currently we are supporting the following services:

- chatCompletionService (text generation, streaming, function calling, vision, structured outputs)

- embeddingService (float format)

Deepseek API is supported by Simple-OpenAI. We can use the class SimpleOpenAIDeepseek to start using this provider.

var openai = SimpleOpenAIDeepseek.builder()

.apiKey(System.getenv("DEEPSEEK_API_KEY"))

//.baseUrl(customUrl) Optionally you could pass a custom baseUrl

//.clientAdapter(...) Optionally you could pass a custom clientAdapter

.build();

Currently we are supporting the following services:

- chatCompletionService (text generation, streaming, thinking, function calling)

- modelService (list)

Mistral API is supported by Simple-OpenAI. We can use the class SimpleOpenAIMistral to start using this provider.

var openai = SimpleOpenAIMistral.builder()

.apiKey(System.getenv("MISTRAL_API_KEY"))

//.baseUrl(customUrl) Optionally you could pass a custom baseUrl

//.clientAdapter(...) Optionally you could pass a custom clientAdapter

.build();

Currently we are supporting the following services:

- chatCompletionService (text generation, streaming, function calling, vision)

- embeddingService (float format)

- modelService (list, detail, delete)

Azure OpenAI is supported by Simple-OpenAI. We can use the class SimpleOpenAIAzure to start using this provider.

var openai = SimpleOpenAIAzure.builder()

.apiKey(System.getenv("AZURE_OPENAI_API_KEY"))

.baseUrl(System.getenv("AZURE_OPENAI_BASE_URL")) // Including resourceName and deploymentId

.apiVersion(System.getenv("AZURE_OPENAI_API_VERSION"))

//.clientAdapter(...) Optionally you could pass a custom clientAdapter

.build();

Azure OpenAI is powered by a diverse set of models with different capabilities, and it requires a separate deployment for each model. Model availability varies by region and cloud. See more details about Azure OpenAI Models.

Currently we are supporting the following services only:

- chatCompletionService (text generation, streaming, function calling, vision, structured outputs)

- fileService (upload files)

- assistantService beta v2 (assistants, threads, messages, runs, steps, vector stores, streaming, function calling, vision, structured outputs)
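To make the baseUrl comment above concrete, the sketch below assembles a base URL from a resource name and a deployment name, following the AZURE_OPENAI_BASE_URL template in the Azure demo instructions later in this README, and derives a chat completions endpoint from it. The /chat/completions path and the api-version query parameter are an assumption about Azure OpenAI's REST layout, not simple-openai code; AzureUrlSketch and the sample names are hypothetical.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

class AzureUrlSketch {

    // Same layout as the AZURE_OPENAI_BASE_URL example: resource and deployment names are embedded.
    static String baseUrl(String resourceName, String deploymentName) {
        return "https://" + resourceName + ".openai.azure.com/openai/deployments/" + deploymentName;
    }

    // Assumption: chat requests go to <baseUrl>/chat/completions?api-version=<version>.
    static URI chatCompletionsUri(String baseUrl, String apiVersion) {
        String trimmed = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return URI.create(trimmed + "/chat/completions?api-version="
                + URLEncoder.encode(apiVersion, StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        String base = baseUrl("my-resource", "my-gpt-4o-mini"); // hypothetical names
        System.out.println(base);
        System.out.println(chatCompletionsUri(base, "2025-01-01-preview"));
    }
}
```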

Anyscale is supported by Simple-OpenAI. We can use the class SimpleOpenAIAnyscale to start using this provider.

var openai = SimpleOpenAIAnyscale.builder()

.apiKey(System.getenv("ANYSCALE_API_KEY"))

//.baseUrl(customUrl) Optionally you could pass a custom baseUrl

//.clientAdapter(...) Optionally you could pass a custom clientAdapter

.build();

Currently we are supporting the chatCompletionService service only. It was tested with the Mistral model.

Examples for each OpenAI service have been created in the demo folder, and you can follow these steps to run them:

- Clone this repository:

git clone https://github.com/sashirestela/simple-openai.git
cd simple-openai

- Build the project:

mvn clean install

- Create an environment variable for your API key (the variable name depends on the provider whose demo you want to run):

export OPENAI_API_KEY=<here goes your api key>

- Grant execution permission to the script file:

chmod +x rundemo.sh

- Run examples:

./rundemo.sh <demo>

Where:

- <demo> is mandatory and must be the name of one of the Java files in the demo folder without the Demo suffix, for example: Audio, Chat, ChatMistral, Realtime, AssistantV2, Conversation, ConversationV2, etc.

For example, to run the chat demo with a log file:

./rundemo.sh Chat
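The naming rule for `<demo>` can be expressed as a small mapping between demo names and file names. This is a hypothetical helper (`DemoNames`) that mirrors the rule as described; it does not reproduce what `rundemo.sh` does internally, and the folder passed to `availableDemos` is assumed to be whichever directory in your checkout holds the `*Demo.java` files.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.Objects;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper mirroring the naming rule: a demo file ChatDemo.java
// is run as "Chat". It does not reproduce rundemo.sh itself.
final class DemoNames {

    private static final String SUFFIX = "Demo.java";

    private DemoNames() {
    }

    // "Chat" -> "ChatDemo.java"
    static String fileNameFor(String demo) {
        return demo + SUFFIX;
    }

    // "ChatDemo.java" -> "Chat"; null when the file does not follow the pattern.
    static String demoNameOf(String fileName) {
        if (!fileName.endsWith(SUFFIX) || fileName.length() == SUFFIX.length()) {
            return null;
        }
        return fileName.substring(0, fileName.length() - SUFFIX.length());
    }

    // Sorted list of valid <demo> arguments for the files directly inside demoFolder.
    static List<String> availableDemos(Path demoFolder) {
        try (Stream<Path> files = Files.list(demoFolder)) {
            return files.map(p -> demoNameOf(p.getFileName().toString()))
                    .filter(Objects::nonNull)
                    .sorted()
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(fileNameFor("Chat"));
        System.out.println(demoNameOf("AssistantV2Demo.java"));
        // Pass the folder that holds the *Demo.java files as the first argument.
        Path folder = Path.of(args.length > 0 ? args[0] : ".");
        System.out.println(availableDemos(folder));
    }
}
```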


Instructions for Azure OpenAI demo

The recommended models to run this demo are:

- gpt-4o

- gpt-4o-mini

See the Azure OpenAI documentation for more details. Once you have the deployment URL and the API key, set the following environment variables:

export AZURE_OPENAI_BASE_URL=<https://YOUR_RESOURCE_NAME.openai.azure.com/openai/deployments/YOUR_DEPLOYMENT_NAME>
export AZURE_OPENAI_API_KEY=<here goes your regional API key>
export AZURE_OPENAI_API_VERSION=<for example: 2025-01-01-preview>

Note that some models may not be available in all regions. If you have trouble finding a model, try a different region. The API keys are regional (per cognitive account). If you provision multiple models in the same region, they will share the same API key (there are actually two keys per region to support key rotation).
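Since the Azure base URL must embed both the resource name and the deployment name, a quick sanity check before running the demo can save a failed round trip. The sketch below parses a value of the documented shape with `java.net.URI` and a regular expression; `AzureBaseUrl` and `parse` are hypothetical names. It only accepts the `*.openai.azure.com` host pattern shown above, so other valid Azure endpoint hosts would be rejected, and it expects the value without the surrounding `<` and `>` placeholder brackets.

```java
import java.net.URI;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical checker for AZURE_OPENAI_BASE_URL values shaped like
// https://YOUR_RESOURCE_NAME.openai.azure.com/openai/deployments/YOUR_DEPLOYMENT_NAME
final class AzureBaseUrl {

    private static final String HOST_SUFFIX = ".openai.azure.com";
    // Path must be /openai/deployments/{deployment}, optionally with a trailing slash.
    private static final Pattern PATH = Pattern.compile("/openai/deployments/([^/]+)/?");

    final String resourceName;
    final String deploymentName;

    private AzureBaseUrl(String resourceName, String deploymentName) {
        this.resourceName = resourceName;
        this.deploymentName = deploymentName;
    }

    // Returns empty when the value is missing or does not match the expected shape.
    static Optional<AzureBaseUrl> parse(String value) {
        if (value == null) {
            return Optional.empty();
        }
        URI uri;
        try {
            uri = URI.create(value.trim());
        } catch (IllegalArgumentException e) {
            return Optional.empty(); // e.g. placeholder brackets left in the value
        }
        String host = uri.getHost();
        String path = uri.getPath();
        if (!"https".equals(uri.getScheme()) || host == null || path == null
                || !host.endsWith(HOST_SUFFIX) || host.length() == HOST_SUFFIX.length()) {
            return Optional.empty();
        }
        Matcher m = PATH.matcher(path);
        if (!m.matches()) {
            return Optional.empty();
        }
        String resource = host.substring(0, host.length() - HOST_SUFFIX.length());
        return Optional.of(new AzureBaseUrl(resource, m.group(1)));
    }

    public static void main(String[] args) {
        String value = System.getenv("AZURE_OPENAI_BASE_URL");
        System.out.println(parse(value)
                .map(u -> "resource=" + u.resourceName + ", deployment=" + u.deploymentName)
                .orElse("AZURE_OPENAI_BASE_URL is missing or malformed"));
    }
}
```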


Instructions for Gemini Vertex Demo

You need a GCP project with the Vertex AI API enabled and a GCP service account with the necessary permissions to access the API.

For details, see the Vertex AI inference reference: https://cloud.google.com/vertex-ai/generative-ai/docs/model-reference/inference

Note the target region for the endpoint, the GCP project ID, and the service account credentials JSON file. Before you run the demo, define the following environment variables:

export GEMINI_VERTEX_BASE_URL=https://<location>-aiplatform.googleapis.com/v1beta1/projects/<gcp project>/locations/<location>/endpoints/openapi
export GEMINI_VERTEX_SA_CREDS_PATH=<path to GCP service account credentials JSON file>
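The location appears twice in GEMINI_VERTEX_BASE_URL, once in the host name and once in the path, which makes it easy to get the two out of sync. A minimal sketch that derives both from a single value, following the pattern above; `VertexUrls` and `baseUrl` are hypothetical names, and `us-central1` and `my-gcp-project` are placeholder values.

```java
// Hypothetical helper that builds GEMINI_VERTEX_BASE_URL from one location value,
// following the pattern in the export line above.
final class VertexUrls {

    private VertexUrls() {
    }

    static String baseUrl(String location, String projectId) {
        requireNonBlank(location, "location");
        requireNonBlank(projectId, "projectId");
        // The location is used twice: as the host prefix and as a path segment.
        return "https://" + location + "-aiplatform.googleapis.com/v1beta1/projects/"
                + projectId + "/locations/" + location + "/endpoints/openapi";
    }

    private static void requireNonBlank(String value, String name) {
        if (value == null || value.isBlank()) {
            throw new IllegalArgumentException(name + " must not be blank");
        }
    }

    public static void main(String[] args) {
        // Placeholder values; use your own region and GCP project ID.
        System.out.println(baseUrl("us-central1", "my-gcp-project"));
    }
}
```

Running it prints `https://us-central1-aiplatform.googleapis.com/v1beta1/projects/my-gcp-project/locations/us-central1/endpoints/openapi`, which can then be exported as GEMINI_VERTEX_BASE_URL.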

Kindly read our Contributing guide to learn and understand how to contribute to this project.

Simple-OpenAI is licensed under the MIT License. See the LICENSE file for more information.

List of the main users of our library:

- ChatMotor: A framework for OpenAI services. Thanks for credits!

- OpenOLAT: A learning management system.

- Willy: A multi-channel assistant.

- SuperTurtyBot: A multi-purpose discord bot.

- Woolly: A code generation IntelliJ plugin.

- Vinopener: A wine recommender app.

- ScalerX.ai: A Telegram chatbot factory.

- Katie Backend: A question-answering platform.

Thanks for using simple-openai. If you find this project valuable there are a few ways you can show us your love, preferably all of them 🙂:

- Letting your friends know about this project 🗣📢.

- Writing a brief review on your social networks ✍🌐.

- Giving us a star on GitHub ⭐.


Alternative AI tools for simple-openai

Similar Open Source Tools


Jlama

Jlama is a modern Java inference engine designed for large language models. It supports various model types such as Gemma, Llama, Mistral, GPT-2, BERT, and more. The tool implements features like Flash Attention, Mixture of Experts, and supports different model quantization formats. Built with Java 21 and utilizing the new Vector API for faster inference, Jlama allows users to add LLM inference directly to their Java applications. The tool includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

continuous-eval

Open-Source Evaluation for LLM Applications. `continuous-eval` is an open-source package created for granular and holistic evaluation of GenAI application pipelines. It offers modularized evaluation, a comprehensive metric library covering various LLM use cases, the ability to leverage user feedback in evaluation, and synthetic dataset generation for testing pipelines. Users can define their own metrics by extending the Metric class. The tool allows running evaluation on a pipeline defined with modules and corresponding metrics. Additionally, it provides synthetic data generation capabilities to create user interaction data for evaluation or training purposes.

jsgrad

jsgrad is a modern ML library for JavaScript and TypeScript that aims to provide a fast and efficient way to run and train machine learning models. It is a rewrite of tinygrad in TypeScript, offering a clean and modern API with zero dependencies. The library supports multiple runtime backends such as WebGPU, WASM, and CLANG, making it versatile for various applications in browser and server environments. With a focus on simplicity and performance, jsgrad is designed to be easy to use for both model inference and training tasks.

SemanticKernel.Assistants

This repository contains an assistant proposal for the Semantic Kernel, allowing the usage of assistants without relying on OpenAI Assistant APIs. It runs locally planners and plugins for the assistants, providing scenarios like Assistant with Semantic Kernel plugins, Multi-Assistant conversation, and AutoGen conversation. The Semantic Kernel is a lightweight SDK enabling integration of AI Large Language Models with conventional programming languages, offering functions like semantic functions, native functions, and embeddings-based memory. Users can bring their own model for the assistants and host them locally. The repository includes installation instructions, usage examples, and information on creating new conversation threads with the assistant.

zeta

Zeta is a tool designed to build state-of-the-art AI models faster by providing modular, high-performance, and scalable building blocks. It addresses the common issues faced while working with neural nets, such as chaotic codebases, lack of modularity, and low performance modules. Zeta emphasizes usability, modularity, and performance, and is currently used in hundreds of models across various GitHub repositories. It enables users to prototype, train, optimize, and deploy the latest SOTA neural nets into production. The tool offers various modules like FlashAttention, SwiGLUStacked, RelativePositionBias, FeedForward, BitLinear, PalmE, Unet, VisionEmbeddings, niva, FusedDenseGELUDense, FusedDropoutLayerNorm, MambaBlock, Film, hyper_optimize, DPO, and ZetaCloud for different tasks in AI model development.

mcpdotnet

mcpdotnet is a .NET implementation of the Model Context Protocol (MCP), facilitating connections and interactions between .NET applications and MCP clients and servers. It aims to provide a clean, specification-compliant implementation with support for various MCP capabilities and transport types. The library includes features such as async/await pattern, logging support, and compatibility with .NET 8.0 and later. Users can create clients to use tools from configured servers and also create servers to register tools and interact with clients. The project roadmap includes expanding documentation, increasing test coverage, adding samples, performance optimization, SSE server support, and authentication.

ivy

Ivy is an open-source machine learning framework that enables users to convert code between different ML frameworks and write framework-agnostic code. It allows users to transpile code from one framework to another, making it easy to use building blocks from different frameworks in a single project. Ivy also serves as a flexible framework that breaks free from framework limitations, allowing users to publish code that is interoperable with various frameworks and future frameworks. Users can define trainable modules and layers using Ivy's stateful API, making it easy to build and train models across different backends.

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

ivy

Ivy is an open-source machine learning framework that enables you to:

* 🔄 **Convert code into any framework**: Use and build on top of any model, library, or device by converting any code from one framework to another using `ivy.transpile`.
* ⚒️ **Write framework-agnostic code**: Write your code once in `ivy` and then choose the most appropriate ML framework as the backend to leverage all the benefits and tools.

Join our growing community 🌍 to connect with people using Ivy. **Let's unify.ai together 🦾**

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

wandb

Weights & Biases (W&B) is a platform that helps users build better machine learning models faster by tracking and visualizing all components of the machine learning pipeline, from datasets to production models. It offers tools for tracking, debugging, evaluating, and monitoring machine learning applications. W&B provides integrations with popular frameworks like PyTorch, TensorFlow/Keras, Hugging Face Transformers, PyTorch Lightning, XGBoost, and Sci-Kit Learn. Users can easily log metrics, visualize performance, and compare experiments using W&B. The platform also supports hosting options in the cloud or on private infrastructure, making it versatile for various deployment needs.

ShannonBase

ShannonBase is an HTAP database provided by Shannon Data AI, designed for big data and AI. It extends MySQL with native embedding support, machine learning capabilities, a JavaScript engine, and a columnar storage engine. ShannonBase supports multimodal data types and natively integrates LightGBM for training and prediction. It leverages embedding algorithms and vector data type for ML/RAG tasks, providing Zero Data Movement, Native Performance Optimization, and Seamless SQL Integration. The tool includes a lightweight JavaScript engine for writing stored procedures in SQL or JavaScript.

catalyst

Catalyst is a C# Natural Language Processing library designed for speed, inspired by spaCy's design. It provides pre-trained models, support for training word and document embeddings, and flexible entity recognition models. The library is fast, modern, and pure-C#, supporting .NET standard 2.0. It is cross-platform, running on Windows, Linux, macOS, and ARM. Catalyst offers non-destructive tokenization, named entity recognition, part-of-speech tagging, language detection, and efficient binary serialization. It includes pre-built models for language packages and lemmatization. Users can store and load models using streams. Getting started with Catalyst involves installing its NuGet Package and setting the storage to use the online repository. The library supports lazy loading of models from disk or online. Users can take advantage of C# lazy evaluation and native multi-threading support to process documents in parallel. Training a new FastText word2vec embedding model is straightforward, and Catalyst also provides algorithms for fast embedding search and dimensionality reduction.

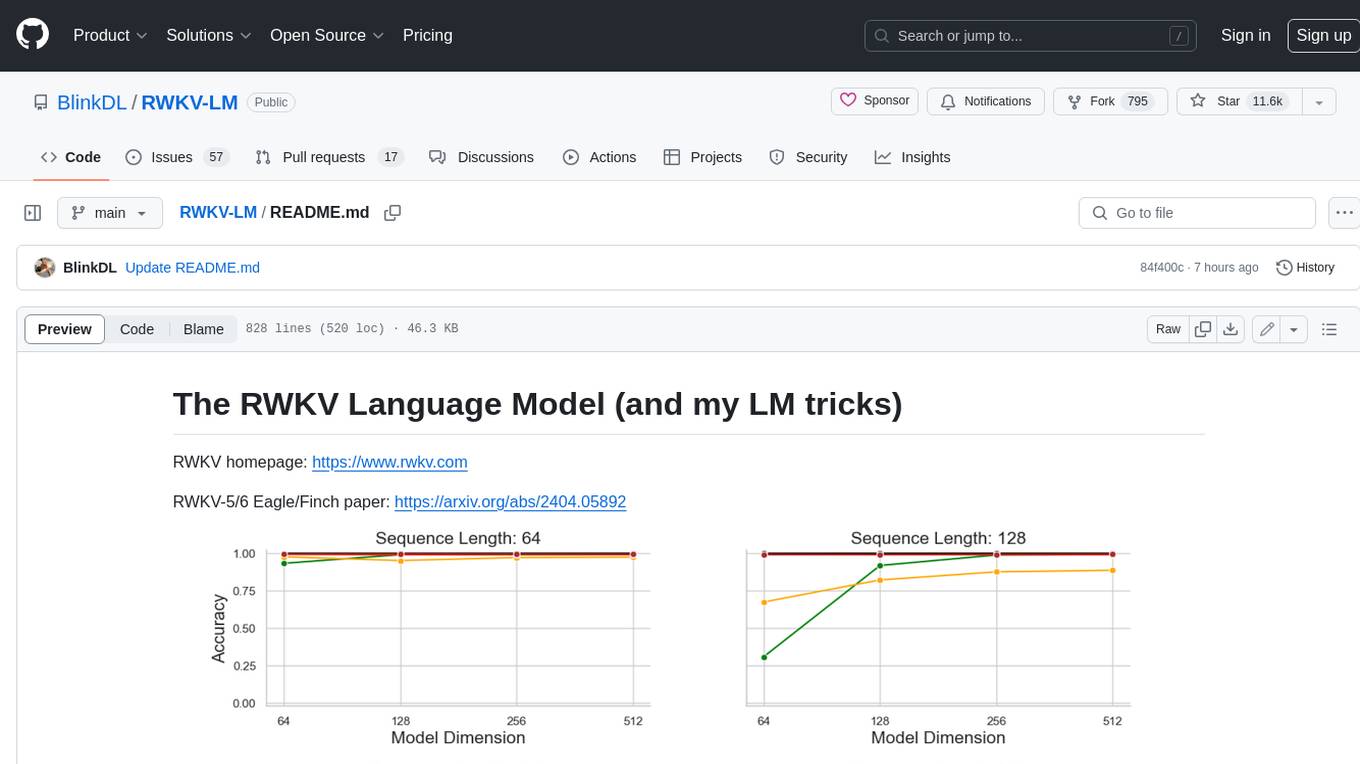

RWKV-LM

RWKV is an RNN with Transformer-level LLM performance, which can also be directly trained like a GPT transformer (parallelizable). And it's 100% attention-free. You only need the hidden state at position t to compute the state at position t+1. You can use the "GPT" mode to quickly compute the hidden state for the "RNN" mode. So it's combining the best of RNN and transformer - **great performance, fast inference, saves VRAM, fast training, "infinite" ctx_len, and free sentence embedding** (using the final hidden state).

bellman

Bellman is a unified interface to interact with language and embedding models, supporting various vendors like VertexAI/Gemini, OpenAI, Anthropic, VoyageAI, and Ollama. It consists of a library for direct interaction with models and a service 'bellmand' for proxying requests with one API key. Bellman simplifies switching between models, vendors, and common tasks like chat, structured data, tools, and binary input. It addresses the lack of official SDKs for major players and differences in APIs, providing a single proxy for handling different models. The library offers clients for different vendors implementing common interfaces for generating and embedding text, enabling easy interchangeability between models.

For similar tasks

fastllm

A collection of LLM services you can self host via docker or modal labs to support your applications development. The goal is to provide docker containers or modal labs deployments of common patterns when using LLMs and endpoints to integrate easily with existing codebases using the openai api. It supports GPT4all's embedding api, JSONFormer api for chat completion, Cross Encoders based on sentence transformers, and provides documentation using MkDocs.

llm-apps-java-spring-ai

The 'LLM Applications with Java and Spring AI' repository provides samples demonstrating how to build Java applications powered by Generative AI and Large Language Models (LLMs) using Spring AI. It includes projects for question answering, chat completion models, prompts, templates, multimodality, output converters, embedding models, document ETL pipeline, function calling, image models, and audio models. The repository also lists prerequisites such as Java 21, Docker/Podman, Mistral AI API Key, OpenAI API Key, and Ollama. Users can explore various use cases and projects to leverage LLMs for text generation, vector transformation, document processing, and more.


gateway

Adaline Gateway is a fully local, production-grade Super SDK that offers a unified interface for calling 200+ LLMs. It is production-ready and supports batching, retries, caching, callbacks, and OpenTelemetry. Users can create custom plugins and providers for seamless integration with their infrastructure.

generative-ai

This repository contains notebooks, code samples, sample apps, and other resources that demonstrate how to use, develop and manage generative AI workflows using Generative AI on Google Cloud, powered by Vertex AI. For more Vertex AI samples, please visit the Vertex AI samples GitHub repository.

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

generative-ai-for-beginners

This course has 18 lessons. Each lesson covers its own topic, so start wherever you like! Lessons are labeled either "Learn" lessons explaining a Generative AI concept or "Build" lessons that explain a concept and code examples in both **Python** and **TypeScript** when possible. Each lesson also includes a "Keep Learning" section with additional learning tools.

**What You Need**

* Access to the Azure OpenAI Service **OR** OpenAI API - _Only required to complete coding lessons_
* Basic knowledge of Python or TypeScript is helpful - _For absolute beginners check out these Python and TypeScript courses._
* A GitHub account to fork this entire repo to your own GitHub account

We have created a **Course Setup** lesson to help you with setting up your development environment. Don't forget to star (🌟) this repo to find it easier later.

## 🧠 Ready to Deploy?

If you are looking for more advanced code samples, check out our collection of Generative AI Code Samples in both **Python** and **TypeScript**.

## 🗣️ Meet Other Learners, Get Support

Join our official AI Discord server to meet and network with other learners taking this course and get support.

## 🚀 Building a Startup?

Sign up for Microsoft for Startups Founders Hub to receive **free OpenAI credits** and up to **$150k towards Azure credits to access OpenAI models through Azure OpenAI Services**.

## 🙏 Want to help?

Do you have suggestions or found spelling or code errors? Raise an issue or create a pull request.

## 📂 Each lesson includes:

* A short video introduction to the topic
* A written lesson located in the README
* Python and TypeScript code samples supporting Azure OpenAI and OpenAI API
* Links to extra resources to continue your learning

## 🗃️ Lessons

| | Lesson Link | Description | Additional Learning |
| :-: | :-: | :-: | --- |
| 00 | Course Setup | **Learn:** How to Set Up Your Development Environment | Learn More |
| 01 | Introduction to Generative AI and LLMs | **Learn:** Understanding what Generative AI is and how Large Language Models (LLMs) work. | Learn More |
| 02 | Exploring and comparing different LLMs | **Learn:** How to select the right model for your use case | Learn More |
| 03 | Using Generative AI Responsibly | **Learn:** How to build Generative AI Applications responsibly | Learn More |
| 04 | Understanding Prompt Engineering Fundamentals | **Learn:** Hands-on Prompt Engineering Best Practices | Learn More |
| 05 | Creating Advanced Prompts | **Learn:** How to apply prompt engineering techniques that improve the outcome of your prompts. | Learn More |
| 06 | Building Text Generation Applications | **Build:** A text generation app using Azure OpenAI | Learn More |
| 07 | Building Chat Applications | **Build:** Techniques for efficiently building and integrating chat applications. | Learn More |
| 08 | Building Search Apps Vector Databases | **Build:** A search application that uses Embeddings to search for data. | Learn More |
| 09 | Building Image Generation Applications | **Build:** An image generation application | Learn More |
| 10 | Building Low Code AI Applications | **Build:** A Generative AI application using Low Code tools | Learn More |
| 11 | Integrating External Applications with Function Calling | **Build:** What is function calling and its use cases for applications | Learn More |
| 12 | Designing UX for AI Applications | **Learn:** How to apply UX design principles when developing Generative AI Applications | Learn More |
| 13 | Securing Your Generative AI Applications | **Learn:** The threats and risks to AI systems and methods to secure these systems. | Learn More |
| 14 | The Generative AI Application Lifecycle | **Learn:** The tools and metrics to manage the LLM Lifecycle and LLMOps | Learn More |
| 15 | Retrieval Augmented Generation (RAG) and Vector Databases | **Build:** An application using a RAG Framework to retrieve embeddings from a Vector Database | Learn More |
| 16 | Open Source Models and Hugging Face | **Build:** An application using open source models available on Hugging Face | Learn More |
| 17 | AI Agents | **Build:** An application using an AI Agent Framework | Learn More |
| 18 | Fine-Tuning LLMs | **Learn:** The what, why and how of fine-tuning LLMs | Learn More |

cog-comfyui

Cog-comfyui allows users to run ComfyUI workflows on Replicate. ComfyUI is a visual programming tool for creating and sharing generative art workflows. With cog-comfyui, users can access a variety of pre-trained models and custom nodes to create their own unique artworks. The tool is easy to use and does not require any coding experience. Users simply need to upload their API JSON file and any necessary input files, and then click the "Run" button. Cog-comfyui will then generate the output image or video file.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PIIs
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.