reolink_aio

Reolink NVR/camera API PyPI package

Stars: 125

The 'reolink_aio' Python package is designed to integrate Reolink devices (NVR/cameras) into your application. It implements Reolink IP NVR and camera API, allowing users to subscribe to Reolink ONVIF SWN events for real-time event notifications via webhook. The package provides functionalities to obtain and cache NVR or camera settings, capabilities, and states, as well as enable features like infrared lights, spotlight, and siren. Users can also subscribe to events, renew timers, and disconnect from the host device.

README:

The reolink_aio Python package allows you to integrate your Reolink devices (NVR/cameras) in your application.

This is a package implementing Reolink IP NVR and camera API. Also it’s providing a way to subscribe to Reolink ONVIF SWN events, so that real-time events can be received on a webhook.

If you appreciate the reolink integration and want to support its development, please consider sponsering the upstream library or purchase Reolink products through this affiliate link.

- Python 3.11

pip3 install reolink-aio

or manually:

git clone https://github.com/StarkillerOG/reolink_aio

cd reolink_aio/

pip3 install .from reolink_aio.api import Host

import asyncio

# Create a host-object (representing either a camera, or NVR with several channels)

host = Host('192.168.1.10', 'user', 'mypassword')

# Obtain/cache NVR or camera settings and capabilities, like model name, ports, HDD size, etc:

await host.get_host_data()

# Get the subscribtion port and host-device name:

subscribtion_port = host.onvif_port

name = host.nvr_name

# Obtain/cache states of features:

await host.get_states()

# Print some state value on the channel with index 0:

print(host.ir_enabled(0))

# Enable the infrared lights on the channel with index 1:

await host.set_ir_lights(1, True)

# Enable the spotlight on the channel with index 1:

await host.set_spotlight(1, True)

# Enable the siren on the channel with index 0:

await host.set_siren(0, True)

# Now subscribe to events, suppose our webhook url is http://192.168.1.11/webhook123

await host.subscribe('http://192.168.1.11/webhook123')

# After some minutes check the renew timer (keep the eventing alive):

if (host.renewtimer() <= 100):

await host.renew()

# Logout and disconnect

await host.logout()This is an example of the usage of the API. In this case we want to retrive and print the Mac Address of the NVR.

from reolink_aio.api import Host

import asyncio

async def print_mac_address():

# initialize the host

host = Host('192.168.1.109','admin', 'admin1234', port=80)

# connect and obtain/cache device settings and capabilities

await host.get_host_data()

# check if it is a camera or an NVR

print(f"It is an NVR: {host.is_nvr}, number of channels: {host.num_channel}")

# print mac address

print(f"MAC: {host.mac_address}")

# close the device connection

await host.logout()

if __name__ == "__main__":

asyncio.run(print_mac_address())This is an example of how to receive TCP push events. The callback will be called each time a push is received. The state variables of the Host class will automatically be updated when a push comes in.

from reolink_aio.api import Host

import asyncio

import logging

logging.basicConfig(level="INFO")

_LOGGER = logging.getLogger(__name__)

def callback() -> None:

_LOGGER.info("Callback called")

async def tcp_push_demo():

# initialize the host

host = Host(host="192.168.1.109", username="admin", password="admin1234")

# connect and obtain/cache device settings and capabilities

await host.get_host_data()

# Register callback and subscribe to events

host.baichuan.register_callback("unique_id_string", callback)

await host.baichuan.subscribe_events()

# Process TCP events for 2 minutes

await asyncio.sleep(120)

# unsubscribe and close the device connection

await host.baichuan.unsubscribe_events()

await host.logout()

if __name__ == "__main__":

asyncio.run(tcp_push_demo())This library is officially authorized by Reolink, with @starkillerOG as the main developer, and it is built with the support of Reolink's official resources.

This library is based on the work of:

- @fwestenberg: https://github.com/fwestenberg/reolink_dev

The baichuan part of this library is based on the work of:

- @QuantumEntangledAndy and @thirtythreeforty: https://github.com/QuantumEntangledAndy/neolink

Author

@starkillerOG: https://github.com/starkillerOG

Contributors

- @xannor: https://github.com/xannor

- @mnpg: https://github.com/mnpg

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for reolink_aio

Similar Open Source Tools

reolink_aio

The 'reolink_aio' Python package is designed to integrate Reolink devices (NVR/cameras) into your application. It implements Reolink IP NVR and camera API, allowing users to subscribe to Reolink ONVIF SWN events for real-time event notifications via webhook. The package provides functionalities to obtain and cache NVR or camera settings, capabilities, and states, as well as enable features like infrared lights, spotlight, and siren. Users can also subscribe to events, renew timers, and disconnect from the host device.

python-sdks

Python SDK for LiveKit enables developers to easily integrate real-time video, audio, and data features into their Python applications. By connecting to a LiveKit server, users can quickly build interactive live streaming or video call applications with minimal code. The SDK includes packages for real-time participant connection and access token generation, making it simple to create rooms and manage participants. With asyncio and aiohttp support, developers can seamlessly interact with the LiveKit server API and handle real-time communication tasks effortlessly.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

lollms_legacy

Lord of Large Language Models (LoLLMs) Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications. The tool supports multiple personalities for generating text with different styles and tones, real-time text generation with WebSocket-based communication, RESTful API for listing personalities and adding new personalities, easy integration with various applications and frameworks, sending files to personalities, running on multiple nodes to provide a generation service to many outputs at once, and keeping data local even in the remote version.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

simple-openai

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

fastrtc

FastRTC is a real-time communication library for Python that allows users to turn any Python function into a real-time audio and video stream over WebRTC or WebSockets. It provides features like automatic voice detection, UI launching, WebRTC support, WebSocket support, telephone support, and customizable backend for production applications. The library offers various examples and usage scenarios for audio and video streaming, object detection, voice APIs, chat applications, and more.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting, and observing with agents. Users can access Letta via REST API, Python, Typescript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

gateway

Adaline Gateway is a fully local production-grade Super SDK that offers a unified interface for calling over 200+ LLMs. It is production-ready, supports batching, retries, caching, callbacks, and OpenTelemetry. Users can create custom plugins and providers for seamless integration with their infrastructure.

a2a-java

A2A Java SDK is a Java library that helps run agentic applications as A2AServers following the Agent2Agent (A2A) Protocol. It provides a Java server implementation of the A2A Protocol, allowing users to create A2A server agents and execute tasks. The SDK also includes a Java client implementation for communication with A2A servers using various transports like JSON-RPC 2.0, gRPC, and HTTP+JSON/REST. Users can configure different transport protocols, handle messages, tasks, push notifications, and interact with server agents. The SDK supports streaming and non-streaming responses, error handling, and task management functionalities.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

hydraai

Generate React components on-the-fly at runtime using AI. Register your components, and let Hydra choose when to show them in your App. Hydra development is still early, and patterns for different types of components and apps are still being developed. Join the discord to chat with the developers. Expects to be used in a NextJS project. Components that have function props do not work.

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

aiodynamo

AsyncIO DynamoDB is an asynchronous pythonic client for DynamoDB, designed for asynchronous apps. It is two times faster than aiobotocore, botocore, or boto3 for operations like query or scan. The library provides a pythonic API with modern Python features, automatically depaginates paginated APIs using asynchronous iterators. The source code is legible and hand-written, allowing for easy inspection and understanding. It offers a pluggable HTTP client, enabling integration with existing asynchronous HTTP clients without additional dependencies or dependency resolution issues.

For similar tasks

reolink_aio

The 'reolink_aio' Python package is designed to integrate Reolink devices (NVR/cameras) into your application. It implements Reolink IP NVR and camera API, allowing users to subscribe to Reolink ONVIF SWN events for real-time event notifications via webhook. The package provides functionalities to obtain and cache NVR or camera settings, capabilities, and states, as well as enable features like infrared lights, spotlight, and siren. Users can also subscribe to events, renew timers, and disconnect from the host device.

ChatGPT-OpenAI-Smart-Speaker

ChatGPT Smart Speaker is a project that enables speech recognition and text-to-speech functionalities using OpenAI and Google Speech Recognition. It provides scripts for running on PC/Mac and Raspberry Pi, allowing users to interact with a smart speaker setup. The project includes detailed instructions for setting up the required hardware and software dependencies, along with customization options for the OpenAI model engine, language settings, and response randomness control. The Raspberry Pi setup involves utilizing the ReSpeaker hardware for voice feedback and light shows. The project aims to offer an advanced smart speaker experience with features like wake word detection and response generation using AI models.

aiohue

Aiohue is an asynchronous library designed to control Philips Hue lights. It requires Python 3.10+ and utilizes asyncio and aiohttp. The library supports both V1 and V2 APIs of the Hue Bridge, with V2 API offering event-based updates to eliminate the need for polling. The contribution guidelines emphasize matching object hierarchy and property/method names with the Philips Hue API.

aioshelly

Aioshelly is an asynchronous library designed to control Shelly devices. It is currently under development and requires Python version 3.11 or higher, along with dependencies like bluetooth-data-tools, aiohttp, and orjson. The library provides examples for interacting with Gen1 devices using CoAP protocol and Gen2/Gen3 devices using RPC and WebSocket protocols. Users can easily connect to Shelly devices, retrieve status information, and perform various actions through the provided APIs. The repository also includes example scripts for quick testing and usage guidelines for contributors to maintain consistency with the Shelly API.

For similar jobs

Protofy

Protofy is a full-stack, batteries-included low-code enabled web/app and IoT system with an API system and real-time messaging. It is based on Protofy (protoflow + visualui + protolib + protodevices) + Expo + Next.js + Tamagui + Solito + Express + Aedes + Redbird + Many other amazing packages. Protofy can be used to fast prototype Apps, webs, IoT systems, automations, or APIs. It is a ultra-extensible CMS with supercharged capabilities, mobile support, and IoT support (esp32 thanks to esphome).

openmeter

OpenMeter is a real-time and scalable usage metering tool for AI, usage-based billing, infrastructure, and IoT use cases. It provides a REST API for integrations and offers client SDKs in Node.js, Python, Go, and Web. OpenMeter is licensed under the Apache 2.0 License.

AIOsense

AIOsense is an all-in-one sensor that is modular, affordable, and easy to solder. It is designed to be an alternative to commercially available sensors and focuses on upgradeability. AIOsense is cheaper and better than most commercial sensors and supports a variety of sensors and modules, including: - (RGB)-LED - Barometer - Breath VOC equivalent - Buzzer / Beeper - CO² equivalent - Humidity sensor - Light / Illumination sensor - PIR motion sensor - Temperature sensor - mmWave / Radar sensor Upcoming features include full voice assistant support, microphone, and speaker. All supported sensors & modules are listed in the documentation. AIOsense has a low power consumption, with an idle power consumption of 0.45W / 0.09A on a fully equipped board. Without a mmWave sensor, the idle power consumption is around 0.11W / 0.02A. To get started with AIOsense, you can refer to the documentation. If you have any questions, you can open an issue.

air780e-forwarder

This repository provides a tool for forwarding SMS and call notifications using various notification methods such as Telegram, PushDeer, Bark, DingTalk, Feishu, WeCom, Pushover, email, Gotify, Inotify, and SMTP protocol. It also allows controlling devices via SMS, scheduling base station positioning, querying data usage, reporting device status, power button operations, low power mode, message queue usage for sending notifications without freezing, automatic resend on notification failure, and support for master-slave mode for message forwarding.

aiocoap

aiocoap is a Python library that implements the Constrained Application Protocol (CoAP) using native asyncio methods in Python 3. It supports various CoAP standards such as RFC7252, RFC7641, RFC7959, RFC8323, RFC7967, RFC8132, RFC9176, RFC8613, and draft-ietf-core-oscore-groupcomm-17. The library provides features for clients and servers, including multicast support, blockwise transfer, CoAP over TCP, TLS, and WebSockets, No-Response, PATCH/FETCH, OSCORE, and Group OSCORE. It offers an easy-to-use interface for concurrent operations and is suitable for IoT applications.

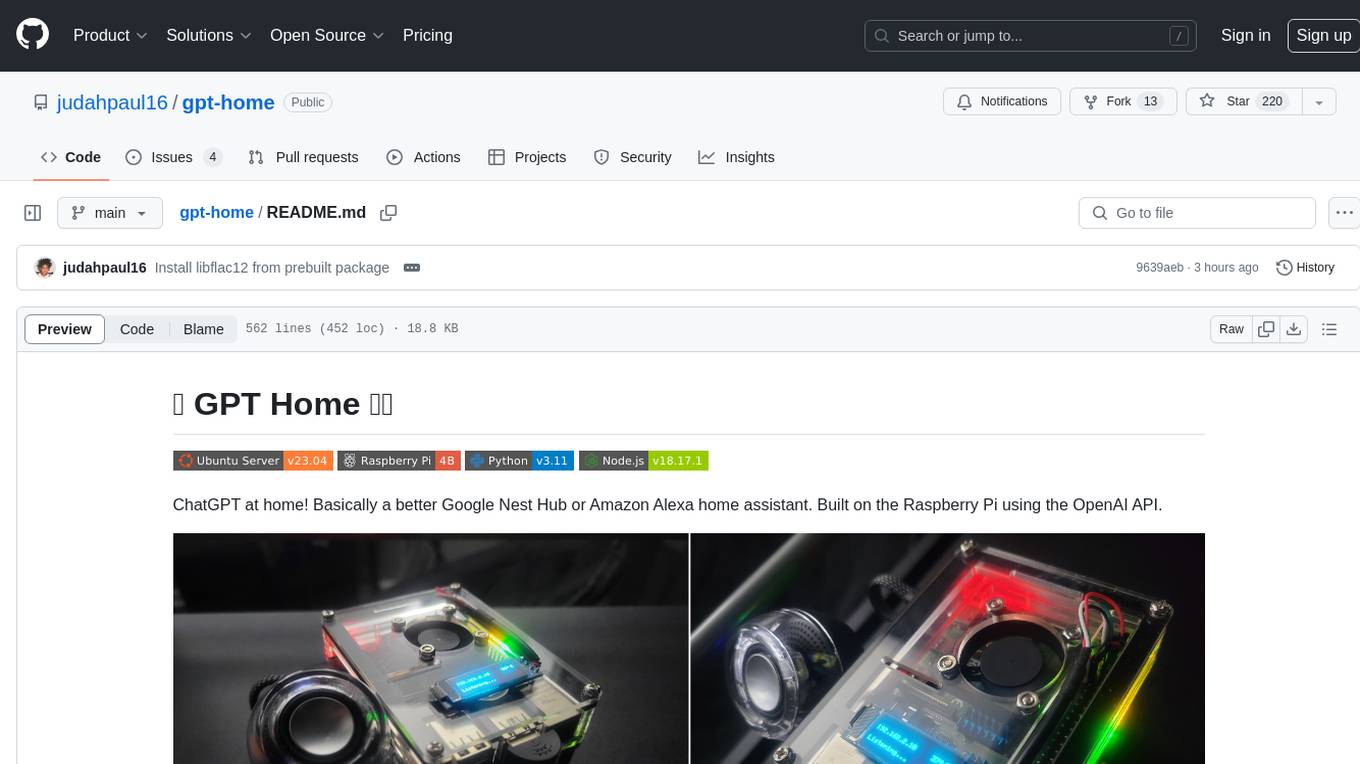

gpt-home

GPT Home is a project that allows users to build their own home assistant using Raspberry Pi and OpenAI API. It serves as a guide for setting up a smart home assistant similar to Google Nest Hub or Amazon Alexa. The project integrates various components like OpenAI, Spotify, Philips Hue, and OpenWeatherMap to provide a personalized home assistant experience. Users can follow the detailed instructions provided to build their own version of the home assistant on Raspberry Pi, with optional components for customization. The project also includes system configurations, dependencies installation, and setup scripts for easy deployment. Overall, GPT Home offers a DIY solution for creating a smart home assistant using Raspberry Pi and OpenAI technology.

AI-on-the-edge-device

AI-on-the-edge-device is a project that enables users to digitize analog water, gas, power, and other meters using an ESP32 board with a supported camera. It integrates Tensorflow Lite for AI processing, offers a small and affordable device with integrated camera and illumination, provides a web interface for administration and control, supports Homeassistant, Influx DB, MQTT, and REST API. The device captures meter images, extracts Regions of Interest (ROIs), runs them through AI for digitization, and allows users to send data to MQTT, InfluxDb, or access it via REST API. The project also includes 3D-printable housing options and tools for logfile management.

ai-edge-torch

AI Edge Torch is a Python library that supports converting PyTorch models into a .tflite format for on-device applications on Android, iOS, and IoT devices. It offers broad CPU coverage with initial GPU and NPU support, closely integrating with PyTorch and providing good coverage of Core ATen operators. The library includes a PyTorch converter for model conversion and a Generative API for authoring mobile-optimized PyTorch Transformer models, enabling easy deployment of Large Language Models (LLMs) on mobile devices.