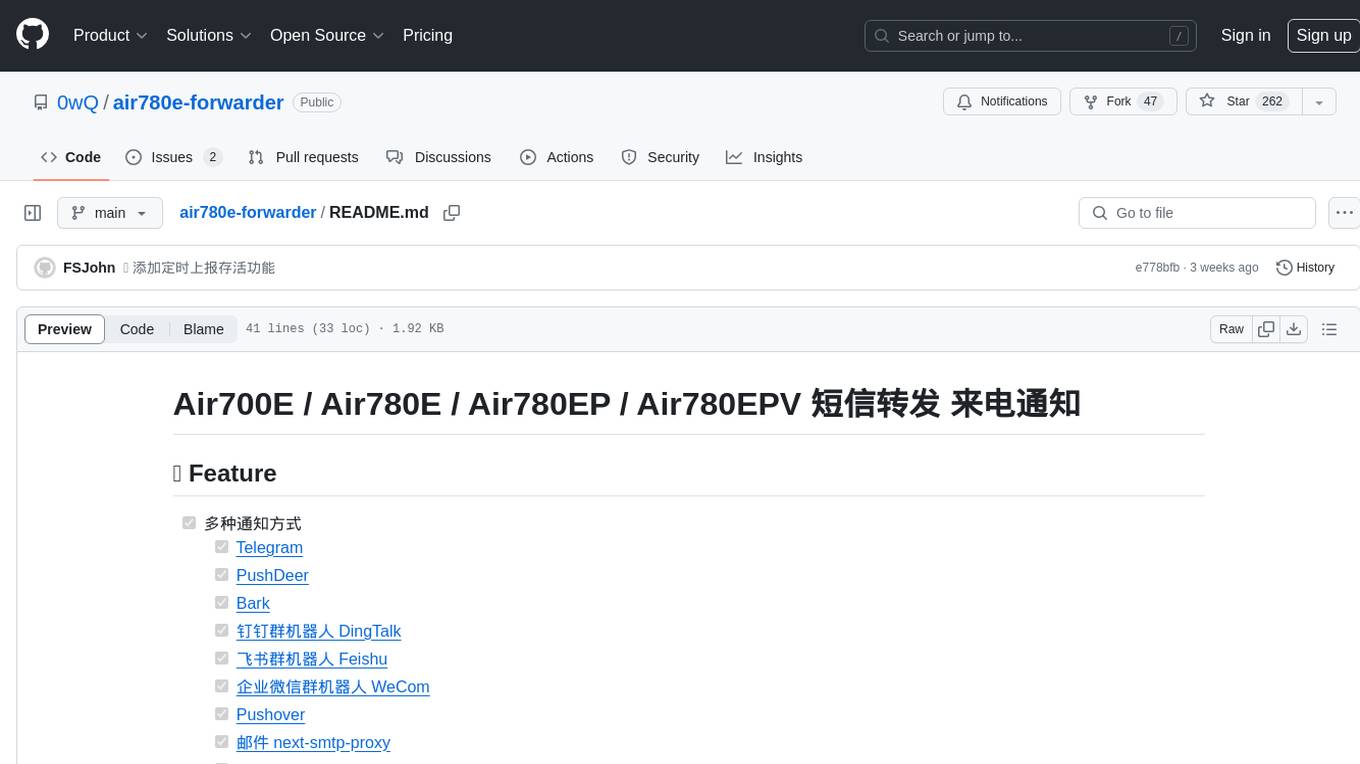

air780e-forwarder

SMS forwarding and incoming-call notification for the Hezhou (合宙) Air780-series 4G modules. For the Air724, see https://github.com/0wQ/air724ug-forwarder

Stars: 262

This repository provides a tool that forwards SMS messages and incoming-call notifications through Telegram, PushDeer, Bark, DingTalk, Feishu, WeCom, Pushover, Gotify, Inotify, and email (via next-smtp-proxy or SMTP directly). It also supports controlling the device by SMS, scheduled base-station positioning, scheduled data-usage queries, periodic keep-alive reports, power-button (POW) actions, a low-power mode, a message queue that keeps bursts of notifications from freezing the device, automatic resend of failed notifications, and a master-slave mode for relaying messages.

README:

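- [x] Multiple notification methods
  - [x] Telegram
  - [x] PushDeer
  - [x] Bark
  - [x] DingTalk group bot (钉钉群机器人)
  - [x] Feishu group bot (飞书群机器人)
  - [x] WeCom group bot (企业微信群机器人)
  - [x] Pushover
  - [x] Email via next-smtp-proxy
  - [x] Gotify
  - [x] Inotify / Hezhou's (合宙) official push service
  - [x] Email (SMTP protocol)
- [x] Control the device by SMS
  - [x] Send an SMS, format: `SMS,10010,余额查询` (the message text means "balance inquiry")
  - [x] Send an SMS, format:
- [x] Scheduled base-station positioning
- [x] Scheduled data-usage queries
- [x] Scheduled keep-alive reports
- [x] Power-on notification
- [x] Long-press and short-press actions on the POW button
- [x] Uses a message queue; in testing, adding several hundred notifications did not freeze the device
- [x] Failed notifications are resent automatically, and resending resumes after a power loss and reboot
- [x] Master-slave mode with one master and multiple slaves: each slave forwards messages over the serial port, and the master relays the messages it receives to the notification services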
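The SMS control format listed above (`SMS,10010,余额查询`) can be read as a keyword, a destination number, and the message text, separated by commas. The following is a minimal Python sketch of parsing such a command. It is an illustration only, not the project's firmware code: the names `SmsCommand` and `parse_sms_command` are hypothetical, and the validation rules (case-sensitive keyword, digits-only number with an optional leading `+`) are assumptions.

```python
from typing import NamedTuple, Optional


class SmsCommand(NamedTuple):
    """A parsed 'send SMS' control command (hypothetical type for illustration)."""
    number: str
    text: str


def parse_sms_command(body: str) -> Optional[SmsCommand]:
    """Parse 'SMS,<number>,<text>'; return None if the body is not such a command."""
    # Split on the first two commas only, so the message text may itself contain commas.
    parts = body.strip().split(",", 2)
    if len(parts) != 3:
        return None
    keyword, number, text = parts
    # Assumption: the keyword must match exactly (case-sensitive).
    if keyword != "SMS":
        return None
    number = number.strip()
    # Assumption: a destination is digits with an optional leading '+'.
    digits = number[1:] if number.startswith("+") else number
    if not digits.isdigit() or not text:
        return None
    return SmsCommand(number=number, text=text)


if __name__ == "__main__":
    print(parse_sms_command("SMS,10010,余额查询"))
```

Limiting the split to two commas keeps any commas inside the message text intact, which matters for free-form SMS content.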

https://mizore.notion.site/Air780E-e750efe0d6cc40c3baa276eeb811d534
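The README's claims about the message queue (failed notifications are resent, and resending resumes after a power loss) suggest a queue that is persisted to storage. One way such behavior could be sketched in Python is shown below. This is an assumption-laden illustration, not the project's implementation: the class `PersistentQueue`, the JSON file format, and the `max_attempts` parameter are all hypothetical.

```python
import json
import os
from collections import deque
from typing import Callable, Deque


class PersistentQueue:
    """FIFO of pending notifications, mirrored to a JSON file (hypothetical design)."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.items: Deque[str] = deque()
        # Reload anything left over from a previous run (for example after power loss).
        if os.path.exists(path):
            with open(path, "r", encoding="utf-8") as f:
                self.items.extend(json.load(f))

    def _save(self) -> None:
        # Write to a temp file, then rename it over the old one, so an
        # interrupted write is less likely to corrupt the existing queue file.
        tmp = self.path + ".tmp"
        with open(tmp, "w", encoding="utf-8") as f:
            json.dump(list(self.items), f, ensure_ascii=False)
        os.replace(tmp, self.path)

    def push(self, message: str) -> None:
        """Append a message and persist the queue immediately."""
        self.items.append(message)
        self._save()

    def drain(self, send: Callable[[str], bool], max_attempts: int = 3) -> int:
        """Try to send queued messages in order; return how many were delivered."""
        delivered = 0
        while self.items:
            message = self.items[0]
            ok = False
            for _ in range(max_attempts):
                if send(message):
                    ok = True
                    break
            if not ok:
                # Leave this message (and everything behind it) queued for a later retry.
                break
            self.items.popleft()
            self._save()
            delivered += 1
        return delivered
```

A message is removed from the file only after `send` reports success, so anything still pending at power-off is reloaded by the constructor on the next start.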


Similar Open Source Tools


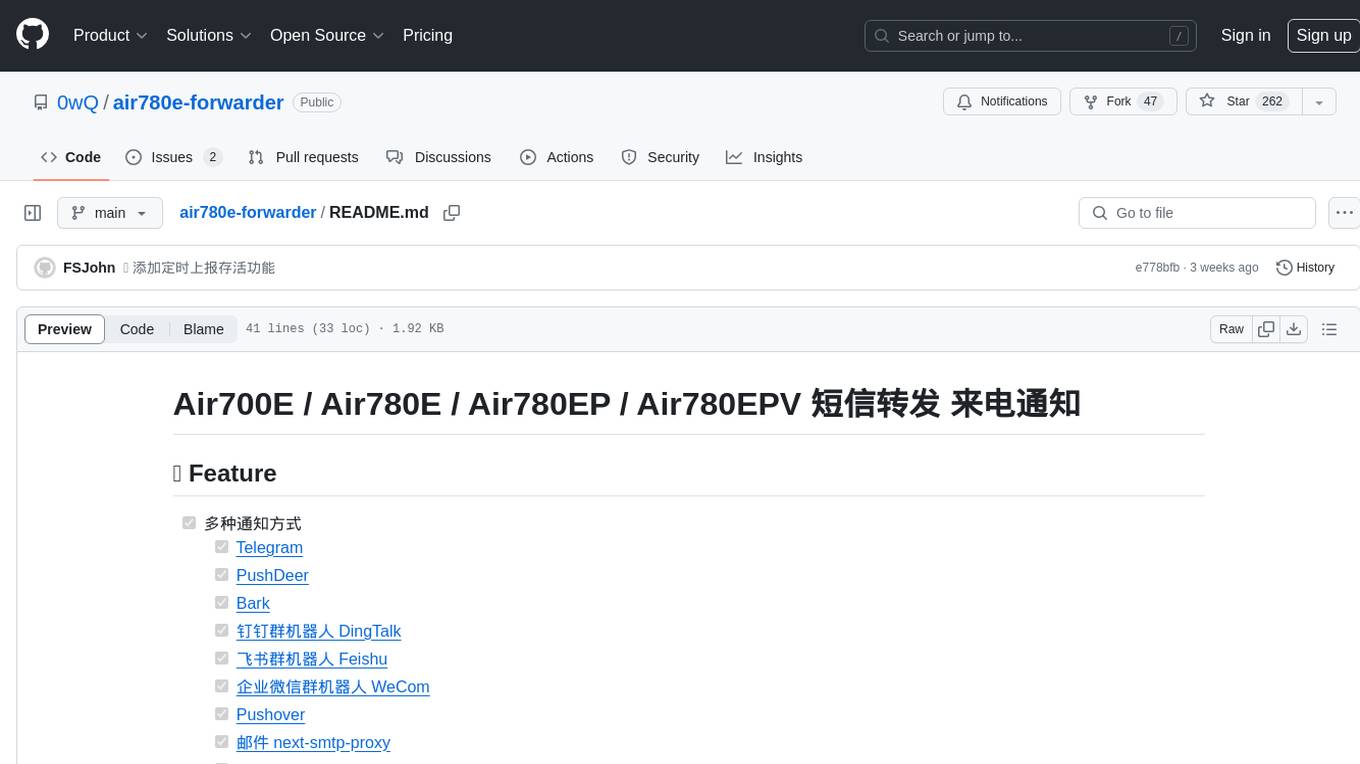

witsy

Witsy is a generative AI desktop application that supports various models like OpenAI, Ollama, Anthropic, MistralAI, Google, Groq, and Cerebras. It offers features such as chat completion, image generation, scratchpad for content creation, prompt anywhere functionality, AI commands for productivity, expert prompts for specialization, LLM plugins for additional functionalities, read aloud capabilities, chat with local files, transcription/dictation, Anthropic Computer Use support, local history of conversations, code formatting, image copy/download, and more. Users can interact with the application to generate content, boost productivity, and perform various AI-related tasks.

memoflow

MemoFlow is a Flutter mobile client for the Memos backend. It is an independent third-party client, not affiliated with the official Memos project. The app prioritizes offline functionality with a local SQLite cache and an Outbox retry queue. It supports creating, editing, searching, pinning, archiving, and deleting memos with Markdown, tags, and task lists. Users can quickly input content with draft saving, tag suggestions, bidirectional references, attachments, photos, and undo/redo. The app also features attachment browsing with image preview, audio playback, and voice memo recording. Users can review memos at random, view local statistics, share summaries, and configure AI reports. The app supports logging in to multiple accounts with PATs, a compatibility mode for older Memos versions, widgets, app lock, preference settings, Markdown+ZIP export, and more.

LLMFarm

LLMFarm is an iOS and macOS app designed to work with large language models (LLMs). It allows users to load different LLMs with specific parameters, test the performance of various LLMs on iOS and macOS, and identify the most suitable model for their projects. The tool is based on ggml and llama.cpp by Georgi Gerganov and incorporates sources from rwkv.cpp by saharNooby, Mia by byroneverson, and LlamaChat by alexrozanski. LLMFarm supports macOS (13+) and iOS (16+), various inference and sampling methods, Metal (not supported on Intel Macs), model setting templates, LoRA adapters, LoRA fine-tuning, LoRA export as a model, and more. Supported inference architectures include LLaMA, GPTNeoX, Replit, GPT2, Starcoder, RWKV, Falcon, MPT, Bloom, and others. It also supports multimodal models such as LLaVA, Obsidian, and MobileVLM. Users can customize inference options through JSON files and download supported models.

LearnPrompt

LearnPrompt is a permanent, free, open-source AIGC course platform that currently supports various tools like ChatGPT, Agent, Midjourney, Runway, Stable Diffusion, AI digital humans, AI voice & music, and large model fine-tuning. The platform offers features such as multilingual support, comment sections, daily selections, and submissions. Users can explore different modules, including sound cloning, RAG, GPT-SoVits, and OpenAI Sora world model. The platform aims to continuously update and provide tutorials, examples, and knowledge systems related to AI technologies.

dify-helm

Deploys langgenius/dify, an LLM-based chatbot app, on Kubernetes with a Helm chart.

feast

Feast is an open source feature store for machine learning, providing a fast path to manage infrastructure for productionizing analytic data. It allows ML platform teams to make features consistently available, avoid data leakage, and decouple ML from data infrastructure. Feast abstracts feature storage from retrieval, ensuring portability across different model training and serving scenarios.

AIDE-Plus

AIDE-Plus is a comprehensive tool for Android app development, offering support for various Java syntax versions, Gradle and Maven build systems, ProGuard, AndroidX, CMake builds, APK/AAB generation, code coloring customization, data binding, and APK signing. It also provides features like AAPT2, D8, runtimeOnly, compileOnly, libgdxNatives, manifest merging, Shizuku installation support, and syntax auto-completion. The tool aims to streamline the development process and enhance the user experience by addressing common issues and providing advanced functionalities.

Eridanus

Eridanus is a powerful data visualization tool designed to help users create interactive and insightful visualizations from their datasets. With a user-friendly interface and a wide range of customization options, Eridanus makes it easy for users to explore and analyze their data in a meaningful way. Whether you are a data scientist, business analyst, or student, Eridanus provides the tools you need to communicate your findings effectively and make data-driven decisions.

Everything-LLMs-And-Robotics

The Everything-LLMs-And-Robotics repository is the world's largest GitHub repository focusing on the intersection of Large Language Models (LLMs) and Robotics. It provides educational resources, research papers, project demos, and Twitter threads related to LLMs, Robotics, and their combination. The repository covers topics such as reasoning, planning, manipulation, instructions and navigation, simulation frameworks, perception, and more, showcasing the latest advancements in the field.

Awesome-LLM-Robotics

This repository contains a curated list of **papers using Large Language/Multi-Modal Models for Robotics/RL**, built on the template from awesome-Implicit-NeRF-Robotics. Pull requests and emails suggesting additional papers are welcome, and readers who find the list useful are encouraged to cite, star, and share it. The list is organized into Surveys, Reasoning, Planning, Manipulation, Instructions and Navigation, Simulation Frameworks, and Citation.

Jarvis

Jarvis is a powerful virtual AI assistant designed to simplify daily tasks through voice command integration. It features automation, device management, and personalized interactions, transforming technology engagement. Built using Python and AI models, it serves personal and administrative needs efficiently, making processes seamless and productive.

FluidFrames.RIFE

FluidFrames.RIFE is a Windows app powered by RIFE AI that generates intermediate frames and creates slow-motion videos. It is written in Python and uses external packages such as torch, onnxruntime-directml, customtkinter, OpenCV, moviepy, and Nuitka. The app features an elegant GUI, video frame generation at different speeds, video slow motion, video resizing, multiple GPU support, and compatibility with various video formats. Future versions aim to support different GPU types, enhance the GUI, include audio processing, optimize video processing speed, and introduce new features such as saving AI-generated frames and supporting different RIFE AI models.

anylabeling

AnyLabeling is a tool for effortless data labeling with AI support from YOLO and Segment Anything. It combines features from LabelImg and Labelme with an improved UI and auto-labeling capabilities. Users can annotate images with polygons, rectangles, circles, lines, and points, as well as perform auto-labeling using YOLOv5 and Segment Anything. The tool also supports text detection, recognition, and Key Information Extraction (KIE) labeling, with multiple language options available such as English, Vietnamese, and Chinese.

Awesome-Robotics-3D

Awesome-Robotics-3D is a curated list of 3D vision papers related to the robotics domain, focusing on large models such as LLMs and VLMs. It groups papers under Policy Learning; Pretraining; VLM and LLM; Representations; and Simulations, Datasets, and Benchmarks. The repository is maintained by Zubair Irshad and welcomes contributions and suggestions for adding papers. It serves as a resource for researchers and practitioners in robotics and computer vision.

RealScaler

RealScaler is a Windows app powered by RealESRGAN AI to enhance, upscale, and de-noise photos and videos. It provides an easy-to-use GUI for upscaling images and videos using multiple AI models. The tool supports automatic image tiling and merging to avoid GPU VRAM limitations, resizing images and videos before upscaling, interpolation between original and upscaled content, and compatibility with various image and video formats. RealScaler is written in Python and requires Windows 11/10, at least 8 GB of RAM, and a DirectX 12-compatible GPU with 4 GB of VRAM. Future versions aim to improve performance, support more GPUs, offer a new Windows 11-style GUI, include audio in upscaled videos, and copy metadata from original files to upscaled files.

For similar tasks


Kai

Kai is a cross-platform open-source AI interface that runs on Android, iOS, Windows, Mac, Linux, and Web. It supports encrypted local history storage, text to speech output, seamless switch between services, and file attachments. Users can enable or disable tools like Get Local Time, Get Location, Send Notification, Create Calendar Event, Check Recent SMS, and Send SMS. The tool is designed to provide various AI services and features for different platforms.

For similar jobs


Protofy

Protofy is a full-stack, batteries-included, low-code-enabled web/app and IoT system with an API system and real-time messaging. It is based on Protofy (protoflow + visualui + protolib + protodevices) + Expo + Next.js + Tamagui + Solito + Express + Aedes + Redbird + many other packages. Protofy can be used to quickly prototype apps, websites, IoT systems, automations, or APIs. It is an ultra-extensible CMS with mobile support and IoT support (ESP32, thanks to ESPHome).

openmeter

OpenMeter is a real-time and scalable usage metering tool for AI, usage-based billing, infrastructure, and IoT use cases. It provides a REST API for integrations and offers client SDKs in Node.js, Python, Go, and Web. OpenMeter is licensed under the Apache 2.0 License.

AIOsense

AIOsense is an all-in-one sensor that is modular, affordable, and easy to solder. It is designed to be an alternative to commercially available sensors and focuses on upgradeability. AIOsense is cheaper and better than most commercial sensors and supports a variety of sensors and modules, including an (RGB) LED, barometer, breath VOC equivalent, buzzer/beeper, CO₂ equivalent, humidity sensor, light/illumination sensor, PIR motion sensor, temperature sensor, and mmWave/radar sensor. Upcoming features include full voice assistant support, a microphone, and a speaker. All supported sensors and modules are listed in the documentation. AIOsense has low power consumption: a fully equipped board idles at 0.45 W / 0.09 A, and without a mmWave sensor the idle consumption is around 0.11 W / 0.02 A. To get started with AIOsense, refer to the documentation. If you have any questions, you can open an issue.

aiocoap

aiocoap is a Python library that implements the Constrained Application Protocol (CoAP) using native asyncio methods in Python 3. It supports various CoAP standards such as RFC7252, RFC7641, RFC7959, RFC8323, RFC7967, RFC8132, RFC9176, RFC8613, and draft-ietf-core-oscore-groupcomm-17. The library provides features for clients and servers, including multicast support, blockwise transfer, CoAP over TCP, TLS, and WebSockets, No-Response, PATCH/FETCH, OSCORE, and Group OSCORE. It offers an easy-to-use interface for concurrent operations and is suitable for IoT applications.

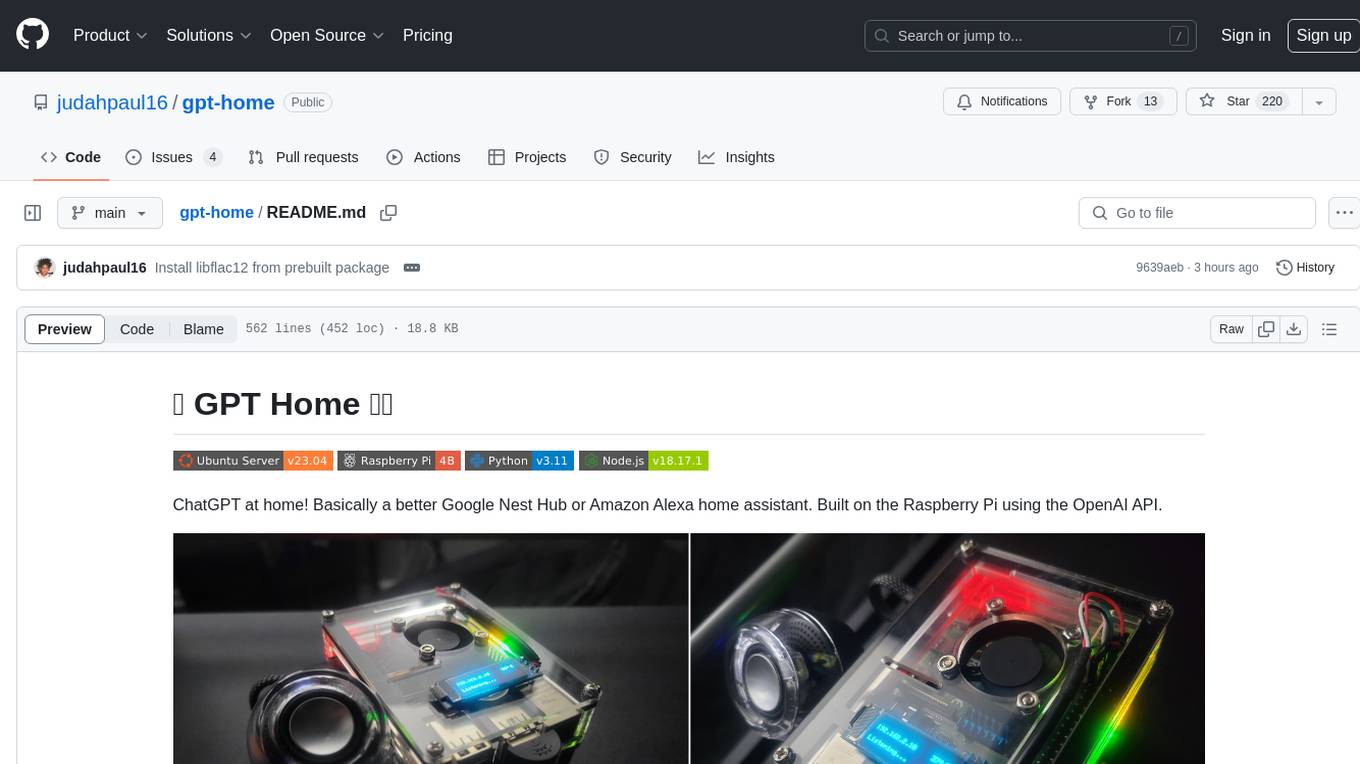

gpt-home

GPT Home is a project that allows users to build their own home assistant using Raspberry Pi and OpenAI API. It serves as a guide for setting up a smart home assistant similar to Google Nest Hub or Amazon Alexa. The project integrates various components like OpenAI, Spotify, Philips Hue, and OpenWeatherMap to provide a personalized home assistant experience. Users can follow the detailed instructions provided to build their own version of the home assistant on Raspberry Pi, with optional components for customization. The project also includes system configurations, dependencies installation, and setup scripts for easy deployment. Overall, GPT Home offers a DIY solution for creating a smart home assistant using Raspberry Pi and OpenAI technology.

AI-on-the-edge-device

AI-on-the-edge-device is a project that enables users to digitize analog water, gas, power, and other meters using an ESP32 board with a supported camera. It uses TensorFlow Lite for AI processing, runs on a small, affordable device with an integrated camera and illumination, provides a web interface for administration and control, and supports Home Assistant, InfluxDB, MQTT, and a REST API. The device captures meter images, extracts Regions of Interest (ROIs), runs them through AI for digitization, and lets users send the data to MQTT or InfluxDB or access it via the REST API. The project also includes 3D-printable housing options and tools for log file management.

ai-edge-torch

AI Edge Torch is a Python library that supports converting PyTorch models into a .tflite format for on-device applications on Android, iOS, and IoT devices. It offers broad CPU coverage with initial GPU and NPU support, closely integrating with PyTorch and providing good coverage of Core ATen operators. The library includes a PyTorch converter for model conversion and a Generative API for authoring mobile-optimized PyTorch Transformer models, enabling easy deployment of Large Language Models (LLMs) on mobile devices.