ChatGPT-OpenAI-Smart-Speaker

This AI Smart Speaker uses speech recognition and text-to-speech to enable voice-driven conversations and vision capabilities with OpenAI and Agents. The user speaks a prompt into the microphone, and the program sends the prompt to OpenAI to generate a response. The response is then converted to an audio file and played back to the user.

Stars: 188

ChatGPT Smart Speaker is a project that enables speech recognition and text-to-speech functionalities using OpenAI and Google Speech Recognition. It provides scripts for running on PC/Mac and Raspberry Pi, allowing users to interact with a smart speaker setup. The project includes detailed instructions for setting up the required hardware and software dependencies, along with customization options for the OpenAI model engine, language settings, and response randomness control. The Raspberry Pi setup involves utilizing the ReSpeaker hardware for voice feedback and light shows. The project aims to offer an advanced smart speaker experience with features like wake word detection and response generation using AI models.

README:

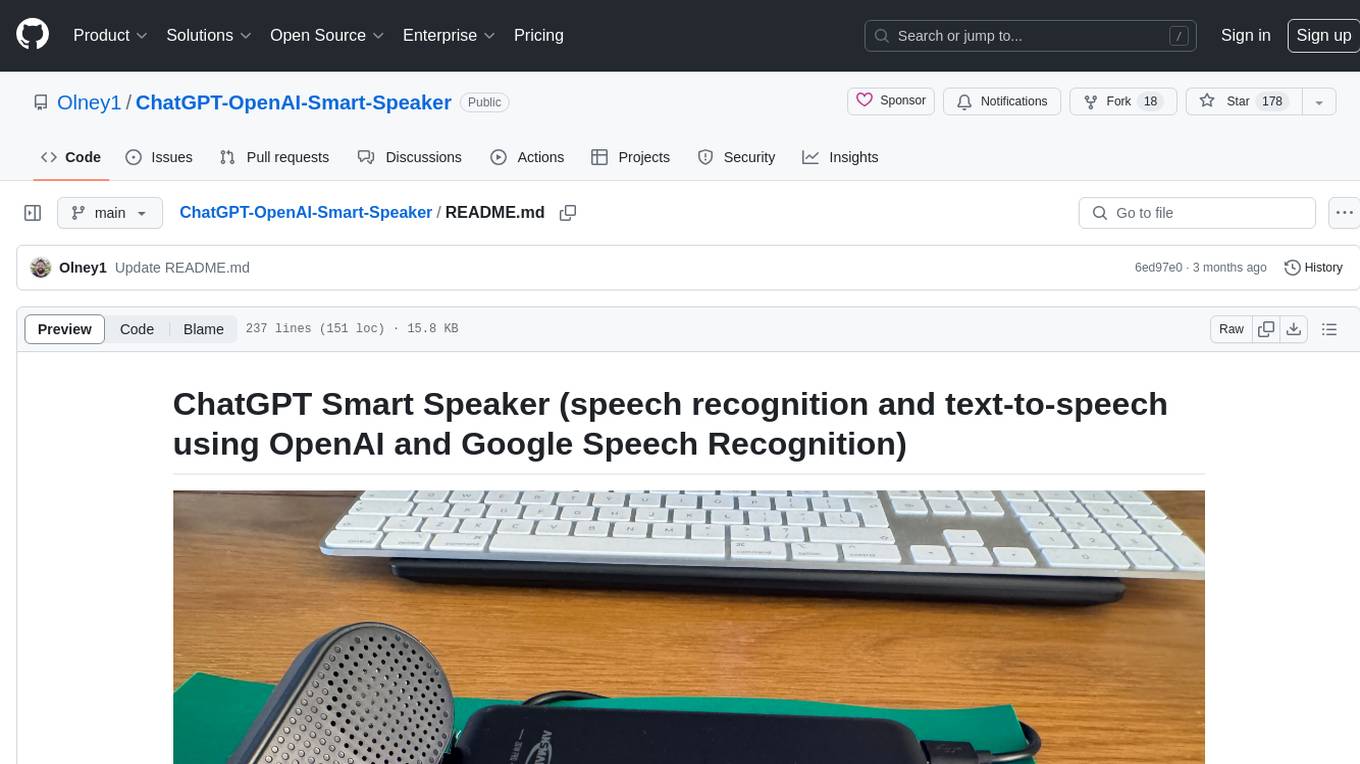

ChatGPT Smart Speaker (speech recognition and text-to-speech using OpenAI and Google Speech Recognition)

Video Demo using activation word "Jeffers"

Video Demo with Vision

The chat.py and test.py scripts run directly on your PC/Mac. Both use speech recognition to capture a prompt, send the prompt to OpenAI to generate a response, and then use gTTS to convert the response to an audio file and play it on your Mac/PC. Your PC/Mac must have a working default microphone and speakers for these scripts to work. Please note that these scripts were designed on a Mac, so additional dependencies may be required on Windows and Linux. The difference between them is that chat.py is faster and always listening, whereas test.py acts like a standard smart speaker and only responds once it hears the activation word (currently set to 'Jeffers').
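The activation-word behaviour can be pictured as a small gate on the recognised text. This is a minimal sketch, not the code in test.py: the function name `extract_prompt` and the choice to return whatever follows the activation word are assumptions made for illustration.

```python
from typing import Optional


def extract_prompt(transcript: str, wake_word: str = "jeffers") -> Optional[str]:
    """Return the text spoken after the wake word, or None if it was not heard.

    Hypothetical helper: an always-on script would skip this gate and treat
    the whole transcript as the prompt.
    """
    words = transcript.strip().split()
    # Compare case-insensitively and ignore punctuation attached to a word.
    lowered = [word.lower().strip(",.!?") for word in words]
    target = wake_word.lower()
    if target not in lowered:
        return None
    start = lowered.index(target) + 1
    return " ".join(words[start:])
```

For example, `extract_prompt("Jeffers, what time is it?")` yields the question without the activation word, while a transcript that never mentions it yields `None` and can simply be ignored.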

The pi.py script is a newer, more advanced custom version of the smart_speaker.py script and is the closest to a real smart speaker. Its purpose is to offload wake word detection to a custom model built via PicoVoice (https://console.picovoice.ai/), which improves efficiency and long-term reliability. This will be the main script for development moving forward, with more advanced features to be added regularly.
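Offloading the wake word to a dedicated model usually reduces to a loop that feeds audio frames to a detector until it fires. The sketch below assumes the Picovoice Porcupine Python package (`pvporcupine`) is the engine behind the custom model; the helper names `wait_for_wake_word` and `make_porcupine` are hypothetical and are not taken from pi.py.

```python
from typing import Iterable, Optional, Sequence


def wait_for_wake_word(detector, frames: Iterable[Sequence[int]]) -> Optional[int]:
    """Return the index of the first frame in which the wake word fires, else None."""
    for index, frame in enumerate(frames):
        # Porcupine-style detectors return the keyword index, or -1 for no match.
        if detector.process(frame) >= 0:
            return index
    return None


def make_porcupine(access_key: str, keyword_path: str):
    """Build a real detector from a downloaded .ppn file (assumes pvporcupine is installed)."""
    import pvporcupine  # imported lazily so the sketch loads without the package

    return pvporcupine.create(access_key=access_key, keyword_paths=[keyword_path])
```

Any object with a `process(frame)` method can stand in for the detector, which makes the loop easy to try out on a PC before moving to the Pi and its microphone array.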

- You need to have a valid OpenAI API key. You can sign up for a free API key at https://platform.openai.com.

- You'll need to be running Python version 3.7.3 or higher. I am using 3.11.4 on a Mac and 3.7.3 on Raspberry Pi.

- Run

brew install portaudio

after installing Homebrew:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

- You need to install the following packages: openai, gTTS, pyaudio, SpeechRecognition, playsound, python-dotenv and pyobjc if you are on a Mac. You can install these packages using pip or use pipenv if you wish to contain a virtual environment.

- Firstly, update your tools:

pip install --upgrade pip setuptools

then

pip install openai pyaudio SpeechRecognition gTTS playsound python-dotenv apa102-pi gpiozero pyobjc

To run pi.py you will need a Raspberry Pi 4b (I'm using the 4GB model but 2GB should be enough), ReSpeaker 4-Mic Array for Raspberry Pi and USB speakers.

You will also need a developer account and API key with OpenAI (https://platform.openai.com/overview), a Tavily Search agent API key (https://app.tavily.com/sign-in), and an Access Key and Custom Voice Model with PicoVoice (https://console.picovoice.ai/ and https://console.picovoice.ai/ppn respectively). Please create your own voice model and download the correct version for use on a Raspberry Pi.

Now on to the Pi setup. Let's get started!

Run the following on your Raspberry Pi terminal:

- sudo apt update

- sudo apt install python3-gpiozero

- git clone https://github.com/Olney1/ChatGPT-OpenAI-Smart-Speaker

- Firstly, update your tools:

pip install --upgrade pip setuptools

then

pip install openai pyaudio SpeechRecognition gTTS pydub python-dotenv apa102-pi gpiozero

Next, install the dependencies:

pip install -r requirements.txt

I am using Python 3.9 (#!/usr/bin/env python3.9). You can install these packages using pip or use pipenv if you wish to contain a virtual environment.

- PyAudio relies on PortAudio as a dependency. You can install it using the following command:

sudo apt-get install portaudio19-dev

- Pydub dependencies: You need to have ffmpeg installed on your system. On a Raspberry Pi you can install it using:

sudo apt-get install ffmpeg

You may also need simpleaudio if you run into issues with the script hanging when finding the wake word, so it's best to install these packages just in case: sudo apt-get install python3-dev (for development headers to compile), install simpleaudio (for a different backend to play mp3 files) and sudo apt-get install libasound2-dev (necessary dependencies).

- If you are using the ReSpeaker, follow this guide to install the required dependencies: (https://wiki.seeedstudio.com/ReSpeaker_4_Mic_Array_for_Raspberry_Pi/#getting-started). Then install support for the lights on the ReSpeaker board. You'll need APA102 LED support:

sudo apt install -y python3-rpi.gpio

and then

sudo pip3 install apa102-pi

- Activate SPI: run sudo raspi-config; go to "Interface Options"; go to "SPI"; enable SPI. While you are at it, do change the default password! Exit the tool and reboot.

- Get the Seeed voice card source code, install and reboot:

git clone https://github.com/HinTak/seeed-voicecard.git

cd seeed-voicecard

sudo ./install.sh

sudo reboot now

- Finally, load audio output on Raspberry Pi:

sudo raspi-config

Select 1 System options, select S2 Audio, select your preferred audio output device, then select Finish.

- You'll need to set up the environment variable for your OpenAI API key. To do this, create a .env file in the same directory and add your API key to the file like this: OPENAI_API_KEY="API KEY GOES HERE". This is safer than hard-coding your API key into the program. You must not change the name of the variable OPENAI_API_KEY.

- Run the script using python chat.py.

- The script will prompt you to say something. Speak a sentence into your microphone. On a Mac you may need to grant the program permission to access your microphone; a prompt should appear when you run the program.

- The script will send the spoken sentence to OpenAI to generate a response, convert the response to speech, and play it as an audio file.
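The steps above (listen, ask, speak) can be sketched as one conversation turn. This is an illustration under stated assumptions rather than the structure of chat.py: `run_turn` and `speak_with_gtts` are hypothetical names, the three stages are passed in as plain callables so the flow can be exercised without a microphone or an API key, and the fallback sentence is invented for the example.

```python
from typing import Callable


def run_turn(listen: Callable[[], str],
             ask: Callable[[str], str],
             speak: Callable[[str], None]) -> str:
    """One turn: capture a prompt, get a reply, play it back, return the reply."""
    prompt = listen()
    if not prompt.strip():
        # Nothing intelligible was heard, so skip the API call entirely.
        reply = "Sorry, I did not catch that."
    else:
        reply = ask(prompt)
    speak(reply)
    return reply


def speak_with_gtts(text: str, language: str = "en", path: str = "response.mp3") -> str:
    """Save the reply as an mp3 with gTTS (needs the gTTS package and network access)."""
    from gtts import gTTS  # imported lazily so the sketch loads without gTTS

    gTTS(text=text, lang=language).save(path)
    return path  # playing the file back is left to the caller
```

In a real script, `listen` would wrap the speech recognition call and `ask` would wrap the OpenAI request; keeping them separate makes each stage easy to swap or test on its own.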

- You'll need to set up the environment variables for your OpenAI API key, PicoVoice Access Key and Tavily API key for agent searches. To do this, create a .env file in the same directory and add your API keys to the file like this: OPENAI_API_KEY="API KEY GOES HERE" and ACCESS_KEY="PICOVOICE ACCESS KEY GOES HERE" and TAVILY_API_KEY="API KEY GOES HERE". This is safer than hard-coding your API keys into the program.

- Ensure that you have the pi.py script along with the apa102.py and alexa_led_pattern.py scripts in the same folder on your Pi if using ReSpeaker.

- Run the script using python3 pi.py or python3 pi.py 2> /dev/null on the Raspberry Pi. The second option discards everything written to standard error (developer warnings and errors) to keep the console focused purely on the print statements.

- The script will prompt you to say the wake word, which is programmed into the custom Picovoice wake word model as 'Jeffers'. You can change this to any name you want. Once the wake word has been detected the lights will light up blue, and the speaker is ready for your question. When you have asked your question, or when the microphone picks up and processes noise, the lights will rotate blue, meaning that your recording sample/question is being sent to OpenAI.

- The script will then generate a response, convert it to speech, and play it as an audio file.
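Because pi.py depends on three keys, it can help to check the environment before starting the audio loop. The following is a minimal sketch, assuming the variable names shown in the .env example and the python-dotenv package from the install list; `missing_keys` and `load_and_check` are hypothetical helpers, not part of the project.

```python
import os
from typing import Iterable, List, Mapping

# Variable names taken from the .env example in this README.
REQUIRED_KEYS = ("OPENAI_API_KEY", "ACCESS_KEY", "TAVILY_API_KEY")


def missing_keys(env: Mapping[str, str],
                 required: Iterable[str] = REQUIRED_KEYS) -> List[str]:
    """Return the required variable names that are absent or blank in env."""
    return [name for name in required if not env.get(name, "").strip()]


def load_and_check() -> None:
    """Load .env from the working directory, then fail early if a key is missing."""
    from dotenv import load_dotenv  # python-dotenv, imported lazily

    load_dotenv()
    missing = missing_keys(os.environ)
    if missing:
        raise SystemExit("Missing from .env: " + ", ".join(missing))
```

Calling `load_and_check()` at the top of a script turns a missing key into a clear message at start-up instead of an error in the middle of a conversation.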

- You can change the OpenAI model engine by modifying the value of model_engine. For example, to use the "gpt-3.5-turbo" model for a cheaper and quicker response but with a knowledge cut-off of Sep 2021, set model_engine = "gpt-3.5-turbo".

- You can change the language of the generated audio file by modifying the value of language. For example, to generate audio in French, set language = 'fr'.

- You can adjust the temperature parameter in the following line to control the randomness of the generated response:

    response = client.chat.completions.create(
        model=model_engine,
        messages=[
            {"role": "system", "content": "You are a helpful smart speaker called Jeffers!"},  # Play about with more context here.
            {"role": "user", "content": prompt},
        ],
        max_tokens=1024,
        n=1,
        temperature=0.7,
    )
    return response

Higher values of temperature will result in more diverse and random responses, while lower values will result in more deterministic responses.
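To see why temperature has this effect, it helps to look at how temperature is commonly applied when sampling from a language model: scores are divided by the temperature before being turned into probabilities. The sketch below illustrates that general technique with made-up scores; it is not a description of OpenAI's internal implementation, and `softmax_with_temperature` is a hypothetical name.

```python
import math
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        # This sketch only covers positive temperatures.
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(score - peak) for score in scaled]
    total = sum(weights)
    return [weight / total for weight in weights]


scores = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
cold = softmax_with_temperature(scores, 0.2)  # sharply peaked on the top token
warm = softmax_with_temperature(scores, 1.5)  # flatter, so sampling varies more
```

With the low temperature almost all of the probability sits on the highest-scoring candidate, while the higher temperature spreads it across the alternatives, which is where the extra variety in responses comes from.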

If you are using the same USB speaker as in my video, you will need to run sudo apt-get install pulseaudio to install support for it. You may also need to set a command to start pulseaudio on every boot: pulseaudio --start.

Open the terminal and type: sudo nano /etc/rc.local

After the important network/start commands, add this: su -l pi -c 'cd /home/pi/ChatGPT-OpenAI-Smart-Speaker/ && pulseaudio --start && python3 pi.py 2> /dev/null'

Be sure to leave the line exit 0 at the end, then save the file and exit. In nano, to exit, type Ctrl-x, and then Y.

If you want to use ReSpeaker for the lights, you can purchase this from most of the major online stores that stock Raspberry Pi. Here is the online guide: https://wiki.seeedstudio.com/ReSpeaker_4_Mic_Array_for_Raspberry_Pi/

To test your microphone and speakers install Audacity on your Raspberry Pi:

sudo apt update

sudo apt install audacity

audacity

On the Raspberry Pi you may encounter an error regarding the installation of flac.

See here for the resolution: https://raspberrypi.stackexchange.com/questions/137630/im-unable-to-install-flac-on-my-raspberry-pi-3

The files you will need are going to be here: https://archive.raspbian.org/raspbian/pool/main/f/flac/

Please note that the file names in the commands below may have changed, so refer back to the link above for the latest file names and update the commands accordingly.

$ sudo apt-get install libogg0

$ wget https://archive.raspbian.org/raspbian/pool/main/f/flac/libflac8_1.3.2-3+deb10u3_armhf.deb

$ wget https://archive.raspbian.org/raspbian/pool/main/f/flac/flac_1.3.2-3+deb10u3_armhf.deb

$ sudo dpkg -i libflac8_1.3.2-3+deb10u3_armhf.deb

$ sudo dpkg -i flac_1.3.2-3+deb10u3_armhf.deb

$ which flac

/usr/bin/flac

$ sudo reboot

$ flac --version

flac 1.3.2
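Both flac and ffmpeg are system programs rather than Python packages, so pip will not report them as missing. A small check with the standard library can confirm they are on the PATH before the speaker script runs. This is a sketch only; `missing_tools` is a hypothetical helper, and the default tool list simply reflects the two programs mentioned in this README.

```python
import shutil
from typing import Callable, Iterable, List, Optional


def missing_tools(tools: Iterable[str] = ("flac", "ffmpeg"),
                  which: Callable[[str], Optional[str]] = shutil.which) -> List[str]:
    """Return the command-line tools that cannot be found on the PATH."""
    # `which` is injectable so the check can be tried without touching the system.
    return [tool for tool in tools if which(tool) is None]
```

Running `missing_tools()` on the Pi should return an empty list once the packages above are installed; any name it returns still needs installing.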

You may find you need to install GStreamer if you encounter errors regarding Gst.

Install GStreamer: Open a terminal and run the following command to install GStreamer and its base plugins:

sudo apt-get install gstreamer1.0-tools gstreamer1.0-plugins-base gstreamer1.0-plugins-good

This installs the GStreamer core, along with a set of essential and good-quality plugins.

Next, you need to install the Python bindings for GStreamer. Use this command:

sudo apt-get install python3-gst-1.0

This command installs the GStreamer bindings for Python 3.

Install Additional GStreamer Plugins (if needed): Depending on the audio formats you need to work with, you might need additional GStreamer plugins. For example, to install plugins for MP3 playback, use:

sudo apt-get install gstreamer1.0-plugins-ugly

To quit a running script on Pi from boot: ALT + PrtScSysRq (or Print button) + K

Credit to https://github.com/tinue/apa102-pi and Seeed Technology Limited for supplementary code.

https://medium.com/@ben_olney/openai-smart-speaker-with-raspberry-pi-5e284d21a53e

Alternative AI tools for ChatGPT-OpenAI-Smart-Speaker

Similar Open Source Tools

Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

ai-voice-cloning

This repository provides a tool for AI voice cloning, allowing users to generate synthetic speech that closely resembles a target speaker's voice. The tool is designed to be user-friendly and accessible, with a graphical user interface that guides users through the process of training a voice model and generating synthetic speech. The tool also includes a variety of features that allow users to customize the generated speech, such as the pitch, volume, and speaking rate. Overall, this tool is a valuable resource for anyone interested in creating realistic and engaging synthetic speech.

llamafile

llamafile is a tool that enables users to distribute and run Large Language Models (LLMs) with a single file. It combines llama.cpp with Cosmopolitan Libc to create a framework that simplifies the complexity of LLMs into a single-file executable called a 'llamafile'. Users can run these executable files locally on most computers without the need for installation, making open LLMs more accessible to developers and end users. llamafile also provides example llamafiles for various LLM models, allowing users to try out different LLMs locally. The tool supports multiple CPU microarchitectures, CPU architectures, and operating systems, making it versatile and easy to use.

CLI

Bito CLI provides a command line interface to the Bito AI chat functionality, allowing users to interact with the AI through commands. It supports complex automation and workflows, with features like long prompts and slash commands. Users can install Bito CLI on Mac, Linux, and Windows systems using various methods. The tool also offers configuration options for AI model type, access key management, and output language customization. Bito CLI is designed to enhance user experience in querying AI models and automating tasks through the command line interface.

AlwaysReddy

AlwaysReddy is a simple LLM assistant with no UI that you interact with entirely using hotkeys. It can easily read from or write to your clipboard, and voice chat with you via TTS and STT. Here are some of the things you can use AlwaysReddy for: - Explain a new concept to AlwaysReddy and have it save the concept (in roughly your words) into a note. - Ask AlwaysReddy "What is X called?" when you know how to roughly describe something but can't remember what it is called. - Have AlwaysReddy proofread the text in your clipboard before you send it. - Ask AlwaysReddy "From the comments in my clipboard, what do the r/LocalLLaMA users think of X?" - Quickly list what you have done today and get AlwaysReddy to write a journal entry to your clipboard before you shutdown the computer for the day.

aiarena-web

aiarena-web is a website designed for running the aiarena.net infrastructure. It consists of different modules such as core functionality, web API endpoints, frontend templates, and a module for linking users to their Patreon accounts. The website serves as a platform for obtaining new matches, reporting results, featuring match replays, and connecting with Patreon supporters. The project is licensed under GPLv3 in 2019.

seer

Seer is a service that provides AI capabilities to Sentry by running inference on Sentry issues and providing user insights. It is currently in early development and not yet compatible with self-hosted Sentry instances. The tool requires access to internal Sentry resources and is intended for internal Sentry employees. Users can set up the environment, download model artifacts, integrate with local Sentry, run evaluations for Autofix AI agent, and deploy to a sandbox staging environment. Development commands include applying database migrations, creating new migrations, running tests, and more. The tool also supports VCRs for recording and replaying HTTP requests.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

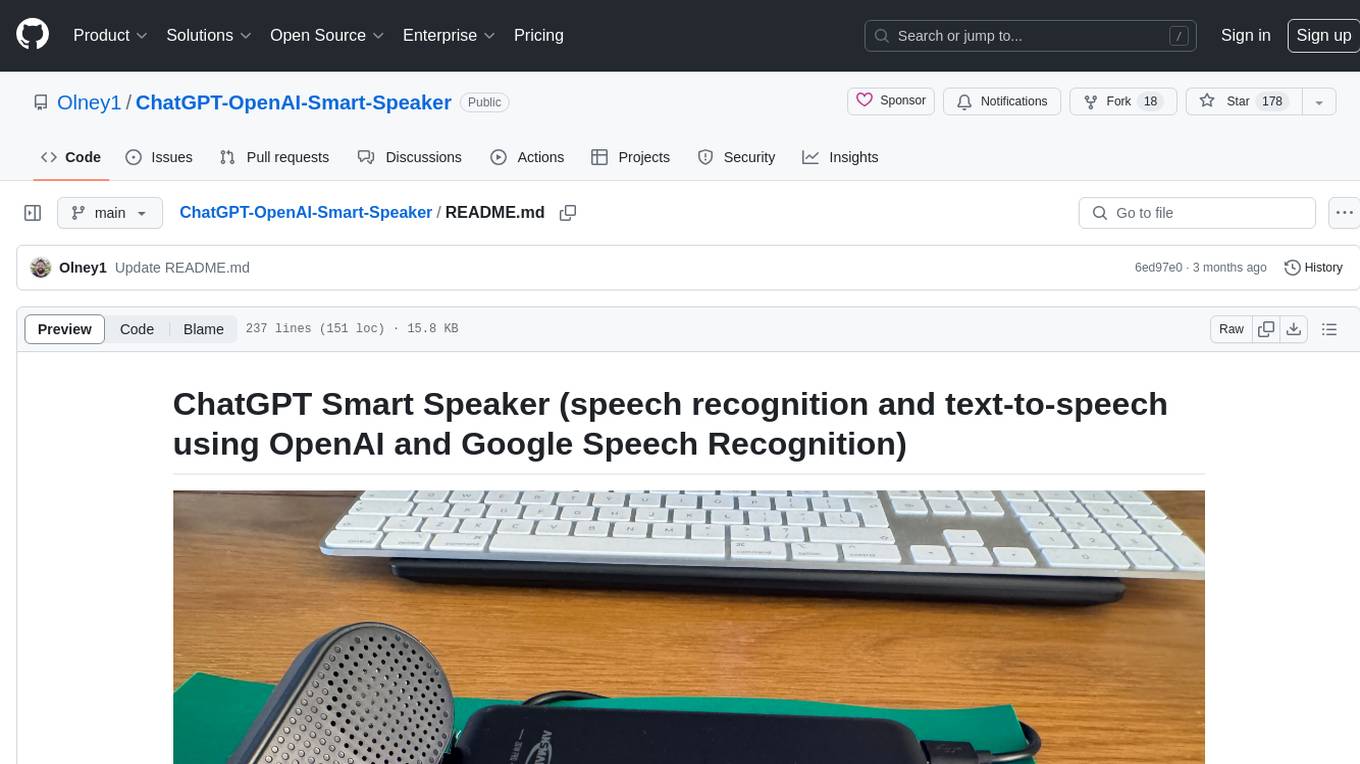

just-chat

Just-Chat is a containerized application that allows users to easily set up and chat with their AI agent. Users can customize their AI assistant using a YAML file, add new capabilities with Python tools, and interact with the agent through a chat web interface. The tool supports various modern models like DeepSeek Reasoner, ChatGPT, LLAMA3.3, etc. Users can also use semantic search capabilities with MeiliSearch to find and reference relevant information based on meaning. Just-Chat requires Docker or Podman for operation and provides detailed installation instructions for both Linux and Windows users.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

LLM_AppDev-HandsOn

This repository showcases how to build a simple LLM-based chatbot for answering questions based on documents using retrieval augmented generation (RAG) technique. It also provides guidance on deploying the chatbot using Podman or on the OpenShift Container Platform. The workshop associated with this repository introduces participants to LLMs & RAG concepts and demonstrates how to customize the chatbot for specific purposes. The software stack relies on open-source tools like streamlit, LlamaIndex, and local open LLMs via Ollama, making it accessible for GPU-constrained environments.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

openui

OpenUI is a tool designed to simplify the process of building UI components by allowing users to describe UI using their imagination and see it rendered live. It supports converting HTML to React, Svelte, Web Components, etc. The tool is open source and aims to make UI development fun, fast, and flexible. It integrates with various AI services like OpenAI, Groq, Gemini, Anthropic, Cohere, and Mistral, providing users with the flexibility to use different models. OpenUI also supports LiteLLM for connecting to various LLM services and allows users to create custom proxy configs. The tool can be run locally using Docker or Python, and it offers a development environment for quick setup and testing.

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

For similar tasks

reolink_aio

The 'reolink_aio' Python package is designed to integrate Reolink devices (NVR/cameras) into your application. It implements Reolink IP NVR and camera API, allowing users to subscribe to Reolink ONVIF SWN events for real-time event notifications via webhook. The package provides functionalities to obtain and cache NVR or camera settings, capabilities, and states, as well as enable features like infrared lights, spotlight, and siren. Users can also subscribe to events, renew timers, and disconnect from the host device.

aiohue

Aiohue is an asynchronous library designed to control Philips Hue lights. It requires Python 3.10+ and utilizes asyncio and aiohttp. The library supports both V1 and V2 APIs of the Hue Bridge, with V2 API offering event-based updates to eliminate the need for polling. The contribution guidelines emphasize matching object hierarchy and property/method names with the Philips Hue API.

aioshelly

Aioshelly is an asynchronous library designed to control Shelly devices. It is currently under development and requires Python version 3.11 or higher, along with dependencies like bluetooth-data-tools, aiohttp, and orjson. The library provides examples for interacting with Gen1 devices using CoAP protocol and Gen2/Gen3 devices using RPC and WebSocket protocols. Users can easily connect to Shelly devices, retrieve status information, and perform various actions through the provided APIs. The repository also includes example scripts for quick testing and usage guidelines for contributors to maintain consistency with the Shelly API.

awesome-pi-agent

Awesome Pi Agent is a versatile and powerful tool for building intelligent agents on Raspberry Pi. It provides a framework for developing AI-powered applications that can interact with the physical world through sensors and actuators. With a focus on simplicity and extensibility, this tool enables users to create a wide range of smart devices, from home automation systems to robotics projects. The agent can be easily customized and integrated with various AI algorithms and libraries, making it suitable for both beginners and advanced users interested in exploring the intersection of AI and IoT technologies.

semantic-router

Semantic Router is a superfast decision-making layer for your LLMs and agents. Rather than waiting for slow LLM generations to make tool-use decisions, we use the magic of semantic vector space to make those decisions — _routing_ our requests using _semantic_ meaning.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

luna-ai

Luna AI is a virtual streamer driven by a 'brain' composed of ChatterBot, GPT, Claude, langchain, chatglm, text-generation-webui, 讯飞星火, 智谱AI. It can interact with viewers in real-time during live streams on platforms like Bilibili, Douyin, Kuaishou, Douyu, or chat with you locally. Luna AI uses natural language processing and text-to-speech technologies like Edge-TTS, VITS-Fast, elevenlabs, bark-gui, VALL-E-X to generate responses to viewer questions and can change voice using so-vits-svc, DDSP-SVC. It can also collaborate with Stable Diffusion for drawing displays and loop custom texts. This project is completely free, and any identical copycat selling programs are pirated, please stop them promptly.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.