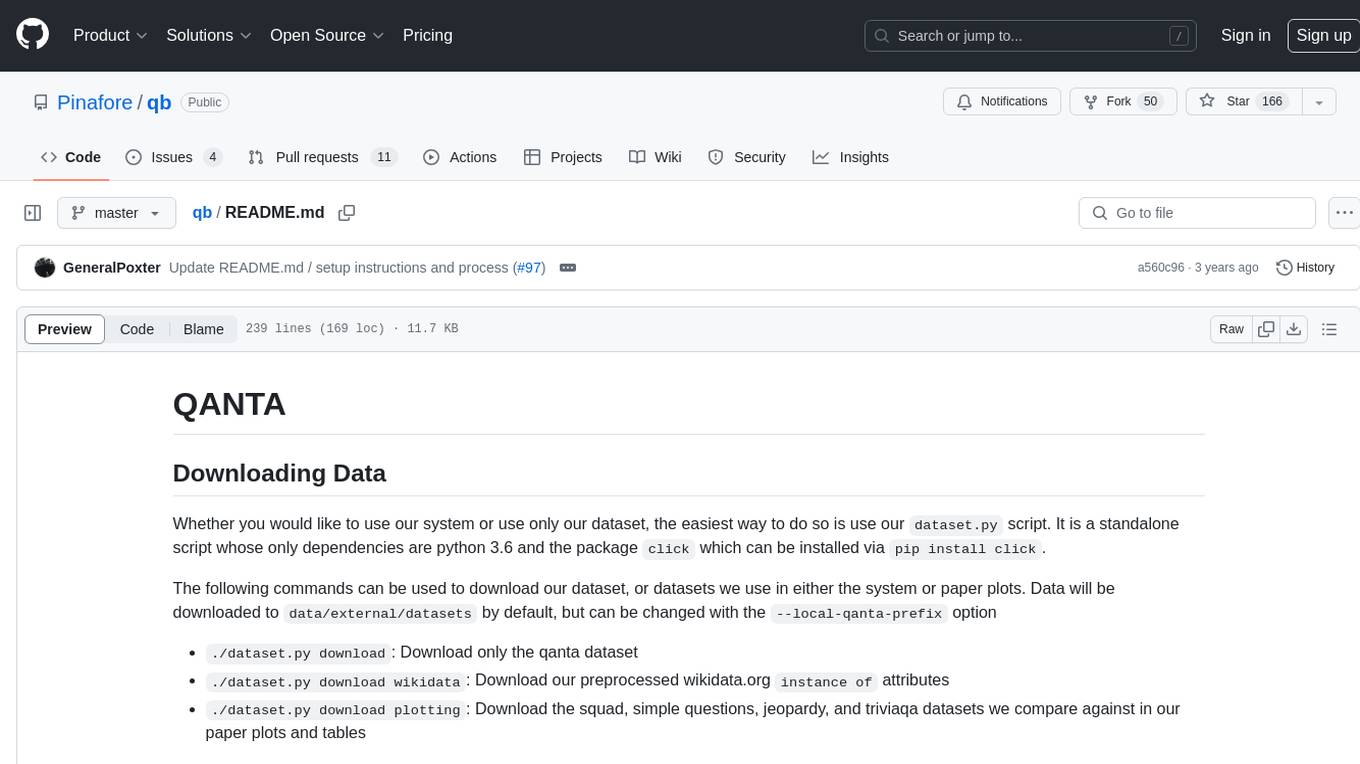

qb

QANTA Quiz Bowl AI

Stars: 167

QANTA is a system and dataset for question answering tasks. It provides a script to download datasets, preprocesses questions, and matches them with Wikipedia pages. The system includes various datasets, training, dev, and test data in JSON and SQLite formats. Dependencies include Python 3.6, `click`, and NLTK models. Elastic Search 5.6 is needed for the Guesser component. Configuration is managed through environment variables and YAML files. QANTA supports multiple guesser implementations that can be enabled/disabled. Running QANTA involves using `cli.py` and Luigi pipelines. The system accesses raw Wikipedia dumps for data processing. The QANTA ID numbering scheme categorizes datasets based on events and competitions.

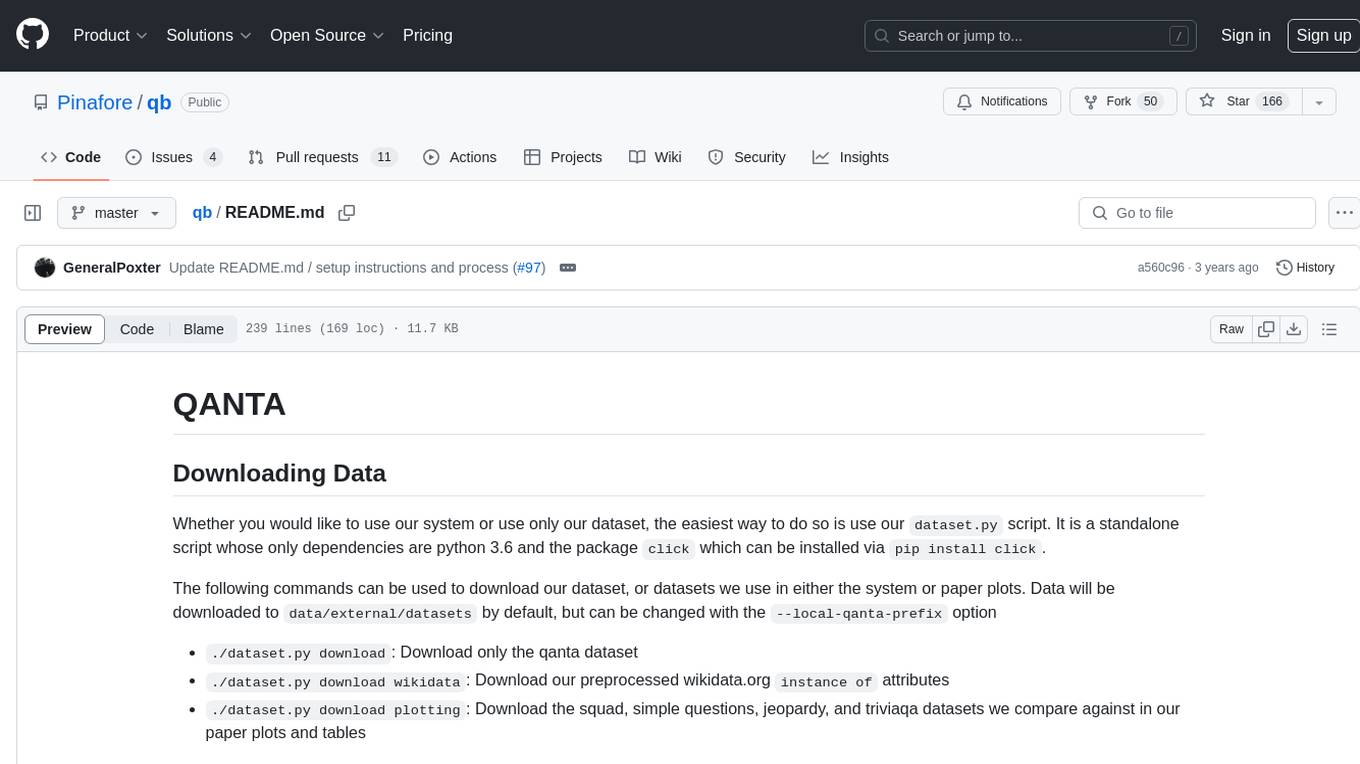

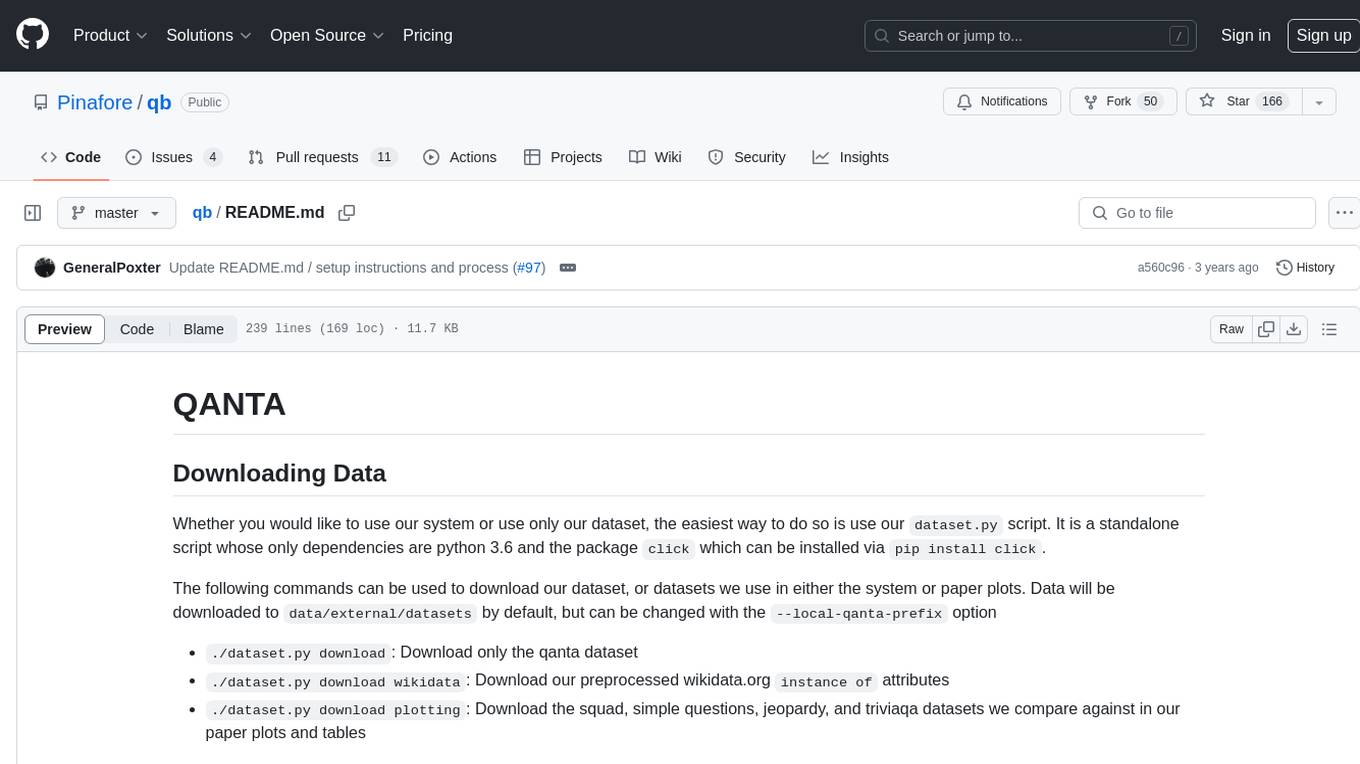

README:

Whether you would like to use our system or only our dataset, the easiest way to do so is to use our `dataset.py` script. It is a standalone script whose only dependencies are Python 3.6 and the package `click`, which can be installed via `pip install click`.

The following commands can be used to download our dataset, or the datasets we use in either the system or the paper plots. Data will be downloaded to `data/external/datasets` by default, but this can be changed with the `--local-qanta-prefix` option.

- `./dataset.py download`: Download only the qanta dataset

- `./dataset.py download wikidata`: Download our preprocessed wikidata.org `instance of` attributes

- `./dataset.py download plotting`: Download the squad, simple questions, jeopardy, and triviaqa datasets we compare against in our paper plots and tables

The dataset consists of the following files:

- `qanta.unmapped.2018.04.18.json`: All questions in our dataset, without mapped Wikipedia answers. Sourced from protobowl and quizdb. Light preprocessing has been applied to remove quiz bowl specific syntax such as instructions to moderators

- `qanta.processed.2018.04.18.json`: Prior dataset with added fields extracting the first sentence, and sentence tokenizations of the question paragraph for convenience

- `qanta.mapped.2018.04.18.json`: The processed dataset with Wikipedia pages matched to the answer where possible. This includes all questions, even those without matched pages

- `qanta.2018.04.18.sqlite3`: Equivalent to `qanta.mapped.2018.04.18.json` but in sqlite3 format

- `qanta.train.2018.04.18.json`: Training data which is the mapped dataset filtered down to only questions with non-null page matches

- `qanta.dev.2018.04.18.json`: Dev data which is the mapped dataset filtered down to only questions with non-null page matches

- `qanta.test.2018.04.18.json`: Test data which is the mapped dataset filtered down to only questions with non-null page matches
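As a rough illustration of how the train/dev/test files relate to the mapped dataset, the sketch below filters questions down to those with a non-null page match. The top-level `questions` key and the per-question `page` and `text` fields are assumptions made for this example, not a schema confirmed by the descriptions above, so check them against the downloaded file before relying on them.

```python
import json


def load_questions(path):
    """Load a qanta JSON file; assumes a top-level "questions" list."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)["questions"]


def with_page_match(questions):
    """Keep only questions whose (assumed) "page" field is non-null."""
    return [q for q in questions if q.get("page") is not None]


# Tiny in-memory stand-in for the mapped dataset (hypothetical records).
sample = [
    {"text": "This author wrote ...", "page": "Jane_Austen"},
    {"text": "This unmatched question ...", "page": None},
]
matched = with_page_match(sample)
print(len(matched))
```

With a real download, `load_questions` would be pointed at a file such as `data/external/datasets/qanta.mapped.2018.04.18.json`, assuming the default download location.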

Install all necessary Python packages into a virtual environment by running `poetry install` in the qanta directory. Further qanta setup requiring Python dependencies should be performed in the virtual environment, which can be accessed by running `poetry shell`.

# Download nltk data

$ python3 nltk_setup.py

Install Elastic Search 5.6 (only needed for the Elastic Search Guesser):

$ curl -L -O https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.6.2.tar.gz

$ tar -xvf elasticsearch-5.6.2.tar.gz

Install version 5.6.X, do not use 6.X. Also be sure that the directory `bin/` within the extracted files is in your `$PATH`, as it contains the necessary `elasticsearch` binary.

In addition to these steps you need to include the qanta directory in your PYTHONPATH environment variable. We intend to fix path issues in the future by fixing absolute/relative paths.

QANTA configuration is done through a combination of environment variables and the `qanta-defaults.yaml`/`qanta.yaml` files. QANTA will read `qanta.yaml` first if it exists; otherwise it will fall back to reading `qanta-defaults.yaml`. This is meant to allow for custom configuration of `qanta.yaml` after copying it via `cp qanta-defaults.yaml qanta.yaml`.
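The fallback rule can be sketched in a few lines of Python. This is only an illustration of the lookup order described above, not QANTA's actual loader; the helper name `resolve_config_path` is hypothetical.

```python
import tempfile
from pathlib import Path


def resolve_config_path(directory="."):
    """Return qanta.yaml if it exists, otherwise qanta-defaults.yaml."""
    custom = Path(directory) / "qanta.yaml"
    if custom.exists():
        return custom
    return Path(directory) / "qanta-defaults.yaml"


# Demonstrate the lookup order in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "qanta-defaults.yaml").write_text("guessers: {}\n")
    before_copy = resolve_config_path(tmp).name
    (Path(tmp) / "qanta.yaml").write_text("guessers: {}\n")
    after_copy = resolve_config_path(tmp).name
print(before_copy, after_copy)
```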

The configuration of most interest is how to enable or disable specific guesser implementations. In the guesser config, keys such as `qanta.guesser.dan.DanGuesser` correspond to the fully qualified paths of each guesser. Each of these keys contains an array of configurations (signified in YAML by the `-`). Our code inspects all of these configurations, looking for those that have `enabled: true`, and runs only those guessers. By default we have `enabled: false` for all models. If you simply want to perform a sanity check, we recommend enabling `qanta.guesser.tfidf.TfidfGuesser`. If you are looking for our best model and configuration, you should enable `qanta.guesser.rnn.RnnGuesser`.
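To make the selection rule concrete, here is a minimal sketch that walks a parsed guesser config and keeps only the entries marked `enabled: true`. The dictionary below is a hypothetical stand-in for the parsed YAML; the real file may nest these keys differently, and `enabled_guessers` is an illustrative helper rather than a function from the QANTA code base.

```python
def enabled_guessers(guesser_config):
    """Return (class_path, config) pairs for every entry with enabled: true."""
    selected = []
    for class_path, configs in guesser_config.items():
        for conf in configs:
            if conf.get("enabled", False):
                selected.append((class_path, conf))
    return selected


# Hypothetical parsed YAML: each key maps to a list of configurations.
guesser_config = {
    "qanta.guesser.dan.DanGuesser": [{"enabled": False}],
    "qanta.guesser.tfidf.TfidfGuesser": [{"enabled": True}],
    "qanta.guesser.rnn.RnnGuesser": [{"enabled": False}],
}
selected_paths = [path for path, _ in enabled_guessers(guesser_config)]
print(selected_paths)
```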

Running qanta is managed primarily by two methods: `./cli.py` and Luigi. The former is used to run specific commands such as starting/stopping elastic search, but in general Luigi is the primary method for running our system.

Luigi is a pure Python make-like framework for running data pipelines. Below we give sample commands for running different parts of our pipeline. In general, you should either append `--local-scheduler` to all commands or learn about using the Luigi Central Scheduler.

For these common tasks you can use the command `luigi --local-scheduler` followed by:

- `--module qanta.pipeline.preprocess DownloadData`: This downloads any necessary data and preprocesses it. This will download a copy of our preprocessed Wikipedia stored in AWS S3 and turn it into the format used by our code. This step requires the AWS CLI, `lz4`, and Apache Spark, and may require a decent amount of RAM.

- `--module qanta.pipeline.guesser AllGuesserReports`: Train all enabled guessers, generate guesses for them, and produce a report of their performance into `output/guesser`.
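If you prefer to drive these tasks from a script, the commands above can be assembled programmatically. The sketch below only builds the argument list from the flags already shown (`--local-scheduler`, `--module`); the helper names are hypothetical, and `run_task` assumes that `luigi` is installed and the qanta directory is on your `PYTHONPATH`.

```python
import subprocess


def luigi_command(module, task, local_scheduler=True):
    """Build the argv list for one of the pipeline tasks listed above."""
    cmd = ["luigi"]
    if local_scheduler:
        cmd.append("--local-scheduler")
    cmd += ["--module", module, task]
    return cmd


def run_task(module, task):
    """Run a pipeline task; raises CalledProcessError if luigi exits non-zero."""
    return subprocess.run(luigi_command(module, task), check=True)


cmd = luigi_command("qanta.pipeline.guesser", "AllGuesserReports")
print(" ".join(cmd))
```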

Certain tasks might require Spacy models (e.g. `en_core_web_lg`) or nltk data (e.g. `wordnet`) to be downloaded. See the FAQ section for more information.

You can start/stop elastic search with

./cli.py elasticsearch start

./cli.py elasticsearch stop

To provide an easy way to version, checkpoint, and restore runs of qanta, we provide a script to manage that at `aws_checkpoint.py`. We assume that you set the environment variable `QB_AWS_S3_BUCKET` to where you want to checkpoint to and restore from. We also assume that we have full access to all the contents of the bucket, so we suggest creating a dedicated bucket.

As part of our ingestion pipeline we access raw wikipedia dumps. The current code is based on the english wikipedia dumps created on 2017/04/01 available at https://dumps.wikimedia.org/enwiki/20170401/

Of these we use the following (you may need to use more recent dumps)

- Wikipedia page text: This is used to get the text, title, and id of wikipedia pages

- Wikipedia titles: This is used for more convenient access to wikipedia page titles

- Wikipedia redirects: DB dump for wikipedia redirects, used for resolving different ways of referencing the same wikipedia entity

- Wikipedia page to ids: Contains a mapping of wikipedia page and ids, necessary for making the redirect table useful

To process wikipedia we use https://github.com/attardi/wikiextractor with the following command:

$ WikiExtractor.py --processes 15 -o parsed-wiki --json enwiki-20170401-pages-articles-multistream.xml.bz2

Do not use the flag to filter disambiguation pages. It uses a simple string regex to check the title and article contents, which introduces both false positives and false negatives. We handle the problem of filtering these out by using the wikipedia categories dump.
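For orientation, the `--json` output can be read back with a short loop. This sketch assumes the usual WikiExtractor layout of one JSON object per line in files named `wiki_NN` under subdirectories of the output folder, with `id`, `url`, `title`, and `text` keys; verify this against your own `parsed-wiki` directory, since the layout may differ between WikiExtractor versions. The demo data is fabricated.

```python
import json
import tempfile
from pathlib import Path


def iter_wiki_pages(root):
    """Yield one dict per page from WikiExtractor --json output under root."""
    for path in sorted(Path(root).rglob("wiki_*")):
        with path.open(encoding="utf-8") as f:
            for line in f:
                if line.strip():
                    yield json.loads(line)


# Self-contained demo on a fabricated one-file output directory.
with tempfile.TemporaryDirectory() as tmp:
    shard = Path(tmp) / "AA"
    shard.mkdir()
    record = {"id": "12", "url": "https://example.org", "title": "Example", "text": "Body"}
    (shard / "wiki_00").write_text(json.dumps(record) + "\n", encoding="utf-8")
    pages = list(iter_wiki_pages(tmp))
print(pages[0]["title"])
```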

Afterwards we use the following command to tar it, compress it with lz4, and upload the archive to S3

tar cvf - parsed-wiki | lz4 - parsed-wiki.tar.lz4

The output of the redirect mapping process described below is stored in s3://pinafore-us-west-2/public/wiki_redirects.csv

All the wikipedia database dumps are provided as MySQL sql files. The MySQL installation guide in the references below has a good explanation of how to install MySQL, which is necessary to use the SQL dumps. For this task we will need these tables:

- Redirect table: https://www.mediawiki.org/wiki/Manual:Redirect_table

- Page table: https://www.mediawiki.org/wiki/Manual:Page_table

- The namespace page is also helpful: https://www.mediawiki.org/wiki/Manual:Namespace

To install and prepare MySQL and read in the Wikipedia SQL dumps, execute the following:

- Install MySQL with `sudo apt-get install mysql-server` and `sudo mysql_secure_installation`

- Login with something like `mysql --user=root --password=something`

- Create a database and use it with `create database wikipedia;` and `use wikipedia;`

- `source enwiki-20170401-redirect.sql;` (in MySQL session)

- `source enwiki-20170401-page.sql;` (in MySQL session)

- This will take quite a long time, so wait it out...

- Finally run the query to fetch the redirect mapping and write it to a CSV by executing `bin/redirect.sql` with `source bin/redirect.sql`. The file will be located in `/var/lib/mysql/redirect.csv`, which requires `sudo` access to copy

- The result of that query is a CSV file containing a source page id, source page title, and target page title. This can be interpreted as the source page redirecting to the target page. We filter namespace=0 to keep only redirects/pages that are main pages and trash things like list/category pages
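Once the CSV has been copied out, it can be turned into a lookup table. The sketch below follows the column order stated above (source page id, source page title, target page title) and additionally assumes a comma-delimited file with no header row, which is not confirmed by these instructions; the rows are fabricated and `load_redirects` is a hypothetical helper.

```python
import csv
import io


def load_redirects(csv_file):
    """Map source page title -> target page title from the redirect CSV."""
    redirects = {}
    for source_id, source_title, target_title in csv.reader(csv_file):
        redirects[source_title] = target_title
    return redirects


# Fabricated rows standing in for redirect.csv.
sample = io.StringIO("1,USA,United_States\n2,U.S.,United_States\n")
redirects = load_redirects(sample)
print(redirects["USA"])
```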

The purpose of this step is to use wikipedia category links to filter out disambiguation pages. Every wikipedia page has a list of categories it belongs to. We filter out any pages which have a category which includes the string disambiguation in its name. The output of this process is a json file containing a list of page_ids that correspond to known disambiguation pages. These are then used downstream to filter down to only non-disambiguation wikipedia pages.

The output of this process is stored in s3://pinafore-us-west-2/public/disambiguation_pages.json with the csv also saved at s3://pinafore-us-west-2/public/categorylinks.csv
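The filtering rule can be sketched as follows. The `(page_id, category)` row shape and the case-insensitive match are assumptions made for illustration; the actual logic lives behind `./cli.py categories disambiguate` and may differ.

```python
import json


def disambiguation_page_ids(category_rows):
    """Return sorted page ids that have a category containing 'disambiguation'."""
    ids = {
        page_id
        for page_id, category in category_rows
        if "disambiguation" in category.lower()  # case-insensitive by assumption
    }
    return sorted(ids)


# Fabricated (page_id, category) rows standing in for categorylinks.csv.
rows = [
    (10, "Disambiguation_pages"),
    (10, "Human_name_disambiguation_pages"),
    (11, "American_novelists"),
]
page_ids = disambiguation_page_ids(rows)
print(json.dumps(page_ids))
```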

The process for this is similar to redirects, except that you should instead source a file named similarly to `enwiki-20170401-categorylinks.sql`, run the script `bin/categories.sql`, and copy `categorylinks.csv`. Afterwards run `./cli.py categories disambiguate categorylinks.csv data/external/wikipedia/disambiguation_pages.json`.

This file is automatically downloaded by the pipeline code like the redirects file so unless you would like to change this or inspect the results, you shouldn't need to worry about this.

These references may be useful and are the source for these instructions:

- https://www.digitalocean.com/community/tutorials/how-to-install-mysql-on-ubuntu-16-04

- https://dev.mysql.com/doc/refman/5.7/en/mysql-batch-commands.html

- http://stackoverflow.com/questions/356578/how-to-output-mysql-query-results-in-csv-format

pyspark uses the wrong version of python

Set PYSPARK_PYTHON to be python3

ImportError: No module named 'pyspark'

export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/build:$PYTHONPATH

ValueError: unknown locale: UTF-8

export LC_ALL=en_US.UTF-8

export LANG=en_US.UTF-8

TypeError: namedtuple() missing 3 required keyword-only arguments: 'verbose', 'rename', and 'module'

Python 3.6 needs Spark 2.1.1

OSError: [E050] Can't find model 'en_core_web_lg'. It doesn't seem to be a shortcut link, a Python package or a valid path to a data directory.

To download the required Spacy model, run:

python -m spacy download en_core_web_lg

Missing "wordnet" data for nltk

In a Python interactive shell, run the following commands to download wordnet data:

import nltk

nltk.download('wordnet')

QANTA ID numbering scheme:

- Default dataset starts near 0

- PACE Adversarial Writing Event May 2018 starts at 1,000,000

- December 15 2018 event starts at 2,000,000

- Dataset for HS student of ACF 2018 Regionals starts at 3,000,000
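A question's source can be recovered from its id with a simple range lookup. This sketch assumes each range runs up to the start of the next one, which the list above implies but does not state outright; `dataset_for_id` is a hypothetical helper.

```python
from bisect import bisect_right

# Starting ids taken from the numbering scheme above.
ID_RANGES = [
    (0, "default dataset"),
    (1_000_000, "PACE Adversarial Writing Event May 2018"),
    (2_000_000, "December 15 2018 event"),
    (3_000_000, "HS student of ACF 2018 Regionals"),
]
STARTS = [start for start, _ in ID_RANGES]


def dataset_for_id(qanta_id):
    """Return the label of the range that contains qanta_id."""
    if qanta_id < 0:
        raise ValueError("qanta ids are assumed to be non-negative")
    return ID_RANGES[bisect_right(STARTS, qanta_id) - 1][1]


print(dataset_for_id(2_500_000))
```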

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for qb

Similar Open Source Tools

qb

QANTA is a system and dataset for question answering tasks. It provides a script to download datasets, preprocesses questions, and matches them with Wikipedia pages. The system includes various datasets, training, dev, and test data in JSON and SQLite formats. Dependencies include Python 3.6, `click`, and NLTK models. Elastic Search 5.6 is needed for the Guesser component. Configuration is managed through environment variables and YAML files. QANTA supports multiple guesser implementations that can be enabled/disabled. Running QANTA involves using `cli.py` and Luigi pipelines. The system accesses raw Wikipedia dumps for data processing. The QANTA ID numbering scheme categorizes datasets based on events and competitions.

vectorflow

VectorFlow is an open source, high throughput, fault tolerant vector embedding pipeline. It provides a simple API endpoint for ingesting large volumes of raw data, processing, and storing or returning the vectors quickly and reliably. The tool supports text-based files like TXT, PDF, HTML, and DOCX, and can be run locally with Kubernetes in production. VectorFlow offers functionalities like embedding documents, running chunking schemas, custom chunking, and integrating with vector databases like Pinecone, Qdrant, and Weaviate. It enforces a standardized schema for uploading data to a vector store and supports features like raw embeddings webhook, chunk validation webhook, S3 endpoint, and telemetry. The tool can be used with the Python client and provides detailed instructions for running and testing the functionalities.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

warc-gpt

WARC-GPT is an experimental retrieval augmented generation pipeline for web archive collections. It allows users to interact with WARC files, extract text, generate text embeddings, visualize embeddings, and interact with a web UI and API. The tool is highly customizable, supporting various LLMs, providers, and embedding models. Users can configure the application using environment variables, ingest WARC files, start the server, and interact with the web UI and API to search for content and generate text completions. WARC-GPT is designed for exploration and experimentation in exploring web archives using AI.

PolyMind

PolyMind is a multimodal, function calling powered LLM webui designed for various tasks such as internet searching, image generation, port scanning, Wolfram Alpha integration, Python interpretation, and semantic search. It offers a plugin system for adding extra functions and supports different models and endpoints. The tool allows users to interact via function calling and provides features like image input, image generation, and text file search. The application's configuration is stored in a `config.json` file with options for backend selection, compatibility mode, IP address settings, API key, and enabled features.

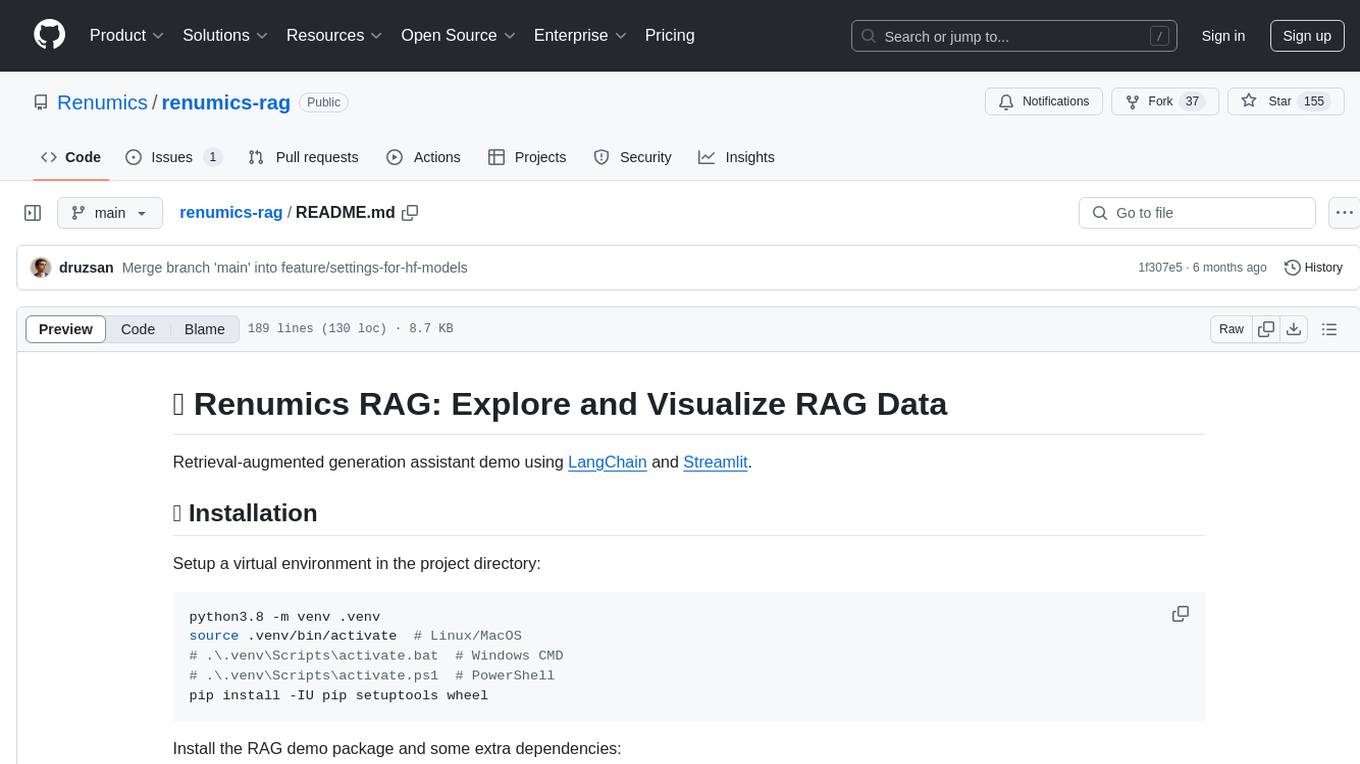

renumics-rag

Renumics RAG is a retrieval-augmented generation assistant demo that utilizes LangChain and Streamlit. It provides a tool for indexing documents and answering questions based on the indexed data. Users can explore and visualize RAG data, configure OpenAI and Hugging Face models, and interactively explore questions and document snippets. The tool supports GPU and CPU setups, offers a command-line interface for retrieving and answering questions, and includes a web application for easy access. It also allows users to customize retrieval settings, embeddings models, and database creation. Renumics RAG is designed to enhance the question-answering process by leveraging indexed documents and providing detailed answers with sources.

debug-gym

debug-gym is a text-based interactive debugging framework designed for debugging Python programs. It provides an environment where agents can interact with code repositories, use various tools like pdb and grep to investigate and fix bugs, and propose code patches. The framework supports different LLM backends such as OpenAI, Azure OpenAI, and Anthropic. Users can customize tools, manage environment states, and run agents to debug code effectively. debug-gym is modular, extensible, and suitable for interactive debugging tasks in a text-based environment.

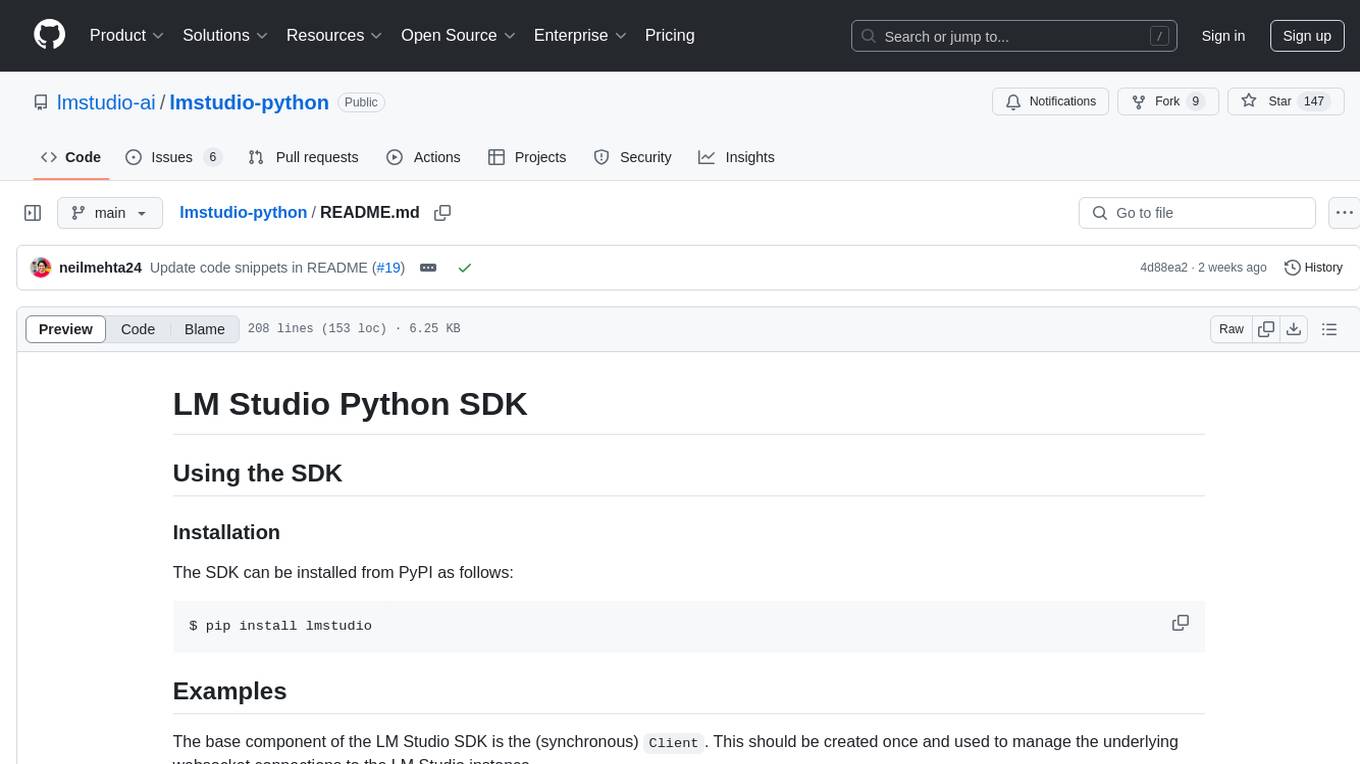

lmstudio-python

LM Studio Python SDK provides a convenient API for interacting with LM Studio instance, including text completion and chat response functionalities. The SDK allows users to manage websocket connections and chat history easily. It also offers tools for code consistency checks, automated testing, and expanding the API.

0chain

Züs is a high-performance cloud on a fast blockchain offering privacy and configurable uptime. It uses erasure code to distribute data between data and parity servers, allowing flexibility for IT managers to design for security and uptime. Users can easily share encrypted data with business partners through a proxy key sharing protocol. The ecosystem includes apps like Blimp for cloud migration, Vult for personal cloud storage, and Chalk for NFT artists. Other apps include Bolt for secure wallet and staking, Atlus for blockchain explorer, and Chimney for network participation. The QoS protocol challenges providers based on response time, while the privacy protocol enables secure data sharing. Züs supports hybrid and multi-cloud architectures, allowing users to improve regulatory compliance and security requirements.

MultiPL-E

MultiPL-E is a system for translating unit test-driven neural code generation benchmarks to new languages. It is part of the BigCode Code Generation LM Harness and allows for evaluating Code LLMs using various benchmarks. The tool supports multiple versions with improvements and new language additions, providing a scalable and polyglot approach to benchmarking neural code generation. Users can access a tutorial for direct usage and explore the dataset of translated prompts on the Hugging Face Hub.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

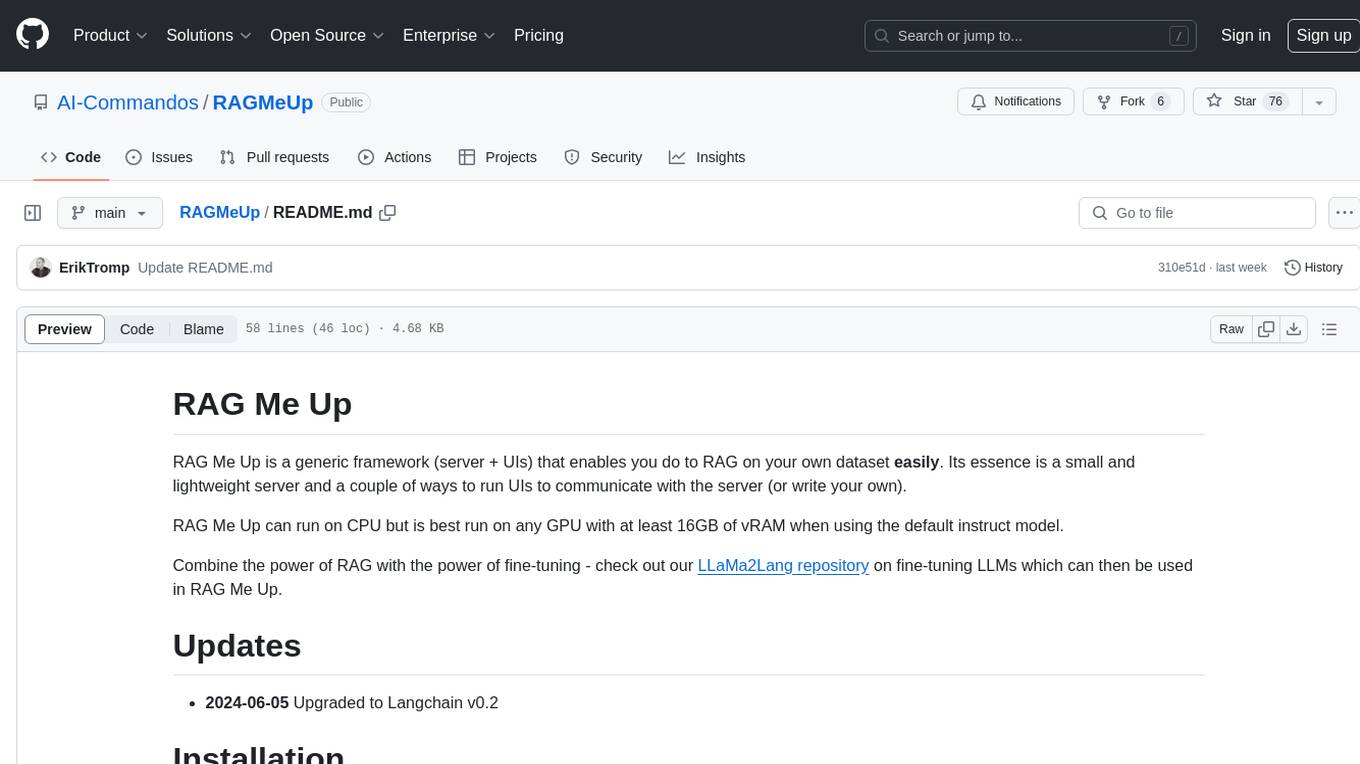

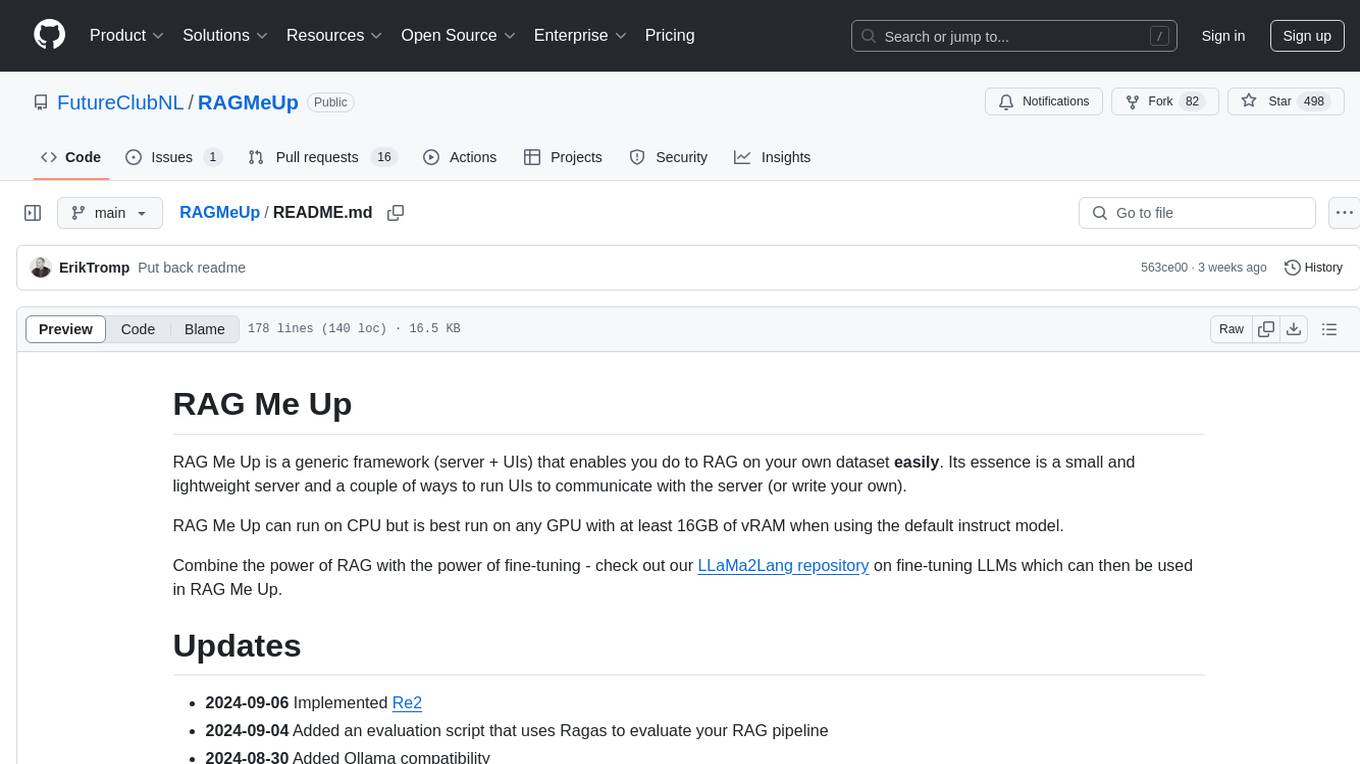

RAGMeUp

RAG Me Up is a generic framework that enables users to perform Retrieve and Generate (RAG) on their own dataset easily. It consists of a small server and UIs for communication. Best run on GPU with 16GB vRAM. Users can combine RAG with fine-tuning using LLaMa2Lang repository. The tool allows configuration for LLM, data, LLM parameters, prompt, and document splitting. Funding is sought to democratize AI and advance its applications.

vectara-answer

Vectara Answer is a sample app for Vectara-powered Summarized Semantic Search (or question-answering) with advanced configuration options. For examples of what you can build with Vectara Answer, check out Ask News, LegalAid, or any of the other demo applications.

RAGMeUp

RAG Me Up is a generic framework that enables users to perform Retrieve, Answer, Generate (RAG) on their own dataset easily. It consists of a small server and UIs for communication. The tool can run on CPU but is optimized for GPUs with at least 16GB of vRAM. Users can combine RAG with fine-tuning using the LLaMa2Lang repository. The tool provides a configurable RAG pipeline without the need for coding, utilizing indexing and inference steps to accurately answer user queries.

For similar tasks

qb

QANTA is a system and dataset for question answering tasks. It provides a script to download datasets, preprocesses questions, and matches them with Wikipedia pages. The system includes various datasets, training, dev, and test data in JSON and SQLite formats. Dependencies include Python 3.6, `click`, and NLTK models. Elastic Search 5.6 is needed for the Guesser component. Configuration is managed through environment variables and YAML files. QANTA supports multiple guesser implementations that can be enabled/disabled. Running QANTA involves using `cli.py` and Luigi pipelines. The system accesses raw Wikipedia dumps for data processing. The QANTA ID numbering scheme categorizes datasets based on events and competitions.

AI-TOD

AI-TOD is a dataset for tiny object detection in aerial images, containing 700,621 object instances across 28,036 images. Objects in AI-TOD are smaller with a mean size of 12.8 pixels compared to other aerial image datasets. To use AI-TOD, download xView training set and AI-TOD_wo_xview, then generate the complete dataset using the provided synthesis tool. The dataset is publicly available for academic and research purposes under CC BY-NC-SA 4.0 license.

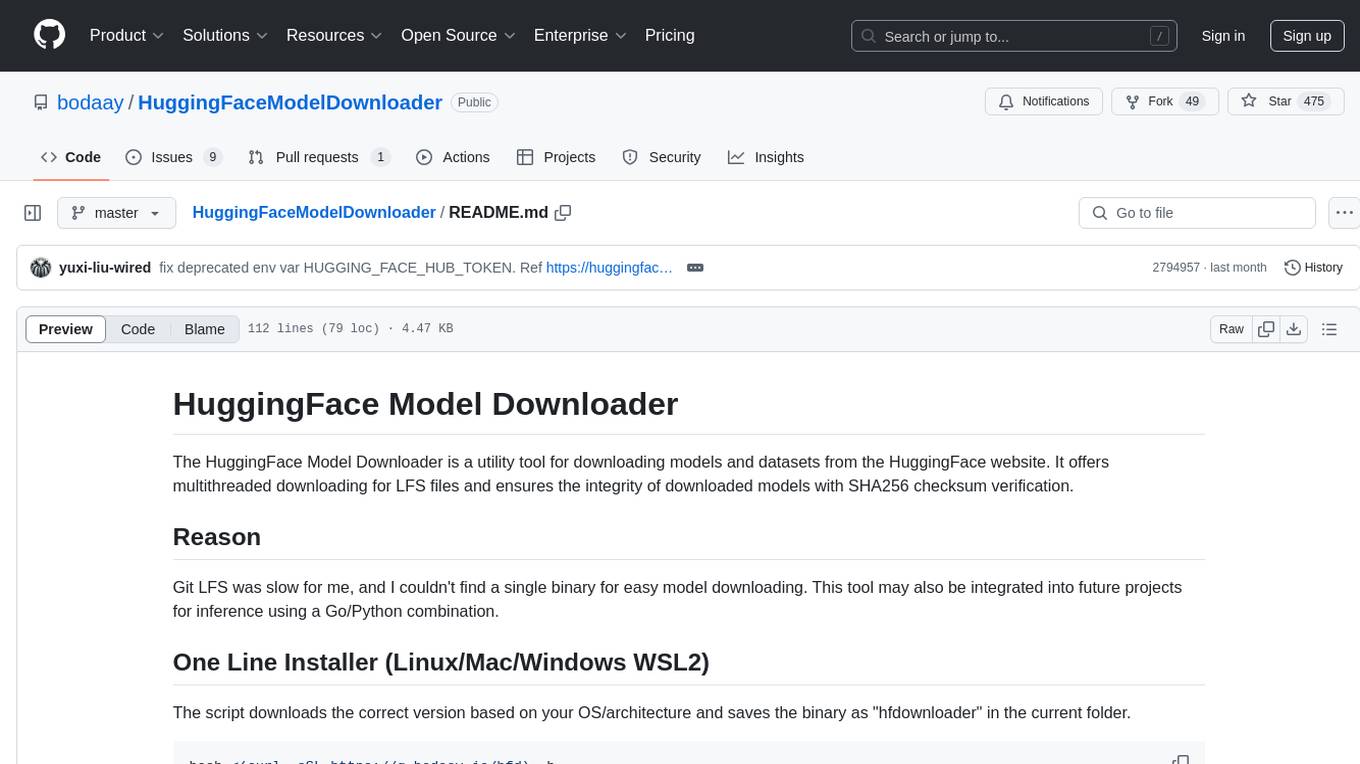

HuggingFaceModelDownloader

The HuggingFace Model Downloader is a utility tool for downloading models and datasets from the HuggingFace website. It offers multithreaded downloading for LFS files and ensures the integrity of downloaded models with SHA256 checksum verification. The tool provides features such as nested file downloading, filter downloads for specific LFS model files, support for HuggingFace Access Token, and configuration file support. It can be used as a library or a single binary for easy model downloading and inference in projects.

robot-3dlotus

Towards Generalizable Vision-Language Robotic Manipulation: A Benchmark and LLM-guided 3D Policy is a research project focusing on addressing the challenge of generalizing language-conditioned robotic policies to new tasks. The project introduces GemBench, a benchmark to evaluate the generalization capabilities of vision-language robotic manipulation policies. It also presents the 3D-LOTUS approach, which leverages rich 3D information for action prediction conditioned on language. Additionally, the project introduces 3D-LOTUS++, a framework that integrates 3D-LOTUS's motion planning capabilities with the task planning capabilities of LLMs and the object grounding accuracy of VLMs to achieve state-of-the-art performance on novel tasks in robotic manipulation.

airflow-chart

This Helm chart bootstraps an Airflow deployment on a Kubernetes cluster using the Helm package manager. The version of this chart does not correlate to any other component. Users should not expect feature parity between OSS airflow chart and the Astronomer airflow-chart for identical version numbers. To install this helm chart remotely (using helm 3) kubectl create namespace airflow helm repo add astronomer https://helm.astronomer.io helm install airflow --namespace airflow astronomer/airflow To install this repository from source sh kubectl create namespace airflow helm install --namespace airflow . Prerequisites: Kubernetes 1.12+ Helm 3.6+ PV provisioner support in the underlying infrastructure Installing the Chart: sh helm install --name my-release . The command deploys Airflow on the Kubernetes cluster in the default configuration. The Parameters section lists the parameters that can be configured during installation. Upgrading the Chart: First, look at the updating documentation to identify any backwards-incompatible changes. To upgrade the chart with the release name `my-release`: sh helm upgrade --name my-release . Uninstalling the Chart: To uninstall/delete the `my-release` deployment: sh helm delete my-release The command removes all the Kubernetes components associated with the chart and deletes the release. Updating DAGs: Bake DAGs in Docker image The recommended way to update your DAGs with this chart is to build a new docker image with the latest code (`docker build -t my-company/airflow:8a0da78 .`), push it to an accessible registry (`docker push my-company/airflow:8a0da78`), then update the Airflow pods with that image: sh helm upgrade my-release . --set images.airflow.repository=my-company/airflow --set images.airflow.tag=8a0da78 Docker Images: The Airflow image that are referenced as the default values in this chart are generated from this repository: https://github.com/astronomer/ap-airflow. 
Other non-airflow images used in this chart are generated from this repository: https://github.com/astronomer/ap-vendor. Parameters: The complete list of parameters supported by the community chart can be found on the Parameteres Reference page, and can be set under the `airflow` key in this chart. The following tables lists the configurable parameters of the Astronomer chart and their default values. | Parameter | Description | Default | | :----------------------------- | :-------------------------------------------------------------------------------------------------------- | :---------------------------- | | `ingress.enabled` | Enable Kubernetes Ingress support | `false` | | `ingress.acme` | Add acme annotations to Ingress object | `false` | | `ingress.tlsSecretName` | Name of secret that contains a TLS secret | `~` | | `ingress.webserverAnnotations` | Annotations added to Webserver Ingress object | `{}` | | `ingress.flowerAnnotations` | Annotations added to Flower Ingress object | `{}` | | `ingress.baseDomain` | Base domain for VHOSTs | `~` | | `ingress.auth.enabled` | Enable auth with Astronomer Platform | `true` | | `extraObjects` | Extra K8s Objects to deploy (these are passed through `tpl`). More about Extra Objects. | `[]` | | `sccEnabled` | Enable security context constraints required for OpenShift | `false` | | `authSidecar.enabled` | Enable authSidecar | `false` | | `authSidecar.repository` | The image for the auth sidecar proxy | `nginxinc/nginx-unprivileged` | | `authSidecar.tag` | The image tag for the auth sidecar proxy | `stable` | | `authSidecar.pullPolicy` | The K8s pullPolicy for the the auth sidecar proxy image | `IfNotPresent` | | `authSidecar.port` | The port the auth sidecar exposes | `8084` | | `gitSyncRelay.enabled` | Enables git sync relay feature. | `False` | | `gitSyncRelay.repo.url` | Upstream URL to the git repo to clone. | `~` | | `gitSyncRelay.repo.branch` | Branch of the upstream git repo to checkout. 
| `main` | | `gitSyncRelay.repo.depth` | How many revisions to check out. Leave as default `1` except in dev where history is needed. | `1` | | `gitSyncRelay.repo.wait` | Seconds to wait before pulling from the upstream remote. | `60` | | `gitSyncRelay.repo.subPath` | Path to the dags directory within the git repository. | `~` | Specify each parameter using the `--set key=value[,key=value]` argument to `helm install`. For example, sh helm install --name my-release --set executor=CeleryExecutor --set enablePodLaunching=false . Walkthrough using kind: Install kind, and create a cluster We recommend testing with Kubernetes 1.25+, example: sh kind create cluster --image kindest/node:v1.25.11 Confirm it's up: sh kubectl cluster-info --context kind-kind Add Astronomer's Helm repo sh helm repo add astronomer https://helm.astronomer.io helm repo update Create namespace + install the chart sh kubectl create namespace airflow helm install airflow -n airflow astronomer/airflow It may take a few minutes. Confirm the pods are up: sh kubectl get pods --all-namespaces helm list -n airflow Run `kubectl port-forward svc/airflow-webserver 8080:8080 -n airflow` to port-forward the Airflow UI to http://localhost:8080/ to confirm Airflow is working. Login as _admin_ and password _admin_. Build a Docker image from your DAGs: 1. Start a project using astro-cli, which will generate a Dockerfile, and load your DAGs in. You can test locally before pushing to kind with `astro airflow start`. `sh mkdir my-airflow-project && cd my-airflow-project astro dev init` 2. Then build the image: `sh docker build -t my-dags:0.0.1 .` 3. Load the image into kind: `sh kind load docker-image my-dags:0.0.1` 4. Upgrade Helm deployment: sh helm upgrade airflow -n airflow --set images.airflow.repository=my-dags --set images.airflow.tag=0.0.1 astronomer/airflow Extra Objects: This chart can deploy extra Kubernetes objects (assuming the role used by Helm can manage them). 
For Astronomer Cloud and Enterprise, the role permissions can be found in the Commander role. yaml extraObjects: - apiVersion: batch/v1beta1 kind: CronJob metadata: name: "{{ .Release.Name }}-somejob" spec: schedule: "*/10 * * * *" concurrencyPolicy: Forbid jobTemplate: spec: template: spec: containers: - name: myjob image: ubuntu command: - echo args: - hello restartPolicy: OnFailure Contributing: Check out our contributing guide! License: Apache 2.0 with Commons Clause

Awesome-AI-Data-GitHub-Repos

Awesome AI & Data GitHub-Repos is a curated list of essential GitHub repositories covering the AI & ML landscape. It includes resources for Natural Language Processing, Large Language Models, Computer Vision, Data Science, Machine Learning, MLOps, Data Engineering, SQL & Database, and Statistics. The repository aims to provide a comprehensive collection of projects and resources for individuals studying or working in the field of AI and data science.

routilux

Routilux is a powerful event-driven workflow orchestration framework designed for building complex data pipelines and workflows effortlessly. It offers features like event queue architecture, flexible connections, built-in state management, robust error handling, concurrent execution, persistence & recovery, and simplified API. Perfect for tasks such as data pipelines, API orchestration, event processing, workflow automation, microservices coordination, and LLM agent workflows.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.