Best AI Tools to Manage Data Pipelines

20 - AI tool Sites

Astera Software

Astera Software offers enterprise-ready data management solutions, including data integration, unstructured data management, data warehousing, and EDI Connect. The platform provides automated data processing, data governance, and AI capabilities to transform data into powerful insights, enabling smarter decisions and innovation. Astera simplifies data management with features like data pipeline builder, data warehouse automation, and EDI transaction optimization. Trusted by leading enterprises worldwide, Astera boosts operational efficiency, accelerates time to market, ensures data accuracy, and reduces operational costs through AI-powered data management.

SingleStore

SingleStore is a real-time data platform designed for apps, analytics, and gen AI. It offers faster hybrid vector + full-text search, fast-scaling integrations, and a free tier. SingleStore can read, write, and reason on petabyte-scale data in milliseconds. It supports streaming ingestion, high concurrency, first-class vector support, record lookups, and more.

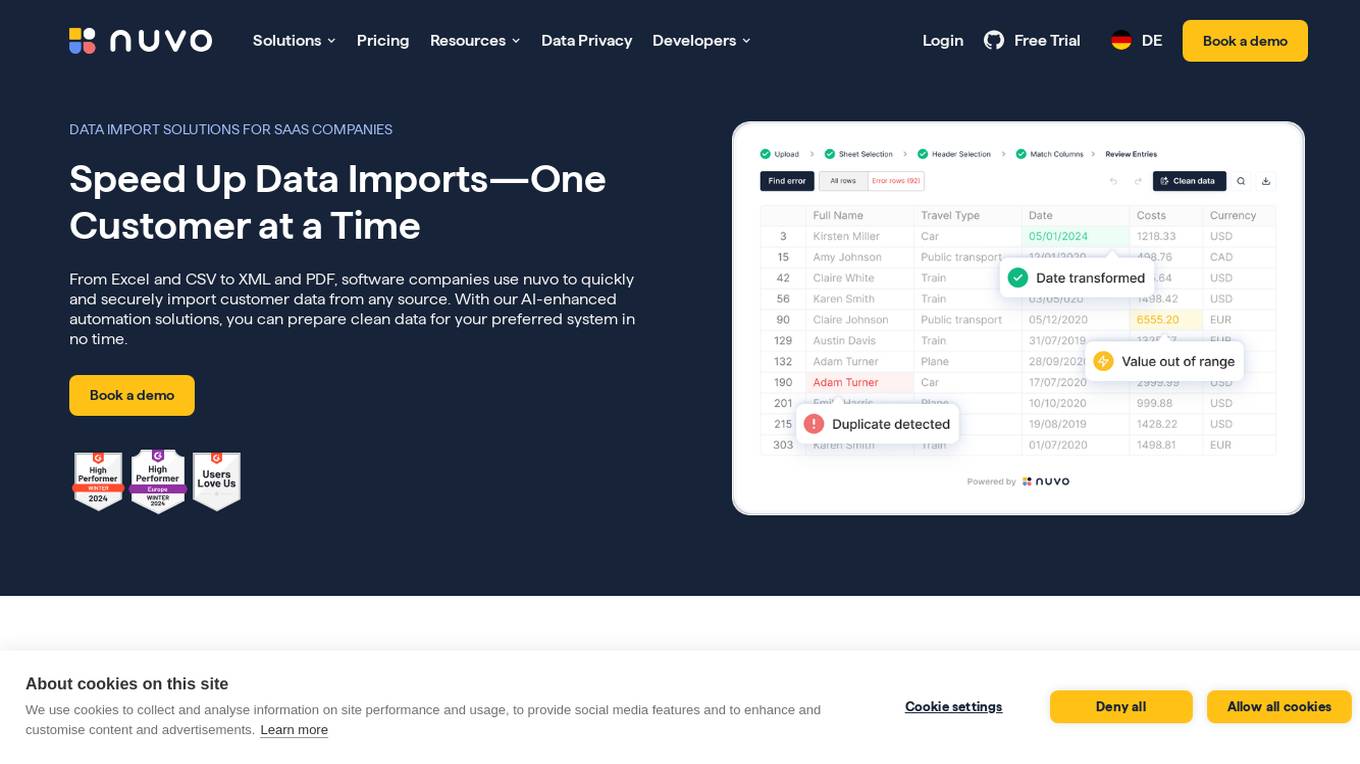

nuvo

nuvo is an AI-powered data import solution that offers fast, secure, and scalable data import solutions for software companies. It provides tools like nuvo Data Importer SDK and nuvo Data Pipeline to streamline manual and recurring ETL data imports, enabling users to manage data imports independently. With AI-enhanced automation, nuvo helps prepare clean data for preferred systems quickly and efficiently, reducing manual effort and improving data quality. The platform allows users to upload unlimited data in various formats, match imported data to system schemas, clean and validate data, and import clean data into target systems with just a click.

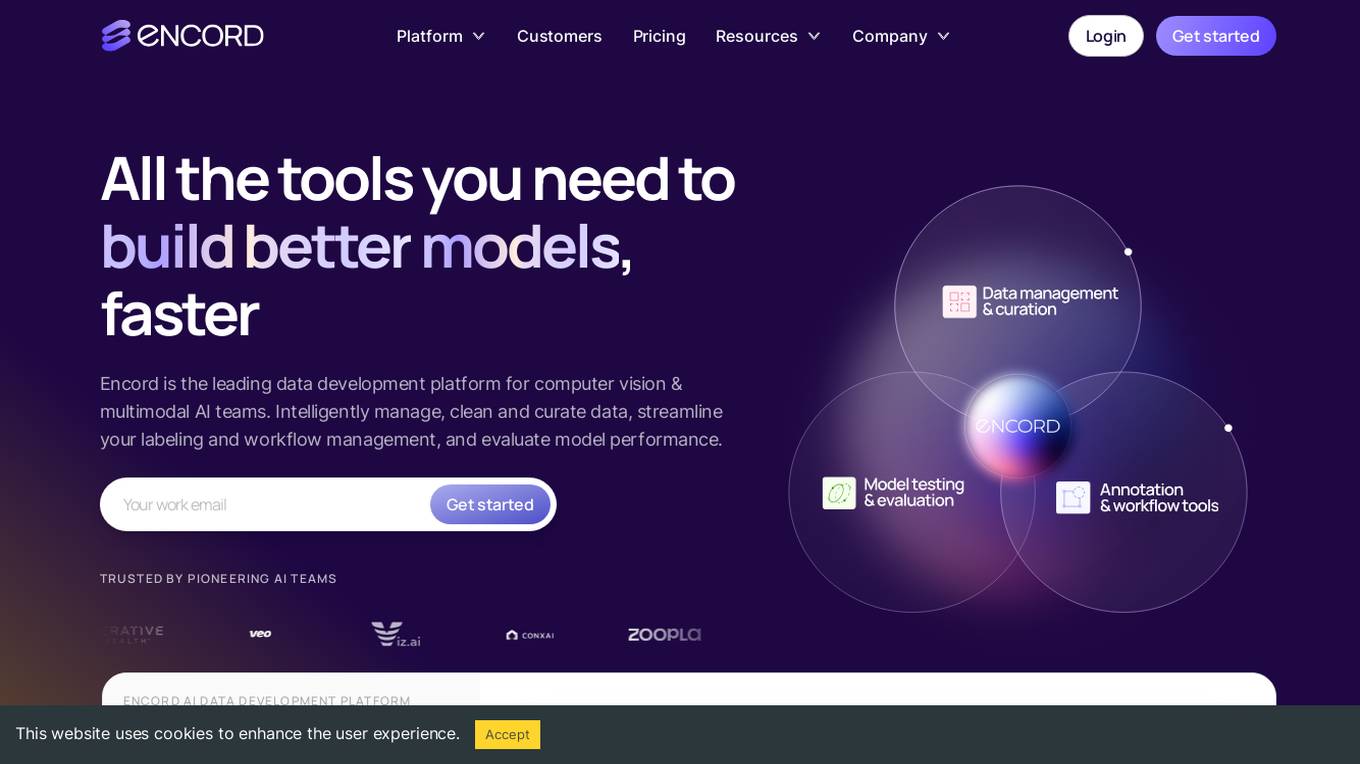

Encord

Encord is a complete data development platform designed for AI applications, specifically tailored for computer vision and multimodal AI teams. It offers tools to intelligently manage, clean, and curate data, streamline labeling and workflow management, and evaluate model performance. Encord aims to unlock the potential of AI for organizations by simplifying data-centric AI pipelines, enabling the building of better models and deploying high-quality production AI faster.

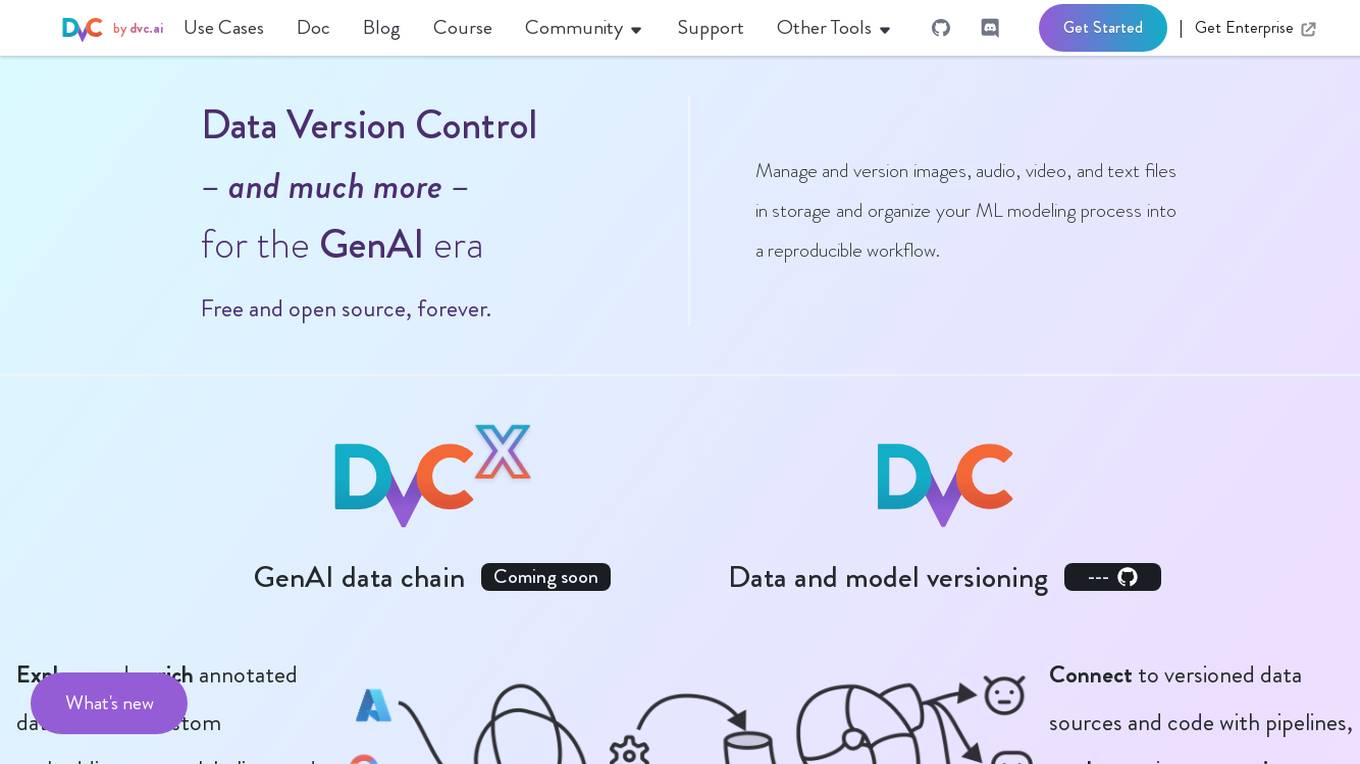

DVC

DVC is an open-source version control system for machine learning projects. It allows users to track and manage their data, models, and code in a single place. DVC also provides a number of features that make it easy to collaborate on machine learning projects, such as experiment tracking, model registration, and pipeline management.

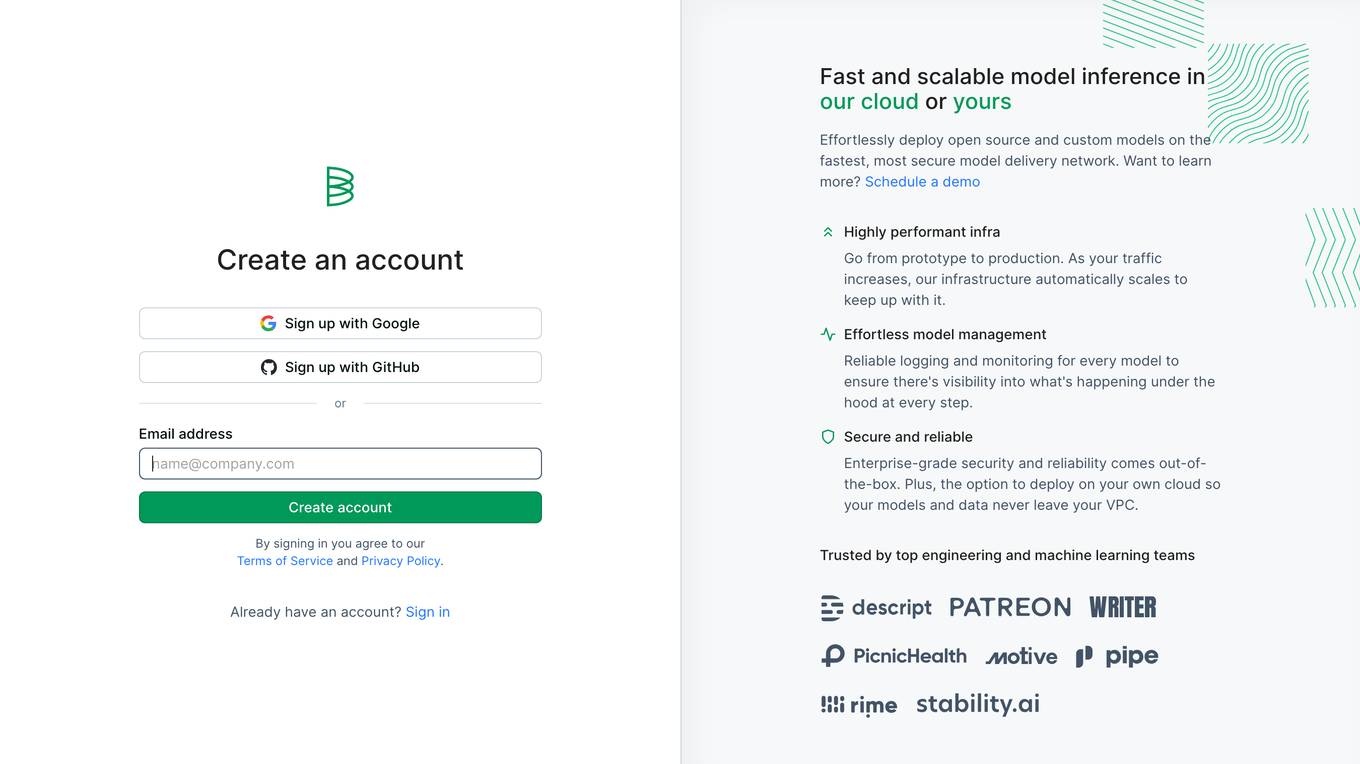

Baseten

Baseten is a machine learning infrastructure platform that gives data scientists and engineers a unified environment to build, train, and deploy machine learning models. It offers a range of features to simplify the ML lifecycle, including data preparation, model training, and deployment. Baseten also provides a marketplace of pre-built models and components that can be used to accelerate the development of ML applications.

Tecton

Tecton is an AI data platform that helps build smarter AI applications by simplifying feature engineering, generating training data, serving real-time data, and enhancing AI models with context-rich prompts. It automates data pipelines, improves model accuracy, and lowers production costs, enabling faster deployment of AI models. Tecton abstracts away data complexity, provides a developer-friendly experience, and allows users to create features from any source. Trusted by top engineering teams, Tecton streamlines ML delivery processes, improves customer interactions, and automates release processes through CI/CD pipelines.

Credal

Credal is an AI tool that allows users to build secure AI assistants for enterprise operations. It enables every employee to create customized AI assistants with built-in security, permissions, and compliance features. Credal supports data integration, access control, search functionalities, and API development. The platform offers real-time sync, automatic permissions synchronization, and AI model deployment with security and compliance measures. It helps enterprises manage ETL pipelines, schedule tasks, and configure data processing. Credal ensures data protection, compliance with regulations like HIPAA, and comprehensive audit capabilities for generative AI applications.

Hopsworks

Hopsworks is an AI platform that offers a comprehensive solution for building, deploying, and monitoring machine learning systems. It provides features such as a Feature Store, real-time ML capabilities, and generative AI solutions. Hopsworks enables users to develop and deploy reliable AI systems, orchestrate and monitor models, and personalize machine learning models with private data. The platform supports batch and real-time ML tasks, with the flexibility to deploy on-premises or in the cloud.

Context Data

Context Data is an enterprise data platform designed for Generative AI applications. It enables organizations to build AI apps without the need to manage vector databases, pipelines, and infrastructure. The platform empowers AI teams to create mission-critical applications by simplifying the process of building and managing complex workflows. Context Data also provides real-time data processing capabilities and seamless vector data processing. It offers features such as data catalog ontology, semantic transformations, and the ability to connect to major vector databases. The platform is ideal for industries like financial services, healthcare, real estate, and shipping & supply chain.

FrogHire.ai

FrogHire.ai is an AI-powered job-seeking toolkit designed to help users search, research, improve, and manage their job applications effectively. It offers features such as Profile Match Rate, Keywords Highlighter, Strategy Job Manager, Interview Hub, Resume Builder, H1B Job List, and Career Outcome Navigator. FrogHire.ai empowers international students and job seekers by providing insights, customized search strategies, and tracking tools to enhance their job search experience.

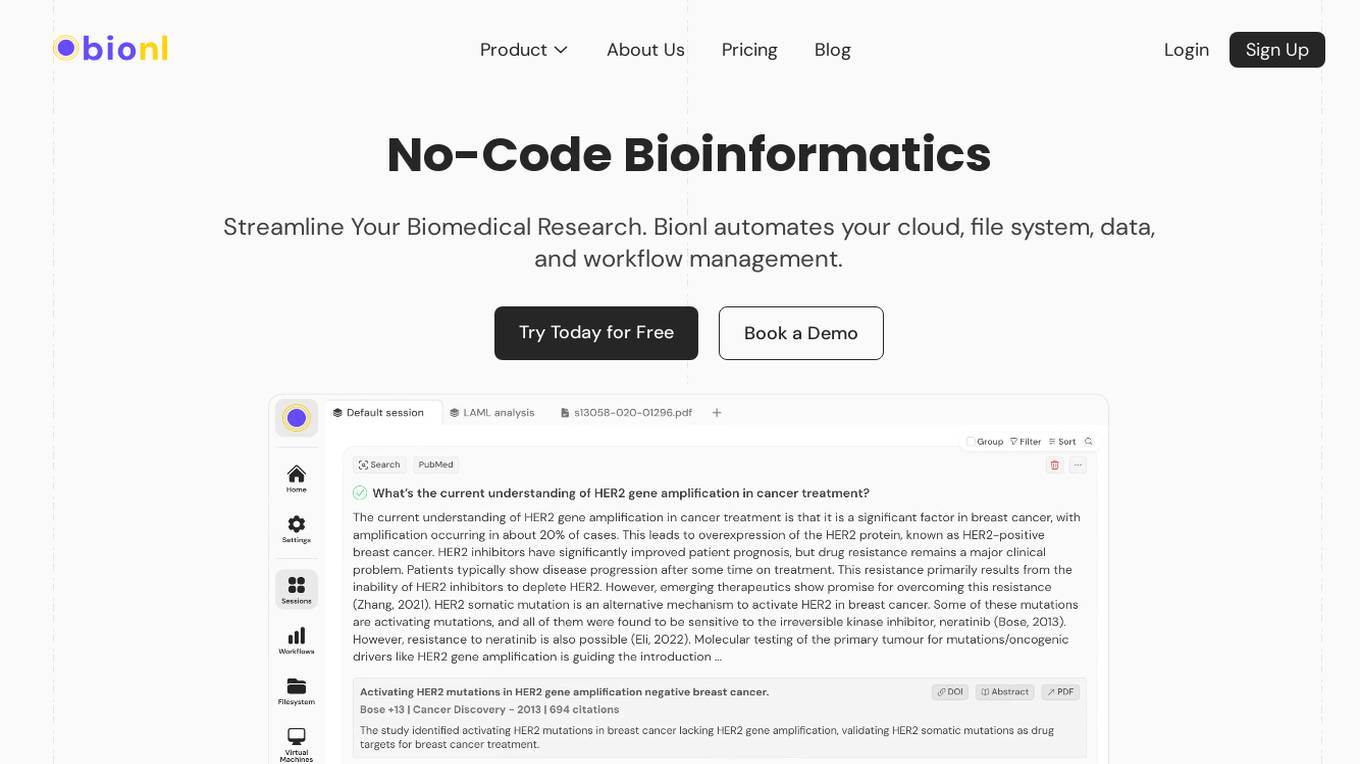

Bionl

Bionl is a no-code bioinformatics platform designed to streamline biomedical research for researchers and scientists. It offers a full workspace with features such as bioinformatics pipelines customization, GenAI for data analysis, AI-powered literature search, PDF analysis, and access to public datasets. Bionl aims to automate cloud, file system, data, and workflow management for efficient and precise analyses. The platform caters to Pharma and Biotech companies, academic researchers, and bioinformatics CROs, providing powerful tools for genetic analysis and speeding up research processes.

Modjo

Modjo is an AI platform designed to boost sales team productivity, enhance commercial efficiency, and develop sales skills. It captures interactions with customers, creates an augmented conversational database, and deploys AI solutions within organizations. Modjo's AI capabilities help in productivity, sales execution, and coaching, enabling teams to focus on selling, manage pipelines efficiently, and develop sales skills. The platform offers features like AI call scoring, CRM filling, call reviews, email follow-up, and insights library.

Athina AI

Athina AI is a comprehensive platform designed to monitor, debug, analyze, and improve the performance of Large Language Models (LLMs) in production environments. It provides a suite of tools and features that enable users to detect and fix hallucinations, evaluate output quality, analyze usage patterns, and optimize prompt management. Athina AI supports integration with various LLMs and offers a range of evaluation metrics, including context relevancy, harmfulness, summarization accuracy, and custom evaluations. It also provides a self-hosted solution for complete privacy and control, a GraphQL API for programmatic access to logs and evaluations, and support for multiple users and teams. Athina AI's mission is to empower organizations to harness the full potential of LLMs by ensuring their reliability, accuracy, and alignment with business objectives.

Salesmate

Salesmate is a modern CRM software designed for teams to market, sell, and service from one platform. It offers advanced automation features to streamline processes, engage customers across all channels, generate leads, and make better decisions with rich data and insights. Salesmate is highly customizable, user-friendly, and suitable for various industries and roles. With time-saving tools, automations, and AI capabilities, Salesmate aims to improve user experience and boost business growth.

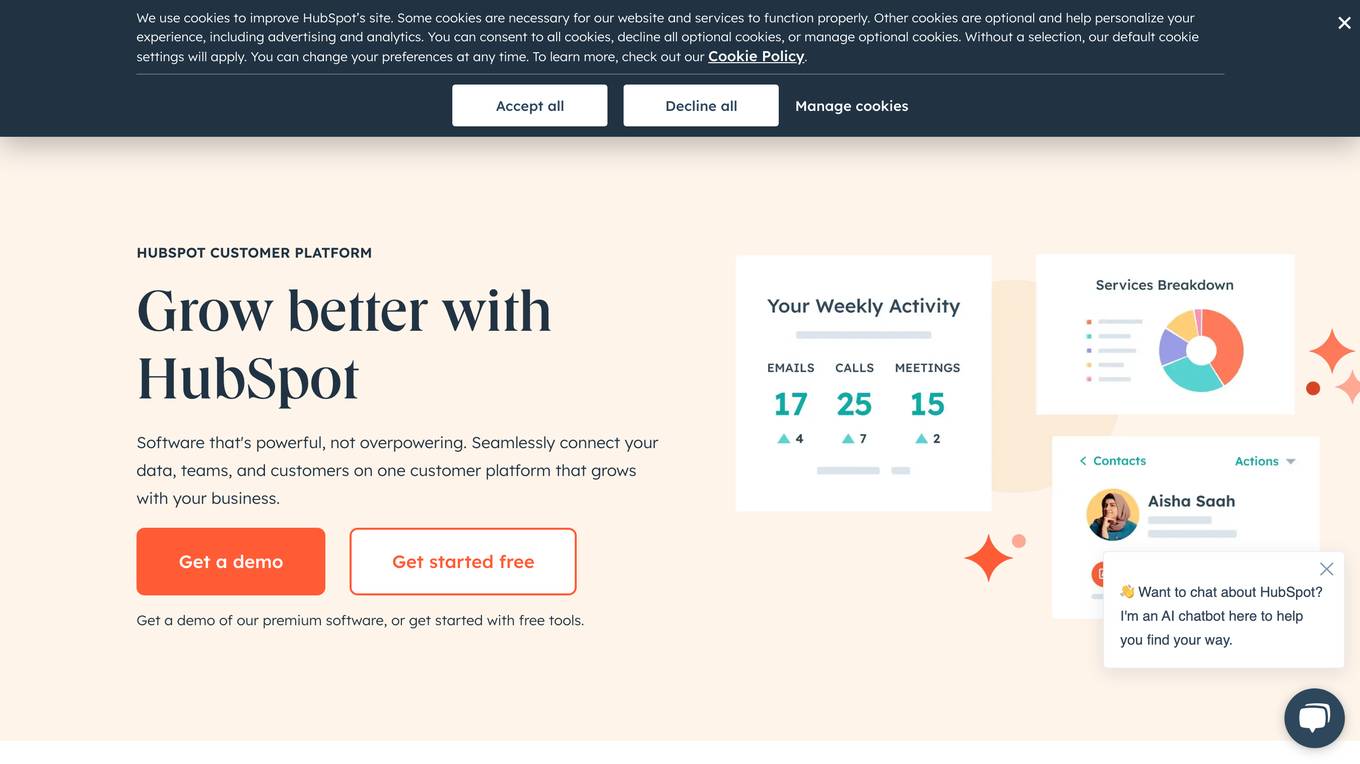

HubSpot

HubSpot is a customer relationship management (CRM) platform that provides software and tools for marketing, sales, customer service, content management, and operations. It is designed to help businesses grow by connecting their data, teams, and customers on one platform. HubSpot's AI tools are used to automate tasks, personalize marketing campaigns, and provide insights into customer behavior.

Oorwin

Oorwin is an AI Recruitment & Talent Management Platform trusted by over 1,000 customers globally. It offers advanced data security and features like Applicant Tracking System, Customer Relation Management, Human Resource Management, Candidate Relationship, Talent Recruitment, and Marketplace Apps. Oorwin leverages AI to streamline job posting, screening, interview scheduling, client interactions, sales pipelines, employee onboarding, talent nurturing, and recruitment processes. It also provides generative AI capabilities for auto-generating emails, job descriptions, and personalized outreach. Oorwin is designed to simplify and accelerate the talent lifecycle, reduce manual work, and boost recruiter productivity for modern hiring teams.

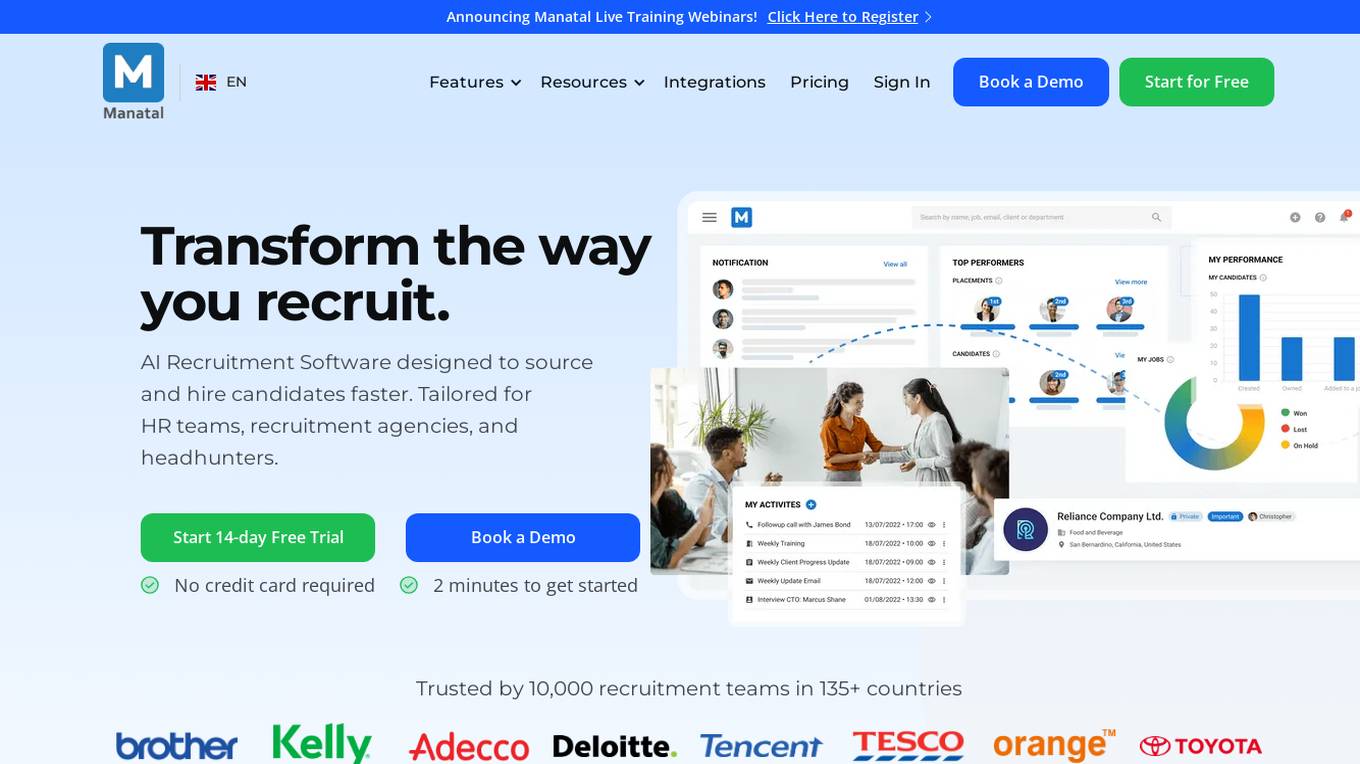

Manatal

Manatal is an AI Recruitment Software designed to streamline the hiring processes for HR teams, recruitment agencies, and headhunters. It offers features such as candidate sourcing from various channels, applicant tracking system, recruitment CRM, candidate enrichment, AI recommendations, collaboration tools, reports & analytics, branded career page creation, support & assistance, data privacy compliance, and more. The platform provides customizable pipelines, AI-powered recommendations, candidate profile enrichment, mobile application access, and onboarding & placement management. Manatal aims to transform recruitment by leveraging AI technology to source and hire candidates faster and more efficiently.

SeekOut

SeekOut is an AI-powered platform designed to help organizations find the right candidates for open roles, develop their teams, and improve company culture. It offers features such as external talent sourcing, applicant review, pipeline insights, internal talent development, career compass, and talent intelligence. SeekOut is trusted by over 1,000 leading brands to recruit hard-to-find, diverse talent and manage talent acquisition and management in one platform. The platform integrates external data with HR systems to automatically build comprehensive profiles and provides data-driven insights to understand talent needs and prepare for the future.

DataBahn

DataBahn is an AI-powered data pipeline management platform that empowers global enterprises with intelligent tools to gather, manage, and move data reliably and quickly. It offers a comprehensive solution for data integration, management, and optimization, helping users save costs and time. The platform ensures real-time insights, agility, and value by automating data processes and providing complete data ownership and governance. DataBahn is trusted by ambitious companies and partners worldwide for its efficiency and effectiveness in handling data workflows.

4 - Open Source AI Tools

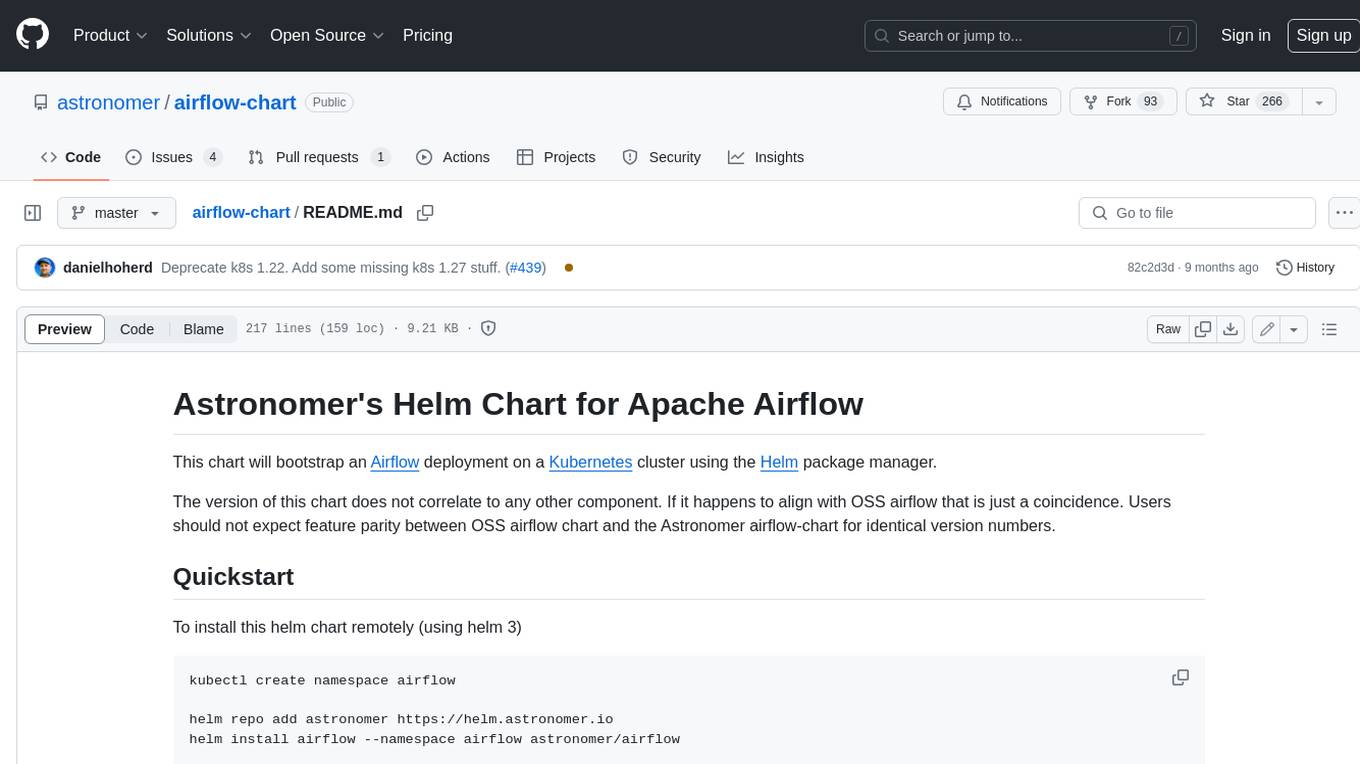

airflow-chart

This Helm chart bootstraps an Airflow deployment on a Kubernetes cluster using the Helm package manager. The version of this chart does not correlate to any other component. Users should not expect feature parity between the OSS Airflow chart and the Astronomer airflow-chart for identical version numbers.

To install this Helm chart remotely (using Helm 3):

```sh
kubectl create namespace airflow
helm repo add astronomer https://helm.astronomer.io
helm install airflow --namespace airflow astronomer/airflow
```

To install this repository from source:

```sh
kubectl create namespace airflow
helm install airflow --namespace airflow .
```

Prerequisites:

- Kubernetes 1.12+
- Helm 3.6+
- PV provisioner support in the underlying infrastructure

Installing the Chart:

```sh
helm install my-release .
```

The command deploys Airflow on the Kubernetes cluster in the default configuration. The Parameters section lists the parameters that can be configured during installation.

Upgrading the Chart:

First, look at the updating documentation to identify any backwards-incompatible changes. To upgrade the chart with the release name `my-release`:

```sh
helm upgrade my-release .
```

Uninstalling the Chart:

To uninstall/delete the `my-release` deployment:

```sh
helm delete my-release
```

The command removes all the Kubernetes components associated with the chart and deletes the release.

Updating DAGs:

Bake DAGs in Docker image. The recommended way to update your DAGs with this chart is to build a new Docker image with the latest code (`docker build -t my-company/airflow:8a0da78 .`), push it to an accessible registry (`docker push my-company/airflow:8a0da78`), then update the Airflow pods with that image:

```sh
helm upgrade my-release . --set images.airflow.repository=my-company/airflow --set images.airflow.tag=8a0da78
```

Docker Images:

- The Airflow images that are referenced as the default values in this chart are generated from this repository: https://github.com/astronomer/ap-airflow.
- Other non-Airflow images used in this chart are generated from this repository: https://github.com/astronomer/ap-vendor.

Parameters:

The complete list of parameters supported by the community chart can be found on the Parameters Reference page, and can be set under the `airflow` key in this chart.

The following table lists the configurable parameters of the Astronomer chart and their default values.

| Parameter | Description | Default |
| :----------------------------- | :------------------------------------------------------------------------------------------- | :---------------------------- |
| `ingress.enabled` | Enable Kubernetes Ingress support | `false` |
| `ingress.acme` | Add acme annotations to Ingress object | `false` |
| `ingress.tlsSecretName` | Name of secret that contains a TLS secret | `~` |
| `ingress.webserverAnnotations` | Annotations added to Webserver Ingress object | `{}` |
| `ingress.flowerAnnotations` | Annotations added to Flower Ingress object | `{}` |
| `ingress.baseDomain` | Base domain for VHOSTs | `~` |
| `ingress.auth.enabled` | Enable auth with Astronomer Platform | `true` |
| `extraObjects` | Extra K8s Objects to deploy (these are passed through `tpl`). More about Extra Objects. | `[]` |
| `sccEnabled` | Enable security context constraints required for OpenShift | `false` |
| `authSidecar.enabled` | Enable authSidecar | `false` |
| `authSidecar.repository` | The image for the auth sidecar proxy | `nginxinc/nginx-unprivileged` |
| `authSidecar.tag` | The image tag for the auth sidecar proxy | `stable` |
| `authSidecar.pullPolicy` | The K8s pullPolicy for the auth sidecar proxy image | `IfNotPresent` |
| `authSidecar.port` | The port the auth sidecar exposes | `8084` |
| `gitSyncRelay.enabled` | Enables git sync relay feature. | `False` |
| `gitSyncRelay.repo.url` | Upstream URL to the git repo to clone. | `~` |
| `gitSyncRelay.repo.branch` | Branch of the upstream git repo to checkout. | `main` |
| `gitSyncRelay.repo.depth` | How many revisions to check out. Leave as default `1` except in dev where history is needed. | `1` |
| `gitSyncRelay.repo.wait` | Seconds to wait before pulling from the upstream remote. | `60` |
| `gitSyncRelay.repo.subPath` | Path to the dags directory within the git repository. | `~` |

Specify each parameter using the `--set key=value[,key=value]` argument to `helm install`. For example:

```sh
helm install my-release --set executor=CeleryExecutor --set enablePodLaunching=false .
```

Walkthrough using kind:

Install kind, and create a cluster. We recommend testing with Kubernetes 1.25+, for example:

```sh
kind create cluster --image kindest/node:v1.25.11
```

Confirm it's up:

```sh
kubectl cluster-info --context kind-kind
```

Add Astronomer's Helm repo:

```sh
helm repo add astronomer https://helm.astronomer.io
helm repo update
```

Create namespace + install the chart:

```sh
kubectl create namespace airflow
helm install airflow -n airflow astronomer/airflow
```

It may take a few minutes. Confirm the pods are up:

```sh
kubectl get pods --all-namespaces
helm list -n airflow
```

Run `kubectl port-forward svc/airflow-webserver 8080:8080 -n airflow` to port-forward the Airflow UI to http://localhost:8080/ to confirm Airflow is working. Log in with username _admin_ and password _admin_.

Build a Docker image from your DAGs:

1. Start a project using astro-cli, which will generate a Dockerfile, and load your DAGs in. You can test locally before pushing to kind with `astro airflow start`.

   ```sh
   mkdir my-airflow-project && cd my-airflow-project
   astro dev init
   ```

2. Then build the image:

   ```sh
   docker build -t my-dags:0.0.1 .
   ```

3. Load the image into kind:

   ```sh
   kind load docker-image my-dags:0.0.1
   ```

4. Upgrade Helm deployment:

   ```sh
   helm upgrade airflow -n airflow --set images.airflow.repository=my-dags --set images.airflow.tag=0.0.1 astronomer/airflow
   ```

Extra Objects:

This chart can deploy extra Kubernetes objects (assuming the role used by Helm can manage them). For Astronomer Cloud and Enterprise, the role permissions can be found in the Commander role.

```yaml
extraObjects:
  - apiVersion: batch/v1beta1
    kind: CronJob
    metadata:
      name: "{{ .Release.Name }}-somejob"
    spec:
      schedule: "*/10 * * * *"
      concurrencyPolicy: Forbid
      jobTemplate:
        spec:
          template:
            spec:
              containers:
                - name: myjob
                  image: ubuntu
                  command:
                    - echo
                  args:
                    - hello
              restartPolicy: OnFailure
```

Contributing: Check out our contributing guide!

License: Apache 2.0 with Commons Clause
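The dotted parameter names in the airflow-chart description (for example `gitSyncRelay.repo.branch`) map onto nested chart values, and each one can be passed to `helm install` as a `--set key=value` pair. The following Python sketch illustrates that mapping only. The helper names `flatten_values` and `helm_install_command` are hypothetical and not part of the chart or of Helm, and the sketch assumes simple scalar values that need no escaping (no commas, lists, or quotes).

```python
from typing import Any, Dict, List


def flatten_values(values: Dict[str, Any], prefix: str = "") -> Dict[str, str]:
    """Flatten nested chart values into dotted keys with string values."""
    flat: Dict[str, str] = {}
    for key, value in values.items():
        dotted = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            # Recurse so {"repo": {"branch": "main"}} becomes "repo.branch".
            flat.update(flatten_values(value, dotted))
        elif isinstance(value, bool):
            # Helm expects lowercase booleans on the command line.
            flat[dotted] = "true" if value else "false"
        else:
            flat[dotted] = str(value)
    return flat


def helm_install_command(
    release: str, chart: str, namespace: str, values: Dict[str, Any]
) -> List[str]:
    """Build a `helm install` argument list with one --set flag per value."""
    cmd = ["helm", "install", release, chart, "--namespace", namespace]
    for key, value in flatten_values(values).items():
        cmd += ["--set", f"{key}={value}"]
    return cmd


# Hypothetical overrides chosen from the parameters listed for the chart.
overrides = {
    "ingress": {"enabled": True},
    "gitSyncRelay": {"enabled": True, "repo": {"branch": "main", "depth": 1}},
}
command = helm_install_command("airflow", "astronomer/airflow", "airflow", overrides)
print(" ".join(command))
```

The function returns an argument list rather than a single string so it could be handed to `subprocess.run` without shell quoting concerns. For more than a handful of overrides, a values file passed with `helm install -f` is usually easier to review than a long run of `--set` flags.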

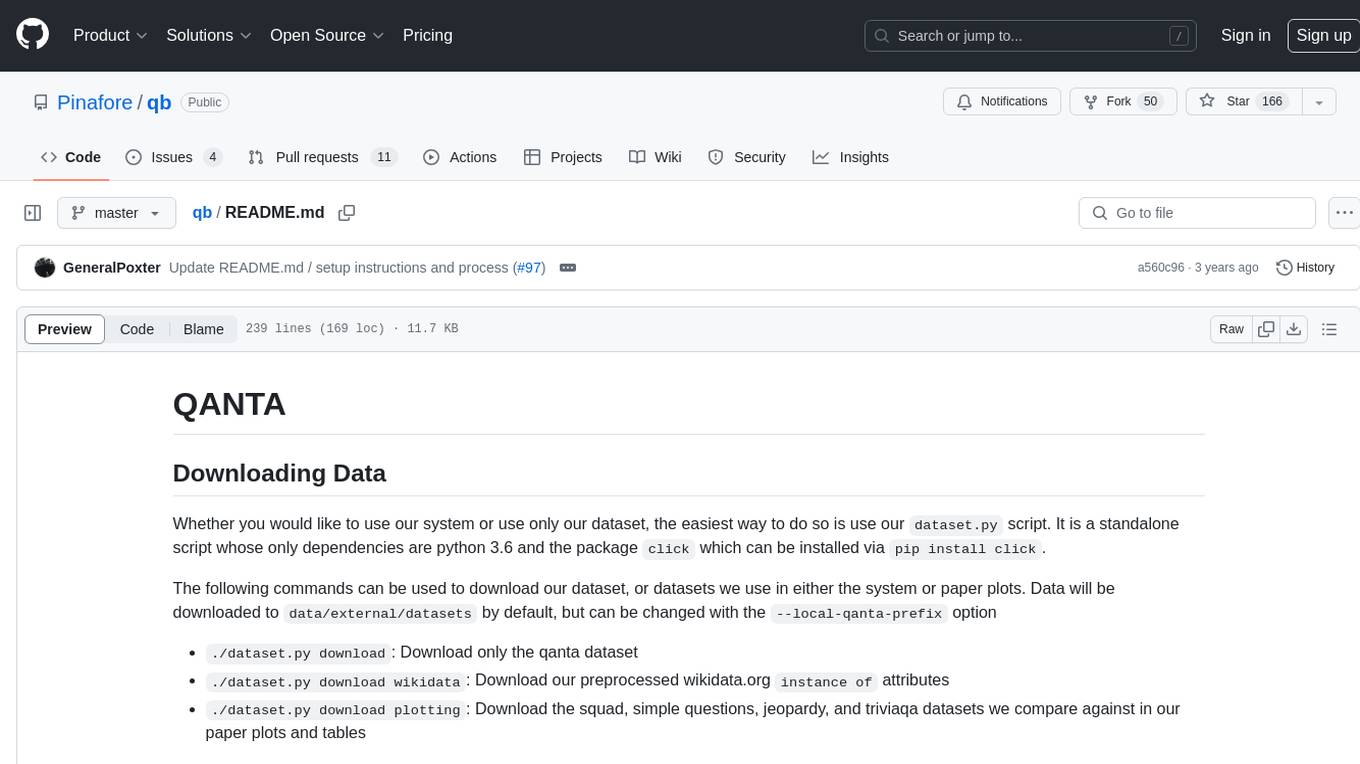

qb

QANTA is a system and dataset for question answering tasks. It provides a script to download datasets, and it preprocesses questions and matches them with Wikipedia pages. The system includes various datasets, with training, dev, and test data in JSON and SQLite formats. Dependencies include Python 3.6, `click`, and NLTK models. Elasticsearch 5.6 is needed for the Guesser component. Configuration is managed through environment variables and YAML files. QANTA supports multiple guesser implementations that can be enabled or disabled. Running QANTA involves using `cli.py` and Luigi pipelines. The system accesses raw Wikipedia dumps for data processing. The QANTA ID numbering scheme categorizes datasets based on events and competitions.

Awesome-AI-Data-GitHub-Repos

Awesome AI & Data GitHub-Repos is a curated list of essential GitHub repositories covering the AI & ML landscape. It includes resources for Natural Language Processing, Large Language Models, Computer Vision, Data Science, Machine Learning, MLOps, Data Engineering, SQL & Database, and Statistics. The repository aims to provide a comprehensive collection of projects and resources for individuals studying or working in the field of AI and data science.

routilux

Routilux is an event-driven workflow orchestration framework designed for building complex data pipelines and workflows. It offers features like an event queue architecture, flexible connections, built-in state management, robust error handling, concurrent execution, persistence and recovery, and a simplified API. It is suited to tasks such as data pipelines, API orchestration, event processing, workflow automation, microservices coordination, and LLM agent workflows.

20 - OpenAI Gpts

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Data Engineer Consultant

Guides in data engineering tasks with a focus on practical solutions.

AI Workload Optimizer

You've heard that AI can save you time, but you don't know how? Tell me what you do in a typical workweek, and I'll tell you how!

Triage Management and Pipeline Architecture

Strategic advisor for triage management and pipeline optimization in business operations.

👑 Data Privacy for PI & Security Firms 👑

Private Investigators and Security Firms, given the nature of their work, handle highly sensitive information and must maintain strict confidentiality and data privacy standards.

👑 Data Privacy for Watch & Jewelry Designers 👑

Watchmakers and Jewelry Designers are high-end businesses dealing with valuable items and clients' personal details, making data privacy and security paramount.

👑 Data Privacy for Event Management 👑

Event Management and Ticketing Services handle personal data such as names, contact details, and payment information for event registrations and ticket purchases.

Data Governance Advisor

Ensures data accuracy, consistency, and security across the organization.

👑 Data Privacy for Home Inspection & Appraisal 👑

Home Inspection and Appraisal Services have access to personal property and related information, requiring them to be vigilant about data privacy.

👑 Data Privacy for Freelancers & Independents 👑

Freelancers and Independent Consultants often handle client data, project specifics, and personal contact information, requiring them to be vigilant about data privacy.

👑 Data Privacy for Architecture & Construction 👑

Architecture and Construction Firms handle sensitive project data, client information, and architectural plans, necessitating strict data privacy measures.

👑 Data Privacy for Real Estate Agencies 👑

Real Estate Agencies and Brokers deal with personal data of clients, including financial information and preferences, requiring careful handling and protection of such data.

👑 Data Privacy for Spa & Beauty Salons 👑

Spa and Beauty Salons collect customer information, including personal details and treatment records, necessitating a high level of confidentiality and data protection.

Data Architect

Database Developer assisting with SQL/NoSQL, architecture, and optimization.