RAGMeUp

Generic RAG framework to apply the power of LLMs to any given dataset

Stars: 489

RAG Me Up is a generic framework that enables users to perform Retrieval-Augmented Generation (RAG) on their own dataset easily. It consists of a small server and UIs for communication. It is best run on a GPU with at least 16GB of vRAM. Users can combine RAG with fine-tuning using the LLaMa2Lang repository. The tool allows configuration of the LLM, data, LLM parameters, prompts, and document splitting. Funding is sought to democratize AI and advance its applications.

README:

RAG Me Up is a generic framework (server + UIs) that enables you to do RAG on your own dataset easily. Its essence is a small and lightweight server and a couple of ways to run UIs to communicate with the server (or write your own).

RAG Me Up can run on CPU but is best run on any GPU with at least 16GB of vRAM when using the default instruct model.

Combine the power of RAG with the power of fine-tuning - check out our LLaMa2Lang repository on fine-tuning LLMs which can then be used in RAG Me Up.

- 2024-09-06 Implemented Re2

- 2024-09-04 Added an evaluation script that uses Ragas to evaluate your RAG pipeline

- 2024-08-30 Added Ollama compatibility

- 2024-08-27 Using cross encoders now so you can specify your own reranking model

- 2024-07-30 Added multiple provenance attribution methods

- 2024-06-26 Updated readme, added more file types, robust self-inflection

- 2024-06-05 Upgraded to Langchain v0.2

git clone https://github.com/UnderstandLingBV/RAGMeUp.git

cd RAGMeUp/server

pip install -r requirements.txt

Then run the server using python server.py from the server subfolder.

To use the Scala UI, make sure you have JDK 17+. Download and install SBT and run sbt run from the server/scala directory, or alternatively download the compiled binary and run bin/ragemup(.bat).

RAG Me Up supports Postgres as a hybrid retrieval database, provided both pgvector and pg_search are installed. To run Postgres instead of Milvus, follow these steps.

- In the postgres folder is a Dockerfile, build it using

docker build -t ragmeup-pgvector-pgsearch .

- Run the container using

docker run --name ragmeup-pgvector-pgsearch -e POSTGRES_USER=langchain -e POSTGRES_PASSWORD=langchain -e POSTGRES_DB=langchain -p 6024:5432 -d ragmeup-pgvector-pgsearch

- Once in use, our custom PostgresBM25Retriever will automatically create the right indexes for you.

- pgvector, however, will not do this automatically, so you have to create them yourself (perhaps after loading the documents first so the right tables are created):

  - Make sure the vector column is an actual vector (it's not by default):

ALTER TABLE langchain_pg_embedding ALTER COLUMN embedding TYPE vector(384);

  - Create the index (may take a while with a lot of data):

CREATE INDEX ON langchain_pg_embedding USING hnsw (embedding vector_cosine_ops) WITH (m = 16, ef_construction = 64);
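As a rough sketch, the settings passed to docker run above map onto a standard Postgres connection URI as shown below. The helper name build_postgres_uri is hypothetical and not part of RAG Me Up; check .env.template for the setting that actually holds the connection details.

```python
from urllib.parse import quote


def build_postgres_uri(user: str, password: str, host: str, port: int, database: str) -> str:
    """Build a postgresql:// URI, percent-encoding special characters in the credentials."""
    return f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}/{database}"


# Values taken from the docker run command: host port 6024 is mapped to
# port 5432 inside the container, and user, password and database are all "langchain".
uri = build_postgres_uri("langchain", "langchain", "localhost", 6024, "langchain")
print(uri)
```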

RAG Me Up aims to provide a robust RAG pipeline that is configurable without necessarily writing any code. To achieve this, a couple of strategies are used to make sure that the user query can be accurately answered through the documents provided.

The RAG pipeline is visualized in the image below:

The following steps are executed. Take note that some steps are optional and can be turned off through configuring the .env file.

Top part - Indexing

- You collect and make your documents available to RAG Me Up.

- Using different file type loaders, RAG Me Up will read the contents of your documents. Note that for some document types like JSON and XML, you need to specify additional configuration to tell RAG Me Up what to extract.

- Your documents get chunked using a recursive splitter.

- The chunks get converted into document (chunk) embeddings using an embedding model. Note that this model is usually a different one than the LLM you intend to use for chat.

- RAG Me Up uses a hybrid search strategy, combining dense vectors in the vector database with sparse vectors using BM25. By default, RAG Me Up uses a local Milvus database.
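To illustrate what combining dense and sparse results can look like, here is a minimal sketch using reciprocal rank fusion. This is an assumption for illustration only: the text above does not say how RAG Me Up merges the two result lists, and the function name and the constant k = 60 are not taken from the project.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document ids into a single ranking.

    Each document scores 1 / (k + rank) for every list it appears in, so
    documents ranked highly by both retrievers end up at the top.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; ties are broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


dense_results = ["doc_a", "doc_b", "doc_c"]   # e.g. from the vector database
sparse_results = ["doc_b", "doc_d", "doc_a"]  # e.g. from the BM25 index
fused = reciprocal_rank_fusion([dense_results, sparse_results])
print(fused)
```

Documents found by both retrievers (doc_a and doc_b here) outrank documents found by only one.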

Bottom part - Inference

- Inference starts with a user asking a query. This query can either be an initial query or a follow-up query with an associated history and documents retrieved before. Note that both (chat history, documents) need to be passed on by a UI to properly handle follow-up querying.

- A check is done to determine whether new documents need to be fetched. This can be due to one of two cases:

  - There is no history given, in which case we always need to fetch documents.

  - [OPTIONAL] The LLM itself will judge whether the question, in isolation, is phrased in such a way that new documents need to be fetched or whether it is a follow-up question on existing documents.

  A flag called fetch_new_documents is set to indicate whether or not new documents need to be fetched.

- Documents are fetched from both the vector database (dense) and the BM25 index (sparse). Only executed if fetch_new_documents is set.

- [OPTIONAL] Reranking is applied to extract the most relevant documents returned by the previous step. Only executed if fetch_new_documents is set.

- [OPTIONAL] The LLM is asked to judge whether or not the documents retrieved contain an accurate answer to the user's query. Only executed if fetch_new_documents is set.

  - If this is not the case, the LLM is used to rewrite the query with the instruction to optimize for distance-based similarity search. The rewritten query is then fed back into the document-fetching step, but only once, to avoid lengthy or infinite loops.

- The documents are injected into the prompt with the user query. The documents can come from:

  - The retrieval and reranking of the document databases, if fetch_new_documents is set.

  - The history passed on with the initial user query, if fetch_new_documents is not set.

- The LLM is asked to answer the query with the given chat history and documents.

- The answer, chat history and documents are returned.
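The control flow of the inference steps above can be sketched roughly as follows. This is a simplified illustration and not the project's code: every function name is hypothetical, the LLM and retrieval calls are passed in as plain callables, and reranking is assumed to happen inside retrieve.

```python
from typing import Callable


def run_inference(
    query: str,
    history: list[str],
    previous_docs: list[str],
    retrieve: Callable[[str], list[str]],
    needs_new_documents: Callable[[str], bool],
    docs_answer_query: Callable[[str, list[str]], bool],
    rewrite_query: Callable[[str], str],
    generate: Callable[[str, list[str], list[str]], str],
) -> dict:
    # Without history this is a first question, so documents are always fetched.
    fetch_new_documents = not history or needs_new_documents(query)
    docs = previous_docs
    if fetch_new_documents:
        docs = retrieve(query)
        if not docs_answer_query(query, docs):
            # Rewrite and retrieve again, but only once, to avoid loops.
            docs = retrieve(rewrite_query(query))
    answer = generate(query, history, docs)
    return {"answer": answer, "history": history + [query, answer], "documents": docs}


# Toy stand-ins for the retriever and the LLM calls.
calls: list[str] = []


def toy_retrieve(q: str) -> list[str]:
    calls.append(q)
    return [f"doc for {q}"]


result = run_inference(
    "what is RAG?", [], [],
    retrieve=toy_retrieve,
    needs_new_documents=lambda q: False,
    docs_answer_query=lambda q, docs: False,   # force the single rewrite
    rewrite_query=lambda q: q + " (rewritten)",
    generate=lambda q, history, docs: f"answer from {len(docs)} docs",
)
print(result["answer"], calls)
```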

RAG Me Up uses a .env file for configuration, see .env.template. The following fields can be configured:

- llm_model: This is the main LLM (instruct or chat) model to use that you will converse with. Default is LLaMa3-8B.

- llm_assistant_token: This should contain the unique query (sub)string that indicates where in a prompt template the assistant's answer starts.

- embedding_model: The model used to convert your documents' chunks into vectors that will be stored in the vector store.

- trust_remote_code: Set this to true if your LLM needs to execute remote code.

- force_cpu: When set to True, forces RAG Me Up to run fully on CPU (not recommended).

If you want to use OpenAI as LLM backend, make sure to set use_openai to True and make sure you (externally) set the environment variable OPENAI_API_KEY to be your OpenAI API Key.

If you want to use Gemini as LLM backend, make sure to set use_gemini to True and make sure you (externally) set the environment variable GOOGLE_API_KEY to be your Gemini API Key.

If you want to use Azure OpenAI as LLM backend, make sure to set use_azure to True and make sure you (externally) set the following environment variables:

- AZURE_OPENAI_API_KEY

- AZURE_OPENAI_API_VERSION

- AZURE_OPENAI_ENDPOINT

- AZURE_OPENAI_CHAT_DEPLOYMENT_NAME

If you want to use Ollama as LLM backend, make sure to install Ollama and set use_ollama to True. The model to use should be given in ollama_model.
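A minimal sketch of how these backend flags could be resolved and checked is shown below. The function names and the precedence order between the flags are assumptions made for illustration; only the flag names and the required environment variables come from the text above.

```python
# Environment variables each hosted backend needs, as listed in the text above.
# Ollama takes its model name from the ollama_model setting, so nothing is listed here.
REQUIRED_VARIABLES = {
    "openai": ["OPENAI_API_KEY"],
    "gemini": ["GOOGLE_API_KEY"],
    "azure": [
        "AZURE_OPENAI_API_KEY",
        "AZURE_OPENAI_API_VERSION",
        "AZURE_OPENAI_ENDPOINT",
        "AZURE_OPENAI_CHAT_DEPLOYMENT_NAME",
    ],
    "ollama": [],
    "local": [],
}


def is_true(value) -> bool:
    """Treat 'True' or 'true' as enabled and everything else, including None, as disabled."""
    return value is not None and value.strip().lower() == "true"


def select_backend(env) -> str:
    # The precedence order here is an assumption, not documented behaviour.
    for flag, backend in (
        ("use_openai", "openai"),
        ("use_gemini", "gemini"),
        ("use_azure", "azure"),
        ("use_ollama", "ollama"),
    ):
        if is_true(env.get(flag)):
            return backend
    return "local"  # fall back to the locally loaded llm_model


def missing_variables(backend: str, env) -> list[str]:
    """Return the required environment variables that are unset or empty."""
    return [name for name in REQUIRED_VARIABLES[backend] if not env.get(name)]


example_env = {"use_azure": "True", "AZURE_OPENAI_API_KEY": "placeholder"}
backend = select_backend(example_env)
missing = missing_variables(backend, example_env)
print(backend, missing)
```

In real use, os.environ can be passed in place of example_env.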

One of the biggest, arguably unsolved, challenges of RAG is to do good provenance attribution: tracking which of the source documents retrieved from your database led to the LLM generating its answer (the most). RAG Me Up implements several ways of achieving this, each with its own pros and cons.

The following environment variables can be set for provenance attribution.

- provenance_method: Can be one of rerank, attention, similarity, llm. If rerank is False and the value of provenance_method is either rerank or none of the allowed values, provenance attribution is turned completely off.

- provenance_similarity_llm: If provenance_method is set to similarity, this model will be used to compute the similarity scores.

- provenance_include_query: Set to True or False to include the query itself when attributing provenance.

- provenance_llm_prompt: If provenance_method is set to llm, this prompt will be used to let the LLM attribute the provenance of each document in isolation.

The different provenance attribution metrics are described below.

The rerank method uses the reranker as the provenance method. While reranking is already used when retrieving documents (if reranking is turned on), that step only applies the reranker's cross-attention to the documents and the query. For provenance attribution, we use the same reranker to apply cross-attention to the answer (and potentially the query too).

The attention method is probably the most accurate way of tracking provenance, but it can only be used with open-source LLMs that can return their attention weights. We track provenance by looking at the actual attention weights (of the last attention layer in the model) for each token from the answer to the document and vice versa. Optionally, we do the same for the query if provenance_include_query=True.

The similarity method uses a sentence transformer (LM) to get dense vectors for each document as well as for the answer (and potentially the query). We then use cosine similarity to compare the document vectors to the answer (+ query).

With the llm method, the LLM that is used to generate messages is also used to attribute the provenance of each document in isolation. We use the provenance_llm_prompt as the prompt to ask the LLM to perform this task. Note that the outcome of this provenance method is highly influenced by the prompt and the strength of the model. As a good practice, make sure you force the LLM to return numbers on a relatively small scale (e.g. a score from 1 to 3). Using something like a percentage for each document will likely result in random outcomes.
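As an illustration of similarity-based provenance, the sketch below scores each document by the cosine similarity between its vector and the answer's vector. The vectors are toy values standing in for sentence-transformer embeddings, the function names are hypothetical, and how the query is folded in when provenance_include_query is set is left out because the text does not specify it.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similarity_provenance(doc_vectors: list[list[float]], answer_vector: list[float]) -> list[float]:
    """Return one provenance score per document, in the same order as the input."""
    return [cosine_similarity(vector, answer_vector) for vector in doc_vectors]


# Toy 2-dimensional "embeddings"; real ones would come from the embedding model.
doc_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
answer_vector = [1.0, 0.0]
scores = similarity_provenance(doc_vectors, answer_vector)
print(scores)
```

The first document points in the same direction as the answer and gets the highest score, while the orthogonal second document gets none.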

- data_directory: The directory that contains your (initial) documents to load into the vector store.

- file_types: Comma-separated list of file types to load. Supported file types: PDF, JSON, DOCX, XLSX, PPTX, CSV, XML.

- json_schema: If you are loading JSON, this should be the schema (using jq_schema). For example, use . (a single dot) for the root of a JSON object if your data contains JSON objects only, and .[0] for the first element in each JSON array if your data contains JSON arrays with one JSON object in them.

- json_text_content: Whether or not the JSON data should be loaded as textual content or as structured content (in case of a JSON object).

- xml_xpath: If you are loading XML, this should be the XPath of the documents to load (the tags that contain your text).
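To give an idea of what an xml_xpath value selects, here is a small sketch using Python's standard library. It is illustrative only: RAG Me Up's own XML loader may use a different library, xml.etree.ElementTree supports only a subset of XPath, and the sample document and function name are made up.

```python
import xml.etree.ElementTree as ET


def extract_xml_texts(xml_string: str, xpath: str) -> list[str]:
    """Return the text content of every element matched by the XPath expression."""
    root = ET.fromstring(xml_string)
    return ["".join(node.itertext()).strip() for node in root.findall(xpath)]


sample = (
    "<docs>"
    "<doc><title>One</title><text>First document</text></doc>"
    "<doc><title>Two</title><text>Second document</text></doc>"
    "</docs>"
)
# ".//text" selects every <text> tag anywhere below the root element.
texts = extract_xml_texts(sample, ".//text")
print(texts)
```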

- vector_store_uri: RAG Me Up caches your vector store on disk if possible to make loading a next time faster. This is the location where the vector store is stored. Remove this file to force a reload of all your documents.

- vector_store_k: The number of documents to retrieve from the vector store.

- rerank: Set to either True or False to enable reranking.

- rerank_k: The number of documents to keep after reranking. Note that if you use reranking, this should be your final target for k and vector_store_k should be set (significantly) higher. For example, set vector_store_k to 10 and rerank_k to 3.

- rerank_model: The cross encoder reranking retrieval model to use. Sensible defaults are cross-encoder/ms-marco-TinyBERT-L-2-v2 for speed and colbert-ir/colbertv2.0 for accuracy (antoinelouis/colbert-xm for multilingual). Set this value to flashrank to use the FlashrankReranker.
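The relationship between vector_store_k and rerank_k can be sketched as follows: retrieve a larger candidate set first, then keep only the best few after scoring each candidate against the query. The word-overlap score below is a toy stand-in for a cross encoder, and all names are hypothetical.

```python
from typing import Callable


def rerank(query: str, docs: list[str], score: Callable[[str, str], float], rerank_k: int) -> list[str]:
    """Sort the candidates by descending relevance score and keep the top rerank_k."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:rerank_k]


def overlap_score(query: str, doc: str) -> float:
    """Toy relevance score: the number of words shared by the query and the document."""
    return float(len(set(query.lower().split()) & set(doc.lower().split())))


# Pretend these four candidates came back from retrieval with vector_store_k = 4.
candidates = [
    "milvus stores dense vectors",
    "bm25 is a sparse retrieval method",
    "postgres supports hybrid retrieval with bm25",
    "llms generate text",
]
top_docs = rerank("hybrid retrieval with bm25", candidates, overlap_score, rerank_k=2)
print(top_docs)
```

Setting vector_store_k well above rerank_k gives the reranker enough candidates to choose from.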

- temperature: The chat LLM's temperature. Increase this to create more diverse answers.

- repetition_penalty: The penalty for repeating outputs in the chat answers. Some models are very sensitive to this parameter and need a value bigger than 1.0 (penalty) while others benefit from inverting it (lower than 1.0).

- max_new_tokens: This caps how many tokens the LLM can generate in its answer. More tokens means slower throughput and more memory usage.

- rag_instruction: An instruction message for the LLM to let it know what to do. It should mention that the LLM is performing RAG and that documents will be given as input context to generate the answer from.

- rag_question_initial: The initial question prompt that will be given to the LLM only for the first question a user asks, that is, without chat history.

- rag_question_followup: The prompt used when the user asks a follow-up question. The context is still populated by RAG from the vector store, but if chat history is present, this prompt will be used instead of rag_question_initial.

- rag_fetch_new_instruction: RAG Me Up automatically determines whether or not new documents should be fetched from the vector store or whether the user is asking a follow-up question on the already fetched documents by leveraging the same LLM that is used for chat. This environment variable determines the prompt to use to make this decision. Be very sure to instruct your LLM to answer with yes or no only and make sure your LLM is capable enough to follow this instruction.

- rag_fetch_new_question: The question prompt used in conjunction with rag_fetch_new_instruction to decide if new documents should be fetched or not.
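Because the decision hinges on a yes or no answer, the LLM's reply has to be interpreted defensively. A minimal sketch of such parsing is given below; the function name is hypothetical, and falling back to fetching new documents when the reply is unclear is an assumption, not documented behaviour.

```python
def parse_yes_no(llm_output: str, default: bool = True) -> bool:
    """Interpret the first word of an LLM reply as yes (True) or no (False).

    Anything that is neither falls back to the default value.
    """
    words = llm_output.strip().lower().split()
    first = words[0].strip(".,!:;\"'") if words else ""
    if first == "yes":
        return True
    if first == "no":
        return False
    return default


print(parse_yes_no("Yes."))                      # reply asks for new documents
print(parse_yes_no("no, this is a follow-up"))   # reply says existing documents suffice
```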

- user_rewrite_loop: Set to either True or False to enable the rewriting of the initial query. Note that a rewrite will always occur at most once.

- rewrite_query_instruction: This is the instruction of the prompt that is used to ask the LLM to judge whether a rewrite is necessary or not. Make sure you force the LLM to answer with yes or no only.

- rewrite_query_question: This is the actual query part of the prompt that is used to ask the LLM to judge a rewrite.

- rewrite_query_prompt: If the rewrite loop is on and the LLM judges a rewrite is required, this is the instruction with question asked to the LLM to rewrite the user query into a phrasing more optimized for RAG. Make sure to instruct your model adequately.

- use_re2: Set to either True or False to enable Re2 (Re-reading) which repeats the question, generally improving the quality of the answer generated by the LLM.

- re2_prompt: The prompt used in between the question and the repeated question to signal that we are re-asking.
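Conceptually, Re2 builds the question part of the prompt as the question, then the re2_prompt, then the question again. A minimal sketch is shown below; the function name and the newline separators are assumptions, and the example re2_prompt text is made up.

```python
def build_re2_question(question: str, re2_prompt: str) -> str:
    """Repeat the question, with re2_prompt in between to signal the re-read."""
    return f"{question}\n{re2_prompt}\n{question}"


prompt = build_re2_question("What is BM25?", "Read the question again:")
print(prompt)
```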

- splitter: The Langchain document splitter to use. Supported splitters are RecursiveCharacterTextSplitter and SemanticChunker.

- chunk_size: The chunk size to use when splitting up documents for RecursiveCharacterTextSplitter.

- chunk_overlap: The chunk overlap for RecursiveCharacterTextSplitter.

- breakpoint_threshold_type: Sets the breakpoint threshold type when using the SemanticChunker. Can be one of: percentile, standard_deviation, interquartile, gradient.

- breakpoint_threshold_amount: The amount to use for the threshold type, in float. Set to None to leave default.

- number_of_chunks: The number of chunks to use for the threshold type, in int. Set to None to leave default.
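To show how chunk_size and chunk_overlap interact, here is a deliberately simplified fixed-window chunker. It is not the RecursiveCharacterTextSplitter, which additionally tries to split on separators such as paragraphs and sentences; the function name is hypothetical.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into windows of chunk_size characters that share chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be at least 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


chunks = chunk_text("abcdefghij", chunk_size=4, chunk_overlap=2)
print(chunks)
```

Each chunk repeats the last two characters of the previous one, which is what the overlap buys: text near a chunk boundary appears in both neighbouring chunks.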

While RAG evaluation is difficult and subjective to begin with, frameworks such as Ragas provide metrics that indicate how well your RAG pipeline and its prompts are working, allowing us to benchmark one approach against another quantitatively.

RAG Me Up uses Ragas to evaluate your pipeline. You can run an evaluation based on your .env using python Ragas_eval.py. The following configuration parameters can be set for evaluation:

- ragas_sample_size: The number of document chunks to use in evaluation. These are sampled from your data directory after chunking.

- ragas_qa_pairs: Ragas works with questions and ground truth answers. This parameter sets the number of such pairs to create from the sampled document chunks.

- ragas_question_instruction: The instruction prompt used to generate the questions of the Ragas input pairs.

- ragas_question_query: The query prompt used to generate the questions of the Ragas input pairs.

- ragas_answer_instruction: The instruction prompt used to generate the answers of the Ragas input pairs.

- ragas_answer_query: The query prompt used to generate the answers of the Ragas input pairs.

We are actively looking for funding to democratize AI and advance its applications. Contact us at [email protected] if you want to invest.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for RAGMeUp

Similar Open Source Tools

RAGMeUp

RAG Me Up is a generic framework that enables users to perform Retrieve and Generate (RAG) on their own dataset easily. It consists of a small server and UIs for communication. Best run on GPU with 16GB vRAM. Users can combine RAG with fine-tuning using LLaMa2Lang repository. The tool allows configuration for LLM, data, LLM parameters, prompt, and document splitting. Funding is sought to democratize AI and advance its applications.

RAGMeUp

RAG Me Up is a generic framework that enables users to perform Retrieve, Answer, Generate (RAG) on their own dataset easily. It consists of a small server and UIs for communication. The tool can run on CPU but is optimized for GPUs with at least 16GB of vRAM. Users can combine RAG with fine-tuning using the LLaMa2Lang repository. The tool provides a configurable RAG pipeline without the need for coding, utilizing indexing and inference steps to accurately answer user queries.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

qb

QANTA is a system and dataset for question answering tasks. It provides a script to download datasets, preprocesses questions, and matches them with Wikipedia pages. The system includes various datasets, training, dev, and test data in JSON and SQLite formats. Dependencies include Python 3.6, `click`, and NLTK models. Elastic Search 5.6 is needed for the Guesser component. Configuration is managed through environment variables and YAML files. QANTA supports multiple guesser implementations that can be enabled/disabled. Running QANTA involves using `cli.py` and Luigi pipelines. The system accesses raw Wikipedia dumps for data processing. The QANTA ID numbering scheme categorizes datasets based on events and competitions.

Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

ultimate-rvc

Ultimate RVC is an extension of AiCoverGen, offering new features and improvements for generating audio content using RVC. It is designed for users looking to integrate singing functionality into AI assistants/chatbots/vtubers, create character voices for songs or books, and train voice models. The tool provides easy setup, voice conversion enhancements, TTS functionality, voice model training suite, caching system, UI improvements, and support for custom configurations. It is available for local and Google Colab use, with a PyPI package for easy access. The tool also offers CLI usage and customization through environment variables.

REINVENT4

REINVENT is a molecular design tool for de novo design, scaffold hopping, R-group replacement, linker design, molecule optimization, and other small molecule design tasks. It uses a Reinforcement Learning (RL) algorithm to generate optimized molecules compliant with a user-defined property profile defined as a multi-component score. Transfer Learning (TL) can be used to create or pre-train a model that generates molecules closer to a set of input molecules.

polis

Polis is an AI powered sentiment gathering platform that offers a more organic approach than surveys and requires less effort than focus groups. It provides a comprehensive wiki, main deployment at https://pol.is, discussions, issue tracking, and project board for users. Polis can be set up using Docker infrastructure and offers various commands for building and running containers. Users can test their instance, update the system, and deploy Polis for production. The tool also provides developer conveniences for code reloading, type checking, and database connections. Additionally, Polis supports end-to-end browser testing using Cypress and offers troubleshooting tips for common Docker and npm issues.

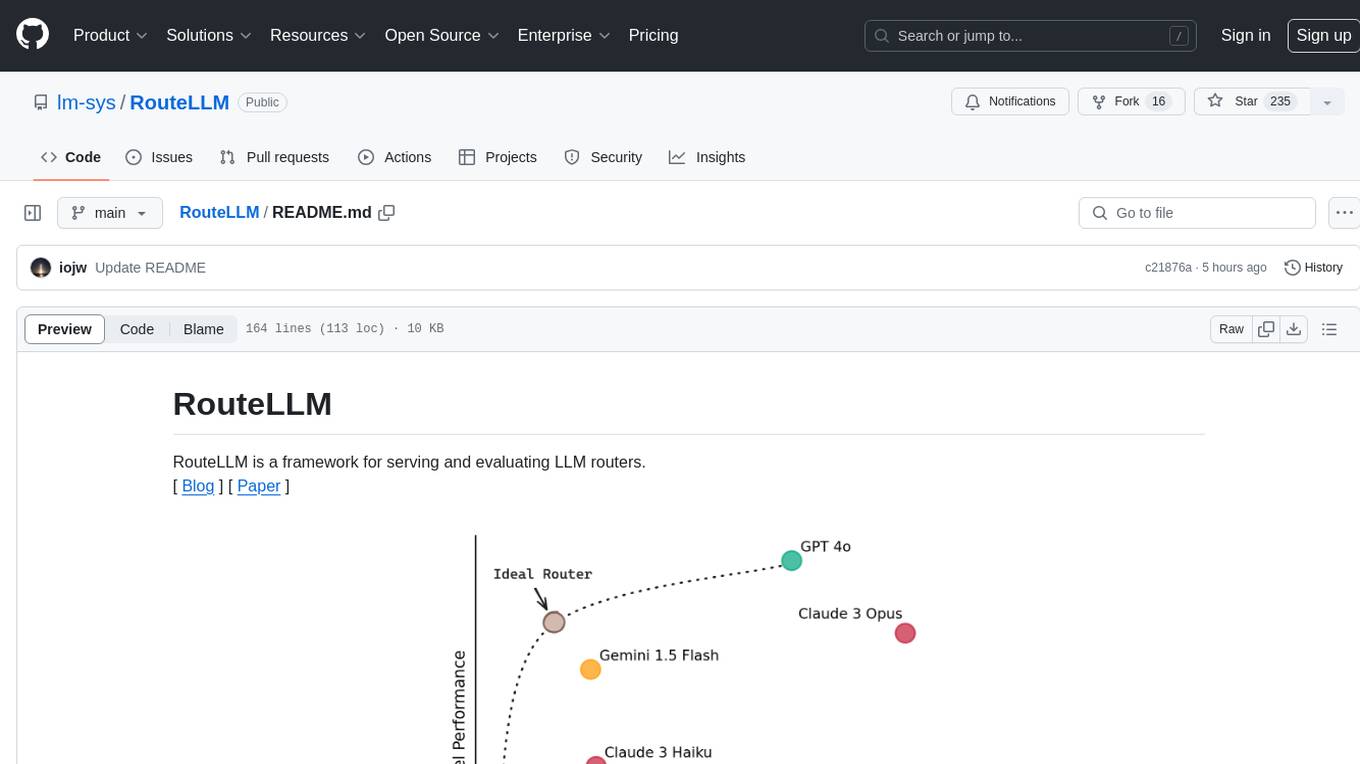

RouteLLM

RouteLLM is a framework for serving and evaluating LLM routers. It allows users to launch an OpenAI-compatible API that routes requests to the best model based on cost thresholds. Trained routers are provided to reduce costs while maintaining performance. Users can easily extend the framework, compare router performance, and calibrate cost thresholds. RouteLLM supports multiple routing strategies and benchmarks, offering a lightweight server and evaluation framework. It enables users to evaluate routers on benchmarks, calibrate thresholds, and modify model pairs. Contributions for adding new routers and benchmarks are welcome.

tutor-gpt

Tutor-GPT is an LLM powered learning companion developed by Plastic Labs. It dynamically reasons about your learning needs and updates its own prompts to best serve you. It is an expansive learning companion that uses theory of mind experiments to provide personalized learning experiences. The project is split into different modules for backend logic, including core logic, discord bot implementation, FastAPI API interface, NextJS web front end, common utilities, and SQL scripts for setting up local supabase. Tutor-GPT is powered by Honcho to build robust user representations and create personalized experiences for each user. Users can run their own instance of the bot by following the provided instructions.

MultiPL-E

MultiPL-E is a system for translating unit test-driven neural code generation benchmarks to new languages. It is part of the BigCode Code Generation LM Harness and allows for evaluating Code LLMs using various benchmarks. The tool supports multiple versions with improvements and new language additions, providing a scalable and polyglot approach to benchmarking neural code generation. Users can access a tutorial for direct usage and explore the dataset of translated prompts on the Hugging Face Hub.

SirChatalot

A Telegram bot that proves you don't need a body to have a personality. It can use various text and image generation APIs to generate responses to user messages. For text generation, the bot can use: * OpenAI's ChatGPT API (or other compatible API). Vision capabilities can be used with GPT-4 models. Function calling can be used with Function calling. * Anthropic's Claude API. Vision capabilities can be used with Claude 3 models. Function calling can be used with tool use. * YandexGPT API Bot can also generate images with: * OpenAI's DALL-E * Stability AI * Yandex ART This bot can also be used to generate responses to voice messages. Bot will convert the voice message to text and will then generate a response. Speech recognition can be done using the OpenAI's Whisper model. To use this feature, you need to install the ffmpeg library. This bot is also support working with files, see Files section for more details. If function calling is enabled, bot can generate images and search the web (limited).

redbox-copilot

Redbox Copilot is a retrieval augmented generation (RAG) app that uses GenAI to chat with and summarise civil service documents. It increases organisational memory by indexing documents and can summarise reports read months ago, supplement them with current work, and produce a first draft that lets civil servants focus on what they do best. The project uses a microservice architecture with each microservice running in its own container defined by a Dockerfile. Dependencies are managed using Python Poetry. Contributions are welcome, and the project is licensed under the MIT License.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

For similar tasks

Forza-Mods-AIO

Forza Mods AIO is a free and open-source tool that enhances the gaming experience in Forza Horizon 4 and 5. It offers a range of time-saving and quality-of-life features, making gameplay more enjoyable and efficient. The tool is designed to streamline various aspects of the game, improving user satisfaction and overall enjoyment.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

MaterialSearch

MaterialSearch is a tool for searching local images and videos using natural language. It provides functionalities such as text search for images, image search for images, text search for videos (providing matching video clips), image search for videos (searching for the segment in a video through a screenshot), image-text similarity calculation, and Pexels video search. The tool can be deployed through the source code or Docker image, and it supports GPU acceleration. Users can configure the tool through environment variables or a .env file. The tool is still under development, and configurations may change frequently. Users can report issues or suggest improvements through issues or pull requests.

tenere

Tenere is a TUI interface for Language Model Libraries (LLMs) written in Rust. It provides syntax highlighting, chat history, saving chats to files, Vim keybindings, copying text from/to clipboard, and supports multiple backends. Users can configure Tenere using a TOML configuration file, set key bindings, and use different LLMs such as ChatGPT, llama.cpp, and ollama. Tenere offers default key bindings for global and prompt modes, with features like starting a new chat, saving chats, scrolling, showing chat history, and quitting the app. Users can interact with the prompt in different modes like Normal, Visual, and Insert, with various key bindings for navigation, editing, and text manipulation.

openkore

OpenKore is a custom client and intelligent automated assistant for Ragnarok Online. It is a free, open source, and cross-platform program (Linux, Windows, and MacOS are supported). To run OpenKore, you need to download and extract it or clone the repository using Git. Configure OpenKore according to the documentation and run openkore.pl to start. The tool provides a FAQ section for troubleshooting, guidelines for reporting issues, and information about botting status on official servers. OpenKore is developed by a global team, and contributions are welcome through pull requests. Various community resources are available for support and communication. Users are advised to comply with the GNU General Public License when using and distributing the software.

QA-Pilot

QA-Pilot is an interactive chat project that leverages online/local LLM for rapid understanding and navigation of GitHub code repository. It allows users to chat with GitHub public repositories using a git clone approach, store chat history, configure settings easily, manage multiple chat sessions, and quickly locate sessions with a search function. The tool integrates with `codegraph` to view Python files and supports various LLM models such as ollama, openai, mistralai, and localai. The project is continuously updated with new features and improvements, such as converting from `flask` to `fastapi`, adding `localai` API support, and upgrading dependencies like `langchain` and `Streamlit` to enhance performance.

extension-gen-ai

The Looker GenAI Extension provides code examples and resources for building a Looker Extension that integrates with Vertex AI Large Language Models (LLMs). Users can leverage the power of LLMs to enhance data exploration and analysis within Looker. The extension offers generative explore functionality to ask natural language questions about data and generative insights on dashboards to analyze data by asking questions. It leverages components like BQML Remote Models, BQML Remote UDF with Vertex AI, and Custom Fine Tune Model for different integration options. Deployment involves setting up infrastructure with Terraform and deploying the Looker Extension by creating a Looker project, copying extension files, configuring BigQuery connection, connecting to Git, and testing the extension. Users can save example prompts and configure user settings for the extension. Development of the Looker Extension environment includes installing dependencies, starting the development server, and building for production.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.