polis

:milky_way: Open Source AI for large scale open ended feedback

Stars: 971

Polis is an AI powered sentiment gathering platform that offers a more organic approach than surveys and requires less effort than focus groups. It provides a comprehensive wiki, main deployment at https://pol.is, discussions, issue tracking, and project board for users. Polis can be set up using Docker infrastructure and offers various commands for building and running containers. Users can test their instance, update the system, and deploy Polis for production. The tool also provides developer conveniences for code reloading, type checking, and database connections. Additionally, Polis supports end-to-end browser testing using Cypress and offers troubleshooting tips for common Docker and npm issues.

README:

Polis is an AI powered sentiment gathering platform. More organic than surveys and less effort than focus groups.

For a detailed methods paper, see Polis: Scaling Deliberation by Mapping High Dimensional Opinion Spaces.

If you're interested in using or contributing to Polis, please see the following:

- 📚 knowledge base: for a comprehensive wiki to help you understand and use the system

- 🌐 main deployment: the main deployment of Polis is at https://pol.is, and is free to use for nonprofits and government

- 💬 discussions: for questions (QA) and discussion

- ✔️ issues: for well-defined technical issues

- 🏗️ project board: somewhat incomplete, but still useful; We stopped around the time that Projects Beta came out, and we have a Projects Beta Board that we'll eventually be migrating to

- ✉️ reach out: if you are applying Polis in a high-impact context, and need more help than you're able to get through the public channels above

If you're trying to set up a Polis deployment or development environment, then please read the rest of this document 👇 ⬇️ 👇

Polis comes with Docker infrastructure for running a complete system, whether for a production deployment or a development environment (details for each can be found in later sections of this document).

As a consequence, the only prerequisite to running Polis is that you install a recent docker (and Docker Desktop if you are on Mac or Windows).

If you aren't able to use Docker for some reason, the various Dockerfiles found in subdirectories (math, server, delphi, *-client) of this repository can be used as a reference for how you'd set up a system manually.

If you're interested in doing the legwork to support alternative infrastructure, please let us know in an issue.

cp example.env .env

make startThat should run docker compose with the development overlay (see below) and default configuration values.

You may encounter an error on mac if AirPlay receiver is enabled, which defaults to port 5000 and collides with Polis API_SERVER_PORT. You can change this in your .env file or disable AirPlay receiver in system settings.

Visit localhost:80/createuser and get started.

Newer versions of docker have docker compose built in as a subcommand.

If you are using an older version (and don't want to upgrade), you'll need to separately install docker-compose, and use that instead in the instructions that follow.

Note however that the newer docker compose command is required to take advantage of Docker Swarm as a scaling option.

Many convenient commands are found in the Makefile. Run make help for a list of available commands.

First clone the repository, then navigate via command line to the root directory and run the following command to build and run the docker containers.

Copy the example.env file and modify as needed (although it should just work as is for development and testing purposes).

cp example.env .envdocker compose --profile postgres --profile local-services up --buildIf you get a permission error, try running this command with sudo.

If this fixes the problem, sudo will be necessary for all other commands as well.

To avoid having to use sudo in the future (on a Linux or Windows machine with WSL), you can follow setup instructions here.

Once you've built the docker images, you can run without --build, which may be faster. Run

docker compose --profile postgres --profile local-services upor simply

make startAny time you want to rebuild the images, just reaffix --build when you run. Another way to

easily rebuild and start your containers is with make start-rebuild.

If you have only changed configuration values in .env, you can recreate your containers without

fully rebuilding them with --force-recreate. For example:

docker compose --profile postgres --profile local-services down

docker compose --profile postgres --profile local-services up --force-recreateTo see what the environment of your containers is going to look like, run:

docker compose --profile postgres --profile local-services convertOmit the --profile postgres flag to use a local or remote database. You will need to set the DATABASE_URL environment variable in your .env file to point to your database.

When using make commands, setting POSTGRES_DOCKER to true or false will determine whether to automatically include --profile postgres when it calls out to docker compose.

The commands in the Makefile can be prefaced with PROD. If so, the "dev overlay" configuration in docker-compose.dev.yml will be ignored.

Ports from services other than the HTTP proxy (80/443) will not be exposed. Containers will not mount local directories, watch for changes,

or rebuild themselves. In theory this should be one way to run Polis in a production environment.

You need a prod.env file:

cp example.env prod.env (and update accordingly).

Then you can run things like:

make PROD start

make PROD start-rebuildIf you want to run the stack without the local MinIO and DynamoDB services (e.g., to test connecting to real AWS services configured in your .env file), simply omit the --profile local-services flag.

Example: Run with the containerized DB but connect to external/real cloud services:

docker compose --profile postgres upExample: Run with an external DB and external/real cloud services (closest to production):

docker compose upYou can now test your setup by visiting http://localhost:80/home.

When running with make start or the development configuration, an OIDC Simulator automatically starts with predefined test users. You can log in immediately using:

-

Email:

[email protected] -

Password:

Te$tP@ssw0rd*

Additional test users are available:

-

[email protected]/Te$tP@ssw0rd* -

[email protected]through[email protected](all with passwordTe$tP@ssw0rd*)

Due to limitations of the OIDC Simulator, you cannot register new admin users in development and test environments.

When you're done working, you can end the process using Ctrl+C, or typing docker compose --profile postgres --profile local-services down

if you are running in "detached mode".

If you want to update the system, you may need to handle the following:

- ⬆️ Run database migrations, if there are new such

- Update docker images by running with

--buildif there have been changes to the Dockerfiles- consider using

--no-cacheif you'd like to rebuild from scratch, but note that this will take much longer

- consider using

While the commands above will get a functional Polis system up and running, additional steps must be taken to properly configure, secure and scale the system. In particular

-

⚙️ Configure the system, esp:

- the domain name you'll be serving from

- enable and add API keys for 3rd party services (e.g. automatic comment translation, spam filtering, etc)

- 🔏 Set up SSL/HTTPS, to keep the site secure

- 📈 Scale for large or many concurrent conversations

We encourage you to take advantage of the public channels above for support setting up a deployment. However, if you are deploying in a high impact context and need help, please reach out to us

Once you've gotten Polis running (as described above), you can enable developer conveniences by running

docker compose -f docker-compose.yml -f docker-compose.dev.yml --profile postgres up(run with --build if this is your first time running, or if you need to rebuild containers)

This enables:

- Live code reloading and static type checking of the server code

- A nREPL connection port open for connecting to the running math process

- Ports open for connecting directly to the database container

- Live code reloading for the client repos (in process)

- etc.

This command takes advantage of the docker-compose.dev.yml overlay file, which layers the developer conveniences describe above into the base system, as described in the docker-compose.yml file.

You can specify these -f docker-compose.yml -f docker-compose.dev.yml arguments for any docker command which you need to take advantage of these features (not just docker compose --profile postgres up).

You can create your own docker-compose.x.yml file as an overlay and add or modify any values you need to differ

from the defaults found in the docker-compose.yml file and pass it as the second argument to the docker compose -f command above.

We use Cypress for automated, end-to-end browser testing for PRs on GitHub (see badge above).

Please see e2e/README.md for more information on running these tests locally.

A lot of issues might be resolved by killing all docker containers and/or restarting docker entirely. If that doesn't work, this will wipe all of your polis containers and volumes (INCLUDING THE DATABASE VOLUME, so don't use this in prod!) and completely rebuild them:

make start-FULL-REBUILD

see also make help for additional commands that might be useful.

Due to past file re-organizations, you may find the following git configuration helpful for looking at history:

git config --local include.path ../.gitconfigIf you would like to run docker compose as a background process, run the up commands with the --detach flag, and use docker compose --profile postgres --profile local-services down to stop.

If your development machine is having trouble handling all of the docker containers, look into using Docker Machine.

Sometimes npm/docker get in a weird state, especially with native libs, and fail to recover gracefully.

You may get a message like Error: Cannot find module .... bcrypt.

If this happens to you, try following the instructions here.

You may find it necessary to install some dependencies, namely nodejs and postgres stuff, in a Rosetta terminal. Create an issue or reach out if you are having strange build issues on Apple computers.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for polis

Similar Open Source Tools

polis

Polis is an AI powered sentiment gathering platform that offers a more organic approach than surveys and requires less effort than focus groups. It provides a comprehensive wiki, main deployment at https://pol.is, discussions, issue tracking, and project board for users. Polis can be set up using Docker infrastructure and offers various commands for building and running containers. Users can test their instance, update the system, and deploy Polis for production. The tool also provides developer conveniences for code reloading, type checking, and database connections. Additionally, Polis supports end-to-end browser testing using Cypress and offers troubleshooting tips for common Docker and npm issues.

openui

OpenUI is a tool designed to simplify the process of building UI components by allowing users to describe UI using their imagination and see it rendered live. It supports converting HTML to React, Svelte, Web Components, etc. The tool is open source and aims to make UI development fun, fast, and flexible. It integrates with various AI services like OpenAI, Groq, Gemini, Anthropic, Cohere, and Mistral, providing users with the flexibility to use different models. OpenUI also supports LiteLLM for connecting to various LLM services and allows users to create custom proxy configs. The tool can be run locally using Docker or Python, and it offers a development environment for quick setup and testing.

aiarena-web

aiarena-web is a website designed for running the aiarena.net infrastructure. It consists of different modules such as core functionality, web API endpoints, frontend templates, and a module for linking users to their Patreon accounts. The website serves as a platform for obtaining new matches, reporting results, featuring match replays, and connecting with Patreon supporters. The project is licensed under GPLv3 in 2019.

seer

Seer is a service that provides AI capabilities to Sentry by running inference on Sentry issues and providing user insights. It is currently in early development and not yet compatible with self-hosted Sentry instances. The tool requires access to internal Sentry resources and is intended for internal Sentry employees. Users can set up the environment, download model artifacts, integrate with local Sentry, run evaluations for Autofix AI agent, and deploy to a sandbox staging environment. Development commands include applying database migrations, creating new migrations, running tests, and more. The tool also supports VCRs for recording and replaying HTTP requests.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

concierge

Concierge is a versatile automation tool designed to streamline repetitive tasks and workflows. It provides a user-friendly interface for creating custom automation scripts without the need for extensive coding knowledge. With Concierge, users can automate various tasks across different platforms and applications, increasing efficiency and productivity. The tool offers a wide range of pre-built automation templates and allows users to customize and schedule their automation processes. Concierge is suitable for individuals and businesses looking to automate routine tasks and improve overall workflow efficiency.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

AI-Horde-Worker

AI-Horde-Worker is a repository containing the original reference implementation for a worker that turns your graphics card(s) into a worker for the AI Horde. It allows users to generate or alchemize images for others. The repository provides instructions for setting up the worker on Windows and Linux, updating the worker code, running with multiple GPUs, and stopping the worker. Users can configure the worker using a WebUI to connect to the horde with their username and API key. The repository also includes information on model usage and running the Docker container with specified environment variables.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

ultravox

Ultravox is a fast multimodal Language Model (LLM) that can understand both text and human speech in real-time without the need for a separate Audio Speech Recognition (ASR) stage. By extending Meta's Llama 3 model with a multimodal projector, Ultravox converts audio directly into a high-dimensional space used by Llama 3, enabling quick responses and potential understanding of paralinguistic cues like timing and emotion in human speech. The current version (v0.3) has impressive speed metrics and aims for further enhancements. Ultravox currently converts audio to streaming text and plans to emit speech tokens for direct audio conversion. The tool is open for collaboration to enhance this functionality.

airbyte_serverless

AirbyteServerless is a lightweight tool designed to simplify the management of Airbyte connectors. It offers a serverless mode for running connectors, allowing users to easily move data from any source to their data warehouse. Unlike the full Airbyte-Open-Source-Platform, AirbyteServerless focuses solely on the Extract-Load process without a UI, database, or transform layer. It provides a CLI tool, 'abs', for managing connectors, creating connections, running jobs, selecting specific data streams, handling secrets securely, and scheduling remote runs. The tool is scalable, allowing independent deployment of multiple connectors. It aims to streamline the connector management process and provide a more agile alternative to the comprehensive Airbyte platform.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

dockershrink

Dockershrink is an AI-powered Commandline Tool designed to help reduce the size of Docker images. It combines traditional Rule-based analysis with Generative AI techniques to optimize Image configurations. The tool supports NodeJS applications and aims to save costs on storage, data transfer, and build times while increasing developer productivity. By automatically applying advanced optimization techniques, Dockershrink simplifies the process for engineers and organizations, resulting in significant savings and efficiency improvements.

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

For similar tasks

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

PIXIU

PIXIU is a project designed to support the development, fine-tuning, and evaluation of Large Language Models (LLMs) in the financial domain. It includes components like FinBen, a Financial Language Understanding and Prediction Evaluation Benchmark, FIT, a Financial Instruction Dataset, and FinMA, a Financial Large Language Model. The project provides open resources, multi-task and multi-modal financial data, and diverse financial tasks for training and evaluation. It aims to encourage open research and transparency in the financial NLP field.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes a HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in more than 200+ languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMO, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more not only to Python and R, but also to JVM ecosystem (Java, Scala, and Kotlin) at scale by extending Apache Spark natively.

scikit-llm

Scikit-LLM is a tool that seamlessly integrates powerful language models like ChatGPT into scikit-learn for enhanced text analysis tasks. It allows users to leverage large language models for various text analysis applications within the familiar scikit-learn framework. The tool simplifies the process of incorporating advanced language processing capabilities into machine learning pipelines, enabling users to benefit from the latest advancements in natural language processing.

For similar jobs

mage

XMage is an open-source, cross-platform application that allows users to play the collectible card game Magic: The Gathering online against other players or computer opponents. It supports over 25,000 unique cards and more than 65,000 reprints from different editions, including custom sets like Star Wars. XMage supports single matches and tournaments with dozens of game modes, including duel, multiplayer, standard, modern, commander, pauper, oathbreaker, historic, freeform, and richman. It also features a deck editor, a player rating system, and support for special formats like Commander, Oathbreaker, Cube, Tiny Leaders, Super Standard, and Historic Standard.

Forza-Mods-AIO

Forza Mods AIO is a free and open-source tool that enhances the gaming experience in Forza Horizon 4 and 5. It offers a range of time-saving and quality-of-life features, making gameplay more enjoyable and efficient. The tool is designed to streamline various aspects of the game, improving user satisfaction and overall enjoyment.

eos-airdrops

This repository contains a list of EOS airdrops. Airdrops are a way for projects to distribute tokens to their community. They can be used to reward early adopters, promote the project, or raise funds. This repository includes airdrops for a variety of projects, including both new and established projects.

Discord-AI-Chatbot

Discord AI Chatbot is a versatile tool that seamlessly integrates into your Discord server, offering a wide range of capabilities to enhance your communication and engagement. With its advanced language model, the bot excels at imaginative generation, providing endless possibilities for creative expression. Additionally, it offers secure credential management, ensuring the privacy of your data. The bot's hybrid command system combines the best of slash and normal commands, providing flexibility and ease of use. It also features mention recognition, ensuring prompt responses whenever you mention it or use its name. The bot's message handling capabilities prevent confusion by recognizing when you're replying to others. You can customize the bot's behavior by selecting from a range of pre-existing personalities or creating your own. The bot's web access feature unlocks a new level of convenience, allowing you to interact with it from anywhere. With its open-source nature, you have the freedom to modify and adapt the bot to your specific needs.

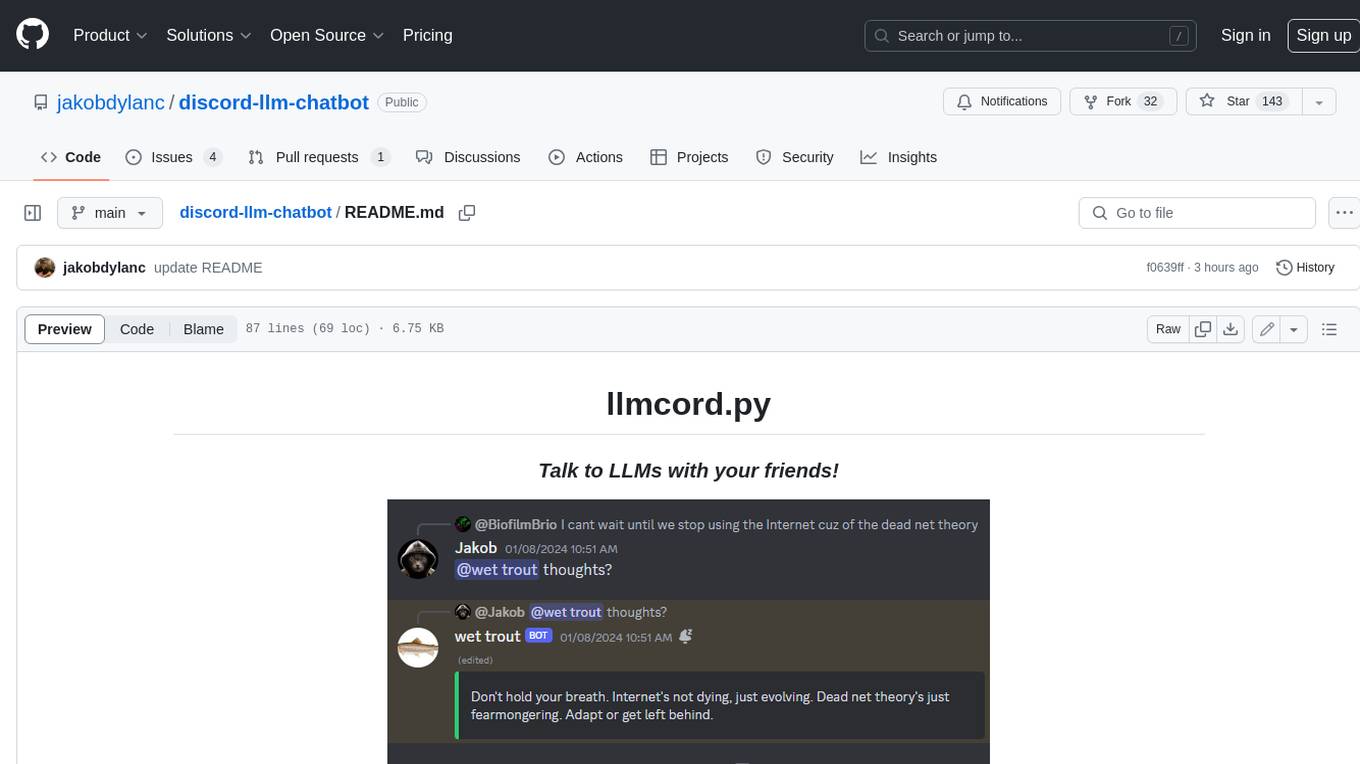

discord-llm-chatbot

llmcord.py enables collaborative LLM prompting in your Discord server. It works with practically any LLM, remote or locally hosted. ### Features ### Reply-based chat system Just @ the bot to start a conversation and reply to continue. Build conversations with reply chains! You can do things like: - Build conversations together with your friends - "Rewind" a conversation simply by replying to an older message - @ the bot while replying to any message in your server to ask a question about it Additionally: - Back-to-back messages from the same user are automatically chained together. Just reply to the latest one and the bot will see all of them. - You can seamlessly move any conversation into a thread. Just create a thread from any message and @ the bot inside to continue. ### Choose any LLM Supports remote models from OpenAI API, Mistral API, Anthropic API and many more thanks to LiteLLM. Or run a local model with ollama, oobabooga, Jan, LM Studio or any other OpenAI compatible API server. ### And more: - Supports image attachments when using a vision model - Customizable system prompt - DM for private access (no @ required) - User identity aware (OpenAI API only) - Streamed responses (turns green when complete, automatically splits into separate messages when too long, throttled to prevent Discord ratelimiting) - Displays helpful user warnings when appropriate (like "Only using last 20 messages", "Max 5 images per message", etc.) - Caches message data in a size-managed (no memory leaks) and per-message mutex-protected (no race conditions) global dictionary to maximize efficiency and minimize Discord API calls - Fully asynchronous - 1 Python file, ~200 lines of code

discourse-ai

Discourse AI is a plugin for the Discourse forum software that uses artificial intelligence to improve the user experience. It can automatically generate content, moderate posts, and answer questions. This can free up moderators and administrators to focus on other tasks, and it can help to create a more engaging and informative community.

MukeshRobot

MukeshRobot is a Telegram group controller bot written in Python. It is designed to help group administrators manage their groups more effectively. The bot can perform a variety of tasks, including: - Welcoming new members - Banning spammers - Deleting inappropriate messages - Managing group settings - Sending announcements - Playing games MukeshRobot is easy to set up and use. Simply add the bot to your group and give it administrator privileges. The bot will then automatically start performing its tasks. You can also customize the bot's behavior by editing the config file. MukeshRobot is a powerful tool that can help you keep your Telegram groups clean and organized. It is a must-have for any group administrator.

galxe-aio

Galxe AIO is a versatile tool designed to automate various tasks on social media platforms like Twitter, email, and Discord. It supports tasks such as following, retweeting, liking, and quoting on Twitter, as well as solving quizzes, submitting surveys, and more. Users can link their Twitter accounts, email accounts (IMAP or mail3.me), and Discord accounts to the tool to streamline their activities. Additionally, the tool offers features like claiming rewards, quiz solving, submitting surveys, and managing referral links and account statistics. It also supports different types of rewards like points, mystery boxes, gas-less OATs, gas OATs and NFTs, and participation in raffles. The tool provides settings for managing EVM wallets, proxies, twitters, emails, and discords, along with custom configurations in the `config.toml` file. Users can run the tool using Python 3.11 and install dependencies using `pip` and `playwright`. The tool generates results and logs in specific folders and allows users to donate using TRC-20 or ERC-20 tokens.