AIOStreams

One addon to rule them all. AIOStreams consolidates multiple Stremio addons and debrid services into a single, highly customisable super-addon.

Stars: 1483

AIOStreams is a versatile tool that combines streams from various addons into one platform, offering extensive customization options. Users can change result formats, filter results by various criteria, remove duplicates, prioritize services, sort results, specify size limits, and more. The tool scrapes results from selected addons, applies user configurations, and presents the results in a unified manner. It simplifies the process of finding and accessing desired content from multiple sources, enhancing user experience and efficiency.

README:


AIOStreams consolidates multiple Stremio addons and debrid services - including its own suite of built-in addons - into a single, highly customisable super-addon.

AIOStreams was created to give users ultimate control over their Stremio experience. Instead of juggling multiple addons with different configurations and limitations, AIOStreams acts as a central hub. It fetches results from all your favorite sources, then filters, sorts, and formats them according to your rules before presenting them in a single, clean list.
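The fetch, filter, sort, and format flow described above can be pictured with a minimal Python sketch. Everything in it is hypothetical and for illustration only: the `Stream` fields, the `aggregate` function, and the sample data are assumptions, not AIOStreams' actual code or API.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Stream:
    """Hypothetical, simplified stream record (not AIOStreams' real schema)."""
    addon: str
    filename: str
    resolution: str
    size_gb: float


def aggregate(
    sources: Iterable[Iterable[Stream]],
    keep: Callable[[Stream], bool],
    sort_key: Callable[[Stream], tuple],
    fmt: Callable[[Stream], str],
) -> list[str]:
    """Merge results from every source, then filter, sort, and format them."""
    merged = [s for source in sources for s in source]
    kept = [s for s in merged if keep(s)]
    return [fmt(s) for s in sorted(kept, key=sort_key)]


# Illustrative results from two made-up addons.
addon_a = [Stream("addon-a", "Movie.2160p.mkv", "2160p", 40.0),
           Stream("addon-a", "Movie.720p.mkv", "720p", 2.0)]
addon_b = [Stream("addon-b", "Movie.1080p.mkv", "1080p", 8.0)]

RES_ORDER = {"2160p": 0, "1080p": 1, "720p": 2}

lines = aggregate(
    sources=[addon_a, addon_b],
    keep=lambda s: s.size_gb <= 20,                 # example rule: size limit
    sort_key=lambda s: (RES_ORDER[s.resolution],),  # example rule: best resolution first
    fmt=lambda s: f"[{s.addon}] {s.resolution} {s.size_gb:.1f} GB",
)
print("\n".join(lines))
```

The point of the sketch is that the rules are applied once, after merging, rather than separately inside each addon.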

Whether you're a casual user who wants a simple, unified stream list or a power user who wants to fine-tune every aspect of your results, AIOStreams has you covered.

AIOStreams also includes a powerful suite of built-in addons that can search various torrent and Usenet indexers. With support for NzbDAV and AltMount, you can stream Usenet results directly from your Usenet provider via NNTP - no debrid service required.

- Unified Results: Aggregate streams from multiple addons into one consistently sorted and formatted list.

- Simplified Addon Management: AIOStreams features a built-in addon marketplace. Many addons require you to install them multiple times to support different debrid services. AIOStreams handles this automatically. Just enable an addon from the marketplace, and AIOStreams dynamically applies your debrid keys, so you only have to configure it once.

- Automatic Updates: Because addon manifests are generated dynamically, you get the latest updates and fixes without ever needing to reconfigure or reinstall.

- Custom Addon Support: Add any Stremio addon by providing its configured URL. If it works in Stremio, it works here.

- Full Stremio Support: AIOStreams doesn't just manage streams; it supports all Stremio resources, including catalogs, metadata, and even addon catalogs.
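Stremio addons are served over HTTP, and resources such as streams are conventionally requested at paths relative to the addon's manifest URL. As a rough sketch of what "providing its configured URL" gives an aggregator to work with, the hypothetical helper below derives a stream request URL from a manifest URL. The host and configuration segment are placeholders, and `stream_url` is an illustrative name, not part of AIOStreams.

```python
def stream_url(manifest_url: str, content_type: str, content_id: str) -> str:
    """Build a stream request URL from a configured addon manifest URL."""
    suffix = "/manifest.json"
    if not manifest_url.endswith(suffix):
        raise ValueError("expected a URL ending in /manifest.json")
    base = manifest_url[: -len(suffix)]  # keep any configuration path segment
    return f"{base}/stream/{content_type}/{content_id}.json"


# Placeholder addon URL; a real one would come from the addon's configure page.
url = stream_url(
    "https://addon.example/some-config/manifest.json", "movie", "tt0111161"
)
print(url)
```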

Think of "built-in addons" as independent addons bundled exclusively with AIOStreams. When you host AIOStreams, you're also hosting these addons, ready to be added to your configuration just like any other community addon (e.g., Comet, Torrentio).

AIOStreams includes over 10 built-in addons that search various sources and deliver results for you to stream.

Note: Built-in addons that search for torrents require a debrid service and do not yet support P2P streaming. Usenet results can be streamed directly from your Usenet provider via NzbDAV or AltMount, or through TorBox (Pro plan required). All built-in addons come with anime support and support Kitsu/MAL catalogs.

The suite of built-in addons includes:

- Stremio GDrive: Connect your Google Drive to Stremio.

- TorBox Search: An alternative to the official TorBox addon with more customisability and support for more debrid services.

- Knaben: Scrapes Knaben, an indexer proxy for several popular torrent sites including The Pirate Bay, 1337x, and Nyaa.si.

- Zilean: Scrapes an instance of Zilean, a DMM hashlist scraper.

- AnimeTosho: Searches AnimeTosho, which mirrors most anime from Nyaa.si and TokyoTosho.

- Torrent Galaxy: Searches Torrent Galaxy for results.

- Bitmagnet: Scrape your self-hosted Bitmagnet instance - a BitTorrent indexer and DHT crawler. Set `BUILTIN_BITMAGNET_URL` for the addon to appear.

- Jackett: Connect and scrape your Jackett instance by providing its URL and API key.

- Prowlarr: Connect and scrape your Prowlarr instance by providing its URL and API key.

- NZBHydra: Stream results from your Usenet indexers by connecting your NZBHydra instance.

- Newznab: Directly configure and scrape your Usenet indexers for results using a Newznab API.

- Torznab: Configure any Torznab API to scrape torrent results, allowing individual indexers from Jackett to be added separately.

Because all addons are routed through AIOStreams, you only have to configure your filters and sorting rules once. This powerful, centralized engine offers far more options and flexibility than any individual addon.

- Granular Filtering: Define `include` (prevents filtering), `required`, or `excluded` rules for a huge range of properties:

  - Video/Audio: Resolution, quality, encodes, visual tags (`HDR`, `DV`), audio tags (`Atmos`), and channels.

  - Source: Stream type (`Debrid`, `Usenet`, `P2P`), language, seeder ranges, and cached/uncached status (can be applied to specific addons/services).

- Preferred Lists: Manually define and order a list of preferred properties to prioritize certain results, for example, always showing `HDR` streams first.

- Keyword & Regex Filtering: Filter by simple keywords or complex regex patterns matched against filenames, indexers and release groups for ultimate precision.

- Accurate Title Matching: Leverages the TMDB API to precisely match titles, years, and season/episode numbers, ensuring you always get the right content. This can be granularly applied to specific addons or content types.

- Powerful Conditional Engine: Create dynamic rules with a simple yet powerful expression language.

  - Example: Only exclude 720p streams if more than five 1080p streams are available: `count(resolution(streams, '1080p')) > 5 ? resolution(streams, '720p') : false`.

  - Check the wiki for a full function reference.

- Customisable Deduplication: Choose how duplicate streams are detected: by filename, infohash, or a unique "smart detect" hash generated from certain file attributes.

- Sophisticated Sorting:

  - Build your perfect sort order using any combination of criteria.

  - Define separate sorting logic for movies, series, anime, and even for cached vs. uncached results.

  - The sorting system automatically uses the rankings from your "Preferred Lists".
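The rules in this list can be sketched in plain Python. This is an illustration under stated assumptions, not the AIOStreams engine: the dictionary fields and the helper names `resolution`, `conditional_exclude`, `dedupe_by_infohash`, and `sort_by_preferred` are hypothetical, the order in which the steps run is an assumption, and the real expression language is documented in the wiki. The sketch mirrors the conditional example above (drop 720p only when more than five 1080p streams exist), removes duplicates by infohash, and orders results by a preferred-resolution list.

```python
def resolution(streams, value):
    """Return the streams whose resolution equals `value`."""
    return [s for s in streams if s["resolution"] == value]


def conditional_exclude(streams):
    """Exclude 720p streams only if more than five 1080p streams exist."""
    if len(resolution(streams, "1080p")) > 5:
        to_drop = resolution(streams, "720p")
        return [s for s in streams if s not in to_drop]
    return list(streams)


def dedupe_by_infohash(streams):
    """Keep the first stream seen for each infohash."""
    seen, unique = set(), []
    for s in streams:
        if s["infohash"] not in seen:
            seen.add(s["infohash"])
            unique.append(s)
    return unique


def sort_by_preferred(streams, preferred):
    """Order streams by position in `preferred`; unlisted values go last."""
    rank = {value: i for i, value in enumerate(preferred)}
    return sorted(streams, key=lambda s: rank.get(s["resolution"], len(preferred)))


# Seven 1080p streams (one repeats an infohash), one 720p, and one 2160p stream.
streams = [{"resolution": "1080p", "infohash": f"hash{i}"} for i in range(6)]
streams.append({"resolution": "1080p", "infohash": "hash0"})  # duplicate
streams.append({"resolution": "720p", "infohash": "hash720"})
streams.append({"resolution": "2160p", "infohash": "hash2160"})

result = sort_by_preferred(
    dedupe_by_infohash(conditional_exclude(streams)),
    preferred=["2160p", "1080p", "720p"],
)
print([s["resolution"] for s in result])
```

Because Python's `sorted` is stable, streams with the same preferred rank keep their original relative order.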

Take control of your Stremio home page. AIOStreams lets you manage catalogs from all your addons in one place.

- Rename: Rename both the name and the type of the catalog to whatever you want (e.g. changing Cinemeta's `Popular - Movies` to `Popular - 📺`).

- Reorder & Disable: Arrange catalogs in your preferred order or hide the ones you don't use.

- Shuffle Catalogs: Discover new content by shuffling the results of any catalog. You can even persist the shuffle for a set period.

- Enhanced Posters: Automatically apply high-quality posters from RPDB to catalogs that provide a supported metadata source, even if the original addon doesn't support it.
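One way to picture a shuffle that persists for a set period is to seed the random generator with the catalog ID and the current time window, so the order stays stable until the window rolls over. This is a minimal sketch of that idea, not a description of how AIOStreams implements it; `persistent_shuffle` and its parameters are hypothetical.

```python
import random


def persistent_shuffle(items, catalog_id, now_seconds, persist_seconds):
    """Shuffle `items` deterministically within each time window."""
    window = int(now_seconds // persist_seconds)
    rng = random.Random(f"{catalog_id}:{window}")  # same seed gives same order
    shuffled = list(items)
    rng.shuffle(shuffled)
    return shuffled


catalog = ["Movie A", "Movie B", "Movie C", "Movie D", "Movie E"]
hour = 3600

first = persistent_shuffle(catalog, "popular-movies", now_seconds=10, persist_seconds=hour)
again = persistent_shuffle(catalog, "popular-movies", now_seconds=3599, persist_seconds=hour)
print(first == again)  # both calls fall in the same one-hour window
```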

- Custom Stream Formatting: Design exactly how stream information is displayed using a powerful templating system.

- Live Preview: See your custom format changes in real-time as you build them.

- Predefined Formats: Get started quickly with built-in formats, some created by me and others inspired by other popular addons like Torrentio and the TorBox Stremio Addon.

- Custom Formatter Wiki: Dive deep into the documentation to create your perfect stream title.
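As a rough illustration of what a templating system does, the sketch below fills named placeholders from a stream's properties. The `{name}` placeholder syntax, the field names, and `render` are assumptions made for this example; the actual template syntax is described in the Custom Formatter Wiki.

```python
class _Missing(dict):
    """Render unknown placeholders as an empty string instead of raising."""

    def __missing__(self, key):
        return ""


def render(template, stream):
    """Substitute {placeholders} in `template` with values from `stream`."""
    return template.format_map(_Missing(stream))


stream = {"resolution": "1080p", "quality": "BluRay", "size": "8.4 GB"}

# `language` is not set on this stream, so it renders as an empty string.
title = render("{resolution} {quality} | {size} {language}", stream).strip()
print(title)
```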


- Proxy Integration: Seamlessly proxy streams through AIOStreams' proxy or an external proxy like MediaFlow Proxy or StremThru.

- Bypass IP Restrictions: Essential for services that limit simultaneous connections from different IP addresses.

And much much more...

Setting up AIOStreams is simple.

- Choose a Hosting Method

  - 🔓 Public Instance: Use the Community Instance (Hosted by ElfHosted). It's free, but rate-limited and has Torrentio disabled.

  - 🛠️ Self-Host / Paid Hosting: For full control and no rate limits, host it yourself (Docker) or use a paid service like ElfHosted (using this link supports the project!).

- Configure Your Addon

  - Open the `/stremio/configure` page of your AIOStreams instance in a web browser.

  - Enable the addons you use, add your debrid API keys, and set up your filtering, sorting, and formatting rules.

- Install

  - Click the "Install" button. This will open your Stremio addon compatible app and add your newly configured AIOStreams addon.

For detailed instructions, check out the Wiki.

AIOStreams is a passion project developed and maintained for free. If you find it useful, please consider supporting its development.

- ⭐ Star the Repository on GitHub.

- ⭐ Star the Addon in the Stremio Community Catalog.

- 🤝 Contribute: Report issues, suggest features, or submit pull requests.

- ☕ Donate:

AIOStreams is a tool for aggregating and managing data from other Stremio addons. It does not host, store, or distribute any content. The developer does not endorse or promote access to copyrighted content. Users are solely responsible for complying with all applicable laws and the terms of service for any addons or services they use with AIOStreams.

This project wouldn't be possible without the foundational work of many others in the community, especially those who develop the addons that AIOStreams integrates. Special thanks to the developers of all the integrated addons, the creators of mhdzumair/mediaflow-proxy and MunifTanjim/stremthru, and the open-source projects that inspired parts of AIOStreams' design:

- UI Components and issue templates adapted with permission from 5rahim/seanime (which any anime enthusiast should definitely check out!)

- NzbDAV & AltMount integration inspired by Sanket9225/UsenetStreamer

- sleeyax/stremio-easynews-addon for the project's initial structure

- Custom formatter system inspired by and adapted from diced/zipline.

- Condition engine powered by silentmatt/expr-eval

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AIOStreams

Similar Open Source Tools

AIOStreams

AIOStreams is a versatile tool that combines streams from various addons into one platform, offering extensive customization options. Users can change result formats, filter results by various criteria, remove duplicates, prioritize services, sort results, specify size limits, and more. The tool scrapes results from selected addons, applies user configurations, and presents the results in a unified manner. It simplifies the process of finding and accessing desired content from multiple sources, enhancing user experience and efficiency.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

WritingTools

Writing Tools is an Apple Intelligence-inspired application for Windows, Linux, and macOS that supercharges your writing with an AI LLM. It allows users to instantly proofread, optimize text, and summarize content from webpages, YouTube videos, documents, etc. The tool is privacy-focused, open-source, and supports multiple languages. It offers powerful features like grammar correction, content summarization, and LLM chat mode, making it a versatile writing assistant for various tasks.

easydiffusion

Easy Diffusion 3.0 is a user-friendly tool for installing and using Stable Diffusion on your computer. It offers hassle-free installation, clutter-free UI, task queue, intelligent model detection, live preview, image modifiers, multiple prompts file, saving generated images, UI themes, searchable models dropdown, and supports various image generation tasks like 'Text to Image', 'Image to Image', and 'InPainting'. The tool also provides advanced features such as custom models, merge models, custom VAE models, multi-GPU support, auto-updater, developer console, and more. It is designed for both new users and advanced users looking for powerful AI image generation capabilities.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

Director

Director is a framework to build video agents that can reason through complex video tasks like search, editing, compilation, generation, etc. It enables users to summarize videos, search for specific moments, create clips instantly, integrate GenAI projects and APIs, add overlays, generate thumbnails, and more. Built on VideoDB's 'video-as-data' infrastructure, Director is perfect for developers, creators, and teams looking to simplify media workflows and unlock new possibilities.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

chatnio

Chat Nio is a next-generation AI one-stop solution that provides a rich and user-friendly interface for interacting with various AI models. It offers features such as AI chat conversation, rich format compatibility, markdown support, message menu support, multi-platform adaptation, dialogue memory, full-model file parsing, full-model DuckDuckGo online search, full-screen large text editing, model marketplace, preset support, site announcements, preference settings, internationalization support, and a rich admin system. Chat Nio also boasts a powerful channel management system that utilizes a self-developed channel distribution algorithm, supports multi-channel management, is compatible with multiple formats, allows for custom models, supports channel retries, enables balanced load within the same channel, and provides channel model mapping and user grouping. Additionally, Chat Nio offers forwarding API services that are compatible with multiple formats in the OpenAI universal format and support multiple model compatible layers. It also provides a custom build and install option for highly customizable deployments. Chat Nio is an open-source project licensed under the Apache License 2.0 and welcomes contributions from the community.

AudioMuse-AI

AudioMuse-AI is a deep learning-based tool for audio analysis and music generation. It provides a user-friendly interface for processing audio data and generating music compositions. The tool utilizes state-of-the-art machine learning algorithms to analyze audio signals and extract meaningful features for music generation. With AudioMuse-AI, users can explore the possibilities of AI in music creation and experiment with different styles and genres. Whether you are a music enthusiast, a researcher, or a developer, AudioMuse-AI offers a versatile platform for audio analysis and music generation.

QOwnNotes

QOwnNotes is an open source notepad with Markdown support and todo list manager for GNU/Linux, macOS, and Windows. It allows you to write down thoughts, edit, and search for them later from mobile devices. Notes are stored as plain text markdown files and synced with Nextcloud's/ownCloud's file sync functionality. QOwnNotes offers features like multiple note folders, restoration of older versions and trashed notes, sub-string searching, customizable keyboard shortcuts, markdown highlighting, spellchecking, tabbing support, scripting support, encryption of notes, dark mode theme support, and more. It supports hierarchical note tagging, note subfolders, sharing notes on Nextcloud/ownCloud server, portable mode, Vim mode, distraction-free mode, full-screen mode, typewriter mode, Evernote and Joplin import, and is available in over 60 languages.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

crawlee

Crawlee is a web scraping and browser automation library that helps you build reliable scrapers quickly. Your crawlers will appear human-like and fly under the radar of modern bot protections even with the default configuration. Crawlee gives you the tools to crawl the web for links, scrape data, and store it to disk or cloud while staying configurable to suit your project's needs.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

For similar tasks

AIOStreams

AIOStreams is a versatile tool that combines streams from various addons into one platform, offering extensive customization options. Users can change result formats, filter results by various criteria, remove duplicates, prioritize services, sort results, specify size limits, and more. The tool scrapes results from selected addons, applies user configurations, and presents the results in a unified manner. It simplifies the process of finding and accessing desired content from multiple sources, enhancing user experience and efficiency.

aiostream

aiostream provides a collection of stream operators for creating asynchronous pipelines of operations. It offers features like operator pipe-lining, repeatability, safe iteration context, simplified execution, slicing and indexing, and concatenation. The stream operators are categorized into creation, transformation, selection, combination, aggregation, advanced, timing, and miscellaneous. Users can combine these operators to perform various asynchronous tasks efficiently.

perplexity-mcp

Perplexity-mcp is a Model Context Protocol (MCP) server that provides web search functionality using Perplexity AI's API. It works with the Anthropic Claude desktop client. The server allows users to search the web with specific queries and filter results by recency. It implements the perplexity_search_web tool, which takes a query as a required argument and can filter results by day, week, month, or year. Users need to set up environment variables, including the PERPLEXITY_API_KEY, to use the server. The tool can be installed via Smithery and requires UV for installation. It offers various models for different contexts and can be added as an MCP server in Cursor or Claude Desktop configurations.

For similar jobs

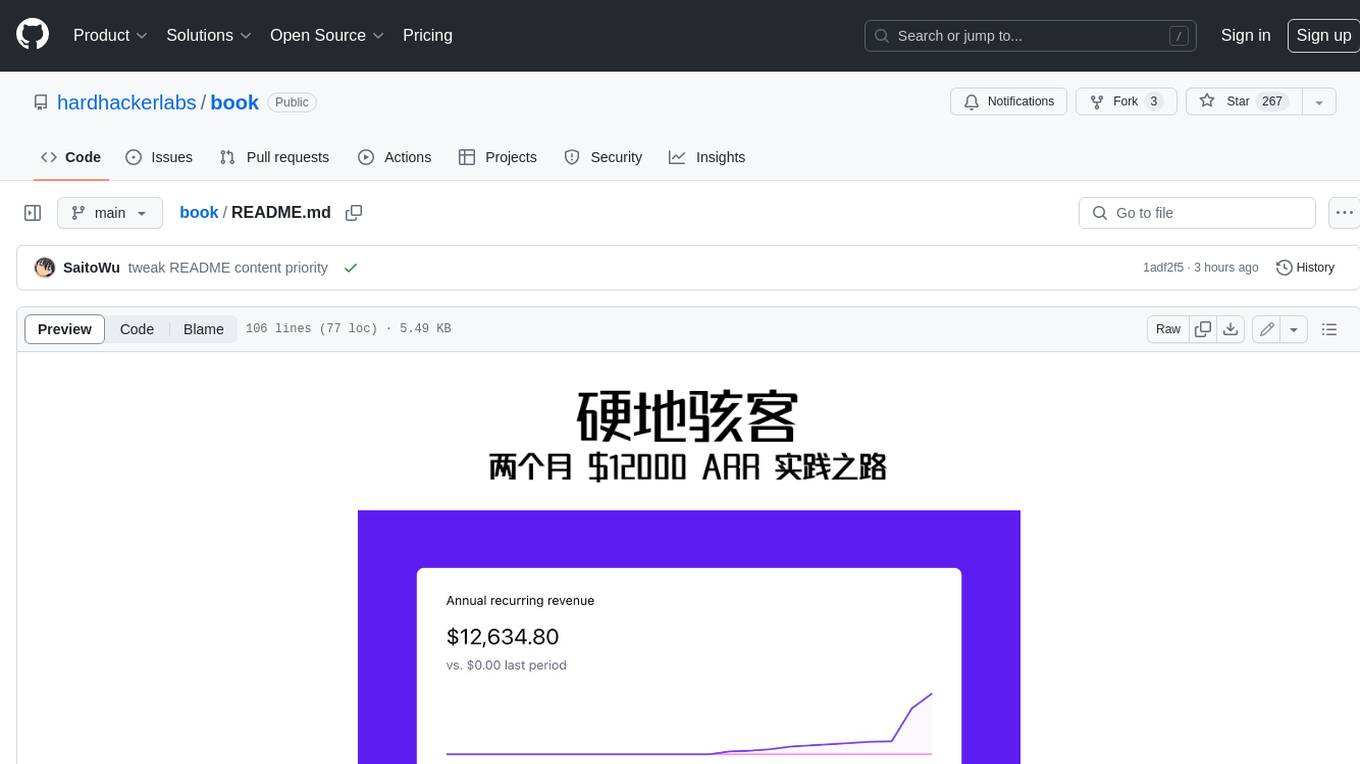

book

Podwise is an AI knowledge management app designed specifically for podcast listeners. With the Podwise platform, you only need to follow your favorite podcasts, such as "Hardcore Hackers". When a program is released, Podwise will use AI to transcribe, extract, summarize, and analyze the podcast content, helping you to break down the hard-core podcast knowledge. At the same time, it is connected to platforms such as Notion, Obsidian, Logseq, and Readwise, embedded in your knowledge management workflow, and integrated with content from other channels including news, newsletters, and blogs, helping you to improve your second brain 🧠.

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

Scrapegraph-ai

ScrapeGraphAI is a Python library that uses Large Language Models (LLMs) and direct graph logic to create web scraping pipelines for websites, documents, and XML files. It allows users to extract specific information from web pages by providing a prompt describing the desired data. ScrapeGraphAI supports various LLMs, including Ollama, OpenAI, Gemini, and Docker, enabling users to choose the most suitable model for their needs. The library provides a user-friendly interface through its `SmartScraper` class, which simplifies the process of building and executing scraping pipelines. ScrapeGraphAI is open-source and available on GitHub, with extensive documentation and examples to guide users. It is particularly useful for researchers and data scientists who need to extract structured data from web pages for analysis and exploration.

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), and upcoming support for web sites, Notion, and Airtable. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk utilizes a Vector Database (Qdrant), Openai's text-embedding-ada-002 for embeddings, and has a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

auto-news

Auto-News is an automatic news aggregator tool that utilizes Large Language Models (LLM) to pull information from various sources such as Tweets, RSS feeds, YouTube videos, web articles, Reddit, and journal notes. The tool aims to help users efficiently read and filter content based on personal interests, providing a unified reading experience and organizing information effectively. It features feed aggregation with summarization, transcript generation for videos and articles, noise reduction, task organization, and deep dive topic exploration. The tool supports multiple LLM backends, offers weekly top-k aggregations, and can be deployed on Linux/MacOS using docker-compose or Kubernetes.

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, facilitating the creation of information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling functionality, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, Sci-Hub papers via DOI or PMID. The tool provides uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.

Agently-Daily-News-Collector

Agently Daily News Collector is an open-source project showcasing a workflow powered by the Agent ly AI application development framework. It allows users to generate news collections on various topics by inputting the field topic. The AI agents automatically perform the necessary tasks to generate a high-quality news collection saved in a markdown file. Users can edit settings in the YAML file, install Python and required packages, input their topic idea, and wait for the news collection to be generated. The process involves tasks like outlining, searching, summarizing, and preparing column data. The project dependencies include Agently AI Development Framework, duckduckgo-search, BeautifulSoup4, and PyYAM.