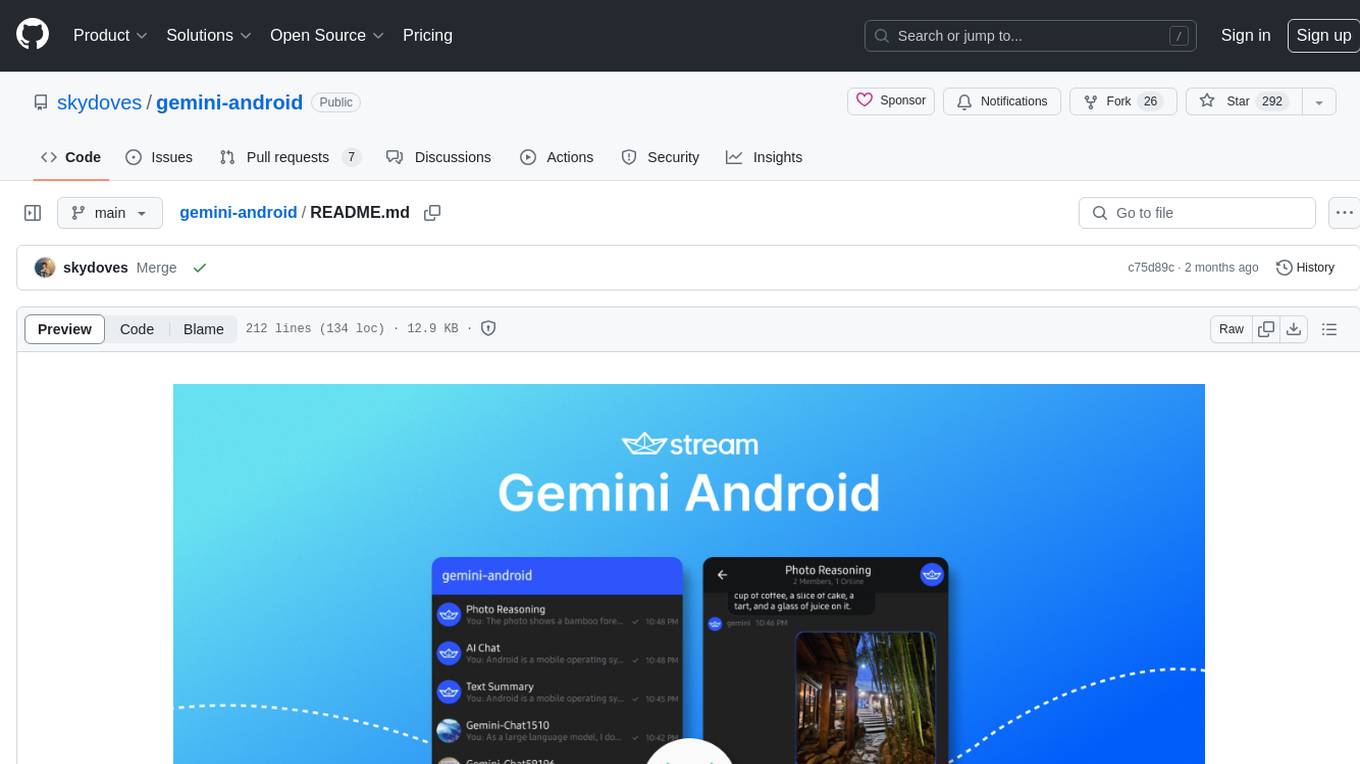

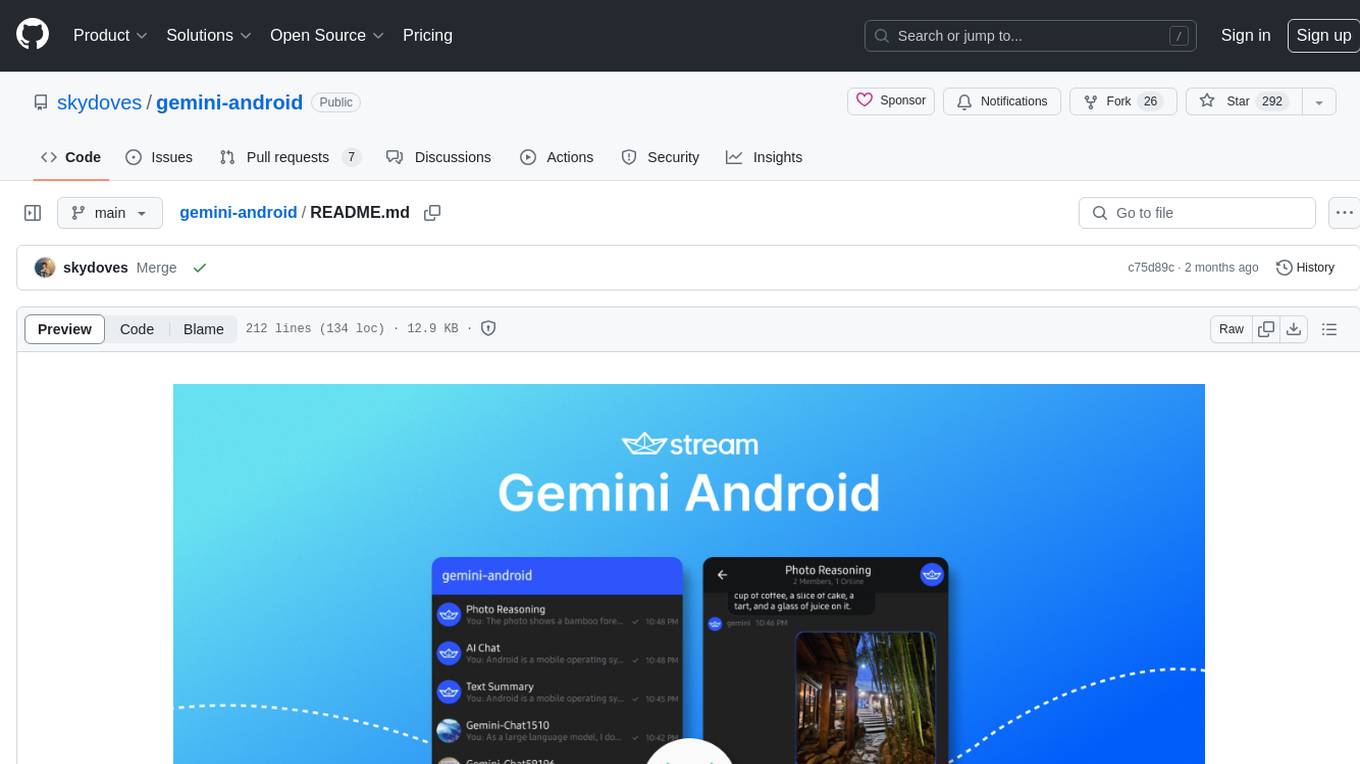

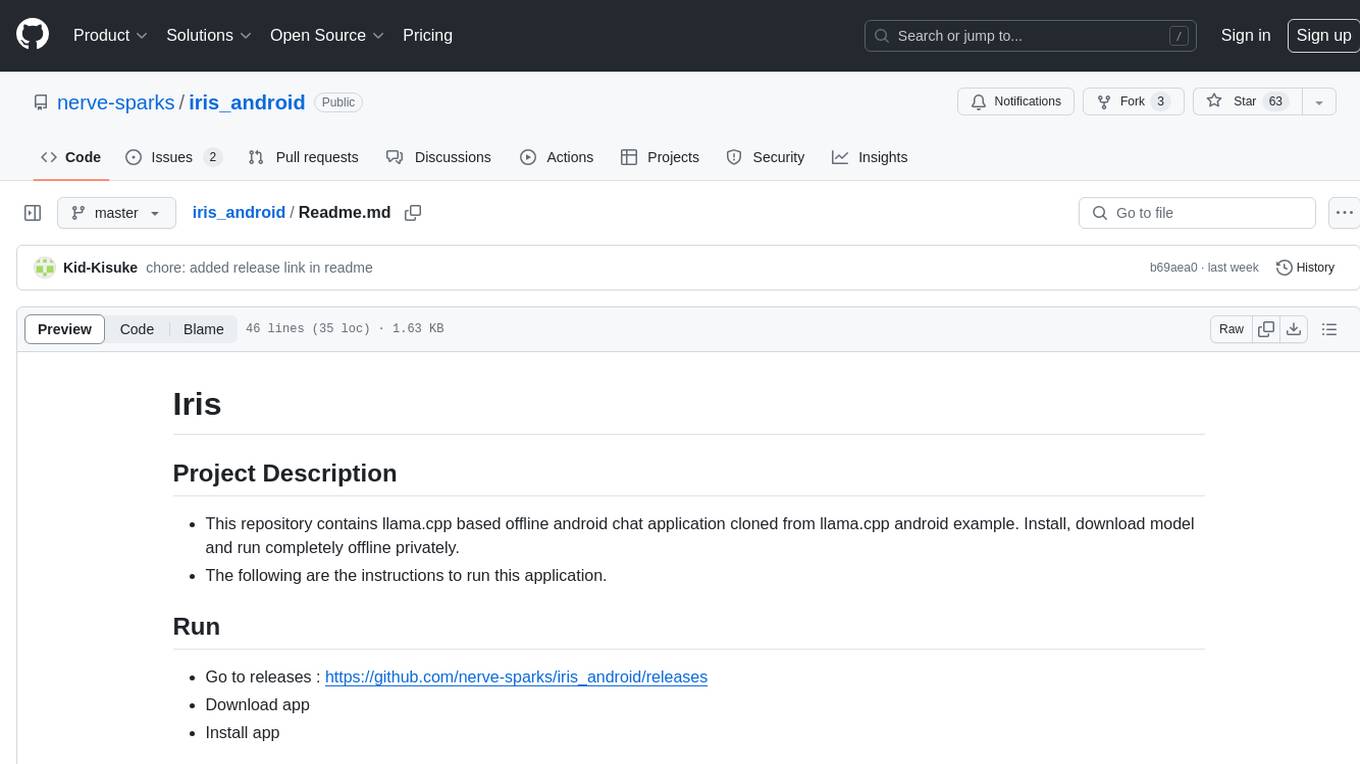

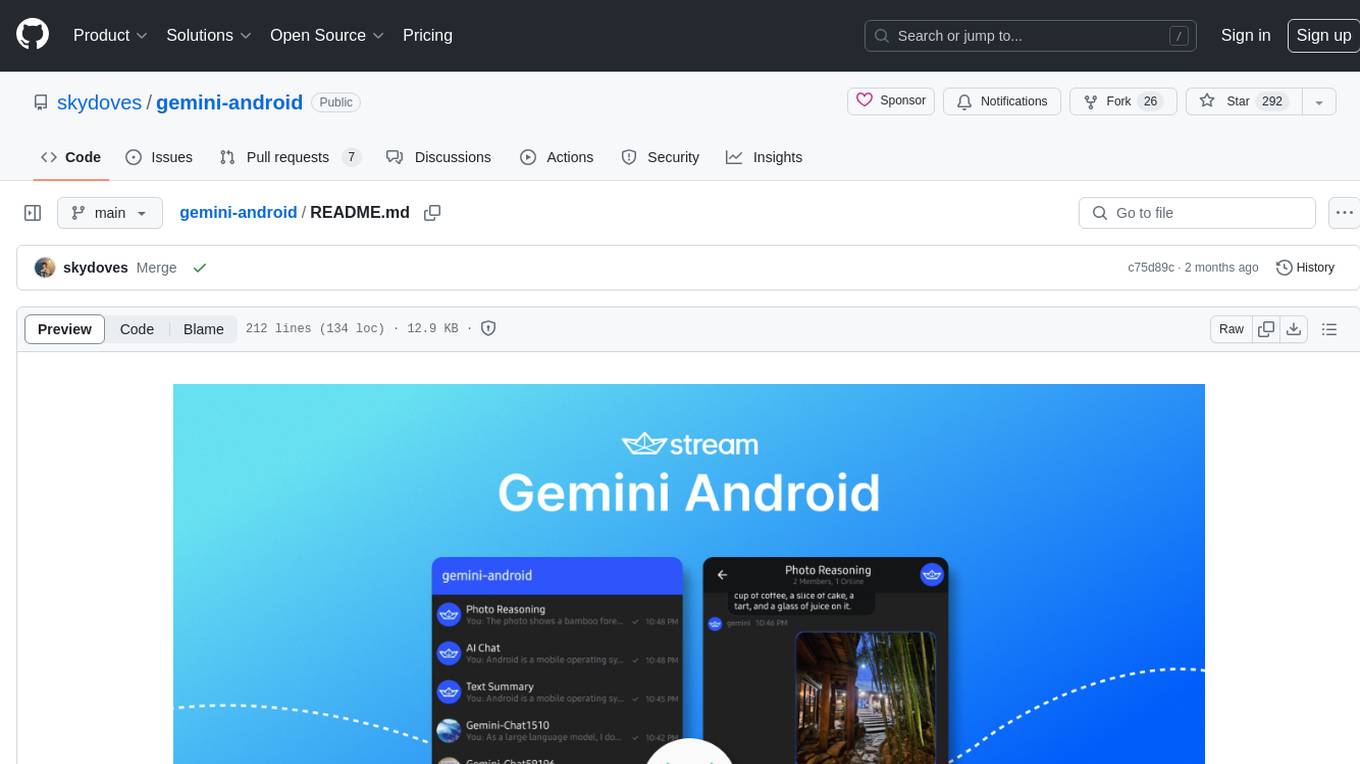

gemini-android

✨ Gemini Android demonstrates Google's Generative AI on Android with Stream Chat SDK for Compose.

Stars: 303

Gemini Android is a repository showcasing Google's Generative AI on Android using Stream Chat SDK for Compose. It demonstrates the Gemini API for Android, implements UI elements with Jetpack Compose, utilizes Android architecture components like Hilt and AppStartup, performs background tasks with Kotlin Coroutines, and integrates chat systems with Stream Chat Compose SDK for real-time event handling. The project also provides technical content, instructions on building the project, tech stack details, architecture overview, modularization strategies, and a contribution guideline. It follows Google's official architecture guidance and offers a real-world example of app architecture implementation.

README:

Gemini Android demonstrates Google's Generative AI on Android with Stream Chat SDK for Compose.

The purpose of this repository is to demonstrate below:

- Demonstrates Gemini API for Android.

- Implementing entire UI elements with Jetpack Compose.

- Implementation of Android architecture components with Jetpack libraries such as Hilt and AppStartup.

- Performing background tasks with Kotlin Coroutines.

- Integrating chat systems with Stream Chat Compose SDK for real-time event handling.

If you're interested in the overall architecture, each layer, Generative AI, Gemini SDK, and implementation details of this project, check out the following blog post: Build an AI Chat Android App With Google’s Generative AI

Gemini Android is built with Stream Chat SDK for Compose to implement messaging systems. If you’re interested in building powerful real-time video/audio calling, audio room, and livestreaming, check out the Stream Video SDK for Compose!

- Stream Chat SDK for Android on GitHub

- Android Samples for Stream Chat SDK on GitHub

- Stream Chat Compose UI Components Guidelines

To build this project properly, you should follow the instructions below:

- Go to the Stream login page.

- If you have your GitHub account, click the SIGN UP WITH GITHUB button and you can sign up within a couple of seconds.

- If you don't have a GitHub account, fill in the inputs and click the START FREE TRIAL button.

- Go to the Dashboard and click the Create App button like the below.

- Fill in the blanks like the below and click the Create App button.

- You will see the Key like the image below and then copy it.

- Create a new file named secrets.properties on the root directory of this Android project, and add the key to the

secrets.propertiesfile like the below:

STREAM_API_KEY=..-

Go to your Dashboard again and click your App.

-

In the Overview menu, you can find the Authentication category by scrolling to the middle of the page.

-

Switch on the Disable Auth Checks option and click the Submit button like the image below.

-

Click the Explorer tab on the left side menu.

-

Click users -> Create New User button sequentially and add fill in the user like the below:

- User Name:

gemini - User ID:

gemini

- Go to Google AI Studio, login with your Google account and select the Get API key on the menu left like the image below:

- Create your API key for using generative AI SDKs, and you'll get one like the image below:

- Add the key to the

secrets.propertiesfile like the below:

GEMINI_API_KEY=..- Build and run the project.

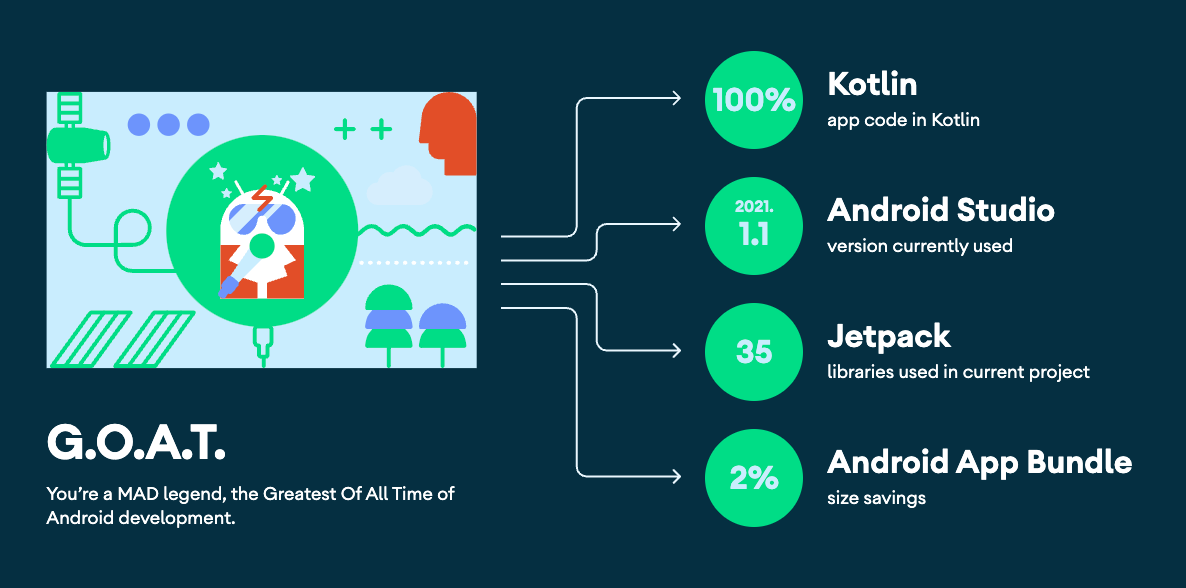

- Minimum SDK level 21.

- 100% Jetpack Compose based + Coroutines + Flow for asynchronous.

- Compose Chat SDK for Messaging: The Jetpack Compose Chat Messaging SDK is built on a low-level chat client and provides modular, customizable Compose UI components that you can easily drop into your app.

- Jetpack

- Compose: Android’s modern toolkit for building native UI.

- ViewModel: UI related data holder and lifecycle aware.

- App Startup: Provides a straightforward, performant way to initialize components at application startup.

- Navigation: For navigating screens and Hilt Navigation Compose for injecting dependencies.

- Room: Constructs Database by providing an abstraction layer over SQLite to allow fluent database access.

- Datastore: Store data asynchronously, consistently, and transactionally, overcoming some of the drawbacks of SharedPreferences.

- Hilt: Dependency Injection.

- Landscapist Glide, animation, placeholder: Jetpack Compose image loading library that fetches and displays network images with Glide, Coil, and Fresco.

- Retrofit2 & OkHttp3: Construct the REST APIs and paging network data.

- Sandwich: Construct a lightweight and modern response interface to handle network payload for Android.

- Moshi: A modern JSON library for Kotlin and Java.

- ksp: Kotlin Symbol Processing API.

- Balloon: Modernized and sophisticated tooltips, fully customizable with an arrow and animations for Android.

- StreamLog: A lightweight and extensible logger library for Kotlin and Android.

- Baseline Profiles: To improve app performance by including a list of classes and methods specifications in your APK that can be used by Android Runtime.

Gemini Android follows the Google's official architecture guidance.

Gemini Android was built with Guide to app architecture, so it would be a great sample to show how the architecture works in real-world projects.

The overall architecture is composed of two layers; UI Layer and the data layer. Each layer has dedicated components and they each have different responsibilities. The arrow means the component has a dependency on the target component following its direction.

Each layer has different responsibilities below. Basically, they follow unidirectional event/data flow.

The UI Layer consists of UI elements like buttons, menus, tabs that could interact with users and ViewModel that holds app states and restores data when configuration changes.

The data Layer consists of repositories, which include business logic, such as querying data from the local database and requesting remote data from the network. It is implemented as an offline-first source of business logic and follows the single source of truth principle.

For more information about the overall architecture, check out Build a Real-Time WhatsApp Clone With Jetpack Compose.

Gemini Android has implemented the following modularization strategies:

-

Reusability: By effectively modularizing reusable code, it not only facilitates code sharing but also restricts code access across different modules.

-

Parallel Building: Modules are capable of being built in parallel, leading to reduced overall build time.

-

Decentralized Focusing: Individual development teams are allocated specific modules, allowing them to concentrate on their designated areas.

Most of the features are not completed except the chat feature, so anyone can contribute and improve this project following the Contributing Guideline.

Support it by joining stargazers for this repository. ⭐

Also, follow me on GitHub for my next creations! 🤩

Designed and developed by 2024 skydoves (Jaewoong Eum)

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for gemini-android

Similar Open Source Tools

gemini-android

Gemini Android is a repository showcasing Google's Generative AI on Android using Stream Chat SDK for Compose. It demonstrates the Gemini API for Android, implements UI elements with Jetpack Compose, utilizes Android architecture components like Hilt and AppStartup, performs background tasks with Kotlin Coroutines, and integrates chat systems with Stream Chat Compose SDK for real-time event handling. The project also provides technical content, instructions on building the project, tech stack details, architecture overview, modularization strategies, and a contribution guideline. It follows Google's official architecture guidance and offers a real-world example of app architecture implementation.

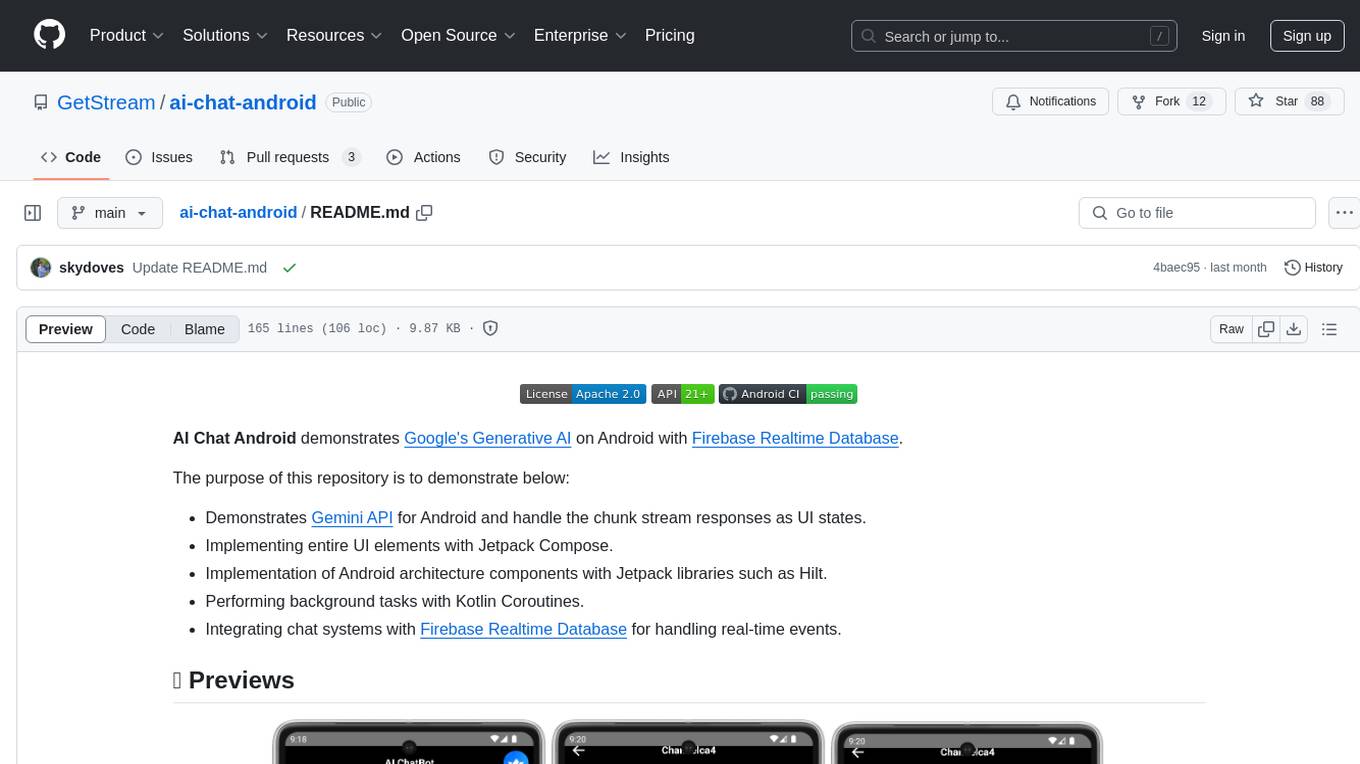

ai-chat-android

AI Chat Android demonstrates Google's Generative AI on Android with Firebase Realtime Database. It showcases Gemini API integration, Jetpack Compose UI elements, Android architecture components with Hilt, Kotlin Coroutines for background tasks, and Firebase Realtime Database integration for real-time events. The project follows Google's official architecture guidance with a modularized structure for reusability, parallel building, and decentralized focusing.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

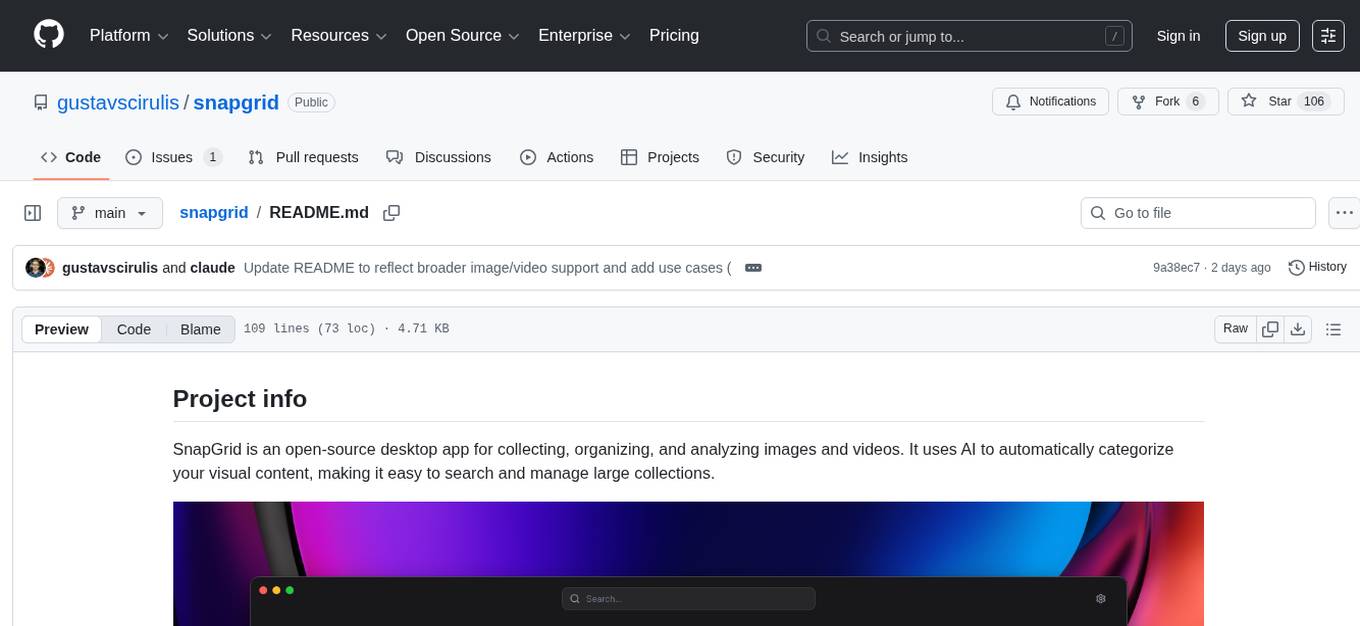

snapgrid

SnapGrid is an open-source desktop app that uses AI to collect, organize, and analyze images and videos. It helps users manage large collections by automatically categorizing visual content. The app offers features like image and video management, organizing media into collections, AI analysis from multiple providers, custom AI instructions, smart organization based on AI-detected categories, and fast local storage. SnapGrid prioritizes privacy by storing all data locally, offering optional AI analysis, and collecting only anonymous usage analytics. Users can contribute to the project, and the app is licensed under the GNU General Public License v3.0.

AIProxyBootstrap

AIProxyBootstrap is a collection of starter apps designed to help users build their own experiences using AIProxy. The sample apps are categorized by services such as OpenAI, Anthropic, etc. Each app provides a template for users to add their AIProxy constants and implements API calls using AIProxySwift. Users can follow the provided instructions to customize the apps for their needs and interact with the AIProxy backend through the iOS simulator.

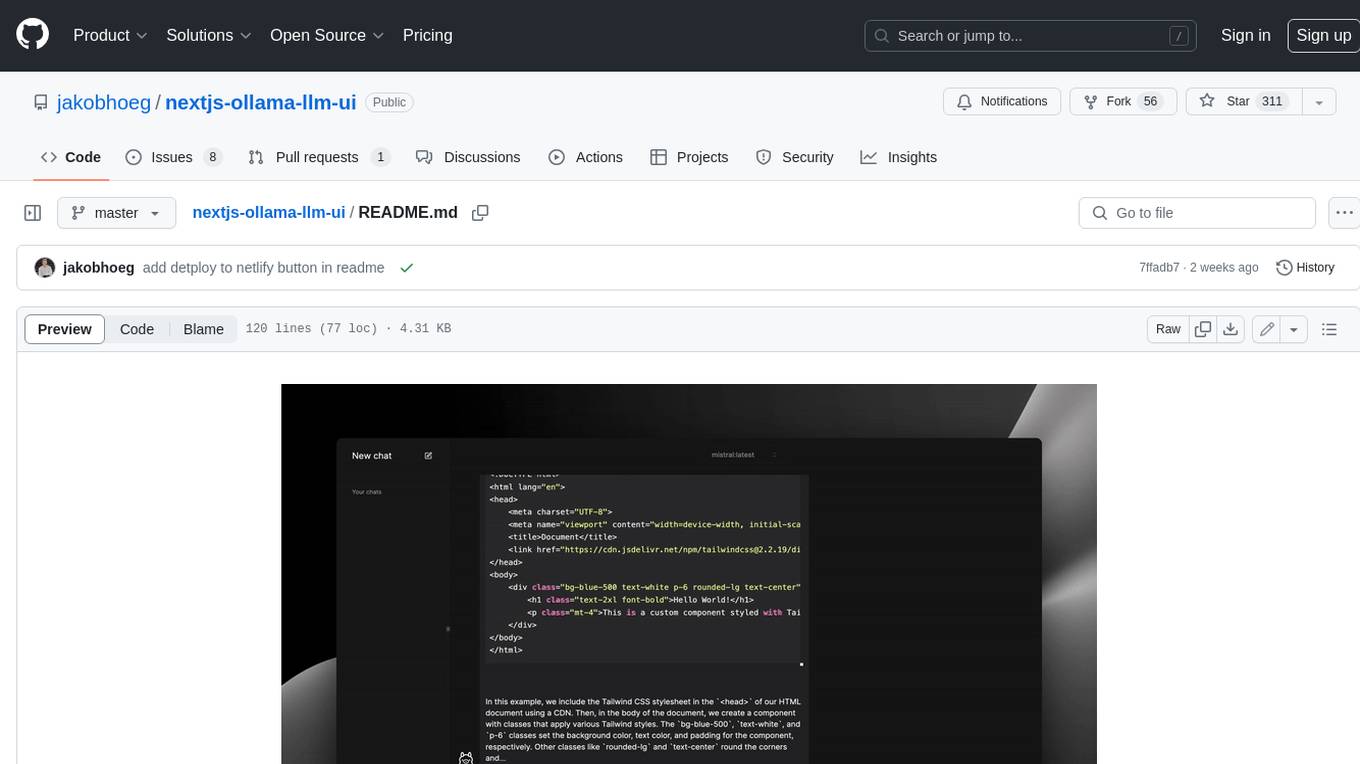

nextjs-ollama-llm-ui

This web interface provides a user-friendly and feature-rich platform for interacting with Ollama Large Language Models (LLMs). It offers a beautiful and intuitive UI inspired by ChatGPT, making it easy for users to get started with LLMs. The interface is fully local, storing chats in local storage for convenience, and fully responsive, allowing users to chat on their phones with the same ease as on a desktop. It features easy setup, code syntax highlighting, and the ability to easily copy codeblocks. Users can also download, pull, and delete models directly from the interface, and switch between models quickly. Chat history is saved and easily accessible, and users can choose between light and dark mode. To use the web interface, users must have Ollama downloaded and running, and Node.js (18+) and npm installed. Installation instructions are provided for running the interface locally. Upcoming features include the ability to send images in prompts, regenerate responses, import and export chats, and add voice input support.

gptme

Personal AI assistant/agent in your terminal, with tools for using the terminal, running code, editing files, browsing the web, using vision, and more. A great coding agent that is general-purpose to assist in all kinds of knowledge work, from a simple but powerful CLI. An unconstrained local alternative to ChatGPT with 'Code Interpreter', Cursor Agent, etc. Not limited by lack of software, internet access, timeouts, or privacy concerns if using local models.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

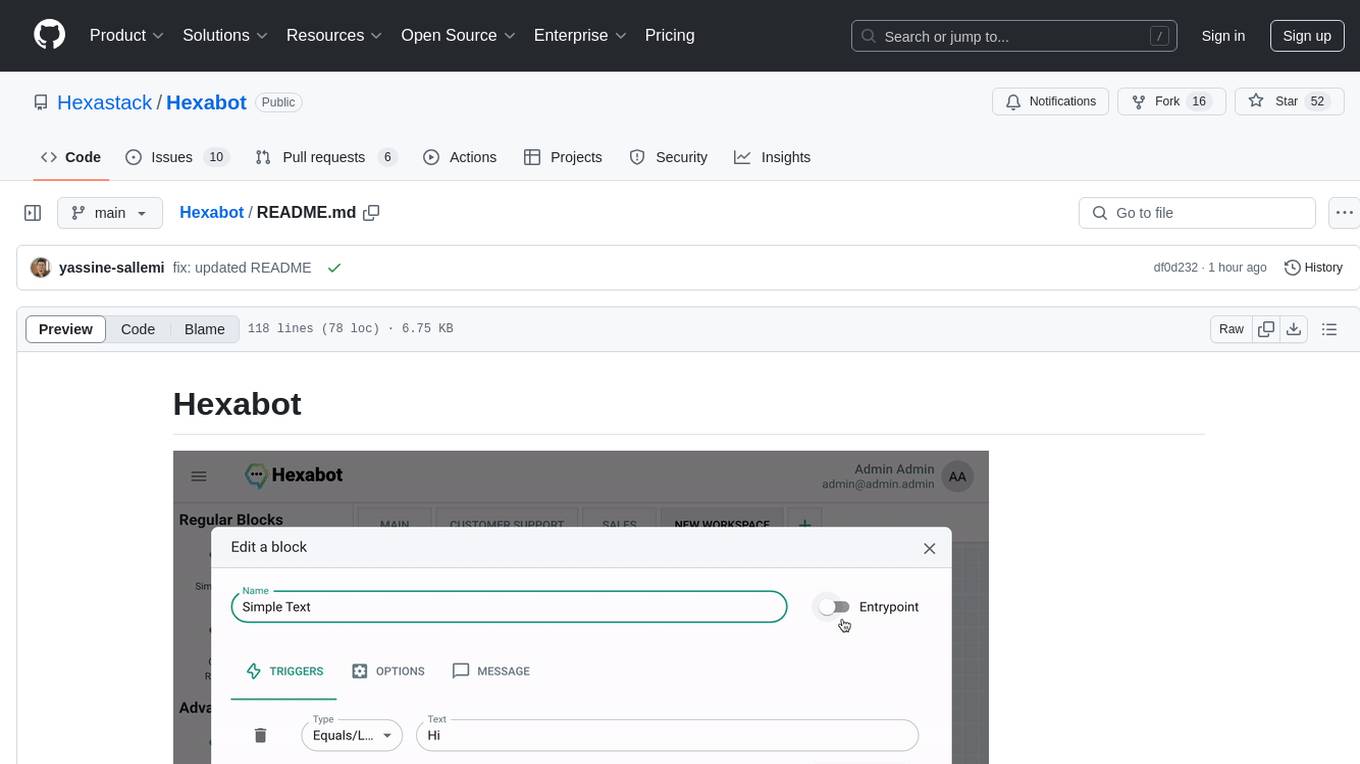

Hexabot

Hexabot Community Edition is an open-source chatbot solution designed for flexibility and customization, offering powerful text-to-action capabilities. It allows users to create and manage AI-powered, multi-channel, and multilingual chatbots with ease. The platform features an analytics dashboard, multi-channel support, visual editor, plugin system, NLP/NLU management, multi-lingual support, CMS integration, user roles & permissions, contextual data, subscribers & labels, and inbox & handover functionalities. The directory structure includes frontend, API, widget, NLU, and docker components. Prerequisites for running Hexabot include Docker and Node.js. The installation process involves cloning the repository, setting up the environment, and running the application. Users can access the UI admin panel and live chat widget for interaction. Various commands are available for managing the Docker services. Detailed documentation and contribution guidelines are provided for users interested in contributing to the project.

Director

Director is a framework to build video agents that can reason through complex video tasks like search, editing, compilation, generation, etc. It enables users to summarize videos, search for specific moments, create clips instantly, integrate GenAI projects and APIs, add overlays, generate thumbnails, and more. Built on VideoDB's 'video-as-data' infrastructure, Director is perfect for developers, creators, and teams looking to simplify media workflows and unlock new possibilities.

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub Bot for requesting changes and operates entirely in GitHub Actions. In progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

ProxyAI

ProxyAI is an open-source AI copilot for JetBrains, offering advanced code assistance features powered by top-tier language models. Users can customize their coding experience, receive AI-suggested code changes, autocomplete suggestions, and context-aware naming suggestions. The tool also allows users to chat with images, reference project files and folders, web docs, git history, and search the web. ProxyAI prioritizes user privacy by not collecting sensitive information and only gathering anonymous usage data with consent.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

colors_ai

Colors AI is a cross-platform color scheme generator that uses deep learning from public API providers. It is available for all mainstream operating systems, including mobile. Features: - Choose from open APIs, with the ability to set up custom settings - Export section with many export formats to save or clipboard copy - URL providers to other static color generators - Localized to several languages - Dark and light theme - Material Design 3 - Data encryption - Accessibility - And much more

burpference

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.

For similar tasks

gemini-android

Gemini Android is a repository showcasing Google's Generative AI on Android using Stream Chat SDK for Compose. It demonstrates the Gemini API for Android, implements UI elements with Jetpack Compose, utilizes Android architecture components like Hilt and AppStartup, performs background tasks with Kotlin Coroutines, and integrates chat systems with Stream Chat Compose SDK for real-time event handling. The project also provides technical content, instructions on building the project, tech stack details, architecture overview, modularization strategies, and a contribution guideline. It follows Google's official architecture guidance and offers a real-world example of app architecture implementation.

ai-chat-android

AI Chat Android demonstrates Google's Generative AI on Android with Firebase Realtime Database. It showcases Gemini API integration, Jetpack Compose UI elements, Android architecture components with Hilt, Kotlin Coroutines for background tasks, and Firebase Realtime Database integration for real-time events. The project follows Google's official architecture guidance with a modularized structure for reusability, parallel building, and decentralized focusing.

aiortc

aiortc is a Python library for Web Real-Time Communication (WebRTC) and Object Real-Time Communication (ORTC). It provides a simple and readable implementation for programmers to understand and tinker with WebRTC internals. The library allows for exchanging audio, video, and data channels, supports SDP generation/parsing, ICE, DTLS, SRTP, SCTP, and various audio/video codecs. It also enables creating innovative products by leveraging Python ecosystem modules, such as computer vision algorithms with OpenCV. Extensive testing ensures high code quality.

sample-ai-powered-sdlc-patterns-with-aws

This repository showcases AI-powered software development patterns that demonstrate how to integrate generative AI in different stages of the software development lifecycle using Amazon Q Developer, Amazon Q Business, and Amazon Bedrock. The patterns aim to enhance productivity, improve quality, and accelerate delivery through AI-powered development. Users can explore practical approaches for leveraging AWS's generative AI capabilities across various SDLC phases, such as Requirement & Planning, Design & Architecture, Implementation, Testing, Deployment, and Operation & Maintenance. Please note that the patterns use various AWS services, and users are responsible for any associated costs.

For similar jobs

react-native-vision-camera

VisionCamera is a powerful, high-performance Camera library for React Native. It features Photo and Video capture, QR/Barcode scanner, Customizable devices and multi-cameras ("fish-eye" zoom), Customizable resolutions and aspect-ratios (4k/8k images), Customizable FPS (30..240 FPS), Frame Processors (JS worklets to run facial recognition, AI object detection, realtime video chats, ...), Smooth zooming (Reanimated), Fast pause and resume, HDR & Night modes, Custom C++/GPU accelerated video pipeline (OpenGL).

iris_android

This repository contains an offline Android chat application based on llama.cpp example. Users can install, download models, and run the app completely offline and privately. To use the app, users need to go to the releases page, download and install the app. Building the app requires downloading Android Studio, cloning the repository, and importing it into Android Studio. The app can be run offline by following specific steps such as enabling developer options, wireless debugging, and downloading the stable LM model. The project is maintained by Nerve Sparks and contributions are welcome through creating feature branches and pull requests.

aiolauncher_scripts

AIO Launcher Scripts is a collection of Lua scripts that can be used with AIO Launcher to enhance its functionality. These scripts can be used to create widget scripts, search scripts, and side menu scripts. They provide various functions such as displaying text, buttons, progress bars, charts, and interacting with app widgets. The scripts can be used to customize the appearance and behavior of the launcher, add new features, and interact with external services.

gemini-android

Gemini Android is a repository showcasing Google's Generative AI on Android using Stream Chat SDK for Compose. It demonstrates the Gemini API for Android, implements UI elements with Jetpack Compose, utilizes Android architecture components like Hilt and AppStartup, performs background tasks with Kotlin Coroutines, and integrates chat systems with Stream Chat Compose SDK for real-time event handling. The project also provides technical content, instructions on building the project, tech stack details, architecture overview, modularization strategies, and a contribution guideline. It follows Google's official architecture guidance and offers a real-world example of app architecture implementation.

blinkid-android

The BlinkID Android SDK is a comprehensive solution for implementing secure document scanning and extraction. It offers powerful capabilities for extracting data from a wide range of identification documents. The SDK provides features for integrating document scanning into Android apps, including camera requirements, SDK resource pre-bundling, customizing the UX, changing default strings and localization, troubleshooting integration difficulties, and using the SDK through various methods. It also offers options for completely custom UX with low-level API integration. The SDK size is optimized for different processor architectures, and API documentation is available for reference. For any questions or support, users can contact the Microblink team at help.microblink.com.

react-native-airship

React Native Airship is a module designed to integrate Airship's iOS and Android SDKs into React Native applications. It provides developers with the necessary tools to incorporate Airship's push notification services seamlessly. The module offers a simple and efficient way to leverage Airship's features within React Native projects, enhancing user engagement and retention through targeted notifications.

gpt_mobile

GPT Mobile is a chat assistant for Android that allows users to chat with multiple models at once. It supports various platforms such as OpenAI GPT, Anthropic Claude, and Google Gemini. Users can customize temperature, top p (Nucleus sampling), and system prompt. The app features local chat history, Material You style UI, dark mode support, and per app language setting for Android 13+. It is built using 100% Kotlin, Jetpack Compose, and follows a modern app architecture for Android developers.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.