iris_android

Local LLM App

Stars: 152

This repository contains an offline Android chat application based on llama.cpp example. Users can install, download models, and run the app completely offline and privately. To use the app, users need to go to the releases page, download and install the app. Building the app requires downloading Android Studio, cloning the repository, and importing it into Android Studio. The app can be run offline by following specific steps such as enabling developer options, wireless debugging, and downloading the stable LM model. The project is maintained by Nerve Sparks and contributions are welcome through creating feature branches and pull requests.

README:

- This repository contains llama.cpp based offline android chat application cloned from llama.cpp android example. Install, download model and run completely offline privately.

- The following are the instructions to run this application.

- Go to releases : https://github.com/nerve-sparks/iris_android/releases

- Download app

- Install app

- Download Android studio

- Clone this repository and import into Android Studio

git clone https://github.com/nerve-sparks/iris_android.git

- Open developer options on the Android mobile phone.

- Enable developer options.

- Click on developer options and enable wireless debugging.

- In Android Studio, select the drop down on the left side of the 'app' button on the Navbar.

- Select on 'Pair devices using Wi-Fi'. A QR code appears on screen.

- Click on wireless debugging on Android phone. Select 'Pair device with QR code'. Scan the code. (Make sure both devices are on the same Wi-fi)

- You can use Usb Debugging also to connect your phone.

- Once the phone is connected, select the device name in the drop down menu and click on play button.

- In the app, click on 'Download Stable LM'. The model will download and load automatically.

- Now you can run the app offline. (In airplane mode as well)

- Visit www.nervesparks.com to contact us.

- Clone it.

- Create your feature branch (git checkout -b my-new-feature)

- Commit your changes (git commit -m 'Add some feature')

- Push your branch (git push origin my-new-feature)

- Create a new Pull request.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for iris_android

Similar Open Source Tools

iris_android

This repository contains an offline Android chat application based on llama.cpp example. Users can install, download models, and run the app completely offline and privately. To use the app, users need to go to the releases page, download and install the app. Building the app requires downloading Android Studio, cloning the repository, and importing it into Android Studio. The app can be run offline by following specific steps such as enabling developer options, wireless debugging, and downloading the stable LM model. The project is maintained by Nerve Sparks and contributions are welcome through creating feature branches and pull requests.

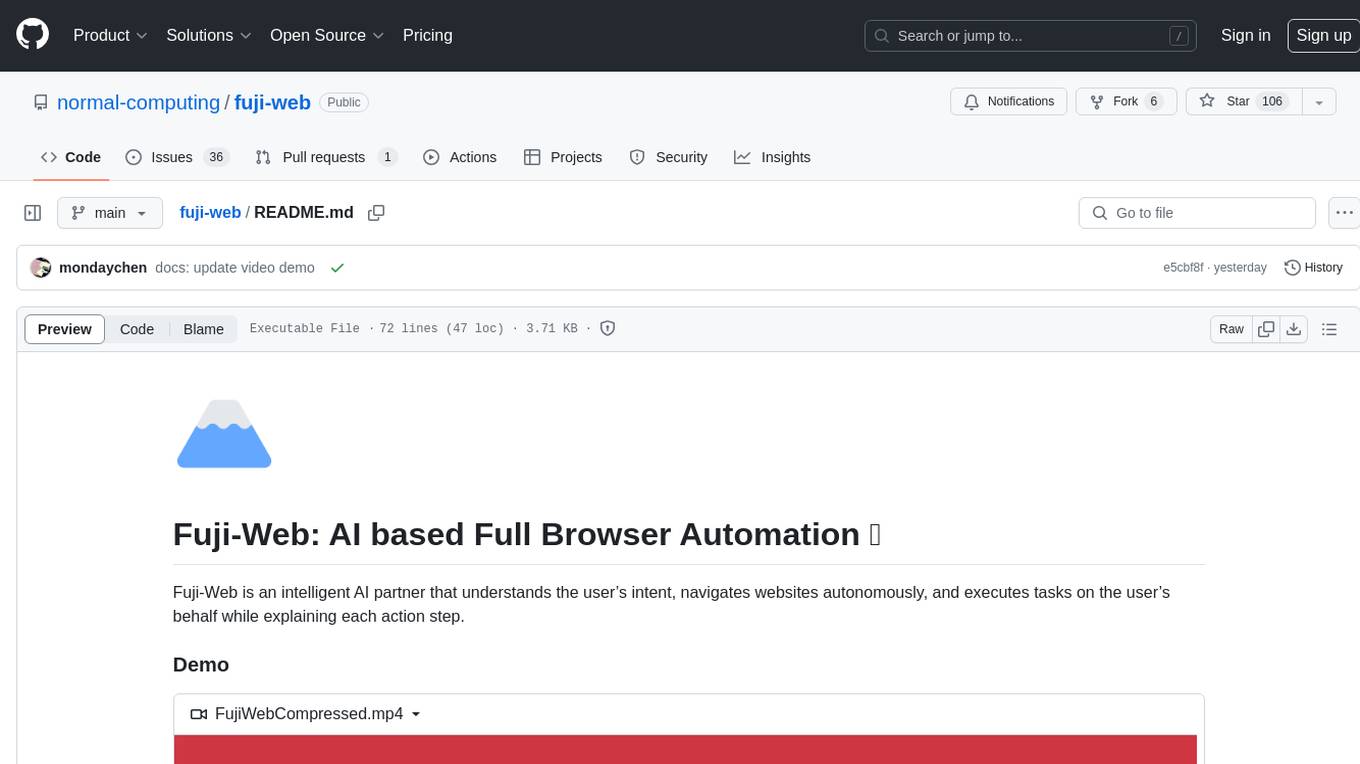

fuji-web

Fuji-Web is an intelligent AI partner designed for full browser automation. It autonomously navigates websites and performs tasks on behalf of the user while providing explanations for each action step. Users can easily install the extension in their browser, access the Fuji icon to input tasks, and interact with the tool to streamline web browsing tasks. The tool aims to enhance user productivity by automating repetitive web actions and providing a seamless browsing experience.

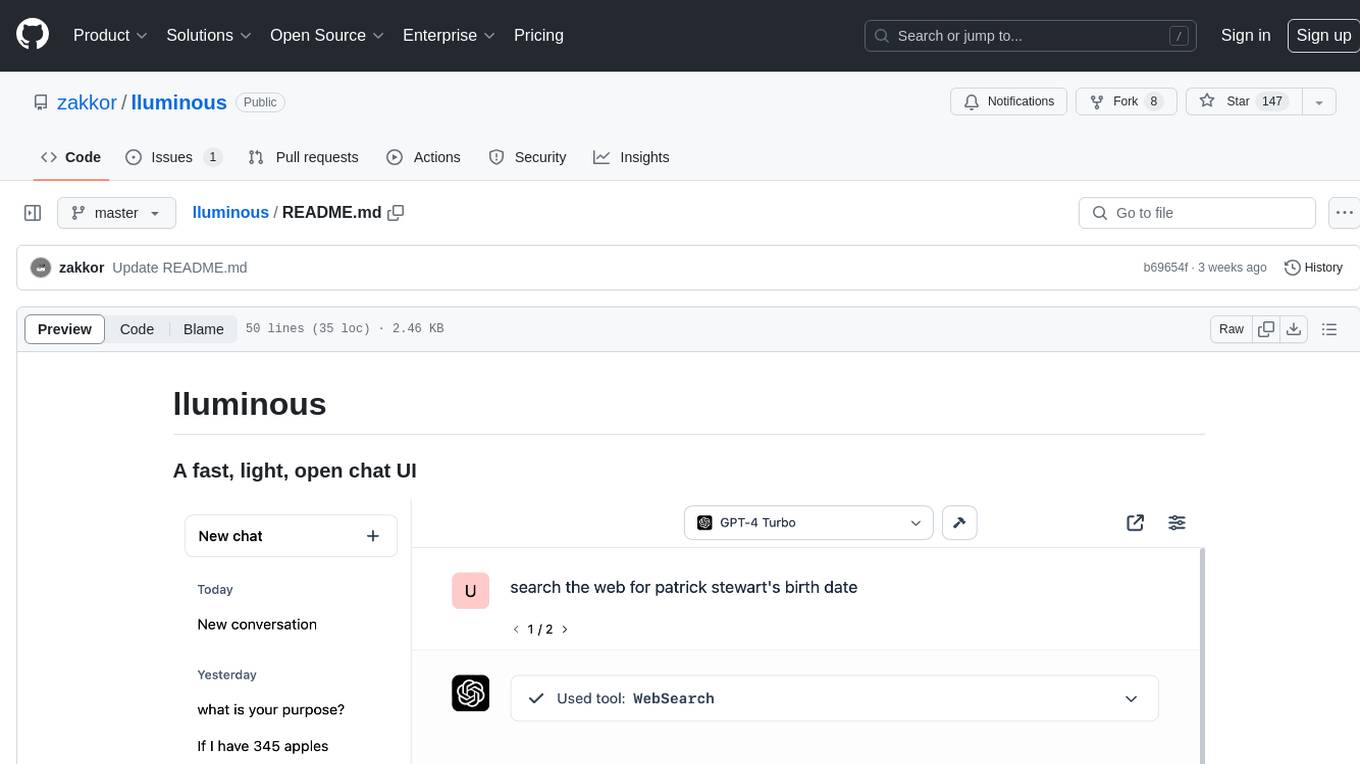

lluminous

lluminous is a fast and light open chat UI that supports multiple providers such as OpenAI, Anthropic, and Groq models. Users can easily plug in their API keys locally to access various models for tasks like multimodal input, image generation, multi-shot prompting, pre-filled responses, and more. The tool ensures privacy by storing all conversation history and keys locally on the user's device. Coming soon features include memory tool, file ingestion/embedding, embeddings-based web search, and prompt templates.

ai2apps

AI2Apps is a visual IDE for building LLM-based AI agent applications, enabling developers to efficiently create AI agents through drag-and-drop, with features like design-to-development for rapid prototyping, direct packaging of agents into apps, powerful debugging capabilities, enhanced user interaction, efficient team collaboration, flexible deployment, multilingual support, simplified product maintenance, and extensibility through plugins.

chrome-extension

Mem0 Chrome Extension lets you own your memory and preferences across any Gen AI apps like ChatGPT, Claude, Perplexity, etc and get personalized, relevant responses. It allows users to store memories from conversations, retrieve relevant memories during chats, manage and organize stored information, and seamlessly integrate with the Claude AI interface. The extension requires an API key and user ID for connecting to the Mem0 API, and it stores this information locally in the browser. Users can troubleshoot common issues, and contributions to improve the extension are welcome under the MIT License.

generative-ai-android

The Google AI client SDK for Android enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. This SDK supports use cases like: - Generate text from text-only input - Generate text from text-and-images input (multimodal) - Build multi-turn conversations (chat)

pyOllaMx

PyOllaMx is a chatbot application that serves as a gateway to both Ollama and Apple MlX models. It allows users to chat with these models through a native MacOS interface, eliminating the need for external applications like Bing or Chat-GPT. The tool provides a versatile and user-friendly experience for interacting with different models, enabling tasks such as model discovery, model management, chatting, and web searching. PyOllaMx simplifies the workflow for users who want to leverage Ollama and MlX models on their Mac devices.

shipstation

ShipStation is an AI-based website and agents generation platform that optimizes landing page websites and generic connect-anything-to-anything services. It enables seamless communication between service providers and integration partners, offering features like user authentication, project management, code editing, payment integration, and real-time progress tracking. The project architecture includes server-side (Node.js) and client-side (React with Vite) components. Prerequisites include Node.js, npm or yarn, Anthropic API key, Supabase account, Tavily API key, and Razorpay account. Setup instructions involve cloning the repository, setting up Supabase, configuring environment variables, and starting the backend and frontend servers. Users can access the application through the browser, sign up or log in, create landing pages or portfolios, and get websites stored in an S3 bucket. Deployment to Heroku involves building the client project, committing changes, and pushing to the main branch. Contributions to the project are encouraged, and the license encourages doing good.

claude-memory

Claude Memory is a Chrome extension that enhances interactions with Claude by storing and retrieving important information from conversations, making interactions personalized and context-aware. It allows users to easily manage and organize stored information, with seamless integration with the Claude AI interface.

web-llm-chat

WebLLM Chat is a private AI chat interface that combines WebLLM with a user-friendly design, leveraging WebGPU to run large language models natively in your browser. It offers browser-native AI experience with WebGPU acceleration, guaranteed privacy as all data processing happens locally, offline accessibility, user-friendly interface with markdown support, and open-source customization. The project aims to democratize AI technology by making powerful tools accessible directly to end-users, enhancing the chatting experience and broadening the scope for deployment of self-hosted and customizable language models.

freeciv-web

Freeciv-web is an open-source turn-based strategy game that can be played in any HTML5 capable web-browser. It features in-depth gameplay, a wide variety of game modes and options. Players aim to build cities, collect resources, organize their government, and build an army to create the best civilization. The game offers both multiplayer and single-player modes, with a 2D version with isometric graphics and a 3D WebGL version available. The project consists of components like Freeciv-web, Freeciv C server, Freeciv-proxy, Publite2, and pbem for play-by-email support. Developers interested in contributing can check the GitHub issues and TODO file for tasks to work on.

bytechef

ByteChef is an open-source, low-code, extendable API integration and workflow automation platform. It provides an intuitive UI Workflow Editor, event-driven & scheduled workflows, multiple flow controls, built-in code editor supporting Java, JavaScript, Python, and Ruby, rich component ecosystem, extendable with custom connectors, AI-ready with built-in AI components, developer-ready to expose workflows as APIs, version control friendly, self-hosted, scalable, and resilient. It allows users to build and visualize workflows, automate tasks across SaaS apps, internal APIs, and databases, and handle millions of workflows with high availability and fault tolerance.

Gaudi-tutorials

The Intel Gaudi Tutorials repository contains source files for tutorials on using PyTorch and PyTorch Lightning on the Intel Gaudi AI Processor. The tutorials cater to users from beginner to advanced levels and cover various tasks such as fine-tuning models, running inference, and setting up DeepSpeed for training large language models. Users need access to an Intel Gaudi 2 Accelerator card or node, run the Intel Gaudi PyTorch Docker image, clone the tutorial repository, install Jupyterlab, and run the Jupyterlab server to follow along with the tutorials.

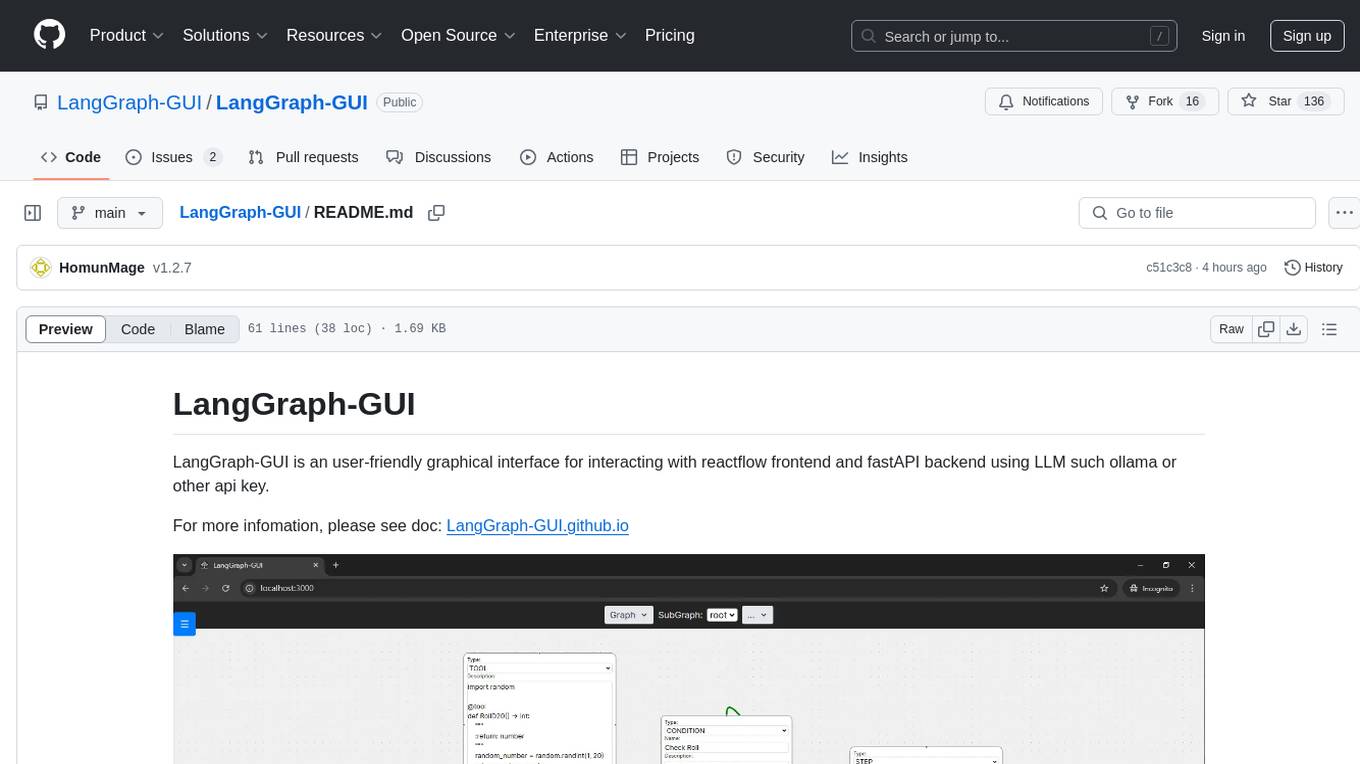

LangGraph-GUI

LangGraph-GUI is a user-friendly graphical interface for interacting with reactflow frontend and fastAPI backend using LLM such as ollama or other API key. It provides a convenient way to work with language models and APIs, offering a seamless experience for users to visualize and interact with the data flow. The tool simplifies the process of setting up the environment and accessing the application, making it easier for users to leverage the power of language models in their projects.

airconsole-api

The AirConsole Javascript API provides documentation and guides for developers to create projects that can be run on the AirConsole platform. The API allows developers to integrate features and functionalities specific to AirConsole, enabling them to build interactive and engaging games and applications for the platform. Developers can refer to the provided documentation and example projects to understand how to utilize the API effectively and create their own projects for AirConsole.

kdbai-samples

KDB.AI is a time-based vector database that allows developers to build scalable, reliable, and real-time applications by providing advanced search, recommendation, and personalization for Generative AI applications. It supports multiple index types, distance metrics, top-N and metadata filtered retrieval, as well as Python and REST interfaces. The repository contains samples demonstrating various use-cases such as temporal similarity search, document search, image search, recommendation systems, sentiment analysis, and more. KDB.AI integrates with platforms like ChatGPT, Langchain, and LlamaIndex. The setup steps require Unix terminal, Python 3.8+, and pip installed. Users can install necessary Python packages and run Jupyter notebooks to interact with the samples.

For similar tasks

iris_android

This repository contains an offline Android chat application based on llama.cpp example. Users can install, download models, and run the app completely offline and privately. To use the app, users need to go to the releases page, download and install the app. Building the app requires downloading Android Studio, cloning the repository, and importing it into Android Studio. The app can be run offline by following specific steps such as enabling developer options, wireless debugging, and downloading the stable LM model. The project is maintained by Nerve Sparks and contributions are welcome through creating feature branches and pull requests.

podman-desktop-extension-ai-lab

Podman AI Lab is an open source extension for Podman Desktop designed to work with Large Language Models (LLMs) on a local environment. It features a recipe catalog with common AI use cases, a curated set of open source models, and a playground for learning, prototyping, and experimentation. Users can quickly and easily get started bringing AI into their applications without depending on external infrastructure, ensuring data privacy and security.

llamafile-docker

This repository, llamafile-docker, automates the process of checking for new releases of Mozilla-Ocho/llamafile, building a Docker image with the latest version, and pushing it to Docker Hub. Users can download a pre-trained model in gguf format and use the Docker image to interact with the model via a server or CLI version. Contributions are welcome under the Apache 2.0 license.

hordelib

horde-engine is a wrapper around ComfyUI designed to run inference pipelines visually designed in the ComfyUI GUI. It enables users to design inference pipelines in ComfyUI and then call them programmatically, maintaining compatibility with the existing horde implementation. The library provides features for processing Horde payloads, initializing the library, downloading and validating models, and generating images based on input data. It also includes custom nodes for preprocessing and tasks such as face restoration and QR code generation. The project depends on various open source projects and bundles some dependencies within the library itself. Users can design ComfyUI pipelines, convert them to the backend format, and run them using the run_image_pipeline() method in hordelib.comfy.Comfy(). The project is actively developed and tested using git, tox, and a specific model directory structure.

HuggingFaceModelDownloader

The HuggingFace Model Downloader is a utility tool for downloading models and datasets from the HuggingFace website. It offers multithreaded downloading for LFS files and ensures the integrity of downloaded models with SHA256 checksum verification. The tool provides features such as nested file downloading, filter downloads for specific LFS model files, support for HuggingFace Access Token, and configuration file support. It can be used as a library or a single binary for easy model downloading and inference in projects.

ollama-r

The Ollama R library provides an easy way to integrate R with Ollama for running language models locally on your machine. It supports working with standard data structures for different LLMs, offers various output formats, and enables integration with other libraries/tools. The library uses the Ollama REST API and requires the Ollama app to be installed, with GPU support for accelerating LLM inference. It is inspired by Ollama Python and JavaScript libraries, making it familiar for users of those languages. The installation process involves downloading the Ollama app, installing the 'ollamar' package, and starting the local server. Example usage includes testing connection, downloading models, generating responses, and listing available models.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

For similar jobs

iris_android

This repository contains an offline Android chat application based on llama.cpp example. Users can install, download models, and run the app completely offline and privately. To use the app, users need to go to the releases page, download and install the app. Building the app requires downloading Android Studio, cloning the repository, and importing it into Android Studio. The app can be run offline by following specific steps such as enabling developer options, wireless debugging, and downloading the stable LM model. The project is maintained by Nerve Sparks and contributions are welcome through creating feature branches and pull requests.

react-native-vision-camera

VisionCamera is a powerful, high-performance Camera library for React Native. It features Photo and Video capture, QR/Barcode scanner, Customizable devices and multi-cameras ("fish-eye" zoom), Customizable resolutions and aspect-ratios (4k/8k images), Customizable FPS (30..240 FPS), Frame Processors (JS worklets to run facial recognition, AI object detection, realtime video chats, ...), Smooth zooming (Reanimated), Fast pause and resume, HDR & Night modes, Custom C++/GPU accelerated video pipeline (OpenGL).

aiolauncher_scripts

AIO Launcher Scripts is a collection of Lua scripts that can be used with AIO Launcher to enhance its functionality. These scripts can be used to create widget scripts, search scripts, and side menu scripts. They provide various functions such as displaying text, buttons, progress bars, charts, and interacting with app widgets. The scripts can be used to customize the appearance and behavior of the launcher, add new features, and interact with external services.

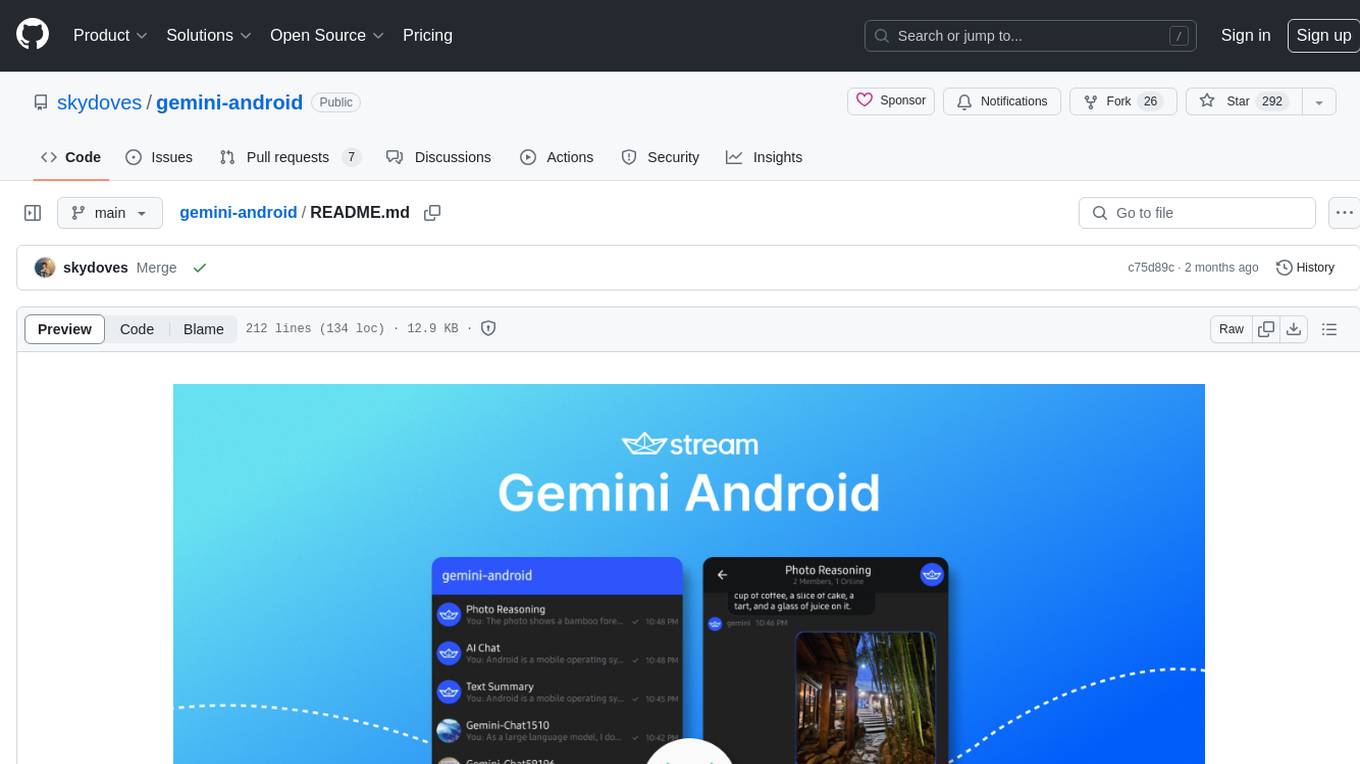

gemini-android

Gemini Android is a repository showcasing Google's Generative AI on Android using Stream Chat SDK for Compose. It demonstrates the Gemini API for Android, implements UI elements with Jetpack Compose, utilizes Android architecture components like Hilt and AppStartup, performs background tasks with Kotlin Coroutines, and integrates chat systems with Stream Chat Compose SDK for real-time event handling. The project also provides technical content, instructions on building the project, tech stack details, architecture overview, modularization strategies, and a contribution guideline. It follows Google's official architecture guidance and offers a real-world example of app architecture implementation.

blinkid-android

The BlinkID Android SDK is a comprehensive solution for implementing secure document scanning and extraction. It offers powerful capabilities for extracting data from a wide range of identification documents. The SDK provides features for integrating document scanning into Android apps, including camera requirements, SDK resource pre-bundling, customizing the UX, changing default strings and localization, troubleshooting integration difficulties, and using the SDK through various methods. It also offers options for completely custom UX with low-level API integration. The SDK size is optimized for different processor architectures, and API documentation is available for reference. For any questions or support, users can contact the Microblink team at help.microblink.com.

react-native-airship

React Native Airship is a module designed to integrate Airship's iOS and Android SDKs into React Native applications. It provides developers with the necessary tools to incorporate Airship's push notification services seamlessly. The module offers a simple and efficient way to leverage Airship's features within React Native projects, enhancing user engagement and retention through targeted notifications.

gpt_mobile

GPT Mobile is a chat assistant for Android that allows users to chat with multiple models at once. It supports various platforms such as OpenAI GPT, Anthropic Claude, and Google Gemini. Users can customize temperature, top p (Nucleus sampling), and system prompt. The app features local chat history, Material You style UI, dark mode support, and per app language setting for Android 13+. It is built using 100% Kotlin, Jetpack Compose, and follows a modern app architecture for Android developers.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.