genai-for-marketing

Showcasing Google Cloud's generative AI for marketing scenarios via application frontend, backend, and detailed, step-by-step guidance for setting up and utilizing generative AI tools, including examples of their use in crafting marketing materials like blog posts and social media content, nl2sql analysis, and campaign personalization.

Stars: 220

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

README:

This repository provides a deployment guide showcasing the application of Google Cloud's Generative AI for marketing scenarios. It offers detailed, step-by-step guidance for setting up and utilizing the Generative AI tools, including examples of their use in crafting marketing materials like blog posts and social media content.

Additionally, supplementary Jupyter notebooks are provided to aid users in grasping the concepts explored in the demonstration.

The architecture of all the demos that are implemented in this application is as follows.

.
├── app
├── backend_apis
├── frontend
├── notebooks
├── templates
├── installation_scripts
└── tf

- /app: Architecture diagrams.

- /backend_apis: Source code for backend APIs.

- /frontend: Source code for the front end UI.

- /notebooks: Sample notebooks demonstrating the concepts covered in this demonstration.

- /templates: Workspace Slides, Docs and Sheets templates used in the demonstration.

- /installation_scripts: Installation scripts used by Terraform.

- /tf: Terraform installation scripts.

In this repository, the following demonstrations are provided:

- Marketing Insights: Powered by Looker dashboards, marketers can access and visualize marketing data to build data-driven marketing campaigns. These features can help businesses connect with their target audience more efficiently, thereby improving conversion rates.

- Audience and Insight finder: Conversational interface that translates natural language into SQL queries. This gives non-SQL users direct access to data, removing a common bottleneck for marketing teams.

- Trendspotting: Identify emerging trends in the market by analyzing Google Trends data on a Looker dashboard and summarize news related to top search terms. This can help businesses to stay ahead of the competition and to develop products and services that meet the needs and interests of their customers.

- Content Search: Improve search experience for internal or external content with Vertex AI Search for business users.

- Content Generation: Reduce time for content generation with Vertex Foundation Models. Generate email copy, website articles, social media posts, and assets for PMax, all aimed at specific goals such as boosting sales, generating leads, or enhancing brand awareness. This covers both textual and visual elements, using Vertex language and vision models.

- Workspace integration: Transfer the insights and assets you've generated earlier to Workspace and visualize in Google Slides, Docs and Sheets.
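As a rough illustration of the Audience and Insight finder idea above, the sketch below assembles a text prompt that asks a language model for a single GoogleSQL query. It is not the repository's prompt; the function name, prompt wording, table name, and columns are all hypothetical, and no model call is made.

```python
def build_nl2sql_prompt(question, table, columns):
    """Assemble a text prompt asking a language model for one GoogleSQL query."""
    # One schema line per column so the model sees names and types.
    schema_lines = [f"- {name} ({col_type})" for name, col_type in columns.items()]
    return "\n".join([
        "You translate marketing questions into GoogleSQL for BigQuery.",
        f"Table: `{table}`",
        "Columns:",
        *schema_lines,
        f"Question: {question.strip()}",
        "Return only the SQL query.",
    ])


# Hypothetical table and columns, used only for illustration.
prompt = build_nl2sql_prompt(
    "Which campaigns had the most clicks last month?",
    "my-project.marketing.campaign_performance",
    {"campaign_name": "STRING", "clicks": "INT64", "report_date": "DATE"},
)
print(prompt)
```

The resulting string would then be sent to whichever text model you use; the notebooks listed in this README show the approach the demo actually takes.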

The notebooks listed below were developed to explain the concepts presented in this repository:

- Getting Started (installation_scripts/1_environment_setup.ipynb): This notebook is part of the deployment guide and helps with dataset preparation.

- Data Q&A with PaLM API and GoogleSQL (data_qa_with_sql.ipynb): Translate questions using natural language to GoogleSQL to interact with BigQuery.

- News summarization with LangChain agents and Vertex AI PaLM text models (news_summarization_langchain_palm.ipynb): Summarize news articles related to top search terms using LangChain agents and the ReAct concept.

- News summarization with PaLM API (simple_news_summarization.ipynb): News summarization related to top search terms using the PaLM API.

- Imagen fine tuning (Imagen_finetune.ipynb): Fine-tune an Imagen model.

The following additional (external) notebooks provide supplementary information on the concepts discussed in this repository:

- Tuning and deploy a foundation model: This notebook demonstrates how to tune a model with your dataset to improve the model's response. This is useful for brand voice because it allows you to ensure that the model is generating text that is consistent with your brand's tone and style.

- Document summarization techniques: Two notebooks explaining different techniques to summarize large documents.

- Document Q&A: Two notebooks explaining different techniques to do document Q&A on a large number of documents.

- Vertex AI Search - Web search: This demo illustrates how to search through a corpus of documents using Vertex AI Search. Additional features include how to search the public Cloud Knowledge Graph using the Enterprise Knowledge Graph API.

- Vertex AI Search - Document search: This demo illustrates how Vertex AI Search and the Vertex AI PaLM API help ensure that generated content is grounded in validated, relevant and up-to-date information.

- Getting Started with LangChain and Vertex AI PaLM API: Use LangChain and Vertex AI PaLM API to generate text.

This section outlines the steps to configure the Google Cloud environment that is required in order to run the code provided in this repository.

You will be interacting with the following resources:

- BigQuery is utilized to house data from Marketing Platforms, while Dataplex is employed to keep their metadata.

- Vertex AI Search & Conversation is used to construct a search engine for an external website.

- Workspace (Google Slides, Google Docs and Google Sheets) is used to visualize the resources you generate.

In the Google Cloud Console, on the project selector page, select or create a Google Cloud project.

As this is a DEMONSTRATION, you need to be a project owner in order to set up the environment.

From Cloud Shell, run the following commands to enable the required Cloud APIs.

Replace <CHANGE TO YOUR PROJECT ID> with the ID of your project and <CHANGE TO YOUR LOCATION> with the location where your resources will be deployed.

export PROJECT_ID=<CHANGE TO YOUR PROJECT ID>
export LOCATION=<CHANGE TO YOUR LOCATION>
gcloud config set project $PROJECT_ID

Enable the services:

gcloud services enable \
  run.googleapis.com \
  cloudbuild.googleapis.com \
  compute.googleapis.com \
  cloudresourcemanager.googleapis.com \
  iam.googleapis.com \
  container.googleapis.com \
  cloudapis.googleapis.com \
  cloudtrace.googleapis.com \
  containerregistry.googleapis.com \
  iamcredentials.googleapis.com \
  secretmanager.googleapis.com \
  firebase.googleapis.com

gcloud services enable \
  monitoring.googleapis.com \
  logging.googleapis.com \
  notebooks.googleapis.com \
  aiplatform.googleapis.com \
  storage.googleapis.com \
  datacatalog.googleapis.com \
  appengineflex.googleapis.com \
  translate.googleapis.com \
  admin.googleapis.com \
  docs.googleapis.com \
  drive.googleapis.com \
  sheets.googleapis.com \
  slides.googleapis.com \
  firestore.googleapis.com

From Cloud Shell, execute the following commands:

- Set your project ID. Replace <CHANGE TO YOUR PROJECT ID> with your project ID.

export PROJECT_ID=<CHANGE TO YOUR PROJECT ID>

- Follow the instructions in your shell to authenticate with the same user that has EDITOR/OWNER rights to this project.

gcloud auth application-default login

- Set the quota project.

gcloud auth application-default set-quota-project $PROJECT_ID

From Cloud Shell, execute the following command:

git clone https://github.com/GoogleCloudPlatform/genai-for-marketing

Open the configuration file and include your project id (line 16) and location (line 17).

From Cloud Shell, navigate to /installation_scripts, install the Python packages, and execute the following script.

Make sure you have set the environment variables PROJECT_ID and LOCATION.

cd ./genai-for-marketing/installation_scripts

pip3 install -r requirements.txt

Run the Python script to create the BigQuery dataset and the DataCatalog TagTemplate.

python3 1_env_setup_script.py
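Because the setup script relies on PROJECT_ID and LOCATION, it can help to confirm both are set before running it. The following is a minimal sketch of such a check, not part of the repository; the helper name `missing_env_vars` is hypothetical.

```python
import os

# The two variables this guide asks you to export.
REQUIRED_VARS = ("PROJECT_ID", "LOCATION")


def missing_env_vars(env=None):
    """Return the required variable names that are unset or blank."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name, "").strip()]


missing = missing_env_vars()
if missing:
    print("Set these variables first: " + ", ".join(missing))
else:
    print("PROJECT_ID and LOCATION are set.")
```

Passing a plain dictionary instead of the real environment makes the helper easy to try out without changing your shell.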

Follow the steps below to create a search engine for a website using Vertex AI Search.

- Make sure the Vertex AI Search APIs are enabled here and you activated Vertex AI Search here.

- Create and preview the website search engine as described here and here.

After you finish creating the Vertex AI Search datastore, navigate back to the Apps page and copy the ID of the datastore you just created.

Open the configuration file - line 33 and include the datastore ID. Don't forget to save the configuration file.

Important: Alternatively, you can create a search engine for structured or unstructured data.

To render your Looker Dashboards in the Marketing Insights and Campaign Performance pages, you need to update an HTML file with links to them.

- Open this HTML file - lines 18 and 28 and include links to the Looker dashboards for Marketing Insights. Example:

- Add a new line after line 18 (or replace line 18) and include the title and ID of your Looker Dashboard.

Overview

- For each dashboard ID/title you included in the step above, include a link to it at the end of this file.

<iframe width="1000" height="1000" src="https://googledemo.looker.com/embed/dashboards/2131?allow_login_screen=true" ></iframe>

The allow_login_screen=true in the URL will open the authentication page from Looker to secure the access to your account.
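If you have several dashboards, generating the iframe tags can be less error-prone than editing them by hand. The sketch below builds a tag in the same shape as the example above; the function name is hypothetical, and the host and dashboard ID are simply the values from that example.

```python
from html import escape
from urllib.parse import urlencode


def looker_embed_iframe(host, dashboard_id, width=1000, height=1000):
    """Return an iframe tag that embeds one Looker dashboard."""
    # allow_login_screen=true sends unauthenticated viewers to the Looker login page.
    query = urlencode({"allow_login_screen": "true"})
    src = f"https://{host}/embed/dashboards/{dashboard_id}?{query}"
    return f'<iframe width="{width}" height="{height}" src="{escape(src)}" ></iframe>'


print(looker_embed_iframe("googledemo.looker.com", 2131))
```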

- Open this HTML file - lines 27 and 37 and include links to the Looker dashboards for Campaign Performance.

[Optional] If you have your Google Ads and Google Analytics 4 accounts in production, you can deploy the Marketing Analytics Jumpstart solution to your project, build the Dashboards and link them to the demonstration UI.

Next you will create a Generative AI Agent that will help users answer questions about Google Ads, etc.

- Follow the steps described in this Documentation to build your own Datastore Agent.

- Execute these steps in the same project you will deploy this demo.

- Enable Dialogflow Messenger integration and copy the agent-id from the HTML code snippet provided by the platform.

- Open the HTML file - line 117 and replace the variable dialogFlowCxAgendId with the agent-id.

Follow the steps below to set up the Workspace integration with this demonstration.

- Create a Service Account (SA) in the same project where you are deploying the demo and download the JSON API key. This SA doesn't need any roles / permissions.

  - Follow this documentation to create the service account. Take note of the service account address; it will look like this: [email protected].

  - Follow this documentation to download the key JSON file with the service account credentials.

- Upload the content of this Service Account to a Secret in Google Cloud Secret Manager.

- Follow the steps in the documentation to accomplish that

- Open the configuration file - line 21 and replace with the full path to your Secret in Secret Manager.

IMPORTANT: For security reasons, DON'T push these credentials to a public GitHub repository.
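Secret Manager identifies a secret version by a resource name of the form projects/PROJECT/secrets/SECRET/versions/VERSION. The sketch below builds such a name; it assumes the configuration file expects this version-qualified form, which this guide does not state, and the project and secret names are hypothetical.

```python
def secret_version_name(project_id, secret_id, version="latest"):
    """Build a Secret Manager resource name for one secret version."""
    for label, value in (("project_id", project_id), ("secret_id", secret_id)):
        # A slash inside an ID would silently change the resource path.
        if not value or "/" in value:
            raise ValueError(f"{label} must be non-empty and contain no '/'")
    return f"projects/{project_id}/secrets/{secret_id}/versions/{version}"


# Hypothetical project and secret names, for illustration only.
print(secret_version_name("my-demo-project", "workspace-sa-key"))
```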

This demonstration will create folders under Google Drive, Google Docs documents, Google Slides presentations and Google Sheets documents.

When we create the Drive folder, we set the permission to all users under a specific domain.

- Open config.toml - line 59 and change to the domain you want to share the folder with (example: mydomain.com).

- This is the same domain where you have Workspace set up.

Be aware that this configuration will share the folder with all the users in that domain.

If you want to change that behavior, explore different ways of sharing resources from this documentation:

https://developers.google.com/drive/api/reference/rest/v3/permissions#resource:-permission
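To make the difference between the sharing options concrete, the sketch below builds request bodies for the Drive API v3 permissions.create method, using the `type`, `role`, `domain`, and `emailAddress` fields of the Permission resource linked above. The helper name is hypothetical, and the `writer` role is an assumption; check the demo's code for the role it actually grants.

```python
def drive_permission_body(role="writer", domain=None, email=None):
    """Build a request body for the Drive API v3 permissions.create method."""
    if (domain is None) == (email is None):
        raise ValueError("Pass exactly one of domain or email")
    if domain is not None:
        # Shares with every user in the Workspace domain.
        return {"type": "domain", "role": role, "domain": domain}
    # Shares with a single named user instead.
    return {"type": "user", "role": role, "emailAddress": email}


print(drive_permission_body(domain="mydomain.com"))
print(drive_permission_body(role="reader", email="someone@mydomain.com"))
```

Sharing per user is narrower than sharing per domain, at the cost of one permission per person.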

- Navigate to Google Drive and create a folder.

- This folder will be used to host the templates and assets created in the demo.

- Share this folder with the service account address you created in the previous step. Give "Editor" rights to the service account. The share will look like this:

- Take note of the folder ID. Go into the folder you created and you will be able to find the ID in the URL. The URL will look like this:

- Open the configuration file app_config.toml - line 39 and change to your folder ID.

- IMPORTANT: Also share this folder with people who will be using the code.

- Copy the content of templates to this newly created folder.

- For the Google Slides template ([template] Marketing Assets):

  - From the Google Drive folder, open the file in Google Slides.

  - In Google Slides, click on File and Save as Google Slides. Take note of the Slides ID from the URL.

  - Open the configuration file app_config.toml - line 40 and change to your Slides ID.

- For the Google Docs template ([template] Gen AI for Marketing Google Doc Template):

  - From the Google Drive folder, open the file in Google Docs.

  - In Google Docs, click on File and Save as Google Docs. Take note of the Docs ID from the URL.

  - Open the configuration file app_config.toml - line 41 and change to your Docs ID.

- For the Google Sheets template ([template] GenAI for Marketing):

  - From the Google Drive folder, open the file in Google Sheets.

  - In Google Sheets, click on File and Save as Google Sheets. Take note of the Sheets ID from the URL.

  - Open the configuration file app_config.toml - line 42 and change to your Sheets ID.
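The folder, Slides, Docs, and Sheets IDs in the steps above are all path segments of the browser URL. A small helper can pull them out; this is a sketch that assumes the usual URL shapes (drive.google.com/drive/folders/ID and docs.google.com/presentation|document|spreadsheets/d/ID), and the IDs in the example are made up.

```python
import re

# Matches the ID segment that follows the folder or editor path prefix.
_ID_PATTERN = re.compile(
    r"/(?:drive/(?:u/\d+/)?folders"
    r"|presentation/d|document/d|spreadsheets/d)/([A-Za-z0-9_-]+)"
)


def extract_google_id(url):
    """Return the Drive folder or Slides/Docs/Sheets file ID found in a URL."""
    match = _ID_PATTERN.search(url)
    if match is None:
        raise ValueError(f"No Drive or Docs ID found in: {url}")
    return match.group(1)


# Made-up IDs, for illustration only.
print(extract_google_id("https://drive.google.com/drive/folders/1AbC_dEf-123"))
print(extract_google_id("https://docs.google.com/presentation/d/1XyZ/edit#slide=id.p"))
```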

- Navigate to the /backend_apis folder

cd ./genai-for-marketing/backend_apis/

- Open the Dockerfile - line 20 and include your project id where indicated.

- Build and deploy the Docker image to Cloud Run.

gcloud run deploy genai-marketing --source . --region us-central1 --allow-unauthenticated

- Open the TypeScript file - line 2 and include the URL to your newly created Cloud Run deployment.

Example: https://marketing-image-tlmb7xv43q-uc.a.run.app

Enable Firebase

- Go to https://console.firebase.google.com/

- Select "Add project" and enter your GCP project ID. Make sure it is the same project where you have deployed the resources so far.

- Add Firebase to one of your existing Google Cloud projects

- Confirm Firebase billing plan

- Continue and complete the configuration

After you have a Firebase project, you can register your web app with that project.

In the center of the Firebase console's project overview page, click the Web icon to launch the setup workflow.

If you've already added an app to your Firebase project, click Add app to display the platform options.

- Enter your app's nickname.

- This nickname is an internal, convenience identifier and is only visible to you in the Firebase console.

- Click Register app.

- Copy the information to include in the configuration.

Open the frontend environment file - line 4 and include the Firebase information.

Angular is the framework for the Frontend. Execute the following commands to build your application.

npm install -g @angular/cli

npm install --legacy-peer-deps

cd ./genai-for-marketing/frontend

ng build

Firebase Hosting is used to serve the frontend.

- Install firebase tools

npm install -g firebase-tools

firebase login --no-localhost

Follow the steps presented in the console to log in to Firebase.

- Init hosting

cd frontend/dist/frontend

firebase init hosting

First type your Firebase project and then type browser as the public folder.

Leave the defaults for the rest of the questions.

- Deploy hosting

firebase deploy --only hosting

Navigate to the created URL to access the Gen AI for Marketing app.

Visit the following URL to create a database for Firestore.

Replace your-project-id with your project ID.

https://console.cloud.google.com/datastore/setup?project=your-project-id

- Choose "Native Mode (Recommended)" for the database mode.

- Click Save

Visit the following URL to enable Firebase Authentication.

Replace your-project-id with your project ID.

https://console.firebase.google.com/project/your-project-id/authentication/providers

- Add a new provider by clicking on "Add new provider"

- Choose "Google" and click "enable" and then "Save".
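Both console links above differ only in the project ID, so they can be generated rather than edited by hand. This is a small sketch with hypothetical function names and a hypothetical project ID.

```python
from urllib.parse import quote, urlencode


def firestore_setup_url(project_id):
    """Console URL for creating the Firestore database."""
    query = urlencode({"project": project_id})
    return "https://console.cloud.google.com/datastore/setup?" + query


def firebase_auth_providers_url(project_id):
    """Console URL for the Firebase Authentication providers page."""
    project = quote(project_id, safe="")
    return f"https://console.firebase.google.com/project/{project}/authentication/providers"


# Hypothetical project ID, for illustration only.
print(firestore_setup_url("my-demo-project"))
print(firebase_auth_providers_url("my-demo-project"))
```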

If you have any questions or find any problems with this repository, please report them through GitHub issues.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for genai-for-marketing

Similar Open Source Tools

genai-for-marketing

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

vertex-ai-creative-studio

GenMedia Creative Studio is an application showcasing the capabilities of Google Cloud Vertex AI generative AI creative APIs. It includes features like Gemini for prompt rewriting and multimodal evaluation of generated images. The app is built with Mesop, a Python-based UI framework, enabling rapid development of web and internal apps. The Experimental folder contains stand-alone applications and upcoming features demonstrating cutting-edge generative AI capabilities, such as image generation, prompting techniques, and audio/video tools.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

devika

Devika is an advanced AI software engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective. Devika utilizes large language models, planning and reasoning algorithms, and web browsing abilities to intelligently develop software. Devika aims to revolutionize the way we build software by providing an AI pair programmer who can take on complex coding tasks with minimal human guidance. Whether you need to create a new feature, fix a bug, or develop an entire project from scratch, Devika is here to assist you.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

raggenie

RAGGENIE is a low-code RAG builder tool designed to simplify the creation of conversational AI applications. It offers out-of-the-box plugins for connecting to various data sources and building conversational AI on top of them, including integration with pre-built agents for actions. The tool is open-source under the MIT license, with a current focus on making it easy to build RAG applications and future plans for maintenance, monitoring, and transitioning applications from pilots to production.

azure-search-openai-demo

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access a GPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval. The repo includes sample data so it's ready to try end to end. In this sample application we use a fictitious company called Contoso Electronics, and the experience allows its employees to ask questions about the benefits, internal policies, as well as job descriptions and roles.

vector-vein

VectorVein is a no-code AI workflow software inspired by LangChain and langflow, aiming to combine the powerful capabilities of large language models and enable users to achieve intelligent and automated daily workflows through simple drag-and-drop actions. Users can create powerful workflows without the need for programming, automating all tasks with ease. The software allows users to define inputs, outputs, and processing methods to create customized workflow processes for various tasks such as translation, mind mapping, summarizing web articles, and automatic categorization of customer reviews.

WebCraftifyAI

WebCraftifyAI is a software aid that makes it easy to create and build web pages and content. It is designed to be user-friendly and accessible to people of all skill levels. With WebCraftifyAI, you can quickly and easily create professional-looking websites without having to learn complex coding or design skills.

coral-cloud

Coral Cloud Resorts is a sample hospitality application that showcases Data Cloud, Agents, and Prompts. It provides highly personalized guest experiences through smart automation, content generation, and summarization. The app requires licenses for Data Cloud, Agents, Prompt Builder, and Einstein for Sales. Users can activate features, deploy metadata, assign permission sets, import sample data, and troubleshoot common issues. Additionally, the repository offers integration with modern web development tools like Prettier, ESLint, and pre-commit hooks for code formatting and linting.

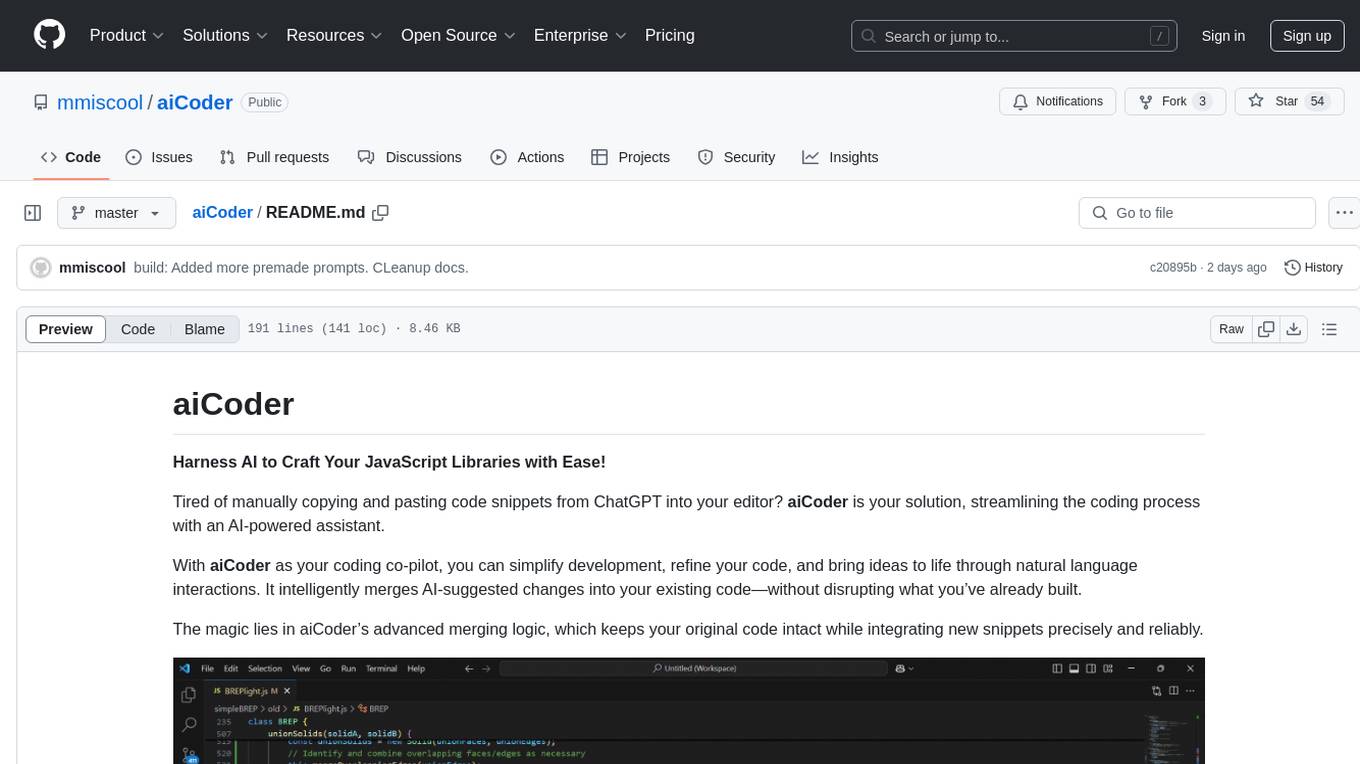

aiCoder

aiCoder is an AI-powered tool designed to streamline the coding process by automating repetitive tasks, providing intelligent code suggestions, and facilitating the integration of new features into existing codebases. It offers a chat interface for natural language interactions, methods and stubs lists for code modification, and settings customization for project-specific prompts. Users can leverage aiCoder to enhance code quality, focus on higher-level design, and save time during development.

easy-web-summarizer

A Python script leveraging advanced language models to summarize webpages and youtube videos directly from URLs. It integrates with LangChain and ChatOllama for state-of-the-art summarization, providing detailed summaries for quick understanding of web-based documents. The tool offers a command-line interface for easy use and integration into workflows, with plans to add support for translating to different languages and streaming text output on gradio. It can also be used via a web UI using the gradio app. The script is dockerized for easy deployment and is open for contributions to enhance functionality and capabilities.

create-tsi

Create TSI is a generative AI RAG toolkit that simplifies the process of creating AI Applications using LlamaIndex with low code. The toolkit leverages LLMs hosted by T-Systems on Open Telekom Cloud to generate bots, write agents, and customize them for specific use cases. It provides a Next.js-powered front-end for a chat interface, a Python FastAPI backend powered by llama-index package, and the ability to ingest and index user-supplied data for answering questions.

contoso-chat

Contoso Chat is a Python sample demonstrating how to build, evaluate, and deploy a retail copilot application with Azure AI Studio using Promptflow with Prompty assets. The sample implements a Retrieval Augmented Generation approach to answer customer queries based on the company's product catalog and customer purchase history. It utilizes Azure AI Search, Azure Cosmos DB, Azure OpenAI, text-embeddings-ada-002, and GPT models for vectorizing user queries, AI-assisted evaluation, and generating chat responses. By exploring this sample, users can learn to build a retail copilot application, define prompts using Prompty, design, run & evaluate a copilot using Promptflow, provision and deploy the solution to Azure using the Azure Developer CLI, and understand Responsible AI practices for evaluation and content safety.

shipstation

ShipStation is an AI-based website and agents generation platform that optimizes landing page websites and generic connect-anything-to-anything services. It enables seamless communication between service providers and integration partners, offering features like user authentication, project management, code editing, payment integration, and real-time progress tracking. The project architecture includes server-side (Node.js) and client-side (React with Vite) components. Prerequisites include Node.js, npm or yarn, Anthropic API key, Supabase account, Tavily API key, and Razorpay account. Setup instructions involve cloning the repository, setting up Supabase, configuring environment variables, and starting the backend and frontend servers. Users can access the application through the browser, sign up or log in, create landing pages or portfolios, and get websites stored in an S3 bucket. Deployment to Heroku involves building the client project, committing changes, and pushing to the main branch. Contributions to the project are encouraged, and the license encourages doing good.

conversational-agent-langchain

This repository contains a Rest-Backend for a Conversational Agent that allows embedding documents, semantic search, QA based on documents, and document processing with Large Language Models. It uses Aleph Alpha and OpenAI Large Language Models to generate responses to user queries, includes a vector database, and provides a REST API built with FastAPI. The project also features semantic search, secret management for API keys, installation instructions, and development guidelines for both backend and frontend components.

For similar tasks

genai-for-marketing

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

discourse-ai

Discourse AI is a plugin for the Discourse forum software that uses artificial intelligence to improve the user experience. It can automatically generate content, moderate posts, and answer questions. This can free up moderators and administrators to focus on other tasks, and it can help to create a more engaging and informative community.

Gemini-API

Gemini-API is a reverse-engineered asynchronous Python wrapper for Google Gemini web app (formerly Bard). It provides features like persistent cookies, ImageFx support, extension support, classified outputs, official flavor, and asynchronous operation. The tool allows users to generate contents from text or images, have conversations across multiple turns, retrieve images in response, generate images with ImageFx, save images to local files, use Gemini extensions, check and switch reply candidates, and control log level.

generative-ai-dart

The Google Generative AI SDK for Dart enables developers to utilize cutting-edge Large Language Models (LLMs) for creating language applications. It provides access to the Gemini API for generating content using state-of-the-art models. Developers can integrate the SDK into their Dart or Flutter applications to leverage powerful AI capabilities. It is recommended to use the SDK for server-side API calls to ensure the security of API keys and protect against potential key exposure in mobile or web apps.

Dough

Dough is a tool for crafting videos with AI, allowing users to guide video generations with precision using images and example videos. Users can create guidance frames, assemble shots, and animate them by defining parameters and selecting guidance videos. The tool aims to help users make beautiful and unique video creations, providing control over the generation process. Setup instructions are available for Linux and Windows platforms, with detailed steps for installation and running the app.

ChaKt-KMP

ChaKt is a multiplatform app built using Kotlin and Compose Multiplatform to demonstrate the use of Generative AI SDK for Kotlin Multiplatform to generate content using Google's Generative AI models. It features a simple chat based user interface and experience to interact with AI. The app supports mobile, desktop, and web platforms, and is built with Kotlin Multiplatform, Kotlin Coroutines, Compose Multiplatform, Generative AI SDK, Calf - File picker, and BuildKonfig. Users can contribute to the project by following the guidelines in CONTRIBUTING.md. The app is licensed under the MIT License.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.