holohub

Central repository for Holoscan Reference Applications

Stars: 151

Holohub is a central repository for the NVIDIA Holoscan AI sensor processing community to share reference applications, operators, tutorials, and benchmarks. It includes example applications, community components, package configurations, and tutorials. Users and developers of the Holoscan platform are invited to reuse and contribute to this repository. The repository provides detailed instructions on prerequisites, building, running applications, and contributing, along with a glossary of terms. A searchable catalog of available components is published at https://nvidia-holoscan.github.io/holohub.

README:

Visit https://nvidia-holoscan.github.io/holohub for a searchable catalog of all available components.

This is a central repository for the NVIDIA Holoscan AI sensor processing community to share reference applications, operators, tutorials and benchmarks. We invite users and developers of the Holoscan platform to reuse and contribute to this repository.

This repository is a collection of applications and extensions created by the Holoscan AI sensor processing community. The following directories make up the core of this repo:

- Example applications: Visit applications to explore an evolving collection of example applications built on the NVIDIA Holoscan platform. Examples are available from NVIDIA, partners, and community collaborators, and provide a demonstration of the SDK capabilities.

- Community components: Visit operators and gxf_extensions to explore reusable Holoscan modules.

- Package configurations: Visit pkg for a list of Debian packages to generate, to distribute operators and applications for easier development.

- Tutorials: Visit tutorials for extended walkthroughs and tips for the Holoscan platform.

- Benchmarks: Visit benchmarks for performance benchmarks, tools, and examples to evaluate the performance of Holoscan applications.

Visit the Holoscan SDK User Guide to learn more about the NVIDIA Holoscan AI sensor processing platform. You can also chat with the Holoscan-GPT Large Language Model to learn about using Holoscan SDK, ask questions, and get code help. Holoscan-GPT requires an OpenAI account.

You will need a platform supported by NVIDIA Holoscan SDK. Refer to the Holoscan SDK User Guide for the latest requirements. In general, Holoscan supported platforms include:

- An x64 PC with an Ubuntu operating system and an NVIDIA GPU; or

- A supported NVIDIA ARM development kit.

Individual examples and operators in this repo may have additional platform requirements. For instance, some examples may support only ARM platforms.
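As a quick sanity check against the two platform families listed above, a minimal sketch like the following reports the CPU architecture and whether an NVIDIA driver utility is visible. The helper name `platform_family` is illustrative and not part of Holohub; the Holoscan SDK User Guide remains the authority on supported platforms.

```shell
#!/bin/sh
# Map a `uname -m` machine string to the platform families named above.
# platform_family is a hypothetical helper, not part of the holohub script.
platform_family() {
    case "$1" in
        x86_64|amd64)  echo "x64" ;;
        aarch64|arm64) echo "arm" ;;
        *)             echo "unsupported" ;;
    esac
}

echo "CPU architecture: $(uname -m) ($(platform_family "$(uname -m)"))"

# nvidia-smi is installed with the NVIDIA driver on discrete-GPU systems.
# Some iGPU dev kits may not provide it, so a miss here is only a hint.
if command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi found"
else
    echo "nvidia-smi not found on PATH"
fi
```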

You may choose to build applications and operators in a containerized development environment or in your native environment.

We strongly recommend new users follow our Container Build instructions to set up a container for development.

If you prefer to build locally without Docker, see our Native Build instructions.

Once your development environment is configured, you may move on to Building the Holohub components you are interested in.

NOTE: Several applications and operators require additional dependencies beyond the basic prerequisites listed above. Please refer to the README of the specific application or operator for detailed dependency information before attempting to build or run it.

To build and run in a containerized environment you will need:

- the NVIDIA Container Toolkit (v1.12.2 or later)

- Docker, including the buildx plugin (docker-buildx-plugin)

- git version control

- (optional) Python 3.10+ on the host machine to run the holohub infrastructure script

You will also need to set up your NVIDIA NGC credentials at ngc.nvidia.com.
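The host prerequisites above can be checked with a short script. This is a sketch, not part of Holohub: the `version_ge` helper is a hypothetical name, it assumes a `sort` that supports `-V` (as on Ubuntu), and the version passed in the last step is a stand-in for whatever `nvidia-ctk --version` prints on your machine.

```shell
#!/bin/sh
# version_ge A B: succeed when dotted version A is greater than or equal to B.
# Relies on `sort -V` (version sort), available in GNU coreutils.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Report whether each host tool from the list above is on PATH.
# nvidia-ctk is the CLI installed by the NVIDIA Container Toolkit.
for tool in docker git python3 nvidia-ctk; do
    if command -v "$tool" >/dev/null 2>&1; then
        echo "found:   $tool"
    else
        echo "missing: $tool"
    fi
done

# The buildx plugin is exposed as a docker subcommand.
if docker buildx version >/dev/null 2>&1; then
    echo "found:   docker buildx"
else
    echo "missing: docker buildx"
fi

# Example comparison against the minimum toolkit version stated above.
# Replace 1.14.0 with the version reported by `nvidia-ctk --version`.
if version_ge "1.14.0" "1.12.2"; then
    echo "1.14.0 satisfies >= 1.12.2"
fi
```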

Clone the repository to your local system:

git clone https://www.github.com/nvidia-holoscan/holohub.git

cd holohub

Alternatively, download sources as a ZIP archive from the GitHub homepage.

The easiest way to build and run Holohub applications is to use the ./holohub run command.

./holohub run <application_name>

If you want to use a specific base image for the application, you can use the --base-img option.

./holohub run --base-img <base_image> <application_name>

NOTE: The ./holohub run command is not supported for all applications and operators, especially applications that require manual configuration or additional datasets. Please refer to the README of each application or operator for more information.

If you want a more detailed command to build and run a specific application, please follow the instructions below.

Holohub provides a default development container that can be used to build and run applications. However, several applications and operators require specific dependencies that are not available in the default development container and are instead provided by dedicated Dockerfiles. Please refer to the README of each application or operator for more information.

Run the following command to build the development container for a given project. The build may take a few minutes.

./holohub build-container [project_name]

Check to verify that the image is created:

$ docker images
REPOSITORY   TAG               IMAGE ID       CREATED         SIZE
...
holohub      ngc-v3.5.0-dgpu   c93e9f90a263   1 minutes ago   19.1GB
...

Note: The holohub command line program will by default detect if the system is using an iGPU (integrated GPU) or a dGPU (discrete GPU) and use NGC's Holoscan SDK container for the Container build. See Advanced Container Build Options if you would like to use an older version of the SDK as a custom base image.
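On a machine with many images, it can be easier to filter the docker images listing than to scan it by eye. The sketch below post-processes the table output shown above. The helper name `holohub_tags` is hypothetical, and it assumes the image repository is named holohub as in the sample listing.

```shell
#!/bin/sh
# holohub_tags: read `docker images` output on stdin and print the TAG column
# of every row whose REPOSITORY column is exactly "holohub".
# (Hypothetical helper; it only post-processes docker's table output.)
holohub_tags() {
    awk '$1 == "holohub" { print $2 }'
}

# Typical use, guarded so the sketch is harmless where Docker is absent.
if command -v docker >/dev/null 2>&1; then
    docker images | holohub_tags
fi
```

Running `docker images holohub` restricts the listing to that repository directly. An empty result from either form suggests the container build did not complete.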

See the Developer Reference document for additional options.

Launch the Docker container environment:

./holohub run-container [my_project]

You are now ready to build Holohub operators, applications, or packages!

Note: The run-container option uses the default development container, built from the Holoscan SDK container on NGC for the local GPU. The script also checks for available video devices (V4L2, AJA capture boards, Deltacast capture boards) and for Deltacast's Videomaster SDK, and maps them into the development container.

See also: Advanced Launch Options

The development container has been tested on the following platforms:

- x86_64 workstation with multiple RTX GPUs

- Clara AGX Dev Kit (dGPU mode)

- IGX Orin Dev Kit (dGPU and iGPU mode)

- AGX Orin Dev Kit (iGPU)

Notes for AGX Orin Dev Kit:

(1) On AGX Orin Dev Kit, the launch script adds --privileged and --group-add video to the docker run command so that the reference applications work. Please also make sure that the current user is a member of the video group.

(2) When building Holoscan SDK from source on AGX Orin Dev Kit, add the option --build-args="--cudaarchs all" to the ./holohub build-container command to include support for AGX Orin's iGPU.
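The group membership mentioned in note (1) can be verified before launching the container. The following is a minimal sketch: the helper name `in_group` is hypothetical, and the `usermod` line in the comment is a common Ubuntu approach rather than a step taken from the Holohub documentation.

```shell
#!/bin/sh
# in_group GROUP "LIST": succeed when GROUP appears as a whole word in the
# space-separated LIST (the format printed by `id -nG`).
in_group() {
    for g in $2; do
        [ "$g" = "$1" ] && return 0
    done
    return 1
}

if in_group video "$(id -nG)"; then
    echo "current user is in the video group"
else
    echo "current user is NOT in the video group"
    # One common fix on Ubuntu (log out and back in afterwards):
    #   sudo usermod -aG video "$USER"
fi
```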

Make sure you have either launched your development container or set up your local environment before attempting to build Holohub components.

This repository provides a convenience holohub script to abstract some of the CMake build process below.

Run the following to list existing components available to build:

./holohub list

Then run the following to build the component of your choice:

# Build using the component name

./holohub build <package|application|operator>

# Ex: ./holohub build endoscopy_tool_tracking

The build artifacts will be created under ./build/<component_name> by default to isolate them from other components which might have different build environment requirements. You can override this behavior and other defaults; see ./holohub build --help for more details.

To list all available applications you can run the following command:

./holohub list

Then you can run the application using the command:

./holohub run <application>

# Ex: ./holohub run endoscopy_tool_tracking

Several applications are implemented in both C++ and Python. You can request a specific implementation as an argument to the ./holohub run command, or omit the argument to use the default language. For instance, the following command runs the Python implementation of the endoscopy tool tracking application:

./holohub run endoscopy_tool_tracking --language=python

The run script reads the "run" command from the metadata.json file for a given application and runs from the "workdir" directory. Make sure you build the application (if applicable) before running it.
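To see what the run script would pick up for an application, the "run" entry of its metadata.json can be printed before launching. The sketch below is an illustration only: the helper name `show_run_entry` is hypothetical, the nested layout it searches for is an assumption rather than the documented schema, and it uses the host's python3 for JSON parsing.

```shell
#!/bin/sh
# show_run_entry FILE: print the "command" and "workdir" fields of the first
# "run" object found anywhere in a metadata.json file.
# ASSUMPTION: "run" is a JSON object holding "command" and "workdir" keys;
# check a real metadata.json for the actual schema.
show_run_entry() {
    python3 - "$1" <<'EOF'
import json, sys

def find_run(node):
    """Depth-first search for the first dict stored under a "run" key."""
    if isinstance(node, dict):
        if isinstance(node.get("run"), dict):
            return node["run"]
        for value in node.values():
            found = find_run(value)
            if found is not None:
                return found
    elif isinstance(node, list):
        for value in node:
            found = find_run(value)
            if found is not None:
                return found
    return None

with open(sys.argv[1]) as fh:
    run = find_run(json.load(fh)) or {}
print("command:", run.get("command", "<none>"))
print("workdir:", run.get("workdir", "<none>"))
EOF
}
```

Call it as `show_run_entry path/to/metadata.json`, substituting the path of the application's own metadata file.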

You can run the command below to reset your build directory:

./holohub clear-cache

In some cases you may also want to clear out datasets downloaded by applications to the data folder:

rm -rf ./data

Note that many applications supply custom container environments with build and runtime dependencies. Failing to clean the build cache between different applications may result in unexpected behavior where build tools or libraries appear to be broken or missing. Clearing the build cache is a good first check to address those issues.
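When switching between applications, clearing the cache and rebuilding can be chained in one step. This is a sketch: `clean_build` and the `HOLOHUB_CMD` variable are hypothetical names introduced here, and the function only sequences the two commands documented above.

```shell
#!/bin/sh
# Path to the holohub script; overridable so the sketch can be dry-run.
HOLOHUB_CMD="${HOLOHUB_CMD:-./holohub}"

# clean_build COMPONENT: clear the build cache, then build COMPONENT.
# The build step runs only if clearing the cache succeeded.
clean_build() {
    "$HOLOHUB_CMD" clear-cache && "$HOLOHUB_CMD" build "$1"
}
```

For example, `clean_build endoscopy_tool_tracking` from the repository root. Setting `HOLOHUB_CMD=echo` first prints the two commands instead of running them.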

The goal of this repository is to allow engineering teams to easily contribute and share new functionalities and to demonstrate applications. Please review the Contributing Guidelines for more information.

Many applications use the following keyword definitions in their README descriptions:

- <HOLOHUB_SOURCE_DIR>: Path to the source directory

- <HOLOHUB_BUILD_DIR>: Path to the build directory

- <HOLOSCAN_INSTALL_DIR>: Path to the installation directory of Holoscan SDK

- <DATA_DIR>: Path to the top level directory containing the datasets for the reference applications

- <MODEL_DIR>: Path to the directory containing the inference model(s)
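When copying commands from an application README, these keywords have to be replaced with real paths. The sketch below shows one way to do that substitution. The default locations are assumptions based on the ./build and ./data folders mentioned earlier, and `expand_placeholders` is a hypothetical helper, not a Holohub tool.

```shell
#!/bin/sh
# ASSUMPTION: example locations only; adjust to your checkout and install.
HOLOHUB_SOURCE_DIR="${HOLOHUB_SOURCE_DIR:-$PWD}"
HOLOHUB_BUILD_DIR="${HOLOHUB_BUILD_DIR:-$HOLOHUB_SOURCE_DIR/build}"
DATA_DIR="${DATA_DIR:-$HOLOHUB_SOURCE_DIR/data}"

# expand_placeholders: read text on stdin and replace the README keywords
# with the values above. Keywords without a value here (such as
# <HOLOSCAN_INSTALL_DIR> and <MODEL_DIR>) are left untouched.
# Paths containing "|" or "&" would need escaping before use with sed.
expand_placeholders() {
    sed -e "s|<HOLOHUB_SOURCE_DIR>|$HOLOHUB_SOURCE_DIR|g" \
        -e "s|<HOLOHUB_BUILD_DIR>|$HOLOHUB_BUILD_DIR|g" \
        -e "s|<DATA_DIR>|$DATA_DIR|g"
}
```

For example, `echo 'ls <DATA_DIR>' | expand_placeholders` prints the command with the data path filled in.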

Refer to additional documentation:

You can find additional information on Holoscan SDK at:

- Holoscan GitHub organization

- Holoscan SDK repository

- Holoscan-GPT (requires an OpenAI account)

- Holoscan Support Forum

Alternative AI tools for holohub

Similar Open Source Tools

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that allows users to ask natural language questions of any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. The application architecture involves uploading a PDF document to an S3 bucket, extracting metadata, converting text to vectors, and using LangChain to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.

unitycatalog

Unity Catalog is an open and interoperable catalog for data and AI, supporting multi-format tables, unstructured data, and AI assets. It offers plugin support for extensibility and interoperates with Delta Sharing protocol. The catalog is fully open with OpenAPI spec and OSS implementation, providing unified governance for data and AI with asset-level access control enforced through REST APIs.

vector-vein

VectorVein is a no-code AI workflow software inspired by LangChain and langflow, aiming to combine the powerful capabilities of large language models and enable users to achieve intelligent and automated daily workflows through simple drag-and-drop actions. Users can create powerful workflows without the need for programming, automating all tasks with ease. The software allows users to define inputs, outputs, and processing methods to create customized workflow processes for various tasks such as translation, mind mapping, summarizing web articles, and automatic categorization of customer reviews.

azure-search-openai-javascript

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval.

guidellm

GuideLLM is a platform for evaluating and optimizing the deployment of large language models (LLMs). By simulating real-world inference workloads, GuideLLM enables users to assess the performance, resource requirements, and cost implications of deploying LLMs on various hardware configurations. This approach ensures efficient, scalable, and cost-effective LLM inference serving while maintaining high service quality. The tool provides features for performance evaluation, resource optimization, cost estimation, and scalability testing.

civitai

Civitai is a platform where people can share their stable diffusion models (textual inversions, hypernetworks, aesthetic gradients, VAEs, and any other crazy stuff people do to customize their AI generations), collaborate with others to improve them, and learn from each other's work. The platform allows users to create an account, upload their models, and browse models that have been shared by others. Users can also leave comments and feedback on each other's models to facilitate collaboration and knowledge sharing.

MCP2Lambda

MCP2Lambda is a server that acts as a bridge between MCP clients and AWS Lambda functions, allowing generative AI models to access and run Lambda functions as tools. It enables Large Language Models (LLMs) to interact with Lambda functions without code changes, providing access to private resources, AWS services, private networks, and the public internet. The server supports autodiscovery of Lambda functions and their invocation by name with parameters. It standardizes AI model access to external tools using the MCP protocol.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

knowledge-graph-of-thoughts

Knowledge Graph of Thoughts (KGoT) is an innovative AI assistant architecture that integrates LLM reasoning with dynamically constructed knowledge graphs (KGs). KGoT extracts and structures task-relevant knowledge into a dynamic KG representation, iteratively enhanced through external tools such as math solvers, web crawlers, and Python scripts. Such structured representation of task-relevant knowledge enables low-cost models to solve complex tasks effectively. The KGoT system consists of three main components: the Controller, the Graph Store, and the Integrated Tools, each playing a critical role in the task-solving process.

AgentIQ

AgentIQ is a flexible library designed to seamlessly integrate enterprise agents with various data sources and tools. It enables true composability by treating agents, tools, and workflows as simple function calls. With features like framework agnosticism, reusability, rapid development, profiling, observability, evaluation system, user interface, and MCP compatibility, AgentIQ empowers developers to move quickly, experiment freely, and ensure reliability across agent-driven projects.

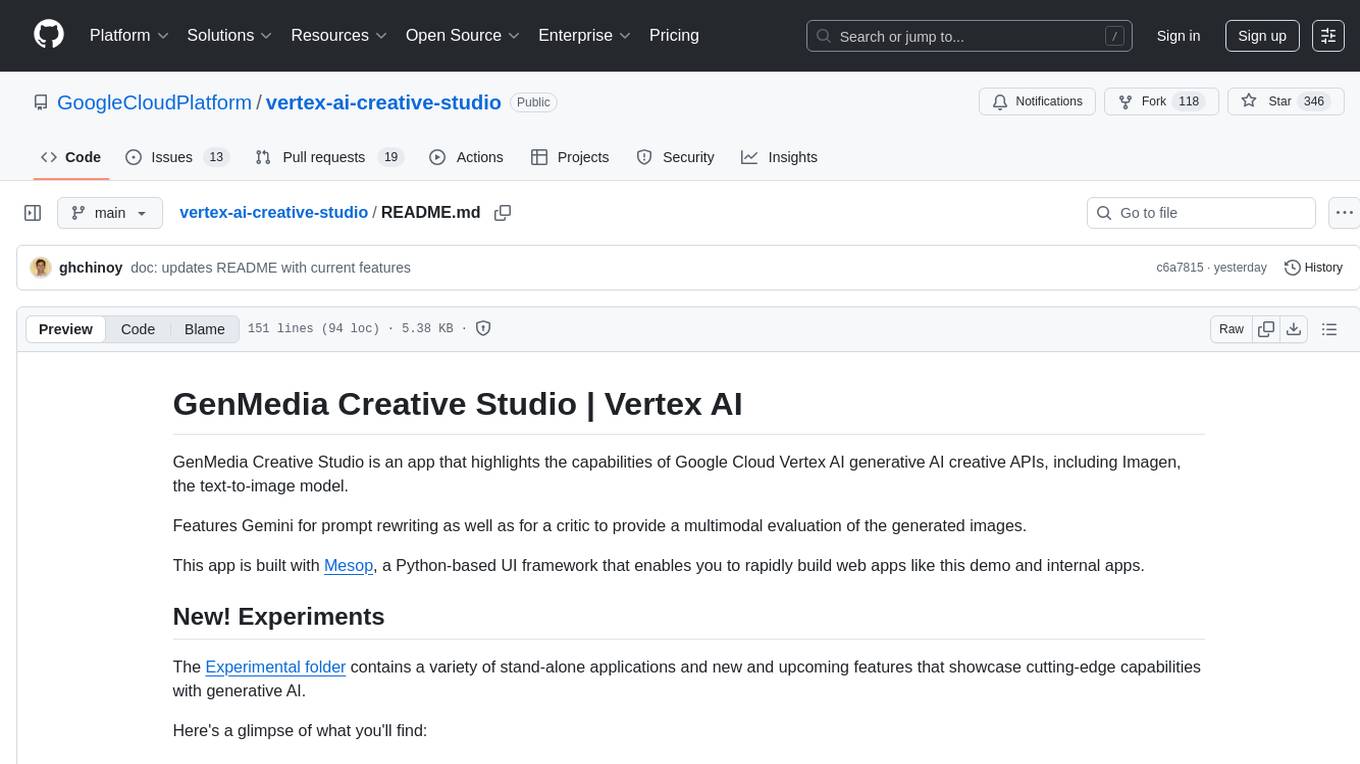

vertex-ai-creative-studio

GenMedia Creative Studio is an application showcasing the capabilities of Google Cloud Vertex AI generative AI creative APIs. It includes features like Gemini for prompt rewriting and multimodal evaluation of generated images. The app is built with Mesop, a Python-based UI framework, enabling rapid development of web and internal apps. The Experimental folder contains stand-alone applications and upcoming features demonstrating cutting-edge generative AI capabilities, such as image generation, prompting techniques, and audio/video tools.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

For similar tasks

python-tutorial-notebooks

This repository contains Jupyter-based tutorials for NLP, ML, AI in Python for classes in Computational Linguistics, Natural Language Processing (NLP), Machine Learning (ML), and Artificial Intelligence (AI) at Indiana University.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

MoonshotAI-Cookbook

The MoonshotAI-Cookbook provides example code and guides for accomplishing common tasks with the MoonshotAI API. To run these examples, you'll need a MoonshotAI account and associated API key. Most code examples are written in Python, though the concepts can be applied in any language.

AHU-AI-Repository

This repository is dedicated to the learning and exchange of resources for the School of Artificial Intelligence at Anhui University. Notes will be published on this website first: https://www.aoaoaoao.cn and will be synchronized to the repository regularly. You can also contact me at [email protected].

modern_ai_for_beginners

This repository provides a comprehensive guide to modern AI for beginners, covering both theoretical foundations and practical implementation. It emphasizes the importance of understanding both the mathematical principles and the code implementation of AI models. The repository includes resources on PyTorch, deep learning fundamentals, mathematical foundations, transformer-based LLMs, diffusion models, software engineering, and full-stack development. It also features tutorials on natural language processing with transformers, reinforcement learning, and practical deep learning for coders.

Building-AI-Applications-with-ChatGPT-APIs

This repository is for the book 'Building AI Applications with ChatGPT APIs' published by Packt. It provides code examples and instructions for mastering ChatGPT, Whisper, and DALL-E APIs through building innovative AI projects. Readers will learn to develop AI applications using ChatGPT APIs, integrate them with frameworks like Flask and Django, create AI-generated art with DALL-E APIs, and optimize ChatGPT models through fine-tuning.

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

lingoose

LinGoose is a modular Go framework designed for building AI/LLM applications. It offers the flexibility to import only the necessary modules, abstracts features for customization, and provides a comprehensive solution for developing AI/LLM applications from scratch. The framework simplifies the process of creating intelligent applications by allowing users to choose preferred implementations or create their own. LinGoose empowers developers to leverage its capabilities to streamline the development of cutting-edge AI and LLM projects.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.