geti-sdk

Software Development Kit (SDK) for the Intel® Geti™ platform for Computer Vision AI model training.

Stars: 78

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

README:

Welcome to the Intel® Geti™ SDK! The Intel® Geti™ platform enables teams to rapidly develop AI models. The platform reduces the time needed to build models by easing the complexities of model development and harnessing greater collaboration between teams. Most importantly, the platform unlocks faster time-to-value for digitization initiatives with AI.

The Intel® Geti™ SDK is a python package which contains tools to interact with an Intel® Geti™ server via the REST API. It provides functionality for:

- Project creation from annotated datasets on disk

- Project downloading (images, videos, configuration, annotations, predictions and models)

- Project creation and upload from a previous download

- Deploying a project for local inference with OpenVINO

- Getting and setting project and model configuration

- Launching and monitoring training jobs

- Media upload and prediction

This repository also contains a set of (tutorial style) Jupyter notebooks that demonstrate how to use the SDK. We highly recommend checking them out to get a feeling for use cases for the package.

Using an environment manager such as miniforge or venv to create a new Python environment before installing the Intel® Geti™ SDK and its requirements is highly recommended.

NOTE: If you have installed multiple versions of Python, use `py -3.9 -m venv <env_name>` when creating your virtual environment to specify a supported version (in this case 3.9). Once you have activated the virtual environment with `<venv_path>/Scripts/activate`, make sure to upgrade pip to the latest version using `python -m pip install --upgrade pip wheel setuptools`.

Make sure to set up your environment using one of the supported Python versions for your operating system, as indicated in the table below.

| | Python <= 3.8 | Python 3.9 | Python 3.10 | Python 3.11 | Python 3.12 | Python 3.13 |
|---|---|---|---|---|---|---|
| Linux | ❌ | ✔️ | ✔️ | ✔️ | ✔️ | ❌ |
| Windows | ❌ | ✔️ | ✔️ | ✔️ | ✔️ | ❌ |
| MacOS | ❌ | ✔️ | ✔️ | ✔️ | ✔️ | ❌ |
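As a quick sanity check before installing, the version range from the table can be tested from inside the interpreter. The sketch below is illustrative only: the helper name `is_supported_python` is hypothetical, and the bounds are copied from the table rather than read from the package metadata.

```python
import sys

# Supported range per the table above: Python 3.9 through 3.12 (inclusive).
SUPPORTED_MIN = (3, 9)
SUPPORTED_MAX = (3, 12)


def is_supported_python(version_info=None):
    """Return True if the (major, minor) version falls in the supported range."""
    if version_info is None:
        version_info = sys.version_info
    major_minor = tuple(version_info[:2])
    return SUPPORTED_MIN <= major_minor <= SUPPORTED_MAX


if __name__ == "__main__":
    if is_supported_python():
        print("This Python version is listed as supported.")
    else:
        print("This Python version is not listed as supported; use 3.9 to 3.12.")
```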

Once you have created and activated a new environment, follow the steps below to install the package.

Use `pip install geti-sdk` to install the SDK from the Python Package Index (PyPI). To install a specific version (for instance v1.5.0), use the command `pip install geti-sdk==1.5.0`.
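After installing, it can be useful to confirm which version ended up in the environment. The following sketch uses only the standard library; the helper name `installed_version` is hypothetical, and `geti-sdk` is the distribution name used in the pip commands above.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(distribution_name):
    """Return the installed version string, or None if the package is absent."""
    try:
        return version(distribution_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("geti-sdk")
    print("geti-sdk version:", found if found is not None else "not installed")
```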

Alternatively, to install the SDK from source:

- Download or clone the repository and navigate to the root directory of the repo in your terminal.

- Base installation: Within this directory, install the SDK using `pip install .`. This command will install the package and its base dependencies in your environment.

- Notebooks installation (Optional): If you want to be able to run the notebooks, make sure to install the extra requirements using `pip install .[notebooks]`. This will install both the SDK and all other dependencies needed to run the notebooks in your environment.

- Development installation (Optional): If you plan on running the tests or want to build the documentation, you can install the package's extra requirements using, for example, `pip install -e .[dev]`. The valid options for the extra requirements are `[dev, docs, notebooks]`, corresponding to the following functionality:

  - `dev`: Install requirements to run the test suite on your local machine.

  - `notebooks`: Install requirements to run the Jupyter notebooks in the `notebooks` folder in this repository.

  - `docs`: Install requirements to build the documentation for the SDK from source on your machine.

The SDK contains example code in various forms to help you get familiar with the package.

- Code examples are short snippets that demonstrate how to perform several common tasks. They also show how to configure the SDK to connect to your Intel® Geti™ server.

- Jupyter notebooks are tutorial-style notebooks that cover nearly the full SDK functionality. These are the recommended way to get started with the SDK.

- Example scripts are more extensive scripts that cover more advanced usage than the code examples. Have a look at these if you don't like Jupyter.

The package provides a main class `Geti` that can be used for the following use cases.

To establish a connection between the SDK running on your local machine and the Intel® Geti™ platform running on a remote server, the `Geti` class needs to know the hostname or IP address of the server, and it needs some form of authentication. Instantiating the `Geti` class will establish the connection and perform authentication.

- Personal Access Token

  The recommended authentication method is the 'Personal Access Token'. The token can be obtained by following the steps below:

  - Open the Intel® Geti™ user interface in your browser.

  - Click on the `User` menu in the top right corner of the page. The menu is accessible from any page inside the Intel® Geti™ interface.

  - In the dropdown menu that follows, click on `Personal access token`, as shown in the image below.

  - In the screen that follows, go through the steps to create a token.

  - Make sure to copy the token value!

  Once you have created a personal access token, it can be passed to the `Geti` class as follows:

      from geti_sdk import Geti

      geti = Geti(
          host="https://your_server_hostname_or_ip_address",
          token="your_personal_access_token"
      )

- User Credentials

  NOTE: For optimal security, using the token method outlined above is recommended.

  As an alternative to the token, your username and password can also be used to connect to the server. They can be passed as follows:

      from geti_sdk import Geti

      geti = Geti(
          host="https://your_server_hostname_or_ip_address",
          username="dummy_user",
          password="dummy_password"
      )

  Here, `"dummy_user"` and `"dummy_password"` should be replaced by your username and password for the Geti server.

- SSL certificate validation

  By default, the SDK verifies the SSL certificate of your server before establishing a connection over HTTPS. If the certificate can't be validated, this will result in an error and the SDK will not be able to connect to the server.

  However, this may not be appropriate or desirable in all cases, for instance if your Geti server does not have a certificate because you are running it in a private network environment. In that case, certificate validation can be disabled by passing `verify_certificate=False` to the `Geti` constructor. Please only disable certificate validation in a secure environment!
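To keep tokens and passwords out of source files, the constructor arguments described above can be assembled from environment variables. This is a minimal sketch, not part of the SDK: the function name and the environment variable names (`GETI_HOST`, `GETI_TOKEN`, `GETI_USERNAME`, `GETI_PASSWORD`, `GETI_VERIFY_CERTIFICATE`) are hypothetical, and only the keyword names `host`, `token`, `username`, `password` and `verify_certificate` come from the text above.

```python
import os


def geti_connection_kwargs(env=None):
    """Build keyword arguments for the Geti constructor from environment variables.

    The variable names are hypothetical; only the keyword names are taken
    from the README text.
    """
    env = os.environ if env is None else env
    host = env.get("GETI_HOST")
    if not host:
        raise ValueError("GETI_HOST must be set to the server hostname or IP address")

    kwargs = {"host": host}
    token = env.get("GETI_TOKEN")
    if token:
        # Prefer the personal access token, which is the recommended method.
        kwargs["token"] = token
    elif env.get("GETI_USERNAME") and env.get("GETI_PASSWORD"):
        kwargs["username"] = env["GETI_USERNAME"]
        kwargs["password"] = env["GETI_PASSWORD"]
    else:
        raise ValueError("Set GETI_TOKEN, or both GETI_USERNAME and GETI_PASSWORD")

    # Certificate validation stays enabled unless it is explicitly switched off.
    flag = env.get("GETI_VERIFY_CERTIFICATE", "true").strip().lower()
    if flag in ("0", "false", "no"):
        kwargs["verify_certificate"] = False
    return kwargs
```

With the SDK installed, the result could then be unpacked into the constructor, for example `geti = Geti(**geti_connection_kwargs())`.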

- Project download

  The following Python snippet is a minimal example of how to download a project using `Geti`:

      from geti_sdk import Geti

      geti = Geti(
          host="https://your_server_hostname_or_ip_address",
          token="your_personal_access_token"
      )

      geti.download_project_data(project_name="dummy_project")

  Here, it is assumed that the project with name 'dummy_project' exists on the cluster. The `Geti` instance will create a folder named 'dummy_project' in your current working directory, and download the project parameters, images, videos, annotations, predictions and the active model for the project (including optimized models derived from it) to that folder.

  The method takes the following optional parameters:

  - `target_folder` -- Can be specified to change the directory to which the project data is saved.

  - `include_predictions` -- Set to True to download the predictions for all images and videos in the project. Set to False to not download any predictions.

  - `include_active_model` -- Set to True to download the active model for the project, and any optimized models derived from it. If set to False, no models are downloaded. False by default.

  NOTE: During project downloading the Geti SDK stores data on local disk. If necessary, please apply additional security control to protect downloaded files (e.g., enforce access control, delete sensitive data securely).

- Project upload

  The following Python snippet is a minimal example of how to re-create a project on an Intel® Geti™ server using the data from a previously downloaded project:

      from geti_sdk import Geti

      geti = Geti(
          host="https://your_server_hostname_or_ip_address",
          token="your_personal_access_token"
      )

      geti.upload_project_data(target_folder="dummy_project")

  The parameter `target_folder` must be a valid path to the directory holding the project data. If you want to create the project using a different name than the original project, you can pass an additional parameter `project_name` to the upload method.

The `Geti` instance can be used to either back up a project (by downloading it and later uploading it again to the same cluster), or to migrate a project to a different cluster (download it, and upload it to the target cluster).

To upload or download all projects from a cluster, simply use the `geti.download_all_projects` and `geti.upload_all_projects` methods instead of the single project methods in the code snippets above.
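The migration workflow described above (download from one cluster, upload to another) can be wrapped in a small helper. This is a sketch under stated assumptions: `migrate_project` is a hypothetical name, both arguments are expected to be already connected `Geti` instances, and only the method and parameter names `download_project_data`, `upload_project_data`, `project_name` and `target_folder` are taken from the snippets above.

```python
import tempfile
from pathlib import Path


def migrate_project(source_geti, target_geti, project_name, new_name=None, workdir=None):
    """Download a project from one server and re-create it on another.

    Returns the local folder that holds the downloaded project data.
    """
    if workdir is not None:
        root = Path(workdir)
    else:
        # No working directory given: use a fresh temporary directory.
        root = Path(tempfile.mkdtemp(prefix="geti_"))
    folder = root / project_name

    # Download the project data from the source server into `folder`.
    source_geti.download_project_data(
        project_name=project_name,
        target_folder=str(folder),
    )

    # Re-create the project on the target server, optionally under a new name.
    upload_kwargs = {"target_folder": str(folder)}
    if new_name is not None:
        upload_kwargs["project_name"] = new_name
    target_geti.upload_project_data(**upload_kwargs)
    return folder
```

Passing the same client for both arguments gives the back-up-and-restore case. The downloaded folder is left on disk, so the security note about locally stored project data applies here as well.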

The following code snippet shows how to create a deployment for local inference with OpenVINO:

    import cv2

    from geti_sdk import Geti

    geti = Geti(
        host="https://your_server_hostname_or_ip_address",
        token="your_personal_access_token"
    )

    # Download the model data and create a `Deployment`
    deployment = geti.deploy_project(project_name="dummy_project")

    # Load the inference models for all tasks in the project, for CPU inference
    deployment.load_inference_models(device='CPU')

    # Run inference
    dummy_image = cv2.imread('dummy_image.png')
    prediction = deployment.infer(image=dummy_image)

    # Save the deployment to disk
    deployment.save(path_to_folder="dummy_project")

The `deployment.infer` method takes a numpy image as input.

The `deployment.save` method will save the deployment to the folder named 'dummy_project' on the local disk. The deployment can be reloaded again later using `Deployment.from_folder('dummy_project')`.
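Building on the deployment snippet, the following sketch reloads a saved deployment and runs inference over several images. The helper names are hypothetical, and the import path `geti_sdk.deployment` is an assumption that should be checked against the installed SDK version; the calls `from_folder`, `load_inference_models` and `infer` are the ones shown above.

```python
def load_saved_deployment(folder):
    """Reload a deployment previously written with `deployment.save`."""
    # Imported lazily so this module can be imported without the SDK installed.
    # The import path is an assumption; verify it for your SDK version.
    from geti_sdk.deployment import Deployment

    return Deployment.from_folder(folder)


def infer_images(deployment, images, device="CPU"):
    """Load the inference models once, then run inference on each image.

    `images` is an iterable of numpy images; one prediction is returned per
    image, in the same order.
    """
    deployment.load_inference_models(device=device)
    return [deployment.infer(image=image) for image in images]
```

Loading the models once and reusing the deployment for every image avoids repeating the model loading step per image.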

The `examples` folder contains example scripts, showing various use cases for the package. They can be run by navigating to the `examples` directory in your terminal, and simply running the scripts like any other Python script.

In addition, the `notebooks` folder contains Jupyter notebooks with example use cases for the `geti_sdk`. To run the notebooks, make sure that the requirements for the notebooks are installed in your Python environment. If you have not installed these when you were installing the SDK, you can install them at any time using `pip install -r requirements/requirements-notebooks.txt`.

Once the notebook requirements are installed, navigate to the `notebooks` directory in your terminal. Then, launch JupyterLab by typing `jupyter lab`. This should open your browser and take you to the JupyterLab landing page, with the SDK notebooks open (see the screenshot below).

NOTE: Both the example scripts and the notebooks require access to a server running the Intel® Geti™ platform.

The Geti class provides the following methods:

- `download_project_data` -- Downloads a project by project name (Geti-SDK representation), returns an interactive object.

- `upload_project_data` -- Uploads project (Geti-SDK representation) from a folder.

- `download_all_projects` -- Downloads all projects found on the server.

- `upload_all_projects` -- Uploads all projects found in a specified folder to the server.

- `export_project` -- Exports a project to an archive on disk. This method is useful for creating a backup of a project, or for migrating a project to a different cluster.

- `import_project` -- Imports a project from an archive on disk. This method is useful for restoring a project from a backup, or for migrating a project to a different cluster.

- `export_dataset` -- Exports a dataset to an archive on disk. This method is useful for creating a backup of a dataset, or for migrating a dataset to a different cluster.

- `import_dataset` -- Imports a dataset from an archive on disk. A new project will be created for the dataset. This method is useful for restoring a project from a dataset backup, or for migrating a dataset to a different cluster.

- `upload_and_predict_image` -- Uploads a single image to an existing project on the server, and requests a prediction for that image. Optionally, the prediction can be visualized as an overlay on the image.

- `upload_and_predict_video` -- Uploads a single video to an existing project on the server, and requests predictions for the frames in the video. As with `upload_and_predict_image`, the predictions can be visualized on the frames. The parameter `frame_stride` can be used to control which frames are extracted for prediction.

- `upload_and_predict_media_folder` -- Uploads all media (images and videos) from a folder on local disk to an existing project on the server, and downloads predictions for all uploaded media.

- `deploy_project` -- Downloads the active model for all tasks in the project as an OpenVINO inference model. The resulting `Deployment` can be used to run inference for the project on a local machine. Pipeline inference is also supported.

- `create_project_single_task_from_dataset` -- Creates a single task project on the server, potentially using labels and uploading annotations from an external dataset.

- `create_task_chain_project_from_dataset` -- Creates a task chain project on the server, potentially using labels and uploading annotations from an external dataset.

For further details regarding these methods, please refer to the method documentation, the code snippets, and example scripts provided in this repo.

Please visit the full documentation for a complete API reference.
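To give a feel for the `frame_stride` parameter of `upload_and_predict_video` mentioned in the method list, the sketch below shows the usual meaning of a stride: keep every n-th frame, starting at the first. This is an illustration of the general idea only. The helper is hypothetical, and the SDK's exact frame selection rule is not stated here and may differ.

```python
def strided_frame_indices(total_frames, frame_stride):
    """Return the frame indices kept when taking every `frame_stride`-th frame."""
    if frame_stride < 1:
        raise ValueError("frame_stride must be a positive integer")
    return list(range(0, total_frames, frame_stride))


if __name__ == "__main__":
    # A 10-frame video with a stride of 4 keeps frames 0, 4 and 8.
    print(strided_frame_indices(10, 4))
```

A larger stride means fewer frames are sent for prediction, which trades temporal resolution for speed.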

- Creating projects. You can pass a variable `project_type` to control what kind of tasks will be created in the project pipeline. For example, if you want to create a single task segmentation project, you'd pass `project_type='segmentation'`. For a detection -> segmentation task chain, you can pass `project_type='detection_to_segmentation'`. Please see the scripts in the `examples` folder for examples on how to do this.

- Creating datasets and retrieving dataset statistics.

- Uploading images, videos, annotations for images and video frames and configurations to a project.

- Downloading images, videos, annotations, models and predictions for all images and videos/video frames in a project. Downloading the full project configuration is also supported.

- Setting configuration for a project, like turning auto train on/off and setting number of iterations for all tasks.

- Deploying a project to load OpenVINO inference models for all tasks in the pipeline, and running the full pipeline inference on a local machine.

- Creating and restoring a backup of an existing project, using the code snippets provided above. Only annotations, media and configurations are backed up; models are not.

- Launching and monitoring training jobs is straightforward with the `TrainingClient`. Please refer to the notebook `007_train_project` for instructions.

- Authorization via Personal Access Token is available for both On-Prem and SaaS users.

- Fetching the active dataset.

- Triggering (post-training) model optimization for model quantization and changing model precision.

- Running model tests.

- Benchmarking models to measure inference throughput on different hardware. It allows for quick and easy comparison of inference framerates for different model architectures and precision levels for the specified project.

The following is not (fully) supported yet:

- Model upload

- Prediction upload

- Importing datasets to an existing project: For this, you can use the import functionality from the Intel® Geti™ user interface instead.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for geti-sdk

Similar Open Source Tools

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

cognita

Cognita is an open-source framework to organize your RAG codebase along with a frontend to play around with different RAG customizations. It provides a simple way to organize your codebase so that it becomes easy to test it locally while also being able to deploy it in a production ready environment. The key issues that arise while productionizing RAG system from a Jupyter Notebook are: 1. **Chunking and Embedding Job** : The chunking and embedding code usually needs to be abstracted out and deployed as a job. Sometimes the job will need to run on a schedule or be trigerred via an event to keep the data updated. 2. **Query Service** : The code that generates the answer from the query needs to be wrapped up in a api server like FastAPI and should be deployed as a service. This service should be able to handle multiple queries at the same time and also autoscale with higher traffic. 3. **LLM / Embedding Model Deployment** : Often times, if we are using open-source models, we load the model in the Jupyter notebook. This will need to be hosted as a separate service in production and model will need to be called as an API. 4. **Vector DB deployment** : Most testing happens on vector DBs in memory or on disk. However, in production, the DBs need to be deployed in a more scalable and reliable way. Cognita makes it really easy to customize and experiment everything about a RAG system and still be able to deploy it in a good way. It also ships with a UI that makes it easier to try out different RAG configurations and see the results in real time. You can use it locally or with/without using any Truefoundry components. However, using Truefoundry components makes it easier to test different models and deploy the system in a scalable way. Cognita allows you to host multiple RAG systems using one app. ### Advantages of using Cognita are: 1. A central reusable repository of parsers, loaders, embedders and retrievers. 2. 
Ability for non-technical users to play with UI - Upload documents and perform QnA using modules built by the development team. 3. Fully API driven - which allows integration with other systems. > If you use Cognita with Truefoundry AI Gateway, you can get logging, metrics and feedback mechanism for your user queries. ### Features: 1. Support for multiple document retrievers that use `Similarity Search`, `Query Decompostion`, `Document Reranking`, etc 2. Support for SOTA OpenSource embeddings and reranking from `mixedbread-ai` 3. Support for using LLMs using `Ollama` 4. Support for incremental indexing that ingests entire documents in batches (reduces compute burden), keeps track of already indexed documents and prevents re-indexing of those docs.

bedrock-claude-chatbot

Bedrock Claude ChatBot is a Streamlit application that provides a conversational interface for users to interact with various Large Language Models (LLMs) on Amazon Bedrock. Users can ask questions, upload documents, and receive responses from the AI assistant. The app features conversational UI, document upload, caching, chat history storage, session management, model selection, cost tracking, logging, and advanced data analytics tool integration. It can be customized using a config file and is extensible for implementing specialized tools using Docker containers and AWS Lambda. The app requires access to Amazon Bedrock Anthropic Claude Model, S3 bucket, Amazon DynamoDB, Amazon Textract, and optionally Amazon Elastic Container Registry and Amazon Athena for advanced analytics features.

nx_open

The `nx_open` repository contains open-source components for the Network Optix Meta Platform, used to build products like Nx Witness Video Management System. It includes source code, specifications, and a Desktop Client. The repository is licensed under Mozilla Public License 2.0. Users can build the Desktop Client and customize it using a zip file. The build environment supports Windows, Linux, and macOS platforms with specific prerequisites. The repository provides scripts for building, signing executable files, and running the Desktop Client. Compatibility with VMS Server versions is crucial, and automatic VMS updates are disabled for the open-source Desktop Client.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

holohub

Holohub is a central repository for the NVIDIA Holoscan AI sensor processing community to share reference applications, operators, tutorials, and benchmarks. It includes example applications, community components, package configurations, and tutorials. Users and developers of the Holoscan platform are invited to reuse and contribute to this repository. The repository provides detailed instructions on prerequisites, building, running applications, contributing, and glossary terms. It also offers a searchable catalog of available components on the Holoscan SDK User Guide website.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

knowledge-graph-of-thoughts

Knowledge Graph of Thoughts (KGoT) is an innovative AI assistant architecture that integrates LLM reasoning with dynamically constructed knowledge graphs (KGs). KGoT extracts and structures task-relevant knowledge into a dynamic KG representation, iteratively enhanced through external tools such as math solvers, web crawlers, and Python scripts. Such structured representation of task-relevant knowledge enables low-cost models to solve complex tasks effectively. The KGoT system consists of three main components: the Controller, the Graph Store, and the Integrated Tools, each playing a critical role in the task-solving process.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that allows users to ask natural language questions of any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. The application architecture involves uploading a PDF document to an S3 bucket, extracting metadata, converting text to vectors, and using a LangChain to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.

LARS

LARS is an application that enables users to run Large Language Models (LLMs) locally on their devices, upload their own documents, and engage in conversations where the LLM grounds its responses with the uploaded content. The application focuses on Retrieval Augmented Generation (RAG) to increase accuracy and reduce AI-generated inaccuracies. LARS provides advanced citations, supports various file formats, allows follow-up questions, provides full chat history, and offers customization options for LLM settings. Users can force enable or disable RAG, change system prompts, and tweak advanced LLM settings. The application also supports GPU-accelerated inferencing, multiple embedding models, and text extraction methods. LARS is open-source and aims to be the ultimate RAG-centric LLM application.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

warc-gpt

WARC-GPT is an experimental retrieval augmented generation pipeline for web archive collections. It allows users to interact with WARC files, extract text, generate text embeddings, visualize embeddings, and interact with a web UI and API. The tool is highly customizable, supporting various LLMs, providers, and embedding models. Users can configure the application using environment variables, ingest WARC files, start the server, and interact with the web UI and API to search for content and generate text completions. WARC-GPT is designed for exploration and experimentation in exploring web archives using AI.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

generative-ai-application-builder-on-aws

The Generative AI Application Builder on AWS (GAAB) is a solution that provides a web-based management dashboard for deploying customizable Generative AI (Gen AI) use cases. Users can experiment with and compare different combinations of Large Language Model (LLM) use cases, configure and optimize their use cases, and integrate them into their applications for production. The solution is targeted at novice to experienced users who want to experiment and productionize different Gen AI use cases. It uses LangChain open-source software to configure connections to Large Language Models (LLMs) for various use cases, with the ability to deploy chat use cases that allow querying over users' enterprise data in a chatbot-style User Interface (UI) and support custom end-user implementations through an API.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

For similar tasks

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

lms

The `lms` Command Line Tool for LM Studio is a powerful tool built with `lmstudio.js` that allows users to interact with LM Studio functionalities through the command line interface. It provides a wide range of commands for managing models, starting and stopping servers, creating projects, and streaming logs. Users can easily bootstrap the tool and access detailed information about each subcommand. The tool is designed to enhance the user experience and streamline workflows when working with LM Studio.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

modelence

Modelence is an all-in-one TypeScript framework for startups shipping production apps, aiming to eliminate boilerplate for standard web app features. It provides authentication, database setup, cron jobs, AI observability, and email functionalities. Modelence requires Node.js 20.20 or higher. Developers can create projects, install dependencies, and start the development server quickly. For local development, contributors can clone the repository, install dependencies, build the package, and test changes in a real application. Modelence offers examples for further guidance.

chief

Chief is a tool designed to help users build big projects by breaking work into tasks and running Claude Code in a loop until they're done. It allows users to describe their projects as a series of tasks, with Chief running Claude one task at a time and committing changes per task to maintain a clean git history. Chief is ideal for managing large projects efficiently and collaboratively.

codewalk

CodeWalk is a native cross-platform client for OpenCode server mode, built with Flutter. It provides an AI chat interface for coding interactions, multi-server profile management, session lifecycle management, worktree management, speech-to-text input, and more. The project follows Clean Architecture with Flutter, Dart, Provider for state management, Dio for HTTP client, SharedPreferences for local storage, GetIt for dependency injection, and Material Design 3 for design system.

aipan-netdisk-search

Aipan-Netdisk-Search is a free and open-source web project for searching netdisk resources. It utilizes third-party APIs with IP access restrictions, suggesting self-deployment. The project can be easily deployed on Vercel and provides instructions for manual deployment. Users can clone the project, install dependencies, run it in the browser, and access it at localhost:3001. The project also includes documentation for deploying on personal servers using NUXT.JS. Additionally, there are options for donations and communication via WeChat.

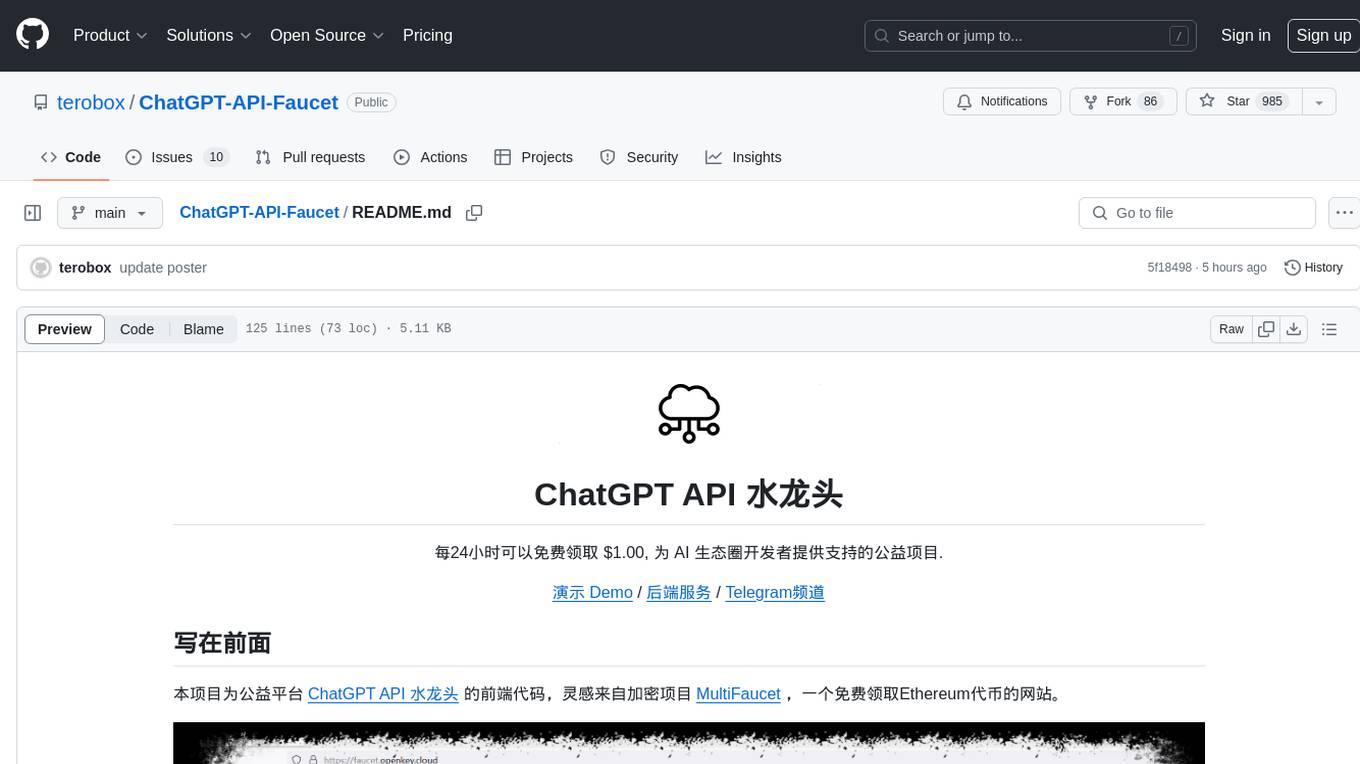

ChatGPT-API-Faucet

ChatGPT API Faucet is the frontend for the public ChatGPT API Faucet platform, inspired by the crypto project MultiFaucet. It allows developers in the AI ecosystem to claim $1.00 for free every 24 hours. It is built with the Next.js framework and the React library, with key components such as _app.tsx for initializing pages, index.tsx for main modifications, and Layout.tsx for defining layout components. To deploy the project, users install dependencies, build and start the project, and then either configure a reverse proxy or access it directly by IP and port; a development server is also available. The tool also supports token balance queries and is related to projects like one-api, ChatGPT-Cost-Calculator, and Poe.Monster. It is licensed under the MIT license.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (Vtubers), in its NVIDIA GPU ("N card") version. It supports fastgpt knowledge-base chat and a complete solution stack for large language models: [fastgpt] + [one-api] + [Xinference]. It connects to bilibili live broadcasts to reply to barrage (bullet) comments and to deliver a welcome speech when viewers enter the stream. Speech synthesis is supported through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. Other supported features include expression control through Vtuber Studio, stable-diffusion-webui painting output to the OBS live broadcast room, NSFW detection for painted images (public-NSFW-y-distinguish), search and image search through duckduckgo (requires a VPN or proxy), image search through Baidu (no VPN or proxy required), an AI reply chat box [html plug-in], AI singing (Auto-Convert-Music), a playlist [html plug-in], dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, an automatic looping sway animation during chat and singing, multi-scene switching, background music switching, automatic day and night scene switching, and open-ended singing and painting requests where the AI judges the content automatically.

![Nightly Tests [Geti latest] Status](https://img.shields.io/github/actions/workflow/status/openvinotoolkit/geti-sdk/nightly-tests-geti-latest.yaml?label=nightly%20tests%20%5BGeti%20latest%5D&link=https%3A%2F%2Fgithub.com%2Fopenvinotoolkit%2Fgeti-sdk%2Factions%2Fworkflows%2Fnightly-tests-geti-latest.yaml)

![Nightly Tests [Geti develop] Status](https://img.shields.io/github/actions/workflow/status/openvinotoolkit/geti-sdk/nightly-tests-geti-develop.yaml?label=nightly%20tests%20%5BGeti%20develop%5D&link=https%3A%2F%2Fgithub.com%2Fopenvinotoolkit%2Fgeti-sdk%2Factions%2Fworkflows%2Fnightly-tests-geti-develop.yaml)