lms

LM Studio CLI

Stars: 4187

The `lms` Command Line Tool for LM Studio is a powerful tool built with `lmstudio.js` that allows users to interact with LM Studio functionalities through the command line interface. It provides a wide range of commands for managing models, starting and stopping servers, creating projects, and streaming logs. Users can easily bootstrap the tool and access detailed information about each subcommand. The tool is designed to enhance the user experience and streamline workflows when working with LM Studio.

README:

lms - Command Line Tool for LM Studio

Built with lmstudio.js

lms ships with LM Studio 0.2.22 and newer.

If you have trouble running the command, try running npx lmstudio install-cli to add it to path.

To check if the bootstrapping was successful, run the following in a 👉 new terminal window 👈:

lmsYou can use lms --help to see a list of all available subcommands.

For details about each subcommand, run lms <subcommand> --help.

Here are some frequently used commands:

-

lms status- To check the status of LM Studio. -

lms server start- To start the local API server. -

lms server stop- To stop the local API server. -

lms ls- To list all downloaded models.-

lms ls --json- To list all downloaded models in machine-readable JSON format.

-

-

lms ps- To list all loaded models available for inferencing.-

lms ps --json- To list all loaded models available for inferencing in machine-readable JSON format.

-

-

lms load- To load a model-

lms load <model path> -y- To load a model with maximum GPU acceleration without confirmation

-

-

lms unload <model identifier>- To unload a model-

lms unload --all- To unload all models

-

-

lms create- To create a new project with LM Studio SDK -

lms log stream- To stream logs from LM Studio

The CLI is part of the lmstudio.js monorepo and cannot be built standalone.

# Clone and build the entire monorepo

git clone https://github.com/lmstudio-ai/lmstudio-js.git --recursive

cd lmstudio-js

npm install

npm run build

# Test your CLI changes

node publish/cli/dist/index.js <subcommand>Example:

node publish/cli/dist/index.js --help

node publish/cli/dist/index.js statusSee CONTRIBUTING.md for more information.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for lms

Similar Open Source Tools

lms

The `lms` Command Line Tool for LM Studio is a powerful tool built with `lmstudio.js` that allows users to interact with LM Studio functionalities through the command line interface. It provides a wide range of commands for managing models, starting and stopping servers, creating projects, and streaming logs. Users can easily bootstrap the tool and access detailed information about each subcommand. The tool is designed to enhance the user experience and streamline workflows when working with LM Studio.

cortex

Nitro is a high-efficiency C++ inference engine for edge computing, powering Jan. It is lightweight and embeddable, ideal for product integration. The binary of nitro after zipped is only ~3mb in size with none to minimal dependencies (if you use a GPU need CUDA for example) make it desirable for any edge/server deployment.

loz

Loz is a command-line tool that integrates AI capabilities with Unix tools, enabling users to execute system commands and utilize Unix pipes. It supports multiple LLM services like OpenAI API, Microsoft Copilot, and Ollama. Users can run Linux commands based on natural language prompts, enhance Git commit formatting, and interact with the tool in safe mode. Loz can process input from other command-line tools through Unix pipes and automatically generate Git commit messages. It provides features like chat history access, configurable LLM settings, and contribution opportunities.

middleware

Middleware is an open-source engineering management tool that helps engineering leaders measure and analyze team effectiveness using DORA metrics. It integrates with CI/CD tools, automates DORA metric collection and analysis, visualizes key performance indicators, provides customizable reports and dashboards, and integrates with project management platforms. Users can set up Middleware using Docker or manually, generate encryption keys, set up backend and web servers, and access the application to view DORA metrics. The tool calculates DORA metrics using GitHub data, including Deployment Frequency, Lead Time for Changes, Mean Time to Restore, and Change Failure Rate. Middleware aims to provide DORA metrics to users based on their Git data, simplifying the process of tracking software delivery performance and operational efficiency.

frontend

Nuclia frontend apps and libraries repository contains various frontend applications and libraries for the Nuclia platform. It includes components such as Dashboard, Widget, SDK, Sistema (design system), NucliaDB admin, CI/CD Deployment, and Maintenance page. The repository provides detailed instructions on installation, dependencies, and usage of these components for both Nuclia employees and external developers. It also covers deployment processes for different components and tools like ArgoCD for monitoring deployments and logs. The repository aims to facilitate the development, testing, and deployment of frontend applications within the Nuclia ecosystem.

cli-agent

Pieces CLI for Developers is a comprehensive command-line interface (CLI) tool designed to interact seamlessly with Pieces OS. It provides functionalities such as asset management, application interaction, and integration with various Pieces OS features. The tool is compatible with Windows 10 or greater, Mac, and Windows operating systems. Users can install the tool by running 'pip install pieces-cli' or 'brew install pieces-cli'. After installation, users can access the tool's functionalities through the terminal by using the 'pieces' command followed by subcommands and options. The tool supports various commands, which can be found in the documentation. Developers can contribute to the project by forking and cloning the repository, setting up a virtual environment, installing dependencies with poetry, and running test cases with pytest and coverage.

orama-core

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced AI capabilities in projects.

ML-Bench

ML-Bench is a tool designed to evaluate large language models and agents for machine learning tasks on repository-level code. It provides functionalities for data preparation, environment setup, usage, API calling, open source model fine-tuning, and inference. Users can clone the repository, load datasets, run ML-LLM-Bench, prepare data, fine-tune models, and perform inference tasks. The tool aims to facilitate the evaluation of language models and agents in the context of machine learning tasks on code repositories.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

svelte-bench

SvelteBench is an LLM benchmark tool for evaluating Svelte components generated by large language models. It supports multiple LLM providers such as OpenAI, Anthropic, Google, and OpenRouter. Users can run predefined test suites to verify the functionality of the generated components. The tool allows configuration of API keys for different providers and offers debug mode for faster development. Users can provide a context file to improve component generation. Benchmark results are saved in JSON format for analysis and visualization.

aioli

Aioli is a library for running genomics command-line tools in the browser using WebAssembly. It creates a single WebWorker to run all WebAssembly tools, shares a filesystem across modules, and efficiently mounts local files. The tool encapsulates each module for loading, does WebAssembly feature detection, and communicates with the WebWorker using the Comlink library. Users can deploy new releases and versions, and benefit from code reuse by porting existing C/C++/Rust/etc tools to WebAssembly for browser use.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

BotSharp-UI

BotSharp UI is a web app for managing agents and conversations. It allows users to build new AI assistants quickly using a Node-based Agent building experience. The project is written in SvelteKit v2 and utilizes BotSharp as the LLM services.

opencharacter

OpenCharacter is an open-source tool that allows users to create and run characters locally with local models or use the hosted version. The stack includes Next.js for frontend, TailwindCSS for styling, Drizzle ORM for database access, NextAuth for authentication, Cloudflare D1 for serverless databases, Cloudflare Pages for hosting, and ShadcnUI as the component library. Users can integrate OpenCharacter with OpenRouter by configuring the OpenRouter API key. The tool is fully scalable, composable, and cost-effective, with powerful tools like Wrangler for database management and migrations. No environment variables are needed, making it easy to use and deploy.

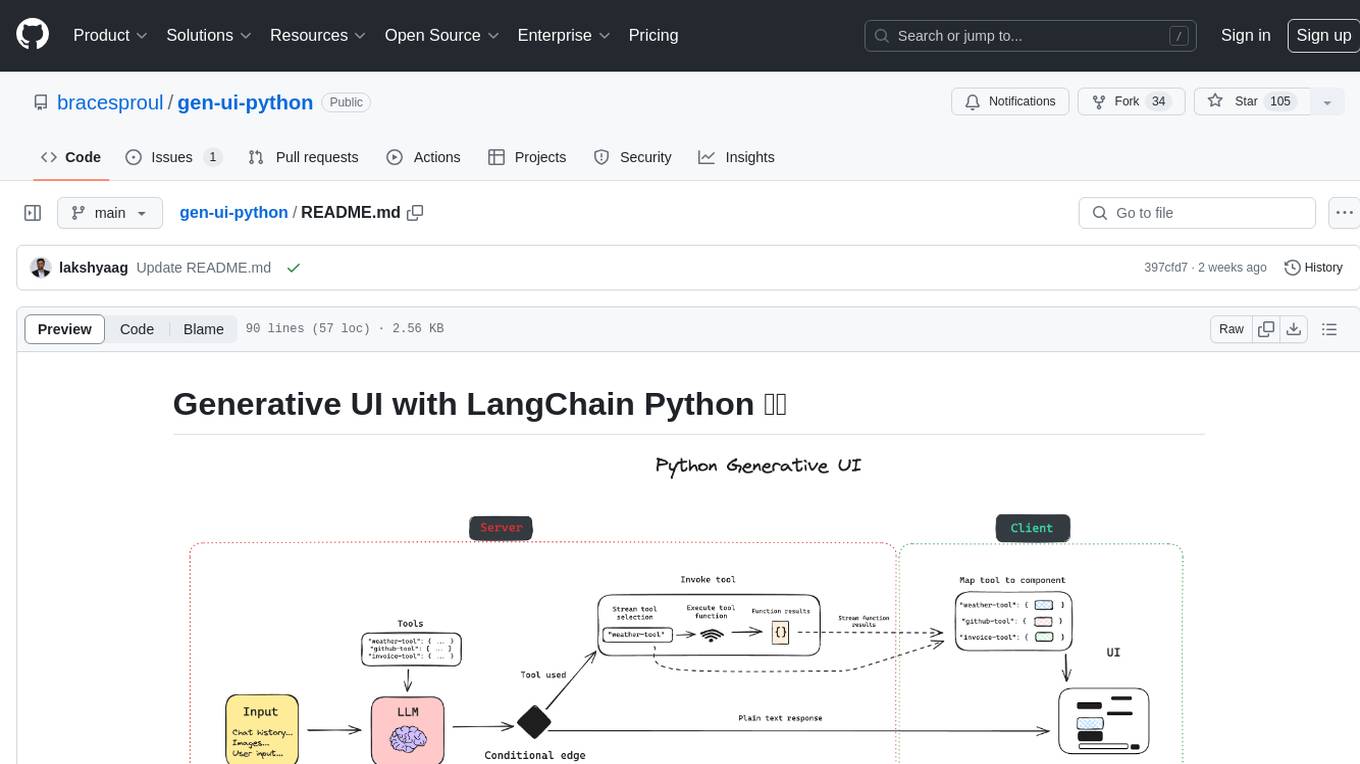

gen-ui-python

This application provides a template for building generative UI applications with LangChain Python. It includes pre-built UI components using Shadcn. Users can play around with gen ui features and customize the UI. The application requires setting environment variables for LangSmith keys, OpenAI API key, GitHub PAT, and Geocode API key. Users can further develop the application by generating React components, building custom components with LLM and Shadcn, using multiple tools and components, updating LangGraph agent, and rendering UI dynamically in different areas on the screen.

For similar tasks

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

lms

The `lms` Command Line Tool for LM Studio is a powerful tool built with `lmstudio.js` that allows users to interact with LM Studio functionalities through the command line interface. It provides a wide range of commands for managing models, starting and stopping servers, creating projects, and streaming logs. Users can easily bootstrap the tool and access detailed information about each subcommand. The tool is designed to enhance the user experience and streamline workflows when working with LM Studio.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

modelence

Modelence is an all-in-one TypeScript framework for startups shipping production apps, aiming to eliminate boilerplate for standard web app features. It provides authentication, database setup, cron jobs, AI observability, and email functionalities. Modelence requires Node.js 20.20 or higher. Developers can create projects, install dependencies, and start the development server quickly. For local development, contributors can clone the repository, install dependencies, build the package, and test changes in a real application. Modelence offers examples for further guidance.

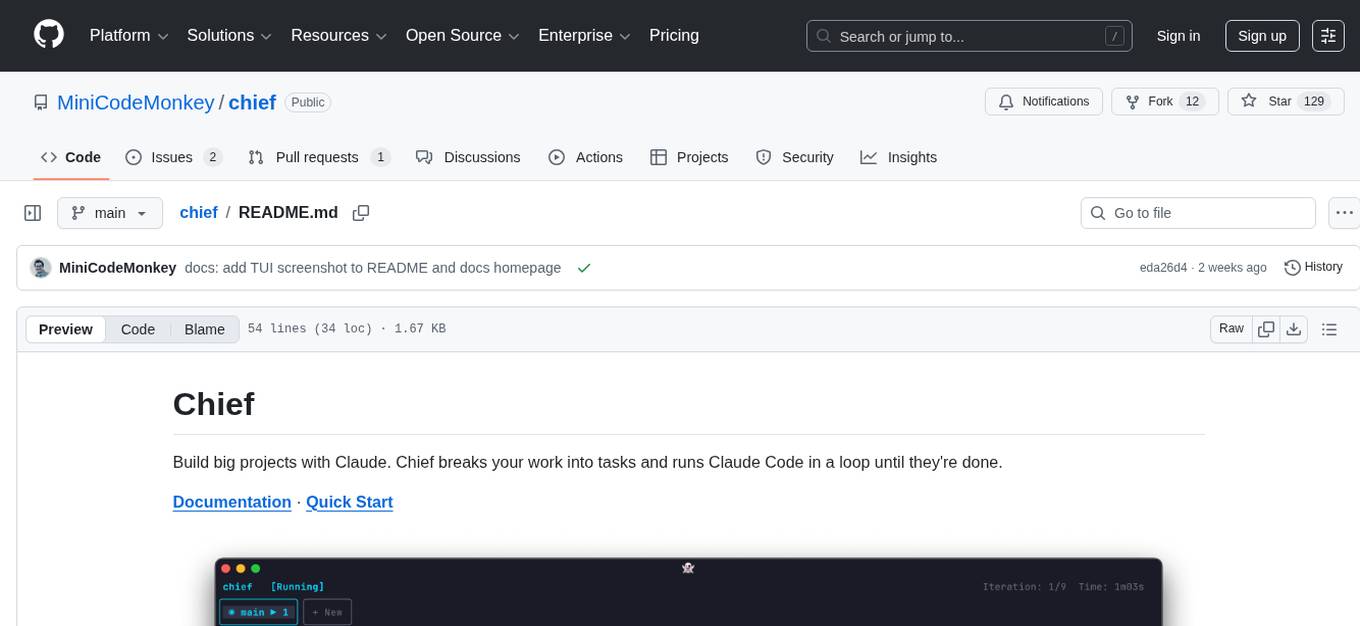

chief

Chief is a tool designed to help users build big projects by breaking work into tasks and running Claude Code in a loop until they're done. It allows users to describe their projects as a series of tasks, with Chief running Claude one task at a time and committing changes per task to maintain a clean git history. Chief is ideal for managing large projects efficiently and collaboratively.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

kitops

KitOps is a packaging and versioning system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using. KitOps simplifies the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. KitOps' ModelKits are a standards-based package for models, their dependencies, configurations, and codebases. ModelKits are portable, reproducible, and work with the tools you already use.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.