bolt-python-ai-chatbot

Bring AI into your workspace using a chatbot powered by Anthropic and OpenAI

Stars: 52

The 'bolt-python-ai-chatbot' is a Slack chatbot app template that allows users to integrate AI-powered conversations into their Slack workspace. Users can interact with the bot in conversations and threads, send direct messages for private interactions, use commands to communicate with the bot, customize bot responses, and store user preferences. The app supports integration with Workflow Builder, custom language models, and different AI providers like OpenAI, Anthropic, and Google Cloud Vertex AI. Users can create user objects, manage user states, and select from various AI models for communication.

README:

This Slack chatbot app template offers a customizable solution for integrating AI-powered conversations into your Slack workspace. Here's what the app can do out of the box:

- Interact with the bot by mentioning it in conversations and threads

- Send direct messages to the bot for private interactions

- Use the /ask-bolty command to communicate with the bot in channels where it hasn't been added

- Utilize a custom function for integration with Workflow Builder to summarize messages in conversations

- Select your preferred API/model from the app home to customize the bot's responses

- Bring Your Own Language Model (BYO LLM) for customization

- Custom FileStateStore creates a file in /data per user to store API/model preferences

Inspired by ChatGPT-in-Slack

Before getting started, make sure you have a development workspace where you have permission to install apps. If you don't have one set up, go ahead and create one.

- To use the OpenAI and Anthropic models, you must have an account with sufficient credits.

- To use the Vertex models, you must have a Google Cloud project with sufficient credits.

- Open https://api.slack.com/apps/new and choose "From an app manifest"

- Choose the workspace you want to install the application to

- Copy the contents of manifest.json into the text box that says *Paste your manifest code here* (within the JSON tab) and click Next

- Review the configuration and click Create

- Click Install to Workspace and Allow on the screen that follows. You'll then be redirected to the App Configuration dashboard.

Before you can run the app, you'll need to store some environment variables.

- Open your app's configuration page from this list, click OAuth & Permissions in the left-hand menu, then copy the Bot User OAuth Token. You will store this in your environment as SLACK_BOT_TOKEN.

- Click Basic Information from the left-hand menu and follow the steps in the App-Level Tokens section to create an app-level token with the connections:write scope. Copy this token. You will store this in your environment as SLACK_APP_TOKEN.

Next, set the gathered tokens as environment variables using the following commands:

# MacOS/Linux

export SLACK_BOT_TOKEN=<your-bot-token>

export SLACK_APP_TOKEN=<your-app-token>

# Windows

set SLACK_BOT_TOKEN=<your-bot-token>

set SLACK_APP_TOKEN=<your-app-token>

Different models from different AI providers are available if the corresponding environment variable is added, as shown in the sections below.
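As a rough illustration of how environment variables can gate which providers are offered, here is a minimal sketch. The variable names come from this README; the provider labels ("anthropic", "openai", "vertex") and both helper functions are hypothetical, and the app's own detection logic may differ.

```python
import os

# Environment variables each provider needs, as named in this README.
# The dictionary keys are illustrative labels, not identifiers from the app.
REQUIRED_ENV = {
    "anthropic": ["ANTHROPIC_API_KEY"],
    "openai": ["OPENAI_API_KEY"],
    "vertex": ["VERTEX_AI_PROJECT_ID", "VERTEX_AI_LOCATION"],
}

SLACK_TOKENS = ["SLACK_BOT_TOKEN", "SLACK_APP_TOKEN"]


def available_providers(env=None):
    """Return providers whose required variables are all set and non-empty."""
    env = os.environ if env is None else env
    return [
        name
        for name, keys in REQUIRED_ENV.items()
        if all(env.get(key) for key in keys)
    ]


def missing_slack_tokens(env=None):
    """Return the Slack token variables that are unset or empty."""
    env = os.environ if env is None else env
    return [key for key in SLACK_TOKENS if not env.get(key)]


if __name__ == "__main__":
    print("Missing Slack tokens:", missing_slack_tokens())
    print("Providers available:", available_providers())
```

Running a check like this before starting the app makes a missing variable easier to spot than a failure at request time.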

To interact with Anthropic models, navigate to your Anthropic account dashboard to create an API key, then export the key as follows:

export ANTHROPIC_API_KEY=<your-api-key>

To use Google Cloud Vertex AI, follow this quick start to create a project for sending requests to the Gemini API, then gather Application Default Credentials with the strategy to match your development environment.

Once your project and credentials are configured, export environment variables to select from Gemini models:

export VERTEX_AI_PROJECT_ID=<your-project-id>

export VERTEX_AI_LOCATION=<location-to-deploy-model>

The project location can be found under Region on the Vertex AI dashboard, along with more details about available Gemini models.

Unlock the OpenAI models from your OpenAI account dashboard by clicking create a new secret key, then export the key like so:

export OPENAI_API_KEY=<your-api-key>

# Clone this project onto your machine

git clone https://github.com/slack-samples/bolt-python-ai-chatbot.git

# Change into this project directory

cd bolt-python-ai-chatbot

# Setup your python virtual environment

python3 -m venv .venv

source .venv/bin/activate

# Install the dependencies

pip install -r requirements.txt

# Start your local server

python3 app.py

# Run flake8 from root directory for linting

flake8 *.py && flake8 listeners/

# Run black from root directory for code formatting

black .

manifest.json is a configuration for Slack apps. With a manifest, you can create an app with a pre-defined configuration, or adjust the configuration of an existing app.

app.py is the entry point for the application and is the file you'll run to start the server. This project aims to keep this file as thin as possible, primarily using it as a way to route inbound requests.

Every incoming request is routed to a "listener". Inside the /listeners directory, we group each listener based on the Slack Platform feature used, so /listeners/commands handles incoming Slash Commands requests, /listeners/events handles Events, and so on.
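The routing idea can be sketched without any Slack dependency. In the example below, MiniApp is a hypothetical stand-in for the app object (it is not the slack_bolt API), and the register_* functions stand in for modules under /listeners/commands and /listeners/events; the handler bodies are placeholders.

```python
from typing import Callable, Dict, Tuple


class MiniApp:
    """Hypothetical stand-in for the app object; not the real slack_bolt API."""

    def __init__(self) -> None:
        self._handlers: Dict[Tuple[str, str], Callable[[dict], str]] = {}

    def command(self, name: str):
        return self._register("command", name)

    def event(self, name: str):
        return self._register("event", name)

    def _register(self, kind: str, name: str):
        def decorator(func: Callable[[dict], str]):
            self._handlers[(kind, name)] = func
            return func

        return decorator

    def dispatch(self, kind: str, name: str, payload: dict) -> str:
        # Look up the listener registered for this request and call it.
        return self._handlers[(kind, name)](payload)


def register_commands(app: MiniApp) -> None:
    """Stands in for a module under /listeners/commands."""

    @app.command("/ask-bolty")
    def ask(payload: dict) -> str:
        return f"command received: {payload['text']}"


def register_events(app: MiniApp) -> None:
    """Stands in for a module under /listeners/events."""

    @app.event("app_mention")
    def mention(payload: dict) -> str:
        return f"mention received: {payload['text']}"


def register_listeners(app: MiniApp) -> None:
    # The entry point stays thin: it only wires the listener groups together.
    register_commands(app)
    register_events(app)


app = MiniApp()
register_listeners(app)
print(app.dispatch("command", "/ask-bolty", {"text": "hello"}))
```

Grouping registration by feature keeps the entry point short, since adding a new listener only touches the module for that feature.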

- ai_constants.py: Defines constants used throughout the AI module.

The ai/providers module contains classes for communicating with different API providers, such as Anthropic, OpenAI, and Vertex AI. To add your own LLM, create a new class for it using base_api.py as an example, then update ai/providers/__init__.py to include and use your new class for API communication.

- __init__.py: This file contains utility functions for handling responses from the provider APIs and retrieving available providers.
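To make the "bring your own LLM" step more concrete, here is a minimal sketch of the pattern: a base class, a custom provider, and a lookup that selects it. Every name below (BaseProvider, EchoProvider, PROVIDERS, get_provider_response, and the method signatures) is an assumption for illustration; the actual interface is whatever base_api.py and ai/providers/__init__.py define.

```python
from abc import ABC, abstractmethod
from typing import Dict, Type


class BaseProvider(ABC):
    """Hypothetical base class; the real interface lives in base_api.py."""

    @abstractmethod
    def get_models(self) -> Dict[str, str]:
        """Return a mapping of model identifier to display name."""

    @abstractmethod
    def generate_response(self, prompt: str, system_content: str) -> str:
        """Return the model's reply to the prompt."""


class EchoProvider(BaseProvider):
    """Toy 'LLM' that echoes the prompt, standing in for a real API client."""

    def get_models(self) -> Dict[str, str]:
        return {"echo-1": "Echo (demo)"}

    def generate_response(self, prompt: str, system_content: str) -> str:
        return f"[{system_content}] {prompt}"


# Hypothetical registry, playing the role the README assigns to
# ai/providers/__init__.py: include the new class so it can be selected.
PROVIDERS: Dict[str, Type[BaseProvider]] = {"echo": EchoProvider}


def get_provider_response(
    provider_name: str,
    prompt: str,
    system_content: str = "You are a helpful assistant.",
) -> str:
    provider = PROVIDERS[provider_name]()
    return provider.generate_response(prompt, system_content)


print(get_provider_response("echo", "Summarize this thread", "demo"))
```

In a real provider, generate_response would call the vendor's client library instead of formatting a string.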

- user_identity.py: This file defines the UserIdentity class for creating user objects. Each object represents a user with the user_id, provider, and model attributes.

- user_state_store.py: This file defines the base class for FileStateStore.

- file_state_store.py: This file defines the FileStateStore class which handles the logic for creating and managing files for each user.

- set_user_state.py: This file creates a user object and uses a FileStateStore to save the user's selected provider to a JSON file.

- get_user_state.py: This file retrieves a user's selected provider from the JSON file created with set_user_state.py.
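The files above describe a per-user JSON store. The sketch below shows one way such a store could work; only the class names UserIdentity and FileStateStore and the user_id, provider, and model attributes come from this README. The method names, the file naming scheme, and the temporary directory (used here in place of /data) are assumptions.

```python
import json
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import Optional


@dataclass
class UserIdentity:
    """A user's selected provider and model."""

    user_id: str
    provider: str
    model: str


class FileStateStore:
    """Keeps one JSON file per user under base_dir (method names are hypothetical)."""

    def __init__(self, base_dir: str) -> None:
        self.base_dir = Path(base_dir)
        self.base_dir.mkdir(parents=True, exist_ok=True)

    def _path(self, user_id: str) -> Path:
        return self.base_dir / f"{user_id}.json"

    def set_state(self, identity: UserIdentity) -> None:
        # Overwrites any previous selection for this user.
        self._path(identity.user_id).write_text(json.dumps(asdict(identity)))

    def get_state(self, user_id: str) -> Optional[UserIdentity]:
        path = self._path(user_id)
        if not path.exists():
            return None
        return UserIdentity(**json.loads(path.read_text()))


# A temporary directory stands in for the /data directory.
store = FileStateStore(tempfile.mkdtemp())
store.set_state(UserIdentity("U123", "openai", "example-model"))
saved = store.get_state("U123")
print(saved)
```

Returning None for an unknown user lets the caller fall back to a default provider instead of handling an exception.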

Only implement OAuth if you plan to distribute your application across multiple workspaces. A separate app_oauth.py file can be found with relevant OAuth settings.

When using OAuth, Slack requires a public URL where it can send requests. In this template app, we've used ngrok. Check out this guide for setting it up.

Start ngrok to access the app on an external network and create a redirect URL for OAuth.

ngrok http 3000

This output should include a forwarding address for http and https (we'll use https). It should look something like the following:

Forwarding https://3cb89939.ngrok.io -> http://localhost:3000

Navigate to OAuth & Permissions in your app configuration and click Add a Redirect URL. The redirect URL should be set to your ngrok forwarding address with the slack/oauth_redirect path appended. For example:

https://3cb89939.ngrok.io/slack/oauth_redirect
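If you script your setup, the redirect URL can be derived from the forwarding address. This is a small sketch; oauth_redirect_url is a hypothetical helper, and the only fixed piece taken from this README is the slack/oauth_redirect path.

```python
from urllib.parse import urlsplit


def oauth_redirect_url(forwarding_address: str) -> str:
    """Append the slack/oauth_redirect path to an https forwarding address."""
    parts = urlsplit(forwarding_address)
    if parts.scheme != "https" or not parts.netloc:
        raise ValueError("expected an https forwarding address")
    # Rebuild from the host only, so a trailing slash does not double up.
    return f"https://{parts.netloc}/slack/oauth_redirect"


print(oauth_redirect_url("https://3cb89939.ngrok.io"))
```

The helper rejects the plain http address on purpose, since the steps above use the https forwarding address.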

Alternative AI tools for bolt-python-ai-chatbot

Similar Open Source Tools

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

aider-composer

Aider Composer is a VSCode extension that integrates Aider into your development workflow. It allows users to easily add and remove files, toggle between read-only and editable modes, review code changes, use different chat modes, and reference files in the chat. The extension supports multiple models, code generation, code snippets, and settings customization. It has limitations such as lack of support for multiple workspaces, Git repository features, linting, testing, voice features, in-chat commands, and configuration options.

starter-monorepo

Starter Monorepo is a template repository for setting up a monorepo structure in your project. It provides a basic setup with configurations for managing multiple packages within a single repository. This template includes tools for package management, versioning, testing, and deployment. By using this template, you can streamline your development process, improve code sharing, and simplify dependency management across your project. Whether you are working on a small project or a large-scale application, Starter Monorepo can help you organize your codebase efficiently and enhance collaboration among team members.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

TypeGPT

TypeGPT is a Python application that enables users to interact with ChatGPT or Google Gemini from any text field in their operating system using keyboard shortcuts. It provides global accessibility, keyboard shortcuts for communication, and clipboard integration for larger text inputs. Users need to have Python 3.x installed along with specific packages and API keys from OpenAI for ChatGPT access. The tool allows users to run the program normally or in the background, manage processes, and stop the program. Users can use keyboard shortcuts like `/ask`, `/see`, `/stop`, `/chatgpt`, `/gemini`, `/check`, and `Shift + Cmd + Enter` to interact with the application in any text field. Customization options are available by modifying files like `keys.txt` and `system_prompt.txt`. Contributions are welcome, and future plans include adding support for other APIs and a user-friendly GUI.

qrev

QRev is an open-source alternative to Salesforce, offering AI agents to scale sales organizations infinitely. It aims to provide digital workers for various sales roles or a superagent named Qai. The tech stack includes TypeScript for frontend, NodeJS for backend, MongoDB for app server database, ChromaDB for vector database, SQLite for AI server SQL relational database, and Langchain for LLM tooling. The tool allows users to run client app, app server, and AI server components. It requires Node.js and MongoDB to be installed, and provides detailed setup instructions in the README file.

vector-vein

VectorVein is a no-code AI workflow software inspired by LangChain and langflow, aiming to combine the powerful capabilities of large language models and enable users to achieve intelligent and automated daily workflows through simple drag-and-drop actions. Users can create powerful workflows without the need for programming, automating all tasks with ease. The software allows users to define inputs, outputs, and processing methods to create customized workflow processes for various tasks such as translation, mind mapping, summarizing web articles, and automatic categorization of customer reviews.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that allows users to ask natural language questions of any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. The application architecture involves uploading a PDF document to an S3 bucket, extracting metadata, converting text to vectors, and using a LangChain to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.

pagible

PagibleAI CMS is an easy, flexible, and scalable API-first content management system that allows users to manage structured content, define new content elements, assign shared content to multiple pages, save drafts, publish content, and revert drafts. It features an extremely fast JSON frontend API, versatile GraphQL admin API, multi-language support, multi-domain routing, multi-tenancy capability, soft-deletes support, and is fully open source. The system can scale from a single page with SQLite to millions of pages with DB clusters. It can be seamlessly integrated into any existing Laravel application.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

talon-ai-tools

Control large language models and AI tools through voice commands using the Talon Voice dictation engine. This tool is designed to help users quickly edit text, code by voice, reduce keyboard use for those with health issues, and speed up workflow by using AI commands across the desktop. It prompts and extends tools like Github Copilot and OpenAI API for text and image generation. Users can set up the tool by downloading the repo, obtaining an OpenAI API key, and customizing the endpoint URL for preferred models. The tool can be used without an OpenAI key and can be exclusively used with Copilot for those not needing LLM integration.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

renumics-rag

Renumics RAG is a retrieval-augmented generation assistant demo that utilizes LangChain and Streamlit. It provides a tool for indexing documents and answering questions based on the indexed data. Users can explore and visualize RAG data, configure OpenAI and Hugging Face models, and interactively explore questions and document snippets. The tool supports GPU and CPU setups, offers a command-line interface for retrieving and answering questions, and includes a web application for easy access. It also allows users to customize retrieval settings, embeddings models, and database creation. Renumics RAG is designed to enhance the question-answering process by leveraging indexed documents and providing detailed answers with sources.

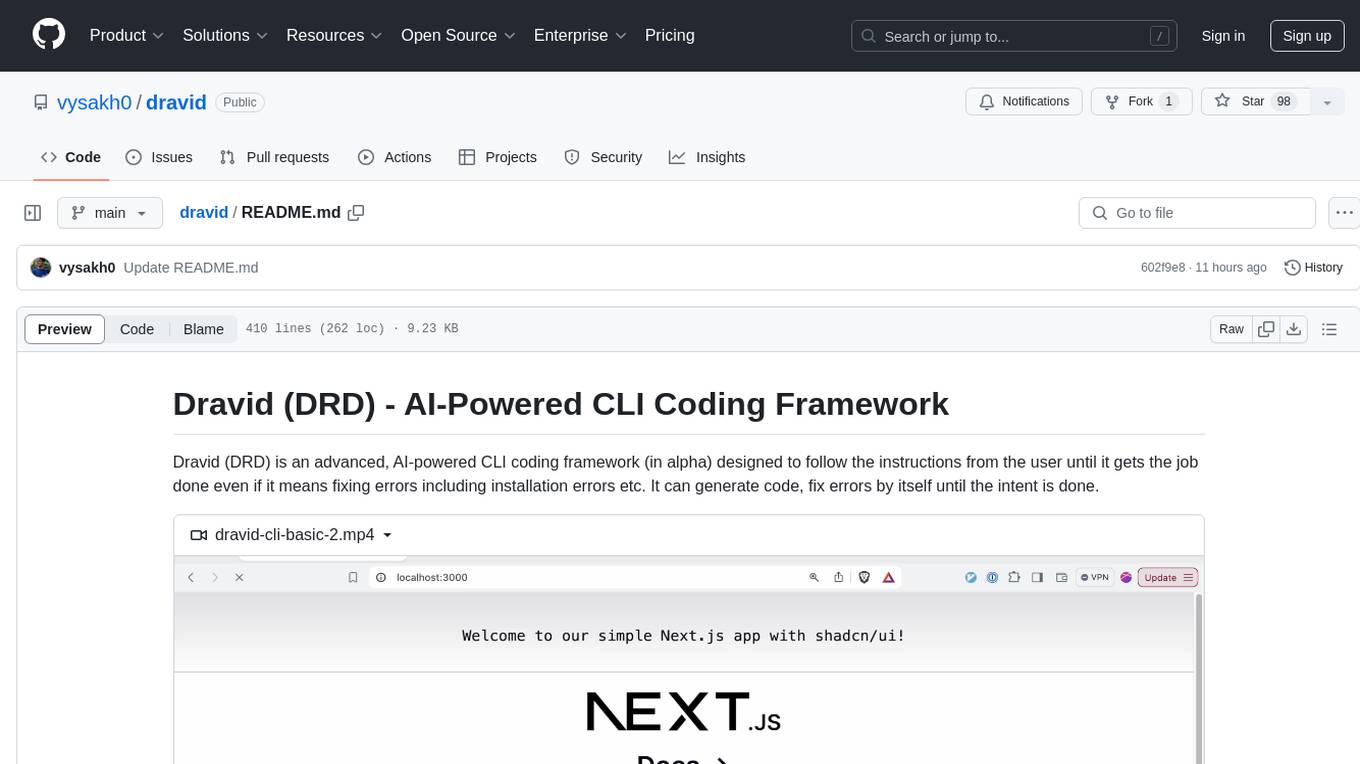

dravid

Dravid (DRD) is an advanced, AI-powered CLI coding framework designed to follow user instructions until the job is completed, including fixing errors. It can generate code, fix errors, handle image queries, manage file operations, integrate with external APIs, and provide a development server with error handling. Dravid is extensible and requires Python 3.7+ and CLAUDE_API_KEY. Users can interact with Dravid through CLI commands for various tasks like creating projects, asking questions, generating content, handling metadata, and file-specific queries. It supports use cases like Next.js project development, working with existing projects, exploring new languages, Ruby on Rails project development, and Python project development. Dravid's project structure includes directories for source code, CLI modules, API interaction, utility functions, AI prompt templates, metadata management, and tests. Contributions are welcome, and development setup involves cloning the repository, installing dependencies with Poetry, setting up environment variables, and using Dravid for project enhancements.

For similar tasks

core

CORE is an open-source unified, persistent memory layer for all AI tools, allowing developers to maintain context across different tools like Cursor, ChatGPT, and Claude. It aims to solve the issue of context switching and information loss between sessions by creating a knowledge graph that remembers conversations, decisions, and insights. With features like unified memory, temporal knowledge graph, browser extension, chat with memory, auto-sync from apps, and MCP integration hub, CORE provides a seamless experience for managing and recalling context. The tool's ingestion pipeline captures evolving context through normalization, extraction, resolution, and graph integration, resulting in a dynamic memory that grows and changes with the user. When recalling from memory, CORE utilizes search, re-ranking, filtering, and output to provide relevant and contextual answers. Security measures include data encryption, authentication, access control, and vulnerability reporting.

IntelliChat

IntelliChat is an open-source AI chatbot tool designed to accelerate the integration of multiple language models into chatbot apps. Users can select their preferred AI provider and model from the UI, manage API keys, and access data using Intellinode. The tool is built with Intellinode and Next.js, and supports various AI providers such as OpenAI ChatGPT, Google Gemini, Azure Openai, Cohere Coral, Replicate, Mistral AI, Anthropic, and vLLM. It offers a user-friendly interface for developers to easily incorporate AI capabilities into their chatbot applications.

For similar jobs

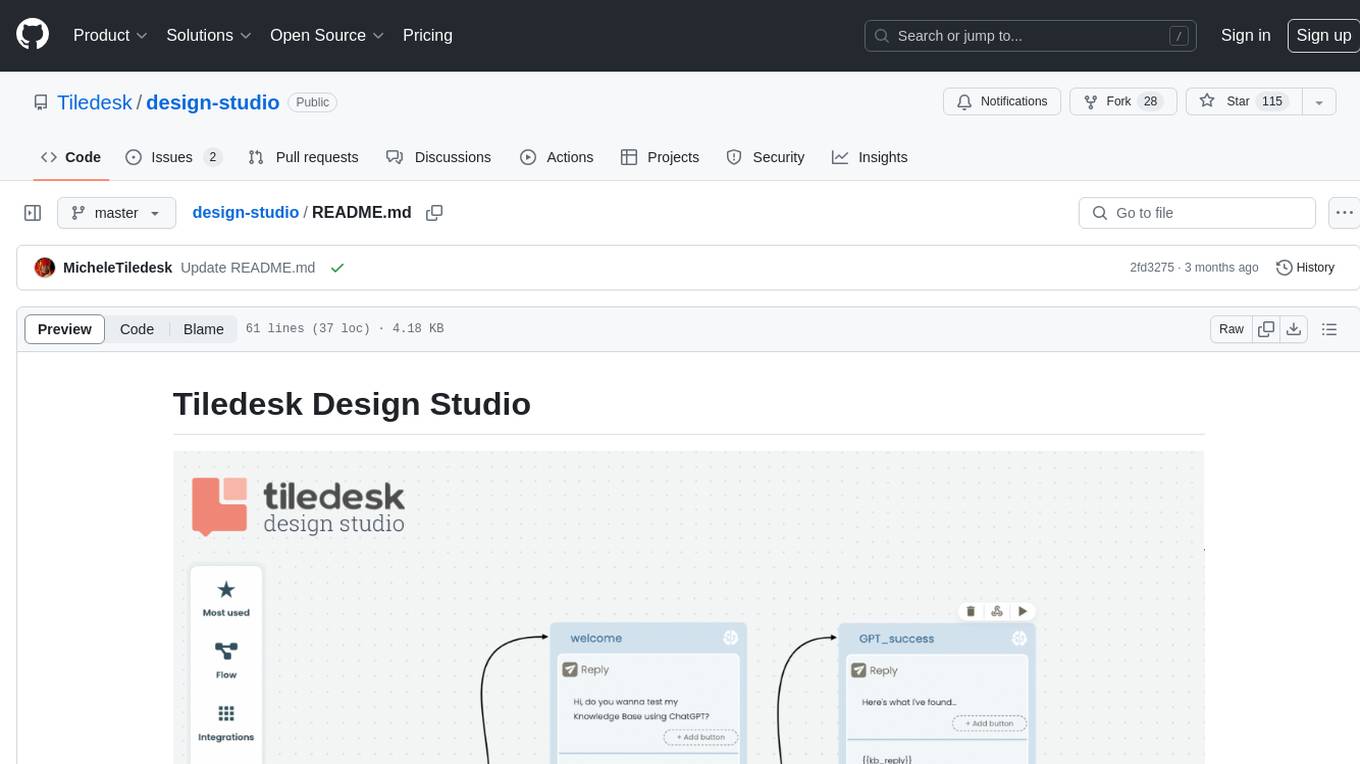

design-studio

Tiledesk Design Studio is an open-source, no-code development platform for creating chatbots and conversational apps. It offers a user-friendly, drag-and-drop interface with pre-ready actions and integrations. The platform combines the power of LLM/GPT AI with a flexible 'graph' approach for creating conversations and automations with ease. Users can automate customer conversations, prototype conversations, integrate ChatGPT, enhance user experience with multimedia, provide personalized product recommendations, set conditions, use random replies, connect to other tools like HubSpot CRM, integrate with WhatsApp, send emails, and seamlessly enhance existing setups.

telegram-llm

A Telegram LLM bot that allows users to deploy their own Telegram bot in 3 simple steps by creating a flow function, configuring access to the Telegram bot, and connecting to an LLM backend. Users need to sign into flows.network, have a bot token from Telegram, and an OpenAI API key. The bot can be customized with ChatGPT prompts and integrated with OpenAI and Telegram for various functionalities.

LogChat

LogChat is an open-source and free AI chat client that supports various chat models and technologies such as ChatGPT, 讯飞星火, DeepSeek, LLM, TTS, STT, and Live2D. The tool provides a user-friendly interface designed using Qt Creator and can be used on Windows systems without any additional environment requirements. Users can interact with different AI models, perform voice synthesis and recognition, and customize Live2D character models. LogChat also offers features like language translation, AI platform integration, and menu items like screenshot editing, clock, and application launcher.

AI-Agent-Starter-Kit

AI Agent Starter Kit is a modern full-stack AI-enabled template using Next.js for frontend and Express.js for backend, with Telegram and OpenAI integrations. It offers AI-assisted development, smart environment variable setup assistance, intelligent error resolution, context-aware code completion, and built-in debugging helpers. The kit provides a structured environment for developers to interact with AI tools seamlessly, enhancing the development process and productivity.

MCPSpy

MCPSpy is a command-line tool leveraging eBPF technology to monitor Model Context Protocol (MCP) communication at the kernel level. It provides real-time visibility into JSON-RPC 2.0 messages exchanged between MCP clients and servers, supporting Stdio and HTTP transports. MCPSpy offers security analysis, debugging, performance monitoring, compliance assurance, and learning opportunities for understanding MCP communications. The tool consists of eBPF programs, an eBPF loader, an HTTP session manager, an MCP protocol parser, and output handlers for console display and JSONL output.

chatless

Chatless is a modern AI chat desktop application built on Tauri and Next.js. It supports multiple AI providers, can connect to local Ollama models, supports document parsing and knowledge base functions. All data is stored locally to protect user privacy. The application is lightweight, simple, starts quickly, and consumes minimal resources.

Windows-MCP

Windows-MCP is a lightweight, open-source project that enables seamless integration between AI agents and the Windows operating system. Acting as an MCP server, it bridges the gap between LLMs and the Windows operating system, allowing agents to perform tasks such as file navigation, application control, UI interaction, QA testing, and more. It provides seamless Windows integration, supports any LLM without traditional computer vision techniques, offers a rich toolset for UI automation, is lightweight and open-source, customizable and extendable, offers real-time interaction with low latency, includes a DOM mode for browser automation, and supports various tools for interacting with Windows applications and system components.