aider-composer

Aider's VSCode extension, seamlessly integrated into VSCode

Stars: 362

Aider Composer is a VSCode extension that integrates Aider into your development workflow. It allows users to easily add and remove files, toggle between read-only and editable modes, review code changes, use different chat modes, and reference files in the chat. The extension supports multiple models, code generation, code snippets, and settings customization. It has limitations such as lack of support for multiple workspaces, Git repository features, linting, testing, voice features, in-chat commands, and configuration options.

README:

Aider Composer is a VSCode extension that integrates Aider into your development workflow. This extension is heavily inspired by Cursor and Cline.

It is highly recommended to read the Requirements and Extension Settings sections for initial configuration, otherwise the extension may not work correctly.

If you find this project helpful, consider supporting me to keep it alive and improving:

Your support is greatly appreciated and helps me maintain and improve this project. Thank you! 🎉

- Support VSCode Remote

- Architect Mode. Note: the `editor` part will not show in the chat area, so you will see nothing after the `architect` part.

- Multiple Models Support

- Generate Code Mode

- Add Code Snippet to Chat

- Add Inline Diff Preview

- Easily add and remove files, and toggle between read-only and editable modes with just a click

- Most chat modes are supported, including `ask`, `diff`, `diff-fenced`, `udiff`, and `whole`, and you can easily switch between them

- Review code changes before applying them; both inline diff preview and diff editor preview (default) are supported

- Chat history sessions are supported

- HTTP Proxy is supported (uses VSCode's `http.proxy` setting; authentication is not supported)

Due to certain limitations and other issues, this extension may not implement all features available in Aider. Some limitations include:

- Multiple workspaces are not supported

- Git repository features are not used

- Linting is not supported

- Testing is not supported

- Voice features are not supported

- In-chat commands are not usable

- Configuration options are not supported

This extension uses the Python packages aider-chat and flask to provide background services. You need to:

- Install Python (download from python.org or use other methods). For Mac or Python venv installations, please refer to this issue

- Install the required packages using:

pip install aider-chat flask

On Windows, it is recommended to install Python from python.org. On other systems, Python can be installed with the system package manager.

When you install Python, it is recommended to create a virtual environment and install the packages in the virtual environment. You can use the following command to create a virtual environment:

On Linux or Mac:

# create virtual environment, .venv is the name of the virtual environment, you can change it to any name you want

python -m venv .venv

# or in some systems, you may need to use python3

python3 -m venv .venv

# activate virtual environment

source .venv/bin/activate

On Windows:

# create virtual environment, .venv is the name of the virtual environment, you can change it to any name you want

python -m venv .venv

# activate virtual environment

.venv\Scripts\activate

A virtual environment is recommended because it avoids conflicts with the system Python environment.

After you activate the virtual environment, you need to install aider-chat and flask packages in the virtual environment. You can use the following command to install the packages:

pip install aider-chat flask

After you install the packages, set `aider-composer.pythonPath` in the VSCode settings to the directory containing the Python executable.

On Linux or Mac, this is the bin directory inside the virtual environment, like path/to/.venv/bin.

On Windows, this is the Scripts directory inside the virtual environment, like path/to/.venv/Scripts.
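As a quick sanity check before filling in the setting, a small script run with the virtual environment's interpreter can report whether the two packages are importable and which directory to use. This is a minimal sketch, not part of the extension: the helper name `missing_modules` is hypothetical, and it assumes the packages are imported as `aider` (for aider-chat) and `flask`.

```python
import importlib.util
import os
import sys

# Import names to look for: the aider-chat distribution is imported as "aider".
REQUIRED_MODULES = ("aider", "flask")


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("interpreter:", sys.executable)
    if missing:
        print("missing packages:", ", ".join(missing))
    else:
        print("aider-chat and flask are importable")
    # The setting expects the directory, not the executable itself.
    print("directory for aider-composer.pythonPath:", os.path.dirname(sys.executable))
```

Run it with the interpreter from the activated virtual environment so that the reported directory is the one inside `.venv`.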

Because this extension depends on a background service, you may need to diagnose why it fails to start. When the extension starts up, it executes a command to start the background service, and you can see output like the following:

2025-01-19 13:55:33.344 [info] aider-chat process args: /home/lee/aider/bin/python -m flask -A /home/lee/.vscode-server/extensions/lee2py.aider-composer-1.10.0/server/main.py run --port 13329

The log above shows the command used in VSCode SSH Remote. When it fails, you can see some error logs to diagnose the problem. If those error logs are not enough, run the same command (/home/lee/aider/bin/python -m flask -A /home/lee/.vscode-server/extensions/lee2py.aider-composer-1.10.0/server/main.py run --port 13329) in a terminal to see the full error output.
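To reproduce that command for your own setup, the sketch below rebuilds the argument list in the same shape as the logged line. It only mirrors the log above; how the extension actually assembles the command is not documented here, and the function name `build_server_command` and the `windows` flag are hypothetical.

```python
from pathlib import PurePosixPath, PureWindowsPath


def build_server_command(python_dir, main_py, port, windows=False):
    """Build the argument list in the shape of the logged command.

    python_dir is the directory from aider-composer.pythonPath,
    main_py is the server/main.py file inside the installed extension.
    """
    path_cls = PureWindowsPath if windows else PurePosixPath
    exe_name = "python.exe" if windows else "python"
    python = str(path_cls(python_dir) / exe_name)
    return [python, "-m", "flask", "-A", str(main_py), "run", "--port", str(port)]


if __name__ == "__main__":
    cmd = build_server_command(
        "/home/lee/aider/bin",
        "/home/lee/.vscode-server/extensions/lee2py.aider-composer-1.10.0/server/main.py",
        13329,
    )
    # Paste the printed line into a terminal to see the service's own errors.
    print(" ".join(cmd))
```

The port and extension path vary between installations, so copy them from your own log line rather than from this example.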

This extension contributes the following setting:

- `aider-composer.pythonPath`: The directory containing the Python executable (not the Python executable path itself) where the `aider-chat` and `flask` packages are installed. This setting is required for the extension to activate.
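For illustration, the following sketch prints a fragment that could be pasted into a VSCode `settings.json`. The helper `settings_fragment` is hypothetical; the two keys are the ones named in this README (`aider-composer.pythonPath` and the optional `aider-composer.inlineDiff.enable`), and the directory value is a placeholder.

```python
import json


def settings_fragment(python_dir, inline_diff=False):
    """Return the extension settings as a dict ready to be dumped as JSON."""
    settings = {"aider-composer.pythonPath": python_dir}
    if inline_diff:
        # Optional: switches code review from the diff editor to inline diff preview.
        settings["aider-composer.inlineDiff.enable"] = True
    return settings


if __name__ == "__main__":
    # Placeholder directory; use the bin (or Scripts) directory of your own environment.
    print(json.dumps(settings_fragment("path/to/.venv/bin"), indent=2))
```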

Aider supports five chat modes: ask, diff, diff-fenced, udiff, and whole. In this extension, you can switch between them by clicking the mode name in the chat input area.

The chat modes are divided into three groups: ask, code and architect.

- `ask` mode is for general questions and will not modify any files

- `code` mode includes all other chat modes and is used for code modifications. The optimal chat mode may vary depending on your LLM model and programming language. For more information, refer to Aider's leaderboards.

- `architect` mode splits the chat into two parts: `architect` and `editor`. The `architect` part is used for describing how to solve the coding problem and will not modify any files. The `editor` part is used for code modifications.

To use architect mode, you need to set the Editor Model in the settings page. Because of a limitation in aider-chat's implementation, the editor part is not shown in the chat area, so you will see nothing after the architect part.

For more information about architect mode, please refer to Aider's documentation.
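The grouping described above can be summarized as a small lookup. This is only an illustrative sketch of the three groups as this README describes them; the names `EDIT_FORMATS`, `group_for`, and `may_modify_files` are hypothetical and do not come from the extension or from Aider.

```python
# Chat modes that the README places in the "code" group.
EDIT_FORMATS = ("diff", "diff-fenced", "udiff", "whole")


def group_for(mode):
    """Map a mode name to one of the three groups: ask, code, architect."""
    if mode in ("ask", "architect"):
        return mode
    if mode in EDIT_FORMATS:
        return "code"
    raise ValueError(f"unknown chat mode: {mode}")


def may_modify_files(mode):
    """Only ask mode never modifies files; architect edits through its editor part."""
    return group_for(mode) != "ask"


if __name__ == "__main__":
    for mode in ("ask", "diff", "whole", "architect"):
        print(mode, group_for(mode), may_modify_files(mode))
```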

- If the LLM outputs an incorrect diff format, code modifications may fail. Try switching to a different diff format

- The leaderboard is tested with Python code, so optimal modes may differ for other languages

- The `whole` mode may be the easiest for LLMs to understand but can consume more tokens

In Aider, you can reference files in the chat. A file reference can be read-only or editable, and a read-only file can't be modified.

In this extension, file references are shown above the chat input area, and you can click a file name to toggle its reference mode. When a reference has a highlighted border, the file is editable. There are two ways to add a new file reference:

- Click the add button and add references; these references are read-only by default.

- Use `@` to reference a file in the chat input area; this file will be editable by default.
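The reference behavior described above can be modeled with a small data structure. This is a hedged sketch, not the extension's code: the class `FileReferences` and its method names are hypothetical, and it assumes that adding a file that is already referenced keeps its current mode.

```python
from dataclasses import dataclass, field


@dataclass
class FileReferences:
    # Maps file path -> True when editable, False when read-only.
    editable: dict = field(default_factory=dict)

    def add_from_button(self, path):
        """Files added with the add button start out read-only."""
        self.editable.setdefault(path, False)

    def add_from_mention(self, path):
        """Files referenced with @ in the chat input start out editable."""
        self.editable.setdefault(path, True)

    def toggle(self, path):
        """Clicking the file name flips between read-only and editable."""
        self.editable[path] = not self.editable[path]

    def remove(self, path):
        self.editable.pop(path, None)


if __name__ == "__main__":
    refs = FileReferences()
    refs.add_from_button("README.md")
    refs.add_from_mention("src/app.py")
    refs.toggle("README.md")
    print(refs.editable)
```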

When Aider modifies code, it will show you the code. You have two review options:

- Use diff editor (default)

- Use inline diff preview

When Aider modifies code, it will show you a diff editor. You can review the code changes and confirm to apply them by clicking the ✔ button in the editor toolbar.

When Aider modifies code, it will show you an inline diff preview. You can review the code changes and accept or reject each snippet by clicking the accept or reject button before the diff.

To enable this feature, you need to set aider-composer.inlineDiff.enable to true in VSCode settings and restart VSCode.
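To make the idea of accepting or rejecting each snippet concrete, here is a minimal sketch using Python's standard `difflib`. It is not how the extension implements inline diff (that is not described here); `apply_accepted` and its `accept` callback are hypothetical names used only for illustration.

```python
import difflib


def apply_accepted(old_lines, new_lines, accept):
    """Merge two versions of a file, keeping only the accepted changes.

    accept is called with the index of each changed snippet (0, 1, 2, ...)
    and returns True to take the new text or False to keep the old text.
    """
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    result = []
    snippet = 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            result.extend(old_lines[i1:i2])
            continue
        # "replace", "delete" and "insert" are each one reviewable snippet.
        result.extend(new_lines[j1:j2] if accept(snippet) else old_lines[i1:i2])
        snippet += 1
    return result


if __name__ == "__main__":
    old = ["a", "b", "c", "d"]
    new = ["a", "B", "c", "D"]
    # Accept the first change and reject the second.
    print(apply_accepted(old, new, lambda index: index == 0))
```

Rejecting every snippet returns the original lines, and accepting every snippet returns the proposed lines, which matches the review flow described above.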

You can add a code snippet to the chat by selecting code in the editor and pressing ctrl+shift+k.

You can enter generate code mode by pressing ctrl+shift+l in the editor. The current line will be highlighted, and the code generated by Aider will appear below the highlighted line.

You can add multiple models and switch between them in the settings page. When you switch models or add a new model, you need to click the save button at the top right for the change to take effect.

This extension supports VSCode Remote, but you need to set aider-composer.pythonPath to the directory containing the Python executable on the remote server. Most importantly, Python and the required packages (pip install aider-chat flask) must be installed on the remote server.

Enjoy!

Alternative AI tools for aider-composer

Similar Open Source Tools

CLI

Bito CLI provides a command line interface to the Bito AI chat functionality, allowing users to interact with the AI through commands. It supports complex automation and workflows, with features like long prompts and slash commands. Users can install Bito CLI on Mac, Linux, and Windows systems using various methods. The tool also offers configuration options for AI model type, access key management, and output language customization. Bito CLI is designed to enhance user experience in querying AI models and automating tasks through the command line interface.

SeaGOAT

SeaGOAT is a local search tool that leverages vector embeddings to enable you to search your codebase semantically. It is designed to work on Linux, macOS, and Windows and can process files in various formats, including text, Markdown, Python, C, C++, TypeScript, JavaScript, HTML, Go, Java, PHP, and Ruby. SeaGOAT uses a vector database called ChromaDB and a local vector embedding engine to provide fast and accurate search results. It also supports regular expression/keyword-based matches. SeaGOAT is open-source and licensed under an open-source license, and users are welcome to examine the source code, raise concerns, or create pull requests to fix problems.

vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

TypeGPT

TypeGPT is a Python application that enables users to interact with ChatGPT or Google Gemini from any text field in their operating system using keyboard shortcuts. It provides global accessibility, keyboard shortcuts for communication, and clipboard integration for larger text inputs. Users need to have Python 3.x installed along with specific packages and API keys from OpenAI for ChatGPT access. The tool allows users to run the program normally or in the background, manage processes, and stop the program. Users can use keyboard shortcuts like `/ask`, `/see`, `/stop`, `/chatgpt`, `/gemini`, `/check`, and `Shift + Cmd + Enter` to interact with the application in any text field. Customization options are available by modifying files like `keys.txt` and `system_prompt.txt`. Contributions are welcome, and future plans include adding support for other APIs and a user-friendly GUI.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. Its features include:

- Personalized Experience: Optimize the command generation with RAG.

- Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more.

- Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`.

- Cross Platform: Able to run on Windows, macOS, and Linux.

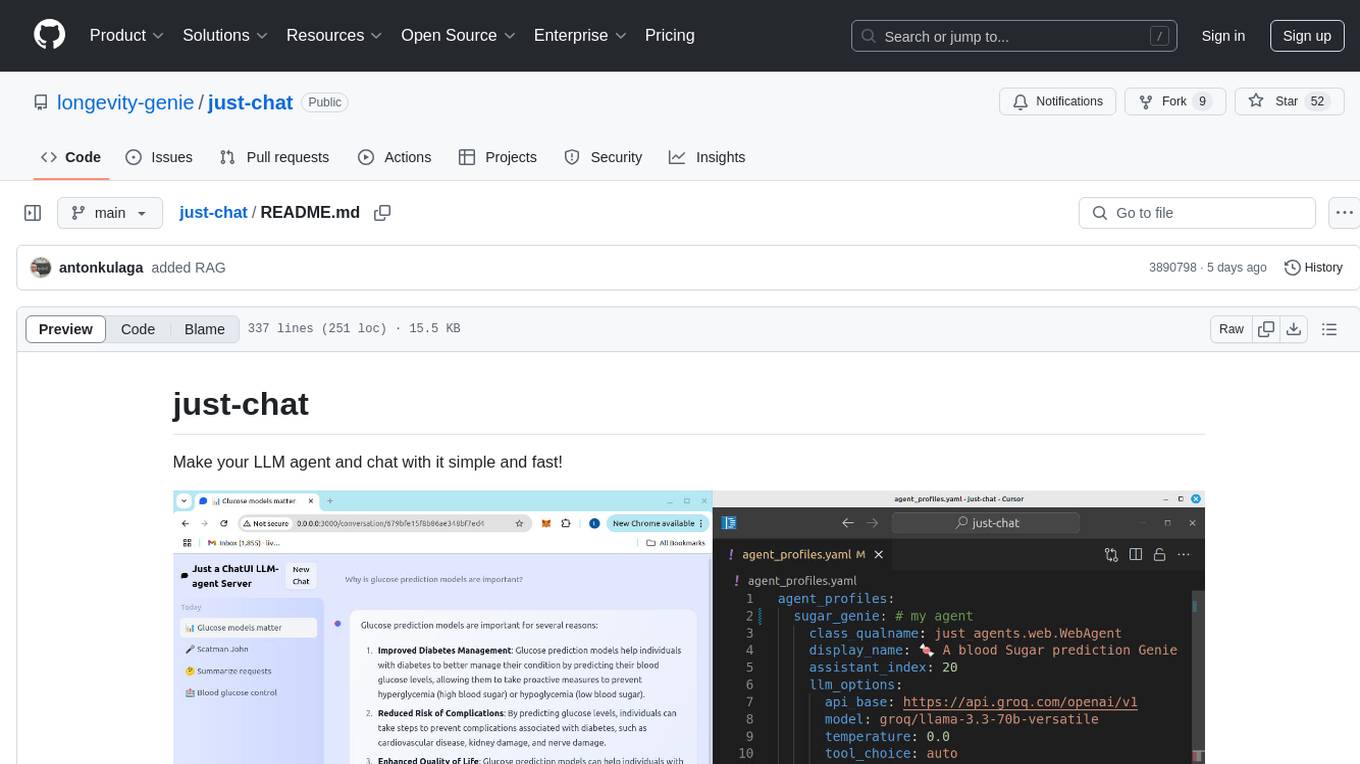

just-chat

Just-Chat is a containerized application that allows users to easily set up and chat with their AI agent. Users can customize their AI assistant using a YAML file, add new capabilities with Python tools, and interact with the agent through a chat web interface. The tool supports various modern models like DeepSeek Reasoner, ChatGPT, LLAMA3.3, etc. Users can also use semantic search capabilities with MeiliSearch to find and reference relevant information based on meaning. Just-Chat requires Docker or Podman for operation and provides detailed installation instructions for both Linux and Windows users.

openui

OpenUI is a tool designed to simplify the process of building UI components by allowing users to describe UI using their imagination and see it rendered live. It supports converting HTML to React, Svelte, Web Components, etc. The tool is open source and aims to make UI development fun, fast, and flexible. It integrates with various AI services like OpenAI, Groq, Gemini, Anthropic, Cohere, and Mistral, providing users with the flexibility to use different models. OpenUI also supports LiteLLM for connecting to various LLM services and allows users to create custom proxy configs. The tool can be run locally using Docker or Python, and it offers a development environment for quick setup and testing.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

starter-monorepo

Starter Monorepo is a template repository for setting up a monorepo structure in your project. It provides a basic setup with configurations for managing multiple packages within a single repository. This template includes tools for package management, versioning, testing, and deployment. By using this template, you can streamline your development process, improve code sharing, and simplify dependency management across your project. Whether you are working on a small project or a large-scale application, Starter Monorepo can help you organize your codebase efficiently and enhance collaboration among team members.

NeoGPT

NeoGPT is an AI assistant that transforms your local workspace into a powerhouse of productivity from your CLI. With features like code interpretation, multi-RAG support, vision models, and LLM integration, NeoGPT redefines how you work and create. It supports executing code seamlessly, multiple RAG techniques, vision models, and interacting with various language models. Users can run the CLI to start using NeoGPT and access features like Code Interpreter, building vector database, running Streamlit UI, and changing LLM models. The tool also offers magic commands for chat sessions, such as resetting chat history, saving conversations, exporting settings, and more. Join the NeoGPT community to experience a new era of efficiency and contribute to its evolution.

reai-ghidra

The RevEng.AI Ghidra Plugin by RevEng.ai allows users to interact with their API within Ghidra for Binary Code Similarity analysis to aid in Reverse Engineering stripped binaries. Users can upload binaries, rename functions above a confidence threshold, and view similar functions for a selected function.

bolt-python-ai-chatbot

The 'bolt-python-ai-chatbot' is a Slack chatbot app template that allows users to integrate AI-powered conversations into their Slack workspace. Users can interact with the bot in conversations and threads, send direct messages for private interactions, use commands to communicate with the bot, customize bot responses, and store user preferences. The app supports integration with Workflow Builder, custom language models, and different AI providers like OpenAI, Anthropic, and Google Cloud Vertex AI. Users can create user objects, manage user states, and select from various AI models for communication.

webwhiz

WebWhiz is an open-source tool that allows users to train ChatGPT on website data to build AI chatbots for customer queries. It offers easy integration, data-specific responses, regular data updates, no-code builder, chatbot customization, fine-tuning, and offline messaging. Users can create and train chatbots in a few simple steps by entering their website URL, automatically fetching and preparing training data, training ChatGPT, and embedding the chatbot on their website. WebWhiz can crawl websites monthly, collect text data and metadata, and process text data using tokens. Users can train custom data, but bringing custom OpenAI keys is not yet supported. The tool has no limitations on context size but may limit the number of pages based on the chosen plan. WebWhiz SDK is available on NPM, CDNs, and GitHub, and users can self-host it using Docker or manual setup involving MongoDB, Redis, Node, Python, and environment variables setup. For any issues, users can contact the maintainers by email.

For similar tasks

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. It supports inference on a variety of devices, quantization, and easy-to-use applications with an OpenAI API compatible HTTP server and Python bindings.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

jetson-generative-ai-playground

This repo hosts tutorial documentation for running generative AI models on NVIDIA Jetson devices. The documentation is auto-generated and hosted on GitHub Pages using their CI/CD feature to automatically generate/update the HTML documentation site upon new commits.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.