kitops

An open source DevOps tool for packaging and versioning AI/ML models, datasets, code, and configuration into an OCI artifact.

Stars: 736

KitOps is a packaging and versioning system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using. KitOps simplifies the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. KitOps' ModelKits are a standards-based package for models, their dependencies, configurations, and codebases. ModelKits are portable, reproducible, and work with the tools you already use.

README:

KitOps is a packaging, versioning, and sharing system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using, and can be stored in your enterprise container registry. It's AI/ML platform engineering teams' preferred solution for securely packaging and versioning assets.

KitOps creates a ModelKit for your AI/ML project which includes everything you need to reproduce it locally or deploy it into production. You can even selectively unpack a ModelKit so different team members can save time and storage space by only grabbing what they need for a task. Because ModelKits are immutable, signable, and live in your existing container registry they're easy for organizations to track, control, and audit.

ModelKits simplify the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. Teams and enterprises use KitOps as a secure storage throughout the AI/ML project lifecycle.

Use KitOps to speed up and de-risk all types of AI/ML projects:

- Predictive models

- Large language models

- Computer vision models

- Multi-modal models

- Audio models

- etc...

For our friends in the EU - ModelKits are the perfect way to create a library of model versions for EU AI Act compliance because they're tamper-proof, signable, and auditable.

- 🚢 Create a runnable container from a ModelKit with one command! Read KitOps deploy docs for details.

- 🥂 Get the most out of KitOps' ModelKits by using them with the Jozu Hub repository. Or, continue using ModelKits with your existing OCI registry (even on-premises and air-gapped).

- 🛠️ Use KitOps with Dagger pipelines using our modules from the Daggerverse.

- ⛑️ KitOps works great with Red Hat InstructLab and Quay.io products.

- 🎁 Unified packaging: A ModelKit package includes models, datasets, configurations, and code. Add as much or as little as your project needs.

- 🏭 Versioning: Each ModelKit is tagged so everyone knows which dataset and model work together.

- 🔒 Tamper-proofing: Each ModelKit package includes an SHA digest for itself, and every artifact it holds.

- 🤩 Selective-unpacking: Unpack only what you need from a ModelKit with the

kit unpack --filtercommand - just the model, just the dataset and code, or any other combination. - 🤖 Automation: Pack or unpack a ModelKit locally or as part of your CI/CD workflow for testing, integration, or deployment (e.g. GitHub Actions or Dagger.

- 🐳 Deploy containers: Generate a basic or custom docker container from any ModelKit.

- 🚢 Kubernetes-ready: Generate a Kubernetes / KServe deployment config from any ModelKit.

- 🪛 LLM fine-tuning: Use KitOps to fine-tune a large language model using LoRA.

- 🎯 RAG pipelines: Create a RAG pipeline for tailoring an LLM with KitOps.

- 📝 Artifact signing: ModelKits and their assets can be signed so you can be confident of their provenance.

- 🌈 Standards-based: Store ModelKits in any OCI 1.1-compliant container or artifact registry.

- 🥧 Simple syntax: Kitfiles are easy to write and read, using a familiar YAML syntax.

- 🩰 Flexible: Reference base models using

model parts, or store key-value pairs (or any YAML-compatible JSON data) in your Kitfile - use it to keep features, hyperparameters, links to MLOps tool experiments, or validation output. - 🏃♂️➡️ Run locally: Kit's Dev Mode lets you run an LLM locally, configure it, and prompt/chat with it instantly.

- 🤗 Universal: ModelKits can be used with any AI, ML, or LLM project - even multi-modal models.

There's a video of KitOps in action on the KitOps site.

- Install the CLI for your platform.

- Follow the Getting Started docs to learn to pack, unpack, and share a ModelKit.

- Test drive one of our ModelKit Quick Starts that includes everything you need to run your model including a codebase, dataset, documentation, and of course the model.

For those who prefer to build from the source, follow these steps to get the latest version from our repository.

ModelKit: At the heart of KitOps is the ModelKit, an OCI-compliant packaging format for sharing all AI project artifacts: datasets, code, configurations, and models. By standardizing the way these components are packaged, versioned, and shared, ModelKits facilitate a more streamlined and collaborative development process that is compatible with any MLOps or DevOps tool.

Kitfile: A ModelKit is defined by a Kitfile - your AI/ML project's blueprint. It uses YAML to describe where to find each of the artifacts that will be packaged into the ModelKit. The Kitfile outlines what each part of the project is.

Kit CLI: The Kit CLI not only enables users to create, manage, run, and deploy ModelKits -- it lets you pull only the pieces you need. Just need the serialized model for deployment? Use unpack --model, or maybe you just want the training datasets? unpack --datasets.

For support, release updates, and general KitOps discussion, please join the KitOps Discord. Follow KitOps on X for daily updates.

If you need help there are several ways to reach our community and Maintainers outlined in our support doc

Your insights help KitOps evolve as an open standard for AI/ML. We deeply value the issues and feature requests we get from users in our community 💖. To contribute your thoughts,navigate to the Issues tab and hitting the New Issue green button. Our templates guide you in providing essential details to address your request effectively.

We ❤️ our KitOps community and contributors. To learn more about the many ways you can contribute (you don't need to be a coder) and how to get started see our Contributor's Guide. Please read our Governance and our Code of Conduct before contributing.

At KitOps, inclusivity, empathy, and responsibility are at our core. Please read our Code of Conduct to understand the values guiding our community.

We share our roadmap openly so anyone in the community can provide feedback and ideas. Let us know what you'd like to see by pinging us on Discord or creating an issue.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for kitops

Similar Open Source Tools

kitops

KitOps is a packaging and versioning system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using. KitOps simplifies the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. KitOps' ModelKits are a standards-based package for models, their dependencies, configurations, and codebases. ModelKits are portable, reproducible, and work with the tools you already use.

kitops

KitOps is a CNCF open standards project for packaging, versioning, and securely sharing AI/ML projects. It provides a unified solution for packaging, versioning, and managing assets in security-conscious enterprises, governments, and cloud operators. KitOps elevates AI artifacts to first-class, governed assets through ModelKits, which are tamper-proof, signable, and compatible with major container registries. The tool simplifies collaboration between data scientists, developers, and SREs, ensuring reliable and repeatable workflows for both development and operations. KitOps supports packaging for various types of models, including large language models, computer vision models, multi-modal models, predictive models, and audio models. It also facilitates compliance with the EU AI Act by offering tamper-proof, signable, and auditable ModelKits.

mastra

Mastra is an opinionated Typescript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers, offers tools for building language models, workflows, and accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

AutoGPT

AutoGPT is a revolutionary tool that empowers everyone to harness the power of AI. With AutoGPT, you can effortlessly build, test, and delegate tasks to AI agents, unlocking a world of possibilities. Our mission is to provide the tools you need to focus on what truly matters: innovation and creativity.

supervisely

Supervisely is a computer vision platform that provides a range of tools and services for developing and deploying computer vision solutions. It includes a data labeling platform, a model training platform, and a marketplace for computer vision apps. Supervisely is used by a variety of organizations, including Fortune 500 companies, research institutions, and government agencies.

langwatch

LangWatch is a monitoring and analytics platform designed to track, visualize, and analyze interactions with Large Language Models (LLMs). It offers real-time telemetry to optimize LLM cost and latency, a user-friendly interface for deep insights into LLM behavior, user analytics for engagement metrics, detailed debugging capabilities, and guardrails to monitor LLM outputs for issues like PII leaks and toxic language. The platform supports OpenAI and LangChain integrations, simplifying the process of tracing LLM calls and generating API keys for usage. LangWatch also provides documentation for easy integration and self-hosting options for interested users.

humanlayer

HumanLayer is a Python toolkit designed to enable AI agents to interact with humans in tool-based and asynchronous workflows. By incorporating humans-in-the-loop, agentic tools can access more powerful and meaningful tasks. The toolkit provides features like requiring human approval for function calls, human as a tool for contacting humans, omni-channel contact capabilities, granular routing, and support for various LLMs and orchestration frameworks. HumanLayer aims to ensure human oversight of high-stakes function calls, making AI agents more reliable and safe in executing impactful tasks.

deer-flow

DeerFlow is a community-driven Deep Research framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It supports FaaS deployment and one-click deployment based on Volcengine. The framework includes core capabilities like LLM integration, search and retrieval, RAG integration, MCP seamless integration, human collaboration, report post-editing, and content creation. The architecture is based on a modular multi-agent system with components like Coordinator, Planner, Research Team, and Text-to-Speech integration. DeerFlow also supports interactive mode, human-in-the-loop mechanism, and command-line arguments for customization.

hopsworks

Hopsworks is a data platform for ML with a Python-centric Feature Store and MLOps capabilities. It provides collaboration for ML teams, offering a secure, governed platform for developing, managing, and sharing ML assets. Hopsworks supports project-based multi-tenancy, team collaboration, development tools for Data Science, and is available on any platform including managed cloud services and on-premise installations. The platform enables end-to-end responsibility from raw data to managed features and models, supports versioning, lineage, and provenance, and facilitates the complete MLOps life cycle.

crewAI

crewAI is a cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It provides a flexible and structured approach to AI collaboration, enabling users to define agents with specific roles, goals, and tools, and assign them tasks within a customizable process. crewAI supports integration with various LLMs, including OpenAI, and offers features such as autonomous task delegation, flexible task management, and output parsing. It is open-source and welcomes contributions, with a focus on improving the library based on usage data collected through anonymous telemetry.

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLM models and offers flexibility to run different models for different agents based on specific needs. The framework is designed for seamless extensibility and database-flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

promptbook

Promptbook is a library designed to build responsible, controlled, and transparent applications on top of large language models (LLMs). It helps users overcome limitations of LLMs like hallucinations, off-topic responses, and poor quality output by offering features such as fine-tuning models, prompt-engineering, and orchestrating multiple prompts in a pipeline. The library separates concerns, establishes a common format for prompt business logic, and handles low-level details like model selection and context size. It also provides tools for pipeline execution, caching, fine-tuning, anomaly detection, and versioning. Promptbook supports advanced techniques like Retrieval-Augmented Generation (RAG) and knowledge utilization to enhance output quality.

ml-engineering

This repository provides a comprehensive collection of methodologies, tools, and step-by-step instructions for successful training of large language models (LLMs) and multi-modal models. It is a technical resource suitable for LLM/VLM training engineers and operators, containing numerous scripts and copy-n-paste commands to facilitate quick problem-solving. The repository is an ongoing compilation of the author's experiences training BLOOM-176B and IDEFICS-80B models, and currently focuses on the development and training of Retrieval Augmented Generation (RAG) models at Contextual.AI. The content is organized into six parts: Insights, Hardware, Orchestration, Training, Development, and Miscellaneous. It includes key comparison tables for high-end accelerators and networks, as well as shortcuts to frequently needed tools and guides. The repository is open to contributions and discussions, and is licensed under Attribution-ShareAlike 4.0 International.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

nexent

Nexent is a powerful tool for analyzing and visualizing network traffic data. It provides comprehensive insights into network behavior, helping users to identify patterns, anomalies, and potential security threats. With its user-friendly interface and advanced features, Nexent is suitable for network administrators, cybersecurity professionals, and anyone looking to gain a deeper understanding of their network infrastructure.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

For similar jobs

db2rest

DB2Rest is a modern low-code REST DATA API platform that simplifies the development of intelligent applications. It seamlessly integrates existing and new databases with language models (LMs/LLMs) and vector stores, enabling the rapid delivery of context-aware, reasoning applications without vendor lock-in.

kitops

KitOps is a packaging and versioning system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using. KitOps simplifies the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. KitOps' ModelKits are a standards-based package for models, their dependencies, configurations, and codebases. ModelKits are portable, reproducible, and work with the tools you already use.

kweaver

KWeaver is an open-source cognitive intelligence development framework that provides data scientists, application developers, and domain experts with the ability for rapid development, comprehensive openness, and high-performance knowledge network generation and cognitive intelligence large model framework. It offers features such as automated and visual knowledge graph construction, visualization and analysis of knowledge graph data, knowledge graph integration, knowledge graph resource management, large model prompt engineering and debugging, and visual configuration for large model access.

honey

Bee is an ORM framework that provides easy and high-efficiency database operations, allowing developers to focus on business logic development. It supports various databases and features like automatic filtering, partial field queries, pagination, and JSON format results. Bee also offers advanced functionalities like sharding, transactions, complex queries, and MongoDB ORM. The tool is designed for rapid application development in Java, offering faster development for Java Web and Spring Cloud microservices. The Enterprise Edition provides additional features like financial computing support, automatic value insertion, desensitization, dictionary value conversion, multi-tenancy, and more.

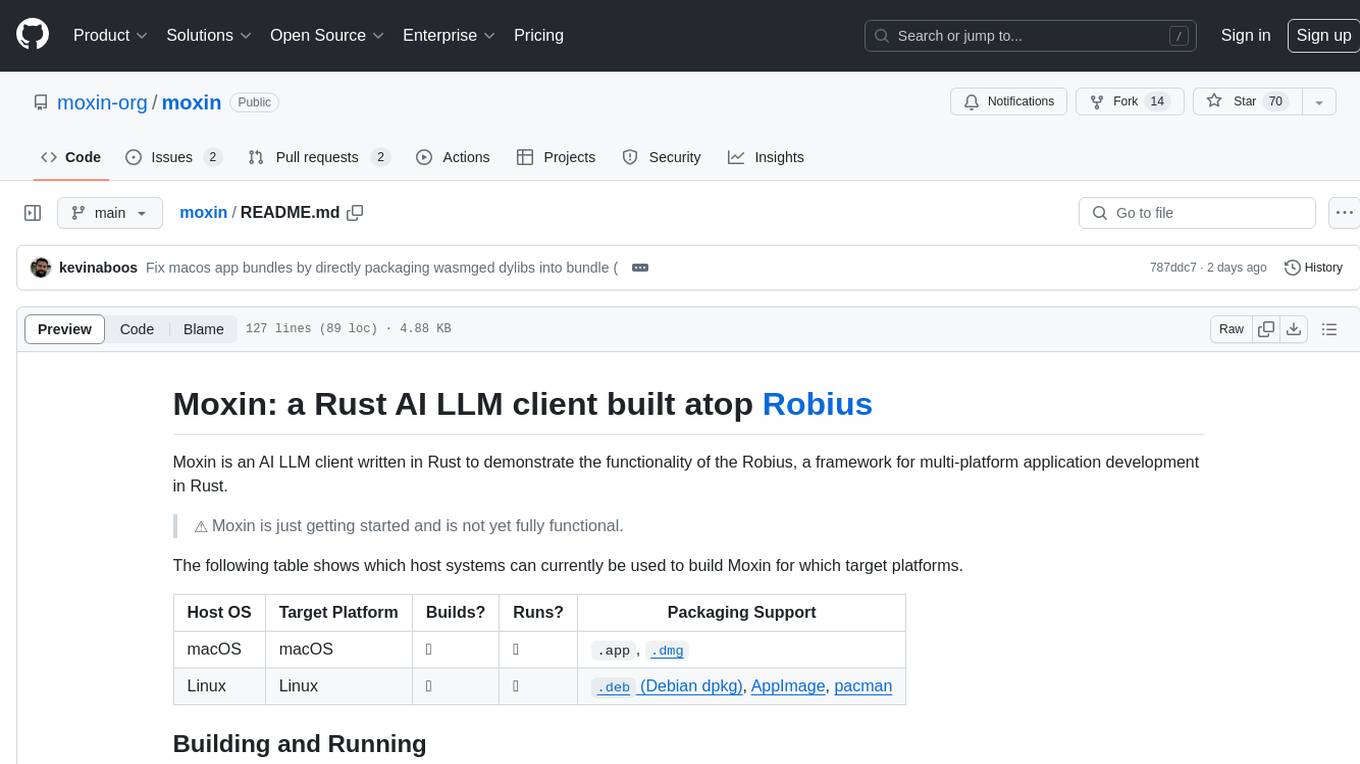

moxin

Moxin is an AI LLM client written in Rust to demonstrate the functionality of the Robius framework for multi-platform application development. It is currently in early stages of development and not fully functional. The tool supports building and running on macOS and Linux systems, with packaging options available for distribution. Users can install the required WasmEdge WASM runtime and dependencies to build and run Moxin. Packaging for distribution includes generating `.deb` Debian packages, AppImage, and pacman installation packages for Linux, as well as `.app` bundles and `.dmg` disk images for macOS. The macOS app is not signed, leading to a warning on installation, which can be resolved by removing the quarantine attribute from the installed app.

choco-builder

ChocoBuilder (aka Chocolate Factory) is an open-source LLM application development framework designed to help you easily create powerful software development SDLC + LLM generation assistants. It provides modules for integration into JVM projects, usage with RAGScript, and local deployment examples. ChocoBuilder follows a Domain Driven Problem-Solving design philosophy with key concepts like ProblemClarifier, ProblemAnalyzer, SolutionDesigner, SolutionReviewer, and SolutionExecutor. It offers use cases for desktop/IDE, server, and Android applications, with examples for frontend design, semantic code search, testcase generation, and code interpretation.

aidldemo

This repository demonstrates how to achieve cross-process bidirectional communication and large file transfer using AIDL and anonymous shared memory. AIDL is a way to implement Inter-Process Communication in Android, based on Binder. To overcome the data size limit of Binder, anonymous shared memory is used for large file transfer. Shared memory allows processes to share memory by mapping a common memory area into their respective process spaces. While efficient for transferring large data between processes, shared memory lacks synchronization mechanisms, requiring additional mechanisms like semaphores. Android's anonymous shared memory (Ashmem) is based on Linux shared memory and facilitates shared memory transfer using Binder and FileDescriptor. The repository provides practical examples of bidirectional communication and large file transfer between client and server using AIDL interfaces and MemoryFile in Android.

cube

Cube is a semantic layer for building data applications, helping data engineers and application developers access data from modern data stores, organize it into consistent definitions, and deliver it to every application. It works with SQL-enabled data sources, providing sub-second latency and high concurrency for API requests. Cube addresses SQL code organization, performance, and access control issues in data applications, enabling efficient data modeling, access control, and performance optimizations for various tools like embedded analytics, dashboarding, reporting, and data notebooks.

?style=for-the-badge)

?style=for-the-badge)