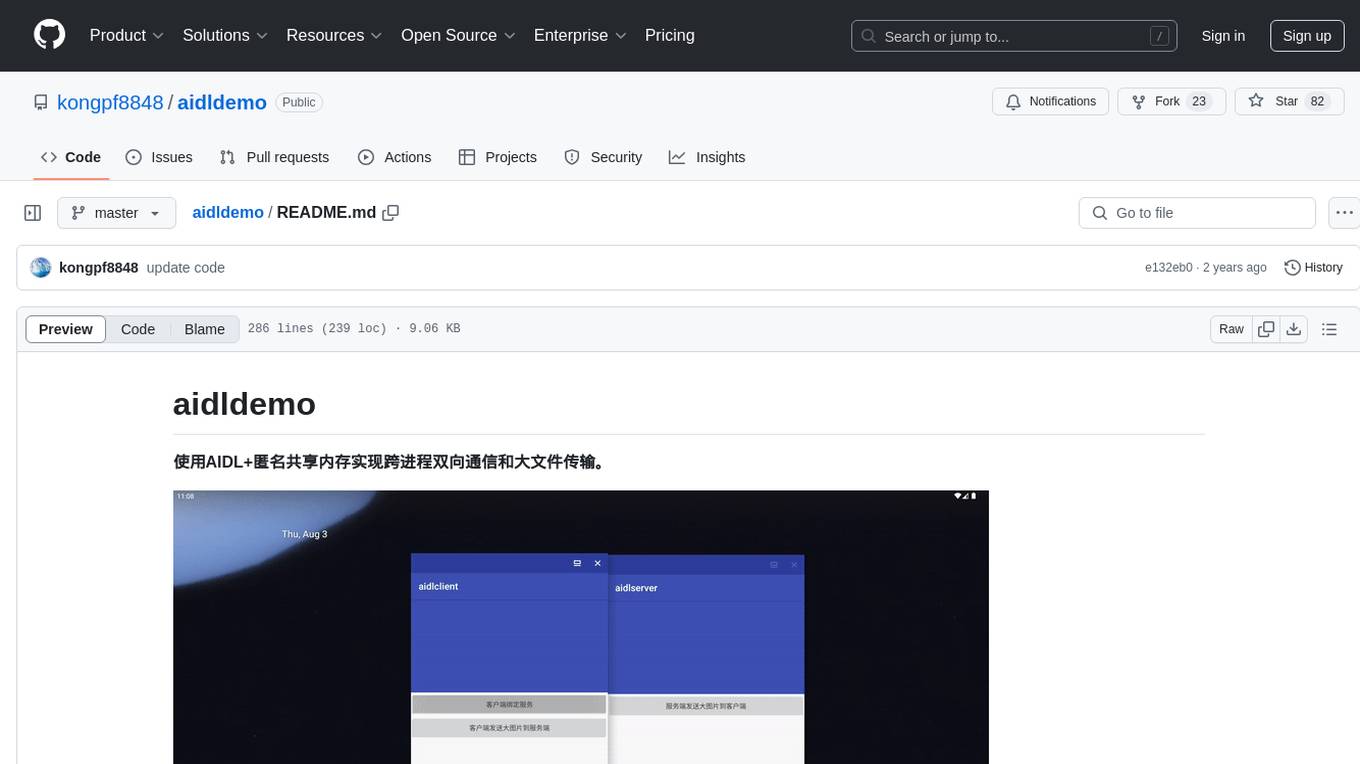

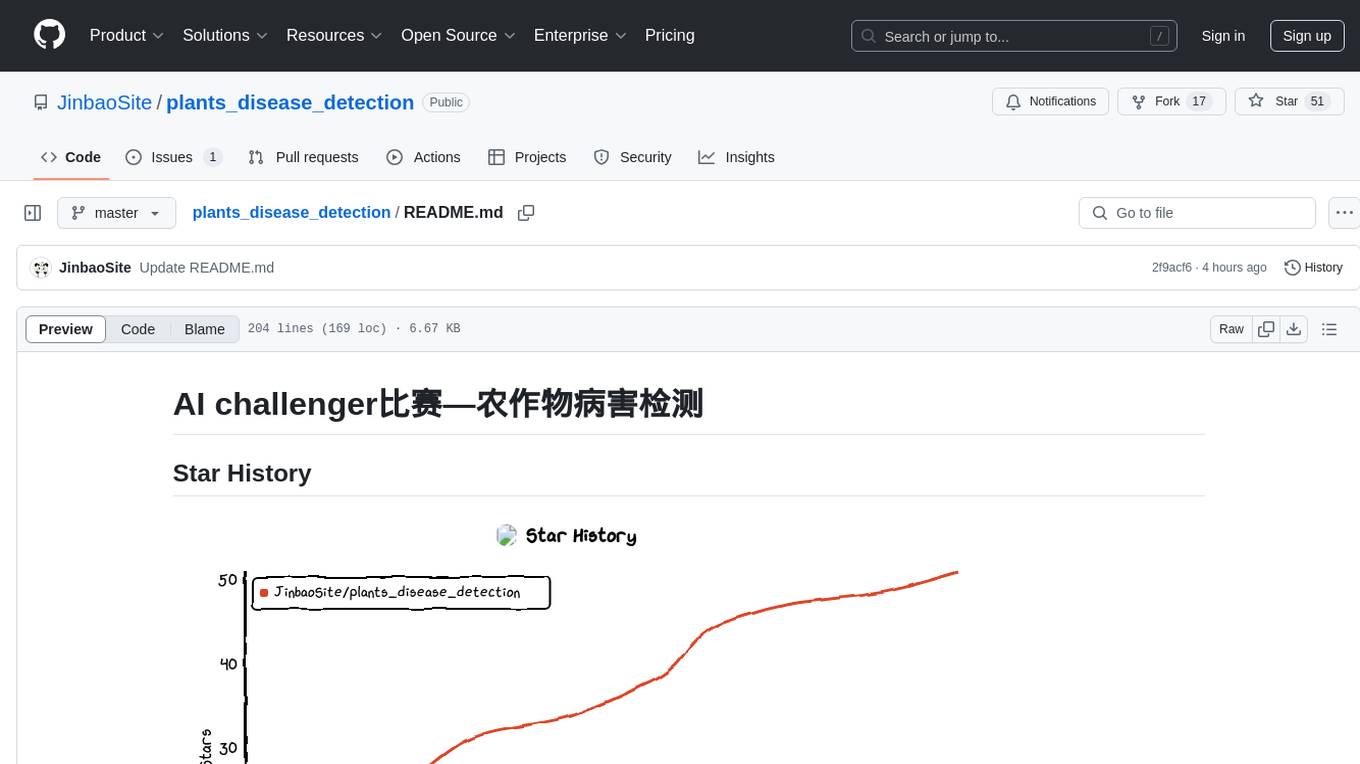

aidldemo

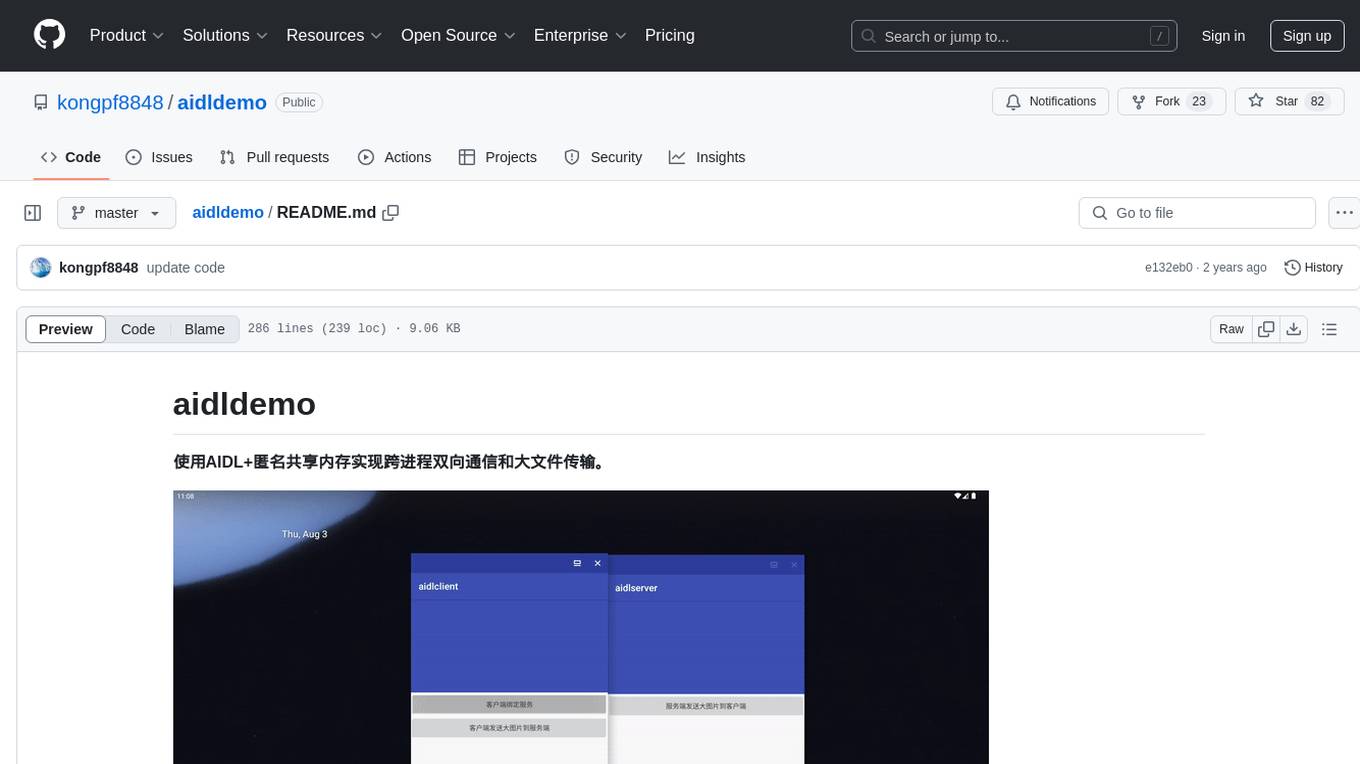

🔥 Cross-process bidirectional communication and large file transfer using AIDL + anonymous shared memory.

Stars: 82

This repository demonstrates how to achieve cross-process bidirectional communication and large file transfer using AIDL and anonymous shared memory. AIDL is a way to implement Inter-Process Communication in Android, based on Binder. To overcome the data size limit of Binder, anonymous shared memory is used for large file transfer. Shared memory allows processes to share memory by mapping a common memory area into their respective process spaces. While efficient for transferring large data between processes, shared memory lacks synchronization mechanisms, requiring additional mechanisms like semaphores. Android's anonymous shared memory (Ashmem) is based on Linux shared memory and facilitates shared memory transfer using Binder and FileDescriptor. The repository provides practical examples of bidirectional communication and large file transfer between client and server using AIDL interfaces and MemoryFile in Android.

README:


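AIDL is one way to implement inter-process communication (IPC) on Android. AIDL transfers data through Binder, and Binder limits how much data a transaction can carry: the Binder transaction buffer is roughly 1 MB and is shared by all transactions in progress for the process, so transferring a file larger than about 1 MB throws android.os.TransactionTooLargeException. One solution is to transfer large files through anonymous shared memory.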
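When a payload may or may not fit into a single transaction, one option is to pick the transport by size. This is only a sketch: BinderLimits, Transport and chooseTransport are hypothetical names, and the threshold is an assumption deliberately set well below the ~1 MB buffer, because that buffer is shared by every transaction in flight.

```kotlin
// Hypothetical threshold, kept well below the ~1 MB Binder transaction buffer
// because that buffer is shared by all in-flight transactions of the process.
object BinderLimits {
    const val SAFE_PAYLOAD_BYTES = 256L * 1024
}

enum class Transport { BINDER_PARCEL, SHARED_MEMORY }

// Small payloads can travel inside the Parcel itself; large ones go into shared
// memory and only the file descriptor crosses Binder.
fun chooseTransport(payloadSize: Long): Transport =
    if (payloadSize <= BinderLimits.SAFE_PAYLOAD_BYTES) Transport.BINDER_PARCEL
    else Transport.SHARED_MEMORY
```

With this threshold, a 4 KB message stays in the Parcel while a multi-megabyte image takes the shared-memory path described below.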

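Shared memory is an IPC mechanism in which processes map a common region of memory into their own address spaces and thereby share it.

When processes need to exchange large amounts of data this is very efficient, but shared memory provides no synchronization: nothing automatically prevents a second process from reading the region before the first process has finished writing to it. Access to shared memory therefore usually has to be synchronized with some other mechanism, such as a semaphore.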
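A minimal sketch of that semaphore handoff, assuming two threads stand in for the two processes and a direct ByteBuffer stands in for the shared region (handOff is a hypothetical name). Real cross-process code would need a semaphore both processes can see, which java.util.concurrent.Semaphore is not.

```kotlin
import java.nio.ByteBuffer
import java.util.concurrent.Semaphore
import kotlin.concurrent.thread

// Writer fills the shared buffer, then releases the semaphore; the reader blocks in
// acquire() until the write is complete, so it never sees a half-written region.
fun handOff(payload: ByteArray): ByteArray {
    val shared = ByteBuffer.allocateDirect(payload.size) // stand-in for the shared region
    val written = Semaphore(0)                           // zero permits: reader must wait

    var received = ByteArray(0)
    val reader = thread {
        written.acquire()                 // wait for the writer's signal
        val copy = ByteArray(payload.size)
        shared.duplicate().get(copy)      // read from position 0 of the region
        received = copy
    }
    val writer = thread {
        shared.duplicate().put(payload)   // write the whole payload first
        written.release()                 // then signal that the data is ready
    }
    writer.join()
    reader.join()
    return received
}
```

The release/acquire pair also gives the happens-before ordering that makes the writer's bytes visible to the reader.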

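Android's anonymous shared memory (Ashmem) is built on Linux shared memory and uses Binder plus a file descriptor (FileDescriptor) to pass the shared region between processes. It lets multiple processes operate on the same memory region, with no size limit other than available physical memory. Compared with Linux shared memory, Ashmem manages memory at a finer granularity and adds a mutex. At the Java layer it is used through MemoryFile, which wraps the native code. The usual way to share memory on Android is: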

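- Process A creates the shared memory with MemoryFile and obtains its fd (FileDescriptor)
- Process A writes data into the shared memory through the fd
- Process A wraps the fd in a ParcelFileDescriptor, which implements the Parcelable interface, and sends the ParcelFileDescriptor object to process B through Binder
- Process B gets the fd from the ParcelFileDescriptor object and reads the data from it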
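The four steps can be imitated on a plain JVM to see the data flow. This is a minimal sketch under loud assumptions: a memory-mapped temporary file stands in for MemoryFile, both sides run in one process, and the Binder hop in step 3 is skipped; shareViaMappedFile is a hypothetical name.

```kotlin
import java.io.File
import java.io.FileInputStream
import java.io.RandomAccessFile
import java.nio.channels.FileChannel

// JVM stand-in for the Ashmem flow: no real MemoryFile and no Binder involved.
fun shareViaMappedFile(payload: ByteArray): ByteArray {
    val file = File.createTempFile("ashmem-sim", ".bin")
    try {
        RandomAccessFile(file, "rw").use { raf ->
            // Step 1: create the shared region (MemoryFile in the real code)
            val region = raf.channel.map(FileChannel.MapMode.READ_WRITE, 0, payload.size.toLong())
            // Step 2: write the data through the mapping
            region.put(payload)
            region.force()
            // Step 3: this descriptor is what would be wrapped in a ParcelFileDescriptor
            val fd = raf.fd
            // Step 4: the receiver builds an InputStream on the fd and reads to EOF
            return FileInputStream(fd).readBytes()
        }
    } finally {
        file.delete()
    }
}
```

Step 4 here matches what the service does below: wrap the received descriptor in a FileInputStream and call readBytes().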

We first implement large file transfer from the client to the server, and then from the server to the client.

- Define the AIDL interface

```aidl
// IMyAidlInterface.aidl
interface IMyAidlInterface {
    void client2server(in ParcelFileDescriptor pfd);
}
```

- Implement the IMyAidlInterface interface

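```kotlin
// AidlService.kt
import android.app.Service
import android.content.Intent
import android.os.IBinder
import android.os.ParcelFileDescriptor
import android.os.RemoteException

class AidlService : Service() {

    private val mStub: IMyAidlInterface.Stub = object : IMyAidlInterface.Stub() {

        // The name must match the method declared in IMyAidlInterface.aidl
        @Throws(RemoteException::class)
        override fun client2server(pfd: ParcelFileDescriptor) {
        }
    }

    override fun onBind(intent: Intent): IBinder {
        return mStub
    }
}
```

- Receive the data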

```kotlin
// AidlService.kt
@Throws(RemoteException::class)
override fun client2server(pfd: ParcelFileDescriptor) {
    // Get the FileDescriptor from the ParcelFileDescriptor
    val fileDescriptor = pfd.fileDescriptor
    // Build an InputStream from the FileDescriptor
    val fis = FileInputStream(fileDescriptor)
    // Read the byte array from the InputStream
    val data = fis.readBytes()
    // Release this process's copy of the descriptor once the data has been read
    pfd.close()
    ......
}
```
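FileInputStream reads from the descriptor's current file offset, and duplicates of a descriptor share that offset; the copy Binder delivers to the receiving process refers to the same open file, so it shares the offset too. If the same ParcelFileDescriptor is read more than once, for example by several registered clients, a later reader can therefore get an empty array. The sketch below demonstrates this on a plain JVM, assuming a temp file in place of the ashmem region and one reused FileDescriptor object in place of the duplicates; readFromStart and sharedOffsetDemo are hypothetical names, and rewinding with FileChannel.position(0) is one possible remedy, not what the repository does.

```kotlin
import java.io.File
import java.io.FileDescriptor
import java.io.FileInputStream
import java.io.RandomAccessFile

// Rewinds the shared offset to 0 before reading, so the result does not depend on
// whether another reader already consumed the descriptor.
fun readFromStart(fd: FileDescriptor): ByteArray {
    val input = FileInputStream(fd)   // not closed here: the caller owns fd
    input.channel.position(0)         // moves the offset shared by all users of fd
    return input.readBytes()
}

// Sizes seen by: a first reader, a naive second reader, and a rewinding reader.
fun sharedOffsetDemo(size: Int): Triple<Int, Int, Int> {
    val file = File.createTempFile("shared-offset", ".bin")
    try {
        file.writeBytes(ByteArray(size) { it.toByte() })
        RandomAccessFile(file, "r").use { raf ->
            val first = FileInputStream(raf.fd).readBytes()        // reads to EOF
            val naiveSecond = FileInputStream(raf.fd).readBytes()  // offset already at EOF
            val rewound = readFromStart(raf.fd)
            return Triple(first.size, naiveSecond.size, rewound.size)
        }
    } finally {
        file.delete()
    }
}
```

For a 1024-byte file the triple is (1024, 0, 1024): the second naive read finds the offset already at the end.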

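- Bind the service
  - Add the .aidl files to the project's src directory
  - Declare an IMyAidlInterface instance (generated from the AIDL file)
  - Create a ServiceConnection instance that implements the android.content.ServiceConnection interface
  - Call Context.bindService() to bind the service, passing in the ServiceConnection instance
  - In the onServiceConnected() implementation, call IMyAidlInterface.Stub.asInterface(binder) to convert the returned IBinder to the IMyAidlInterface type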

```kotlin
// MainActivity.kt
class MainActivity : AppCompatActivity() {

    private var mStub: IMyAidlInterface? = null

    private val serviceConnection = object : ServiceConnection {
        override fun onServiceConnected(name: ComponentName, binder: IBinder) {
            mStub = IMyAidlInterface.Stub.asInterface(binder)
        }

        override fun onServiceDisconnected(name: ComponentName) {
            mStub = null
        }
    }

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)
        button1.setOnClickListener {
            bindService()
        }
    }

    private fun bindService() {
        if (mStub != null) {
            return
        }
        val intent = Intent("com.example.aidl.server.AidlService")
        // Explicit intent: the service lives in the server app's package. Apps targeting
        // Android 11+ also need a <queries> entry for that package in their manifest.
        intent.setClassName("com.example.aidl.server", "com.example.aidl.server.AidlService")
        try {
            val bindSucc = bindService(intent, serviceConnection, Context.BIND_AUTO_CREATE)
            if (bindSucc) {
                Toast.makeText(this, "bind ok", Toast.LENGTH_SHORT).show()
            } else {
                Toast.makeText(this, "bind fail", Toast.LENGTH_SHORT).show()
            }
        } catch (e: Exception) {
            e.printStackTrace()
        }
    }

    override fun onDestroy() {
        if (mStub != null) {
            unbindService(serviceConnection)
        }
        super.onDestroy()
    }
}
```

- Send the data
  - Convert the file to send into a byte array (ByteArray)
  - Create a MemoryFile object
  - Write the byte array into the MemoryFile object
  - Get the FileDescriptor of the MemoryFile
  - Create a ParcelFileDescriptor from the FileDescriptor
  - Call the IPC method to send the ParcelFileDescriptor object

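```kotlin
// MainActivity.kt
private fun sendLargeData() {
    if (mStub == null) {
        return
    }
    try {
        // Read the file from the assets directory into a byte array, closing the stream afterwards
        val byteArray = assets.open("large.jpg").use { it.readBytes() }
        // Create a MemoryFile of exactly that size
        val memoryFile = MemoryFile("image", byteArray.size)
        // Write the byte array into the MemoryFile
        memoryFile.writeBytes(byteArray, 0, 0, byteArray.size)
        // Get the MemoryFile's FileDescriptor. MemoryFileUtils is a helper from this repository
        // (not shown here); MemoryFile's own getFileDescriptor() is a hidden API.
        val fd = MemoryFileUtils.getFileDescriptor(memoryFile)
        // Create a ParcelFileDescriptor from the FileDescriptor
        val pfd = ParcelFileDescriptor.dup(fd)
        // Send the data. The AIDL call is synchronous and the server reads inside
        // client2server(), so the data has been consumed once the call returns.
        mStub?.client2server(pfd)
        // Release the client's handles
        pfd.close()
        memoryFile.close()
    } catch (e: IOException) {
        e.printStackTrace()
    } catch (e: RemoteException) {
        e.printStackTrace()
    }
}
```

At this point the client can send large files to the server. Next we implement large file transfer from the server to the client. The server sends data to the client on its own initiative, so the client only needs to listen.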

- Define the listener callback interface

```aidl
// ICallbackInterface.aidl
package com.example.aidl.aidl;

interface ICallbackInterface {
    void server2client(in ParcelFileDescriptor pfd);
}
```

- Add methods for registering and unregistering the callback to IMyAidlInterface.aidl, as follows:

```aidl
// IMyAidlInterface.aidl
import com.example.aidl.aidl.ICallbackInterface;

interface IMyAidlInterface {
    ......
    void registerCallback(ICallbackInterface callback);
    void unregisterCallback(ICallbackInterface callback);
}
```

- Implement the interface methods on the server

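```kotlin
// AidlService.kt
// RemoteCallbackList keeps track of the registered client callbacks and
// automatically removes those whose process has died
private val callbacks = RemoteCallbackList<ICallbackInterface>()

private val mStub: IMyAidlInterface.Stub = object : IMyAidlInterface.Stub() {
    ......
    override fun registerCallback(callback: ICallbackInterface) {
        callbacks.register(callback)
    }

    override fun unregisterCallback(callback: ICallbackInterface) {
        callbacks.unregister(callback)
    }
}
```

- Register the callback on the client after binding the service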

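```kotlin
// MainActivity.kt
private val callback = object : ICallbackInterface.Stub() {
    // Invoked on a Binder thread, not the main thread: post any UI updates to the main thread
    override fun server2client(pfd: ParcelFileDescriptor) {
        val fileDescriptor = pfd.fileDescriptor
        val fis = FileInputStream(fileDescriptor)
        val bytes = fis.readBytes()
        pfd.close()
        // readBytes() never returns null, so only the empty case needs checking
        if (bytes.isNotEmpty()) {
            ......
        }
    }
}

private val serviceConnection = object : ServiceConnection {
    override fun onServiceConnected(name: ComponentName, binder: IBinder) {
        mStub = IMyAidlInterface.Stub.asInterface(binder)
        // Register the callback as soon as the connection is established
        mStub?.registerCallback(callback)
    }

    override fun onServiceDisconnected(name: ComponentName) {
        mStub = null
    }
}
```

- The server sends the file to the client through the callback. Only the core code is shown here: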

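```kotlin
// AidlService.kt
private fun server2client(pfd: ParcelFileDescriptor) {
    val n = callbacks.beginBroadcast()
    try {
        for (i in 0 until n) {
            val callback = callbacks.getBroadcastItem(i)
            try {
                callback.server2client(pfd)
            } catch (e: RemoteException) {
                // The client may have died; RemoteCallbackList will drop it
                e.printStackTrace()
            }
        }
    } finally {
        // Every beginBroadcast() must be paired with finishBroadcast(), even on errors
        callbacks.finishBroadcast()
    }
}
```

With that, the client and the server can communicate in both directions and transfer large files 😉😉😉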
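RemoteCallbackList's broadcast works on a snapshot: beginBroadcast() copies the currently registered callbacks, so registering or unregistering during the loop does not disturb it, and a second beginBroadcast() before finishBroadcast() is an error. The plain-Kotlin analogue below mirrors only that part of the pattern (CallbackRegistry and broadcast are hypothetical names); the real Android class also identifies callbacks by their IBinder and removes callbacks whose process has died.

```kotlin
// Plain-Kotlin analogue of register / beginBroadcast / getBroadcastItem / finishBroadcast.
class CallbackRegistry<T> {
    private val callbacks = mutableListOf<T>()
    private var snapshot: List<T>? = null

    @Synchronized fun register(cb: T) { if (cb !in callbacks) callbacks += cb }

    @Synchronized fun unregister(cb: T) { callbacks -= cb }

    // Copies the current callbacks; later register/unregister calls do not affect the copy
    @Synchronized fun beginBroadcast(): Int {
        check(snapshot == null) { "beginBroadcast() called while already in a broadcast" }
        val copy = callbacks.toList()
        snapshot = copy
        return copy.size
    }

    @Synchronized fun getBroadcastItem(index: Int): T =
        checkNotNull(snapshot) { "no broadcast in progress" }[index]

    @Synchronized fun finishBroadcast() { snapshot = null }
}

// Same shape as server2client() above: always finish the broadcast, even if an action throws.
fun <T> CallbackRegistry<T>.broadcast(action: (T) -> Unit) {
    val n = beginBroadcast()
    try {
        for (i in 0 until n) action(getBroadcastItem(i))
    } finally {
        finishBroadcast()
    }
}
```

Unregistering a callback from inside the loop still lets the current broadcast reach it; the change only shows up in the next broadcast.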

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aidldemo

Similar Open Source Tools

aidldemo

This repository demonstrates how to achieve cross-process bidirectional communication and large file transfer using AIDL and anonymous shared memory. AIDL is a way to implement Inter-Process Communication in Android, based on Binder. To overcome the data size limit of Binder, anonymous shared memory is used for large file transfer. Shared memory allows processes to share memory by mapping a common memory area into their respective process spaces. While efficient for transferring large data between processes, shared memory lacks synchronization mechanisms, requiring additional mechanisms like semaphores. Android's anonymous shared memory (Ashmem) is based on Linux shared memory and facilitates shared memory transfer using Binder and FileDescriptor. The repository provides practical examples of bidirectional communication and large file transfer between client and server using AIDL interfaces and MemoryFile in Android.

agents-flex

Agents-Flex is a LLM Application Framework like LangChain base on Java. It provides a set of tools and components for building LLM applications, including LLM Visit, Prompt and Prompt Template Loader, Function Calling Definer, Invoker and Running, Memory, Embedding, Vector Storage, Resource Loaders, Document, Splitter, Loader, Parser, LLMs Chain, and Agents Chain.

herc.ai

Herc.ai is a powerful library for interacting with the Herc.ai API. It offers free access to users and supports all languages. Users can benefit from Herc.ai's features unlimitedly with a one-time subscription and API key. The tool provides functionalities for question answering and text-to-image generation, with support for various models and customization options. Herc.ai can be easily integrated into CLI, CommonJS, TypeScript, and supports beta models for advanced usage. Developed by FiveSoBes and Luppux Development.

nb_utils

nb_utils is a Flutter package that provides a collection of useful methods, extensions, widgets, and utilities to simplify Flutter app development. It includes features like shared preferences, text styles, decorations, widgets, extensions for strings, colors, build context, date time, device, numbers, lists, scroll controllers, system methods, network utils, JWT decoding, and custom dialogs. The package aims to enhance productivity and streamline common tasks in Flutter development.

island-ai

island-ai is a TypeScript toolkit tailored for developers engaging with structured outputs from Large Language Models. It offers streamlined processes for handling, parsing, streaming, and leveraging AI-generated data across various applications. The toolkit includes packages like zod-stream for interfacing with LLM streams, stream-hooks for integrating streaming JSON data into React applications, and schema-stream for JSON streaming parsing based on Zod schemas. Additionally, related packages like @instructor-ai/instructor-js focus on data validation and retry mechanisms, enhancing the reliability of data processing workflows.

DeepMCPAgent

DeepMCPAgent is a model-agnostic tool that enables the creation of LangChain/LangGraph agents powered by MCP tools over HTTP/SSE. It allows for dynamic discovery of tools, connection to remote MCP servers, and integration with any LangChain chat model instance. The tool provides a deep agent loop for enhanced functionality and supports typed tool arguments for validated calls. DeepMCPAgent emphasizes the importance of MCP-first approach, where agents dynamically discover and call tools rather than hardcoding them.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

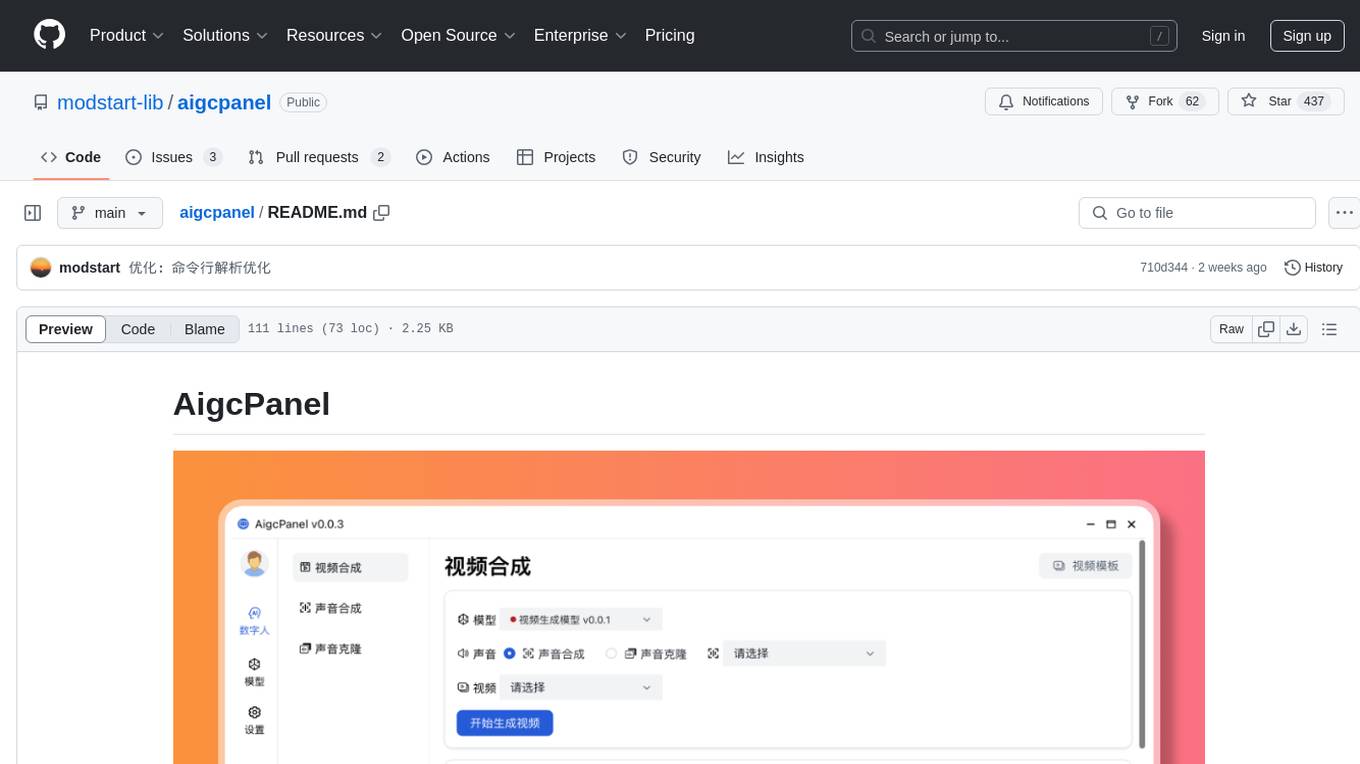

aigcpanel

AigcPanel is a simple and easy-to-use all-in-one AI digital human system that even beginners can use. It supports video synthesis, voice synthesis, voice cloning, simplifies local model management, and allows one-click import and use of AI models. It prohibits the use of this product for illegal activities and users must comply with the laws and regulations of the People's Republic of China.

orch

orch is a library for building language model powered applications and agents for the Rust programming language. It can be used for tasks such as text generation, streaming text generation, structured data generation, and embedding generation. The library provides functionalities for executing various language model tasks and can be integrated into different applications and contexts. It offers flexibility for developers to create language model-powered features and applications in Rust.

Janus

Janus is a series of unified multimodal understanding and generation models, including Janus-Pro, Janus, and JanusFlow. Janus-Pro is an advanced version that improves both multimodal understanding and visual generation significantly. Janus decouples visual encoding for unified multimodal understanding and generation, surpassing previous models. JanusFlow harmonizes autoregression and rectified flow for unified multimodal understanding and generation, achieving comparable or superior performance to specialized models. The models are available for download and usage, supporting a broad range of research in academic and commercial communities.

plants_disease_detection

This repository contains code for the AI challenger competition on plant disease detection. The goal is to classify nearly 50,000 plant leaf photos into 61 categories based on 'species-disease-severity'. The framework used is Keras with TensorFlow backend, implementing DenseNet for image classification. Data is uploaded to a private dataset on Kaggle for model training. The code includes data preparation, model training, and prediction steps.

MING

MING is an open-sourced Chinese medical consultation model fine-tuned based on medical instructions. The main functions of the model are as follows: Medical Q&A: answering medical questions and analyzing cases. Intelligent consultation: giving diagnosis results and suggestions after multiple rounds of consultation.

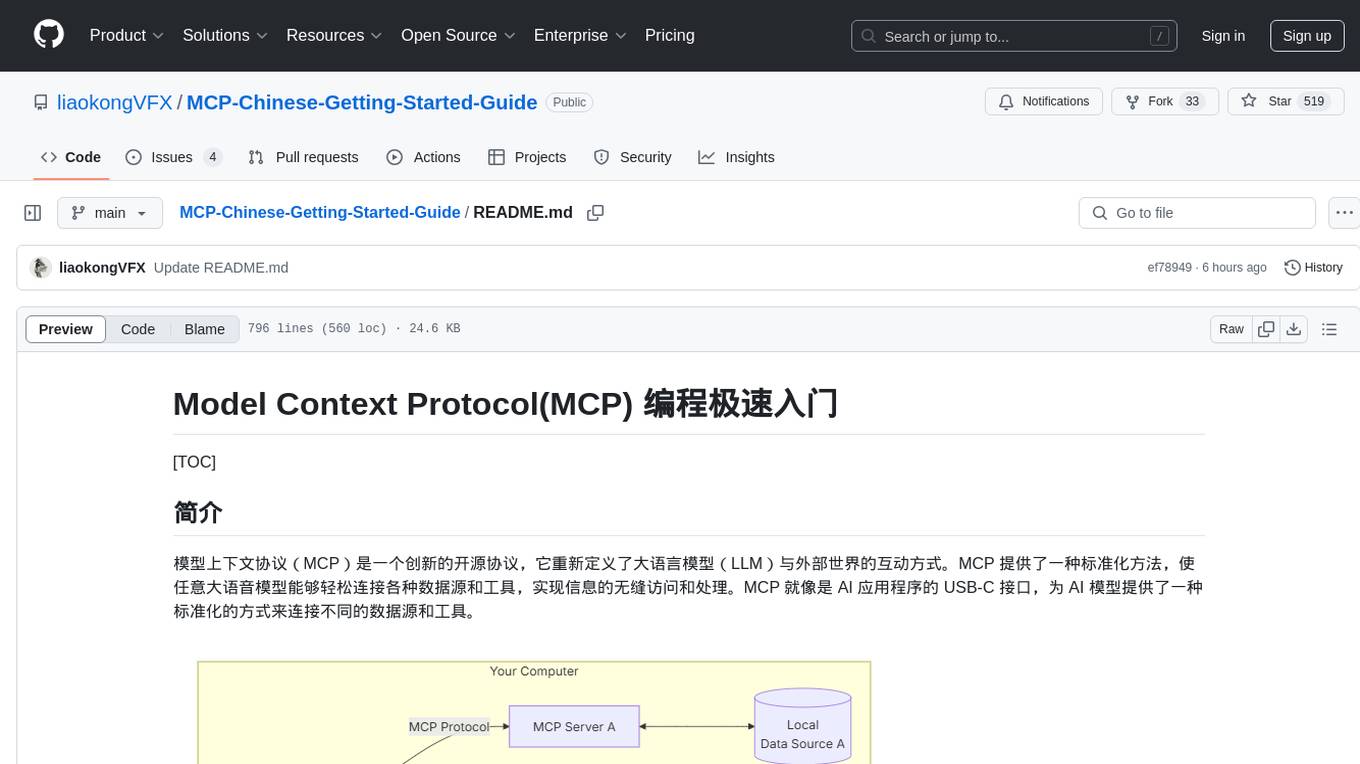

MCP-Chinese-Getting-Started-Guide

The Model Context Protocol (MCP) is an innovative open-source protocol that redefines the interaction between large language models (LLMs) and the external world. MCP provides a standardized approach for any large language model to easily connect to various data sources and tools, enabling seamless access and processing of information. MCP acts as a USB-C interface for AI applications, offering a standardized way for AI models to connect to different data sources and tools. The core functionalities of MCP include Resources, Prompts, Tools, Sampling, Roots, and Transports. This guide focuses on developing an MCP server for network search using Python and uv management. It covers initializing the project, installing dependencies, creating a server, implementing tool execution methods, and running the server. Additionally, it explains how to debug the MCP server using the Inspector tool, how to call tools from the server, and how to connect multiple MCP servers. The guide also introduces the Sampling feature, which allows pre- and post-tool execution operations, and demonstrates how to integrate MCP servers into LangChain for AI applications.

For similar tasks

aidldemo

This repository demonstrates how to achieve cross-process bidirectional communication and large file transfer using AIDL and anonymous shared memory. AIDL is a way to implement Inter-Process Communication in Android, based on Binder. To overcome the data size limit of Binder, anonymous shared memory is used for large file transfer. Shared memory allows processes to share memory by mapping a common memory area into their respective process spaces. While efficient for transferring large data between processes, shared memory lacks synchronization mechanisms, requiring additional mechanisms like semaphores. Android's anonymous shared memory (Ashmem) is based on Linux shared memory and facilitates shared memory transfer using Binder and FileDescriptor. The repository provides practical examples of bidirectional communication and large file transfer between client and server using AIDL interfaces and MemoryFile in Android.

For similar jobs

react-native-vision-camera

VisionCamera is a powerful, high-performance Camera library for React Native. It features Photo and Video capture, QR/Barcode scanner, Customizable devices and multi-cameras ("fish-eye" zoom), Customizable resolutions and aspect-ratios (4k/8k images), Customizable FPS (30..240 FPS), Frame Processors (JS worklets to run facial recognition, AI object detection, realtime video chats, ...), Smooth zooming (Reanimated), Fast pause and resume, HDR & Night modes, Custom C++/GPU accelerated video pipeline (OpenGL).

iris_android

This repository contains an offline Android chat application based on llama.cpp example. Users can install, download models, and run the app completely offline and privately. To use the app, users need to go to the releases page, download and install the app. Building the app requires downloading Android Studio, cloning the repository, and importing it into Android Studio. The app can be run offline by following specific steps such as enabling developer options, wireless debugging, and downloading the stable LM model. The project is maintained by Nerve Sparks and contributions are welcome through creating feature branches and pull requests.

aiolauncher_scripts

AIO Launcher Scripts is a collection of Lua scripts that can be used with AIO Launcher to enhance its functionality. These scripts can be used to create widget scripts, search scripts, and side menu scripts. They provide various functions such as displaying text, buttons, progress bars, charts, and interacting with app widgets. The scripts can be used to customize the appearance and behavior of the launcher, add new features, and interact with external services.

gemini-android

Gemini Android is a repository showcasing Google's Generative AI on Android using Stream Chat SDK for Compose. It demonstrates the Gemini API for Android, implements UI elements with Jetpack Compose, utilizes Android architecture components like Hilt and AppStartup, performs background tasks with Kotlin Coroutines, and integrates chat systems with Stream Chat Compose SDK for real-time event handling. The project also provides technical content, instructions on building the project, tech stack details, architecture overview, modularization strategies, and a contribution guideline. It follows Google's official architecture guidance and offers a real-world example of app architecture implementation.

blinkid-android

The BlinkID Android SDK is a comprehensive solution for implementing secure document scanning and extraction. It offers powerful capabilities for extracting data from a wide range of identification documents. The SDK provides features for integrating document scanning into Android apps, including camera requirements, SDK resource pre-bundling, customizing the UX, changing default strings and localization, troubleshooting integration difficulties, and using the SDK through various methods. It also offers options for completely custom UX with low-level API integration. The SDK size is optimized for different processor architectures, and API documentation is available for reference. For any questions or support, users can contact the Microblink team at help.microblink.com.

react-native-airship

React Native Airship is a module designed to integrate Airship's iOS and Android SDKs into React Native applications. It provides developers with the necessary tools to incorporate Airship's push notification services seamlessly. The module offers a simple and efficient way to leverage Airship's features within React Native projects, enhancing user engagement and retention through targeted notifications.

gpt_mobile

GPT Mobile is a chat assistant for Android that allows users to chat with multiple models at once. It supports various platforms such as OpenAI GPT, Anthropic Claude, and Google Gemini. Users can customize temperature, top p (Nucleus sampling), and system prompt. The app features local chat history, Material You style UI, dark mode support, and per app language setting for Android 13+. It is built using 100% Kotlin, Jetpack Compose, and follows a modern app architecture for Android developers.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.