DeepMCPAgent

Model-agnostic plug-n-play LangChain/LangGraph agents powered entirely by MCP tools over HTTP/SSE.

Stars: 212

DeepMCPAgent is a model-agnostic tool for building LangChain/LangGraph agents powered by MCP tools over HTTP/SSE. It discovers tools dynamically, connects to remote MCP servers, and works with any LangChain chat model instance. It runs a DeepAgents loop when DeepAgents is installed (falling back to a LangGraph ReAct loop otherwise) and supports typed tool arguments for validated calls. DeepMCPAgent takes an MCP-first approach: agents discover and call tools dynamically rather than hardcoding them.

README:

Model-agnostic LangChain/LangGraph agents powered entirely by MCP tools over HTTP/SSE.

Discover MCP tools dynamically. Bring your own LangChain model. Build production-ready agents—fast.


- 🔌 Zero manual tool wiring — tools are discovered dynamically from MCP servers (HTTP/SSE)

- 🌐 External APIs welcome — connect to remote MCP servers (with headers/auth)

- 🧠 Model-agnostic — pass any LangChain chat model instance (OpenAI, Anthropic, Ollama, Groq, local, …)

- ⚡ DeepAgents (optional) — if installed, you get a deep agent loop; otherwise robust LangGraph ReAct fallback

- 🛠️ Typed tool args — JSON-Schema → Pydantic → LangChain BaseTool (typed, validated calls)

- 🧪 Quality bar — mypy (strict), ruff, pytest, GitHub Actions, docs
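To make the "typed tool args" idea concrete, here is a dependency-free sketch of checking call arguments against a tool's JSON Schema. This is not DeepMCPAgent's code: the package converts schemas to Pydantic models. `validate_args` and `ADD_SCHEMA` are hypothetical names, the validator covers only a small subset of JSON Schema (`properties`, `required`, primitive `type`s), and the `add(a, b)` schema is an assumption about the sample math server.

```python
from typing import Any, Dict, List, Tuple

# Map a subset of JSON-Schema primitive type names to Python types.
_JSON_TYPES: Dict[str, Tuple[type, ...]] = {
    "string": (str,),
    "integer": (int,),
    "number": (int, float),
    "boolean": (bool,),
    "array": (list,),
    "object": (dict,),
}


def validate_args(schema: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Return validation errors for a tool call (empty list if it looks valid)."""
    errors: List[str] = []
    props: Dict[str, Any] = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            errors.append(f"missing required argument: {name}")
    for name, value in args.items():
        if name not in props:
            errors.append(f"unexpected argument: {name}")
            continue
        expected = props[name].get("type")
        allowed = _JSON_TYPES.get(expected)
        if allowed is None:  # unknown or absent type: accept anything
            continue
        wrong_type = not isinstance(value, allowed)
        # bool is a subclass of int in Python, so reject it unless a boolean is expected.
        sneaky_bool = isinstance(value, bool) and expected != "boolean"
        if wrong_type or sneaky_bool:
            errors.append(f"{name}: expected {expected}, got {type(value).__name__}")
    return errors


# Hypothetical input schema for an `add` tool on the example math server.
ADD_SCHEMA = {
    "type": "object",
    "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
    "required": ["a", "b"],
}

print(validate_args(ADD_SCHEMA, {"a": 3, "b": 5}))  # []
print(validate_args(ADD_SCHEMA, {"a": "3"}))
```

Rejecting bad arguments before the network call is the point of typed tools: the agent gets a precise error instead of a confusing server-side failure.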

MCP first. Agents shouldn’t hardcode tools — they should discover and call them. DeepMCPAgent builds that bridge.

Install from PyPI:

pip install "deepmcpagent[deep]"

This installs DeepMCPAgent with DeepAgents support (recommended) for the best agent loop. Other optional extras:

- dev → linting, typing, tests

- docs → MkDocs + Material + mkdocstrings

- examples → dependencies used by bundled examples
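The deep extra matters because the agent loop is chosen at runtime: DeepAgents if it is installed, otherwise the LangGraph ReAct fallback. A minimal sketch of that kind of optional-dependency check using only the standard library; `has_module` and `select_agent_loop` are hypothetical names, the return labels are illustrative, and the importable module name `deepagents` is an assumption.

```python
import importlib.util


def has_module(name: str) -> bool:
    """True if a top-level module can be imported, without importing it."""
    return importlib.util.find_spec(name) is not None


def select_agent_loop(deep_module: str = "deepagents") -> str:
    """Hypothetical selector: prefer DeepAgents, else fall back to ReAct."""
    if has_module(deep_module):
        return "deepagents"
    return "langgraph-react"


print(select_agent_loop())
```

Checking with `find_spec` rather than a bare `import` keeps the probe cheap and side-effect free.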

# install with deepagents + dev tooling

pip install "deepmcpagent[deep,dev]"

Start the sample MCP server:

python examples/servers/math_server.py

This serves an MCP endpoint at: http://127.0.0.1:8000/mcp

Then run the example agent:

python examples/use_agent.py
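Under the hood, the example agent and the math server speak MCP, which frames every message as JSON-RPC 2.0; tool discovery and invocation use the `tools/list` and `tools/call` methods. The sketch below only builds those two request bodies with the standard library so you can see their shape. It is not how DeepMCPAgent sends them (the FastMCP client handles transport, and a real session starts with an `initialize` handshake), and the tool name `add` with arguments `a` and `b` is an assumption about the sample math server.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_ids = count(1)  # request ids only need to be unique within a session


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object of the kind MCP uses."""
    request: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Discover tools, then call one (tool and argument names are assumptions).
list_req = jsonrpc_request("tools/list")
call_req = jsonrpc_request("tools/call", {"name": "add", "arguments": {"a": 21, "b": 21}})

print(json.dumps(call_req))
```

This prints `{"jsonrpc": "2.0", "id": 2, "method": "tools/call", "params": {"name": "add", "arguments": {"a": 21, "b": 21}}}`.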

DeepMCPAgent lets you pass any LangChain chat model instance (or a provider id string if you prefer init_chat_model):

import asyncio

from deepmcpagent import HTTPServerSpec, build_deep_agent

# choose your model:
# from langchain_openai import ChatOpenAI
# model = ChatOpenAI(model="gpt-4.1")

# from langchain_anthropic import ChatAnthropic
# model = ChatAnthropic(model="claude-3-5-sonnet-latest")

# from langchain_community.chat_models import ChatOllama
# model = ChatOllama(model="llama3.1")

# ...or pass a provider id string, resolved via LangChain's init_chat_model()
model = "openai:gpt-4.1"


async def main():
    servers = {
        "math": HTTPServerSpec(
            url="http://127.0.0.1:8000/mcp",
            transport="http",  # or "sse"
            # headers={"Authorization": "Bearer <token>"},
        ),
    }

    graph, _ = await build_deep_agent(
        servers=servers,
        model=model,
        instructions="Use MCP tools precisely.",
    )

    out = await graph.ainvoke({"messages": [{"role": "user", "content": "add 21 and 21 with tools"}]})
    print(out)


asyncio.run(main())

Tip: If you pass a string like "openai:gpt-4.1", we’ll call LangChain’s init_chat_model() for you (and it will read env vars like OPENAI_API_KEY). Passing a model instance gives you full control.

# list tools from one or more HTTP servers
deepmcpagent list-tools \
  --http name=math url=http://127.0.0.1:8000/mcp transport=http \
  --model-id "openai:gpt-4.1"

# interactive agent chat (HTTP/SSE servers only)
deepmcpagent run \
  --http name=math url=http://127.0.0.1:8000/mcp transport=http \
  --model-id "openai:gpt-4.1"

The CLI accepts repeated --http blocks; add header.X=Y pairs for auth:

--http name=ext url=https://api.example.com/mcp transport=http header.Authorization="Bearer TOKEN"
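Each --http block is a set of key=value tokens, with header.X=Y pairs routed into a headers map. A hypothetical parser that shows the idea; it is not the CLI's actual implementation, and `parse_http_block` is an illustrative name. Here `shlex.split` stands in for the shell's own word splitting, so the quoted "Bearer TOKEN" value arrives as a single token.

```python
import shlex
from typing import Any, Dict


def parse_http_block(block: str) -> Dict[str, Any]:
    """Turn 'name=... url=... header.K=V' tokens into a server-spec dict."""
    spec: Dict[str, Any] = {"headers": {}}
    for token in shlex.split(block):
        key, sep, value = token.partition("=")  # split at the first '=' only
        if not sep:
            raise ValueError(f"expected key=value, got {token!r}")
        if key.startswith("header."):
            spec["headers"][key[len("header."):]] = value
        else:
            spec[key] = value
    return spec


block = 'name=ext url=https://api.example.com/mcp transport=http header.Authorization="Bearer TOKEN"'
print(parse_http_block(block))
```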

┌────────────────┐        list_tools / call_tool        ┌──────────────────────────┐
│ LangChain/LLM  │  ──────────────────────────────────▶ │ FastMCP Client (HTTP/SSE)│
│  (your model)  │                                      └───────────┬──────────────┘
└──────┬─────────┘  tools (LC BaseTool)                             │
       │                                                            │
       ▼                                                            ▼
LangGraph Agent                                   One or many MCP servers (remote APIs)
(or DeepAgents)                                   e.g., math, github, search, ...

- HTTPServerSpec(...) → FastMCP client (single client, multiple servers)

- Tool discovery → JSON-Schema → Pydantic → LangChain BaseTool

- Agent loop → DeepAgents (if installed) or LangGraph ReAct fallback
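The first step, HTTPServerSpec(...) → FastMCP client, amounts to translating a dict of named specs into one mcpServers configuration that a single client can fan out over. A rough sketch under stated assumptions: `HTTPSpec` is a simplified, hypothetical stand-in for the package's HTTPServerSpec, `to_mcp_config` is an illustrative name, and the target keys (url, transport, headers) are assumed; the real servers_to_mcp_config() may emit different fields.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Literal


@dataclass
class HTTPSpec:
    """Simplified, hypothetical stand-in for HTTPServerSpec."""
    url: str
    transport: Literal["http", "streamable-http", "sse"] = "http"
    headers: Dict[str, str] = field(default_factory=dict)


def to_mcp_config(servers: Dict[str, HTTPSpec]) -> Dict[str, Any]:
    """Build one {'mcpServers': {...}} mapping covering every named server."""
    entries: Dict[str, Any] = {}
    for name, spec in servers.items():
        entry: Dict[str, Any] = {"url": spec.url, "transport": spec.transport}
        if spec.headers:  # only include headers when auth is configured
            entry["headers"] = dict(spec.headers)
        entries[name] = entry
    return {"mcpServers": entries}


config = to_mcp_config({
    "math": HTTPSpec("http://127.0.0.1:8000/mcp"),
    "ext": HTTPSpec("https://api.example.com/mcp", "sse", {"Authorization": "Bearer TOKEN"}),
})
print(config)
```

One mapping keyed by server name is what lets a single client talk to many servers.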

flowchart LR

%% Groupings

subgraph User["👤 User / App"]

Q["Prompt / Task"]

CLI["CLI (Typer)"]

PY["Python API"]

end

subgraph Agent["🤖 Agent Runtime"]

DIR["build_deep_agent()"]

PROMPT["prompt.py\n(DEFAULT_SYSTEM_PROMPT)"]

subgraph AGRT["Agent Graph"]

DA["DeepAgents loop\n(if installed)"]

REACT["LangGraph ReAct\n(fallback)"]

end

LLM["LangChain Model\n(instance or init_chat_model(provider-id))"]

TOOLS["LangChain Tools\n(BaseTool[])"]

end

subgraph MCP["🧰 Tooling Layer (MCP)"]

LOADER["MCPToolLoader\n(JSON-Schema ➜ Pydantic ➜ BaseTool)"]

TOOLWRAP["_FastMCPTool\n(async _arun → client.call_tool)"]

end

subgraph FMCP["🌐 FastMCP Client"]

CFG["servers_to_mcp_config()\n(mcpServers dict)"]

MULTI["FastMCPMulti\n(fastmcp.Client)"]

end

subgraph SRV["🛠 MCP Servers (HTTP/SSE)"]

S1["Server A\n(e.g., math)"]

S2["Server B\n(e.g., search)"]

S3["Server C\n(e.g., github)"]

end

%% Edges

Q -->|query| CLI

Q -->|query| PY

CLI --> DIR

PY --> DIR

DIR --> PROMPT

DIR --> LLM

DIR --> LOADER

DIR --> AGRT

LOADER --> MULTI

CFG --> MULTI

MULTI -->|list_tools| SRV

LOADER --> TOOLS

TOOLS --> AGRT

AGRT <-->|messages| LLM

AGRT -->|tool calls| TOOLWRAP

TOOLWRAP --> MULTI

MULTI -->|call_tool| SRV

SRV -->|tool result| MULTI --> TOOLWRAP --> AGRT -->|final answer| CLI

AGRT -->|final answer| PY

sequenceDiagram

autonumber

participant U as User

participant CLI as CLI/Python

participant Builder as build_deep_agent()

participant Loader as MCPToolLoader

participant Graph as Agent Graph (DeepAgents or ReAct)

participant LLM as LangChain Model

participant Tool as _FastMCPTool

participant FMCP as FastMCP Client

participant S as MCP Server (HTTP/SSE)

U->>CLI: Enter prompt

CLI->>Builder: build_deep_agent(servers, model, instructions?)

Builder->>Loader: get_all_tools()

Loader->>FMCP: list_tools()

FMCP->>S: HTTP(S)/SSE list_tools

S-->>FMCP: tools + JSON-Schema

FMCP-->>Loader: tool specs

Loader-->>Builder: BaseTool[]

Builder-->>CLI: (Graph, Loader)

U->>Graph: ainvoke({messages:[user prompt]})

Graph->>LLM: Reason over system + messages + tool descriptions

LLM-->>Graph: Tool call (e.g., add(a=3,b=5))

Graph->>Tool: _arun(a=3,b=5)

Tool->>FMCP: call_tool("add", {a:3,b:5})

FMCP->>S: POST /mcp tools/call("add", {...})

S-->>FMCP: result { data: 8 }

FMCP-->>Tool: result

Tool-->>Graph: ToolMessage(content=8)

Graph->>LLM: Continue with observations

LLM-->>Graph: Final response "(3 + 5) * 7 = 56"

Graph-->>CLI: messages (incl. final LLM answer)

stateDiagram-v2

[*] --> AcquireTools

AcquireTools: Discover MCP tools via FastMCP\n(JSON-Schema ➜ Pydantic ➜ BaseTool)

AcquireTools --> Plan

Plan: LLM plans next step\n(uses system prompt + tool descriptions)

Plan --> CallTool: if tool needed

Plan --> Respond: if direct answer sufficient

CallTool: _FastMCPTool._arun\n→ client.call_tool(name, args)

CallTool --> Observe: receive tool result

Observe: Parse result payload (data/text/content)

Observe --> Decide

Decide: More tools needed?

Decide --> Plan: yes

Decide --> Respond: no

Respond: LLM crafts final message

Respond --> [*]

classDiagram

class StdioServerSpec {

+command: str

+args: List[str]

+env: Dict[str,str]

+cwd: Optional[str]

+keep_alive: bool

}

class HTTPServerSpec {

+url: str

+transport: Literal["http","streamable-http","sse"]

+headers: Dict[str,str]

+auth: Optional[str]

}

class FastMCPMulti {

-_client: fastmcp.Client

+client(): Client

}

class MCPToolLoader {

-_multi: FastMCPMulti

+get_all_tools(): List[BaseTool]

+list_tool_info(): List[ToolInfo]

}

class _FastMCPTool {

+name: str

+description: str

+args_schema: Type[BaseModel]

-_tool_name: str

-_client: Any

+_arun(**kwargs) async

}

class ToolInfo {

+server_guess: str

+name: str

+description: str

+input_schema: Dict[str,Any]

}

class build_deep_agent {

+servers: Mapping[str,ServerSpec]

+model: ModelLike

+instructions?: str

+returns: (graph, loader)

}

ServerSpec <|-- StdioServerSpec

ServerSpec <|-- HTTPServerSpec

FastMCPMulti o--> ServerSpec : uses servers_to_mcp_config()

MCPToolLoader o--> FastMCPMulti

MCPToolLoader --> _FastMCPTool : creates

_FastMCPTool ..> BaseTool

build_deep_agent --> MCPToolLoader : discovery

build_deep_agent --> _FastMCPTool : tools for agent

flowchart TD

subgraph App["Your App / Service"]

UI["CLI / API / Notebook"]

Code["deepmcpagent (Python pkg)\n- config.py\n- clients.py\n- tools.py\n- agent.py\n- prompt.py"]

UI --> Code

end

subgraph Cloud["LLM Provider(s)"]

P1["OpenAI / Anthropic / Groq / Ollama..."]

end

subgraph Net["Network"]

direction LR

FMCP["FastMCP Client\n(HTTP/SSE)"]

FMCP ---|mcpServers| Code

end

subgraph Servers["MCP Servers"]

direction LR

A["Service A (HTTP)\n/path: /mcp"]

B["Service B (SSE)\n/path: /mcp"]

C["Service C (HTTP)\n/path: /mcp"]

end

Code -->|init_chat_model or model instance| P1

Code --> FMCP

FMCP --> A

FMCP --> B

FMCP --> C

flowchart TD

Start([Tool Call]) --> Try{"client.call_tool(name,args)"}

Try -- ok --> Parse["Extract data/text/content/result"]

Parse --> Return[Return ToolMessage to Agent]

Try -- raises --> Err["Tool/Transport Error"]

Err --> Wrap["ToolMessage(status=error, content=trace)"]

Wrap --> Agent["Agent observes error\nand may retry / alternate tool"]

These diagrams reflect the current implementation:

- Model is required (string provider-id or LangChain model instance).

- MCP tools only, discovered at runtime via FastMCP (HTTP/SSE).

- Agent loop prefers DeepAgents if installed; otherwise LangGraph ReAct.

- Tools are typed via JSON-Schema ➜ Pydantic ➜ LangChain BaseTool.

- Fancy console output shows discovered tools, calls, results, and final answer.
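The state diagram (Plan → CallTool → Observe → Decide → Respond) and the error-handling flowchart describe a familiar ReAct-style control flow. Below is a toy, dependency-free sketch of it: a scripted `plan` function stands in for the LLM, plain Python callables stand in for the discovered MCP tools, and tool exceptions become error observations the planner can react to instead of crashing the run. None of these names (`run_loop`, `scripted_planner`, `TOOLS`) are DeepMCPAgent APIs.

```python
from typing import Callable, Dict, List, Optional, Tuple

ToolCall = Tuple[str, dict]         # (tool name, arguments)
Observation = Tuple[str, str, str]  # (tool name, status, content)


def run_loop(
    plan: Callable[[List[Observation]], Optional[ToolCall]],
    tools: Dict[str, Callable[..., object]],
    max_steps: int = 5,
) -> List[Observation]:
    """Plan, call a tool, observe; repeat until the planner stops asking."""
    observations: List[Observation] = []
    for _ in range(max_steps):
        step = plan(observations)  # Plan: next tool call, or None
        if step is None:  # Decide: no more tools needed, so Respond
            break
        name, args = step
        try:  # CallTool
            result = tools[name](**args)
            observations.append((name, "ok", str(result)))  # Observe
        except Exception as exc:  # errors become observations, not crashes
            observations.append((name, "error", f"{type(exc).__name__}: {exc}"))
    return observations


def scripted_planner(obs: List[Observation]) -> Optional[ToolCall]:
    """Hypothetical stand-in for the LLM: add, then multiply, then stop."""
    if not obs:
        return ("add", {"a": 3, "b": 5})
    if len(obs) == 1:
        return ("multiply", {"a": int(obs[0][2]), "b": 7})
    return None


TOOLS = {"add": lambda a, b: a + b, "multiply": lambda a, b: a * b}
print(run_loop(scripted_planner, TOOLS))
```

The `max_steps` cap is a design choice worth keeping in any real loop: a model that keeps requesting tools should not run forever.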

# install dev tooling
pip install -e ".[dev]"

# lint & type-check
ruff check .
mypy

# run tests
pytest -q

- Your keys, your model: we don’t enforce a provider; pass any LangChain model.

- Use HTTP headers in HTTPServerSpec to deliver bearer/OAuth tokens to servers.

- PEP 668: externally managed environment (macOS + Homebrew). Use a virtualenv:

  python3 -m venv .venv
  source .venv/bin/activate

- 404 Not Found when connecting: ensure your server uses a path (e.g., /mcp) and your client URL includes it.

- Tool calls failing / attribute errors: ensure you’re on the latest version; our tool wrapper uses PrivateAttr for client state.

- High token counts: that’s normal with tool-calling models. Use smaller models for dev.
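For the 404 case, a quick pre-flight check of the configured URL can catch a missing path before any request is made. A small stdlib sketch: `check_mcp_url` is a hypothetical helper, and treating /mcp as the expected path mirrors the example server rather than any protocol requirement.

```python
from typing import List
from urllib.parse import urlparse


def check_mcp_url(url: str, expected_path: str = "/mcp") -> List[str]:
    """Return problems found in an MCP endpoint URL (empty list if it looks fine)."""
    problems: List[str] = []
    parts = urlparse(url)
    if parts.scheme not in ("http", "https"):
        problems.append(f"unsupported scheme: {parts.scheme or '(none)'}")
    if not parts.netloc:
        problems.append("missing host")
    # A missing or different path is what typically produces the 404 described above.
    if parts.path.rstrip("/") != expected_path:
        shown = parts.path or "/"
        problems.append(f"path is {shown!r}, expected {expected_path!r}")
    return problems


print(check_mcp_url("http://127.0.0.1:8000"))
```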

Apache-2.0 — see LICENSE.

- The MCP community for a clean protocol.

- LangChain and LangGraph for powerful agent runtimes.

- FastMCP for solid client & server implementations.


Alternative AI tools for DeepMCPAgent

Similar Open Source Tools


Callytics

Callytics is an advanced call analytics solution that leverages speech recognition and large language model (LLM) technologies to analyze phone conversations from customer service and call centers. By processing both the audio and text of each call, it provides insights such as sentiment analysis, topic detection, conflict detection, profanity detection, and summarization. These techniques help businesses optimize customer interactions, identify areas for improvement, and enhance overall service quality. When an audio file is placed in the .data/input directory, the entire pipeline starts automatically, and the resulting data is inserted into the database. This is version 1.1.0; more features are planned, models will be fine-tuned or trained from scratch, and further optimizations will be applied.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other LLMs. With Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

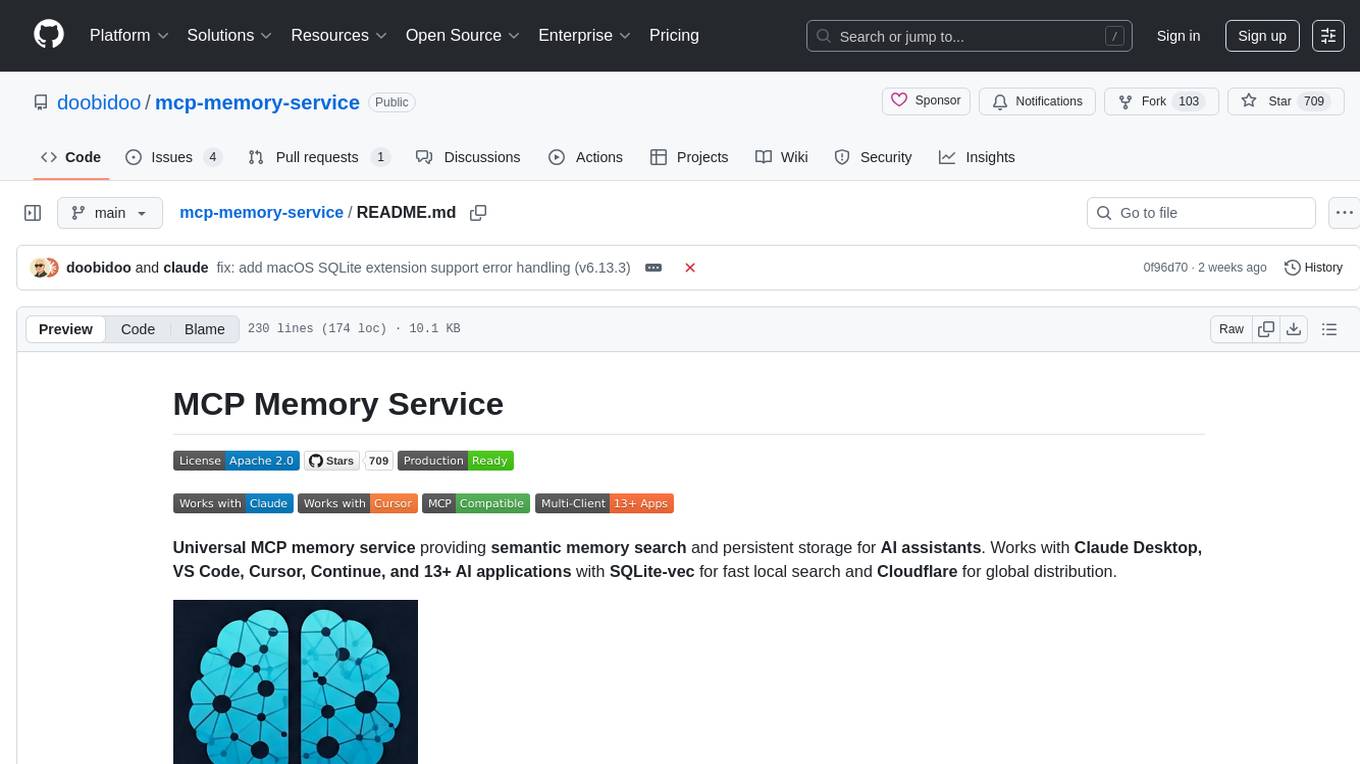

mcp-memory-service

The MCP Memory Service is a universal memory service designed for AI assistants, providing semantic memory search and persistent storage. It works with various AI applications and offers fast local search using SQLite-vec and global distribution through Cloudflare. The service supports intelligent memory management, universal compatibility with AI tools, flexible storage options, and is production-ready with cross-platform support and secure connections. Users can store and recall memories, search by tags, check system health, and configure the service for Claude Desktop integration and environment variables.

Matryoshka

Matryoshka is a tool that processes documents 100x larger than your LLM's context window without vector databases or chunking heuristics. It uses Recursive Language Models to reason about queries and output symbolic commands executed by a logic engine. The tool provides a constrained symbolic language called Nucleus based on S-expressions, ensuring reduced entropy, fail-fast validation, safe execution, and small model friendliness. It includes components like the Nucleus DSL, Lattice Engine, In-Memory Handle Storage, and the role of the LLM in reasoning. Matryoshka offers CLI tools for document analysis, MCP integration for token savings, and programmatic access. It supports symbol operations, collection operations, string operations, type coercion, program synthesis, cross-turn state, and final answer formatting.

LLM-TradeBot

LLM-TradeBot is an Intelligent Multi-Agent Quantitative Trading Bot based on the Adversarial Decision Framework (ADF). It achieves high win rates and low drawdown in automated futures trading through market regime detection, price position awareness, dynamic score calibration, and multi-layer physical auditing. The bot prioritizes judging 'IF we should trade' before deciding 'HOW to trade' and offers features like multi-agent collaboration, agent configuration, agent chatroom, AUTO1 symbol selection, multi-LLM support, multi-account trading, async concurrency, CLI headless mode, test/live mode toggle, safety mechanisms, and full-link auditing. The system architecture includes a multi-agent architecture with various agents responsible for different tasks, a four-layer strategy filter, and detailed data flow diagrams. The bot also supports backtesting, full-link data auditing, and safety warnings for users.

hugging-llm

HuggingLLM is a project that aims to introduce ChatGPT to a wider audience, particularly those interested in using the technology to create new products or applications. The project focuses on providing practical guidance on how to use ChatGPT-related APIs to create new features and applications. It also includes detailed background information and system design introductions for relevant tasks, as well as example code and implementation processes. The project is designed for individuals with some programming experience who are interested in using ChatGPT for practical applications, and it encourages users to experiment and create their own applications and demos.

supercompat

Supercompat is a tool that enables users to integrate various AI providers like Anthropic, Groq, or Mistral with the OpenAI-compatible Assistants API. It provides adapters for different AI services and storage options, allowing seamless communication between the user's application and the AI providers. With Supercompat, developers can easily leverage the capabilities of multiple AI services within their projects, enhancing the functionality and intelligence of their applications.

fit-framework

FIT Framework is a Java enterprise AI development framework that provides a multi-language function engine (FIT), a flow orchestration engine (WaterFlow), and a Java ecosystem alternative solution (FEL). It runs in native/Spring dual mode, supports plug-and-play and intelligent deployment, seamlessly unifying large models and business systems. FIT Core offers language-agnostic computation base with plugin hot-swapping and intelligent deployment. WaterFlow Engine breaks the dimensional barrier of BPM and reactive programming, enabling graphical orchestration and declarative API-driven logic composition. FEL revolutionizes LangChain for the Java ecosystem, encapsulating large models, knowledge bases, and toolchains to integrate AI capabilities into Java technology stack seamlessly. The framework emphasizes engineering practices with intelligent conventions to reduce boilerplate code and offers flexibility for deep customization in complex scenarios.

mcp-fusion

MCP Fusion is a Model-View-Agent framework for the Model Context Protocol, providing structured perception for AI agents with validated data, domain rules, UI blocks, and action affordances in every response. It introduces the MVA pattern, where a Presenter layer sits between data and the AI agent, ensuring consistent, validated, contextually-rich data across the API surface. The tool facilitates schema validation, system rules, UI blocks, cognitive guardrails, and action affordances for domain entities. It offers tools for defining actions, prompts, middleware, error handling, type-safe clients, observability, streaming progress, and more, all integrated with the Model Context Protocol SDK and Zod for type safety and validation.

GitVizz

GitVizz is an AI-powered repository analysis tool that helps developers understand and navigate codebases quickly. It transforms complex code structures into interactive documentation, dependency graphs, and intelligent conversations. With features like interactive dependency graphs, AI-powered code conversations, advanced code visualization, and automatic documentation generation, GitVizz offers instant understanding and insights for any repository. The tool is built with modern technologies like Next.js, FastAPI, and OpenAI, making it scalable and efficient for analyzing large codebases. GitVizz also provides a standalone Python library for core code analysis and dependency graph generation, offering multi-language parsing, AST analysis, dependency graphs, visualizations, and extensibility for custom applications.

forksilly.doc

ForkSilly.doc is a repository mainly for storing documentation of ForkSilly, an Android project developed using React Native/Expo. It is suitable for users with experience in SillyTavern. The project is self-shared and may not accept feature requests. It is designed for pure text cards, illustration cards, and Stable Diffusion text-to-image. It is compatible with SillyTavern V2 character cards, world books, regex, presets, and chat records, which users can import and export at any time. The tool supports various customization options such as chat font, background image, and quick toggling of preset entries. It also allows the use of various OpenAI-compatible APIs and provides built-in storage management features. Users can use text-to-image functionality and access free text-to-image services like pollinations.ai. Additionally, it supports Stable Diffusion text-to-image features and integration with SiliconFlow and Gemini embedding models. The tool does not support TTS or connecting to NAI.

langchain4j-aideepin

LangChain4j-AIDeepin is an open-source, offline-deployable retrieval-augmented generation (RAG) project based on large language models such as ChatGPT and the Langchain4j application framework. It offers features like registration & login, multi-session support, image generation, prompt words, quota control, knowledge base, model-based search, model switching, and search engine switching. The project integrates models like ChatGPT 3.5, Tongyi Qianwen, Wenxin Yiyan, Ollama, and DALL-E 2. The backend uses technologies like JDK 17, Spring Boot 3.0.5, Langchain4j, and PostgreSQL with the pgvector extension, while the frontend is built with Vue3, TypeScript, and PNPM.

mxcp

MXCP is an enterprise-grade MCP framework for building production-ready AI applications. It provides a structured methodology for data modeling, service design, smart implementation, quality assurance, and production operations. With built-in enterprise features like security, audit trail, type safety, testing framework, performance optimization, and drift detection, MXCP ensures comprehensive security, quality, and operations. The tool supports SQL for data queries and Python for complex logic, ML models, and integrations, allowing users to choose the right tool for each job while maintaining security and governance. MXCP's architecture includes LLM client, MXCP framework, implementations, security & policies, SQL endpoints, Python tools, type system, audit engine, validation & tests, data sources, and APIs. The tool enforces an organized project structure and offers CLI commands for initialization, quality assurance, data management, operations & monitoring, and LLM integration. MXCP is compatible with Claude Desktop, OpenAI-compatible tools, and custom integrations through the Model Context Protocol (MCP) specification. The tool is developed by RAW Labs for production data-to-AI workflows and is released under the Business Source License 1.1 (BSL), with commercial licensing required for certain production scenarios.

xln

XLN (Cross-Local Network) is a platform that enables instant off-chain settlement with on-chain finality. It combines Byzantine consensus, Bloomberg Terminal functionalities, and VR capabilities to run economic simulations in the browser without the need for a backend. The architecture includes layers for jurisdictions, entities, and accounts, with features like Solidity contracts, BFT consensus, and bilateral channels. The tool offers a panel system similar to Bloomberg Terminal for workspace organization and visualization, along with support for offline blockchain simulations in the browser and VR/Quest compatibility.

mcp-debugger

mcp-debugger is a Model Context Protocol (MCP) server that provides debugging tools as structured API calls. It enables AI agents to perform step-through debugging of multiple programming languages using the Debug Adapter Protocol (DAP). The tool supports multi-language debugging with clean adapter patterns, including Python debugging via debugpy, JavaScript (Node.js) debugging via js-debug, and Rust debugging via CodeLLDB. It offers features like mock adapter for testing, STDIO and SSE transport modes, zero-runtime dependencies, Docker and npm packages for deployment, structured JSON responses for easy parsing, path validation to prevent crashes, and AI-aware line context for intelligent breakpoint placement with code context.


For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito largely simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Its key features: it is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g., Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.