fit-framework

FIT: 企业级AI开发框架,提供多语言函数引擎(FIT)、流式编排引擎(WaterFlow)及Java生态的LangChain替代方案(FEL)。原生/Spring双模运行,支持插件热插拔与智能聚散部署,无缝统一大模型与业务系统。

Stars: 1835

FIT Framework is a Java enterprise AI development framework that provides a multi-language function engine (FIT), a flow orchestration engine (WaterFlow), and a Java ecosystem alternative solution (FEL). It runs in native/Spring dual mode, supports plug-and-play and intelligent deployment, seamlessly unifying large models and business systems. FIT Core offers language-agnostic computation base with plugin hot-swapping and intelligent deployment. WaterFlow Engine breaks the dimensional barrier of BPM and reactive programming, enabling graphical orchestration and declarative API-driven logic composition. FEL revolutionizes LangChain for the Java ecosystem, encapsulating large models, knowledge bases, and toolchains to integrate AI capabilities into Java technology stack seamlessly. The framework emphasizes engineering practices with intelligent conventions to reduce boilerplate code and offers flexibility for deep customization in complex scenarios.

README:

Java 企业级 AI 开发框架,提供多语言函数引擎(FIT)、流式编排引擎(WaterFlow)及 Java 生态的 LangChain 替代方案(FEL)。原生 / Spring 双模运行,支持插件热插拔与智能聚散部署,无缝统一大模型与业务系统。

-

FIT Core:语言无界,算力随需

多语言函数计算底座(Java/Python/C++)支持插件化热插拔,独创智能聚散部署——代码无需修改,单体应用与分布式服务一键切换,运行时自动路由本地调用或 RPC,让基础设施成为「隐形的伙伴」。

-

WaterFlow Engine:流式智能,万物可编排

打破 BPM 与响应式编程的次元壁,图形化编排与声明式 API 双模驱动。业务逻辑可像乐高组合般动态拼接,从毫秒级微流程到跨系统长事务,皆以统一范式驾驭。

-

FEL (FIT Expression for LLM):Java 生态的 LangChain 革命

当 Python 阵营的 LangChain 重塑 AI 应用开发时,FEL 为 Java 开发者带来了更符合工程化实践的答案——基于标准化原语封装大模型、知识库与工具链,让 AI 能力真正融入 Java 技术栈的血脉。

- retrieve 样例

AiProcessFlow<Tip, Content> retrieveFlow = AiFlows.<Tip>create()

.runnableParallel(history(), passThrough())

.conditions()

.match(tip -> !tip.freeze().get(DEFAULT_HISTORY_KEY).text().isEmpty(),

node -> node.prompt(Prompts.human(REWRITE_PROMPT))

.generate(chatFlowModel)

.map(ChatMessage::text))

.others(node -> node.map(tip -> tip.freeze().get("query").text()))

.retrieve(new DefaultVectorRetriever(vectorStore, SearchOption.custom().topK(1).build()))

.synthesize(docs -> Content.from(docs.stream().map(Document::text).collect(Collectors.joining("\n\n"))))

.close();- agent 样例

AiProcessFlow<String, ChatMessage> agentFlow = AiFlows.<String>create()

.map(query -> Tip.fromArray(query))

.prompt(Prompts.human("{{0}}"))

.delegate(agent)

.close();-

约定优于配置的工程实践

FIT 通过智能约定大幅减少胶水代码:

- 部署无感化:聚散模式自动识别,开发者只需声明业务关系,无需手工标注远程/本地调用

- 协议透明化:HTTP/gRPC/共享内存等通信方式由框架按上下文智能选择

- 资源自管理:插件依赖自动注入,服务发现与熔断机制内置实现

这种「智能契约」机制,使得80%的通用场景实现零配置,同时保留20%复杂场景的深度定制能力。

- 填补空白:首个面向 Java 生态的 AI 全栈框架,让 Java 开发者无需切换技术栈即可构建现代 AI 应用

- 拒绝妥协:既保有 Python 生态的敏捷性,又继承 Java 体系的高性能与工程化优势

- 面向未来:从单机原型到云原生集群,架构弹性随业务共同进化

开发环境配置

- 开发环境:

IntelliJ IDEA - Java 17

- 代码格式化文件:CodeFormatterFromIdea.xml

-

Maven配置:推荐版本 Maven 3.8.8+

构建命令

mvn clean install

输出目录

build/

增加权限

chmod +x build/bin/*

启动命令

mkdir dynamic-plugins

cd dynamic-plugins

../build/bin/fit start

以上编译构建出的

fit命令可以通过系统操作(别名或添加系统路径)来简化输入。

配置系统环境变量及创建插件目录

- 首先用

maven编译打包./framework/fit/java,将build目录内容存储在本地fitframework目录下,此目录为 FIT 核心框架目录地址。 - 配置

FIT框架目录的系统环境变量,变量值为FIT核心框架目录地址,使fit命令可执行。例如FIT核心框架位置在/demo/fitframework,则变量值配置为/demo/fitframework。 - 新建任意目录作为插件目录,在该目录下存放插件,可在插件目录下使用命令

fit start启动服务。

以上环境配置步骤请根据使用的操作系统使用相应的路径分隔符和环境变量配置操作。

fit命令无法在包含 FIT 框架的目录中执行,只能够在一个新建的动态插件目录下执行。

- FIT 函数框架

- 请参考 FIT 快速开始,该指南将简单介绍 FIT 的核心设计概念,并指导您构建基础的应用。

- WaterFlow 流调度引擎

- 请参考 WaterFlow 快速开始,该指南将简单介绍 WaterFlow

声明式语法,并构建流程输出

hello world!。

- 请参考 WaterFlow 快速开始,该指南将简单介绍 WaterFlow

声明式语法,并构建流程输出

- FEL 标准原语

- 请参考 FEL 快速开始,该指南将简要介绍如何使用 FEL 构建端到端的大模型应用程序。

您可以从 docs 目录查看项目的完整文档,文档包含框架的快速入门指南和用户指导手册,并以一个基于本框架开发的大模型应用编排平台(Model

Engine)为例,向您介绍本框架在商业化的成熟产品中是如何应用的。

欢迎贡献者加入本项目。 请阅读 CONTRIBUTING.md,这将指导您完成分支管理、标签管理、提交规则、代码审查等内容。遵循这些指导有助于项目的高效开发和良好协作。

- 如果发现问题,可以在该项目的

Issue模块内提出。 - 微信公众号:

FitFramework。 - 微信技术交流群:通过公众号菜单“技术交流”点击获取最新群二维码。

- QQ技术交流群:

1029802553。

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for fit-framework

Similar Open Source Tools

fit-framework

FIT Framework is a Java enterprise AI development framework that provides a multi-language function engine (FIT), a flow orchestration engine (WaterFlow), and a Java ecosystem alternative solution (FEL). It runs in native/Spring dual mode, supports plug-and-play and intelligent deployment, seamlessly unifying large models and business systems. FIT Core offers language-agnostic computation base with plugin hot-swapping and intelligent deployment. WaterFlow Engine breaks the dimensional barrier of BPM and reactive programming, enabling graphical orchestration and declarative API-driven logic composition. FEL revolutionizes LangChain for the Java ecosystem, encapsulating large models, knowledge bases, and toolchains to integrate AI capabilities into Java technology stack seamlessly. The framework emphasizes engineering practices with intelligent conventions to reduce boilerplate code and offers flexibility for deep customization in complex scenarios.

aiotieba

Aiotieba is an asynchronous Python library for interacting with the Tieba API. It provides a comprehensive set of features for working with Tieba, including support for authentication, thread and post management, and image and file uploading. Aiotieba is well-documented and easy to use, making it a great choice for developers who want to build applications that interact with Tieba.

chatgpt-webui

ChatGPT WebUI is a user-friendly web graphical interface for various LLMs like ChatGPT, providing simplified features such as core ChatGPT conversation and document retrieval dialogues. It has been optimized for better RAG retrieval accuracy and supports various search engines. Users can deploy local language models easily and interact with different LLMs like GPT-4, Azure OpenAI, and more. The tool offers powerful functionalities like GPT4 API configuration, system prompt setup for role-playing, and basic conversation features. It also provides a history of conversations, customization options, and a seamless user experience with themes, dark mode, and PWA installation support.

DeepMCPAgent

DeepMCPAgent is a model-agnostic tool that enables the creation of LangChain/LangGraph agents powered by MCP tools over HTTP/SSE. It allows for dynamic discovery of tools, connection to remote MCP servers, and integration with any LangChain chat model instance. The tool provides a deep agent loop for enhanced functionality and supports typed tool arguments for validated calls. DeepMCPAgent emphasizes the importance of MCP-first approach, where agents dynamically discover and call tools rather than hardcoding them.

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. The tool includes tools for code generation and test input generation using various backends.

ai-real-estate-assistant

AI Real Estate Assistant is a modern platform that uses AI to assist real estate agencies in helping buyers and renters find their ideal properties. It features multiple AI model providers, intelligent query processing, advanced search and retrieval capabilities, and enhanced user experience. The tool is built with a FastAPI backend and Next.js frontend, offering semantic search, hybrid agent routing, and real-time analytics.

botserver

General Bots is a self-hosted AI automation platform and LLM conversational platform focused on convention over configuration and code-less approaches. It serves as the core API server handling LLM orchestration, business logic, database operations, and multi-channel communication. The platform offers features like multi-vendor LLM API, MCP + LLM Tools Generation, Semantic Caching, Web Automation Engine, Enterprise Data Connectors, and Git-like Version Control. It enforces a ZERO TOLERANCE POLICY for code quality and security, with strict guidelines for error handling, performance optimization, and code patterns. The project structure includes modules for core functionalities like Rhai BASIC interpreter, security, shared types, tasks, auto task system, file operations, learning system, and LLM assistance.

hugging-llm

HuggingLLM is a project that aims to introduce ChatGPT to a wider audience, particularly those interested in using the technology to create new products or applications. The project focuses on providing practical guidance on how to use ChatGPT-related APIs to create new features and applications. It also includes detailed background information and system design introductions for relevant tasks, as well as example code and implementation processes. The project is designed for individuals with some programming experience who are interested in using ChatGPT for practical applications, and it encourages users to experiment and create their own applications and demos.

QFurina

QFurina is a powerful and easily extensible Python QQ robot backend service that provides a range of automation and interactive features. It supports multiple messaging platforms and has a robust plugin system, allowing users to easily expand and customize functionality.

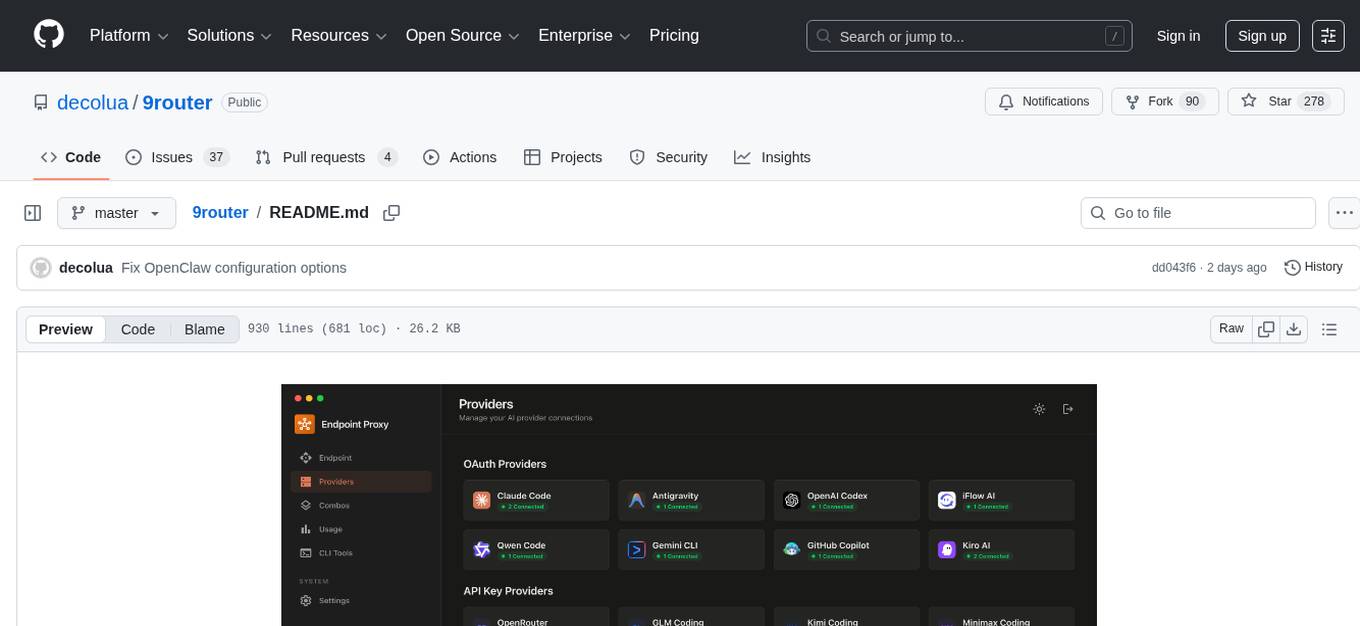

9router

9Router is a free AI router tool designed to help developers maximize their AI subscriptions, auto-route to free and cheap AI models with smart fallback, and avoid hitting limits and wasting money. It offers features like real-time quota tracking, format translation between OpenAI, Claude, and Gemini, multi-account support, auto token refresh, custom model combinations, request logging, cloud sync, usage analytics, and flexible deployment options. The tool supports various providers like Claude Code, Codex, Gemini CLI, GitHub Copilot, GLM, MiniMax, iFlow, Qwen, and Kiro, and allows users to create combos for different scenarios. Users can connect to the tool via CLI tools like Cursor, Claude Code, Codex, OpenClaw, and Cline, and deploy it on VPS, Docker, or Cloudflare Workers.

readme-ai

README-AI is a developer tool that auto-generates README.md files using a combination of data extraction and generative AI. It streamlines documentation creation and maintenance, enhancing developer productivity. This project aims to enable all skill levels, across all domains, to better understand, use, and contribute to open-source software. It offers flexible README generation, supports multiple large language models (LLMs), provides customizable output options, works with various programming languages and project types, and includes an offline mode for generating boilerplate README files without external API calls.

AutoAgents

AutoAgents is a cutting-edge multi-agent framework built in Rust that enables the creation of intelligent, autonomous agents powered by Large Language Models (LLMs) and Ractor. Designed for performance, safety, and scalability. AutoAgents provides a robust foundation for building complex AI systems that can reason, act, and collaborate. With AutoAgents you can create Cloud Native Agents, Edge Native Agents and Hybrid Models as well. It is so extensible that other ML Models can be used to create complex pipelines using Actor Framework.

yomitoku

YomiToku is a Japanese-focused AI document image analysis engine that provides full-text OCR and layout analysis capabilities for images. It recognizes, extracts, and converts text information and figures in images. It includes 4 AI models trained on Japanese datasets for tasks such as detecting text positions, recognizing text strings, analyzing layouts, and recognizing table structures. The models are specialized for Japanese document images, supporting recognition of over 7000 Japanese characters and analyzing layout structures specific to Japanese documents. It offers features like layout analysis, table structure analysis, and reading order estimation to extract information from document images without disrupting their semantic structure. YomiToku supports various output formats such as HTML, markdown, JSON, and CSV, and can also extract figures, tables, and images from documents. It operates efficiently in GPU environments, enabling fast and effective analysis of document transcriptions without requiring high-end GPUs.

ClaraVerse

ClaraVerse is a privacy-first AI assistant and agent builder that allows users to chat with AI, create intelligent agents, and turn them into fully functional apps. It operates entirely on open-source models running on the user's device, ensuring data privacy and security. With features like AI assistant, image generation, intelligent agent builder, and image gallery, ClaraVerse offers a versatile platform for AI interaction and app development. Users can install ClaraVerse through Docker, native desktop apps, or the web version, with detailed instructions provided for each option. The tool is designed to empower users with control over their AI stack and leverage community-driven innovations for AI development.

ChordMiniApp

ChordMini is an advanced music analysis platform with AI-powered chord recognition, beat detection, and synchronized lyrics. It features a clean and intuitive interface for YouTube search, chord progression visualization, interactive guitar diagrams with accurate fingering patterns, lead sheet with AI assistant for synchronized lyrics transcription, and various add-on features like Roman Numeral Analysis, Key Modulation Signals, Simplified Chord Notation, and Enhanced Chord Correction. The tool requires Node.js, Python 3.9+, and a Firebase account for setup. It offers a hybrid backend architecture for local development and production deployments, with features like beat detection, chord recognition, lyrics processing, rate limiting, and audio processing supporting MP3, WAV, and FLAC formats. ChordMini provides a comprehensive music analysis workflow from user input to visualization, including dual input support, environment-aware processing, intelligent caching, advanced ML pipeline, and rich visualization options.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

For similar tasks

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

spring-ai

The Spring AI project provides a Spring-friendly API and abstractions for developing AI applications. It offers a portable client API for interacting with generative AI models, enabling developers to easily swap out implementations and access various models like OpenAI, Azure OpenAI, and HuggingFace. Spring AI also supports prompt engineering, providing classes and interfaces for creating and parsing prompts, as well as incorporating proprietary data into generative AI without retraining the model. This is achieved through Retrieval Augmented Generation (RAG), which involves extracting, transforming, and loading data into a vector database for use by AI models. Spring AI's VectorStore abstraction allows for seamless transitions between different vector database implementations.

ragstack-ai

RAGStack is an out-of-the-box solution simplifying Retrieval Augmented Generation (RAG) in GenAI apps. RAGStack includes the best open-source for implementing RAG, giving developers a comprehensive Gen AI Stack leveraging LangChain, CassIO, and more. RAGStack leverages the LangChain ecosystem and is fully compatible with LangSmith for monitoring your AI deployments.

breadboard

Breadboard is a library for prototyping generative AI applications. It is inspired by the hardware maker community and their boundless creativity. Breadboard makes it easy to wire prototypes and share, remix, reuse, and compose them. The library emphasizes ease and flexibility of wiring, as well as modularity and composability.

cloudflare-ai-web

Cloudflare-ai-web is a lightweight and easy-to-use tool that allows you to quickly deploy a multi-modal AI platform using Cloudflare Workers AI. It supports serverless deployment, password protection, and local storage of chat logs. With a size of only ~638 kB gzip, it is a great option for building AI-powered applications without the need for a dedicated server.

app-builder

AppBuilder SDK is a one-stop development tool for AI native applications, providing basic cloud resources, AI capability engine, Qianfan large model, and related capability components to improve the development efficiency of AI native applications.

cookbook

This repository contains community-driven practical examples of building AI applications and solving various tasks with AI using open-source tools and models. Everyone is welcome to contribute, and we value everybody's contribution! There are several ways you can contribute to the Open-Source AI Cookbook: Submit an idea for a desired example/guide via GitHub Issues. Contribute a new notebook with a practical example. Improve existing examples by fixing issues/typos. Before contributing, check currently open issues and pull requests to avoid working on something that someone else is already working on.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.